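Machine learning course summary.

This series of posts consists mainly of code and comments.

Porting the theory to the blog is time-consuming, so the theory is omitted here. You are still welcome to discuss and learn together.

If you need the lecture notes for the theory, send me a private message (I personally think they are very good and thorough).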

问题需求

-

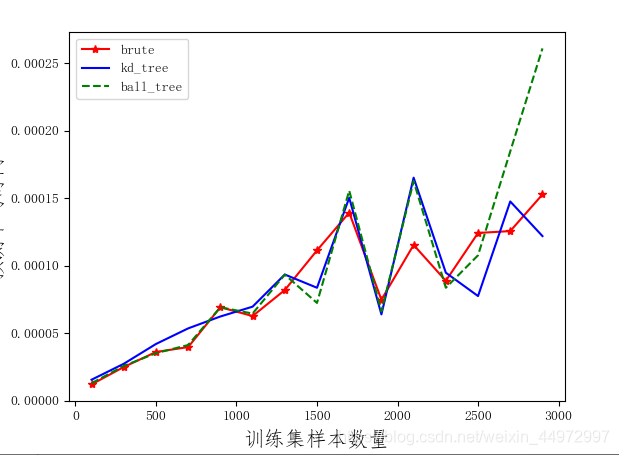

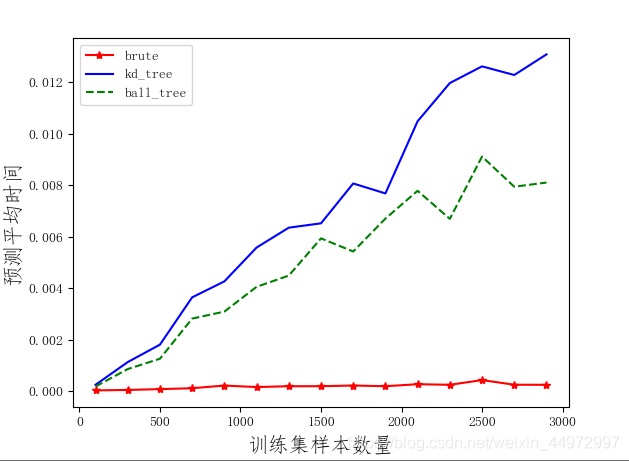

针对1024维的特征,考虑暴力搜索(穷举)、kd树、Ball树的查询时间与样本数量之间的关系。为此随机抽取N个样本作为训练集,随机抽取500个样本作为测试集,统计使用各方法时预测每个样本的平均时间T。请分别画出三种方法下T与N的曲线图。

-

针对64维的特征,重复第1题的过程。

-

将所有曲线画到同一个坐标系中,使用不同的颜色或者线型加以区分,并附上legend。

-

请对结果进行分析、总结(即得到什么结论)。
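The measurement described in the tasks above can be sketched on synthetic data. This is a minimal sketch, not the course solution: the random binary matrix `X`, the labels `y`, the helper name `mean_query_time`, and the small sample sizes are all assumptions chosen so the example runs quickly without the UCI digits files.

```python
import time

import numpy as np
from sklearn.neighbors import KNeighborsClassifier


def mean_query_time(algorithm, X_train, y_train, X_test):
    """Fit a k-NN classifier and return the mean prediction time per test sample."""
    knc = KNeighborsClassifier(n_neighbors=10, weights='distance', algorithm=algorithm)
    knc.fit(X_train, y_train)
    start = time.perf_counter()
    knc.predict(X_test)
    return (time.perf_counter() - start) / len(X_test)


rng = np.random.default_rng(0)
# Synthetic stand-in for the digit data (assumption): binary pixels, 10 classes.
X = rng.integers(0, 2, size=(600, 64)).astype(float)
y = rng.integers(0, 10, size=600)
X_test = X[:100]  # fixed test set; training rows are taken from X[100:]

timings = {}
for algorithm in ('brute', 'kd_tree', 'ball_tree'):
    timings[algorithm] = [
        mean_query_time(algorithm, X[100:100 + n], y[100:100 + n], X_test)
        for n in (100, 300, 500)
    ]
```

Each entry of `timings` holds one value of T per training-set size N, which is the data needed for one curve. Timing only `predict` keeps the cost of building the tree out of the measurement.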

Code 1 (64*64)

# -*- coding: utf-8 -*-#
# Author: xhc
# Date: 2021-03-29 14:22
# project: 0329
# Name: knn.py
import time

import matplotlib as mpl
import matplotlib.pyplot as plt
import numpy as np
import pandas as pd
from sklearn.neighbors import KNeighborsClassifier

data_test = pd.read_csv('./data/UCI_digits.test', header=None)
data_train = pd.read_csv('./data/UCI_digits.train', header=None)

# Split each training row into a list of integer digits
train = np.array(data_train)
data_train_new = []  # processed training data
data_test_new = []  # processed test data
for x in train:
    x = list(x[0])
    x = list(map(int, x))
    data_train_new.append(x)

# Split each test row in the same way
test = np.array(data_test)
for x in test:
    x = list(x[0])
    x = list(map(int, x))
    data_test_new.append(x)

# Merge both files into one data set
data = np.append(data_train_new, data_test_new, axis=0)  # axis=0 stacks the rows
data = pd.DataFrame(data)  # convert to a DataFrame

# Features and labels of the test set
data_test = np.array(data.sample(n=500, axis=0))  # randomly sample 500 rows (axis=0: rows, axis=1: columns)
data_test_train = data_test[:, :-1]  # test features
data_test_label = data_test[:, -1]  # test labels

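# Build the model, fit it, and time the prediction
def fun(method):  # method: 'brute', 'kd_tree' or 'ball_tree'
    # weights='distance' weights neighbours by inverse distance (an improvement over uniform voting)
    # Reads the module-level data_train_train, data_train_label and data_test_train
    knc = KNeighborsClassifier(n_neighbors=10, metric='minkowski', p=2, weights='distance', algorithm=method)
    knc.fit(data_train_train, data_train_label)
    start1 = time.perf_counter()
    label_predict = knc.predict(data_test_train)
    end1 = time.perf_counter()
    return end1 - start1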

time1 = []
time2 = []
time3 = []
for N in range(100, 3000, 200):
    # axis=0 operates on rows (downwards); axis=1 operates on columns
    data_train = np.array(data.sample(n=N, axis=0))  # sample N rows as the training set
    data_train_train = data_train[:, :-1]  # features
    data_train_label = data_train[:, -1]  # labels
    # Brute-force search
    time_new = fun('brute')
    time1.append(time_new / 500)  # average time per test sample, stored for plotting
    # kd-tree
    time_new = fun('kd_tree')
    time2.append(time_new / 500)
    # Ball tree
    time_new = fun('ball_tree')
    time3.append(time_new / 500)

# Reduce 1024 features to 64 by summing each 4*4 block of the 32*32 image
def fun2(data):
    new_train1 = []
    for z in data:
        z = np.array(z)
        z = z.reshape((32, 32))
        train1 = []
        for i in range(8):
            for j in range(8):
                r1 = 4 * i
                r2 = 4 * i + 4
                c1 = 4 * j
                c2 = 4 * j + 4
                new_z = np.sum(z[r1:r2, c1:c2])
                train1.append(new_z)
        new_train1.append(train1)
    return np.array(new_train1)

# Test set (64-dimensional)
data_test = np.array(data.sample(n=500, axis=0))
data_test_train = data_test[:, :-1]
data_test_label = data_test[:, -1]
data_test_train = fun2(data_test_train)

time1_64 = []
time2_64 = []
time3_64 = []
for N in range(100, 3000, 200):
    data_train = np.array(data.sample(n=N, axis=0))
    data_train_train = data_train[:, :-1]
    data_train_label = data_train[:, -1]
    data_train_train = fun2(data_train_train)
    # Brute-force search
    time_new = fun('brute')
    time1_64.append(time_new / 500)
    # kd-tree
    time_new = fun('kd_tree')
    time2_64.append(time_new / 500)
    # Ball tree
    time_new = fun('ball_tree')
    time3_64.append(time_new / 500)

# Reduce 64 features to 16 by summing each 2*2 block of the 8*8 image
def fun3(data):
    new_train1 = []
    for z in data:
        z = np.array(z)
        z = z.reshape((8, 8))
        train1 = []
        for i in range(4):
            for j in range(4):
                r1 = 2 * i
                r2 = 2 * i + 2
                c1 = 2 * j
                c2 = 2 * j + 2
                new_z = np.sum(z[r1:r2, c1:c2])
                train1.append(new_z)
        new_train1.append(train1)
    return np.array(new_train1)

# Test set (16-dimensional)
data_test = np.array(data.sample(n=500, axis=0))
data_test_train = data_test[:, :-1]
data_test_label = data_test[:, -1]
data_test_train = fun2(data_test_train)
data_test_train = fun3(data_test_train)

time1_16 = []
time2_16 = []
time3_16 = []
for N in range(100, 3000, 200):
    data_train = np.array(data.sample(n=N, axis=0))
    data_train_train = data_train[:, :-1]
    data_train_label = data_train[:, -1]
    data_train_train = fun2(data_train_train)
    data_train_train = fun3(data_train_train)
    # Brute-force search
    time_new = fun('brute')
    time1_16.append(time_new / 500)
    # kd-tree
    time_new = fun('kd_tree')
    time2_16.append(time_new / 500)
    # Ball tree
    time_new = fun('ball_tree')
    time3_16.append(time_new / 500)

# Plot the three timing curves in one coordinate system
def result_show(time1, time2, time3):
    N = np.arange(100, 3000, 200)
    plt.plot(N, time1, 'r-*', label='brute')
    plt.plot(N, time2, 'b-', label='kd_tree')
    plt.plot(N, time3, 'g--', label='ball_tree')
    plt.legend()
    plt.xlabel('Number of training samples', fontsize=15)
    plt.ylabel('Average prediction time per sample (s)', fontsize=15)
    plt.show()

result_show(time1_16, time2_16, time3_16)  # plots the 16-dimensional timings

Code 2 (8*8)

# -*- coding: utf-8 -*-#
# Author: xhc
# Date: 2021-03-29 15:40
# project: 0329
# Name: knn1.py
import time

import matplotlib as mpl
import matplotlib.pyplot as plt
import numpy as np
import pandas as pd
from sklearn.neighbors import KNeighborsClassifier

# data: the data set; converts n samples of x*x features into n samples of y*y features
def fun2(data, x, y):
    x = int(x)
    y = int(y)
    number = int(x / y)  # edge length of each summed block
    new_train1 = []
    for z in data:
        z = np.array(z)
        z = z.reshape((x, x))
        train1 = []
        for i in range(y):
            for j in range(y):
                r1 = number * i
                r2 = number * i + number
                c1 = number * j
                c2 = number * j + number
                new_z = np.sum(z[r1:r2, c1:c2])
                train1.append(new_z)
        new_train1.append(train1)
    return np.array(new_train1)

# Parse both data files and merge them into one data set
def data_slove():
    data_test = pd.read_csv('./data/UCI_digits.test', header=None)
    data_train = pd.read_csv('./data/UCI_digits.train', header=None)
    # Split each training row into a list of integer digits
    train = np.array(data_train)
    data_train_new = []  # processed training data
    data_test_new = []  # processed test data
    for x in train:
        x = list(x[0])
        x = list(map(int, x))
        data_train_new.append(x)
    # Split each test row in the same way
    test = np.array(data_test)
    for x in test:
        x = list(x[0])
        x = list(map(int, x))
        data_test_new.append(x)
    # Merge both files into one data set
    data = np.append(data_train_new, data_test_new, axis=0)
    data = pd.DataFrame(data)  # convert to a DataFrame
    return data

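# Sample N rows as the test set and return their features
def get_test_feature_label(data, N):
    # Features and labels of the test set
    data_test = np.array(data.sample(n=N, axis=0))  # randomly sample N rows (axis=0: rows, axis=1: columns)
    data_test_feature = data_test[:, :-1]
    data_test_label = data_test[:, -1]  # not needed for timing, so not returned
    return data_test_feature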

# Build the model, fit it, and time the prediction
def fun(method, data_train_feature, data_train_label, data_test_feature):  # method: 'brute', 'kd_tree' or 'ball_tree'
    # weights='distance' weights neighbours by inverse distance (an improvement over uniform voting)
    knc = KNeighborsClassifier(n_neighbors=10, metric='minkowski', p=2, weights='distance', algorithm=method)
    knc.fit(data_train_feature, data_train_label)
    start1 = time.perf_counter()
    label_predict = knc.predict(data_test_feature)
    end1 = time.perf_counter()
    return end1 - start1

# Collect the timing lists of the three methods
def get_time(data_test_feature, x, y):
    # Note: training rows are sampled from the module-level `data` set in the main block
    time1 = []
    time2 = []
    time3 = []
    n_test = len(data_test_feature)  # 500 in this experiment
    if x > y:
        data_test_feature = fun2(data_test_feature, x, y)
    for N in range(100, 3000, 200):
        data_train = np.array(data.sample(n=N, axis=0))
        data_train_feature = data_train[:, :-1]
        data_train_label = data_train[:, -1]
        if x > y:
            data_train_feature = fun2(data_train_feature, x, y)
        # Brute-force search
        time_new = fun('brute', data_train_feature, data_train_label, data_test_feature)
        time1.append(time_new / n_test)
        # kd-tree
        time_new = fun('kd_tree', data_train_feature, data_train_label, data_test_feature)
        time2.append(time_new / n_test)
        # Ball tree
        time_new = fun('ball_tree', data_train_feature, data_train_label, data_test_feature)
        time3.append(time_new / n_test)
    return time1, time2, time3

# Plot the results
def result_show(time1, time2, time3):
    N = np.arange(100, 3000, 200)
    plt.plot(N, time1, 'r-*', label='brute')
    plt.plot(N, time2, 'b-', label='kd_tree')
    plt.plot(N, time3, 'g--', label='ball_tree')
    plt.legend()
    plt.xlabel('Number of training samples', fontsize=15)
    plt.ylabel('Average prediction time per sample (s)', fontsize=15)
    plt.show()

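if __name__ == "__main__":
    # Case 1: keep the original 32*32 features
    data = data_slove()  # parse and merge the files, returns the data set
    data_test_feature = get_test_feature_label(data, 500)  # data set and test-set size in, test features out
    time1, time2, time3 = get_time(data_test_feature, 32, 32)  # arguments: test features, x (x*x before conversion), y (y*y after conversion)
    result_show(time1, time2, time3)

    """
    # Case 2: 32*32 reduced to 8*8
    data = data_slove()  # parse and merge the files, returns the data set
    data_test_feature = get_test_feature_label(data, 500)  # data set and test-set size in, test features out
    time1, time2, time3 = get_time(data_test_feature, 32, 8)  # arguments: test features, x (x*x before conversion), y (y*y after conversion)
    result_show(time1, time2, time3)
    """

    """
    # Case 3: 32*32 reduced to 4*4
    data = data_slove()  # parse and merge the files, returns the data set
    data_test_feature = get_test_feature_label(data, 500)  # data set in, test features out
    print(data_test_feature.shape)
    time1, time2, time3 = get_time(data_test_feature, 32, 4)
    result_show(time1, time2, time3)
    """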
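The block-sum reduction performed by `fun2(data, x, y)` can also be expressed with a single reshape instead of nested loops. The helper name `block_sum` is hypothetical, and the sketch assumes that `y` divides `x` and that each row holds a flattened `x*x` image in row-major order, as in the code above.

```python
import numpy as np


def block_sum(features, x, y):
    """Sum non-overlapping (x//y)*(x//y) blocks of each x*x image, giving y*y features."""
    features = np.asarray(features)
    k = x // y  # block edge length; assumes y divides x
    # Axes after reshape: (sample, block row, row in block, block column, column in block)
    blocks = features.reshape(-1, y, k, y, k)
    return blocks.sum(axis=(2, 4)).reshape(-1, y * y)


demo = np.arange(2 * 16).reshape(2, 16)  # two flattened 4*4 "images"
pooled = block_sum(demo, 4, 2)
print(pooled[0])  # [10 18 42 50]
```

The output order matches the loops in `fun2`: block rows vary slowest and block columns fastest.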

Conclusion

- With many training samples, or with high-dimensional data, brute-force search may be faster.
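One way to read this conclusion is through the approximate query costs given in the scikit-learn user guide for N training samples and D features. These are rough growth rates, not measurements from this experiment, and the symbols $T_{\text{brute}}$, $T_{\text{ball}}$ and $T_{\text{kd}}$ are introduced here only for illustration.

```latex
\begin{aligned}
T_{\text{brute}}(N, D) &= O(D\,N) \\
T_{\text{ball}}(N, D) &\approx O(D \log N) \\
T_{\text{kd}}(N, D) &\approx
  \begin{cases}
    O(D \log N), & D \lesssim 20 \\
    \text{close to } O(D\,N), & D \text{ large}
  \end{cases}
\end{aligned}
```

For large D the kd-tree loses its logarithmic advantage while still paying the overhead of the tree structure, which is consistent with brute-force search being competitive on the 1024-dimensional features.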

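Summary

If you find any mistakes, please point them out in a comment or a private message. If you need the test data, send me a private message.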