NLP-Task2: Text Classification Based on Deep Learning

Rewrite Task 1 in PyTorch and implement text classification with a CNN and an RNN

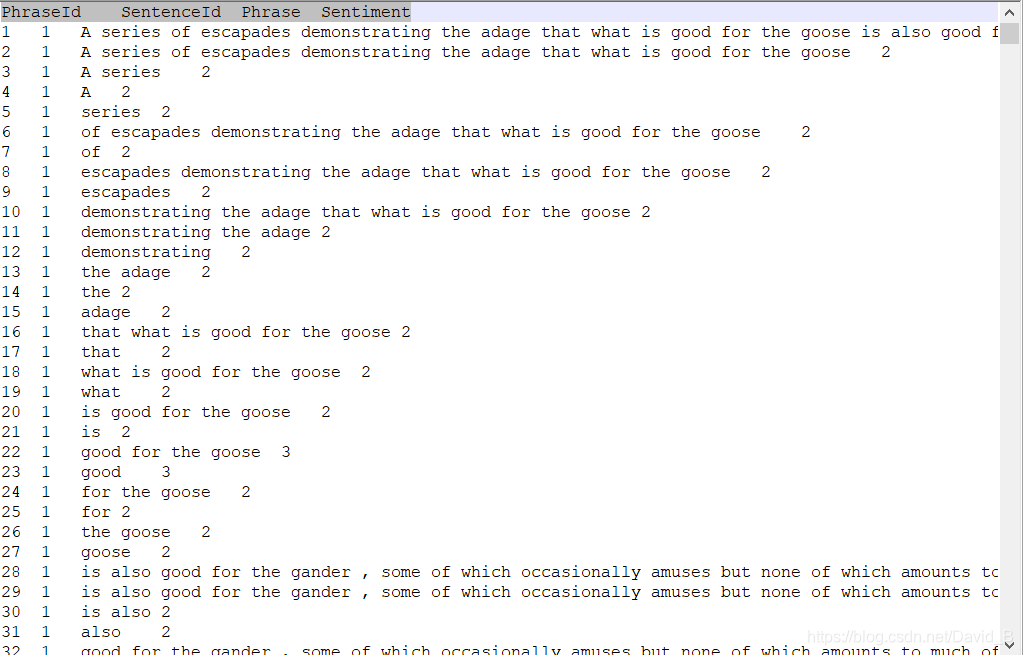

Dataset: Classify the sentiment of sentences from the Rotten Tomatoes dataset

The cloud-drive download link (which also contains the GloVe pre-trained model parameters) is at the end of the article

- Initialization of the word embedding
  - Random embedding initialization
  - Initialization with GloVe pre-trained embeddings

- Key concepts
  - CNN/RNN feature extraction
  - Word embedding
  - Dropout

This article draws on: NLP-Beginner Task 2: Text Classification Based on Deep Learning + PyTorch (Super Detailed!!)

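1. Introduction

1.1 Requirements

NLP task: use convolutional neural networks (CNN) and recurrent neural networks (RNN) from deep learning to classify the sentiment of text

1.2 Dataset

The training set contains 156,060 English reviews, labeled with five sentiment classes, 0 to 4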

2. Feature Extraction: Word Embedding

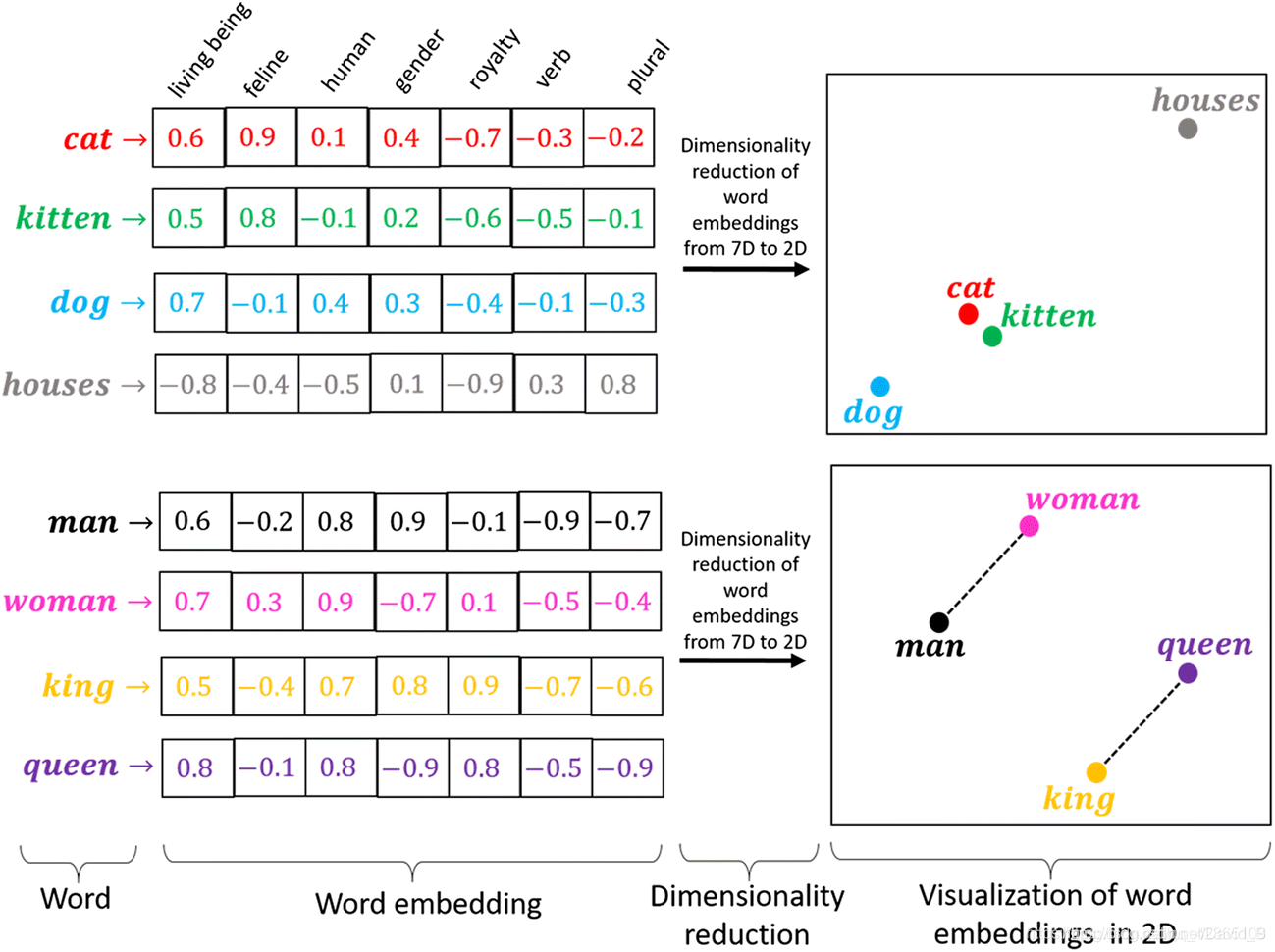

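2.1 Definition of Word Embedding

Word embedding maps every word into a high-dimensional space, so that each word is represented by a vector in that space

The example in the figure maps each word into a seven-dimensional space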

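Advantages of word embedding models:

- When the vector values are well chosen, the distance between two word vectors reflects how similar the two words are

- When the vector values are well chosen, the offsets between word vectors also carry meaning (e.g. the offset from man to woman parallels the one from king to queen)

- Features from many perspectives can be expressed with relatively few dimensions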
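To make the two "well-chosen values" properties concrete, here is a small plain-Python sketch. The 7-dimensional vectors are made up for illustration (loosely following the figure's dimensions: living being, feline, human, gender, royalty, verb, plural); they are not real GloVe vectors. Similarity is measured with cosine similarity, and the man/king/woman analogy is solved by vector arithmetic.

```python
import math

# Hypothetical 7-dimensional word vectors, dimensions loosely following the figure:
# (living being, feline, human, gender, royalty, verb, plural)
vectors = {
    "man":   [0.9, 0.0, 1.0, -1.0, 0.0, 0.0, 0.0],
    "woman": [0.9, 0.0, 1.0, 1.0, 0.0, 0.0, 0.0],
    "king":  [0.9, 0.0, 1.0, -1.0, 1.0, 0.0, 0.0],
    "queen": [0.9, 0.0, 1.0, 1.0, 1.0, 0.0, 0.0],
    "cat":   [0.9, 1.0, 0.0, 0.0, 0.0, 0.0, 0.0],
}


def cosine(u, v):
    """Cosine similarity: 1 means same direction, 0 means orthogonal."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def analogy(a, b, c):
    """Return the word closest to vec(b) - vec(a) + vec(c), e.g. king - man + woman."""
    target = [vb - va + vc for va, vb, vc in zip(vectors[a], vectors[b], vectors[c])]
    candidates = [w for w in vectors if w not in (a, b, c)]
    return max(candidates, key=lambda w: cosine(vectors[w], target))


print(cosine(vectors["king"], vectors["queen"]), cosine(vectors["king"], vectors["cat"]))
print(analogy("man", "king", "woman"))
```

With real pre-trained vectors the analogy is only approximate, which is why the nearest neighbour by cosine similarity is used instead of an exact match.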

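2.2 Word Vectors in Word Embedding Models

In the bag-of-words / N-gram models, once the words and phrases are fixed, every sentence (a bunch of words) can be converted into a corresponding 0-1 representation

In a word embedding model, however, there is no explicit conversion rule, so we cannot know in advance which vector each word corresponds to

The seven dimensions in the figure above correspond to (living being, feline, human, gender, royalty, verb, plural), but this classification is forced onto the dimensions only to make the idea easier to grasp: the value of "houses" on the gender dimension, for instance, is clearly hard to determine. Defining a classification criterion for every dimension is therefore impossible

So in a word embedding model we do not assign a so-called meaning to each dimension; we simply map each word to a word vector. We do not care what the numbers mean, only whether they are set reasonably. In other words, every word vector is an unknown parameter that has to be learned, which is completely different from bag-of-words / N-gram features.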

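2.3 Initializing the Word Embedding Model

Since word vectors are parameters, we have to give them initial values, and the choice of initial values is crucial

Good initial values lead to better parameters and can even speed up optimization; poor ones can make the parameters hard to converge during optimization, or make them converge to a poor extremum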

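2.3.1 Random Initialization

Given a dimension $d$, for every word $w$ randomly generate a $d$-dimensional vector $x\in\mathbb{R}^d$

This method may produce poor initial values, and the resulting vectors are not interpretable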
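A minimal plain-Python sketch of random initialization. The scale is an assumption chosen to mirror the code later in this article, which fills the embedding matrix with `nn.init.xavier_normal_`; for a matrix with $V$ rows and $d$ columns that draws from a normal distribution with standard deviation $\sqrt{2/(V+d)}$. The vocabulary and the helper name `random_embedding_table` are hypothetical.

```python
import math
import random


def random_embedding_table(word_to_id, d, seed=2021):
    """Random d-dimensional vector for every word ID; row 0 belongs to the padding ID.

    Xavier-normal scale for a (V x d) matrix: std = sqrt(2 / (V + d)).
    """
    rng = random.Random(seed)
    V = len(word_to_id) + 1  # +1 because ID 0 is reserved for padding
    std = math.sqrt(2.0 / (V + d))
    return [[rng.gauss(0.0, std) for _ in range(d)] for _ in range(V)]


word_to_id = {"I": 1, "LOVE": 2, "YOU": 3, "HATE": 4}  # hypothetical vocabulary
table = random_embedding_table(word_to_id, d=5)
print(len(table), len(table[0]))  # rows (vocabulary + padding) and d
```

Fixing the seed makes the random initial values reproducible, which is also why the experiments below call `torch.manual_seed(2021)` before building each model.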

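2.3.2 Pre-trained Model Initialization

Use a model trained by someone else as the initial values, for example the pre-trained word embedding model GloVe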
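A plain-Python sketch of GloVe initialization that follows the same policy as the `Glove_embedding` class later in this article: row 0 is a zero vector for padding, words found in GloVe copy their pre-trained vector, and words missing from GloVe start as zero vectors. The GloVe text format is one word per line followed by its $d$ numbers; the three lines below are made-up stand-ins, not real GloVe values, and the helper name is hypothetical.

```python
def glove_embedding_table(word_to_id, glove_lines, d):
    """Build a (V+1) x d initial embedding matrix from GloVe-format text lines."""
    pretrained = {}
    for line in glove_lines:
        parts = line.split()
        # the first token is the word, the next d tokens are its vector
        pretrained[parts[0].upper()] = [float(v) for v in parts[1:d + 1]]
    table = [[0.0] * d for _ in range(len(word_to_id) + 1)]  # row 0 stays zero (padding)
    for word, idx in word_to_id.items():
        if word in pretrained:  # known word: copy its pre-trained vector
            table[idx] = pretrained[word]
        # unknown word: keep the zero vector
    return table


glove_lines = [  # hypothetical 3-dimensional "GloVe" lines
    "love 0.1 0.2 0.3",
    "you -0.5 0.0 0.4",
    "hate -0.1 -0.2 0.3",
]
word_to_id = {"I": 1, "LOVE": 2, "YOU": 3}
table = glove_embedding_table(word_to_id, glove_lines, d=3)
```

Words are upper-cased on both sides, as in the article's code, so "love" in GloVe matches "LOVE" in the vocabulary.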

2.4 Feature Representation

Given a word vector for every word, a sentence can be converted into a list of IDs and then into a feature matrix

Let $d=5$:

(ID:1) I: $[+0.10,+0.20,+0.30,+0.50,+1.50]$

(ID:2) love: $[-1.00,-2.20,+3.40,+1.00,+0.00]$

(ID:3) you: $[-3.12,-1.14,+5.14,+1.60,+7.00]$

(ID:4) hate: $[-8.00,-6.40,+3.60,+2.00,+3.00]$

The sentence "I love you" is represented as $[1,2,3]$, and after word embedding it becomes

$$X=\begin{bmatrix} +0.10 & +0.20 & +0.30 & +0.50 & +1.50\\ -1.00 & -2.20 & +3.40 & +1.00 & +0.00\\ -3.12 & -1.14 & +5.14 & +1.60 & +7.00 \end{bmatrix}$$

The sentence "I hate you" is represented as $[1,4,3]$, and after word embedding it becomes

$$X=\begin{bmatrix} +0.10 & +0.20 & +0.30 & +0.50 & +1.50\\ -8.00 & -6.40 & +3.60 & +2.00 & +3.00\\ -3.12 & -1.14 & +5.14 & +1.60 & +7.00 \end{bmatrix}$$
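The sentence-to-matrix step is just a table lookup: the IDs select rows of the embedding matrix, which is what an embedding layer such as PyTorch's `nn.Embedding` computes. A plain-Python sketch using the $d=5$ toy vectors above; the zero row for padding ID 0 is an assumption borrowed from the code later in this article, and the helper names are hypothetical.

```python
word_to_id = {"I": 1, "love": 2, "you": 3, "hate": 4}
embedding = [
    [0.00, 0.00, 0.00, 0.00, 0.00],       # ID 0: padding (assumed)
    [+0.10, +0.20, +0.30, +0.50, +1.50],  # ID 1: I
    [-1.00, -2.20, +3.40, +1.00, +0.00],  # ID 2: love
    [-3.12, -1.14, +5.14, +1.60, +7.00],  # ID 3: you
    [-8.00, -6.40, +3.60, +2.00, +3.00],  # ID 4: hate
]


def sentence_to_ids(sentence):
    return [word_to_id[w] for w in sentence.split()]


def ids_to_matrix(ids):
    # row i of X is the word vector of the i-th word
    return [embedding[i] for i in ids]


X_love = ids_to_matrix(sentence_to_ids("I love you"))
X_hate = ids_to_matrix(sentence_to_ids("I hate you"))
```

The two matrices share their first and last rows and differ only in the middle one, exactly as in the two matrices above.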

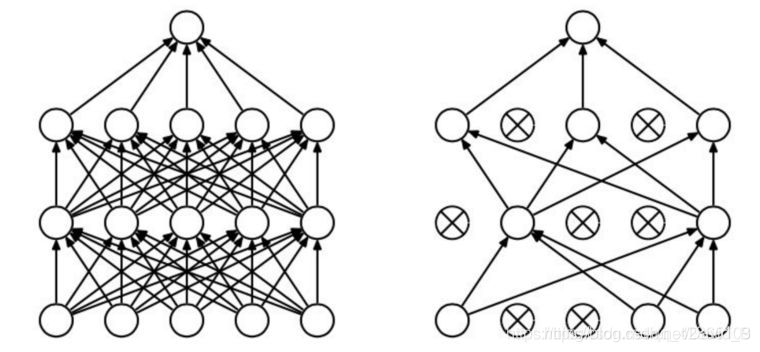

3. Neural Networks

3.1 Convolutional Neural Networks (CNN)

- Convolution layer

- Activation layer

- Pooling layer

- Fully connected layer

3.1.1 Convolution Layer

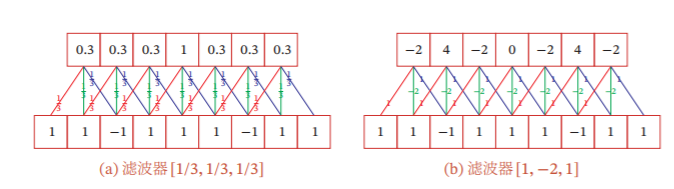

3.1.1.1 One-Dimensional Convolution

In the left figure, the input vector $x\in\mathbb{R}^n$ is $[1,1,-1,1,1,1,-1,1,1]$

The kernel $w\in\mathbb{R}^K$ is $[\frac{1}{3},\frac{1}{3},\frac{1}{3}]$

The result $y\in\mathbb{R}^{n-K+1}$ is $[\frac{1}{3},\frac{1}{3},\frac{1}{3},1,\frac{1}{3},\frac{1}{3},\frac{1}{3}]$

The convolution is written as $y=w*x$

Formula: $y_t=\sum\limits_{k=1}^{K}w_kx_{t+k-1}\quad (t=1,2,\dots,n-K+1)$
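The formula translates directly into code. This plain-Python sketch reproduces the example above (indices are 0-based, so $t$ and $k$ are shifted by one). Like deep-learning libraries, it does not flip the kernel, so strictly speaking it computes a cross-correlation.

```python
def conv1d(x, w):
    """y_t = sum_{k=1}^{K} w_k * x_{t+k-1}, written with 0-based indices."""
    n, K = len(x), len(w)
    return [sum(w[k] * x[t + k] for k in range(K)) for t in range(n - K + 1)]


x = [1, 1, -1, 1, 1, 1, -1, 1, 1]
w = [1 / 3, 1 / 3, 1 / 3]
y = conv1d(x, w)
print(len(y), [round(v, 3) for v in y])
```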

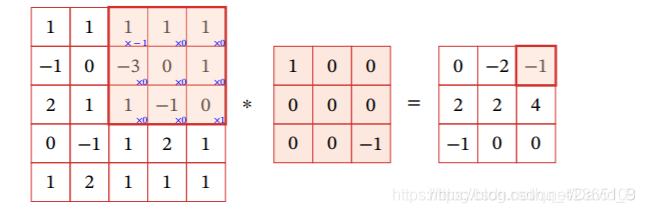

3.1.1.2 Two-Dimensional Convolution

The first matrix on the left is the input $X\in\mathbb{R}^{n\times d}$

The second one is the kernel $W\in\mathbb{R}^{K_1\times K_2}$

The rightmost matrix is the result $Y\in\mathbb{R}^{(n-K_1+1)\times(d-K_2+1)}$

This is written as $Y=W*X$

Formula:

$$Y_{ij}=\sum_{k_1=1}^{K_1}\sum_{k_2=1}^{K_2}W_{k_1,k_2}X_{i+k_1-1,\,j+k_2-1}\qquad (i=1,2,\dots,n-K_1+1,\ \ j=1,2,\dots,d-K_2+1)$$
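The same idea in two dimensions: a plain-Python sketch of the $Y_{ij}$ formula (0-based indices), checked on a made-up $4\times 3$ input and a $2\times 2$ kernel, which gives a $(4-2+1)\times(3-2+1)=3\times 2$ output.

```python
def conv2d(X, W):
    """Y_ij = sum_{k1, k2} W[k1][k2] * X[i + k1][j + k2], with 0-based indices."""
    n, d = len(X), len(X[0])
    K1, K2 = len(W), len(W[0])
    return [
        [sum(W[a][b] * X[i + a][j + b] for a in range(K1) for b in range(K2))
         for j in range(d - K2 + 1)]
        for i in range(n - K1 + 1)
    ]


X = [[1, 2, 3],
     [4, 5, 6],
     [7, 8, 9],
     [10, 11, 12]]
W = [[1, 0],
     [0, 1]]  # adds each element to its lower-right neighbour
Y = conv2d(X, W)
print(Y)  # [[6, 8], [12, 14], [18, 20]]
```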

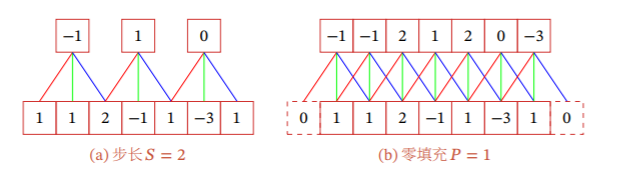

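3.1.1.3 Stride and Zero Padding

The examples above all use a stride of 1, but the stride can also be larger than 1

Note that the right figure applies zero padding, which lets the boundary elements of the input take part in more convolution windows and so preserves their features as much as possible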
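With stride $s$ and $p$ zeros added on each side, the output length becomes $\lfloor (n+2p-K)/s \rfloor + 1$, which reduces to $n-K+1$ for $s=1,p=0$. A plain-Python sketch extending the one-dimensional example (the function names are illustrative, not from any library):

```python
def output_length(n, K, stride=1, padding=0):
    return (n + 2 * padding - K) // stride + 1


def conv1d_general(x, w, stride=1, padding=0):
    """1-D convolution with zero padding on both ends and a configurable stride."""
    xp = [0] * padding + list(x) + [0] * padding
    K = len(w)
    return [sum(w[k] * xp[t + k] for k in range(K))
            for t in range(0, len(xp) - K + 1, stride)]


x = [1, 1, -1, 1, 1, 1, -1, 1, 1]
w = [1 / 3, 1 / 3, 1 / 3]
strided = conv1d_general(x, w, stride=2)  # windows start at 0, 2, 4, 6
padded = conv1d_general(x, w, padding=1)  # boundary elements now appear in more windows
print(len(strided), len(padded))  # 4 9
```

For instance, `output_length(n, 2, padding=1)` is $n+1$, which matches the `padding=(1, 0)` choice for the $2\times d$ kernel in `MY_CNN` below.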

3.1.1.4 Convolution Layer Design

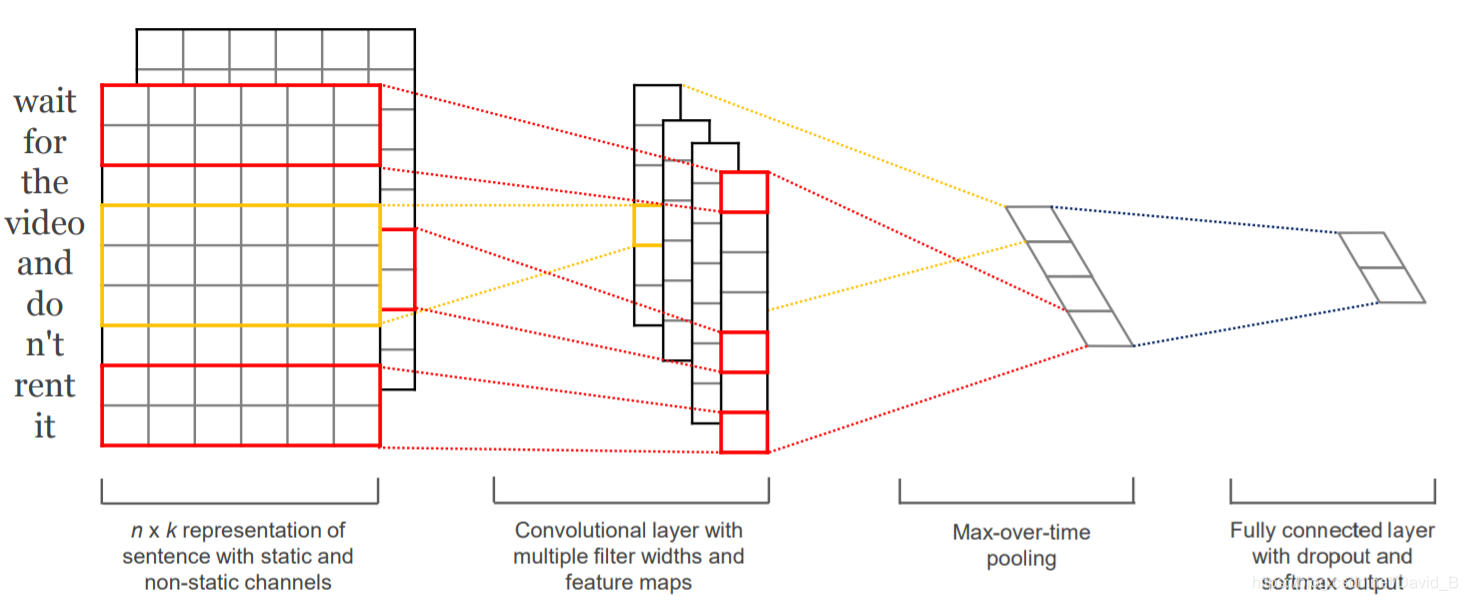

The design of the convolution layer follows the paper Convolutional Neural Networks for Sentence Classification

Notation: $n$ is the sentence length, $d$ is the word-vector length, and $X\in\mathbb{R}^{n\times d}$ is the feature matrix of the sentence

This task uses four kinds of kernels, of sizes $2\times d$, $3\times d$, $4\times d$ and $5\times d$

The $2\times d$ kernel is the red box in the figure above, and the $3\times d$ kernel is the yellow box

For example, the phrase "wait for" has a $2\times d$ feature matrix, and convolving it with a $2\times d$ kernel gives a single value

Convolving the feature matrix $X\in\mathbb{R}^{n\times d}$ with a kernel $W\in\mathbb{R}^{2\times d}$ gives $Y\in\mathbb{R}^{(n-2+1)\times (d-d+1)}=\mathbb{R}^{(n-1)\times 1}$

Four kernels are used to capture the features of phrases: the $2\times d$ kernel captures relations between two adjacent words, and the $5\times d$ kernel captures relations among five

Thus, for a sentence with feature matrix $X\in\mathbb{R}^{n\times d}$, convolving with the four kernels $W$ gives

$$Y_1\in\mathbb{R}^{(n-1)\times 1},\quad Y_2\in\mathbb{R}^{(n-2)\times 1},\quad Y_3\in\mathbb{R}^{(n-3)\times 1},\quad Y_4\in\mathbb{R}^{(n-4)\times 1}$$

The above describes a single channel. We can also use several channels: each channel performs the same operations, but with its own kernels, all of which are parameters to be learned. With $l$ channels we obtain $l$ groups of $(Y_1,Y_2,Y_3,Y_4)$
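A plain-Python sketch of this layer's shapes, with made-up sizes $n=7$, $d=5$, $l=3$ and random values: each channel owns one kernel of each height 2 to 5 spanning the full width $d$, so a kernel of height $K$ yields $n-K+1$ values, and $l$ channels give $l$ groups $(Y_1,Y_2,Y_3,Y_4)$ from $4l$ kernels in total. Biases are omitted for brevity.

```python
import random


def conv_full_width(X, W):
    """Slide a K x d kernel down an n x d matrix; returns a column of n - K + 1 values."""
    K, d = len(W), len(X[0])
    return [sum(W[a][b] * X[i + a][b] for a in range(K) for b in range(d))
            for i in range(len(X) - K + 1)]


rng = random.Random(0)
n, d, l = 7, 5, 3  # hypothetical sentence length, word-vector length, channel count
X = [[rng.uniform(-1, 1) for _ in range(d)] for _ in range(n)]
# kernels[c][i] is the (i + 2) x d kernel of channel c
kernels = [[[[rng.uniform(-1, 1) for _ in range(d)] for _ in range(K)] for K in (2, 3, 4, 5)]
           for _ in range(l)]
# Y[c] = (Y_1, Y_2, Y_3, Y_4) for channel c
Y = [[conv_full_width(X, W) for W in channel] for channel in kernels]
print([len(v) for v in Y[0]])  # [6, 5, 4, 3], i.e. n-1, n-2, n-3, n-4
```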

3.1.2 Activation Layer

This task uses the $ReLU$ function: $ReLU(x)=\max(x,0)$

3.1.3 Pooling Layer

There are two common pooling methods, max pooling and average pooling; see the book Neural Networks and Deep Learning (神经网络与深度学习) for details

This task uses max pooling. For each of the $l$ groups $(Y_1,Y_2,Y_3,Y_4)$ and each vector $Y_i^{(l)}\in\mathbb{R}^{(n-i)\times 1}$ in it, we take its largest element (where $Y_i^{(l)}(j)$ denotes the $j$-th element):

$$y_i^{(l)}=\max_j Y_i^{(l)}(j)$$

After max pooling we obtain $l$ groups of $(y_1,y_2,y_3,y_4)$

Concatenating them grouped by kernel size gives a vector of length $4l$:

$$Y=(y_1^{1},\dots,y_1^{l},y_2^{1},\dots,y_2^{l},y_3^{1},\dots,y_3^{l},y_4^{1},\dots,y_4^{l})$$
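Activation, max pooling and concatenation in plain Python, on two hypothetical channels of a sentence with $n=5$ (so $Y_1,\dots,Y_4$ have 4, 3, 2 and 1 entries). The output is ordered like $Y$ above: all channels of $y_1$ first, then $y_2$, and so on.

```python
def relu(v):
    return [max(a, 0.0) for a in v]


def pool_and_concat(groups):
    """groups[c][i] is Y_{i+1} of channel c; returns (y_1^1..y_1^l, ..., y_4^1..y_4^l)."""
    l = len(groups)
    return [max(relu(groups[c][i])) for i in range(4) for c in range(l)]


groups = [
    # channel 1: Y_1, Y_2, Y_3, Y_4
    [[1.0, -2.0, 3.0, 0.0], [0.5, -1.0, 2.0], [-4.0, 2.0], [-1.0]],
    # channel 2
    [[-1.0, -2.0, -3.0, -4.0], [5.0, 0.0, 1.0], [0.0, 0.5], [7.0]],
]
Y = pool_and_concat(groups)
print(Y)  # [3.0, 0.0, 2.0, 5.0, 2.0, 0.5, 0.0, 7.0]
```

Because max pooling keeps one value per vector regardless of its length, sentences of any length end up as a vector of the same size $4l$.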

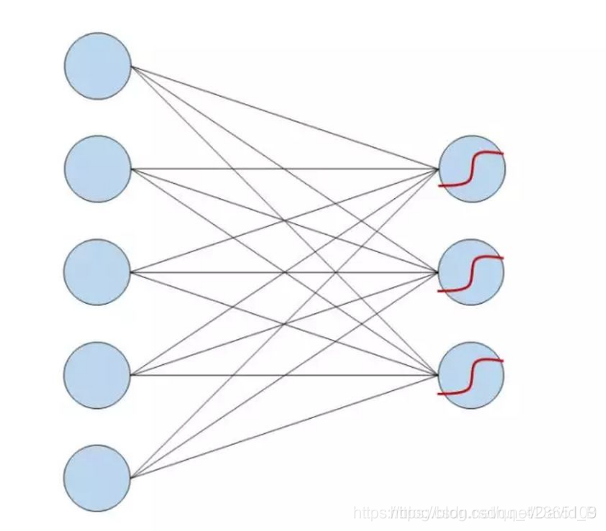

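3.1.4 Fully Connected Layer

The neurons on the left are the vector of length $4l$ obtained after max pooling

Our goal, however, is to output the sentiment class of a sentence, so the output should be the probabilities of the five sentiment classes, e.g.

$$p=(0,0,0.7,0.25,0.05)^T$$

The fully connected layer therefore turns the vector of length $4l$ into a vector of length 5

The simplest transformation is the linear map $p=AY+b$, where $A\in\mathbb{R}^{5\times 4l}$, $Y\in\mathbb{R}^{4l\times 1}$ and $b\in\mathbb{R}^{5\times 1}$. Strictly speaking, $AY+b$ gives unnormalized scores; a softmax turns them into probabilities, and in the code below this step is folded into the cross-entropy loss

So $A$ and $b$ are the parameters to be learned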
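A plain-Python sketch of the fully connected layer followed by a softmax that turns the five scores $AY+b$ into probabilities. $A$ and $b$ are made-up values here (in the network they are learned), and $Y$ is an arbitrary pooled vector with $l=2$.

```python
import math


def linear(A, Y, b):
    """Scores z = A Y + b."""
    return [sum(a * y for a, y in zip(row, Y)) + bi for row, bi in zip(A, b)]


def softmax(z):
    m = max(z)  # subtract the maximum for numerical stability
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]


Y = [3.0, 0.0, 2.0, 5.0, 2.0, 0.5, 0.0, 7.0]  # length 4l with l = 2
A = [[0.1 * (r - c) for c in range(len(Y))] for r in range(5)]  # hypothetical 5 x 4l weights
b = [0.0, 0.1, 0.2, 0.1, 0.0]  # hypothetical bias
scores = linear(A, Y, b)
p = softmax(scores)
predicted_class = max(range(5), key=lambda c: p[c])
```

Since softmax preserves the order of the scores, the predicted class can be read off the raw scores directly; this is why the models below return $AY+b$ and leave the softmax to the loss function.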

3.1.5 Summary

- Convolution layer: feature matrix $X\Rightarrow$ $l$ groups of $(Y_1,Y_2,Y_3,Y_4)$; network parameters: $4l$ kernels $W$

- Activation layer: $l$ groups of $(Y_1,Y_2,Y_3,Y_4)\Rightarrow$ $l$ groups of $(Y_1,Y_2,Y_3,Y_4)$; no parameters

- Pooling layer: $l$ groups of $(Y_1,Y_2,Y_3,Y_4)\Rightarrow$ $l$ groups of $(y_1,y_2,y_3,y_4)$; no parameters

- Fully connected layer: $Y\Rightarrow p$; network parameters: $A,b$

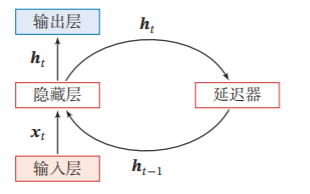

3.2 Recurrent Neural Networks (RNN)

3.2.1 Hidden Layer

The input is the feature matrix $X$. Using the word vectors from before:

(ID:1) I: $[+0.10,+0.20,+0.30,+0.50,+1.50]$

(ID:2) love: $[-1.00,-2.20,+3.40,+1.00,+0.00]$

(ID:3) you: $[-3.12,-1.14,+5.14,+1.60,+7.00]$

"I love you" is represented as:

$$X=\begin{bmatrix} +0.10 & +0.20 & +0.30 & +0.50 & +1.50\\ -1.00 & -2.20 & +3.40 & +1.00 & +0.00\\ -3.12 & -1.14 & +5.14 & +1.60 & +7.00 \end{bmatrix}=[x_1,x_2,x_3]^T$$

In the CNN above we operated on the matrix $X$ directly, whereas in the RNN we process the $x_i$ one at a time:

- Initialize $h_0\in\mathbb{R}^{l_h}$

- For $t=1,2,\dots,n$, compute the following two formulas:

  - $z_t=Uh_{t-1}+Wx_t+b_h$, where $U\in\mathbb{R}^{l_h\times l_h}$, $W\in\mathbb{R}^{l_h\times d}$ and $z_t,b_h\in\mathbb{R}^{l_h}$

  - $h_t=f(z_t)$, where $f(\cdot)$ is the activation function; this task uses $\tanh$, $\tanh(x)=\frac{\exp(x)-\exp(-x)}{\exp(x)+\exp(-x)}$

- Finally we obtain $h_n$

This layer feeds the sequence $\{x_i\}_{i=1}^n$ into the hidden layer one element at a time; each output also takes part in the next step of the recurrence, which gives the network a short-term memory
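The recurrence fits in a few lines of plain Python. This sketch uses the notation above (bias named `b_h`, as in the summary) and a tiny hand-checkable case with made-up parameters ($l_h=d=1$): the second input is zero, yet $h_2$ is not, because $h_1$ is carried forward. That is the "short-term memory" in action.

```python
import math


def matvec(M, v):
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def rnn_forward(X, U, W, b_h, h0):
    """h_t = tanh(U h_{t-1} + W x_t + b_h) for t = 1..n; returns h_n."""
    h = h0
    for x_t in X:  # process the word vectors one at a time
        z = [u + w + b for u, w, b in zip(matvec(U, h), matvec(W, x_t), b_h)]
        h = [math.tanh(v) for v in z]
    return h


X = [[1.0], [0.0]]  # two time steps, d = 1
U, W, b_h = [[0.5]], [[1.0]], [0.0]  # hypothetical parameters, l_h = 1
h_n = rnn_forward(X, U, W, b_h, h0=[0.0])
print(h_n)  # equals tanh(0.5 * tanh(1.0))
```

The same parameters $U$, $W$, $b_h$ are reused at every time step, so the number of parameters does not depend on the sentence length.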

3.2.2 Activation Layer

Same as for the CNN

3.2.3 Fully Connected Layer

Similar to the fully connected layer of the CNN

3.2.4 Summary

- Hidden layer: feature matrix $X=[x_1,x_2,x_3]^T\Rightarrow h_n$; network parameters: $W,U,b_h$

- Fully connected layer: $h_n\Rightarrow p$; network parameters: $A,b_l$

3.3 Training the Neural Network

3.3.1 Network Parameters

First, recall the parameters of the two models:

- CNN: $4l$ kernels $W$, plus $A,b$

- RNN: $W,U,b_h,A,b_l$

For either network, denote its parameters by $\theta$:

$$\text{sentence } x\ \xrightarrow{\text{Word embedding}}\ \text{feature matrix } X\ \xrightarrow{\text{Neural Network}}\ \text{class-probability vector } p$$

3.3.2 Loss Function

This task uses the cross-entropy loss

Given a neural network $NN$, the loss of each sample $n$ is:

$$L(NN_{\theta}(x^{(n)}),y^{(n)})=-\sum_{c=1}^{C}y_c^{(n)}\log p_c^{(n)}=-(y^{(n)})^T\log p^{(n)}$$

where $y^{(n)}\in\{0,1\}^C$ is the one-hot vector of the true label, i.e. $y_c^{(n)}=1$ if sample $n$ belongs to class $c$ and $0$ otherwise ($C=5$ here)

For $N$ samples, the total loss is the average of the per-sample losses:

$$L(\theta)=L(NN_{\theta}(x),y)=\frac{1}{N}\sum_{n=1}^{N}L(NN_{\theta}(x^{(n)}),y^{(n)})$$

The optimal parameters $\theta$ are found by minimizing this loss function
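A plain-Python sketch of the per-sample loss and its average, using the example $p=(0,0,0.7,0.25,0.05)^T$ from the fully connected section: only the true class contributes, so for label 2 the loss is $-\log 0.7$. Class indices are 0-based here (labels 0 to 4), whereas the formula counts $c$ from 1. Note that PyTorch's `F.cross_entropy`, used in the code below, expects raw scores and applies log-softmax internally.

```python
import math


def cross_entropy(p, label):
    """-(y^T log p), where y is the one-hot vector of `label`."""
    y = [1.0 if c == label else 0.0 for c in range(len(p))]
    # only the true class has y_c = 1; skipping y_c = 0 also avoids log(0)
    return -sum(yc * math.log(pc) for yc, pc in zip(y, p) if yc > 0)


def mean_loss(probs, labels):
    """L(theta): average of the per-sample losses over N samples."""
    return sum(cross_entropy(p, y) for p, y in zip(probs, labels)) / len(labels)


p = [0.0, 0.0, 0.7, 0.25, 0.05]
print(cross_entropy(p, 2))  # -log 0.7, about 0.357
print(mean_loss([p, p], [2, 3]))  # (-log 0.7 - log 0.25) / 2
```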

3.3.3 Solving for the Optimal Parameters: Gradient Descent

Formula: $\theta_{t+1}\leftarrow\theta_t-\alpha\frac{\partial L(\theta_t)}{\partial \theta_t}$, where $\alpha$ is the learning rate (the code below uses Adam, an adaptive variant of gradient descent)
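A minimal sketch of the update rule in plain Python. To keep the gradient in closed form, the parameters $\theta$ here are just the five class scores of a single sample (a hypothetical setup, not the CNN or RNN); for a softmax followed by cross-entropy, the gradient with respect to the scores is $p-y$. The learning rate 0.5 suits this toy problem and is unrelated to the $10^{-3}$ used in the experiments.

```python
import math


def softmax(z):
    m = max(z)
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]


def loss_and_grad(theta, label):
    """Cross-entropy of softmax(theta) and its gradient dL/dtheta = p - y."""
    p = softmax(theta)
    loss = -math.log(p[label])
    grad = [pc - (1.0 if c == label else 0.0) for c, pc in enumerate(p)]
    return loss, grad


theta = [0.0] * 5  # hypothetical parameters: one score per class
alpha = 0.5        # learning rate for this toy example
losses = []
for t in range(20):
    loss, grad = loss_and_grad(theta, label=2)
    losses.append(loss)
    # theta_{t+1} <- theta_t - alpha * dL/dtheta_t
    theta = [th - alpha * g for th, g in zip(theta, grad)]
print(losses[0], losses[-1])
```

In the real networks the same rule is applied to every weight, with the gradients computed by back-propagation (`loss.backward()` in the code below).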

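4. Code Implementation

4.1 Experimental Setup

- Number of samples: about 150,000

- Train/test split: 7:3

- Models: CNN, RNN

- Initialization: random initialization, GloVe pre-trained initialization

- Learning rate: $10^{-3}$

- $l_h$, $d$: 50

- $l$: number of words in the longest sentence

- Batch size: 500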

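4.2 Code

If you do not want GPU acceleration, just delete every .cuda() and .cpu() call in comparison_batch.py and Neural_Network_batch.py. The .item() calls in comparison_plot_batch.py also work on CPU tensors, so they can stay.

Data processing

- Sentences in a batch can differ in length, so they need zero padding to bring every input (sentence) in the batch to the same length. If the lengths differ a lot (one sentence has 3 words, another has 50), many meaningless zeros have to be added, which hurts performance. So in this task the data is first sorted by sentence length, so that sentences within a batch have nearly the same length and little padding is needed

- A Dropout layer is added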
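Both data-processing points can be illustrated in plain Python. The first part counts how many padding zeros are needed when consecutive sentences are grouped into batches, with and without sorting by length (the lengths are random, hypothetical values). The second part is a sketch of inverted dropout, the variant `nn.Dropout` implements: during training each element is zeroed with probability $p$ and the survivors are scaled by $1/(1-p)$, while in evaluation mode the input passes through unchanged. The helper names are illustrative.

```python
import random


def padding_count(lengths, batch_size):
    """Zeros needed when every batch is padded to its longest sentence."""
    total = 0
    for start in range(0, len(lengths), batch_size):
        batch = lengths[start:start + batch_size]
        total += sum(max(batch) - n for n in batch)
    return total


def dropout(x, p=0.5, training=True, rng=random):
    """Inverted dropout: zero with probability p, scale survivors by 1 / (1 - p)."""
    if not training or p == 0.0:
        return list(x)  # evaluation mode: identity
    keep = 1.0 - p
    return [v / keep if rng.random() < keep else 0.0 for v in x]


rng = random.Random(2021)
lengths = [rng.randint(1, 50) for _ in range(1000)]  # hypothetical sentence lengths
print(padding_count(lengths, 50), padding_count(sorted(lengths), 50))

x = [1.0, 2.0, 3.0, 4.0]
print(dropout(x, training=False))  # unchanged
print(dropout(x, p=0.5, rng=random.Random(0)))  # entries are 0.0 or doubled
```

Scaling the survivors keeps the expected value of each element the same in training and evaluation, which is why `model.train()` and `model.eval()` are toggled in the training loop below.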

Code

```python
# Feature extraction: feature_batch
import random

import torch
from torch.utils.data import Dataset, DataLoader
from torch.nn.utils.rnn import pad_sequence


def data_split(data, test_rate=0.3):
    # randomly assign each sample to the training or the test set
    train = list()
    test = list()
    for sentence in data:
        if random.random() > test_rate:
            train.append(sentence)
        else:
            test.append(sentence)
    return train, test


class Random_embedding():
    # random initialization
    def __init__(self, data, test_rate=0.3):
        self.dict_words = dict()  # mapping from word to ID
        data.sort(key=lambda x: len(x[2].split()))  # sort by sentence length, shortest first
        self.data = data
        self.len_words = 0
        self.train, self.test = data_split(data, test_rate=test_rate)
        self.train_y = [int(term[3]) for term in self.train]
        self.test_y = [int(term[3]) for term in self.test]
        self.train_matrix = list()  # word-ID lists of the training set, stacked into a matrix
        self.test_matrix = list()  # word-ID lists of the test set, stacked into a matrix
        self.longest = 0  # number of words in the longest sentence

    def get_words(self):
        for term in self.data:
            words = term[2].upper().split()  # take the sentence, upper-case it, split into words
            for word in words:
                if word not in self.dict_words:
                    # ID 0 is reserved for padding, so real words start at 1
                    self.dict_words[word] = len(self.dict_words) + 1
        self.len_words = len(self.dict_words)  # number of words

    def get_id(self):
        # training set
        for term in self.train:
            words = term[2].upper().split()
            item = [self.dict_words[word] for word in words]  # ID list (not yet padded)
            self.longest = max(self.longest, len(item))  # track the longest sentence
            self.train_matrix.append(item)
        # test set
        for term in self.test:
            words = term[2].upper().split()
            item = [self.dict_words[word] for word in words]
            self.longest = max(self.longest, len(item))
            self.test_matrix.append(item)
        self.len_words += 1  # number of words, including the padding ID 0


class Glove_embedding():
    def __init__(self, data, trained_dict, test_rate=0.3):
        self.dict_words = dict()  # mapping from word to ID
        self.trained_dict = trained_dict  # pre-trained word vectors
        data.sort(key=lambda x: len(x[2].split()))
        self.data = data
        self.len_words = 0  # number of words (including the padding ID 0)
        self.train, self.test = data_split(data, test_rate=test_rate)
        self.train_y = [int(term[3]) for term in self.train]
        self.test_y = [int(term[3]) for term in self.test]
        self.train_matrix = list()  # word-ID lists of the training set, stacked into a matrix
        self.test_matrix = list()  # word-ID lists of the test set, stacked into a matrix
        self.longest = 0
        self.embedding = list()  # (pre-trained) vectors of the words actually used

    def get_words(self):
        self.embedding.append([0] * 50)  # vector of the padding ID 0
        for term in self.data:
            words = term[2].upper().split()  # take the sentence, upper-case it, split into words
            for word in words:
                if word not in self.dict_words:
                    self.dict_words[word] = len(self.dict_words) + 1  # padding is ID 0
                    if word in self.trained_dict:  # word found in GloVe: use its vector
                        self.embedding.append(self.trained_dict[word])
                    else:  # word not in GloVe: initialize its vector to zeros
                        self.embedding.append([0] * 50)
        self.len_words = len(self.dict_words)  # number of words (excluding the padding ID 0)

    def get_id(self):
        # training set
        for term in self.train:
            words = term[2].upper().split()
            item = [self.dict_words[word] for word in words]  # ID list
            self.longest = max(self.longest, len(item))  # track the longest sentence
            self.train_matrix.append(item)
        # test set
        for term in self.test:
            words = term[2].upper().split()
            item = [self.dict_words[word] for word in words]
            self.longest = max(self.longest, len(item))
            self.test_matrix.append(item)
        self.len_words += 1  # number of words (including the padding ID 0)


class ClsDataset(Dataset):
    # custom dataset structure
    def __init__(self, sentence, emotion):
        self.sentence = sentence  # sentences (word-ID lists)
        self.emotion = emotion  # sentiment labels

    def __getitem__(self, item):
        return self.sentence[item], self.emotion[item]

    def __len__(self):
        return len(self.emotion)


def collate_fn(batch_data):
    # how a batch is assembled from the dataset, including padding
    sentence, emotion = zip(*batch_data)
    sentences = [torch.LongTensor(sent) for sent in sentence]  # each sentence becomes a LongTensor
    padded_sents = pad_sequence(sentences, batch_first=True, padding_value=0)  # pad with ID 0
    return padded_sents, torch.LongTensor(emotion)


def get_batch(x, y, batch_size):
    # split into batches with a DataLoader
    dataset = ClsDataset(x, y)
    # shuffle=False keeps the length-sorted order; drop_last discards the last incomplete batch
    dataloader = DataLoader(dataset, batch_size=batch_size, shuffle=False, drop_last=True,
                            collate_fn=collate_fn)
    return dataloader
```

```python
# Neural networks: Neural_network_batch
import torch
import torch.nn as nn
import torch.nn.functional as F


class MY_RNN(nn.Module):
    def __init__(self, len_feature, len_hidden, len_words, typenum=5, weight=None, layer=1,
                 nonlinearity='tanh', batch_first=True, drop_out=0.5):
        super(MY_RNN, self).__init__()
        self.len_feature = len_feature  # d
        self.len_hidden = len_hidden  # l_h
        self.len_words = len_words  # number of words (including padding)
        self.layer = layer  # number of hidden layers
        self.dropout = nn.Dropout(drop_out)  # dropout layer
        if weight is None:  # random initialization
            # Xavier (normal) initialization: values follow a normal distribution scaled so that
            # the input and output of a layer have about the same variance
            x = nn.init.xavier_normal_(torch.Tensor(len_words, len_feature))
            self.embedding = nn.Embedding(num_embeddings=len_words, embedding_dim=len_feature, _weight=x).cuda()
        else:  # GloVe initialization
            self.embedding = nn.Embedding(num_embeddings=len_words, embedding_dim=len_feature, _weight=weight).cuda()
        # hidden layer built with nn.RNN (its dropout only acts between stacked layers,
        # so with layer=1 it has no effect and PyTorch prints a warning)
        self.rnn = nn.RNN(input_size=len_feature, hidden_size=len_hidden, num_layers=layer,
                          nonlinearity=nonlinearity, batch_first=batch_first, dropout=drop_out).cuda()
        # fully connected layer
        self.fc = nn.Linear(len_hidden, typenum).cuda()
        # a softmax layer is redundant: F.cross_entropy applies log-softmax itself
        # self.act = nn.Softmax(dim=1)

    def forward(self, x):
        # x: word IDs, shape [batch_size, len(sentence)]
        x = x.long().cuda()
        batch_size = x.size(0)
        # after the word embedding: [batch_size, len(sentence), d]
        out_put = self.embedding(x)
        out_put = self.dropout(out_put)  # dropout layer (shape unchanged)
        # another way to initialize h_0:
        # h0 = torch.randn(self.layer, batch_size, self.len_hidden).cuda()
        # initialize h_0 as a zero vector
        h0 = torch.zeros(self.layer, batch_size, self.len_hidden).cuda()
        # hidden layer; h_n has shape [layer, batch_size, l_h]
        _, hn = self.rnn(out_put, h0)
        # fully connected layer on the last layer's h_n: [batch_size, 5]
        out_put = self.fc(hn[-1])
        # out_put = self.act(out_put)  # softmax layer not needed
        return out_put


class MY_CNN(nn.Module):
    def __init__(self, len_feature, len_words, longest, typenum=5, weight=None, drop_out=0.5):
        super(MY_CNN, self).__init__()
        self.len_feature = len_feature  # d
        self.len_words = len_words  # number of words
        self.longest = longest  # words in the longest sentence, also used as the channel count l
        self.dropout = nn.Dropout(drop_out)  # dropout layer
        if weight is None:  # random initialization
            x = nn.init.xavier_normal_(torch.Tensor(len_words, len_feature))
            self.embedding = nn.Embedding(num_embeddings=len_words, embedding_dim=len_feature, _weight=x).cuda()
        else:  # GloVe initialization
            self.embedding = nn.Embedding(num_embeddings=len_words, embedding_dim=len_feature, _weight=weight).cuda()
        # Conv2d arguments: (input channels: 1, output channels: l, kernel size: (rows, columns))
        # padding adds zero rows above and below the sentence:
        # if X is 1*50, a 2*50 kernel cannot be applied, so padding=(1, 0) turns X into 3*50
        self.conv1 = nn.Sequential(nn.Conv2d(1, longest, (2, len_feature), padding=(1, 0)), nn.ReLU()).cuda()  # kernel 1 + activation
        self.conv2 = nn.Sequential(nn.Conv2d(1, longest, (3, len_feature), padding=(1, 0)), nn.ReLU()).cuda()  # kernel 2 + activation
        self.conv3 = nn.Sequential(nn.Conv2d(1, longest, (4, len_feature), padding=(2, 0)), nn.ReLU()).cuda()  # kernel 3 + activation
        self.conv4 = nn.Sequential(nn.Conv2d(1, longest, (5, len_feature), padding=(2, 0)), nn.ReLU()).cuda()  # kernel 4 + activation
        # fully connected layer
        self.fc = nn.Linear(4 * longest, typenum).cuda()
        # softmax layer (not needed, see MY_RNN)
        # self.act = nn.Softmax(dim=1)

    def forward(self, x):
        # x: word IDs, shape [batch_size, sentence length]
        x = x.long().cuda()
        # after the word embedding: [batch_size, 1, sentence length, d]
        out_put = self.embedding(x).view(x.shape[0], 1, x.shape[1], self.len_feature)
        # dropout keeps the shape; call the result X
        out_put = self.dropout(out_put)
        # X after the 2*d kernel: [batch_size, l, length+2-1, 1]; squeeze dim 3 -> Y_1
        # (the +2 comes from the two padded rows)
        conv1 = self.conv1(out_put).squeeze(3)
        # X after the 3*d kernel: [batch_size, l, length+2-2, 1]; squeeze dim 3 -> Y_2
        conv2 = self.conv2(out_put).squeeze(3)
        # X after the 4*d kernel: [batch_size, l, length+4-3, 1]; squeeze dim 3 -> Y_3
        conv3 = self.conv3(out_put).squeeze(3)
        # X after the 5*d kernel: [batch_size, l, length+4-4, 1]; squeeze dim 3 -> Y_4
        conv4 = self.conv4(out_put).squeeze(3)
        # max-pool each of Y_1..Y_4 over the sentence dimension (dim 2),
        # giving four tensors of shape [batch_size, l, 1]
        pool1 = F.max_pool1d(conv1, conv1.shape[2])
        pool2 = F.max_pool1d(conv2, conv2.shape[2])
        pool3 = F.max_pool1d(conv3, conv3.shape[2])
        pool4 = F.max_pool1d(conv4, conv4.shape[2])
        # concatenate to [batch_size, 4l, 1], then squeeze dim 2 -> [batch_size, 4l]
        pool = torch.cat([pool1, pool2, pool3, pool4], 1).squeeze(2)
        # fully connected layer: [batch_size, 5]
        out_put = self.fc(pool)
        # out_put = self.act(out_put)  # softmax layer not needed
        return out_put
```

```python
# Results & plots: comparison_plot_batch.py
# (relies on get_batch, MY_RNN and MY_CNN defined above)
import matplotlib.pyplot as plt
import torch
import torch.nn.functional as F
from torch import optim


def NN_embedding(model, train, test, learning_rate, iter_times):
    # optimizer (solves for the parameters)
    optimizer = optim.Adam(model.parameters(), lr=learning_rate)
    # loss function
    loss_fun = F.cross_entropy
    # loss records
    train_loss_record = list()
    test_loss_record = list()
    long_loss_record = list()
    # accuracy records
    train_record = list()
    test_record = list()
    long_record = list()
    # torch.autograd.set_detect_anomaly(True)
    for iteration in range(iter_times):
        # training phase
        model.train()  # training mode (dropout on)
        for i, batch in enumerate(train):
            x, y = batch  # take one batch
            y = y.cuda()
            pred = model(x)  # forward pass
            optimizer.zero_grad()  # reset the gradients
            loss = loss_fun(pred, y)  # compute the loss
            loss.backward()  # back-propagate the gradients
            optimizer.step()  # update the parameters
        model.eval()  # evaluation mode (dropout off)
        # accuracy of this epoch
        train_acc = list()
        test_acc = list()
        long_acc = list()
        length = 20  # a batch counts as "long" if its padded sentence length exceeds this
        # loss of this epoch
        train_loss = 0
        test_loss = 0
        long_loss = 0
        with torch.no_grad():  # evaluation needs no gradients
            for i, batch in enumerate(train):
                x, y = batch
                y = y.cuda()
                pred = model(x)
                loss = loss_fun(pred, y)
                train_loss += loss.item()  # accumulate the loss
                _, y_pre = torch.max(pred, -1)
                acc = (y_pre == y).float().mean()  # accuracy of this batch
                train_acc.append(acc)
            for i, batch in enumerate(test):
                x, y = batch
                y = y.cuda()
                pred = model(x)
                loss = loss_fun(pred, y)
                test_loss += loss.item()
                _, y_pre = torch.max(pred, -1)
                acc = (y_pre == y).float().mean()
                test_acc.append(acc)
                if len(x[0]) > length:  # long-sentence batch
                    long_acc.append(acc)
                    long_loss += loss.item()
        trains_acc = float(sum(train_acc)) / len(train_acc)
        tests_acc = float(sum(test_acc)) / len(test_acc)
        longs_acc = float(sum(long_acc)) / max(len(long_acc), 1)  # guard against no long batches
        train_loss_record.append(train_loss / len(train_acc))
        test_loss_record.append(test_loss / len(test_acc))
        long_loss_record.append(long_loss / max(len(long_acc), 1))
        train_record.append(trains_acc)
        test_record.append(tests_acc)
        long_record.append(longs_acc)
        print('----------- Iteration', iteration + 1, '-----------')
        print('Train loss:', train_loss / len(train_acc))
        print('Test loss:', test_loss / len(test_acc))
        print('Train accuracy:', trains_acc)
        print('Test accuracy:', tests_acc)
        print('Long sentence accuracy:', longs_acc)
    return train_loss_record, test_loss_record, long_loss_record, train_record, test_record, long_record


def plot_panel(position, x, records, title, ylabel, ylim=None):
    # one subplot with the four model/initialization combinations
    plt.subplot(*position)
    styles = [('r--', 'RNN+random'), ('g--', 'CNN+random'), ('b--', 'RNN+glove'), ('y--', 'CNN+glove')]
    for values, (style, label) in zip(records, styles):
        plt.plot(x, values, style, label=label)
    plt.legend(fontsize=10)
    plt.title(title)
    plt.xlabel('Iterations')
    plt.ylabel(ylabel)
    if ylim is not None:
        plt.ylim(*ylim)


def NN_embedding_plot(random_embedding, glove_embedding, learning_rate, batch_size, iter_times):
    # batches of the training and test sets
    train_random = get_batch(random_embedding.train_matrix, random_embedding.train_y, batch_size)
    test_random = get_batch(random_embedding.test_matrix, random_embedding.test_y, batch_size)
    train_glove = get_batch(glove_embedding.train_matrix, glove_embedding.train_y, batch_size)
    test_glove = get_batch(glove_embedding.test_matrix, glove_embedding.test_y, batch_size)

    def set_seed(seed=2021):
        torch.manual_seed(seed)
        torch.cuda.manual_seed(seed)

    # build the models (each GloVe model gets its own copy of the weight tensor)
    set_seed()
    random_rnn = MY_RNN(50, 50, random_embedding.len_words)
    set_seed()
    random_cnn = MY_CNN(50, random_embedding.len_words, random_embedding.longest)
    set_seed()
    glove_rnn = MY_RNN(50, 50, glove_embedding.len_words,
                       weight=torch.tensor(glove_embedding.embedding, dtype=torch.float))
    set_seed()
    glove_cnn = MY_CNN(50, glove_embedding.len_words, glove_embedding.longest,
                       weight=torch.tensor(glove_embedding.embedding, dtype=torch.float))
    # rnn + random
    set_seed()
    trl_ran_rnn, tel_ran_rnn, lol_ran_rnn, tra_ran_rnn, tes_ran_rnn, lon_ran_rnn = \
        NN_embedding(random_rnn, train_random, test_random, learning_rate, iter_times)
    # cnn + random
    set_seed()
    trl_ran_cnn, tel_ran_cnn, lol_ran_cnn, tra_ran_cnn, tes_ran_cnn, lon_ran_cnn = \
        NN_embedding(random_cnn, train_random, test_random, learning_rate, iter_times)
    # rnn + glove
    set_seed()
    trl_glo_rnn, tel_glo_rnn, lol_glo_rnn, tra_glo_rnn, tes_glo_rnn, lon_glo_rnn = \
        NN_embedding(glove_rnn, train_glove, test_glove, learning_rate, iter_times)
    # cnn + glove
    set_seed()
    trl_glo_cnn, tel_glo_cnn, lol_glo_cnn, tra_glo_cnn, tes_glo_cnn, lon_glo_cnn = \
        NN_embedding(glove_cnn, train_glove, test_glove, learning_rate, iter_times)
    # plots
    x = list(range(1, iter_times + 1))
    plot_panel((2, 2, 1), x, [trl_ran_rnn, trl_ran_cnn, trl_glo_rnn, trl_glo_cnn], 'Train Loss', 'Loss')
    plot_panel((2, 2, 2), x, [tel_ran_rnn, tel_ran_cnn, tel_glo_rnn, tel_glo_cnn], 'Test Loss', 'Loss')
    plot_panel((2, 2, 3), x, [tra_ran_rnn, tra_ran_cnn, tra_glo_rnn, tra_glo_cnn],
               'Train Accuracy', 'Accuracy', (0, 1))
    plot_panel((2, 2, 4), x, [tes_ran_rnn, tes_ran_cnn, tes_glo_rnn, tes_glo_cnn],
               'Test Accuracy', 'Accuracy', (0, 1))
    plt.tight_layout()
    fig = plt.gcf()
    fig.set_size_inches(8, 8, forward=True)
    plt.savefig('main_plot.jpg')
    plt.show()
    plot_panel((2, 1, 1), x, [lon_ran_rnn, lon_ran_cnn, lon_glo_rnn, lon_glo_cnn],
               'Long Sentence Accuracy', 'Accuracy', (0, 1))
    plot_panel((2, 1, 2), x, [lol_ran_rnn, lol_ran_cnn, lol_glo_rnn, lol_glo_cnn],
               'Long Sentence Loss', 'Loss')
    plt.tight_layout()
    fig = plt.gcf()
    fig.set_size_inches(8, 8, forward=True)
    plt.savefig('sub_plot.jpg')
    plt.show()
```

```python
import csv
import random

import torch

with open('/kaggle/input/task2train/train.tsv') as f:
    tsvreader = csv.reader(f, delimiter='\t')
    temp = list(tsvreader)

with open('/kaggle/input/glove6b50dtxt/glove.6B.50d.txt', 'rb') as f:
    lines = f.readlines()

# build a dictionary from GloVe
trained_dict = dict()
for raw_line in lines:
    line = raw_line.split()  # split the word from its parameters
    # the first element of each line is the word, followed by its 50 parameters
    trained_dict[line[0].decode('utf-8').upper()] = [float(line[j]) for j in range(1, 51)]

# settings
iter_times = 50
alpha = 0.001

# start
data = temp[1:]  # skip the header row
batch_size = 500

# random initialization
random.seed(2021)
random_embedding = Random_embedding(data=data)
random_embedding.get_words()  # collect all words and assign IDs
random_embedding.get_id()  # convert every sentence into its word IDs

# pre-trained model initialization
random.seed(2021)
glove_embedding = Glove_embedding(data=data, trained_dict=trained_dict)
glove_embedding.get_words()  # collect all words and assign IDs
glove_embedding.get_id()  # convert every sentence into its word IDs

NN_embedding_plot(random_embedding, glove_embedding, alpha, batch_size, iter_times)
```

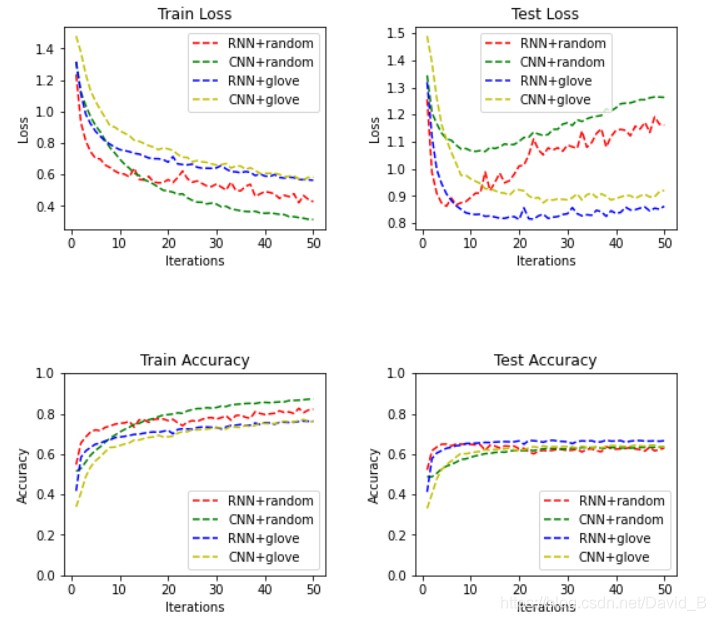

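4.3 Results

4.3.1 Overall Performance

The results show that the RNN reaches higher accuracy than the CNN on both the training set and the test set

GloVe initialization works somewhat better than random initialization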

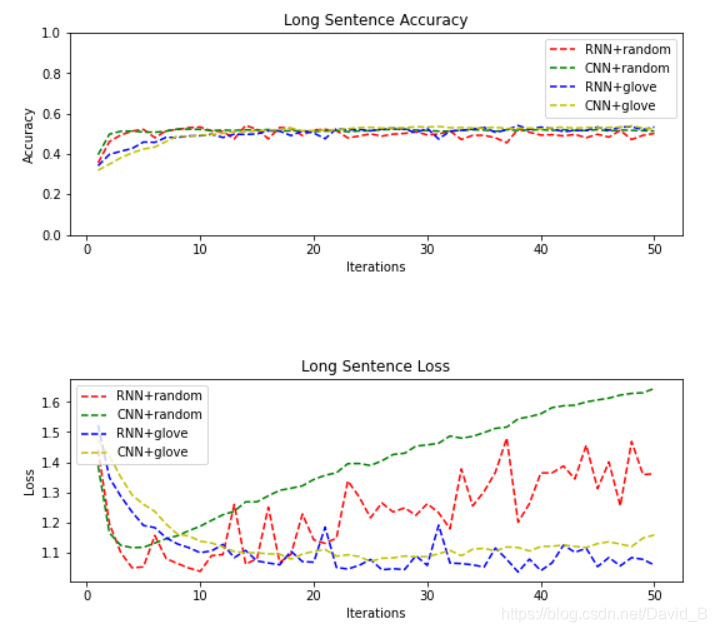

4.3.2 Performance on Long Sentences

For sentences with more than 20 words, the RNN and CNN results in this task differ little

Other notes

The code can be run on Kaggle's GPUs

GloVe data

Link: https://pan.baidu.com/s/1OU4v-vXdKaNRZmkB6nWH-Q

Extraction code: gcmz