This example is implemented in a Jupyter notebook.

Dataset source

https://www.kaggle.com/biaiscience/dogs-vs-cats

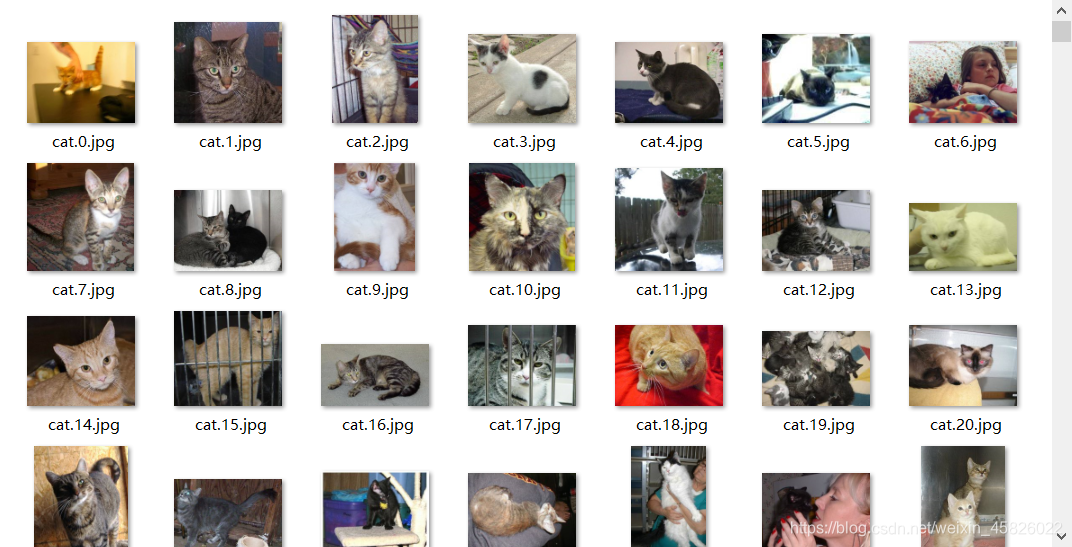

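Inspecting the dataset

The dataset is split into two folders, test and train. The images in the test folder have no labels, so we use only the images in the train folder. As the sample images show, each image's label is the first three letters of its file name: cat for cats and dog for dogs.

Importing the libraries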

import matplotlib.pyplot as plt

import torch

import torch.nn as nn

from torch.optim import Adam

from torchvision import transforms

from torchvision import models

from torchvision.io import read_image

from torch.nn import functional as F

from torch.utils.data import Dataset, DataLoader

import numpy as np

from sklearn.model_selection import StratifiedShuffleSplit

import os

os.environ['TORCH_HOME'] = './download_model'  # change the default download location for PyTorch models

Loading the data

- Collect the paths of the files in the train directory

# Collect the image paths

train_path = './train/train/'

image_file_path = np.array([train_path + i for i in os.listdir(train_path)])

# Derive each image's label from its file name: 0 for cat, 1 for dog

labels = np.array([

    0 if name.split('/')[-1].startswith('cat') else 1

    for name in image_file_path

])

print(len(image_file_path),len(labels))

25000 25000
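The labeling rule above treats every file whose name does not start with cat as a dog. As a minimal sketch, assuming we would rather fail loudly on an unexpected file name, the helper below makes the rule explicit. The name label_from_path is hypothetical and is not part of the original notebook.

```python
import os


def label_from_path(path):
    """Return 0 for a cat image and 1 for a dog image, based on the file name."""
    name = os.path.basename(path)      # e.g. 'cat.12461.jpg'
    prefix = name.split('.')[0]        # e.g. 'cat'
    if prefix == 'cat':
        return 0
    if prefix == 'dog':
        return 1
    # Unlike the notebook's one-liner, refuse to guess for unknown names
    raise ValueError(f'unexpected file name: {name}')


paths = ['./train/train/cat.12461.jpg', './train/train/dog.3443.jpg']
print([label_from_path(p) for p in paths])  # [0, 1]
```

For the Kaggle train folder both versions should give the same labels; the stricter check only matters if stray files end up in the directory.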

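- Take a look at the form of the result (the output below was captured with the data stored under ../input/dogs-vs-cats/train/train/, so its paths differ from the train_path set above)

# Inspect the result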

image_file_path[:10], labels[:10]

(array(['../input/dogs-vs-cats/train/train/cat.12461.jpg',

'../input/dogs-vs-cats/train/train/dog.3443.jpg',

'../input/dogs-vs-cats/train/train/dog.7971.jpg',

'../input/dogs-vs-cats/train/train/dog.10728.jpg',

'../input/dogs-vs-cats/train/train/dog.1942.jpg',

'../input/dogs-vs-cats/train/train/dog.375.jpg',

'../input/dogs-vs-cats/train/train/cat.10176.jpg',

'../input/dogs-vs-cats/train/train/cat.8194.jpg',

'../input/dogs-vs-cats/train/train/dog.3259.jpg',

'../input/dogs-vs-cats/train/train/cat.3498.jpg'], dtype='<U47'),

array([0, 1, 1, 1, 1, 1, 0, 0, 1, 0]))

- Use StratifiedShuffleSplit for stratified random sampling, splitting the 25000 images into training, validation, and test sets at a ratio of 8:1:1, with the same proportion of cats and dogs in each set

# Stratified sampling

# First split the data 8:2 into the training set and a held-out remainder

train_val_sss = StratifiedShuffleSplit(n_splits=1, test_size=0.2, random_state=2021)

for train_index, val_index in train_val_sss.split(image_file_path, labels):

    train_path, val_path = image_file_path[train_index], image_file_path[val_index]

    train_labels, val_labels = labels[train_index], labels[val_index]

# Then split the remainder 1:1 into the validation set and the test set

val_test_sss = StratifiedShuffleSplit(n_splits=1, test_size=0.5, random_state=2021)

for val_index, test_index in val_test_sss.split(val_path, val_labels):

    val_path, test_path = val_path[val_index], val_path[test_index]

    val_labels, test_labels = val_labels[val_index], val_labels[test_index]

# The result is an 8:1:1 split into training, validation, and test sets,

# each with the same proportion of cats and dogs

- Check the result of the stratified split

# Cats are labeled 0 and dogs 1, so .sum() gives the number of dogs

print(len(train_path),len(train_labels),train_labels.sum())

print(len(val_path),len(val_labels),val_labels.sum())

print(len(test_path),len(test_labels),test_labels.sum())

20000 20000 10000

2500 2500 1250

2500 2500 1250
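To make clear what stratification means here, the following pure-Python sketch splits indices class by class so that each part keeps the same cat-to-dog ratio. It is an illustration only, not how StratifiedShuffleSplit is implemented; stratified_split and its arguments are hypothetical names, and the labels are synthetic (12500 of each class, matching the counts above).

```python
import random
from collections import defaultdict


def stratified_split(labels, fractions, seed=2021):
    """Split sample indices into len(fractions) parts, keeping class ratios in each part."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    parts = [[] for _ in fractions]
    for indices in by_class.values():
        rng.shuffle(indices)
        start = 0
        for k, frac in enumerate(fractions):
            if k == len(fractions) - 1:
                end = len(indices)  # the last part takes whatever is left
            else:
                end = start + round(frac * len(indices))
            parts[k].extend(indices[start:end])
            start = end
    return parts


labels = [0] * 12500 + [1] * 12500  # synthetic labels: 0 = cat, 1 = dog
train_idx, val_idx, test_idx = stratified_split(labels, [0.8, 0.1, 0.1])
for part in (train_idx, val_idx, test_idx):
    # size of the part, then number of dogs in it
    print(len(part), sum(labels[i] for i in part))
```

Each part ends up with exactly half dogs, which is the property the three printed counts above confirm for the real split.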

- Define the PyTorch dataset class

# Define how the Dataset loads its samples

class MyData(Dataset):

    def __init__(self, filepath, labels=None, transform=None):

        self.filepath = filepath

        self.labels = labels

        self.transform = transform

    def __getitem__(self, index):

        image = read_image(self.filepath[index])  # the loaded image has shape [channel, height, width]

        image = image.to(torch.float32) / 255.  # convert to float32 and divide by 255 to scale to [0, 1]

        if self.transform is not None:

            image = self.transform(image)

        if self.labels is not None:

            return image, self.labels[index]

        return image

    def __len__(self):

        return self.filepath.shape[0]

- Define a few settings

image_size = [224, 224]  # image size

batch_size = 64  # batch size

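- Define the image augmentation pipeline

transform = transforms.Compose([

    transforms.RandomHorizontalFlip(),  # random horizontal flip

    transforms.RandomRotation(30),  # random rotation of up to 30 degrees in either direction

    transforms.Resize([256, 256]),  # resize the image

    transforms.RandomCrop(image_size),  # randomly crop to the required image size

    transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])  # normalize with the ImageNet mean and std

])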

- Create the dataset objects and data loaders

ds1 = MyData(train_path, train_labels, transform)

ds2 = MyData(val_path, val_labels, transform)

ds3 = MyData(test_path, test_labels, transform)

train_ds = DataLoader(ds1, batch_size=batch_size, shuffle=True)

val_ds = DataLoader(ds2, batch_size=batch_size, shuffle=True)

test_ds = DataLoader(ds3, batch_size=batch_size, shuffle=True)

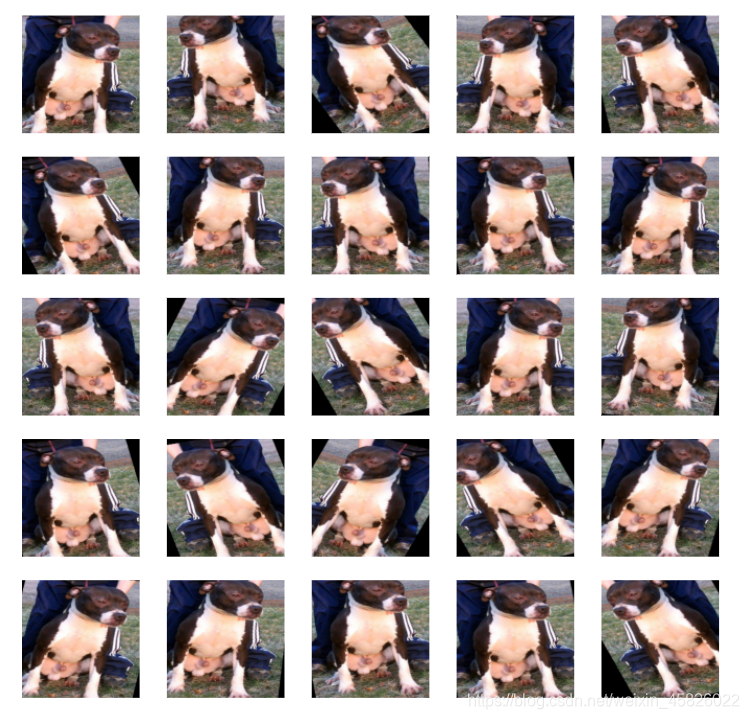

- Check the effect of the augmentation on a single image

# Preview the augmentation on one image

image = read_image(train_path[0])

image = image.to(torch.float32) / 255.  # read a single image and scale it to [0, 1]

image = image.unsqueeze(0)  # add a batch dimension

plt.figure(figsize=(12,12))

mean = np.array([0.485, 0.456, 0.406])  # normalization parameters used by the augmentation pipeline

std = np.array([0.229, 0.224, 0.225])

for i in range(25):

    plt.subplot(5,5,i+1)

    img = transform(image)

    img = img[0,:,:,:].data.numpy().transpose([1,2,0])  # reorder from [channel, height, width] to [height, width, channel]

    img = std * img + mean  # undo the normalization

    img = np.clip(img, 0, 1)  # clamp pixel values to the range 0 to 1

    plt.imshow(img)

    plt.axis('off')

plt.show()
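The line std * img + mean in the preview code is the inverse of what transforms.Normalize computes, which is (value - mean) / std per channel. A minimal pure-Python sketch of that round trip for a single RGB pixel follows; the helper names normalize and denormalize are hypothetical.

```python
MEAN = [0.485, 0.456, 0.406]
STD = [0.229, 0.224, 0.225]


def normalize(pixel):
    """Per-channel (value - mean) / std, the formula used by transforms.Normalize."""
    return [(v - m) / s for v, m, s in zip(pixel, MEAN, STD)]


def denormalize(pixel):
    """Per-channel value * std + mean, the inverse used before plotting."""
    return [v * s + m for v, m, s in zip(pixel, MEAN, STD)]


pixel = [0.2, 0.5, 0.9]                       # one RGB pixel scaled to [0, 1]
restored = denormalize(normalize(pixel))

# The round trip recovers the original pixel up to floating-point error
print(all(abs(a - b) < 1e-9 for a, b in zip(pixel, restored)))
```

The np.clip call in the plotting code is still useful after this step, because interpolation during rotation and resizing can push a few values slightly outside the range 0 to 1.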

- View one batch of training images

# View one batch from the training set

bx, by = next(iter(train_ds))  # fetch a single batch

plt.figure(figsize=(16,16))

for i in range(len(by)):

    plt.subplot(8,8,i+1)

    image = bx[i,:,:,:].data.numpy().transpose([1,2,0])

    image = std * image + mean  # undo the normalization

    image = np.clip(image, 0, 1)

    plt.imshow(image)

    plt.axis('off')

    plt.title('cat' if by[i].data.numpy()==0 else 'dog')

plt.show()

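Building the model

- Modify the pretrained ResNet50: replace the 1000-class output layer at the top with a 2-class one, and freeze the remaining parameters.

Because we used os earlier to redirect PyTorch's download directory to download_model, remember to create a download_model folder in the current directory. ResNet50 will then be downloaded into that folder instead of the default location on the C: drive.

model = models.resnet50(pretrained=True)  # load the pretrained ResNet50 (newer torchvision releases use the weights= argument instead)

in_features = model.fc.in_features  # input dimension of the top output layer

model.fc = nn.Linear(in_features, 2)  # replace the output layer with our own, with an output dimension of 2

model.add_module('softmax', nn.Softmax(dim=-1))  # registers a softmax module; ResNet's forward() does not call it, so the model still outputs raw logits

# Freeze the parameters of every layer except the top layer

for name, m in model.named_parameters():

    if name.split('.')[0] != 'fc':

        m.requires_grad_(False)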
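A quick way to check that the freeze worked is to count the parameters that still require gradients. The sketch below uses a tiny stand-in model so that it runs without downloading any weights: backbone plays the role of the ResNet50 feature layers and fc the new 2-class head. The names toy and count_trainable are hypothetical.

```python
import torch.nn as nn


def count_trainable(model):
    """Number of parameter values that will receive gradients."""
    return sum(p.numel() for p in model.parameters() if p.requires_grad)


# Tiny stand-in model (an assumption for illustration, not ResNet50)
toy = nn.Sequential()
toy.add_module('backbone', nn.Linear(8, 2048))
toy.add_module('fc', nn.Linear(2048, 2))

# Same freezing rule as in the notebook: everything except 'fc'
for name, p in toy.named_parameters():
    if name.split('.')[0] != 'fc':
        p.requires_grad_(False)
frozen_count = count_trainable(toy)      # only fc: 2048 * 2 weights + 2 biases

# Unfreezing, as done later for fine-tuning
for p in toy.parameters():
    p.requires_grad_(True)
unfrozen_count = count_trainable(toy)

print(frozen_count, unfrozen_count)  # 4098 22530
```

The head of the real model has the same shape (2048 inputs, 2 outputs), so calling count_trainable on the frozen ResNet50 should also give 4098.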

- Print the model

print(model)

ResNet(

(conv1): Conv2d(3, 64, kernel_size=(7, 7), stride=(2, 2), padding=(3, 3), bias=False)

(bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

(maxpool): MaxPool2d(kernel_size=3, stride=2, padding=1, dilation=1, ceil_mode=False)

(layer1): Sequential(

(0): Bottleneck(

(conv1): Conv2d(64, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(64, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

(downsample): Sequential(

(0): Conv2d(64, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

(1): Bottleneck(

(conv1): Conv2d(256, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(64, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

)

(2): Bottleneck(

(conv1): Conv2d(256, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(64, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

)

)

(layer2): Sequential(

(0): Bottleneck(

(conv1): Conv2d(256, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(128, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

(downsample): Sequential(

(0): Conv2d(256, 512, kernel_size=(1, 1), stride=(2, 2), bias=False)

(1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

(1): Bottleneck(

(conv1): Conv2d(512, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(128, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

)

(2): Bottleneck(

(conv1): Conv2d(512, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(128, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

)

(3): Bottleneck(

(conv1): Conv2d(512, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(128, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

)

)

(layer3): Sequential(

(0): Bottleneck(

(conv1): Conv2d(512, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

(downsample): Sequential(

(0): Conv2d(512, 1024, kernel_size=(1, 1), stride=(2, 2), bias=False)

(1): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

(1): Bottleneck(

(conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

)

(2): Bottleneck(

(conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

)

(3): Bottleneck(

(conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

)

(4): Bottleneck(

(conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

)

(5): Bottleneck(

(conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

)

)

(layer4): Sequential(

(0): Bottleneck(

(conv1): Conv2d(1024, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(512, 512, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(512, 2048, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(2048, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

(downsample): Sequential(

(0): Conv2d(1024, 2048, kernel_size=(1, 1), stride=(2, 2), bias=False)

(1): BatchNorm2d(2048, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

(1): Bottleneck(

(conv1): Conv2d(2048, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(512, 2048, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(2048, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

)

(2): Bottleneck(

(conv1): Conv2d(2048, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(512, 2048, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(2048, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

)

)

(avgpool): AdaptiveAvgPool2d(output_size=(1, 1))

(fc): Linear(in_features=2048, out_features=2, bias=True)

(softmax): Softmax(dim=-1)

)

- Move the model to the GPU to speed up training

# Use the GPU if one is available, otherwise the CPU

device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

model = model.to(device)

- Define the optimizer and the loss function

optimizer = Adam(model.parameters(), lr=0.0001)

loss_fun = F.cross_entropy
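F.cross_entropy expects raw logits and applies log-softmax internally. That fits this model, because the softmax module registered with add_module is never called by ResNet's forward(), so the model returns the output of fc directly. The pure-Python sketch below shows why this matters: if probabilities were passed in instead, softmax would effectively be applied twice and the two-class loss could never fall below log(1 + e^-1), roughly 0.313. The helper names are hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(v - m) for v in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(scores, target):
    """Cross-entropy that treats its input as raw logits, like F.cross_entropy."""
    return -math.log(softmax(scores)[target])


logits = [4.0, -2.0]                                   # a confident, correct prediction for class 0
loss_from_logits = cross_entropy(logits, 0)            # small loss, as expected
loss_from_probs = cross_entropy(softmax(logits), 0)    # softmax applied twice

floor = math.log(1 + math.exp(-1))                     # lower bound when probabilities are fed in
print(loss_from_logits < floor <= loss_from_probs)
```

The training log in this post reaches losses well below 0.313, which is consistent with the model outputting logits.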

Training the model

- Define the training function

def train(model, epoch, train_ds):

    model.train()  # switch to training mode

    total_num = len(train_ds.dataset)  # total number of training samples

    train_loss = 0  # accumulated training loss

    correct_num = 0  # number of correct predictions

    for image, label in train_ds:

        image = image.to(device)  # move the images and labels to the GPU (if available)

        label = label.to(device)

        label = label.to(torch.long)  # convert the labels from int32 to long, otherwise computing the loss raises an error

        output = model(image)  # forward pass

        loss = loss_fun(output, label)  # cross-entropy loss

        train_loss += loss.item() * label.size(0)  # accumulate the summed loss over the batch

        optimizer.zero_grad()  # clear the gradients from the previous step

        loss.backward()  # backpropagate

        optimizer.step()  # update the parameters

        predict = torch.argmax(output, dim=-1)  # predicted class

        correct_num += label.eq(predict).sum().item()  # count the correct predictions

    train_loss = train_loss / total_num  # mean training loss for the epoch

    train_acc = correct_num / total_num  # training accuracy for the epoch

    # Print a summary

    print('epoch: {} --> train_loss: {:.6f} - train_acc: {:.6f} - '.format(

        epoch, train_loss, train_acc), end='')
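The training function multiplies each batch loss by the batch size before summing, then divides by the number of samples. This matters because the last batch is smaller: 20000 images with a batch size of 64 give 312 full batches and one batch of 32. A minimal sketch of the difference, with hypothetical helper names and made-up loss values:

```python
def epoch_mean(batch_means, batch_sizes):
    """Combine per-batch mean losses into the mean over all samples."""
    total = sum(m * n for m, n in zip(batch_means, batch_sizes))
    return total / sum(batch_sizes)


# Batch layout for the training set in this post
full_batches, last_batch = divmod(20000, 64)
print(full_batches, last_batch)  # 312 32

# Made-up example: a batch of 3 samples with mean loss 1.0 and a batch of 1 with loss 4.0
weighted = epoch_mean([1.0, 4.0], [3, 1])   # (3 * 1.0 + 1 * 4.0) / 4
naive = (1.0 + 4.0) / 2                     # averaging the batch means directly
print(weighted, naive)  # 1.75 2.5
```

Averaging the batch means directly would give the small last batch the same weight as a full one, while the weighted version equals the true per-sample mean.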

- Define the evaluation function

def evaluate(model, eval_ds, mode='val'):

    model.eval()  # switch to evaluation mode

    total_num = len(eval_ds.dataset)

    eval_loss = 0

    correct_num = 0

    with torch.no_grad():  # gradients are not needed during evaluation

        for image, label in eval_ds:

            image = image.to(device)

            label = label.to(device)

            label = label.to(torch.long)

            output = model(image)

            loss = loss_fun(output, label)

            eval_loss += loss.item() * label.size(0)

            predict = torch.argmax(output, dim=-1)

            correct_num += label.eq(predict).sum().item()

    eval_loss = eval_loss / total_num

    eval_acc = correct_num / total_num

    print('{}_loss: {:.6f} - {}_acc: {:.6f}'.format(

        mode, eval_loss, mode, eval_acc))

- Start training, for 20 epochs

for epoch in range(20):

    train(model, epoch, train_ds)

    evaluate(model, val_ds)

epoch: 0 --> train_loss: 0.263510 - train_acc: 0.933600 - val_loss: 0.127010 - val_acc: 0.972800

epoch: 1 --> train_loss: 0.121579 - train_acc: 0.967150 - val_loss: 0.088527 - val_acc: 0.975200

epoch: 2 --> train_loss: 0.097462 - train_acc: 0.969750 - val_loss: 0.074590 - val_acc: 0.977600

epoch: 3 --> train_loss: 0.086457 - train_acc: 0.970450 - val_loss: 0.067147 - val_acc: 0.977200

epoch: 4 --> train_loss: 0.078661 - train_acc: 0.972100 - val_loss: 0.062467 - val_acc: 0.978800

epoch: 5 --> train_loss: 0.076047 - train_acc: 0.972200 - val_loss: 0.056072 - val_acc: 0.980800

epoch: 6 --> train_loss: 0.071828 - train_acc: 0.973850 - val_loss: 0.054756 - val_acc: 0.977600

epoch: 7 --> train_loss: 0.068942 - train_acc: 0.976200 - val_loss: 0.054501 - val_acc: 0.981200

epoch: 8 --> train_loss: 0.068446 - train_acc: 0.974250 - val_loss: 0.048335 - val_acc: 0.984000

epoch: 9 --> train_loss: 0.068512 - train_acc: 0.973300 - val_loss: 0.049108 - val_acc: 0.979600

epoch: 10 --> train_loss: 0.063924 - train_acc: 0.977150 - val_loss: 0.052838 - val_acc: 0.980800

epoch: 11 --> train_loss: 0.060832 - train_acc: 0.977450 - val_loss: 0.046959 - val_acc: 0.980400

epoch: 12 --> train_loss: 0.064698 - train_acc: 0.975300 - val_loss: 0.046457 - val_acc: 0.979600

epoch: 13 --> train_loss: 0.060457 - train_acc: 0.976800 - val_loss: 0.044242 - val_acc: 0.981600

epoch: 14 --> train_loss: 0.061942 - train_acc: 0.976750 - val_loss: 0.045544 - val_acc: 0.983600

epoch: 15 --> train_loss: 0.060018 - train_acc: 0.976900 - val_loss: 0.044339 - val_acc: 0.984000

epoch: 16 --> train_loss: 0.057508 - train_acc: 0.977150 - val_loss: 0.045551 - val_acc: 0.981600

epoch: 17 --> train_loss: 0.056687 - train_acc: 0.978300 - val_loss: 0.045825 - val_acc: 0.980000

epoch: 18 --> train_loss: 0.058531 - train_acc: 0.977450 - val_loss: 0.047936 - val_acc: 0.979200

epoch: 19 --> train_loss: 0.057430 - train_acc: 0.978200 - val_loss: 0.046414 - val_acc: 0.982000

- Evaluate the model on the test set

# Evaluate the model

evaluate(model, test_ds, mode='test')

test_loss: 0.041599 - test_acc: 0.982400

- Unfreeze the frozen layers, lower the learning rate, and fine-tune

# Unfreeze the feature extraction layers

for name, m in model.named_parameters():

    if name.split('.')[0] != 'fc':

        m.requires_grad_(True)

# Use an optimizer with a smaller learning rate

optimizer = Adam(model.parameters(), lr=0.00001)

- Fine-tune for 3 epochs

for epoch in range(3):

    train(model, epoch, train_ds)

    evaluate(model, val_ds)

epoch: 0 --> train_loss: 0.042424 - train_acc: 0.983500 - val_loss: 0.021933 - val_acc: 0.991600

epoch: 1 --> train_loss: 0.024783 - train_acc: 0.991050 - val_loss: 0.016949 - val_acc: 0.993200

epoch: 2 --> train_loss: 0.018476 - train_acc: 0.993450 - val_loss: 0.021186 - val_acc: 0.992400

- Save the model

torch.save(model,'model.pkl')
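Saving the whole model object, as above, pickles the class along with the weights. An alternative that is often recommended is to save only the state_dict and rebuild the architecture before loading. The sketch below shows that pattern on a tiny stand-in network so that it runs quickly; build_model, the file name model_state.pt, and the temporary directory are assumptions for illustration, not part of the original notebook.

```python
import os
import tempfile

import torch
import torch.nn as nn


def build_model():
    # Tiny stand-in for the fine-tuned ResNet50 (hypothetical, for illustration only)
    return nn.Sequential(nn.Linear(4, 2))


trained = build_model()
path = os.path.join(tempfile.mkdtemp(), 'model_state.pt')
torch.save(trained.state_dict(), path)        # save the weights only

restored = build_model()                      # rebuild the same architecture
restored.load_state_dict(torch.load(path))    # then load the saved weights
restored.eval()

x = torch.rand(3, 4)
with torch.no_grad():
    same = torch.equal(trained(x), restored(x))
print(same)  # True
```

For the model in this post, the rebuild step would repeat the resnet50 construction and the replacement of fc before calling load_state_dict.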