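This is a simple example of training a neural network to recognize handwritten digits.

My environment is Windows, conda, and Jupyter. If you do not have Jupyter installed, you can download and install it, or use another tool instead.

First, install the tensorflow package. pip could not download it for me, so you can download tensorflow-1.4.0-cp36-cp36m-win_amd64.whl from the official site first. If that does not work either, download it from my network drive:

Link: https://pan.baidu.com/s/1N4dGCSta6mI01-c4V-1D1Q?

Extraction code: xy90

Then go to the directory that contains the file and install tensorflow by running this in a terminal:

pip install tensorflow-1.4.0-cp36-cp36m-win_amd64.whl

After that, use pip to install pandas, numpy, matplotlib, and the other packages.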

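import numpy as np
import pandas as pd
%matplotlib inline
import matplotlib.pyplot as plt
import matplotlib.cm as cm
import tensorflow as tf

LEARNING_RATE = 1e-4        # learning rate
TRAINING_ITERATIONS = 2500  # number of training iterations
DROPOUT = 0.5               # dropout keep probability: about half of the neurons are dropped on each training batch
BATCH_SIZE = 50             # batch size
VALIDATRION_SIZE = 2000     # number of examples held out for validation
IMAGE_TO_DISPLAY = 10       # index of the sample image to display

Put the data file in the current directory, where you can check its size and format.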

If you do not have the mnist_train.csv file, you can download it from my uploads:

https://download.csdn.net/download/qq_45758854/20229559?spm=1001.2014.3001.5503

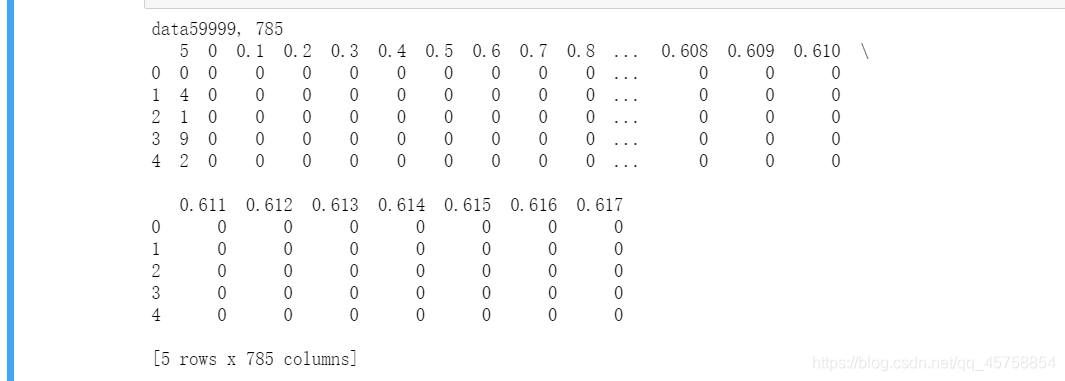

# Read the training data
data = pd.read_csv('mnist_train.csv')
print('data({0[0]}, {0[1]})'.format(data.shape))
print(data.head())

After running this in Jupyter you can see this result.

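# Column 0 holds the label; the remaining columns are the pixel values
images = data.iloc[:, 1:].values
# np.float was removed from recent NumPy releases; np.float64 works on old and new versions
images = images.astype(np.float64)
# Scale pixel values from 0-255 into the range 0-1
images = np.multiply(images, 1.0/255.0)
# print(images)
# print("images({0[0]}, {0[1]})".format(images.shape))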
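To make the scaling step concrete, here is a minimal sketch on a tiny made-up array. The values in `raw_pixels` are hypothetical and only stand in for a few pixels from one row of the CSV file.

```python
import numpy as np

# Hypothetical raw pixel values (0-255), standing in for part of one CSV row.
raw_pixels = np.array([0, 64, 128, 255], dtype=np.uint8)

# Convert to float first, then scale into the range [0, 1].
scaled = raw_pixels.astype(np.float64) / 255.0

print(scaled.min(), scaled.max())
```

Converting to float before dividing keeps the fractional part, which integer pixel types cannot hold.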

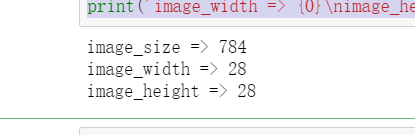

image_size = images.shape[1]
print('image_size => {0}'.format(image_size))
# The images are square, so the side length is the square root of the pixel count
image_width = image_height = np.ceil(np.sqrt(image_size)).astype(np.uint8)
print('image_width => {0}\nimage_height => {1}'.format(image_width, image_height))


def display(img):
    # Reshape the flat pixel row into a 28*28 image
    one_image = img.reshape(image_width, image_height)
    plt.axis('off')
    plt.imshow(one_image, cmap=cm.binary)

display(images[IMAGE_TO_DISPLAY])
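The reshape inside `display` can be checked without plotting anything. The sketch below uses a made-up flat vector of 784 values (`flat` is a hypothetical stand-in for one image row) and recovers the side length the same way as above.

```python
import numpy as np

# Hypothetical flattened image: 784 values, like one row of pixel columns.
flat = np.arange(784, dtype=np.float64)

# Side length of the square image.
side = int(np.sqrt(flat.size))
assert side * side == flat.size, "pixel count is not a perfect square"

# Row-major reshape: the first 28 values become the first image row.
square = flat.reshape(side, side)
print(square.shape)
```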

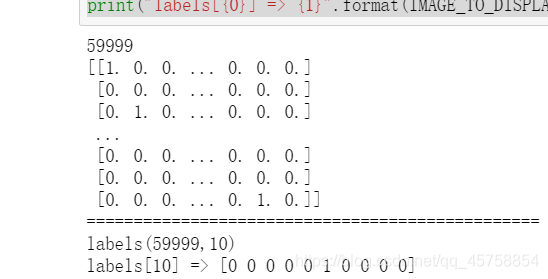

# labels_flat = data[[0]].values.ravel()
labels_flat = data.iloc[:,0].values
print('labels_flat({0})'.format(len(labels_flat)))
print('labels_flat[{0}] => {1}'.format(IMAGE_TO_DISPLAY, labels_flat[IMAGE_TO_DISPLAY]))
labels_count = np.unique(labels_flat).shape[0]
print('labels_count => {0}'.format(labels_count))

# Convert class labels to one-hot vectors: 1 at the correct class, 0 everywhere else
def dense_to_one_hot(labels_dense, num_classes):
    num_labels = labels_dense.shape[0]
    print(num_labels)
    # Offset of the start of each row in the flattened one-hot matrix
    index_offset = np.arange(num_labels) * num_classes
    labels_one_hot = np.zeros((num_labels, num_classes))
    labels_one_hot.flat[index_offset + labels_dense.ravel()] = 1
    print(labels_one_hot)
    return labels_one_hot

labels = dense_to_one_hot(labels_flat, labels_count)
labels = labels.astype(np.uint8)
print("================================================")
print("labels({0[0]},{0[1]})".format(labels.shape))
print("labels[{0}] => {1}".format(IMAGE_TO_DISPLAY, labels[IMAGE_TO_DISPLAY]))
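For comparison, the same one-hot encoding can be written by indexing an identity matrix. This is only an alternative sketch, not the method used in this post; `one_hot_eye` and `sample_labels` are hypothetical names.

```python
import numpy as np

def one_hot_eye(labels_dense, num_classes):
    # Row k of the identity matrix is the one-hot vector for class k,
    # so indexing with the label array picks one row per label.
    return np.eye(num_classes, dtype=np.uint8)[labels_dense]

sample_labels = np.array([3, 0, 9])
encoded = one_hot_eye(sample_labels, 10)
print(encoded)
```

Both versions give one row per label with a single 1 in the column of the correct class.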

# Split the data into a validation set and a training set
validation_images = images[:VALIDATRION_SIZE]
validation_labels = labels[:VALIDATRION_SIZE]
train_images = images[VALIDATRION_SIZE:]
train_labels = labels[VALIDATRION_SIZE:]
print("train_images({0[0]}, {0[1]})".format(train_images.shape))
print("validation_images({0[0]}, {0[1]})".format(validation_images.shape))
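The split above takes the first rows of the file as they come. If the rows in a file happened to be ordered, one option would be to shuffle before splitting. The sketch below shows that idea on toy data; `shuffled_split`, the `seed` parameter, and the toy arrays are all hypothetical and not part of the original code.

```python
import numpy as np

def shuffled_split(images, labels, validation_size, seed=0):
    # Apply one shared permutation so images and labels stay aligned.
    rng = np.random.RandomState(seed)
    perm = rng.permutation(images.shape[0])
    images, labels = images[perm], labels[perm]
    return (images[validation_size:], labels[validation_size:],
            images[:validation_size], labels[:validation_size])

# Toy data: row i is [2*i, 2*i + 1] and its label is i.
toy_images = np.arange(20, dtype=np.float64).reshape(10, 2)
toy_labels = np.arange(10)
tr_x, tr_y, va_x, va_y = shuffled_split(toy_images, toy_labels, validation_size=3)
print(tr_x.shape, va_x.shape)
```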

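# Initialize weights and biases
def weight_variable(shape):
    # Small random values drawn from a truncated normal distribution
    initial = tf.truncated_normal(shape, stddev = 0.1)
    return tf.Variable(initial)

def bias_variable(shape):
    # Slightly positive bias so that ReLU units start out active
    initial = tf.constant(0.1, shape=shape)
    return tf.Variable(initial)

# Convolution with stride 1 and 'SAME' padding (output keeps the input width and height)
def conv2d(x, W):
    return tf.nn.conv2d(x, W, strides=[1, 1, 1, 1], padding='SAME')

# 2x2 max pooling with stride 2 (halves the width and height)
def max_pool_2x2(x):
    return tf.nn.max_pool(x, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding='SAME')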
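To see what 2x2 max pooling with stride 2 does to a feature map, here is a small NumPy sketch on a single 4x4 array. It is an illustration only, not how TensorFlow implements it; `max_pool_2x2_np` is a hypothetical helper, and it assumes even height and width, so no padding is needed.

```python
import numpy as np

def max_pool_2x2_np(feature_map):
    # Split the map into non-overlapping 2x2 blocks and keep the max of each.
    h, w = feature_map.shape
    assert h % 2 == 0 and w % 2 == 0, "sketch assumes even height and width"
    blocks = feature_map.reshape(h // 2, 2, w // 2, 2)
    return blocks.max(axis=(1, 3))

fm = np.array([[1, 2, 5, 6],
               [3, 4, 7, 8],
               [9, 10, 13, 14],
               [11, 12, 15, 16]])
pooled = max_pool_2x2_np(fm)
print(pooled)
```

Each output value is the largest value of one 2x2 block, so the width and height are both halved.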

# Input images and target labels
x = tf.placeholder(tf.float32, shape=[None, image_size])
y_ = tf.placeholder(tf.float32, shape=[None, labels_count])

# First convolutional layer: 5x5 filters, 1 input channel, 32 output channels
w_conv1 = weight_variable([5, 5, 1, 32])
b_conv1 = bias_variable([32])
# Reshape the flat input into [batch, width, height, channels]
image = tf.reshape(x, [-1, image_width, image_height, 1])
print(image.get_shape())
h_conv1 = tf.nn.relu(conv2d(image, w_conv1) + b_conv1)
h_pool1 = max_pool_2x2(h_conv1)
print(h_pool1.get_shape())

# Second convolutional layer: 5x5 filters, 32 input channels, 64 output channels
w_conv2 = weight_variable([5, 5, 32, 64])
b_conv2 = bias_variable([64])
h_conv2 = tf.nn.relu(conv2d(h_pool1, w_conv2) + b_conv2)
h_pool2 = max_pool_2x2(h_conv2)
print(h_pool2.get_shape())

# Fully connected layer: after two poolings the feature map is 7x7 with 64 channels
W_fc1 = weight_variable([7 * 7 * 64, 1024])
b_fc1 = bias_variable([1024])
h_pool2_flat = tf.reshape(h_pool2, [-1, 7*7*64])
# print(h_pool2_flat.get_shape())
h_fc1 = tf.nn.relu(tf.matmul(h_pool2_flat, W_fc1) + b_fc1)
print(h_fc1.get_shape())
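The number 7 * 7 * 64 in the fully connected layer follows from the layer shapes. With 'SAME' padding, the output size along one dimension is the input size divided by the stride, rounded up. A small sketch of that arithmetic (`same_padding_output` is a hypothetical helper name):

```python
import math

def same_padding_output(size, stride):
    # With 'SAME' padding the output size is ceil(input size / stride).
    return math.ceil(size / stride)

size = 28
size = same_padding_output(size, 1)  # first convolution, stride 1: stays 28
size = same_padding_output(size, 2)  # first 2x2 pooling, stride 2: 14
size = same_padding_output(size, 1)  # second convolution, stride 1: stays 14
size = same_padding_output(size, 2)  # second 2x2 pooling, stride 2: 7

# 64 channels come out of the second convolutional layer.
flat_features = size * size * 64
print(size, flat_features)
```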

# Dropout to reduce overfitting: randomly drop some neurons during training
keep_prob = tf.placeholder('float')
h_fc1_drop = tf.nn.dropout(h_fc1, keep_prob)

# Output layer: one softmax probability per digit class
W_fc2 = weight_variable([1024, labels_count])
b_fc2 = bias_variable([labels_count])
y = tf.nn.softmax(tf.matmul(h_fc1_drop, W_fc2) + b_fc2)
print(y.get_shape())
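As a rough picture of what dropout with a keep probability does, here is a NumPy sketch of "inverted" dropout: each activation is kept with probability `keep_prob`, and the kept ones are scaled by `1 / keep_prob` so that the expected value stays the same. `dropout_np` is a hypothetical helper written for illustration, not TensorFlow's implementation.

```python
import numpy as np

def dropout_np(activations, keep_prob, rng):
    # Keep each unit with probability keep_prob, zero out the rest.
    mask = rng.uniform(size=activations.shape) < keep_prob
    # Scale the kept units so the expected activation is unchanged.
    return activations * mask / keep_prob

rng = np.random.RandomState(0)
acts = np.ones((1000, 100))
dropped = dropout_np(acts, 0.5, rng)
unchanged = dropout_np(acts, 1.0, rng)
print(dropped.mean())
```

With `keep_prob` set to 1.0 nothing is dropped, which is why the evaluation calls in this post feed `keep_prob: 1.0`.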

# Loss: cross-entropy between the one-hot labels and the predicted probabilities
cross_entropy = -tf.reduce_sum(y_*tf.log(y))
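One thing to keep in mind with this form of the loss: if a predicted probability reaches exactly 0, `log` returns negative infinity and the loss can become inf or NaN. A common way around it is to compute the log-probabilities directly from the logits. The NumPy sketch below shows the idea; `softmax_cross_entropy_np` and the sample arrays are hypothetical, and this is not the code used in this post.

```python
import numpy as np

def softmax_cross_entropy_np(logits, one_hot_labels):
    # Subtract the row maximum so np.exp never overflows.
    shifted = logits - logits.max(axis=1, keepdims=True)
    # log softmax = shifted - log(sum(exp(shifted)))
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    # Same summed cross-entropy as -sum(y_ * log(y)).
    return -(one_hot_labels * log_probs).sum()

# The first row has an extreme logit that would break a naive softmax + log.
logits = np.array([[1000.0, 0.0], [0.0, 0.0]])
targets = np.array([[0.0, 1.0], [1.0, 0.0]])
loss = softmax_cross_entropy_np(logits, targets)
print(loss)
```

TensorFlow 1.x also provides `tf.nn.softmax_cross_entropy_with_logits`, which takes the logits before the softmax and handles this internally.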

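# Optimizer
train_step = tf.train.AdamOptimizer(LEARNING_RATE).minimize(cross_entropy)

# Evaluation: a prediction is correct when the most probable class matches the label
correct_prediction = tf.equal(tf.argmax(y, 1), tf.argmax(y_, 1))
accuracy = tf.reduce_mean(tf.cast(correct_prediction, 'float'))

# Prediction: index of the most probable class
predict = tf.argmax(y, 1)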
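The accuracy computation is easy to follow on a tiny example. Here is the same logic in NumPy, using made-up probabilities and labels (`probs` and `targets` are hypothetical values):

```python
import numpy as np

# Hypothetical softmax outputs for three samples and two classes.
probs = np.array([[0.1, 0.9], [0.8, 0.2], [0.3, 0.7]])
# One-hot labels: every sample belongs to class 1.
targets = np.array([[0, 1], [0, 1], [0, 1]])

# Compare the most probable class with the labeled class, sample by sample.
correct = np.argmax(probs, axis=1) == np.argmax(targets, axis=1)
# Cast booleans to floats and average them to get the accuracy.
acc = correct.astype(np.float64).mean()
print(correct, acc)
```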

epochs_completed = 0
index_in_epoch = 0
num_examples = train_images.shape[0]

# Serve the training data batch by batch
def next_batch(batch_size):
    global train_images
    global train_labels
    global index_in_epoch
    global epochs_completed

    start = index_in_epoch
    index_in_epoch += batch_size

    # Once all the training data has been used, it is randomly reordered
    if index_in_epoch > num_examples:
        epochs_completed += 1
        # Shuffle the data
        perm = np.arange(num_examples)
        np.random.shuffle(perm)
        train_images = train_images[perm]
        train_labels = train_labels[perm]
        # Start the next epoch
        start = 0
        index_in_epoch = batch_size
        assert batch_size <= num_examples
    end = index_in_epoch
    return train_images[start:end], train_labels[start:end]
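The same batching logic can also be written without global variables. The class below is a sketch of that idea under my own naming (`BatchIterator`, the `seed` parameter, and the toy arrays are hypothetical); it follows the same rule of reshuffling and restarting when a batch would run past the end of the data.

```python
import numpy as np

class BatchIterator:
    def __init__(self, images, labels, seed=0):
        assert images.shape[0] == labels.shape[0]
        self.images = images
        self.labels = labels
        self.num_examples = images.shape[0]
        self.index_in_epoch = 0
        self.epochs_completed = 0
        self.rng = np.random.RandomState(seed)

    def next_batch(self, batch_size):
        assert batch_size <= self.num_examples
        start = self.index_in_epoch
        self.index_in_epoch += batch_size
        if self.index_in_epoch > self.num_examples:
            # The batch would overrun the data: count the epoch, reshuffle, restart.
            self.epochs_completed += 1
            perm = self.rng.permutation(self.num_examples)
            self.images = self.images[perm]
            self.labels = self.labels[perm]
            start = 0
            self.index_in_epoch = batch_size
        end = self.index_in_epoch
        return self.images[start:end], self.labels[start:end]

# Toy data: the single feature of row i equals its label i.
toy_x = np.arange(10).reshape(10, 1)
toy_y = np.arange(10)
batches = BatchIterator(toy_x, toy_y)
first_x, first_y = batches.next_batch(4)   # rows 0..3
batches.next_batch(4)                      # rows 4..7
third_x, third_y = batches.next_batch(4)   # would overrun, so reshuffle and restart
print(batches.epochs_completed)
```

Note that, as in `next_batch` above, the leftover rows at the end of an epoch (rows 8 and 9 here) are skipped when the batch size does not divide the number of examples.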

init = tf.global_variables_initializer()
sess = tf.InteractiveSession()
sess.run(init)

# Values recorded for visualization
train_accuracies = []
validation_accuracies = []
x_range = []
display_step = 1

# Iterate many times, fetching data with next_batch

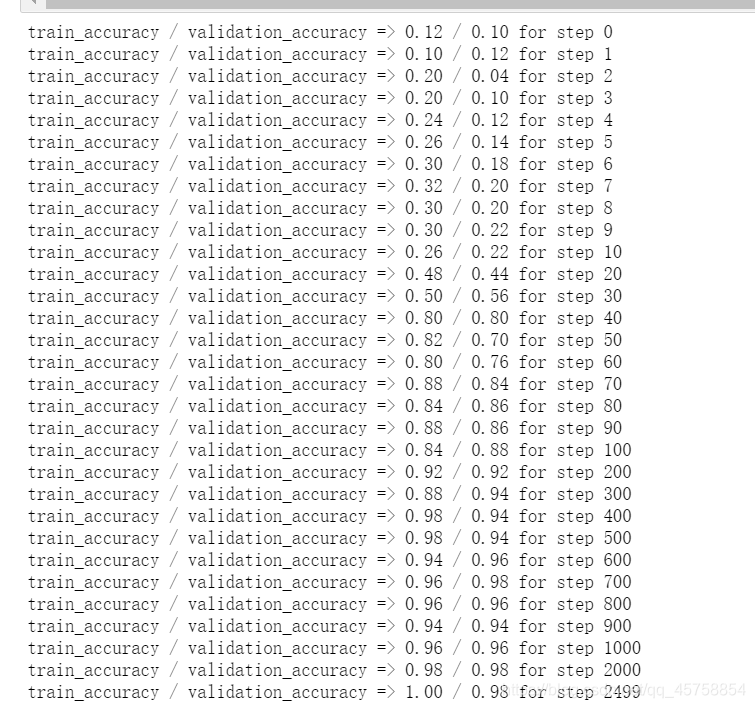

for i in range(TRAINING_ITERATIONS):

# 获得新的批次

batch_xs, batch_ys = next_batch(BATCH_SIZE)

# 判断每一步的进度

if i%display_step == 0 or (i+1) == TRAINING_ITERATIONS:

# 传入 x,y_

train_accuracy = accuracy.eval(feed_dict={x: batch_xs, y_: batch_ys, keep_prob: 1.0})

if (VALIDATRION_SIZE):

validation_accuracy = accuracy.eval(feed_dict={x: validation_images[0: BATCH_SIZE],

y_: validation_labels[0: BATCH_SIZE],

keep_prob: 1.0})

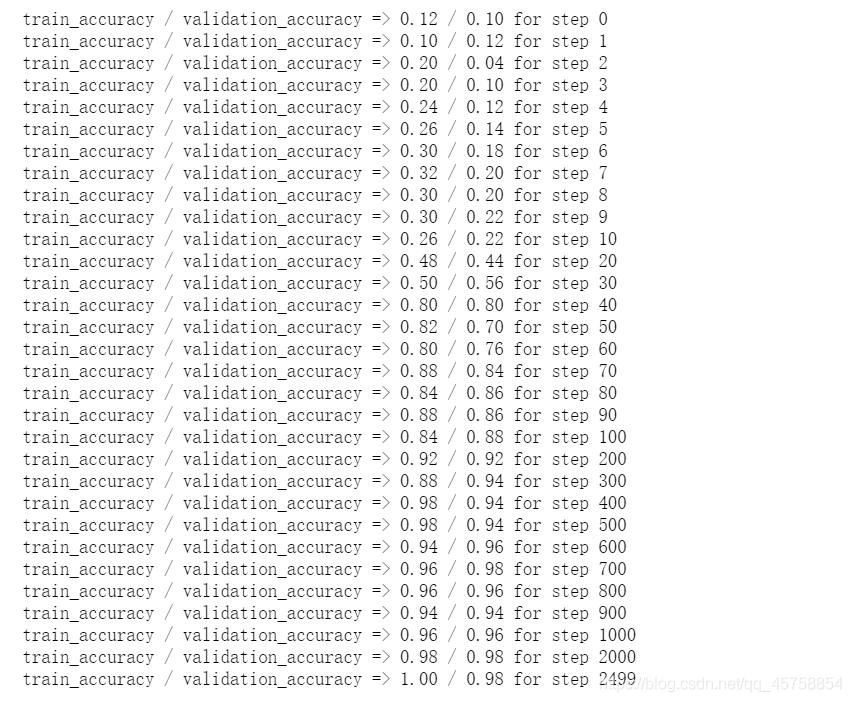

print('train_accuracy / validation_accuracy => %.2f / %.2f for step %d'%(train_accuracy,

validation_accuracy, i))

validation_accuracies.append(validation_accuracy)

else:

print('training_accuracy => %.4f for step %d'%(train_accuracy, i))

train_accuracies.append(train_accuracy)

x_range.append(i)

# 增加显示步骤

if i%(display_step*10) ==0 and i:

display_step *= 10

# 批量训练

sess.run(train_step, feed_dict={x: batch_xs, y_: batch_ys, keep_prob: DROPOUT})
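The `display_step` logic decides on which steps progress is printed: every step at first, then every 10th, every 100th, and so on. The sketch below replays only that schedule in plain Python, without any training, so you can see which steps get reported. `logged_steps` is a hypothetical helper.

```python
def logged_steps(total_iterations):
    # Replays only the reporting schedule of the training loop.
    steps = []
    display_step = 1
    for i in range(total_iterations):
        if i % display_step == 0 or (i + 1) == total_iterations:
            steps.append(i)
            # After 10 reports at the current interval, report 10x less often.
            if i % (display_step * 10) == 0 and i:
                display_step *= 10
    return steps

steps = logged_steps(2500)
print(steps)
```

For 2500 iterations this gives steps 0 to 10, then 20 to 100 in tens, then 200 to 1000 in hundreds, then 2000, and finally the last step 2499.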

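Complete code:

import numpy as np
import pandas as pd
%matplotlib inline
import matplotlib.pyplot as plt
import matplotlib.cm as cm
import tensorflow as tf

LEARNING_RATE = 1e-4        # learning rate
TRAINING_ITERATIONS = 2500  # number of training iterations
DROPOUT = 0.5               # dropout keep probability: about half of the neurons are dropped on each training batch
BATCH_SIZE = 50             # batch size
VALIDATRION_SIZE = 2000     # number of examples held out for validation
IMAGE_TO_DISPLAY = 10       # index of the sample image to display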

# Read the training data
data = pd.read_csv('mnist_train.csv')
images = data.iloc[:, 1:].values
# np.float was removed from recent NumPy releases; np.float64 works on old and new versions
images = images.astype(np.float64)
# Scale pixel values from 0-255 into the range 0-1
images = np.multiply(images, 1.0/255.0)
image_size = images.shape[1]
# print('image_size => {0}'.format(image_size))
# The images are square, so the side length is the square root of the pixel count
image_width = image_height = np.ceil(np.sqrt(image_size)).astype(np.uint8)
# print('image_width => {0}\nimage_height => {1}'.format(image_width, image_height))

def display(img):
    # Reshape the flat pixel row into a 28*28 image
    one_image = img.reshape(image_width, image_height)
    plt.axis('off')
    plt.imshow(one_image, cmap=cm.binary)

display(images[IMAGE_TO_DISPLAY])

labels_flat = data.iloc[:,0].values
# print('labels_flat({0})'.format(len(labels_flat)))
# print('labels_flat[{0}] => {1}'.format(IMAGE_TO_DISPLAY, labels_flat[IMAGE_TO_DISPLAY]))
labels_count = np.unique(labels_flat).shape[0]

# Convert class labels to one-hot vectors: 1 at the correct class, 0 everywhere else
def dense_to_one_hot(labels_dense, num_classes):
    num_labels = labels_dense.shape[0]
    index_offset = np.arange(num_labels) * num_classes
    labels_one_hot = np.zeros((num_labels, num_classes))
    labels_one_hot.flat[index_offset + labels_dense.ravel()] = 1
    # print(labels_one_hot)
    return labels_one_hot

labels = dense_to_one_hot(labels_flat, labels_count)
labels = labels.astype(np.uint8)

# Split the data into a validation set and a training set
validation_images = images[:VALIDATRION_SIZE]
validation_labels = labels[:VALIDATRION_SIZE]
train_images = images[VALIDATRION_SIZE:]
train_labels = labels[VALIDATRION_SIZE:]
# print("train_images({0[0]}, {0[1]})".format(train_images.shape))
# print("validation_images({0[0]}, {0[1]})".format(validation_images.shape))

# Initialize weights and biases
def weight_variable(shape):
    initial = tf.truncated_normal(shape, stddev = 0.1)
    return tf.Variable(initial)

def bias_variable(shape):
    initial = tf.constant(0.1, shape=shape)
    return tf.Variable(initial)

# Convolution with stride 1 and 'SAME' padding
def conv2d(x, W):
    return tf.nn.conv2d(x, W, strides=[1, 1, 1, 1], padding='SAME')

# 2x2 max pooling with stride 2
def max_pool_2x2(x):
    return tf.nn.max_pool(x, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding='SAME')

# Input images and target labels
x = tf.placeholder(tf.float32, shape=[None, image_size])
y_ = tf.placeholder(tf.float32, shape=[None, labels_count])

# First convolutional layer: 5x5 filters, 1 input channel, 32 output channels
w_conv1 = weight_variable([5, 5, 1, 32])
b_conv1 = bias_variable([32])
image = tf.reshape(x, [-1, image_width, image_height, 1])
# print(image.get_shape())
h_conv1 = tf.nn.relu(conv2d(image, w_conv1) + b_conv1)
h_pool1 = max_pool_2x2(h_conv1)
# print(h_pool1.get_shape())

# Second convolutional layer: 5x5 filters, 32 input channels, 64 output channels
w_conv2 = weight_variable([5, 5, 32, 64])
b_conv2 = bias_variable([64])
h_conv2 = tf.nn.relu(conv2d(h_pool1, w_conv2) + b_conv2)
h_pool2 = max_pool_2x2(h_conv2)
# print(h_pool2.get_shape())

# Fully connected layer
W_fc1 = weight_variable([7 * 7 * 64, 1024])
b_fc1 = bias_variable([1024])
h_pool2_flat = tf.reshape(h_pool2, [-1, 7*7*64])
# print(h_pool2_flat.get_shape())
h_fc1 = tf.nn.relu(tf.matmul(h_pool2_flat, W_fc1) + b_fc1)
# print(h_fc1.get_shape())

# Dropout to reduce overfitting: randomly drop some neurons during training
keep_prob = tf.placeholder('float')
h_fc1_drop = tf.nn.dropout(h_fc1, keep_prob)

# Output layer
W_fc2 = weight_variable([1024, labels_count])
b_fc2 = bias_variable([labels_count])
y = tf.nn.softmax(tf.matmul(h_fc1_drop, W_fc2) + b_fc2)
# print(y.get_shape())

# Loss
cross_entropy = -tf.reduce_sum(y_*tf.log(y))

# Optimizer
train_step = tf.train.AdamOptimizer(LEARNING_RATE).minimize(cross_entropy)

# Evaluation
correct_prediction = tf.equal(tf.argmax(y, 1), tf.argmax(y_, 1))
accuracy = tf.reduce_mean(tf.cast(correct_prediction, 'float'))

# Prediction
predict = tf.argmax(y, 1)

epochs_completed = 0
index_in_epoch = 0
num_examples = train_images.shape[0]

# Serve the training data batch by batch
def next_batch(batch_size):
    global train_images
    global train_labels
    global index_in_epoch
    global epochs_completed

    start = index_in_epoch
    index_in_epoch += batch_size

    # Once all the training data has been used, it is randomly reordered
    if index_in_epoch > num_examples:
        epochs_completed += 1
        # Shuffle the data
        perm = np.arange(num_examples)
        np.random.shuffle(perm)
        train_images = train_images[perm]
        train_labels = train_labels[perm]
        # Start the next epoch
        start = 0
        index_in_epoch = batch_size
        assert batch_size <= num_examples
    end = index_in_epoch
    return train_images[start:end], train_labels[start:end]

init = tf.global_variables_initializer()
sess = tf.InteractiveSession()
sess.run(init)

# Values recorded for visualization
train_accuracies = []
validation_accuracies = []
x_range = []
display_step = 1

# Iterate many times, fetching data with next_batch
for i in range(TRAINING_ITERATIONS):
    # Get a new batch
    batch_xs, batch_ys = next_batch(BATCH_SIZE)

    # Report progress on the steps selected by display_step
    if i%display_step == 0 or (i+1) == TRAINING_ITERATIONS:
        # Feed x and y_, with dropout switched off for evaluation
        train_accuracy = accuracy.eval(feed_dict={x: batch_xs, y_: batch_ys, keep_prob: 1.0})
        if (VALIDATRION_SIZE):
            validation_accuracy = accuracy.eval(feed_dict={x: validation_images[0: BATCH_SIZE],
                                                           y_: validation_labels[0: BATCH_SIZE],
                                                           keep_prob: 1.0})
            print('train_accuracy / validation_accuracy => %.2f / %.2f for step %d'%(train_accuracy,
                                                                                     validation_accuracy, i))
            validation_accuracies.append(validation_accuracy)
        else:
            print('training_accuracy => %.4f for step %d'%(train_accuracy, i))
        train_accuracies.append(train_accuracy)
        x_range.append(i)

        # Increase the display step so that later reports become less frequent
        if i%(display_step*10) ==0 and i:
            display_step *= 10

    # Train on the batch
    sess.run(train_step, feed_dict={x: batch_xs, y_: batch_ys, keep_prob: DROPOUT})

Result: