
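Task overview:

Learn the normalization methods commonly used in deep learning.

Details:

The first part of this section covers Batch Normalization, the most important normalization method in deep learning, and analyzes how it is computed. It also explains how the three BN variants in PyTorch, nn.BatchNorm1d, nn.BatchNorm2d, and nn.BatchNorm3d, are computed and how they work.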

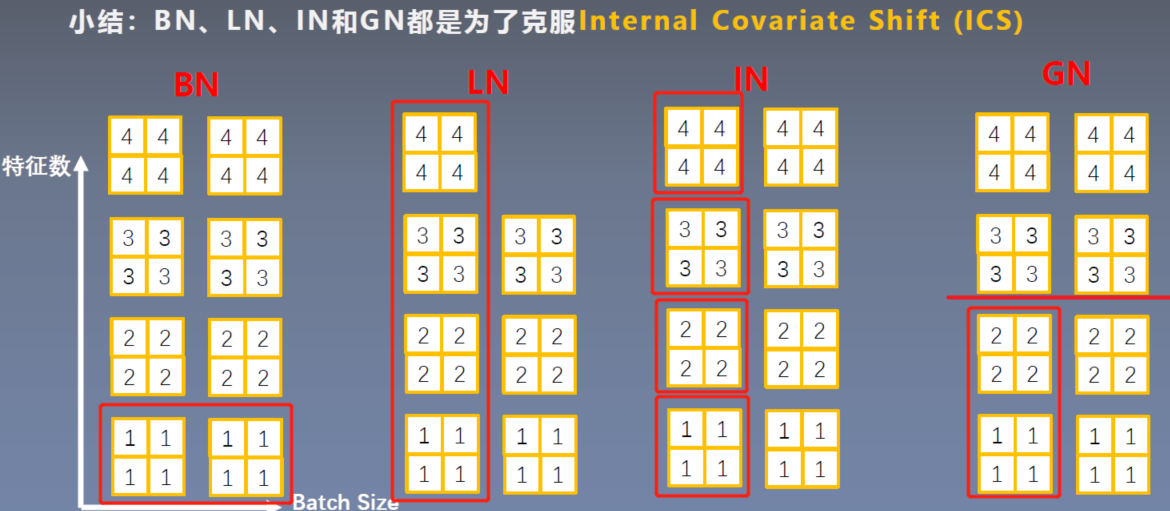

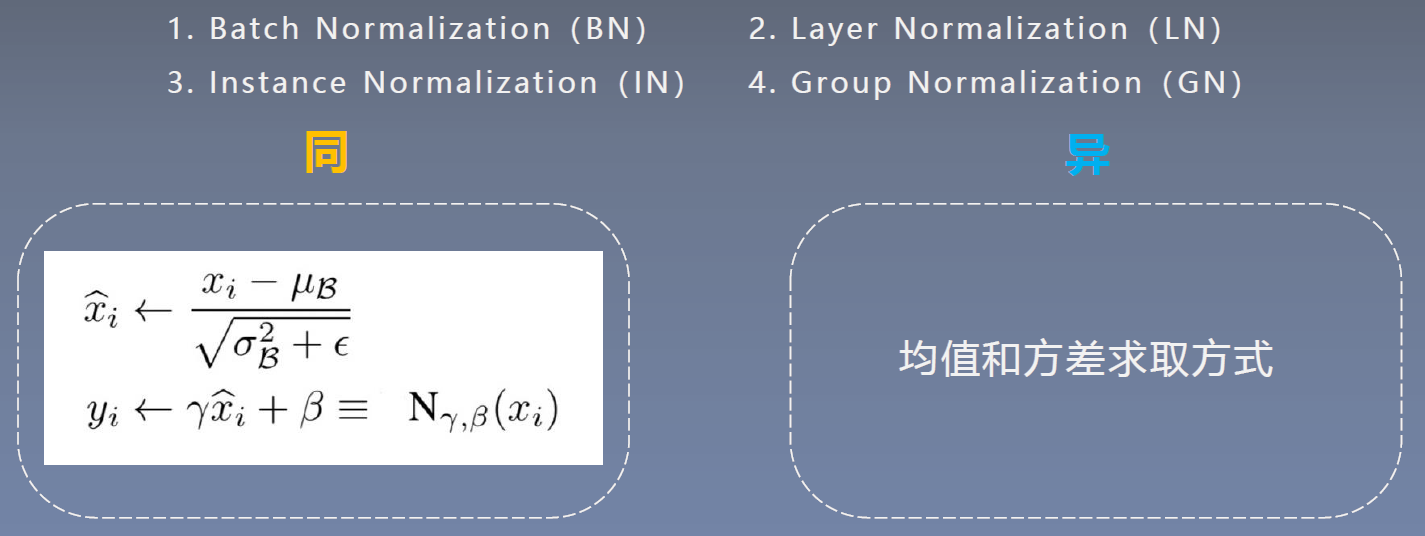

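The second part covers the common normalization methods that appeared after 2015: Layer Normalization, Instance Normalization, and Group Normalization. It analyzes where each method comes from and where it is used, and compares how BN, LN, IN, and GN differ in computation.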

I. Why Normalization

Normalization constrains the scale of the data, which helps avoid exploding or vanishing gradients and makes the model easier to train.

II. Common Normalization Methods: BN, LN, IN, and GN

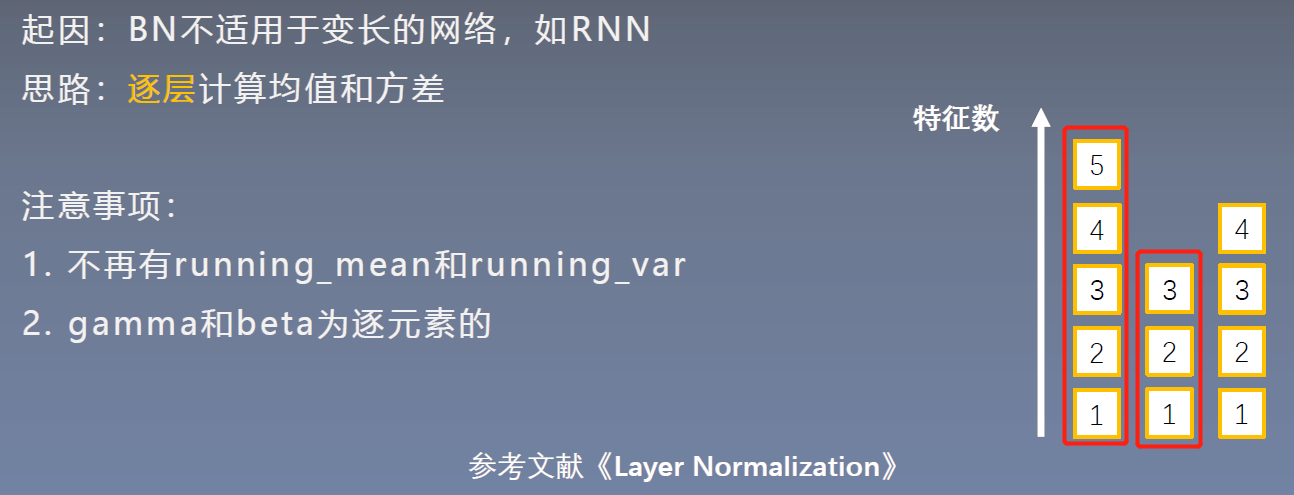
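The four methods apply the same standardization step (subtract a mean, divide by a standard deviation) and differ only in which axes of an (N, C, H, W) tensor the statistics are computed over. The following is a minimal sketch of that difference; the tensor x, its sizes, and the group count are illustrative choices, not values from the course code.

```python
import torch

# Illustrative input: N=2 samples, C=4 channels, H=W=3 (arbitrary sizes).
x = torch.randn(2, 4, 3, 3)
num_groups = 2  # assumed for the GN case; must divide C

# BN: one mean per channel, shared across the batch and spatial positions.
bn_mean = x.mean(dim=(0, 2, 3))                  # shape [C]
# LN (normalizing over C, H, W): one mean per sample.
ln_mean = x.mean(dim=(1, 2, 3))                  # shape [N]
# IN: one mean per sample and per channel.
in_mean = x.mean(dim=(2, 3))                     # shape [N, C]
# GN: one mean per sample and per group of channels.
gn_mean = x.view(2, num_groups, -1).mean(dim=2)  # shape [N, G]

print(bn_mean.shape, ln_mean.shape, in_mean.shape, gn_mean.shape)
```

The variance is taken over the same axes in each case, so the shapes printed here are also the shapes of the per-method statistics.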

1. Layer Normalization

1.1 nn.LayerNorm

Test code:

import torch
import numpy as np
import torch.nn as nn
from tools.common_tools import set_seed  # helper from the course's local tools package

set_seed(1)  # set the random seed

# ======================================== nn.layer norm
flag = 1
# flag = 0
if flag:
    batch_size = 2
    num_features = 3
    features_shape = (2, 2)

    feature_map = torch.ones(features_shape)  # 2D
    feature_maps = torch.stack([feature_map * (i + 1) for i in range(num_features)], dim=0)  # 3D
    feature_maps_bs = torch.stack([feature_maps for i in range(batch_size)], dim=0)  # 4D

    # With the settings above, feature_maps_bs has shape [2, 3, 2, 2] (B, C, H, W).
    # The [6, 3, 4] and [6, 3] variants below assume a shape of [8, 6, 3, 4] instead
    # (batch_size = 8, num_features = 6, features_shape = (3, 4)).

    ln = nn.LayerNorm(feature_maps_bs.size()[1:], elementwise_affine=True)
    # ln = nn.LayerNorm(feature_maps_bs.size()[1:], elementwise_affine=False)
    # ln = nn.LayerNorm([6, 3, 4])
    # ln = nn.LayerNorm([6, 3])

    output = ln(feature_maps_bs)

    print("Layer Normalization")
    print(ln.weight.shape)
    print(feature_maps_bs[0, ...])
    print(output[0, ...])

Output:

Layer Normalization
torch.Size([3, 2, 2])
tensor([[[1., 1.],
         [1., 1.]],

        [[2., 2.],
         [2., 2.]],

        [[3., 3.],
         [3., 3.]]])
tensor([[[-1.2247, -1.2247],
         [-1.2247, -1.2247]],

        [[ 0.0000,  0.0000],
         [ 0.0000,  0.0000]],

        [[ 1.2247,  1.2247],
         [ 1.2247,  1.2247]]], grad_fn=<SelectBackward>)
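The value ±1.2247 in the output above can be checked by hand. Each sample holds four 1s, four 2s, and four 3s, and nn.LayerNorm normalizes over all twelve values using the biased variance and a default eps of 1e-5. The weight starts at 1 and the bias at 0, so they do not change the result. A small pure-Python sketch of that arithmetic:

```python
import math

# One sample from the test above, flattened: channels filled with 1, 2 and 3.
values = [1.0] * 4 + [2.0] * 4 + [3.0] * 4
eps = 1e-5  # default eps of nn.LayerNorm

mean = sum(values) / len(values)                          # 2.0
var = sum((v - mean) ** 2 for v in values) / len(values)  # biased variance, 2/3
normalized = [(v - mean) / math.sqrt(var + eps) for v in values]

print(round(normalized[0], 4), round(normalized[4], 4), round(normalized[8], 4))
# -> -1.2247 0.0 1.2247
```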

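If the layer is created with:

ln = nn.LayerNorm(feature_maps_bs.size()[1:], elementwise_affine=False)

the test code raises an error:

AttributeError: 'NoneType' object has no attribute 'shape'

With elementwise_affine=False the layer has no learnable weight or bias, so ln.weight is None and print(ln.weight.shape) fails. The normalization itself still runs.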
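A minimal sketch of this case, using the same [3, 2, 2] normalized shape as the test. The guard on ln.weight is an illustrative way to avoid the error, not part of the original code.

```python
import torch
import torch.nn as nn

x = torch.ones(2, 3, 2, 2)
ln = nn.LayerNorm(x.size()[1:], elementwise_affine=False)

# No learnable parameters are created, so both attributes are None.
print(ln.weight, ln.bias)

# Guard the shape access instead of assuming the parameter exists.
weight_shape = ln.weight.shape if ln.weight is not None else None
print(weight_shape)

# The forward pass is unaffected.
output = ln(x)
print(output.shape)
```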

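The normalized_shape argument of nn.LayerNorm() must match the trailing dimensions of the input, counted from the last dimension backward. Take an input feature_maps_bs of shape [8, 6, 3, 4] (B, C, H, W):

nn.LayerNorm([4]), nn.LayerNorm([3, 4]), and nn.LayerNorm([6, 3, 4]) are all valid, but nn.LayerNorm([6, 3]) is not, because [6, 3] does not match the last two dimensions [3, 4].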
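The rule can be tried directly. This sketch assumes a random input of shape [8, 6, 3, 4]; the variable names are illustrative.

```python
import torch
import torch.nn as nn

x = torch.randn(8, 6, 3, 4)  # B, C, H, W

# Valid: each normalized_shape matches the trailing dimensions of x.
for shape in ([4], [3, 4], [6, 3, 4]):
    out = nn.LayerNorm(shape)(x)
    print(shape, "->", tuple(out.shape))

# Invalid: [6, 3] matches dimensions 1 and 2, not the last two.
try:
    nn.LayerNorm([6, 3])(x)
    failed = False
except RuntimeError as err:
    failed = True
    print("LayerNorm([6, 3]) failed:", err)
```

Constructing nn.LayerNorm([6, 3]) succeeds on its own; the mismatch is only detected when the layer is applied to the input.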

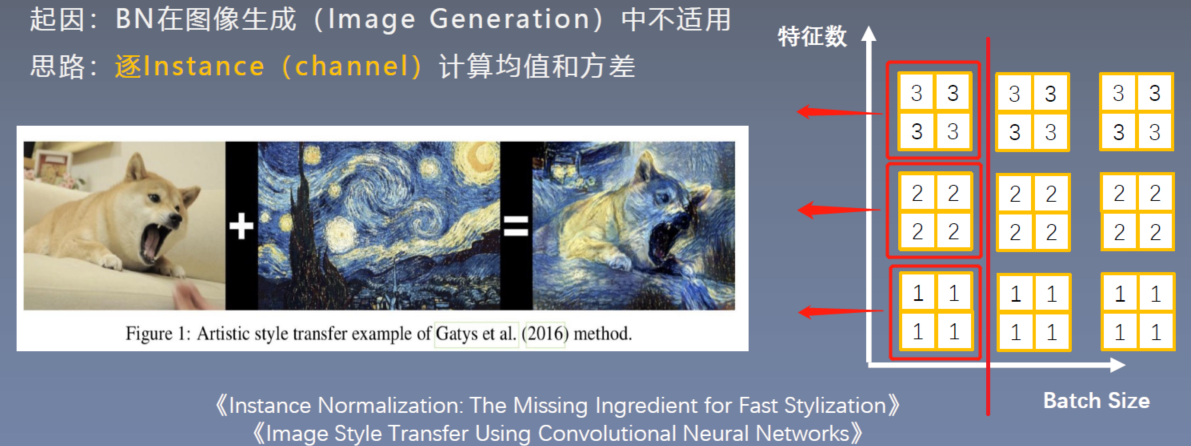

2. Instance Normalization

2.1 nn.InstanceNorm

Test code:

# ======================================== nn.instance norm 2d
flag = 1
# flag = 0
if flag:
    batch_size = 3
    num_features = 3
    momentum = 0.3
    features_shape = (2, 2)

    feature_map = torch.ones(features_shape)  # 2D
    feature_maps = torch.stack([feature_map * (i + 1) for i in range(num_features)], dim=0)  # 3D
    feature_maps_bs = torch.stack([feature_maps for i in range(batch_size)], dim=0)  # 4D

    print("Instance Normalization")
    print("input data:\n{} shape is {}".format(feature_maps_bs, feature_maps_bs.shape))

    instance_n = nn.InstanceNorm2d(num_features=num_features, momentum=momentum)

    for i in range(1):
        outputs = instance_n(feature_maps_bs)
        print(outputs)

        # By default nn.InstanceNorm2d uses affine=False and track_running_stats=False,
        # so running_mean, running_var, weight, and bias are all None and have no shape.
        # print("\niter:{}, running_mean.shape: {}".format(i, instance_n.running_mean.shape))
        # print("iter:{}, running_var.shape: {}".format(i, instance_n.running_var.shape))
        # print("iter:{}, weight.shape: {}".format(i, instance_n.weight.shape))
        # print("iter:{}, bias.shape: {}".format(i, instance_n.bias.shape))

Output:

Instance Normalization
input data:
tensor([[[[1., 1.],
          [1., 1.]],

         [[2., 2.],
          [2., 2.]],

         [[3., 3.],
          [3., 3.]]],


        [[[1., 1.],
          [1., 1.]],

         [[2., 2.],
          [2., 2.]],

         [[3., 3.],
          [3., 3.]]],


        [[[1., 1.],
          [1., 1.]],

         [[2., 2.],
          [2., 2.]],

         [[3., 3.],
          [3., 3.]]]]) shape is torch.Size([3, 3, 2, 2])
tensor([[[[0., 0.],
          [0., 0.]],

         [[0., 0.],
          [0., 0.]],

         [[0., 0.],
          [0., 0.]]],


        [[[0., 0.],
          [0., 0.]],

         [[0., 0.],
          [0., 0.]],

         [[0., 0.],
          [0., 0.]]],


        [[[0., 0.],
          [0., 0.]],

         [[0., 0.],
          [0., 0.]],

         [[0., 0.],
          [0., 0.]]]])
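The output is all zeros because every (sample, channel) slice in this input is constant: Instance Normalization subtracts each slice's own mean, which leaves nothing. A sketch with a non-constant input makes the per-slice computation visible. The input x and the manual formula are illustrative, and assume the default eps of 1e-5 and affine=False.

```python
import torch
import torch.nn as nn

# Illustrative non-constant input of shape [N=2, C=3, H=2, W=2].
x = torch.arange(24, dtype=torch.float32).view(2, 3, 2, 2)

instance_n = nn.InstanceNorm2d(num_features=3)
outputs = instance_n(x)

# Manual version: statistics over H and W, separately for each sample and channel.
mean = x.mean(dim=(2, 3), keepdim=True)
var = x.var(dim=(2, 3), unbiased=False, keepdim=True)
manual = (x - mean) / torch.sqrt(var + 1e-5)

print(torch.allclose(outputs, manual, atol=1e-5))
print(outputs[0, 0])
```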

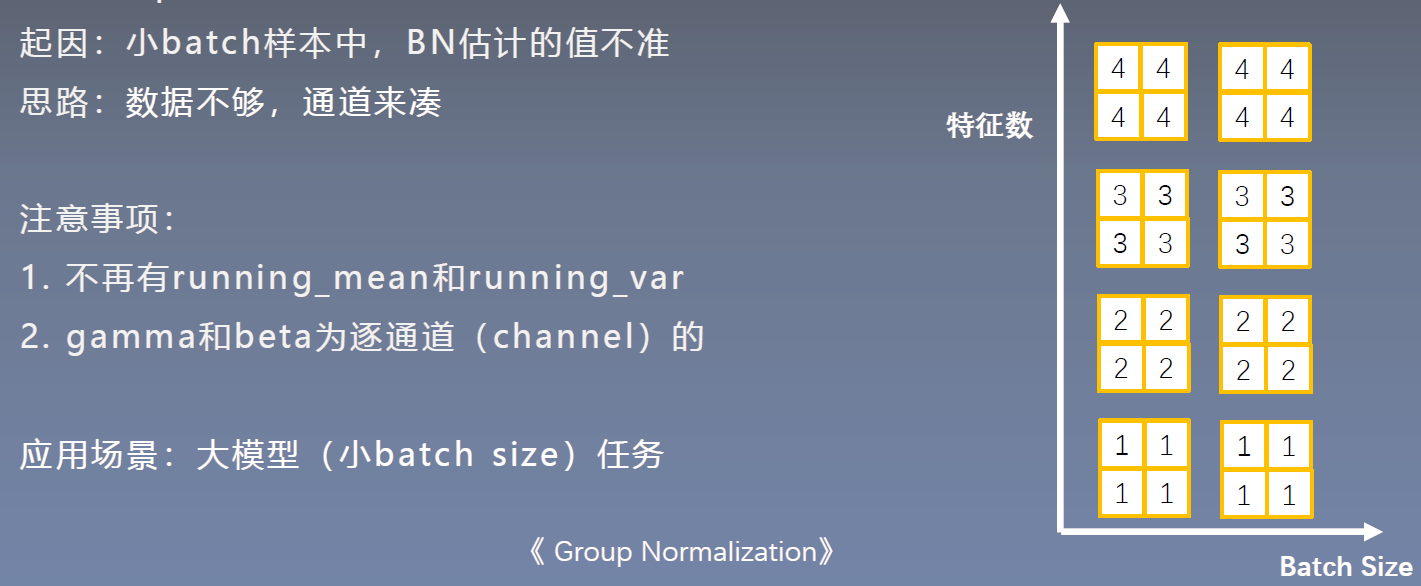

3. Group Normalization

3.1 nn.GroupNorm

Test code:

# ======================================== nn.group norm
flag = 1
# flag = 0
if flag:
    batch_size = 2
    num_features = 4
    # num_groups = 3 fails: the number of channels must be divisible by num_groups
    num_groups = 2
    features_shape = (2, 2)

    feature_map = torch.ones(features_shape)  # 2D
    feature_maps = torch.stack([feature_map * (i + 1) for i in range(num_features)], dim=0)  # 3D
    feature_maps_bs = torch.stack([feature_maps * (i + 1) for i in range(batch_size)], dim=0)  # 4D

    gn = nn.GroupNorm(num_groups, num_features)
    outputs = gn(feature_maps_bs)

    print("Group Normalization")
    print(gn.weight.shape)
    print(outputs[0])

Output:

Group Normalization
torch.Size([4])
tensor([[[-1.0000, -1.0000],
         [-1.0000, -1.0000]],

        [[ 1.0000,  1.0000],
         [ 1.0000,  1.0000]],

        [[-1.0000, -1.0000],
         [-1.0000, -1.0000]],

        [[ 1.0000,  1.0000],
         [ 1.0000,  1.0000]]], grad_fn=<SelectBackward>)
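With num_groups = 2, the first two channels form one group and the last two form the other, and the statistics are computed per sample within each group. For the first sample, the first group holds four 1s and four 2s (mean 1.5, biased variance 0.25), so its two channels map to about -1 and +1; the second group behaves the same way. The sketch below reproduces nn.GroupNorm by reshaping, assuming the default eps of 1e-5 and the initial weight of 1 and bias of 0.

```python
import torch
import torch.nn as nn

# Same input as the test above: shape [2, 4, 2, 2], sample i scaled by (i + 1).
feature_map = torch.ones(2, 2)
feature_maps = torch.stack([feature_map * (i + 1) for i in range(4)], dim=0)
x = torch.stack([feature_maps * (i + 1) for i in range(2)], dim=0)

num_groups = 2
gn = nn.GroupNorm(num_groups, 4)

# Manual version: flatten each group, then normalize over that group.
grouped = x.view(x.size(0), num_groups, -1)
mean = grouped.mean(dim=2, keepdim=True)
var = grouped.var(dim=2, unbiased=False, keepdim=True)
manual = ((grouped - mean) / torch.sqrt(var + 1e-5)).view_as(x)

print(torch.allclose(gn(x), manual, atol=1e-5))
print(manual[0, :, 0, 0])
```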

III. Normalization Summary