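After working through a series of PyTorch training methods, there is one important point left to cover: how to build your own dataset. The earlier examples focused on using PyTorch on the algorithm side, and the datasets were either provided by PyTorch or generated as data plus labels directly with data.ImageFolder (which works well for ordinary image problems). When the training data is something like 3D data rather than ordinary images, knowing how to build your own dataset becomes essential. Below I use CT images (format .nii.gz) as the example to walk through dataset creation.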

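Building your own dataset in PyTorch is actually very convenient: subclass torch.utils.data.Dataset. A dataset built this way can be passed straight to torch.utils.data.DataLoader, which handles batching (batch_size), shuffling and so on. Subclassing torch.utils.data.Dataset only takes three steps: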

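1) Write __init__: this mainly initializes things such as the storage paths of the dataset.

2) __getitem__(self, index): required. You have to write this yourself because the base class provides no implementation. Its job is to read the contents of one element by index.

3) __len__(self): required. It returns the length of the whole dataset.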
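As a minimal sketch of these three methods, the toy dataset below keeps everything in memory. `ToyDataset` and its contents are hypothetical and exist only to show the shape of the interface, not the CT dataset built later.

```python
import torch
from torch.utils.data import Dataset, DataLoader


class ToyDataset(Dataset):
    """Hypothetical in-memory dataset used only to illustrate the three methods."""

    def __init__(self, num_samples=6):
        super().__init__()
        # __init__: set up whatever is needed to locate the samples later.
        self.inputs = torch.arange(num_samples, dtype=torch.float32).reshape(-1, 1)
        self.labels = torch.arange(num_samples) % 2

    def __getitem__(self, index):
        # __getitem__: return one (input, label) pair for the given index.
        return self.inputs[index], self.labels[index]

    def __len__(self):
        # __len__: report how many samples the dataset holds.
        return len(self.inputs)


toy_dataset = ToyDataset()
toy_loader = DataLoader(toy_dataset, batch_size=4, shuffle=False)
batch_shapes = [tuple(x.shape) for x, _ in toy_loader]
print(len(toy_dataset), batch_shapes)
```

With 6 samples and a batch size of 4, the loader yields one full batch and one smaller final batch.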

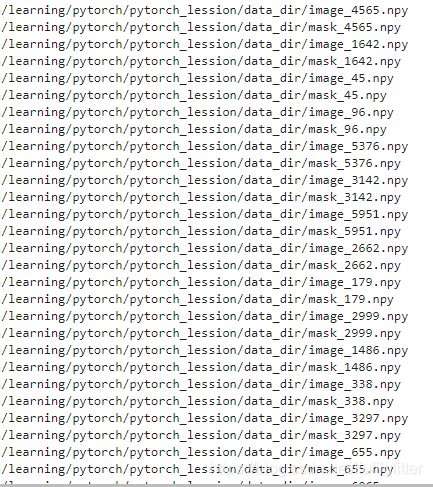

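Let's go straight to an example of these three functions. Our dataset consists of medical CT image groups, and each group is made up of multiple 512×512 slices. I want each sample to have an input of 5×512×512 and a label of 512×512. In other words, each sample feeds 5 input slices into an image segmentation task, and the task is to output the segmentation of the middle slice. So I first use separate code to read the CT images, save each input and its label in .npy format, and then generate a txt file that records these data. The generated txt is shown in the figure below:

That is, the paths of the training data and of their labels are stored, one pair per line. With that in place we can write our own dataset: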
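The preprocessing code itself is not shown in this post, so the following is only a sketch of what it might look like. It assumes a volume already loaded as a NumPy array of shape (slices, H, W); the function name `build_index`, the file names, and the (5, H, W) storage layout are all assumptions made for illustration.

```python
import os
import tempfile

import numpy as np


def build_index(volume, mask, out_dir, sample_depth=5):
    """Cut a (slices, H, W) volume into overlapping stacks and write an index file."""
    half = sample_depth // 2
    index_path = os.path.join(out_dir, "data_path.txt")
    with open(index_path, "w") as index_file:
        for center in range(half, volume.shape[0] - half):
            data_path = os.path.join(out_dir, f"data_{center}.npy")
            label_path = os.path.join(out_dir, f"label_{center}.npy")
            # Input: sample_depth consecutive slices; label: mask of the middle slice.
            np.save(data_path, volume[center - half:center + half + 1])
            np.save(label_path, mask[center])
            # One line per sample: "<input path> <label path>".
            index_file.write(f"{data_path} {label_path}\n")
    return index_path


# Synthetic stand-in for a CT volume (real slices would be 512x512).
out_dir = tempfile.mkdtemp()
volume = np.random.rand(8, 16, 16).astype(np.float32)
mask = (volume > 0.5).astype(np.int64)
index_path = build_index(volume, mask, out_dir)
with open(index_path) as f:
    index_lines = f.read().splitlines()
print(len(index_lines))
```

Each line of the resulting file holds two space-separated paths, which is the format the dataset class splits on.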

import torch
from torch.utils.data import Dataset,DataLoader
import numpy as np

class MyDataset(Dataset):
    def __init__(self,data_txt,transform=None):
        super().__init__()
        self.data_txt=data_txt
        self.imgs=[]
        self.transform=transform
        # __init__ reads every path and stores it in self.imgs; __getitem__ later
        # only has to pick one pair of paths and load the corresponding .npy files
        with open(self.data_txt,'r') as data_file:
            lines=data_file.readlines()
        for line in lines:
            line=line.strip()
            train_path=line.split(' ')[0]
            label_path=line.split(' ')[1]
            self.imgs.append([train_path,label_path])

    def __getitem__(self,index):
        train_path,label_path=self.imgs[index]
        training_data=np.load(train_path)
        label_data=np.load(label_path)
        # Convert the label directly with torch.from_numpy instead of
        # transforms.ToTensor, which can rescale the label values
        # (it maps uint8 input to [0, 1])
        label_data=torch.from_numpy(label_data)
        if self.transform is not None:
            # Note: ToTensor treats a 3-D array as (H, W, C) and returns (C, H, W)
            training_data=self.transform(training_data)
        else:
            training_data=torch.from_numpy(training_data)
        training_data=training_data.unsqueeze(0) # add the channel dimension
        return training_data,label_data

    def __len__(self): # must be defined by the subclass
        return len(self.imgs)

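When building your own dataset, the most important point is that every item returned by __getitem__ must match the data layout PyTorch expects. For our image segmentation task, a batch of training_data has the shape [batch_size, channel, depth, height, width], so each item returned by the dataset must have the shape [channel, depth, height, width]. That is why the code contains training_data=training_data.unsqueeze(0), which adds the channel dimension to the input.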
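The shapes involved can be checked with a few lines. This is a small sketch with 8×8 slices standing in for the 512×512 ones; `torch.stack` is used here to mimic what the DataLoader's default collation does with tensors of equal shape.

```python
import torch

sample = torch.zeros(5, 8, 8)          # one sample: [depth, height, width]
sample = sample.unsqueeze(0)           # add channel: [channel, depth, height, width]
batch = torch.stack([sample, sample])  # batching adds the leading batch dimension
print(tuple(sample.shape), tuple(batch.shape))
```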

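The label, meanwhile, should be 3-dimensional (batch, height, width) with dtype long. It does not need to be turned into a one-hot vector, but a 1-channel label of shape (batch, 1, height, width) has to be converted with label.squeeze(1).long() to fix both the dimensions and the dtype.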
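A minimal sketch of that label conversion, assuming the loss is nn.CrossEntropyLoss (as in the training script later in this post) and using small random tensors in place of real predictions:

```python
import torch
import torch.nn as nn

logits = torch.randn(2, 3, 4, 4)                   # [batch, num_classes, height, width]
label = torch.randint(0, 3, (2, 1, 4, 4)).float()  # 1-channel label with the wrong dtype

target = label.squeeze(1).long()                   # -> [batch, height, width], int64
loss = nn.CrossEntropyLoss()(logits, target)
print(tuple(target.shape), target.dtype, loss.dim())
```

The target holds class indices per pixel, which is why no one-hot encoding is needed.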

For more detail on these issues, see:

https://blog.csdn.net/nominior/article/details/105098899

Once the dataset class is written, it can be used like this:

from torchvision import datasets,models,transforms

data_txt='data.txt'
transform=transforms.ToTensor()
training_dataset=MyDataset(data_txt,transform)
# batches can now be read directly from training_loader for training
training_loader = DataLoader(training_dataset, batch_size=2, shuffle=True)
#for step,(imgs,labels) in enumerate(training_loader):

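Here we use unet3d for the image segmentation. When building the unet3d network, note that for it to train correctly on the GPU, the layers must be held in a module container such as nn.Sequential() (nn.ModuleList also works) rather than in a plain Python list of convolution layers, pooling layers and so on. Layers kept in a plain list are not registered as submodules, so their parameters are not moved to CUDA along with the model, and the training data and the weights end up on different devices.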
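The difference is easy to see by counting registered parameters. The two classes below are hypothetical and only illustrate the point; they are not part of the unet3d code.

```python
import torch.nn as nn


class ListNet(nn.Module):
    def __init__(self):
        super().__init__()
        # A plain Python list: the layers are NOT registered as submodules.
        self.layers = [nn.Conv3d(1, 2, 3), nn.Conv3d(2, 2, 3)]


class SequentialNet(nn.Module):
    def __init__(self):
        super().__init__()
        # nn.Sequential (or nn.ModuleList) registers every layer.
        self.layers = nn.Sequential(nn.Conv3d(1, 2, 3), nn.Conv3d(2, 2, 3))


num_list_params = len(list(ListNet().parameters()))
num_seq_params = len(list(SequentialNet().parameters()))
print(num_list_params, num_seq_params)
```

Because the list-based model reports no parameters, neither `.to(device)` nor the optimizer would ever see its weights.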

#unet3d.py
import torch
import torch.nn as nn

class conv3dgroup(nn.Module):
    # Encoder block: two conv + batch norm + ReLU stages (c_in -> c_out/2 -> c_out)
    def __init__(self,c_in,c_out,k_size=3,stride=1,padding=1):
        super().__init__()
        self.conv31=nn.Conv3d(c_in,int(c_out/2),k_size,stride,padding)
        self.batch_norm1=nn.BatchNorm3d(num_features=int(c_out/2))
        self.relu1=nn.ReLU()
        self.conv32=nn.Conv3d(int(c_out/2),c_out,k_size,stride,padding)
        self.batch_norm2=nn.BatchNorm3d(num_features=c_out)
        self.relu2=nn.ReLU()

    def forward(self,x):
        #print('input:',x.size())
        #print(self.conv31)
        x=self.conv31(x)
        #print('conv31:',x.size())
        x = self.batch_norm1(x)
        #print('batch_norm1:',x.size())
        x=self.relu1(x)
        x=self.conv32(x)
        #print('conv32:',x.size())
        x = self.batch_norm2(x)
        #print('batch_norm2:',x.size())
        x=self.relu2(x)
        #print('relu2:',(x.size()))
        return x

class conv3dgroup_decoder(nn.Module):
    # Decoder block: two conv + batch norm + ReLU stages (c_in -> c_out -> c_out)
    def __init__(self,c_in,c_out,k_size=3,stride=1,padding=1):
        super().__init__()
        self.conv31=nn.Conv3d(c_in,int(c_out),k_size,stride,padding)
        self.batch_norm1=nn.BatchNorm3d(num_features=int(c_out))
        self.relu1=nn.ReLU()
        self.conv32=nn.Conv3d(int(c_out),c_out,k_size,stride,padding)
        self.batch_norm2=nn.BatchNorm3d(num_features=c_out)
        self.relu2=nn.ReLU()

    def forward(self,x):
        #print('input:',x.size())
        #print(self.conv31)
        x=self.conv31(x)
        #print('conv31:',x.size())
        x = self.batch_norm1(x)
        #print('batch_norm1:',x.size())
        x=self.relu1(x)
        x=self.conv32(x)
        #print('conv32:',x.size())
        x = self.batch_norm2(x)
        #print('batch_norm2:',x.size())
        x=self.relu2(x)
        #print('relu2:',(x.size()))
        return x

class Encoder(nn.Module):
    def __init__(self,c_in,model_depth=[64,128,256,512],pool_kernel=2):
        super().__init__()
        self.feature_map={}
        self.pool_record={}
        self.model_depth=len(model_depth)
        # Be sure to use nn.Sequential here; otherwise the weights and the data
        # end up on different devices
        self.conv_group=nn.Sequential()
        self.max_pool=nn.Sequential()
        # Pool only over height and width; the depth (slice) axis is kept as is
        self.max_pool.add_module("max_pooled",nn.MaxPool3d((1,pool_kernel,pool_kernel),stride=(1,pool_kernel,pool_kernel), padding=0))
        self.conv_group.add_module("conv0_0",conv3dgroup(c_in,model_depth[0]))
        for i in range(1,len(model_depth)):
            conv_name="conv"+str(0)+"_"+str(i)
            self.conv_group.add_module(conv_name,conv3dgroup(model_depth[i-1],model_depth[i]))
        #print(self.conv_group)

    def forward(self,x):
        #print('step:',0)
        x=self.conv_group[0](x)
        self.feature_map[0]=x
        #print('after conv:',x.size())
        for i in range(1,self.model_depth):
            #print('step:',i)
            y=self.max_pool[0](x)
            #print('after max:',y.size())
            x=self.conv_group[i](y)
            #print('after conv:',x.size())
            self.feature_map[i]=x
        #print(x.shape)
        return x,self.feature_map

class Decoder(nn.Module):
    def __init__(self,c_out,model_depth=[64,128,256,512],pool_kernel=2):
        super().__init__()
        self.conv_group=nn.Sequential()
        self.deconv_group=nn.Sequential()
        self.model_depth=len(model_depth)
        for i in range(self.model_depth-2,-1,-1): # 2,1,0
            # Upsample height and width by 2; the depth axis is left unchanged
            dconv = nn.ConvTranspose3d(in_channels=model_depth[i+1], out_channels= model_depth[i+1],kernel_size=3, stride=(1,2,2), padding=(1,1,1), output_padding=(0,1,1))
            deconv_name='deconv'+str(1)+'_'+str(i)
            self.deconv_group.add_module(deconv_name,dconv)
            feature_map_size=model_depth[i]+model_depth[i+1]
            conv_name='conv'+str(1)+'_'+str(i)
            self.conv_group.add_module(conv_name,conv3dgroup_decoder(feature_map_size,model_depth[i]))
        #print(self.conv_group)
        #print(self.deconv_group)

    def forward(self,x,feature_map):
        for i in range(self.model_depth-2,-1,-1):
            #print('step:',i)
            # Modules were added deepest level first, so level i sits at this position
            # (equals 2-i for the default four-level model_depth)
            idx=self.model_depth-2-i
            y=self.deconv_group[idx](x)
            #print('deconv_group:',x.size(),y.size(),feature_map[i].size())
            # Skip connection: concatenate the encoder feature map along channels
            feature_map_now=torch.cat([feature_map[i],y],dim=1)
            x=self.conv_group[idx](feature_map_now)
            #print('after conv:',x.size())
        #print(x.shape)
        return x

class unet3d(nn.Module):
    def __init__(self,c_in,num_class,sample_depth,model_depth=[64,128,256,512]):
        super().__init__()
        self.encoder=Encoder(c_in,model_depth=model_depth,pool_kernel=2)
        self.decoder=Decoder(num_class,model_depth=model_depth)
        # The kernel spans the whole depth axis with no depth padding, so the
        # sample_depth input slices collapse to a single output slice
        self.out_layer=nn.Conv3d(model_depth[0],num_class,kernel_size=(sample_depth,3,3),stride=1,padding=(0,1,1))

    def forward(self,x):
        #print('x input:',x.size())
        x_encoder,feature_map=self.encoder(x)
        #print('x_encoder:',x_encoder.size())
        #print('len(feature_map):',len(feature_map))
        #for i in range(len(feature_map)):
        #    print(feature_map[i].size())
        x_decoder=self.decoder(x_encoder,feature_map)
        #print('x_decoder:',x_decoder.size())
        x=self.out_layer(x_decoder)
        #print('final output:',x.size())
        return x

if __name__ == '__main__':
    # Quick check with a random input of shape [batch, channel, depth, height, width]
    x_test = torch.randn(1, 1, 5,512,512)
    device=torch.device('cuda' if torch.cuda.is_available() else 'cpu')
    print(device)
    x_test=x_test.to(device)
    '''
    encoder=Encoder(1,model_depth=[64,128,256,512],pool_kernel=2)
    encoder=encoder.to(device)
    print(x_test.size())
    x_encoder,feature_map=encoder.forward(x_test)
    print(x_encoder.shape)
    decoder=Decoder(2,model_depth=[64,128,256,512])
    decoder=decoder.to(device)
    y_decoder=decoder.forward(x_encoder,feature_map)
    '''
    unet=unet3d(1,2,5)
    print(unet.encoder.conv_group[0])
    unet=unet.to(device)
    print(next(unet.parameters()).is_cuda)
    print(x_test.device)
    #print(list(unet.parameters()))
    unet.forward(x_test)
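To see how the depth axis is handled, the sketch below runs the three layer types with the same kernel, stride and padding settings as above on a small tensor. The channel counts and the 16×16 slice size are reduced purely to keep the example light.

```python
import torch
import torch.nn as nn

sample_depth = 5
x = torch.randn(1, 4, sample_depth, 16, 16)  # [batch, channel, depth, height, width]

# Same settings as in Encoder, Decoder and unet3d.out_layer, with fewer channels.
pool = nn.MaxPool3d((1, 2, 2), stride=(1, 2, 2))
up = nn.ConvTranspose3d(4, 4, kernel_size=3, stride=(1, 2, 2),
                        padding=(1, 1, 1), output_padding=(0, 1, 1))
head = nn.Conv3d(4, 2, kernel_size=(sample_depth, 3, 3), stride=1, padding=(0, 1, 1))

pooled = pool(x)      # height and width halved, depth unchanged
restored = up(pooled) # height and width doubled back, depth unchanged
out = head(restored)  # depth collapsed from sample_depth to 1
print(tuple(pooled.shape), tuple(restored.shape), tuple(out.shape))
```

The final output keeps a depth axis of size 1, which has to be removed before the prediction is compared with a (batch, height, width) label.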

Next comes our main script:

import os
import torch
import torch.nn as nn
from torchvision import transforms
import matplotlib.pyplot as plt
import numpy as np
from torch.utils.data import Dataset, DataLoader
from unet3d import unet3d

class HepaticVesselData(Dataset):
    def __init__(self,txt,transform=None,label_transform=None):
        super().__init__()
        self.imgs=[]
        self.transform=transform
        self.label_transform=label_transform
        # Each line of the txt file holds "<input .npy path> <label .npy path>"
        with open(txt,'r') as data_txt:
            data_path=data_txt.readlines()
        for line in data_path:
            line=line.strip()
            training_data_path=line.split(' ')[0]
            label_data_path=line.split(' ')[1]
            #training_data=np.load(training_data_path)
            #label_data=np.load(label_data_path)
            self.imgs.append([training_data_path,label_data_path])

    def __getitem__(self,index):
        train_path=self.imgs[index][0]
        label_path=self.imgs[index][1]
        #print(self.imgs[index],train_path,label_path)
        training_data=np.load(train_path)
        label_data=np.load(label_path)
        #print('label_data:',label_data.shape)
        label_data=torch.from_numpy(label_data)
        #print('label_data:',label_data.size())
        if self.transform is not None:
            training_data=self.transform(training_data)
        else:
            training_data=torch.from_numpy(training_data)
        training_data=training_data.unsqueeze(0) # add the channel dimension
        return training_data,label_data

    def __len__(self):
        return len(self.imgs)

if __name__ == '__main__':
    training_data_path='/dfsdata2/liuli16_data/data/Hepatic_Vessel/train_data/'
    label_data_path='/dfsdata2/liuli16_data/data/Hepatic_Vessel/labelsTr/labelTr_only_hepatic_norevised/'
    liver_data_path='/dfsdata2/liuli16_data/data/Hepatic_Vessel/liver_mask_train_data/'
    data_dir='/dfsdata2/liuli16_data/learning/pytorch/pytorch_lession/data_dir/'
    data_txt='data_path.txt'
    sample_depth=5
    transform=transforms.Compose([transforms.ToTensor()])
    training_dataset=HepaticVesselData(data_txt,transform)
    training_loader = DataLoader(training_dataset, batch_size=2, shuffle=True)
    device=torch.device('cuda' if torch.cuda.is_available() else 'cpu')
    unet=unet3d(1,2,sample_depth)
    print(unet)
    unet=unet.to(device)
    criterion=nn.CrossEntropyLoss()
    optimizer=torch.optim.Adam(unet.parameters(),lr=0.0001)
    epoches=100
    losses=[]
    ckpt_dir='./checkpoint'
    os.makedirs(ckpt_dir,exist_ok=True) # torch.save does not create missing directories
    for i in range(epoches):
        running_loss=0.0
        for steps,(inputs,labels) in enumerate(training_loader):
            #print(inputs.size(),labels.size())
            inputs=inputs.float().to(device)
            labels=labels.long().to(device)
            #print(inputs.size(),labels.size())
            y_pred=unet(inputs)
            # Remove only the depth axis (size 1); a bare squeeze() would also
            # drop the batch axis whenever a batch holds a single sample
            y_pred=y_pred.squeeze(2)
            #print('y_pred size:',y_pred.size(),labels.size())
            loss=criterion(y_pred,labels)
            optimizer.zero_grad()
            loss.backward()
            optimizer.step()
            running_loss=running_loss+loss.item()
            if steps%50==0:
                print(steps,' loss:',loss.item())
        # loss.item() is already a per-batch mean, so average over the number of batches
        epoch_loss=running_loss/len(training_loader)
        losses.append(epoch_loss)
        print('epoch:',i,' training loss:',epoch_loss)
        plt.close()
        plt.plot(losses,label='training_loss')
        # No validation loop is run here, so only the training loss is plotted
        plt.legend()
        plt.savefig('loss.png')
        if i%10==0:
            PATH=os.path.join(ckpt_dir,'unet3d'+str(i)+'.pth')
            torch.save(unet.state_dict(), PATH)

With this in place, training can be run directly.
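The checkpoints written by torch.save(unet.state_dict(), PATH) can be loaded back into a model of the same architecture. The sketch below shows the round trip with a single small layer standing in for the full network; the temporary path and the stand-in layer are assumptions for illustration only.

```python
import os
import tempfile

import torch
import torch.nn as nn

# Small stand-in for the trained network; the real code would build unet3d(1, 2, 5).
model = nn.Conv3d(1, 2, 3)
ckpt_path = os.path.join(tempfile.mkdtemp(), "unet3d0.pth")
torch.save(model.state_dict(), ckpt_path)

# Rebuild the same architecture, then load the saved weights into it.
restored = nn.Conv3d(1, 2, 3)
restored.load_state_dict(torch.load(ckpt_path, map_location="cpu"))
weights_match = torch.equal(model.weight, restored.weight)
print(weights_match)
```

Passing map_location lets a checkpoint saved on a GPU be loaded on a machine without one.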