朴素贝叶斯法的学习与分类

贝叶斯定理

-

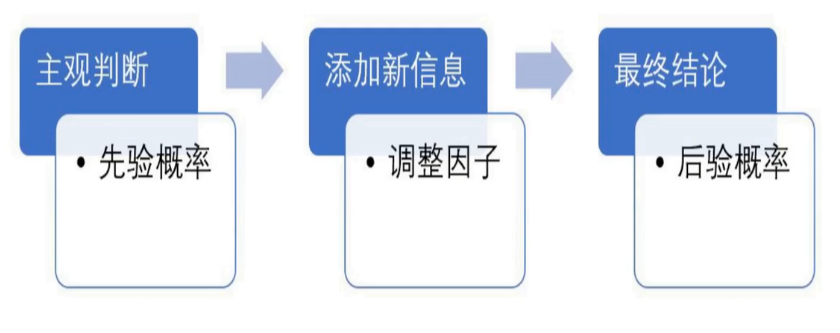

贝叶斯思维

-

条件概率

? P ( X = x ∣ Y = y ) = P ( X = x , Y = y ) P ( Y = y ) P(X=x \mid Y=y)=\frac{P(X=x, Y=y)}{P(Y=y)} P(X=x∣Y=y)=P(Y=y)P(X=x,Y=y)? -

贝叶斯定理

已知:

存在 K K K 类 c 1 , c 2 , ? ? , c K c_{1}, c_{2}, \cdots, c_{K} c1?,c2?,?,cK? , 给定一个新的实例 x = ( x ( 1 ) , x ( 2 ) , ? ? , x ( n ) ) x=\left(x^{(1)}, x^{(2)}, \cdots, x^{(n)}\right) x=(x(1),x(2),?,x(n))

问:该实例归属第 c i c_{i} ci? 类的可能性有多大?

? P ( Y = c i ∣ X = x ) = P ( X = x ∣ Y = c i ) ? P ( Y = c i ) P ( X = x ) P\left(Y=c_{i} \mid X=x\right)=\frac{P\left(X=x \mid Y=c_{i}\right) \cdot P\left(Y=c_{i}\right)}{P(X=x)} P(Y=ci?∣X=x)=P(X=x)P(X=x∣Y=ci?)?P(Y=ci?)?

即,

? P ( Y = c i ∣ X = x ) = P ( X = x ∣ Y = c i ) ? P ( Y = c i ) ∑ i = 1 K P ( X = x ∣ Y = c i ) ? P ( Y = c i ) P\left(Y=c_{i} \mid X=x\right)=\frac{P\left(X=x \mid Y=c_{i}\right) \cdot P\left(Y=c_{i}\right)}{\sum_{i=1}^{K} P\left(X=x \mid Y=c_{i}\right) \cdot P\left(Y=c_{i}\right)} P(Y=ci?∣X=x)=∑i=1K?P(X=x∣Y=ci?)?P(Y=ci?)P(X=x∣Y=ci?)?P(Y=ci?)?

? arg ? max ? c i P ( X = x ∣ Y = c i ) ? P ( Y = c i ) \arg \max _{c_{i}} P\left(X=x \mid Y=c_{i}\right) \cdot P\left(Y=c_{i}\right) argmaxci??P(X=x∣Y=ci?)?P(Y=ci?) -

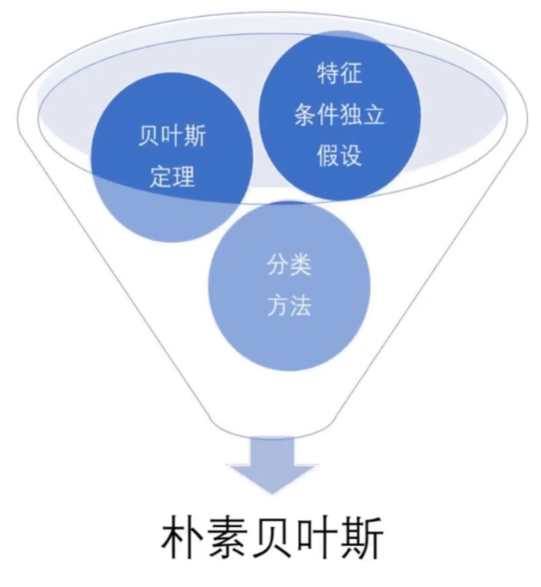

朴素贝叶斯

假设:实例特征之间相互独立

P ( X = x ∣ Y = c i ) = ∏ j = 1 n P ( X ( j ) = x ( j ) ∣ Y = c i ) ? P ( X = x ) = ∑ i = 1 K P ( Y = c i ) ∏ j = 1 n P ( X ( j ) = x ( j ) ∣ Y = c i ) ? P ( Y = c i ∣ X = x ) = P ( X = x ∣ Y = c i ) ? P ( Y = c i ) ∑ i = 1 K P ( Y = c i ) ∏ j = 1 n P ( X ( j ) = x ( j ) ∣ Y = c i ) ? P ( Y = c i ∣ X = x ) = P ( Y = c i ) ∏ j = 1 n P ( X ( j ) = x ( j ) ∣ Y = c i ) ∑ i = 1 K P ( Y = c i ) ∏ j = 1 n P ( X ( j ) = x ( j ) ∣ Y = c i ) P\left(X=x \mid Y=c_{i}\right)=\prod_{j=1}^{n} P\left(X^{(j)}=x^{(j)} \mid Y=c_{i}\right) \\\Longrightarrow P(X=x)=\sum_{i=1}^{K} P\left(Y=c_{i}\right) \prod_{j=1}^{n} P\left(X^{(j)}=x^{(j)} \mid Y=c_{i}\right) \\\Longrightarrow P\left(Y=c_{i} \mid X=x\right)=\frac{P\left(X=x \mid Y=c_{i}\right) \cdot P\left(Y=c_{i}\right)}{\sum_{i=1}^{K}P\left(Y=c_{i}\right) \prod_{j=1}^{n} P\left(X(j)=x^{(j)} \mid Y=c_{i}\right)} \\\Longrightarrow P\left(Y=c_{i} \mid X=x\right)=\frac{P\left(Y=c_{i}\right) \prod_{j=1}^{n} P\left(X^{(j)}=x^{(j)} \mid Y=c_{i}\right)}{\sum_{i=1}^{K} P\left(Y=c_{i}\right) \prod_{j=1}^{n} P\left(X(j)=x^{(j)} \mid Y=c_{i}\right)} P(X=x∣Y=ci?)=∏j=1n?P(X(j)=x(j)∣Y=ci?)?P(X=x)=∑i=1K?P(Y=ci?)∏j=1n?P(X(j)=x(j)∣Y=ci?)?P(Y=ci?∣X=x)=∑i=1K?P(Y=ci?)∏j=1n?P(X(j)=x(j)∣Y=ci?)P(X=x∣Y=ci?)?P(Y=ci?)??P(Y=ci?∣X=x)=∑i=1K?P(Y=ci?)∏j=1n?P(X(j)=x(j)∣Y=ci?)P(Y=ci?)∏j=1n?P(X(j)=x(j)∣Y=ci?)?

arg ? max ? c i P ( Y = c i ) ∏ j = 1 n P ( X ( j ) = x ( j ) ∣ Y = c i ) \underset{c_{i}}{\arg \max } P\left(Y=c_{i}\right) \prod_{j=1}^{n} P\left(X^{(j)}=x^{(j)} \mid Y=c_{i}\right) ci?argmax?P(Y=ci?)∏j=1n?P(X(j)=x(j)∣Y=ci?)

基本方法

-

训练数据集:

T = { ( x 1 , y 1 ) , ( x 2 , y 2 ) ? ? , ( x N , y N ) } T=\left\{\left(x_{1}, y_{1}\right),\left(x_{2}, y_{2}\right) \cdots,\left(x_{N}, y_{N}\right)\right\} T={(x1?,y1?),(x2?,y2?)?,(xN?,yN?)}- 输入: X ? R n , x ∈ X \mathcal{X} \subseteq \mathbf{R}^{n}, x \in \mathcal{X} X?Rn,x∈X

- 输出:

Y

=

{

c

1

,

c

2

,

?

?

,

c

K

}

,

y

∈

Y

\mathcal{Y}=\left\{c_{1}, c_{2}, \cdots, c_{K}\right\}, y \in \mathcal{Y}

Y={c1?,c2?,?,cK?},y∈Y

生成方法:学习联合概率分布 P ( X , Y ) P(X, Y) P(X,Y)

-

生成方法:学习联合概率分布 P ( X , Y ) P(X, Y) P(X,Y)

- 先验概率分布:

P ( Y = c i ) , i = 1 , 2 , ? ? , K P\left(Y=c_{i}\right), \quad i=1,2, \cdots, K P(Y=ci?),i=1,2,?,K

- 条件概率分布:

P ( X = x ∣ Y = c i ) = P ( X ( 1 ) = x ( 1 ) , ? ? , X ( n ) = x ( n ) ∣ Y = c i ) P\left(X=x \mid Y=c_{i}\right)=P\left(X^{(1)}=x^{(1)}, \cdots, X^{(n)}=x^{(n)} \mid Y=c_{i}\right) P(X=x∣Y=ci?)=P(X(1)=x(1),?,X(n)=x(n)∣Y=ci?)

- 联合概率分布:

P ( X , Y ) = P ( X = x ∣ Y = c i ) P ( Y = c i ) , i = 1 , 2 , ? ? , K P(X, Y)=P\left(X=x \mid Y=c_{i}\right) P\left(Y=c_{i}\right), i=1,2, \cdots, K P(X,Y)=P(X=x∣Y=ci?)P(Y=ci?),i=1,2,?,K

假设是独立的是为了能够计算出来,使其具有可行性

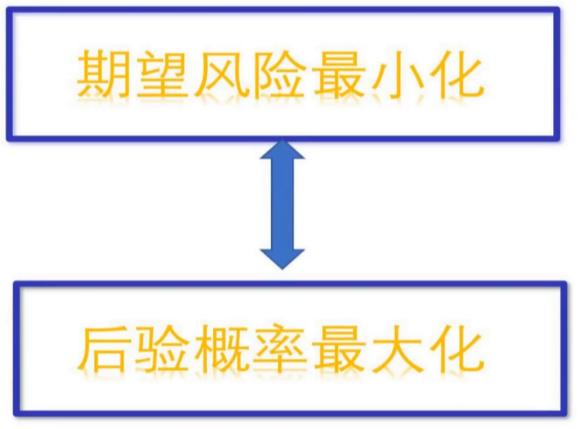

后验概率最大化的含义

- 后验概率

? P ( Y = c i ∣ X = x ) = P ( Y = c i ) ∏ j = 1 n P ( X ( j ) = x ( j ) ∣ Y = c i ) ∑ i = 1 K P ( Y = c i ) ∏ j = 1 n P ( X ( j ) = x ( j ) ∣ Y = c i ) P\left(Y=c_{i} \mid X=x\right)=\frac{P\left(Y=c_{i}\right) \prod_{j=1}^{n} P\left(X^{(j)}=x^{(j)} \mid Y=c_{i}\right)}{\sum_{i=1}^{K} P\left(Y=c_{i}\right) \prod_{j=1}^{n} P\left(X(j)=x^{(j)} \mid Y=c_{i}\right)} P(Y=ci?∣X=x)=∑i=1K?P(Y=ci?)∏j=1n?P(X(j)=x(j)∣Y=ci?)P(Y=ci?)∏j=1n?P(X(j)=x(j)∣Y=ci?)? - 朴素贝叶斯法将实例分到后验概率最大的类中。这等价于期望风险最小化。假设选择

0

?

1

0-1

0?1损失函数:

L ( Y , f ( X ) ) = { 1 , Y ≠ f ( X ) 0 , Y = f ( X ) L ( Y , f ( X ) ) = \{ \begin{array} { l l } { 1 , } & { Y \neq f ( X ) } \\ { 0 , } & { Y = f ( X ) } \end{array} L(Y,f(X))={1,0,?Y?=f(X)Y=f(X)?

式中 f ( X ) f(X) f(X)是分类决策函数。这时,期望风险函数为

R e x p ( f ) = E [ L ( Y , f ( X ) ) ] R _ { e x p } ( f ) = E [ L ( Y , f ( X ) ) ] Rexp?(f)=E[L(Y,f(X))]

因为期望的定义是值出现的概率乘以具体值之和,所以上式可转换为损失函数与联合概率之积的积分:

R exp ? ( f ) = E [ L ( Y , f ( X ) ) ] = ∫ χ × y L ( y , f ( x ) ) P ( x , y ) d x d y = ∫ χ × y L ( y , f ( x ) ) P ( y ∣ x ) P ( x ) d x d y = ∫ χ ∫ Y L ( y , f ( x ) ) P ( y ∣ x ) d y P ( x ) d x R_{\exp }(f)=E[L(Y, f(X))] \\=\int_{\chi \times y} L(y, f(x)) P(x, y) d x d y \\=\int_{\chi \times y} L(y, f(x)) P(y \mid x) P(x) d x d y \\=\int_{\chi} \int_{Y} L(y, f(x)) P(y \mid x) d y P(x) d x Rexp?(f)=E[L(Y,f(X))]=∫χ×y?L(y,f(x))P(x,y)dxdy=∫χ×y?L(y,f(x))P(y∣x)P(x)dxdy=∫χ?∫Y?L(y,f(x))P(y∣x)dyP(x)dx

期望是对联合分布 P ( X , Y ) P(X,Y) P(X,Y)取的。由此取条件期望

R e x p ( f ) = E x ∑ k = 1 K [ L ( c k , f ( X ) ) ] P ( c k ∣ X ) R _ { e x p } ( f ) = E x \sum _ { k = 1 } ^ { K } [ L ( c _ { k } , f ( X ) ) ] P ( c _ { k } | X ) Rexp?(f)=Ex∑k=1K?[L(ck?,f(X))]P(ck?∣X)

为了使期望风险最小化,只需对 X = x X=x X=x逐个极小化,由此得到: - f ( x ) = arg ? min ? y ∈ Y ∑ k = 1 K L ( c k , y ) P ( c k ∣ X = x ) = arg ? min ? y ∈ Y ∑ k = 1 K P ( y ≠ c k ∣ X = x ) = arg ? min ? y ∈ Y ( 1 ? P ( y = c k ∣ X = x ) ) = arg ? max ? y ∈ Y P ( y = c k ∣ X = x ) \begin{aligned} f(x) &=\arg \min _{y \in \mathcal{Y}} \sum_{k=1}^{K} L\left(c_{k}, y\right) P\left(c_{k} \mid X=x\right) \\ &=\arg \min _{y \in \mathcal{Y}} \sum_{k=1}^{K} P\left(y \neq c_{k} \mid X=x\right) \\ &=\arg \min _{y \in \mathcal{Y}}\left(1-P\left(y=c_{k} \mid X=x\right)\right) \\ &=\arg \max _{y \in \mathcal{Y}} P\left(y=c_{k} \mid X=x\right) \end{aligned} f(x)?=argy∈Ymin?k=1∑K?L(ck?,y)P(ck?∣X=x)=argy∈Ymin?k=1∑K?P(y?=ck?∣X=x)=argy∈Ymin?(1?P(y=ck?∣X=x))=argy∈Ymax?P(y=ck?∣X=x)?

- 这样一来,根据期望风险最小化准则就得到了后验概率最大化准则:

? f ( x ) = argmax ? P ( c k ∣ X = x ) f ( x ) = \operatorname { a r g m a x } P ( c _ { k } | X = x ) f(x)=argmaxP(ck?∣X=x)

即朴素贝叶斯法所采用的原理.

朴素贝叶斯法的参数估计

极大似然估计

- 由 arg ? max ? c i P ( Y = c i ) ∏ j = 1 n P ( X ( j ) = x ( j ) ∣ Y = c i ) \underset{c_{i}}{\arg \max } P\left(Y=c_{i}\right) \prod_{j=1}^{n} P\left(X^{(j)}=x^{(j)} \mid Y=c_{i}\right) ci?argmax?P(Y=ci?)∏j=1n?P(X(j)=x(j)∣Y=ci?)可知,学习意味着估计 P ( Y = c k ) P ( Y = c _ { k } ) P(Y=ck?)和 P ( X ( j ) = x ( j ) ∣ Y = c k ) P ( X ^ { ( j ) } = x ^ { ( j ) } | Y = c _ { k } ) P(X(j)=x(j)∣Y=ck?)

- 极大似然估计

- P ( Y = c k ) = ∑ i = 1 N I ( y i = c k ) N , k = 1 , 2 , ? ? , K P ( Y = c _ { k } ) = \frac { \sum _ { i = 1 } ^ { N } I ( y _ { i } = c _ { k } ) } { N } , \quad k = 1 , 2 , \cdots , K P(Y=ck?)=N∑i=1N?I(yi?=ck?)?,k=1,2,?,K N N N是样本,分子是点的个数

- 设第

j

j

j 个特征

x

(

j

)

x^{(j)}

x(j) 可能取值的集合为

{

a

j

1

,

a

j

2

,

?

?

,

a

j

S

j

}

\left\{a_{j 1}, a_{j 2}, \cdots, a_{j S_{j}}\right\}

{aj1?,aj2?,?,ajSj??} , 条件概率

P

(

X

(

j

)

=

a

j

l

∣

Y

=

c

k

)

P\left(X^{(j)}=a_{j l} \mid Y=\right. \left.c_{k}\right)

P(X(j)=ajl?∣Y=ck?) 的极大似然估计是

P ( X ( j ) = a j l ∣ Y = c k ) = ∑ i = 1 N I ( x i ( j ) = a j l , y i = c k ) ∑ i = 1 N I ( y i = c k ) j = 1 , 2 , ? ? , n ; l = 1 , 2 , ? ? , S j ; k = 1 , 2 , ? ? , K P\left(X^{(j)}=a_{j l} \mid Y=c_{k}\right)=\frac{\sum_{i=1}^{N} I\left(x_{i}^{(j)}=a_{j l}, y_{i}=c_{k}\right)}{\sum_{i=1}^{N} I\left(y_{i}=c_{k}\right)} \\j=1,2, \cdots, n ; \quad l=1,2, \cdots, S_{j} ; \quad k=1,2,\cdots,K P(X(j)=ajl?∣Y=ck?)=∑i=1N?I(yi?=ck?)∑i=1N?I(xi(j)?=ajl?,yi?=ck?)?j=1,2,?,n;l=1,2,?,Sj?;k=1,2,?,K

式中, x i ( j ) x_{i}^{(j)} xi(j)? 是第 i i i 个样本的第 j j j 个特征; a j l a_{j l} ajl? 是第 j j j 个特征可能取的第 l l l 个值; I I I 为指 示函数。

学习与分类算法

- 计算先验概率及条件概率

P ( Y = c k ) = ∑ i = 1 N I ( y i = c k ) N , k = 1 , 2 , ? ? , K P ( Y = c _ { k } ) = \frac { \sum _ { i = 1 } ^ { N } I ( y _ { i } = c _ { k } ) } { N } , \quad k = 1 , 2 , \cdots , K P(Y=ck?)=N∑i=1N?I(yi?=ck?)?,k=1,2,?,K

P ( X ( j ) = a j l ∣ Y = c k ) = ∑ i = 1 N I ( x i ( j ) = a j l , y i = c k ) ∑ i = 1 N I ( y i = c k ) j = 1 , 2 , ? ? , n ; l = 1 , 2 , ? ? , S j ; k = 1 , 2 , ? ? , K P\left(X^{(j)}=a_{j l} \mid Y=c_{k}\right)=\frac{\sum_{i=1}^{N} I\left(x_{i}^{(j)}=a_{j l}, y_{i}=c_{k}\right)}{\sum_{i=1}^{N} I\left(y_{i}=c_{k}\right)} \\j=1,2, \cdots, n ; \quad l=1,2, \cdots, S_{j} ; \quad k=1,2,\cdots,K P(X(j)=ajl?∣Y=ck?)=∑i=1N?I(yi?=ck?)∑i=1N?I(xi(j)?=ajl?,yi?=ck?)?j=1,2,?,n;l=1,2,?,Sj?;k=1,2,?,K - 对于给定实例

x

=

(

x

(

1

)

,

x

(

2

)

,

?

?

,

x

(

n

)

)

T

x = ( x ^ { ( 1 ) } , x ^ { ( 2 ) } , \cdots , x ^ { ( n ) } ) ^ { T }

x=(x(1),x(2),?,x(n))T,计算

? P ( Y = c k ) ∏ j = 1 n P ( X ( j ) ) Y = x ( j ) ∣ Y = c k ) , k = 1 , 2 , ? ? , K P ( Y = c _ { k } ) \prod _ { j = 1 } ^ { n } P ( X ^ { ( j ) } ) Y = x ^ { ( j ) } | Y = c _ { k } ) , \quad k = 1 , 2 , \cdots , K P(Y=ck?)∏j=1n?P(X(j))Y=x(j)∣Y=ck?),k=1,2,?,K - 确定实例的类

y = argmax ? P ( Y = c k ) ∏ j = 1 n P ( X ( j ) ) ∣ Y = c i ) ∣ Y = c k ) y = \operatorname { a r g m a x } P ( Y = c _ { k } ) \prod _ { j = 1 } ^ { n } P ( X ^ { ( j ) } ) | Y = c _ { i } ) | Y = c _ { k } ) y=argmaxP(Y=ck?)∏j=1n?P(X(j))∣Y=ci?)∣Y=ck?)

贝叶斯估计

- 先验概率的贝叶斯估计

P λ ( Y = c k ) = ∑ i = 1 N I ( y i = c k ) + λ N + K λ P_{\lambda}\left(Y=c_{k}\right)=\frac{\sum_{i=1}^{N} I\left(y_{i}=c_{k}\right)+\lambda}{N+K \lambda} Pλ?(Y=ck?)=N+Kλ∑i=1N?I(yi?=ck?)+λ?

- 条件概率的贝叶斯估计

P

λ

(

X

(

j

)

=

a

j

l

∣

Y

=

c

k

)

=

∑

i

=

1

N

I

(

x

i

(

j

)

=

a

j

l

,

y

i

=

c

k

)

+

λ

∑

i

=

1

N

I

(

y

i

=

c

k

)

+

S

j

λ

P_{\lambda}\left(X^{(j)}=a_{j l} \mid Y=c_{k}\right)=\frac{\sum_{i=1}^{N} I\left(x_{i}^{(j)}=a_{j l}, y_{i}=c_{k}\right)+\lambda}{\sum_{i=1}^{N} I\left(y_{i}=c_{k}\right)+S_{j} \lambda}

Pλ?(X(j)=ajl?∣Y=ck?)=∑i=1N?I(yi?=ck?)+Sj?λ∑i=1N?I(xi(j)?=ajl?,yi?=ck?)+λ?

注: $\lambda \geq 0 \quad \lambda=0 $时为极大似然估计,

λ

=

1

\lambda=1

λ=1 时为拉普拉斯平滑(Laplacian Smoothing)。

为什么+

K

K

K

λ

\lambda

λ?

?

∑

k

=

1

K

P

k

(

Y

=

C

k

)

=

1

\sum _ { k = 1 }^{K} P _ { k } ( Y = C _ { k } ) = 1

∑k=1K?Pk?(Y=Ck?)=1

为什么+

S

j

λ

S_{j} \lambda

Sj?λ?

?

∑

l

=

1

S

j

P

λ

(

X

(

j

)

=

a

j

l

∣

Y

=

c

k

)

=

1

\sum _ { l = 1 }^{S_{j}} P_{\lambda}\left(X^{(j)}=a_{j l} \mid Y=c_{k}\right)=1

∑l=1Sj??Pλ?(X(j)=ajl?∣Y=ck?)=1