1. Motivation

- Despite the remarkable accuracy of deep neural networks in object detection, they are costly to train and scale due to supervision requirements.

- Weakly supervised and zero-shot learning techniques have been explored to scale object detectors to more categories with less supervision, but they have not been as successful and widely adopted as supervised models.

-

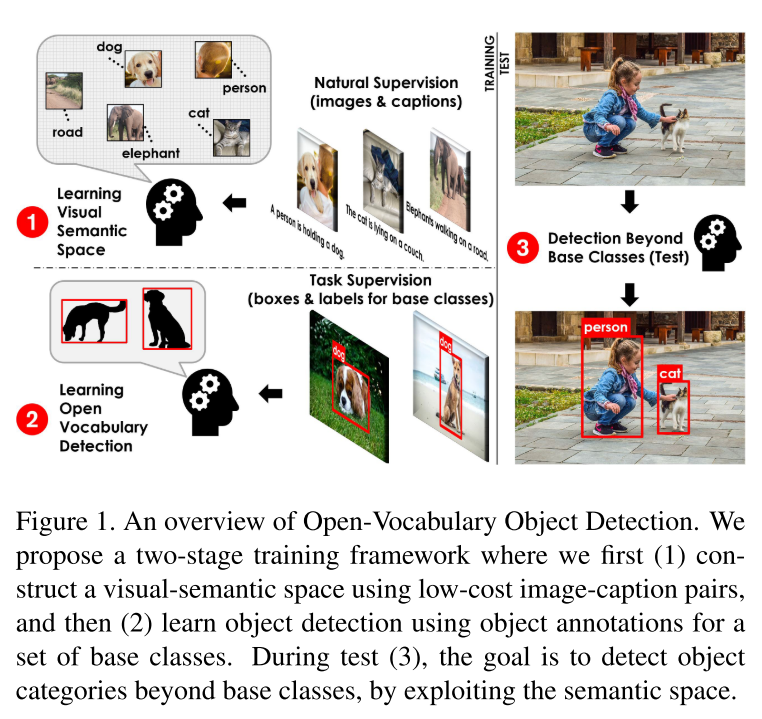

To address the task of OVD, we propose a novel method based on Faster R-CNN [32], which is first pretrained on an image-caption dataset, and then fine-tuned on a bounding box dataset.

- More specifically, we train a model that takes an image and detects any object within a given target vocabulary $V_T$.

- To train such a model, we use an image-caption dataset covering a large variety of words, denoted as $V_C$, as well as a much smaller dataset with localized object annotations from a set of base classes $V_B$.

2. Contribution

-

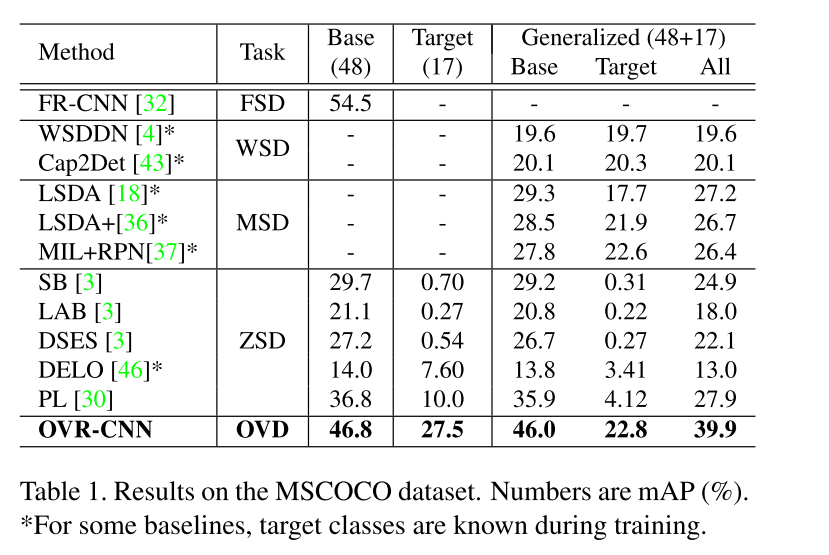

In this paper, we put forth a novel formulation of the object detection problem, namely open- vocabulary object detection, which is more general, more practical, and more effective than weakly supervised and zero-shot approaches.

- Meanwhile, objects with bounding box annotation can be detected almost as accurately as supervised methods, which is significantly better than weakly supervised baselines.

- Accordingly, we establish a new state of the art for scalable object detection.

- We name this framework Open Vocabulary Object Detection (OVD).

3. Method

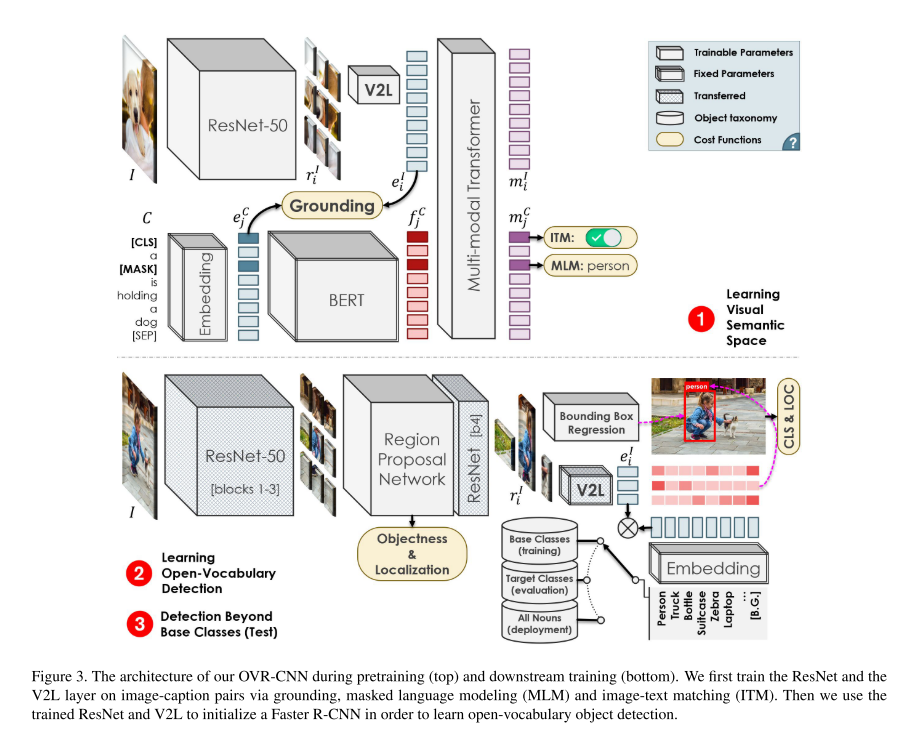

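Figure 3 shows the OVR-CNN framework: an object detector built on Faster R-CNN but trained in a zero-shot manner.

Specifically, it is trained on the base classes $V_B$ and tested on the target classes $V_T$.

To improve accuracy, the core idea of the paper is to pretrain the visual backbone with a much larger vocabulary $V_C$, so that it learns a rich semantic space.

In the second stage, the pretrained ResNet and V2L layer are used to initialize Faster R-CNN, which enables open-vocabulary object detection.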

3.1. Learning a visual-semantic space

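Replacing the classifier weights with a fixed embedding matrix and training only on the base classes tends to overfit. To address this, the paper proposes the V2L layer, which is trained on data that is not limited to the base classes.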

- To prevent overfitting, we propose to learn the aforementioned Vision to Language (V2L) projection layer along with the CNN backbone during pretraining, where the data is not limited to a small set of base classes.

- We use a main (grounding) task as well as a set of auxiliary self-supervision tasks to learn a robust CNN backbone and V2L layer.

The authors follow PixelBERT. The input is an image-caption pair: the image goes through the visual backbone (ResNet-50) and the caption through the language backbone (pretrained BERT) to produce token embeddings, which are then fed into a multimodal transformer to extract multimodal embeddings.

For the visual backbone, ResNet-50 extracts features from the input image $I$, giving a $W/32 \times H/32$ feature map. The paper treats this map as $W/32 \times H/32$ regions and represents each region $i$ with a $d_v$-dimensional feature vector $r_i^I$.
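As a quick sanity check on the region count, the sketch below computes the grid size for a given input resolution. The function name `region_grid` and the 640 x 480 input are illustrative assumptions, and plain integer division is a simplification (a real backbone may round differently depending on padding).

```python
def region_grid(width: int, height: int, stride: int = 32) -> tuple[int, int]:
    """Return (columns, rows) of the feature map for a given input size."""
    # Assumption: the total stride of the backbone is 32 and sizes divide evenly.
    return width // stride, height // stride


cols, rows = region_grid(640, 480)
num_regions = cols * rows  # each region i gets one d_v-dimensional vector r_i^I
print(cols, rows, num_regions)
```

For a 640 x 480 image this gives a 20 x 15 grid, so the transformer would see 300 region tokens under these assumptions.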

For the language backbone, BERT takes the tokenized caption $C$ as input and extracts a $d_l$-dimensional word embedding $e_j^C$ for each token $j$. Position embeddings and self-attention layers then produce a $d_l$-dimensional contextualized token embedding $f_j^C$.

The V2L layer then maps each $r_i^I$ to $e_i^I$, which is merged with the $f_j^C$ and fed into the transformer. The transformer outputs $\{m_i^I\}$ and $\{m_j^C\}$, which correspond to the regions and the words respectively.
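A minimal sketch of the V2L projection, assuming it is a single linear layer (the notes describe it as a linear projection into the word space). The dimensions, the bias term, the random initialization, and the use of concatenation for "merging" are all assumptions made for illustration, not values taken from the paper.

```python
import numpy as np

rng = np.random.default_rng(0)

# Assumed sizes: 2048 for the ResNet-50 output, 768 for a BERT-base hidden state.
d_v, d_l = 2048, 768
n_regions, n_tokens = 300, 12

# Hypothetical V2L parameters; in practice they are learned during pretraining.
W_v2l = rng.normal(scale=0.02, size=(d_v, d_l))
b_v2l = np.zeros(d_l)


def v2l(region_features: np.ndarray) -> np.ndarray:
    """Project region features r_i^I (n, d_v) into the word space e_i^I (n, d_l)."""
    return region_features @ W_v2l + b_v2l


r = rng.normal(size=(n_regions, d_v))      # stand-in for ResNet region features
f_cap = rng.normal(size=(n_tokens, d_l))   # stand-in for BERT token embeddings f_j^C
e_img = v2l(r)

# Assumption: "merged" means concatenation along the sequence axis.
transformer_input = np.concatenate([e_img, f_cap], axis=0)
print(e_img.shape, transformer_input.shape)
```

Because regions and words end up with the same dimensionality, a single transformer can attend across both modalities.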

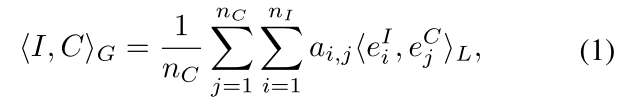

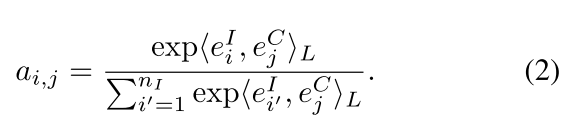

For each image-caption pair, the paper defines a global grounding score, given in Eq. 1.

Here $\langle \cdot, \cdot \rangle_L$ denotes the dot product of two vectors, and $n_C$ and $n_I$ are the number of caption tokens and image regions respectively.
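Eq. 1 is only available as an image in these notes, so the sketch below is one plausible reading rather than the paper's exact formula: it assumes each word attends over the regions with a softmax on the dot products, and that the attended similarities are averaged over the $n_C$ words. The function name `grounding_score` is hypothetical.

```python
import numpy as np


def grounding_score(e_img: np.ndarray, e_cap: np.ndarray) -> float:
    """Global grounding score between one image and one caption.

    e_img: (n_I, d) region embeddings e_i^I
    e_cap: (n_C, d) word embeddings e_j^C
    """
    sim = e_cap @ e_img.T                                # (n_C, n_I) dot products
    shifted = sim - sim.max(axis=1, keepdims=True)       # numerical stability
    attn = np.exp(shifted)
    attn /= attn.sum(axis=1, keepdims=True)              # softmax over regions, per word
    # Assumption: attention-weighted similarity, averaged over the words.
    return float((attn * sim).sum(axis=1).mean())


rng = np.random.default_rng(0)
regions = rng.normal(size=(6, 4))
words = rng.normal(size=(3, 4))
print(grounding_score(regions, words))
```

Under this reading, a word scores highly when at least one region matches it well, which is what lets the model ground words without region-level labels.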

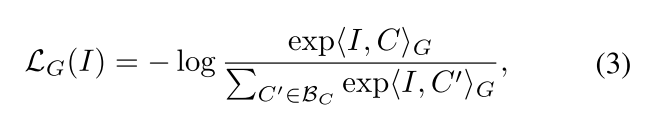

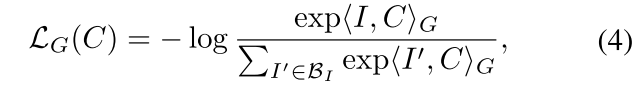

two grounding objective functions:

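A few details are worth noting. $B_C$ and $B_I$ denote the batch of captions and the batch of images respectively. $C'$ in Eq. 3 plays the role of $C$ in Eq. 1, and $I'$ in Eq. 3 plays the role of $I$ in Eq. 1. In other words, $I'$ in Eq. 3 refers to a whole image, whereas $e_i^I$ in Eq. 1 refers to a single region within an image (similar to a patch in ViT).

Across different images and captions, the objective therefore acts like a max-min operation: it minimizes the score of non-matching pairs and maximizes the score of matching pairs.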
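The two grounding objectives are only shown as images here, so the following is a sketch under the assumption that they are standard batch-contrastive (softmax cross-entropy) losses over the global grounding scores, with matching pairs on the diagonal of the score matrix. The names `log_softmax` and `grounding_losses` are illustrative.

```python
import numpy as np


def log_softmax(x: np.ndarray, axis: int) -> np.ndarray:
    x = x - x.max(axis=axis, keepdims=True)
    return x - np.log(np.exp(x).sum(axis=axis, keepdims=True))


def grounding_losses(scores: np.ndarray) -> tuple[float, float]:
    """scores[a, b] is the global grounding score of image a and caption b.

    Assumption: image a and caption a form the matching pair.
    """
    # Each image against every caption C' in the batch B_C.
    loss_img = -np.diag(log_softmax(scores, axis=1)).mean()
    # Each caption against every image I' in the batch B_I.
    loss_cap = -np.diag(log_softmax(scores, axis=0)).mean()
    return float(loss_img), float(loss_cap)


good = 10.0 * np.eye(4)          # matching pairs score high
bad = 10.0 * (1.0 - np.eye(4))   # non-matching pairs score high
print(grounding_losses(good), grounding_losses(bad))
```

Minimizing both terms raises the diagonal scores relative to the off-diagonal ones, which is the push-pull behaviour described above.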

As in PixelBERT, masked language modeling is also introduced.

- Specifically, we randomly replace some words $j$ in each caption $C$ with a [MASK] token, and try to use the multimodal embedding of the masked token $m_j^C$ to guess the word that was masked.

- We define masked language modeling $L_{MLM}$ as a cross-entropy loss comparing the predicted distribution with the actual word that was masked.

- PixelBERT also employs an image-text matching loss $L_{ITM}$.
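The masked language modeling term can be sketched as a plain cross-entropy over the vocabulary. The prediction head that turns $m_j^C$ into vocabulary logits is not described in these notes, so the sketch starts from the logits; `mlm_loss` and the toy shapes are assumptions.

```python
import numpy as np


def mlm_loss(logits: np.ndarray, target_ids: np.ndarray) -> float:
    """Cross-entropy between predicted word distributions and the masked words.

    logits: (n_masked, vocab_size) scores predicted from the embeddings m_j^C
    target_ids: (n_masked,) vocabulary ids of the words that were masked
    """
    shifted = logits - logits.max(axis=1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    picked = log_probs[np.arange(len(target_ids)), target_ids]
    return float(-picked.mean())


# Toy example: 3 masked positions, vocabulary of 5 words.
logits = np.zeros((3, 5))
targets = np.array([0, 2, 4])
print(mlm_loss(logits, targets))
```

With uniform logits the loss equals the log of the vocabulary size, which is a handy baseline when checking that training is actually learning.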

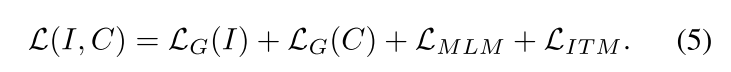

In summary, for each image-caption pair, the visual backbone, the V2L layer, and the multimodal transformer are trained by minimizing the loss in Eq. 5.

3.2 Learning open-vocabulary detection

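Once the ResNet and the V2L layer have been pretrained, the second stage transfers them to the object detection task. The stem and first three blocks of the ResNet extract features, an RPN predicts objectness and bounding box coordinates, and the fourth ResNet block followed by pooling is applied to each proposal to obtain a vector $r_i^I$, which in the supervised setting is fed into a classifier.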

- We use the stem and the first 3 blocks of our pretrained ResNet to extract a feature map from a given image.

- Next, a region proposal network slides anchor boxes on the feature map to predict objectness scores and bounding box coordinates, followed by non-max suppression and region-of-interest pooling to get a feature map for each potential object.

- Finally, following [32], the 4th block of our pretrained ResNet is applied on each proposal followed by pooling to get a final feature vector $r_i^I$ for each proposal box, which is typically fed into a classifier in supervised settings.
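The non-max suppression step mentioned above can be illustrated with a minimal greedy implementation. This is the generic textbook algorithm, not code from the paper, and the IoU threshold of 0.5 is an assumed value.

```python
import numpy as np


def nms(boxes: np.ndarray, scores: np.ndarray, iou_threshold: float = 0.5) -> list[int]:
    """Greedy NMS. boxes: (n, 4) as [x1, y1, x2, y2]. Returns kept indices, best first."""
    order = np.argsort(-scores)  # highest objectness first
    areas = (boxes[:, 2] - boxes[:, 0]) * (boxes[:, 3] - boxes[:, 1])
    keep = []
    while order.size > 0:
        i = order[0]
        keep.append(int(i))
        rest = order[1:]
        # Intersection of the current best box with every remaining box.
        xx1 = np.maximum(boxes[i, 0], boxes[rest, 0])
        yy1 = np.maximum(boxes[i, 1], boxes[rest, 1])
        xx2 = np.minimum(boxes[i, 2], boxes[rest, 2])
        yy2 = np.minimum(boxes[i, 3], boxes[rest, 3])
        inter = np.clip(xx2 - xx1, 0, None) * np.clip(yy2 - yy1, 0, None)
        iou = inter / (areas[i] + areas[rest] - inter)
        # Drop boxes that overlap the kept box too much.
        order = rest[iou <= iou_threshold]
    return keep


boxes = np.array([[0, 0, 10, 10], [1, 1, 11, 11], [20, 20, 30, 30]], dtype=float)
scores = np.array([0.9, 0.8, 0.7])
print(nms(boxes, scores))
```

In the toy input the second box overlaps the first with an IoU of about 0.68, so it is suppressed, while the distant third box survives.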

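In the zero-shot setting, a linear layer is applied to the visual feature $r_i^I$ to map each proposal into the word space as $e_i^I$, so that it can be compared with the base or target class embeddings. Here the authors reuse the pretrained V2L layer. Because RoI-Align is used, the resulting vectors can be regarded as lying in the same space as during pretraining.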

- they can be compared to base or target class embeddings in the training or testing phase respectively

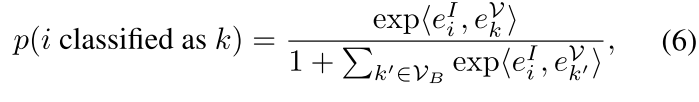

During training, $e_i^I$ is compared with each base class $k$ to obtain a classification score $p$ (Eq. 6), where $e_k^V$ is the pretrained embedding of word $k$.

- We found that a fixed all-zero background embedding performs better than a trainable one as it does not push non-foreground bounding boxes, which may contain target classes, to an arbitrary region of the embedding space.

- The ResNet parameters are finetuned, while the region proposal network and the regression head are trained from scratch.

- The classifier head is fully fixed, as it consists of a pretrained V2L layer and word embeddings
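The classification step can be sketched as a softmax over dot products between the projected proposal and the fixed class word embeddings. Eq. 6 is only available as an image, so this form is an assumption; the all-zero background embedding is modelled as a constant logit of 0, and `class_probs` is a hypothetical name.

```python
import numpy as np


def class_probs(e_region: np.ndarray, class_embeddings: np.ndarray) -> np.ndarray:
    """Probabilities over [background, class_1, ..., class_K] for one proposal.

    e_region: (d,) proposal embedding e_i^I after the V2L layer
    class_embeddings: (K, d) fixed word embeddings e_k^V
    """
    logits = class_embeddings @ e_region
    # An all-zero background embedding always yields a dot product of 0.
    logits = np.concatenate([[0.0], logits])
    logits -= logits.max()  # numerical stability
    p = np.exp(logits)
    return p / p.sum()


# Training would pass base-class embeddings, testing the target-class embeddings.
base = np.array([[5.0, 0.0], [0.0, 5.0]])
print(class_probs(np.array([1.0, 0.0]), base))
```

Since the head holds no trainable class weights, switching from base to target classes only means swapping the embedding matrix passed in.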

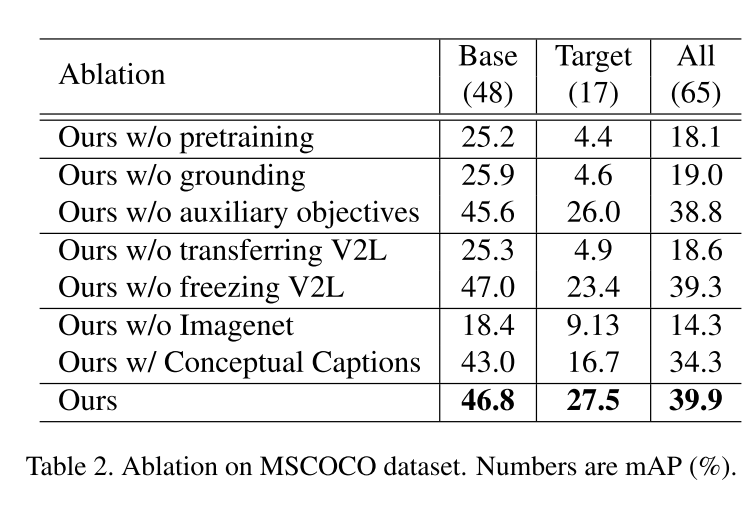

4. Experiment

4.1 Compared with other methods

4.2 Ablation