import torch

import torch.nn as nn

import torchvision

import numpy as np

import random

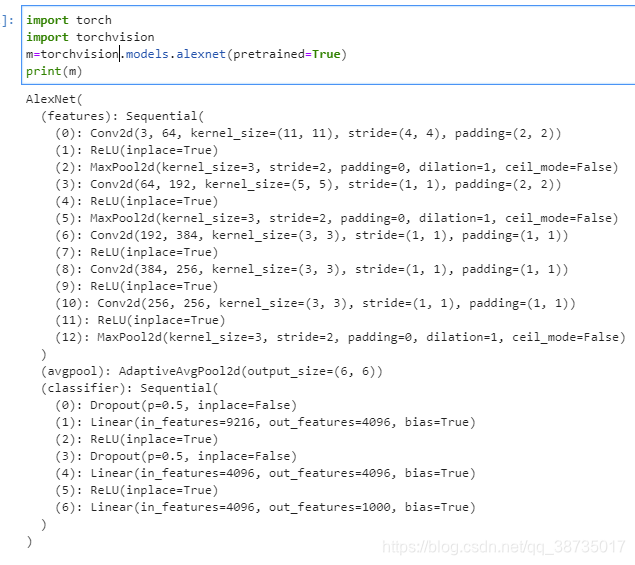

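# Load torchvision's reference AlexNet with pretrained ImageNet weights
# (newer torchvision releases prefer the `weights=` argument over `pretrained=True`)
m = torchvision.models.alexnet(pretrained=True)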

print(m)

class AlexNet(nn.Module):
    def __init__(self):
        super(AlexNet, self).__init__()
        # Layer 1
        # Conv2d arguments: in_channels, out_channels, kernel_size, stride, padding
        self.conv1 = nn.Conv2d(3, 96, 7, 2, 2)  # convolution layer
        self.relu1 = nn.ReLU(inplace=True)  # activation layer
        # MaxPool2d arguments: kernel_size, stride, padding
        self.MaxPool2d1 = nn.MaxPool2d(3, 2, 0)  # pooling layer
        # Layer 2
        self.conv2 = nn.Conv2d(96, 256, 5, 1, 2)
        self.relu2 = nn.ReLU(inplace=True)
        self.MaxPool2d2 = nn.MaxPool2d(3, 2, 0)
        # Layer 3
        self.conv3 = nn.Conv2d(256, 384, 3, 1, 1)
        self.relu3 = nn.ReLU(inplace=True)
        # Layer 4
        self.conv4 = nn.Conv2d(384, 384, 3, 1, 1)
        self.relu4 = nn.ReLU(inplace=True)
        # Layer 5
        self.conv5 = nn.Conv2d(384, 256, 3, 1, 1)
        self.relu5 = nn.ReLU(inplace=True)

        # Walk over every submodule and set the weights of each Conv2d layer to 0.
        # This only demonstrates the mechanism; all-zero weights are not a
        # practical initialization for training.
        for m in self.modules():
            print("---------------")
            print(m)
            if isinstance(m, nn.Conv2d):
                print(isinstance(m, nn.Conv2d))
                nn.init.constant_(m.weight, 0.)

    # Forward pass
    def forward(self, x):
        x = self.conv1(x)
        x = self.relu1(x)
        x = self.MaxPool2d1(x)
        x = self.conv2(x)
        x = self.relu2(x)
        x = self.MaxPool2d2(x)
        x = self.conv3(x)
        x = self.relu3(x)
        x = self.conv4(x)
        x = self.relu4(x)
        x = self.conv5(x)
        x = self.relu5(x)
        return x

net = AlexNet()
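
To sanity-check the kernel, stride, and padding values used in the layers above, it can help to trace the spatial size of the feature map by hand. The sketch below is plain Python and does not depend on PyTorch. It assumes a square 224 x 224 input (the listing itself never states an input size) and the default floor rounding that `nn.Conv2d` and `nn.MaxPool2d` apply. The names `out_size`, `LAYERS`, and `trace_sizes` are hypothetical helpers introduced only for this illustration.

```python
def out_size(n, kernel, stride, padding):
    """Spatial output size of a conv or max-pool layer with floor rounding."""
    return (n + 2 * padding - kernel) // stride + 1


# (name, kernel_size, stride, padding), copied from the layer definitions above
LAYERS = [
    ("conv1", 7, 2, 2),
    ("MaxPool2d1", 3, 2, 0),
    ("conv2", 5, 1, 2),
    ("MaxPool2d2", 3, 2, 0),
    ("conv3", 3, 1, 1),
    ("conv4", 3, 1, 1),
    ("conv5", 3, 1, 1),
]


def trace_sizes(n):
    """Return (layer name, output side length) for each layer, in order."""
    sizes = []
    for name, kernel, stride, padding in LAYERS:
        n = out_size(n, kernel, stride, padding)
        sizes.append((name, n))
    return sizes


if __name__ == "__main__":
    # 224 is an assumed input side length, not taken from the listing
    for name, n in trace_sizes(224):
        print(f"{name}: {n} x {n}")
```

Under the 224 x 224 assumption the side length goes 111, 55, 55, 27, 27, 27, 27. Since `conv5` has 256 output channels, a single image would leave the network as a 256 x 27 x 27 feature map. The ReLU layers are omitted from the trace because they do not change the shape.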