Reducing code redundancy: wrap code that is called repeatedly into a class or a function.

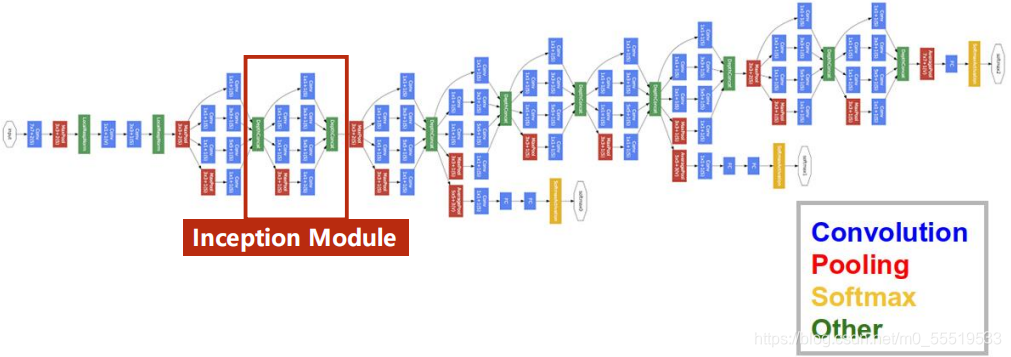

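GoogLeNet

Network structure

The network is built from many identical Inception modules, so the module is written once as a class and reused wherever it is needed.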

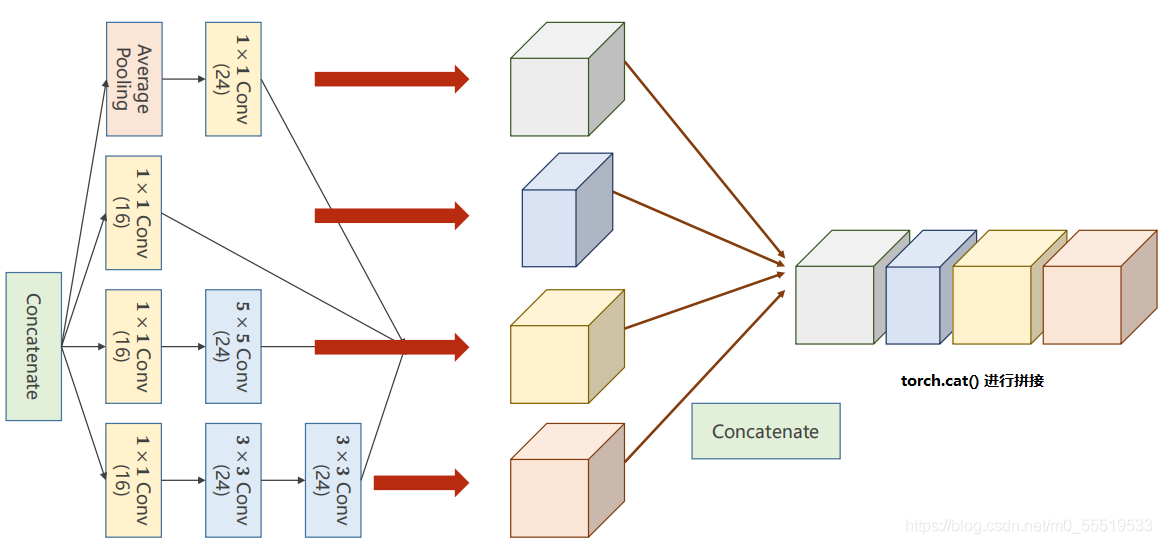

Structure of the Inception module

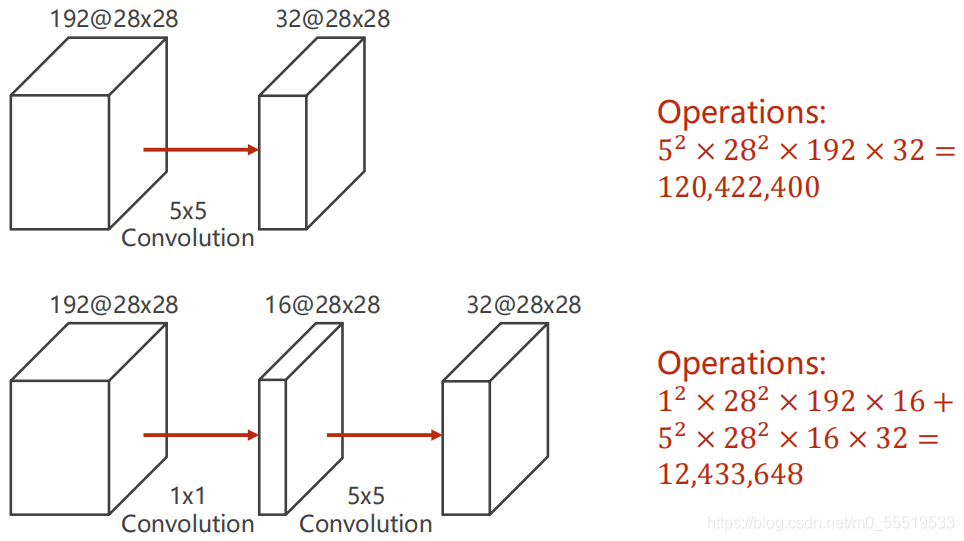

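Why use a 1×1 convolution kernel: a 1×1 convolution placed in front of a larger kernel first reduces the number of channels, which cuts the amount of computation to roughly one tenth.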
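To make the saving concrete, the multiplication counts of the two designs can be compared directly. The sizes below (a 192-channel 28×28 input, 32 output channels, a 16-channel 1×1 bottleneck) are assumed for illustration and are not taken from the text above; `conv_mults` is a hypothetical helper, not a PyTorch function.

```python
def conv_mults(height, width, in_channels, out_channels, kernel_size):
    """Multiplications of a stride-1 convolution whose padding keeps H x W."""
    return kernel_size * kernel_size * height * width * in_channels * out_channels

# Assumed example sizes (illustrative only)
H = W = 28
C_IN, C_MID, C_OUT = 192, 16, 32

# 5x5 convolution applied directly to all 192 input channels
direct = conv_mults(H, W, C_IN, C_OUT, kernel_size=5)

# 1x1 convolution down to 16 channels, then the 5x5 convolution
bottleneck = (conv_mults(H, W, C_IN, C_MID, kernel_size=1)
              + conv_mults(H, W, C_MID, C_OUT, kernel_size=5))

print(direct)                         # 120422400
print(bottleneck)                     # 12443648
print(round(direct / bottleneck, 2))  # 9.68
```

With these assumed sizes the bottleneck version needs a little under one tenth of the multiplications, which matches the "roughly 10 times" figure.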


Example code:

```python
import torch
import torch.nn as nn
import torch.nn.functional as F
from torch.utils.data import DataLoader
from torchvision import transforms
from torchvision import datasets
import matplotlib.pyplot as plt

# Build the dataset
batch_size = 64
# 0.1307 and 0.3081 are the mean and standard deviation of the MNIST pixels
transform = transforms.Compose([transforms.ToTensor(), transforms.Normalize((0.1307,), (0.3081,))])
# Raw strings keep the backslashes of the Windows path from being read as escapes
train_sets = datasets.MNIST(root=r'E:\MyCode\pytorchLearning', transform=transform, train=True, download=False)
test_sets = datasets.MNIST(root=r'E:\MyCode\pytorchLearning', transform=transform, train=False, download=False)
train_dataloader = DataLoader(dataset=train_sets, batch_size=batch_size, shuffle=True)
test_dataloader = DataLoader(dataset=test_sets, batch_size=batch_size, shuffle=False)


class InceptionA(nn.Module):
    def __init__(self, in_channels):
        super(InceptionA, self).__init__()
        # branch 1: average pooling followed by a 1x1 convolution
        self.branch_pool = nn.Conv2d(in_channels, 24, kernel_size=1)
        # branch 2: a single 1x1 convolution
        self.branch1x1 = nn.Conv2d(in_channels, 16, kernel_size=1)
        # branch 3: 1x1 convolution, then 5x5 (padding=2 keeps the size)
        self.branch5x5_1 = nn.Conv2d(in_channels, 16, kernel_size=1)
        self.branch5x5_2 = nn.Conv2d(16, 24, kernel_size=5, padding=2)
        # branch 4: 1x1 convolution, then two 3x3 (padding=1 keeps the size)
        self.branch3x3_1 = nn.Conv2d(in_channels, 16, kernel_size=1)
        self.branch3x3_2 = nn.Conv2d(16, 24, kernel_size=3, padding=1)
        self.branch3x3_3 = nn.Conv2d(24, 24, kernel_size=3, padding=1)

    def forward(self, x):
        branch_pool = F.avg_pool2d(x, kernel_size=3, stride=1, padding=1)
        branch_pool = self.branch_pool(branch_pool)

        branch1x1 = self.branch1x1(x)

        branch5x5 = self.branch5x5_1(x)
        branch5x5 = self.branch5x5_2(branch5x5)

        branch3x3 = self.branch3x3_1(x)
        branch3x3 = self.branch3x3_2(branch3x3)
        branch3x3 = self.branch3x3_3(branch3x3)

        # Concatenate along the channel dimension: 24 + 16 + 24 + 24 = 88 channels
        outputs = [branch_pool, branch1x1, branch5x5, branch3x3]
        return torch.cat(outputs, dim=1)
```

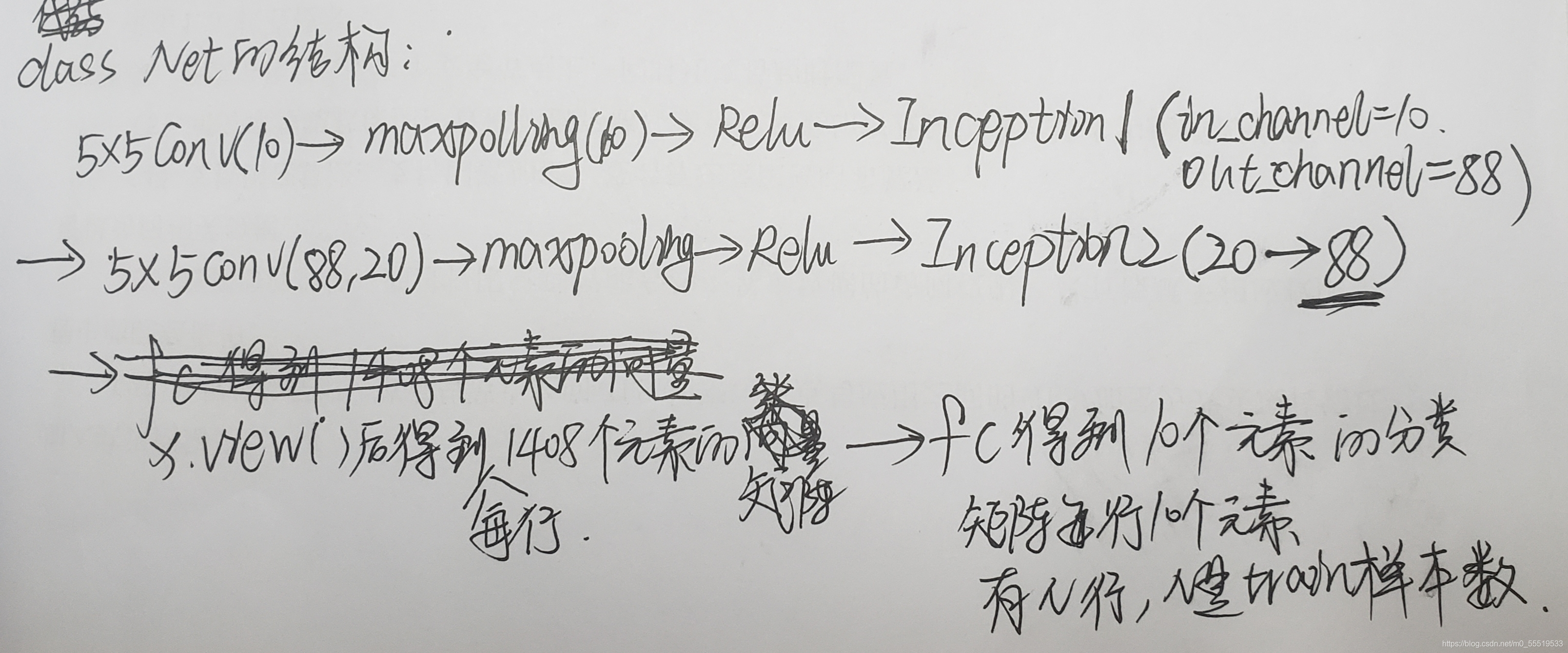
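`torch.cat(outputs, dim=1)` only works if all four branches produce the same height and width. The sketch below checks this with the standard convolution output-size formula in plain Python, so it runs without PyTorch. `out_size` is a helper introduced here for illustration, and the 12×12 input size is just an assumed example.

```python
def out_size(size, kernel_size, stride=1, padding=0):
    """Output height/width of a convolution or pooling layer."""
    return (size + 2 * padding - kernel_size) // stride + 1

size = 12  # assumed input height/width

# (kernel_size, padding) of every layer in each InceptionA branch, all stride 1
branches = {
    "pool": [(3, 1), (1, 0)],          # avg_pool2d, then 1x1 conv
    "1x1":  [(1, 0)],
    "5x5":  [(1, 0), (5, 2)],
    "3x3":  [(1, 0), (3, 1), (3, 1)],
}
out_channels = {"pool": 24, "1x1": 16, "5x5": 24, "3x3": 24}

final_sizes = {}
for name, layers in branches.items():
    s = size
    for kernel_size, padding in layers:
        s = out_size(s, kernel_size, padding=padding)
    final_sizes[name] = s

print(final_sizes)                 # every branch keeps the input size
print(sum(out_channels.values()))  # 88 channels after concatenation
```

Because every branch preserves the spatial size, the module only changes the channel count, which is why the layer that follows an `InceptionA` takes 88 input channels.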

```python
class Net(nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        self.conv1 = nn.Conv2d(1, 10, kernel_size=5)
        # Each InceptionA outputs 24 + 16 + 24 + 24 = 88 channels
        self.conv2 = nn.Conv2d(88, 20, kernel_size=5)
        self.incep1 = InceptionA(in_channels=10)
        self.incep2 = InceptionA(in_channels=20)
        self.mp = nn.MaxPool2d(2)
        # 88 channels * 4 * 4 spatial positions = 1408 features
        self.fc = nn.Linear(1408, 10)

    def forward(self, x):
        insize = x.size(0)
        x = F.relu(self.mp(self.conv1(x)))
        x = self.incep1(x)
        x = F.relu(self.mp(self.conv2(x)))
        x = self.incep2(x)
        x = x.view(insize, -1)  # flatten to (batch, 1408)
        x = self.fc(x)
        return x


model = Net()
device = torch.device('cuda:0' if torch.cuda.is_available() else 'cpu')
model.to(device)

# Loss and optimizer
criterion = torch.nn.CrossEntropyLoss()
optimizer = torch.optim.SGD(model.parameters(), lr=0.01, momentum=0.5)


def train(epoch):
    running_loss = 0.0
    for idx, (inputs, targets) in enumerate(train_dataloader, 0):
        inputs = inputs.to(device)
        targets = targets.to(device)
        optimizer.zero_grad()
        outputs = model(inputs)
        loss = criterion(outputs, targets)
        loss.backward()
        optimizer.step()
        running_loss += loss.item()
        if idx % 300 == 299:
            # Average over the 300 batches since the last report
            print('[%d,%5d],loss:%.3f' % (epoch + 1, idx + 1, running_loss / 300))
            running_loss = 0.0


def test():
    correct = 0
    total = 0
    with torch.no_grad():
        for inputs, targets in test_dataloader:
            inputs, targets = inputs.to(device), targets.to(device)
            outputs = model(inputs)
            _, predicted = torch.max(outputs, dim=1)
            total += targets.size(0)  # number of samples in this batch
            correct += (predicted == targets).sum().item()
    print("Accuracy on test set:%d %% [%d/%d]" % (100 * correct / total, correct, total))
    # Return the accuracy as a fraction so the caller can collect it per epoch
    return correct / total


if __name__ == "__main__":
    epoch_list = []
    accuracy_list = []
    for epoch in range(4):
        train(epoch)
        epoch_list.append(epoch)
        accuracy_list.append(test())
    # plt.plot(epoch_list, accuracy_list)
    # plt.xlabel('epoch')
    # plt.ylabel('accuracy')
    # plt.show()
```

Structure of class Net():

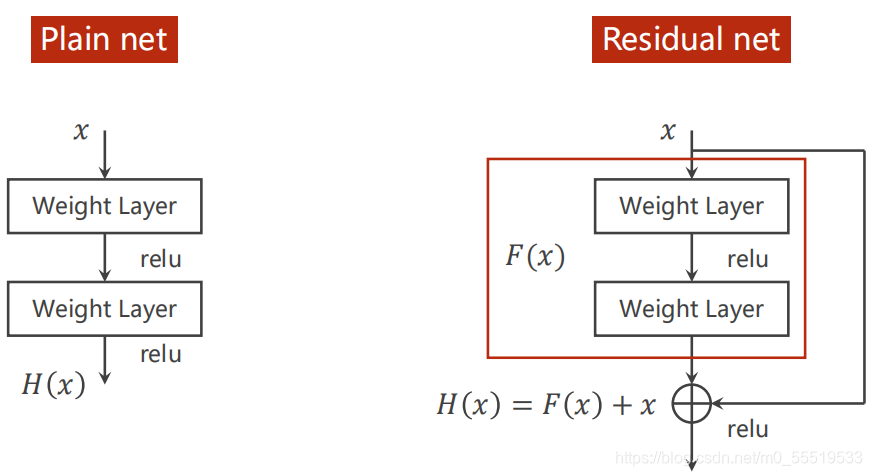

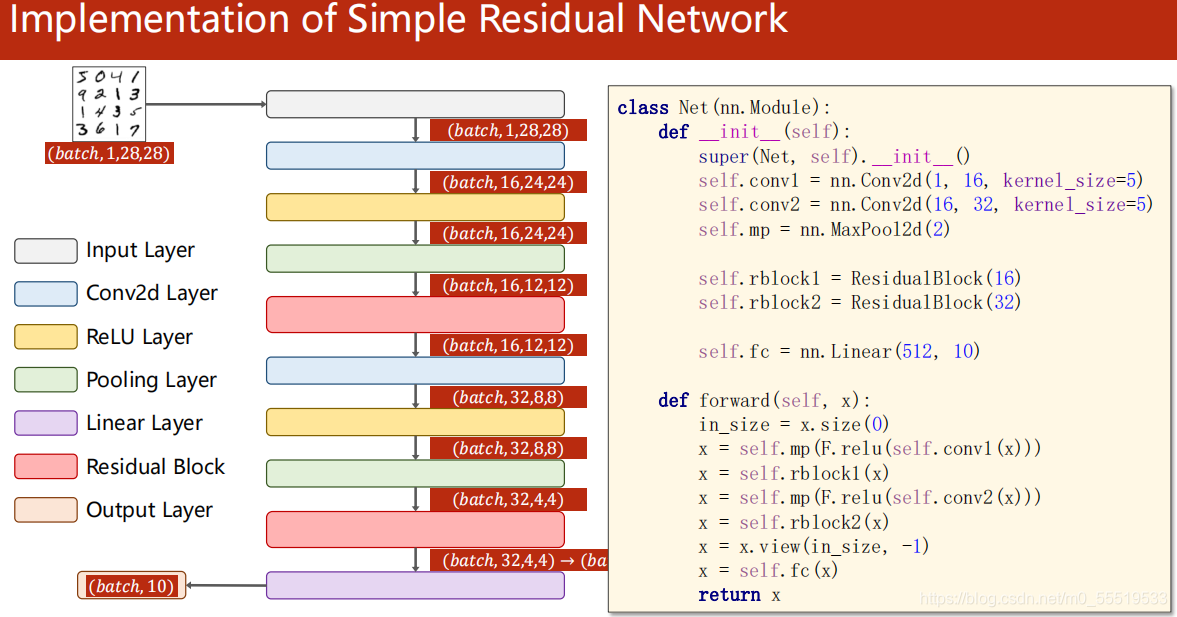
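The 1408 input features of the final linear layer can be derived by following a 28×28 MNIST image through the layers of `Net`. The sketch below repeats that arithmetic in plain Python, so it runs without PyTorch. `conv_out` is a helper introduced here for illustration; it applies the usual output-size formula for convolution and pooling layers.

```python
def conv_out(size, kernel_size, padding=0, stride=1):
    """Output height/width of a convolution or pooling layer."""
    return (size + 2 * padding - kernel_size) // stride + 1

INCEPTION_CHANNELS = 24 + 16 + 24 + 24   # four concatenated branches

size, channels = 28, 1                   # MNIST input: 1 x 28 x 28
size, channels = conv_out(size, 5), 10   # conv1      -> 10 x 24 x 24
size = conv_out(size, 2, stride=2)       # MaxPool2d  -> 10 x 12 x 12
channels = INCEPTION_CHANNELS            # incep1     -> 88 x 12 x 12
size, channels = conv_out(size, 5), 20   # conv2      -> 20 x 8 x 8
size = conv_out(size, 2, stride=2)       # MaxPool2d  -> 20 x 4 x 4
channels = INCEPTION_CHANNELS            # incep2     -> 88 x 4 x 4

flat_features = channels * size * size
print(flat_features)  # 1408
```

If the input size or any kernel size changes, the same trace gives the new value to pass to the linear layer.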

Residual Net (residual network)

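Purpose: in the weight update w = w - αg, when the gradient g → 0 the weight w is barely updated. This is the vanishing gradient problem. Layer-by-layer training is one way to work around it; a residual block instead adds its input x to the output of its convolutions, y = F(x) + x, so its local derivative is ∂F/∂x + 1 and stays close to 1 even when ∂F/∂x is close to 0.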
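The effect of g → 0 across many layers can be illustrated numerically. By the chain rule, the gradient that reaches an early layer is the product of the local derivatives of all the layers after it. The sketch below assumes a hypothetical stack of 20 layers whose local derivative is 0.01 each, and compares it with the residual form y = F(x) + x used by `ResidualBlock` in the example code, whose local derivative is F'(x) + 1. The depth and the derivative value are made up for illustration.

```python
def backprop_gradient(local_derivatives):
    """Chain rule: multiply the local derivative of every layer."""
    grad = 1.0
    for d in local_derivatives:
        grad *= d
    return grad

NUM_LAYERS = 20          # assumed depth
LOCAL_DERIVATIVE = 0.01  # assumed dF/dx of every layer

# Plain stack: each layer contributes dF/dx
plain = backprop_gradient([LOCAL_DERIVATIVE] * NUM_LAYERS)

# Residual stack: each block contributes dF/dx + 1
residual = backprop_gradient([LOCAL_DERIVATIVE + 1.0] * NUM_LAYERS)

print(plain)     # about 1e-40, so w = w - αg leaves w almost unchanged
print(residual)  # about 1.22, the gradient does not vanish
```

Real networks have different derivatives at every layer, but the comparison shows why the added identity path keeps the product away from zero.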

Network structure:

Example code:

```python
import torch
import torch.nn.functional as F
from torchvision import transforms
from torch.utils.data import DataLoader
from torchvision import datasets

transform = transforms.Compose([transforms.ToTensor(), transforms.Normalize((0.1307,), (0.3081,))])
batch_size = 64
# Raw strings keep the backslashes of the Windows path from being read as escapes
train_sets = datasets.MNIST(root=r'E:\image-dataset\MNIST', transform=transform, train=True, download=False)
test_sets = datasets.MNIST(root=r'E:\image-dataset\MNIST', transform=transform, train=False, download=False)
train_loader = DataLoader(dataset=train_sets, batch_size=batch_size, shuffle=True)
test_loader = DataLoader(dataset=test_sets, batch_size=batch_size, shuffle=False)


class ResidualBlock(torch.nn.Module):
    def __init__(self, channels):
        super(ResidualBlock, self).__init__()
        self.channels = channels
        # Same channel count, kernel_size=3 and padding=1 keep the shape, so y + x is valid
        self.conv1 = torch.nn.Conv2d(channels, channels, kernel_size=3, padding=1)
        self.conv2 = torch.nn.Conv2d(channels, channels, kernel_size=3, padding=1)

    def forward(self, x):
        y = F.relu(self.conv1(x))
        y = self.conv2(y)
        # Skip connection: add the input before the final activation
        return F.relu(y + x)


class Net(torch.nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        self.conv1 = torch.nn.Conv2d(1, 16, kernel_size=5)
        self.mp = torch.nn.MaxPool2d(2)
        self.conv2 = torch.nn.Conv2d(16, 32, kernel_size=5)
        # 32 channels * 4 * 4 spatial positions = 512 features
        self.fc = torch.nn.Linear(512, 10)
        self.residual1 = ResidualBlock(16)
        self.residual2 = ResidualBlock(32)

    def forward(self, x):
        in_size = x.size(0)
        x = self.mp(F.relu(self.conv1(x)))
        x = self.residual1(x)
        x = self.mp(F.relu(self.conv2(x)))
        x = self.residual2(x)
        x = x.view(in_size, -1)  # flatten to (batch, 512)
        x = self.fc(x)
        return x


model = Net()
device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
model.to(device)
print(device)

criterion = torch.nn.CrossEntropyLoss()
optimizer = torch.optim.SGD(model.parameters(), lr=0.01, momentum=0.5)


def train(epoch):
    running_loss = 0.0
    for idx, (inputs, targets) in enumerate(train_loader, 0):
        inputs, targets = inputs.to(device), targets.to(device)
        optimizer.zero_grad()
        outputs = model(inputs)
        loss = criterion(outputs, targets)
        loss.backward()
        optimizer.step()
        running_loss += loss.item()
        if idx % 300 == 299:
            # Average over the 300 batches since the last report
            print('%d %5d,loss:%.3f' % (epoch + 1, idx + 1, running_loss / 300))
            running_loss = 0.0


def test():
    correct = 0
    total = 0
    with torch.no_grad():
        # inputs/targets avoid shadowing the built-in input()
        for inputs, targets in test_loader:
            inputs, targets = inputs.to(device), targets.to(device)
            outputs = model(inputs)
            _, predicted = torch.max(outputs, dim=1)
            correct += (predicted == targets).sum().item()
            total += targets.size(0)
    print("Accuracy of the test set is:%d %% [%d/%d]" % (100 * correct / total, correct, total))


if __name__ == '__main__':
    for epoch in range(5):
        train(epoch)
        test()
```
