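Continuing from the previous post, PointPillars Model Acceleration Experiments (3): so far we have exported each of the three main components of the PointPillars network, PFN, MFN and RPN, to ONNX. The next step is to build the ONNX files into TensorRT engines and serialize them to files. For typical networks, converting an ONNX model to TensorRT is fairly easy, because ONNX is currently the input format with the best official support in TensorRT. The official converter, TensorRT Backend For ONNX (ONNX-TensorRT for short), is also fairly mature, and its latest version has reached 8.0.1.6. As far as I know, there are the following ways to convert ONNX to a TensorRT engine: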

(1). The trtexec command-line tool

trtexec can be used to build engines, using different TensorRT features (see command line arguments), and run inference. trtexec also measures and reports execution time and can be used to understand performance and possibly locate bottlenecks.

Compile this sample by running make in the <TensorRT root directory>/samples/trtexec directory. The binary named trtexec will be created in the <TensorRT root directory>/bin directory.

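```shell
cd <TensorRT root directory>/samples/trtexec
make
```

The description above is quoted from NVIDIA's TensorRT documentation: the TensorRT samples include the trtexec command-line tool, which has two main uses: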

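- Benchmarking networks: if you have a model saved as a UFF file or an ONNX file, or a network description in Caffe prototxt format, you can use trtexec to benchmark inference performance. Note that if you provide only a Caffe prototxt file without a model, random weights are generated. trtexec has many options for specifying inputs and outputs, the number of iterations for performance timing, the allowed precisions, and so on.

- Generating serialized engines: models in UFF, ONNX, or Caffe format can be built into engines.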

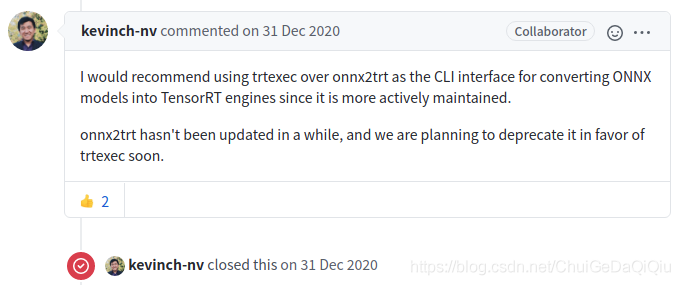
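For a model with dynamic-shape inputs, such as the PFN exported earlier, a trtexec build also needs the shape range of every dynamic input, passed through the --minShapes, --optShapes and --maxShapes options as comma-separated name:dim1xdim2x... lists. The minimal sketch below only assembles such a command line in Python. The input names pillar_x and num_points and their shapes are hypothetical placeholders, not the real PFN input names, and the 120/6000/12000 range simply reuses the values from the onnx2engine profile later in this post.

```python
import shlex
from typing import Dict, List, Sequence

Shapes = Dict[str, Sequence[int]]


def shape_arg(shapes: Shapes) -> str:
    """Format {name: dims} the way trtexec expects, e.g. 'a:1x2x3,b:4x5'."""
    return ",".join(
        f"{name}:" + "x".join(str(d) for d in dims) for name, dims in shapes.items()
    )


def build_trtexec_cmd(onnx_path: str, engine_path: str,
                      min_shapes: Shapes, opt_shapes: Shapes, max_shapes: Shapes,
                      fp16: bool = False, workspace_mb: int = 4096) -> List[str]:
    """Assemble (but do not run) a trtexec command for an ONNX model."""
    cmd = ["./trtexec", "--explicitBatch",
           f"--onnx={onnx_path}", f"--saveEngine={engine_path}",
           f"--workspace={workspace_mb}"]  # workspace size in MB
    if min_shapes:  # dynamic inputs need a min/opt/max range each
        cmd += [f"--minShapes={shape_arg(min_shapes)}",
                f"--optShapes={shape_arg(opt_shapes)}",
                f"--maxShapes={shape_arg(max_shapes)}"]
    if fp16:
        cmd.append("--fp16")
    return cmd


if __name__ == "__main__":
    # Hypothetical PFN-like inputs; only the pillar axis (120..12000) varies.
    def pfn_shapes(n: int) -> Shapes:
        return {"pillar_x": [1, 1, n, 100], "num_points": [1, n]}

    cmd = build_trtexec_cmd("pfn_dynamic.onnx", "pfn_dynamic.engine",
                            pfn_shapes(120), pfn_shapes(6000), pfn_shapes(12000),
                            fp16=True)
    print(shlex.join(cmd))
```

Passing the returned list to subprocess.run(cmd, check=True) would actually invoke trtexec; keeping the arguments as a list rather than one string avoids shell quoting problems once many inputs are involved.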

(2). The onnx2trt command-line tool

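onnx2trt ships with the official converter project onnx-tensorrt. After onnx-tensorrt is built, the onnx2trt tool appears in the build directory, and it can likewise convert ONNX models to TensorRT engines. As for how trtexec and onnx2trt differ and which one is more suitable, an experienced developer has answered this on GitHub.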

(3). The TensorRT API

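Besides the command-line tools, TensorRT of course also provides conversion APIs, in both Python and C++. In the ONNX-to-TensorRT conversion that follows, I will try out both trtexec and the TensorRT API.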

Converting ONNX to TensorRT Engines

Because building TensorRT engines from the PFN, MFN and RPN ONNX models with the TensorRT API follows essentially the same process, I wrap it in a single function, onnx2engine.

- Create a trt.Logger as the logging interface, through which TensorRT reports errors, warnings and informational messages. If a serialized engine already exists, it is deserialized and returned directly; otherwise the function checks that the ONNX file exists and is not empty.

```python
import os

import tensorrt as trt


def onnx2engine(onnx_model_name, trt_model_name, dynamic_input=True):
    TRT_LOGGER = trt.Logger(trt.Logger.INFO)
    # Reuse a previously serialized engine if it exists and is non-empty
    if os.path.exists(trt_model_name) and os.path.getsize(trt_model_name) > 0:
        print("reading engine from file {}.".format(trt_model_name))
        with open(trt_model_name, "rb") as f, trt.Runtime(TRT_LOGGER) as runtime:
            # deserialize the engine
            return runtime.deserialize_cuda_engine(f.read())
    if not os.path.exists(onnx_model_name):
        print(f"onnx file {onnx_model_name} not found, please check path or generate it.")
        return None
    if not os.path.getsize(onnx_model_name):
        print(f"onnx file {onnx_model_name} exists but is empty, please regenerate it.")
        return None
```

- When TensorRT works with dynamic shapes, the batch dimension must be explicit. The latest onnx-tensorrt also requires an explicit batch size.

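```python
    # This call requests that the network not have an implicit batch dimension
    explicit_batch = 1 << int(trt.NetworkDefinitionCreationFlag.EXPLICIT_BATCH)  # as used with TensorRT 7
```

- TensorRT 6 and later support dynamic-shape inputs. Each dynamic input must be bound to an optimization profile that specifies its minimum, typical and maximum shapes; if an actual input shape falls outside this range, inference fails at runtime. Building the engine also requires a builder config to set the build parameters.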

```python
    with trt.Builder(TRT_LOGGER) as builder, \
            builder.create_network(explicit_batch) as network, \
            trt.OnnxParser(network, TRT_LOGGER) as parser:
        # Only used by implicit-batch networks; it has no effect with EXPLICIT_BATCH
        builder.max_batch_size = 1
        # The IBuilderConfig has many properties that you can set in order to control
        # such things as the precision at which the network should run.
        config = builder.create_builder_config()
        # Layer algorithms often require temporary workspace. This parameter limits the
        # maximum size that any layer in the network can use.
        config.max_workspace_size = 1 << 30  # 1 GB
        config.set_flag(trt.BuilderFlag.FP16)  # allow FP16 kernels
        print("Loading ONNX file from path {}...".format(onnx_model_name))
        with open(onnx_model_name, "rb") as model:
            print("Beginning ONNX file parsing")
            if not parser.parse(model.read()):
                for error in range(parser.num_errors):
                    print(parser.get_error(error))
                return None
        print("Completed parsing of ONNX file")
        print("Building an engine from file {}; this may take a while...".format(onnx_model_name))
```

- For each dynamic-shape input, the shape range must be defined explicitly with profile.set_shape(name, min_shape, common_shape, max_shape), where the middle shape is the one TensorRT optimizes for (its "opt" shape).

```python
        if dynamic_input:
            profile = builder.create_optimization_profile()
            for i in range(network.num_inputs):
                input_name = network.get_input(i).name
                shape = list(network.get_input(i).shape)
                # Dynamic axis: axis 2 for 4-D inputs, otherwise axis 1 (the pillar dimension here)
                axis = 2 if len(shape) == 4 and shape[2] == -1 else 1
                min_shape, common_shape, max_shape = shape.copy(), shape.copy(), shape.copy()
                min_shape[axis], common_shape[axis], max_shape[axis] = 120, 6000, 12000
                profile.set_shape(input_name, min_shape, common_shape, max_shape)
            config.add_optimization_profile(profile)
```

- Create the TensorRT engine with build_engine and serialize it to a local file.

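```python
        print("num layers:", network.num_layers)
        # build the engine using the builder object
        engine = builder.build_engine(network, config)
        if engine is None:  # build_engine returns None on failure
            print("Failed to build the engine.")
            return None
        print("Completed creating Engine")
        with open(trt_model_name, "wb") as f:
            f.write(engine.serialize())
        return engine
```

With this function in place, the conversions are straightforward: just pass in the file names.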

```python
onnx2engine("pfn_dynamic.onnx", "pfn_dynamic.engine", dynamic_input=True)
onnx2engine("mfn_dynamic.onnx", "mfn_dynamic.engine", dynamic_input=True)
onnx2engine("rpn.onnx", "rpn.engine", dynamic_input=False)
```

The PFN and RPN conversions both succeed, but MFN fails to convert!

```text
Loading ONNX file from path mfn_dynamic.onnx...
Beginning ONNX file parsing
[TensorRT] INFO: [TRT] /home/onnx_tensorrt_7.1/ModelImporter.cpp:139: No importer registered for op: Scatter. Attempting to import as plugin.
[TensorRT] INFO: [TRT] /home/onnx_tensorrt_7.1/builtin_op_importers.cpp:3749: Searching for plugin: Scatter, plugin_version: 1, plugin_namespace:
[TensorRT] ERROR: _6: last dimension of input0 = 64 and second to last dimension of input1 = 1 but must match._6: last dimension of input0 = 64 and second to last dimension of input1 = 1 but must match._6: last dimension of input0 = 64 and second to last dimension of input1 = 1 but must match._6: last dimension of input0 = 64 and second to last dimension of input1 = 1 but must match._6: last dimension of input0 = 64 and second to last dimension of input1 = 1 but must match._6: last dimension of input0 = 64 and second to last dimension of input1 = 1 but must match._6: last dimension of input0 = 64 and second to last dimension of input1 = 1 but must match._6: last dimension of input0 = 64 and second to last dimension of input1 = 1 but must match.INVALID_ARGUMENT: getPluginCreator could not find plugin Scatter version 1
In node 16 (importFallbackPluginImporter): UNSUPPORTED_NODE: Assertion failed: creator && "Plugin not found, are the plugin name, version, and namespace correct?"
```

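From the error message we can infer that TensorRT does not yet support the Scatter operator, and checking the documentation confirms that TensorRT 7.1 indeed has no Scatter support. There are several ways to deal with this: for example, you can write your own TensorRT plugin that implements Scatter, or implement the operation directly as a CUDA kernel. I will come back to MFN later when doing inference; at least we now have TensorRT engines for two of the parts, PFN and RPN.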
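Whichever route is taken, plugin or CUDA kernel, the operation itself is simple. Assuming the MFN here follows the PointPillarsScatter step of second.pytorch, it writes each pillar's C-dimensional feature vector into a zero-initialized C x H x W pseudo-image at that pillar's grid cell. The pure-Python sketch below shows those semantics, for example as a reference to check a plugin's output against; the input layout (a single batch, one (y, x) cell per pillar) is an assumption and not necessarily the exact tensors of this model.

```python
from typing import List, Sequence, Tuple


def scatter_pillars(features: Sequence[Sequence[float]],
                    coords: Sequence[Tuple[int, int]],
                    height: int, width: int) -> List[List[List[float]]]:
    """Scatter per-pillar features (P x C) into a C x H x W canvas.

    features[p] is the C-dim feature of pillar p and coords[p] its (y, x) cell.
    Cells without a pillar stay 0.0, as in a zero-initialized canvas.
    """
    num_channels = len(features[0]) if features else 0
    canvas = [[[0.0] * width for _ in range(height)] for _ in range(num_channels)]
    for feat, (y, x) in zip(features, coords):
        for c, value in enumerate(feat):
            canvas[c][y][x] = value
    return canvas


if __name__ == "__main__":
    feats = [[1.0, 2.0], [3.0, 4.0]]  # 2 pillars, 2 channels each
    cells = [(0, 1), (2, 0)]          # (y, x) grid cell of each pillar
    bev = scatter_pillars(feats, cells, height=3, width=2)
    print(bev[0])  # channel 0 of the pseudo-image
```

In a CUDA kernel or plugin the loops would typically become one thread per (pillar, channel) pair; assuming every pillar occupies a distinct grid cell, as it does when a pillar is defined by its cell, the writes never conflict.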

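As mentioned earlier, besides the TensorRT API, the trtexec and onnx2trt command-line tools can also convert ONNX to TensorRT engines. Here I experiment only with the RPN ONNX model: PFN has many inputs, all with dynamic shapes, so converting it with trtexec would make the command-line arguments tedious to write. Below I compare the acceleration of the RPN TensorRT engine at FP32 and FP16 precision.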

FP32:

```shell
./trtexec --explicitBatch --onnx=./rpn.onnx --saveEngine=./rpn.engine --workspace=4096
```

```text
[07/25/2021-22:18:09] [W] [TRT] [TRT]/home/zuosi/github/onnx_tensorrt_7.1/onnx2trt_utils.cpp:220: Your ONNX model has been generated with INT64 weights, while TensorRT does not natively support INT64. Attempting to cast down to INT32.
[07/25/2021-22:18:18] [I] [TRT] Detected 1 inputs and 3 output network tensors.
[07/25/2021-22:18:20] [I] Starting inference threads
[07/25/2021-22:18:23] [I] Warmup completed 0 queries over 200 ms
[07/25/2021-22:18:23] [I] Timing trace has 0 queries over 3.01289 s
[07/25/2021-22:18:23] [I] Trace averages of 10 runs:
[07/25/2021-22:18:23] [I] Average on 10 runs - GPU latency: 4.8686 ms - Host latency: 9.80874 ms (end to end 9.82594 ms, enqueue 0.327498 ms)
[07/25/2021-22:18:23] [I] Average on 10 runs - GPU latency: 4.86126 ms - Host latency: 9.79784 ms (end to end 9.81131 ms, enqueue 0.296658 ms)
[07/25/2021-22:18:23] [I] Average on 10 runs - GPU latency: 4.85182 ms - Host latency: 9.78955 ms (end to end 9.80826 ms, enqueue 0.333121 ms)
[07/25/2021-22:18:23] [I] Average on 10 runs - GPU latency: 4.85986 ms - Host latency: 9.79612 ms (end to end 9.82035 ms, enqueue 0.370084 ms)
[07/25/2021-22:18:23] [I] Average on 10 runs - GPU latency: 4.90681 ms - Host latency: 9.83716 ms (end to end 9.8505 ms, enqueue 0.283475 ms)
.......
```

FP16:

```shell
./trtexec --explicitBatch --onnx=./rpn.onnx --saveEngine=./rpn.engine --workspace=4096 --fp16
```

```text
[07/25/2021-22:15:51] [W] [TRT] [TRT]/home/zuosi/github/onnx_tensorrt_7.1/onnx2trt_utils.cpp:220: Your ONNX model has been generated with INT64 weights, while TensorRT does not natively support INT64. Attempting to cast down to INT32.
[07/25/2021-22:16:14] [I] [TRT] Detected 1 inputs and 3 output network tensors.
[07/25/2021-22:16:16] [I] Starting inference threads
[07/25/2021-22:16:19] [I] Warmup completed 0 queries over 200 ms
[07/25/2021-22:16:19] [I] Timing trace has 0 queries over 3.01254 s
[07/25/2021-22:16:19] [I] Trace averages of 10 runs:
[07/25/2021-22:16:19] [I] Average on 10 runs - GPU latency: 1.67223 ms - Host latency: 6.60986 ms (end to end 6.62596 ms, enqueue 0.22049 ms)
[07/25/2021-22:16:19] [I] Average on 10 runs - GPU latency: 1.67367 ms - Host latency: 6.61241 ms (end to end 6.62623 ms, enqueue 0.223537 ms)
[07/25/2021-22:16:19] [I] Average on 10 runs - GPU latency: 1.67456 ms - Host latency: 6.61381 ms (end to end 6.62837 ms, enqueue 0.278604 ms)
[07/25/2021-22:16:19] [I] Average on 10 runs - GPU latency: 1.67402 ms - Host latency: 6.61122 ms (end to end 6.62698 ms, enqueue 0.223511 ms)
[07/25/2021-22:16:19] [I] Average on 10 runs - GPU latency: 1.67439 ms - Host latency: 6.61202 ms (end to end 6.62704 ms, enqueue 0.267236 ms)
.......
```
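The five "Average on 10 runs" lines in each log are enough for a rough comparison. The sketch below extracts the GPU and host latencies with a regular expression and averages them. The log strings are copied from the output above and trimmed after the host latency; because the remaining lines were elided, the averages are estimates from this excerpt rather than trtexec's full summary.

```python
import re
from statistics import mean
from typing import Dict

# Matches e.g. "GPU latency: 4.8686 ms - Host latency: 9.80874 ms"
LATENCY_RE = re.compile(r"GPU latency: ([\d.]+) ms - Host latency: ([\d.]+) ms")


def mean_latencies(log: str) -> Dict[str, float]:
    """Average the GPU and host latencies over all 'Average on N runs' lines."""
    pairs = [(float(g), float(h)) for g, h in LATENCY_RE.findall(log)]
    if not pairs:
        raise ValueError("no latency lines found")
    return {"gpu_ms": mean(g for g, _ in pairs), "host_ms": mean(h for _, h in pairs)}


# Lines copied from the FP32 and FP16 logs above, trimmed after the host latency
FP32_LOG = """
Average on 10 runs - GPU latency: 4.8686 ms - Host latency: 9.80874 ms
Average on 10 runs - GPU latency: 4.86126 ms - Host latency: 9.79784 ms
Average on 10 runs - GPU latency: 4.85182 ms - Host latency: 9.78955 ms
Average on 10 runs - GPU latency: 4.85986 ms - Host latency: 9.79612 ms
Average on 10 runs - GPU latency: 4.90681 ms - Host latency: 9.83716 ms
"""
FP16_LOG = """
Average on 10 runs - GPU latency: 1.67223 ms - Host latency: 6.60986 ms
Average on 10 runs - GPU latency: 1.67367 ms - Host latency: 6.61241 ms
Average on 10 runs - GPU latency: 1.67456 ms - Host latency: 6.61381 ms
Average on 10 runs - GPU latency: 1.67402 ms - Host latency: 6.61122 ms
Average on 10 runs - GPU latency: 1.67439 ms - Host latency: 6.61202 ms
"""

if __name__ == "__main__":
    fp32, fp16 = mean_latencies(FP32_LOG), mean_latencies(FP16_LOG)
    print(f"GPU speedup FP16 vs FP32:  {fp32['gpu_ms'] / fp16['gpu_ms']:.2f}x")
    print(f"Host speedup FP16 vs FP32: {fp32['host_ms'] / fp16['host_ms']:.2f}x")
```

On these numbers FP16 cuts the average GPU latency from about 4.87 ms to about 1.67 ms, roughly 2.9x, while host latency only improves about 1.5x (9.81 ms to 6.61 ms). The gap between host and GPU latency is about 4.94 ms in both runs; since trtexec's host latency also includes the host-to-device and device-to-host copies, this suggests the transfers, which FP16 does not shorten here, account for about half of the FP32 and three quarters of the FP16 host latency.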

【Supplementary Notes】

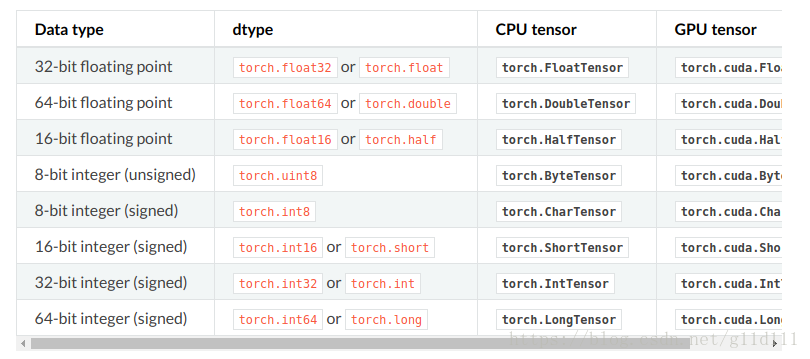

Which precisions does TensorRT support?

FP32, FP16, INT8, TF32 and others; all of these are commonly used.

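- FP32: single-precision floating point. Nothing special to note: it is the most common data format in deep learning, used for both training and inference;

- FP16: half-precision floating point. It uses half the memory of FP32, has dedicated instructions, and on hardware with fast FP16 support (such as Tensor Cores) it is much faster than FP32;

- TF32: a data type supported by third-generation Tensor Cores (Ampere). It is a truncated Float32 format: the 23 mantissa bits of FP32 are cut to 10 bits while the exponent stays at 8 bits, for a total of 19 bits (= 1 + 8 + 10). It keeps the same precision as FP16 (both have 10 mantissa bits) while retaining the dynamic range of FP32 (both have 8 exponent bits);

- INT8: 8-bit integer. It uses half the memory of FP16 and has dedicated instructions; once a model is quantized, INT8 can be used for acceleration.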
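The storage sizes and bit layouts above can be checked with Python's struct module, which supports IEEE half ("e"), single ("f") and signed 8-bit ("b") formats. TF32 has no struct format, so the minimal sketch below emulates it by zeroing the low 13 of FP32's 23 mantissa bits. This is a simplified truncation model that follows the description above; real Tensor Cores may round rather than truncate.

```python
import struct

TF32_MASK = 0xFFFFE000  # keep sign, 8 exponent bits and the top 10 mantissa bits


def to_fp16(x: float) -> float:
    """Round-trip through IEEE half precision (5 exponent bits, 10 mantissa bits)."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def to_tf32(x: float) -> float:
    """Emulate TF32 by truncating an FP32 value's mantissa from 23 to 10 bits."""
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    return struct.unpack("<f", struct.pack("<I", bits & TF32_MASK))[0]


if __name__ == "__main__":
    # Bytes per value: FP32 = 4, FP16 = 2, INT8 = 1
    print({fmt: struct.calcsize(fmt) for fmt in ("f", "e", "b")})
    # Same 10-bit mantissa: 1 + 2**-11 is not representable in either format
    print(to_fp16(1 + 2**-11), to_tf32(1 + 2**-11))
    # Different range: 1e5 fits TF32's 8-bit exponent but overflows FP16 (max 65504)
    print(to_tf32(1e5))
    try:
        to_fp16(1e5)
    except OverflowError as err:
        print("FP16 overflow:", err)
```

The 1 + 2**-11 case shows the shared 10-bit mantissa, and the 1e5 case shows the different exponent range, which is the trade-off described in the TF32 bullet.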

【References】

https://blog.csdn.net/Small_Munich/article/details/101559424

https://github.com/nutonomy/second.pytorch

https://forums.developer.nvidia.com/t/6-assertion-failed-convertdtype-onnxtype-dtype-unsupported-cast/179605/2

https://zhuanlan.zhihu.com/p/78882641