1. Introduction

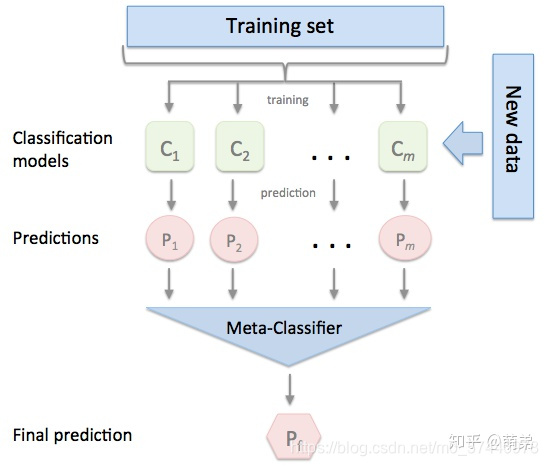

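In the previous chapters we studied regression and classification algorithms. We also discussed two ensemble-learning approaches that combine such methods into stronger models: Bagging and Boosting. This chapter covers the last member of the ensemble family, Stacking. In competitions Stacking is known as a "lazy" method, because it can reach good results without much time spent on hyperparameter tuning. It is also much easier to understand than the previous two methods, since it requires little theory and is mainly a matter of applying it in practice. Strictly speaking, Stacking is not an algorithm. It is an elegant, if elaborate, strategy for combining models. Stacking can be viewed as a two-layer ensemble. The first layer contains several base classifiers, and their predictions (meta-features) are passed to the second layer. The second-layer classifier, usually logistic regression, uses the first-layer predictions as features and fits them to produce the final prediction. Before introducing Stacking, we first discuss its simplified version, called Blending, and then look at Stacking in more depth.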

2. Blending ensemble learning

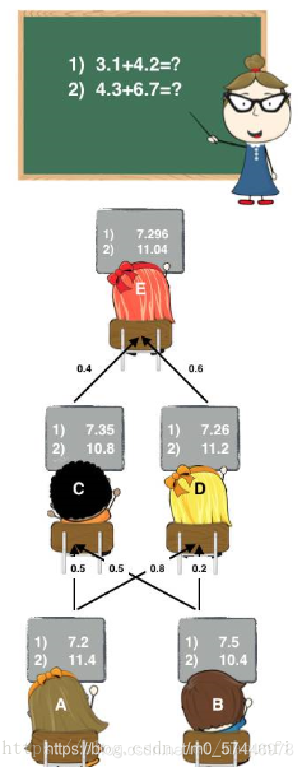

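Perhaps you had this experience as a child. The teacher called on you in class, but you had been daydreaming and could not answer right away. While you hesitated, your classmates, who liked you, helped out by telling you the answers they had in mind, and you weighed their answers to arrive at the correct one. The point of this story is to introduce Blending, a member of the ensemble-learning family that works very much the same way. See the figure (source: https://blog.csdn.net/maqunfi/article/details/82220115).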

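Let us now look at the Blending procedure in detail:

- (1) Split the data into a training set and a test set (test_set), then split the training set again into a training set (train_set) and a validation set (val_set);

- (2) Create several first-layer models; they can be homogeneous or heterogeneous;

- (3) Train the models from step 2 on train_set, then use the trained models to predict val_set and test_set, giving val_predict and test_predict1;

- (4) Create the second-layer model and train it on val_predict, with the val_set labels as targets;

- (5) Use the trained second-layer model to predict test_predict1 (the second layer's test input); the result is the prediction for the whole test set.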
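The five steps above can be sketched in Python with scikit-learn. This is a minimal illustration, not the referenced author's code. The synthetic data from `make_classification`, the three base models, the split ratios, and the use of positive-class probabilities as meta-features are all assumptions chosen for the example.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split
from sklearn.ensemble import RandomForestClassifier, ExtraTreesClassifier
from sklearn.neighbors import KNeighborsClassifier
from sklearn.linear_model import LogisticRegression

# Assumed synthetic binary-classification data (2000 rows, 20 features)
X, y = make_classification(n_samples=2000, n_features=20, random_state=42)

# (1) train/test split, then split the training part again into train_set / val_set
X_train_full, X_test, y_train_full, y_test = train_test_split(X, y, test_size=0.2, random_state=42)
X_train, X_val, y_train, y_val = train_test_split(X_train_full, y_train_full, test_size=0.3, random_state=42)

# (2) first-layer models (heterogeneous in this sketch)
base_models = [
    RandomForestClassifier(n_estimators=100, random_state=42),
    ExtraTreesClassifier(n_estimators=100, random_state=42),
    KNeighborsClassifier(n_neighbors=5),
]

# (3) fit on train_set; predicted P(y=1) on val_set / test_set become one column per model
val_predict = np.zeros((X_val.shape[0], len(base_models)))
test_predict1 = np.zeros((X_test.shape[0], len(base_models)))
for j, model in enumerate(base_models):
    model.fit(X_train, y_train)
    val_predict[:, j] = model.predict_proba(X_val)[:, 1]
    test_predict1[:, j] = model.predict_proba(X_test)[:, 1]

# (4) second-layer model trained on val_predict with the val_set labels
meta_model = LogisticRegression()
meta_model.fit(val_predict, y_val)

# (5) final prediction for the whole test set
y_pred = meta_model.predict(test_predict1)
accuracy = (y_pred == y_test).mean()
print(f"Blending test accuracy: {accuracy:.3f}")
```

Note that only the 480 val_set rows are used to fit the second layer here, which is exactly the data "waste" discussed in the next section.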

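3. Stacking ensemble learning

From the discussion of Blending we know that its second layer only ever sees the validation-set data, which wastes a large share of the data. To address this, let us analyze where Blending falls short and how to improve it. In Blending, the validation set is produced by a single split into one training set and one validation set, which naturally suggests cross-validation. Following this idea, Stacking is built as follows (see the figure):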

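- First split the whole dataset into a training set and a test set (say 10000 training rows and 2500 test rows). The first layer then runs 5-fold cross-validation: in each fold, 8000 training rows are used for training and the remaining 2000 rows serve as the validation set (orange).

- In each fold, a model is trained on the 8000 (blue) rows, then used to predict the 2000 validation rows and the 2500 test rows. After the 5 folds we have 5 × 2000 validation predictions (orange, in the middle; together they give one prediction for every training row) and 5 × 2500 test predictions.

- Next, the 5 × 2000 validation predictions are concatenated into a 10000-row column, denoted $A_1$, and the 5 × 2500 test predictions are averaged into a single 2500-row column, denoted $B_1$.

- This gives one base model's predictions $A_1$ and $B_1$ on the data. Ensembling 3 base models therefore yields six matrices: $A_1$, $A_2$, $A_3$, $B_1$, $B_2$, $B_3$.

- We then put $A_1$, $A_2$, $A_3$ side by side into a 10000 × 3 matrix used as training data, and combine $B_1$, $B_2$, $B_3$ into a 2500 × 3 matrix used as test data, and train the second-layer learner on these.

- The retraining uses each base model's predictions as features (three features); the second-layer learner learns how to assign weights w to these base-model predictions so that the final prediction is as accurate as possible.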
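A minimal sketch of the 5-fold procedure described above, assuming scikit-learn and synthetic data sized like the example (10000 training rows, 2500 test rows). The helper `get_oof` and the three base models are hypothetical choices for illustration. Each base model contributes one column $A_i$ (out-of-fold predictions) and one column $B_i$ (test predictions averaged over the 5 fold models, using a simple mean), and a logistic regression then learns the weights w.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.model_selection import KFold, train_test_split
from sklearn.ensemble import RandomForestClassifier, ExtraTreesClassifier
from sklearn.neighbors import KNeighborsClassifier
from sklearn.linear_model import LogisticRegression

# Assumed synthetic data sized like the example: 10000 training rows, 2500 test rows
X, y = make_classification(n_samples=12500, n_features=20, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=2500, random_state=0)


def get_oof(model, X_train, y_train, X_test, n_splits=5):
    """Hypothetical helper returning (A, B) for one base model:
    A = out-of-fold predictions for every training row (10000,),
    B = test predictions averaged over the n_splits fold models (2500,)."""
    kf = KFold(n_splits=n_splits, shuffle=True, random_state=0)
    A = np.zeros(X_train.shape[0])
    B_folds = np.zeros((n_splits, X_test.shape[0]))
    for i, (tr_idx, val_idx) in enumerate(kf.split(X_train)):
        model.fit(X_train[tr_idx], y_train[tr_idx])               # train on 8000 rows
        A[val_idx] = model.predict_proba(X_train[val_idx])[:, 1]  # predict the 2000 held-out rows
        B_folds[i] = model.predict_proba(X_test)[:, 1]            # predict all 2500 test rows
    return A, B_folds.mean(axis=0)


base_models = [
    RandomForestClassifier(n_estimators=50, random_state=0),
    ExtraTreesClassifier(n_estimators=50, random_state=0),
    KNeighborsClassifier(n_neighbors=15),
]
results = [get_oof(m, X_train, y_train, X_test) for m in base_models]

train_meta = np.column_stack([A for A, _ in results])  # [A_1, A_2, A_3]: 10000 x 3
test_meta = np.column_stack([B for _, B in results])   # [B_1, B_2, B_3]: 2500 x 3

# Second layer: logistic regression learns one weight per base-model prediction
meta_model = LogisticRegression()
meta_model.fit(train_meta, y_train)
accuracy = (meta_model.predict(test_meta) == y_test).mean()
print("stacking test accuracy:", accuracy)
print("learned weights w:", meta_model.coef_.ravel())
```

Unlike Blending, every one of the 10000 training rows contributes a meta-feature here, because each row is predicted exactly once by a model that did not see it.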

Next, let us see how Stacking is applied in practice (reference example: https://www.cnblogs.com/Christina-Notebook/p/10063146.html).
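The linked example is not reproduced here. As a hedged alternative, scikit-learn 0.22 and later provide `StackingClassifier`, which implements the same two-layer scheme. The sketch below assumes the breast-cancer dataset bundled with scikit-learn and an arbitrary choice of base models.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.model_selection import train_test_split
from sklearn.ensemble import StackingClassifier, RandomForestClassifier
from sklearn.svm import SVC
from sklearn.linear_model import LogisticRegression
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

X, y = load_breast_cancer(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(X, y, stratify=y, random_state=42)

# First layer: two heterogeneous base models (an assumption for this sketch)
estimators = [
    ("rf", RandomForestClassifier(n_estimators=100, random_state=42)),
    ("svc", make_pipeline(StandardScaler(), SVC(probability=True, random_state=42))),
]

# Second layer: logistic regression fitted on 5-fold out-of-fold predictions
clf = StackingClassifier(
    estimators=estimators,
    final_estimator=LogisticRegression(),
    cv=5,
)
clf.fit(X_train, y_train)
score = clf.score(X_test, y_test)
print(f"StackingClassifier test accuracy: {score:.3f}")
```

One design difference worth knowing: according to the scikit-learn documentation, `StackingClassifier` trains the final estimator on cross-validated predictions, but at prediction time it uses base estimators refitted on the full training set, rather than averaging the fold models' test predictions as in the $B_1$ step described earlier.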

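Blending vs. Stacking:

Advantages of Blending:

- Simpler than Stacking (no need to run k-fold cross-validation to obtain the stacker features)

Disadvantages:

- Uses less data (a single hold-out split serves as the validation set, rather than cross-validation)

- The blender may overfit (most likely a consequence of the first point)

- By contrast, Stacking's repeated cross-validation makes it more robust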

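4. Conclusion

In this chapter we discussed how to combine multiple models with Blending and Stacking. Compared with Bagging and Boosting, Blending and Stacking are simpler and more intuitive, and they still perform well, hence the saying in competitions: "Stacking can help you beat the current state-of-the-art algorithms in academia." We have now covered all the ensemble-learning approaches; in the next chapter (Chapter 6) we will demonstrate the power of ensemble learning with several larger case studies.

Titanic feature engineering (work in progress)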

import re

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

import seaborn as sns

import warnings

warnings.filterwarnings('ignore')

%matplotlib inline


train_data = pd.read_csv('train.csv')

test_data = pd.read_csv('test.csv')

train_data.info()

<class 'pandas.core.frame.DataFrame'>

RangeIndex: 891 entries, 0 to 890

Data columns (total 12 columns):

PassengerId 891 non-null int64

Survived 891 non-null int64

Pclass 891 non-null int64

Name 891 non-null object

Sex 891 non-null object

Age 714 non-null float64

SibSp 891 non-null int64

Parch 891 non-null int64

Ticket 891 non-null object

Fare 891 non-null float64

Cabin 204 non-null object

Embarked 889 non-null object

dtypes: float64(2), int64(5), object(5)

memory usage: 83.7+ KB

test_data.info()

<class 'pandas.core.frame.DataFrame'>

RangeIndex: 418 entries, 0 to 417

Data columns (total 11 columns):

PassengerId 418 non-null int64

Pclass 418 non-null int64

Name 418 non-null object

Sex 418 non-null object

Age 332 non-null float64

SibSp 418 non-null int64

Parch 418 non-null int64

Ticket 418 non-null object

Fare 417 non-null float64

Cabin 91 non-null object

Embarked 418 non-null object

dtypes: float64(2), int64(4), object(5)

memory usage: 36.0+ KB

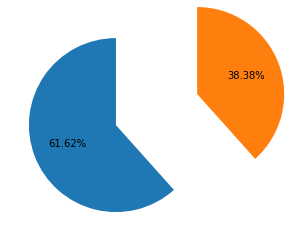

survived_counts = train_data['Survived'].value_counts()

# pass labels by keyword: the second positional argument of plt.pie is `explode`, not `labels`

plt.pie(survived_counts.values, labels=survived_counts.index, autopct='%1.2f%%', startangle=90)

# result: 0 (not survived) 61.62%, 1 (survived) 38.38%

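2. Filling missing values

When analyzing data, check whether any values are missing.

Some machine-learning algorithms can handle missing values natively (for example, gradient-boosting libraries such as XGBoost), while others cannot. Common ways of handling missing values include:

(1) If the dataset is large and only a few values are missing, drop the rows that contain them;

(2) If the attribute is not very important for learning, fill the missing values with the mean or the mode. For example, the port of embarkation, Embarked (three possible ports), has two missing values, which can be filled with the mode:

train_data.Embarked.dropna().mode().values # find the mode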

array(['S'], dtype=object)

train_data['Embarked'].value_counts().plot.pie(autopct = '%1.2f%%')

train_data['Embarked'] = train_data['Embarked'].fillna(train_data['Embarked'].mode()[0])  # fill the missing ports with the mode 'S'

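(3) For nominal attributes, assign a value that stands for "missing", such as 'U0', because missingness itself may carry hidden information. For the cabin number Cabin, for example, a missing value may mean the passenger had no cabin.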

#replace missing value with U0

train_data['Cabin'] = train_data.Cabin.fillna('U0') # train_data.Cabin[train_data.Cabin.isnull()]='U0'

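(4) Use a model such as regression or a random forest to predict the missing values. Because Age is a fairly important feature in this dataset (a preliminary analysis of Age shows this), imputing it with reasonable accuracy matters and has a noticeable effect on the results. Usually the rows with complete data are used as the training set to predict the missing values. For this data either a random forest or linear regression would work; here we use a random forest, taking the numeric attributes as features (sklearn models only accept numeric input, so only numeric features are selected here; in practice, non-numeric features should be converted to numeric ones).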

————————————————

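Copyright notice: this is an original article by CSDN blogger 「大树先生的博客」, licensed under CC 4.0 BY-SA. Reproductions must include a link to the original and this notice.

Original link: https://blog.csdn.net/koala_tree/article/details/78725881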

from sklearn.ensemble import RandomForestRegressor

age_df = train_data[['Age','Survived','Fare', 'Parch', 'SibSp', 'Pclass']]

age_df_notnull = age_df.loc[(train_data['Age'].notnull())]

age_df_isnull = age_df.loc[(train_data['Age'].isnull())]

age_df_notnull

| | Age | Survived | Fare | Parch | SibSp | Pclass |
|---|---|---|---|---|---|---|
| 0 | 22.0 | 0 | 7.2500 | 0 | 1 | 3 |
| 1 | 38.0 | 1 | 71.2833 | 0 | 1 | 1 |
| 2 | 26.0 | 1 | 7.9250 | 0 | 0 | 3 |
| 3 | 35.0 | 1 | 53.1000 | 0 | 1 | 1 |
| 4 | 35.0 | 0 | 8.0500 | 0 | 0 | 3 |
| ... | ... | ... | ... | ... | ... | ... |
| 885 | 39.0 | 0 | 29.1250 | 5 | 0 | 3 |
| 886 | 27.0 | 0 | 13.0000 | 0 | 0 | 2 |
| 887 | 19.0 | 1 | 30.0000 | 0 | 0 | 1 |
| 889 | 26.0 | 1 | 30.0000 | 0 | 0 | 1 |
| 890 | 32.0 | 0 | 7.7500 | 0 | 0 | 3 |

714 rows × 6 columns

age_df_notnull.values[:,1:] # features

array([[ 0. , 7.25 , 0. , 1. , 3. ],

[ 1. , 71.2833, 0. , 1. , 1. ],

[ 1. , 7.925 , 0. , 0. , 3. ],

...,

[ 1. , 30. , 0. , 0. , 1. ],

[ 1. , 30. , 0. , 0. , 1. ],

[ 0. , 7.75 , 0. , 0. , 3. ]])

age_df_notnull.values[:,0] # target (Age)

array([22. , 38. , 26. , 35. , 35. , 54. , 2. , 27. , 14. ,

4. , 58. , 20. , 39. , 14. , 55. , 2. , 31. , 35. ,

34. , 15. , 28. , 8. , 38. , 19. , 40. , 66. , 28. ,

42. , 21. , 18. , 14. , 40. , 27. , 3. , 19. , 18. ,

7. , 21. , 49. , 29. , 65. , 21. , 28.5 , 5. , 11. ,

22. , 38. , 45. , 4. , 29. , 19. , 17. , 26. , 32. ,

16. , 21. , 26. , 32. , 25. , 0.83, 30. , 22. , 29. ,

28. , 17. , 33. , 16. , 23. , 24. , 29. , 20. , 46. ,

26. , 59. , 71. , 23. , 34. , 34. , 28. , 21. , 33. ,

37. , 28. , 21. , 38. , 47. , 14.5 , 22. , 20. , 17. ,

21. , 70.5 , 29. , 24. , 2. , 21. , 32.5 , 32.5 , 54. ,

12. , 24. , 45. , 33. , 20. , 47. , 29. , 25. , 23. ,

19. , 37. , 16. , 24. , 22. , 24. , 19. , 18. , 19. ,

27. , 9. , 36.5 , 42. , 51. , 22. , 55.5 , 40.5 , 51. ,

16. , 30. , 44. , 40. , 26. , 17. , 1. , 9. , 45. ,

28. , 61. , 4. , 1. , 21. , 56. , 18. , 50. , 30. ,

36. , 9. , 1. , 4. , 45. , 40. , 36. , 32. , 19. ,

19. , 3. , 44. , 58. , 42. , 24. , 28. , 34. , 45.5 ,

18. , 2. , 32. , 26. , 16. , 40. , 24. , 35. , 22. ,

30. , 31. , 27. , 42. , 32. , 30. , 16. , 27. , 51. ,

38. , 22. , 19. , 20.5 , 18. , 35. , 29. , 59. , 5. ,

24. , 44. , 8. , 19. , 33. , 29. , 22. , 30. , 44. ,

25. , 24. , 37. , 54. , 29. , 62. , 30. , 41. , 29. ,

30. , 35. , 50. , 3. , 52. , 40. , 36. , 16. , 25. ,

58. , 35. , 25. , 41. , 37. , 63. , 45. , 7. , 35. ,

65. , 28. , 16. , 19. , 33. , 30. , 22. , 42. , 22. ,

26. , 19. , 36. , 24. , 24. , 23.5 , 2. , 50. , 19. ,

0.92, 17. , 30. , 30. , 24. , 18. , 26. , 28. , 43. ,

26. , 24. , 54. , 31. , 40. , 22. , 27. , 30. , 22. ,

36. , 61. , 36. , 31. , 16. , 45.5 , 38. , 16. , 29. ,

41. , 45. , 45. , 2. , 24. , 28. , 25. , 36. , 24. ,

40. , 3. , 42. , 23. , 15. , 25. , 28. , 22. , 38. ,

40. , 29. , 45. , 35. , 30. , 60. , 24. , 25. , 18. ,

19. , 22. , 3. , 22. , 27. , 20. , 19. , 42. , 1. ,

32. , 35. , 18. , 1. , 36. , 17. , 36. , 21. , 28. ,

23. , 24. , 22. , 31. , 46. , 23. , 28. , 39. , 26. ,

21. , 28. , 20. , 34. , 51. , 3. , 21. , 33. , 44. ,

34. , 18. , 30. , 10. , 21. , 29. , 28. , 18. , 28. ,

19. , 32. , 28. , 42. , 17. , 50. , 14. , 21. , 24. ,

64. , 31. , 45. , 20. , 25. , 28. , 4. , 13. , 34. ,

5. , 52. , 36. , 30. , 49. , 29. , 65. , 50. , 48. ,

34. , 47. , 48. , 38. , 56. , 0.75, 38. , 33. , 23. ,

22. , 34. , 29. , 22. , 2. , 9. , 50. , 63. , 25. ,

35. , 58. , 30. , 9. , 21. , 55. , 71. , 21. , 54. ,

25. , 24. , 17. , 21. , 37. , 16. , 18. , 33. , 28. ,

26. , 29. , 36. , 54. , 24. , 47. , 34. , 36. , 32. ,

30. , 22. , 44. , 40.5 , 50. , 39. , 23. , 2. , 17. ,

30. , 7. , 45. , 30. , 22. , 36. , 9. , 11. , 32. ,

50. , 64. , 19. , 33. , 8. , 17. , 27. , 22. , 22. ,

62. , 48. , 39. , 36. , 40. , 28. , 24. , 19. , 29. ,

32. , 62. , 53. , 36. , 16. , 19. , 34. , 39. , 32. ,

25. , 39. , 54. , 36. , 18. , 47. , 60. , 22. , 35. ,

52. , 47. , 37. , 36. , 49. , 49. , 24. , 44. , 35. ,

36. , 30. , 27. , 22. , 40. , 39. , 35. , 24. , 34. ,

26. , 4. , 26. , 27. , 42. , 20. , 21. , 21. , 61. ,

57. , 21. , 26. , 80. , 51. , 32. , 9. , 28. , 32. ,

31. , 41. , 20. , 24. , 2. , 0.75, 48. , 19. , 56. ,

23. , 18. , 21. , 18. , 24. , 32. , 23. , 58. , 50. ,

40. , 47. , 36. , 20. , 32. , 25. , 43. , 40. , 31. ,

70. , 31. , 18. , 24.5 , 18. , 43. , 36. , 27. , 20. ,

14. , 60. , 25. , 14. , 19. , 18. , 15. , 31. , 4. ,

25. , 60. , 52. , 44. , 49. , 42. , 18. , 35. , 18. ,

25. , 26. , 39. , 45. , 42. , 22. , 24. , 48. , 29. ,

52. , 19. , 38. , 27. , 33. , 6. , 17. , 34. , 50. ,

27. , 20. , 30. , 25. , 25. , 29. , 11. , 23. , 23. ,

28.5 , 48. , 35. , 36. , 21. , 24. , 31. , 70. , 16. ,

30. , 19. , 31. , 4. , 6. , 33. , 23. , 48. , 0.67,

28. , 18. , 34. , 33. , 41. , 20. , 36. , 16. , 51. ,

30.5 , 32. , 24. , 48. , 57. , 54. , 18. , 5. , 43. ,

13. , 17. , 29. , 25. , 25. , 18. , 8. , 1. , 46. ,

16. , 25. , 39. , 49. , 31. , 30. , 30. , 34. , 31. ,

11. , 0.42, 27. , 31. , 39. , 18. , 39. , 33. , 26. ,

39. , 35. , 6. , 30.5 , 23. , 31. , 43. , 10. , 52. ,

27. , 38. , 27. , 2. , 1. , 62. , 15. , 0.83, 23. ,

18. , 39. , 21. , 32. , 20. , 16. , 30. , 34.5 , 17. ,

42. , 35. , 28. , 4. , 74. , 9. , 16. , 44. , 18. ,

45. , 51. , 24. , 41. , 21. , 48. , 24. , 42. , 27. ,

31. , 4. , 26. , 47. , 33. , 47. , 28. , 15. , 20. ,

19. , 56. , 25. , 33. , 22. , 28. , 25. , 39. , 27. ,

19. , 26. , 32. ])

X = age_df_notnull.values[:,1:]

Y = age_df_notnull.values[:,0]

RFR = RandomForestRegressor(n_estimators=1000,n_jobs=-1)

RFR.fit(X,Y)

RandomForestRegressor(bootstrap=True, criterion='mse', max_depth=None,

max_features='auto', max_leaf_nodes=None,

min_impurity_decrease=0.0, min_impurity_split=None,

min_samples_leaf=1, min_samples_split=2,

min_weight_fraction_leaf=0.0, n_estimators=1000,

n_jobs=-1, oob_score=False, random_state=None, verbose=0,

warm_start=False)

PredictAgeData = RFR.predict(age_df_isnull.values[:,1:])

RFR.score(X,Y) # R^2 on the training data itself, so this score is optimistic

0.6974897892968519

train_data.loc[train_data["Age"].isnull(),"Age"] = PredictAgeData

Check the data after imputation:

train_data.info()

<class 'pandas.core.frame.DataFrame'>

RangeIndex: 891 entries, 0 to 890

Data columns (total 12 columns):

PassengerId 891 non-null int64

Survived 891 non-null int64

Pclass 891 non-null int64

Name 891 non-null object

Sex 891 non-null object

Age 891 non-null float64

SibSp 891 non-null int64

Parch 891 non-null int64

Ticket 891 non-null object

Fare 891 non-null float64

Cabin 891 non-null object

Embarked 891 non-null object

dtypes: float64(2), int64(5), object(5)

memory usage: 83.7+ KB

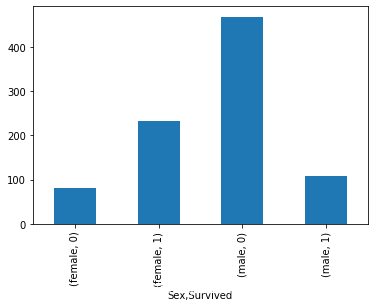

3、分析数据关系

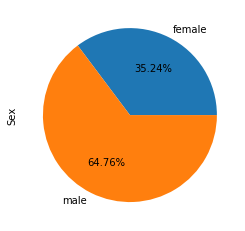

(1)性别与是否生存的关系

train_data.groupby(['Sex','Survived'])[['Survived']].count()

| Sex | Survived | Survived (count) |
|---|---|---|
| female | 0 | 81 |
| female | 1 | 233 |
| male | 0 | 468 |
| male | 1 | 109 |

train_data.groupby(['Sex','Survived'])['Survived'].count().plot.bar()


train_data.groupby(['Sex'])['Sex'].count().plot.pie(autopct = '%1.2f%%')


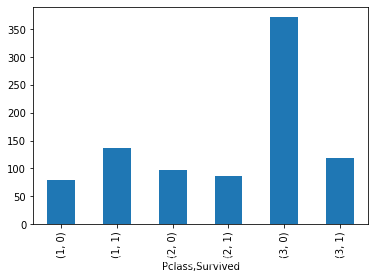

(2) Pclass vs. survival

train_data.groupby(['Pclass','Survived'])['Survived'].count().plot.bar()

# survival rate per class (grouping Pclass by itself and averaging it would just return 1, 2, 3)

train_data[['Pclass','Survived']].groupby(['Pclass']).mean().plot.bar()

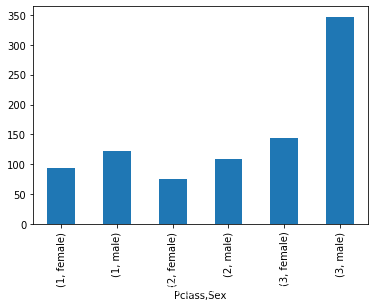

The charts show that 3rd-class passengers and men account for the most deaths.

train_data.groupby(['Pclass','Sex'])['Pclass'].count().plot.bar()


train_data.groupby(['Pclass','Sex','Survived'])['Pclass'].count()

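| Pclass | Sex | Survived | count |
|---|---|---|---|
| 1 | female | 0 | 3 |
| 1 | female | 1 | 91 |
| 1 | male | 0 | 77 |
| 1 | male | 1 | 45 |
| 2 | female | 0 | 6 |
| 2 | female | 1 | 70 |
| 2 | male | 0 | 91 |
| 2 | male | 1 | 17 |
| 3 | female | 0 | 72 |
| 3 | female | 1 | 72 |
| 3 | male | 0 | 300 |
| 3 | male | 1 | 47 |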

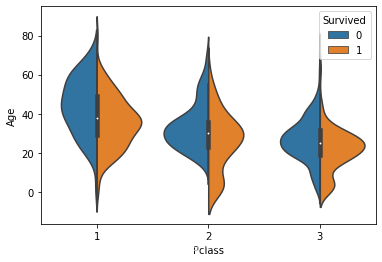

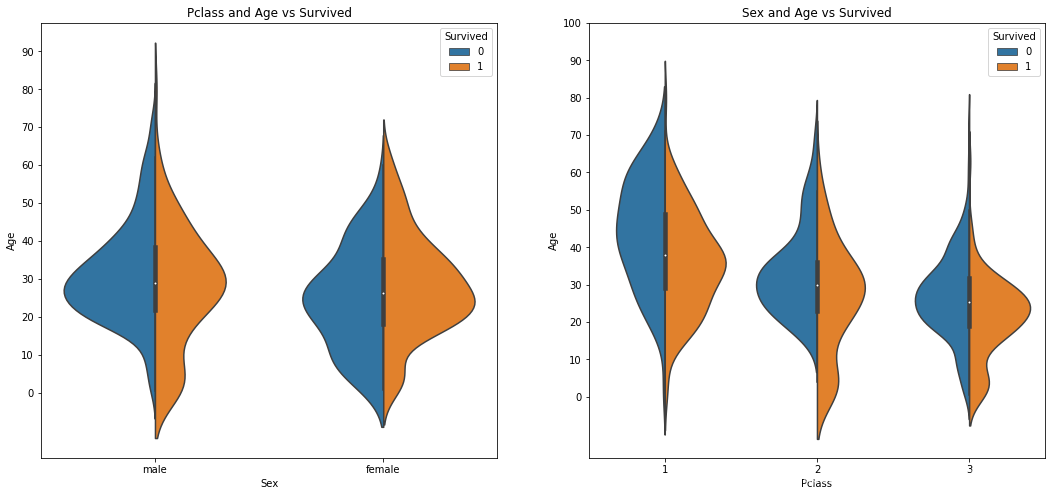

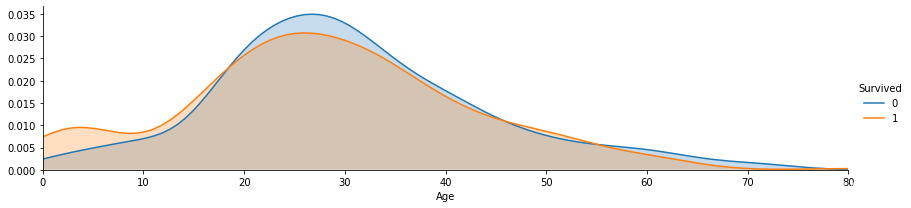

(3) Age vs. survival

sns.violinplot(x="Pclass", y="Age", hue="Survived", data=train_data, split=True)

fig, ax = plt.subplots(1, 2, figsize=(18, 8))  # 1 row x 2 columns of subplots

sns.violinplot(x="Pclass", y="Age", hue="Survived", data=train_data, split=True, ax=ax[0])  # draw on the first subplot

ax[0].set_title('Pclass and Age vs Survived')

ax[0].set_yticks(range(0, 110, 10))

sns.violinplot(x="Sex", y="Age", hue="Survived", data=train_data, split=True, ax=ax[1])  # draw on the second subplot

ax[1].set_title('Sex and Age vs Survived')

ax[1].set_yticks(range(0, 110, 10))

plt.show()

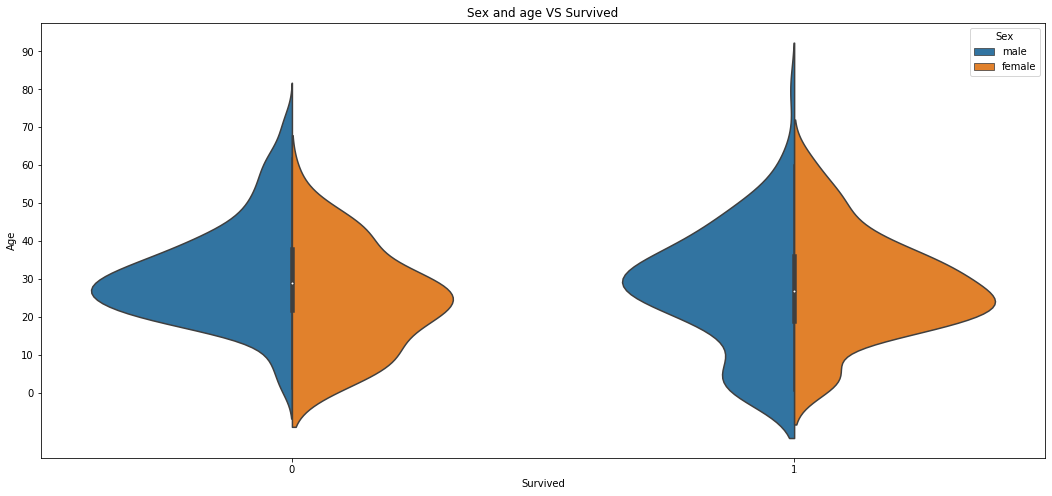

train_data.groupby(["Survived","Sex"])[["Age"]].count()

| Survived | Sex | Age (count) |
|---|---|---|
| 0 | female | 81 |
| 0 | male | 468 |
| 1 | female | 233 |
| 1 | male | 109 |

fig, ax = plt.subplots(figsize=(18, 8))

pic = sns.violinplot(x="Survived", y="Age", hue="Sex", data=train_data, split=True, ax=ax)

pic.set_title("Sex and Age vs Survived")

pic.set_yticks(range(0, 100, 10))

plt.show()

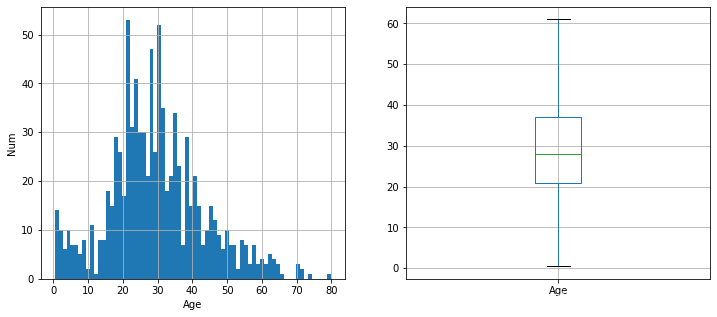

Overall age distribution:

plt.figure(figsize=(12,5))

plt.subplot(121)

train_data['Age'].hist(bins=70)

plt.xlabel('Age')

plt.ylabel('Num')

plt.subplot(122)

train_data.boxplot(column='Age', showfliers=False)

plt.show()

Age distributions of survivors and non-survivors:

facet = sns.FacetGrid(train_data, hue="Survived",aspect=4)

facet.map(sns.kdeplot, 'Age', fill=True)  # fill replaces the deprecated shade argument (seaborn >= 0.11)

facet.set(xlim=(0, train_data['Age'].max()))

facet.add_legend()


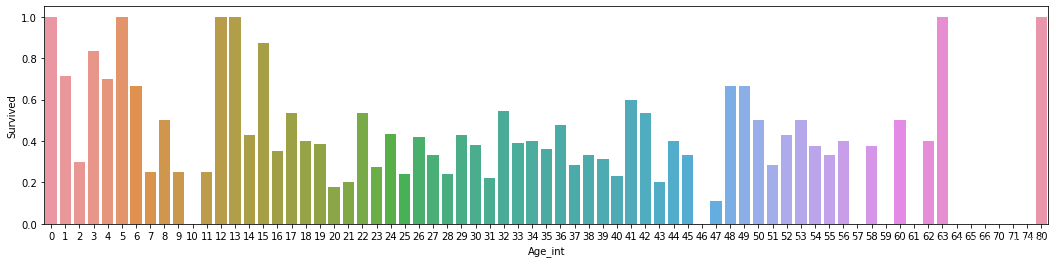

train_data["Age_int"] = train_data["Age"].astype(int)

train_data[["Age_int", "Survived"]].groupby(['Age_int'],as_index=True).mean()

| Age_int | Survived |
|---|---|
| 0 | 1.000000 |
| 1 | 0.714286 |
| 2 | 0.300000 |
| 3 | 0.833333 |
| 4 | 0.700000 |
| ... | ... |
| 66 | 0.000000 |
| 70 | 0.000000 |
| 71 | 0.000000 |
| 74 | 0.000000 |
| 80 | 1.000000 |

71 rows × 1 columns

fig, axis1 = plt.subplots(1,1,figsize=(18,4))

train_data["Age_int"] = train_data["Age"].astype(int)

average_age = train_data[["Age_int", "Survived"]].groupby(['Age_int'],as_index=False).mean() # as_index=False has the same effect as calling reset_index()

sns.barplot(x='Age_int', y='Survived', data=average_age)


train_data['Age'].describe()

count 891.000000

mean 29.653886

std 13.738179

min 0.420000

25% 21.000000

50% 28.000000

75% 37.000000

max 80.000000

Name: Age, dtype: float64

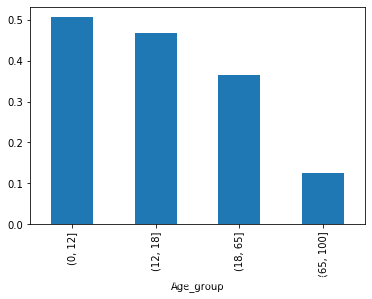

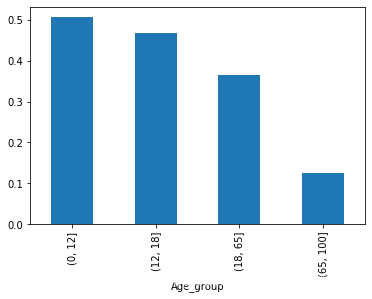

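The sample contains 891 passengers (including the imputed ages), with a mean age of about 30, a standard deviation of about 13.7 years, a minimum age of 0.42 and a maximum of 80.

Split the passengers by age into children, teenagers, adults and seniors, and compare survival across the four groups: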

bins = [0, 12, 18, 65, 100]

train_data['Age_group'] = pd.cut(train_data['Age'], bins)

by_age = train_data.groupby('Age_group')['Survived'].mean()

by_age

Age_group

(0, 12] 0.506173

(12, 18] 0.466667

(18, 65] 0.364512

(65, 100] 0.125000

Name: Survived, dtype: float64

by_age.plot.bar()


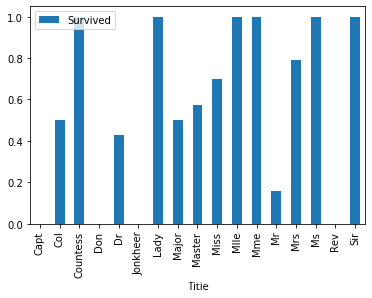

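(4) Title vs. survival (Name)

Each entry in the Name column includes a form of address such as Mr, Miss or Mrs. These titles carry age and sex information (Master, for example, is used for young boys), and some, such as Dr, Lady and Major, also reflect social status.

train_data['Title'] = train_data['Name'].str.extract(r' ([A-Za-z]+)\.', expand=False)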

pd.crosstab(train_data['Title'], train_data['Sex'])

| Title | female | male |
|---|---|---|
| Capt | 0 | 1 |
| Col | 0 | 2 |
| Countess | 1 | 0 |
| Don | 0 | 1 |
| Dr | 1 | 6 |
| Jonkheer | 0 | 1 |
| Lady | 1 | 0 |
| Major | 0 | 2 |
| Master | 0 | 40 |
| Miss | 182 | 0 |
| Mlle | 2 | 0 |
| Mme | 1 | 0 |
| Mr | 0 | 517 |
| Mrs | 125 | 0 |
| Ms | 1 | 0 |
| Rev | 0 | 6 |
| Sir | 0 | 1 |

train_data[['Title','Survived']].groupby(['Title']).mean().plot.bar()


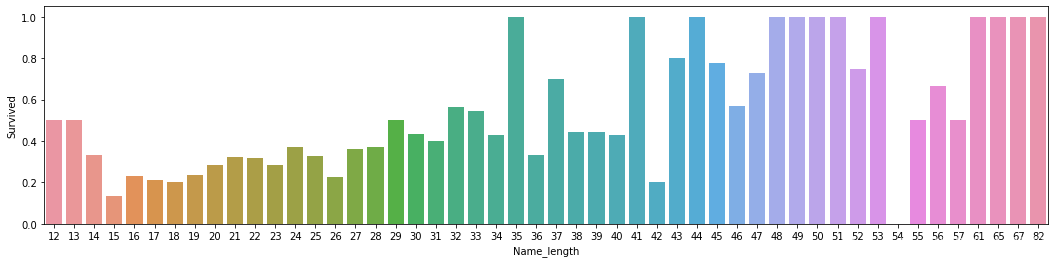

We can also check whether the length of a passenger's name is related to survival:

fig, axis1 = plt.subplots(1,1,figsize=(18,4))

train_data['Name_length'] = train_data['Name'].apply(len)

name_length = train_data[['Name_length','Survived']].groupby(['Name_length'],as_index=False).mean()

sns.barplot(x='Name_length', y='Survived', data=name_length)


The chart suggests that name length is also somewhat correlated with survival.

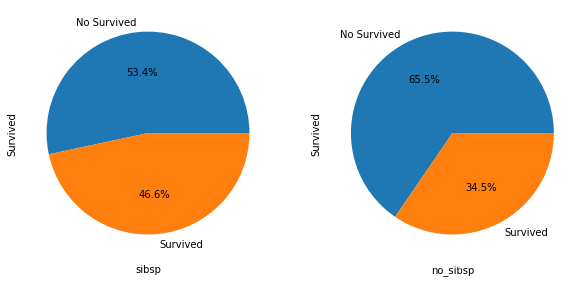

(5) Siblings/spouses aboard vs. survival (SibSp)

# split passengers into those with and without siblings/spouses aboard:

sibsp_df = train_data[train_data['SibSp'] != 0]

no_sibsp_df = train_data[train_data['SibSp'] == 0]

plt.figure(figsize=(10,5))

plt.subplot(121)

sibsp_df['Survived'].value_counts().sort_index().plot.pie(labels=['Not survived', 'Survived'], autopct='%1.1f%%')  # sort_index() puts 0 before 1 so the labels match

plt.xlabel('sibsp')

plt.subplot(122)

no_sibsp_df['Survived'].value_counts().sort_index().plot.pie(labels=['Not survived', 'Survived'], autopct='%1.1f%%')

plt.xlabel('no_sibsp')

plt.show()

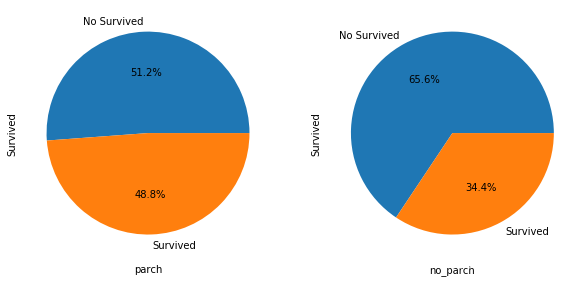

(6) Parents/children aboard vs. survival (Parch)

Analyzing Parch the same way as SibSp gives:

parch_df = train_data[train_data['Parch'] != 0]

no_parch_df = train_data[train_data['Parch'] == 0]

plt.figure(figsize=(10,5))

plt.subplot(121)

parch_df['Survived'].value_counts().sort_index().plot.pie(labels=['Not survived', 'Survived'], autopct='%1.1f%%')  # sort_index() puts 0 before 1 so the labels match

plt.xlabel('parch')

plt.subplot(122)

no_parch_df['Survived'].value_counts().sort_index().plot.pie(labels=['Not survived', 'Survived'], autopct='%1.1f%%')

plt.xlabel('no_parch')

plt.show()

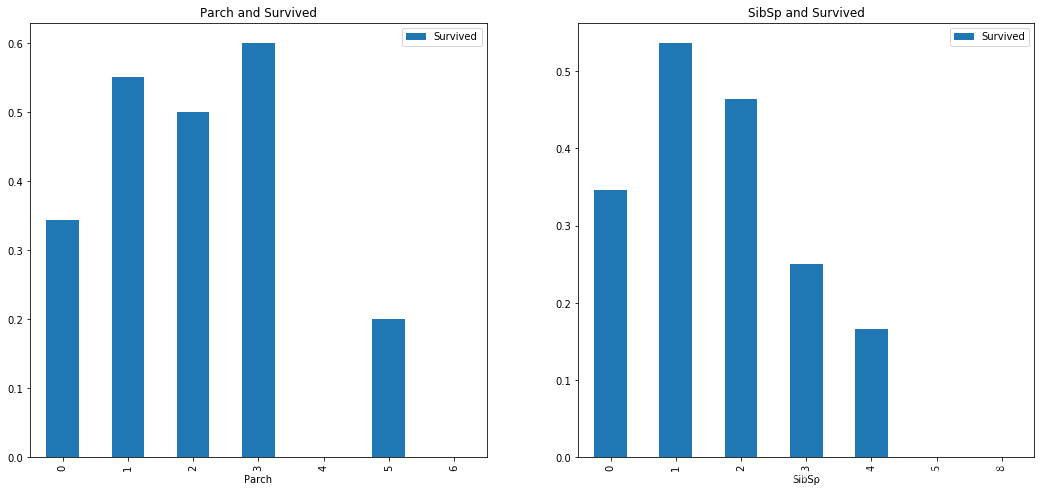

(7) Number of relatives aboard vs. survival (SibSp & Parch)

fig,ax=plt.subplots(1,2,figsize=(18,8))

train_data[['Parch','Survived']].groupby(['Parch']).mean().plot.bar(ax=ax[0])

ax[0].set_title('Parch and Survived')

train_data[['SibSp','Survived']].groupby(['SibSp']).mean().plot.bar(ax=ax[1])

ax[1].set_title('SibSp and Survived')


train_data['Family_Size'] = train_data['Parch'] + train_data['SibSp'] + 1

train_data[['Family_Size','Survived']].groupby(['Family_Size']).mean().plot.bar()


The charts show that passengers travelling alone have a relatively low survival rate, but so do passengers with very many relatives aboard.

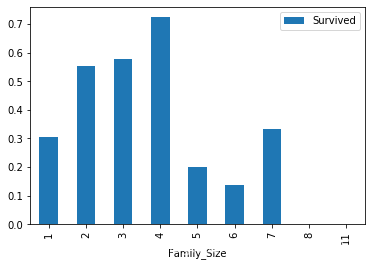

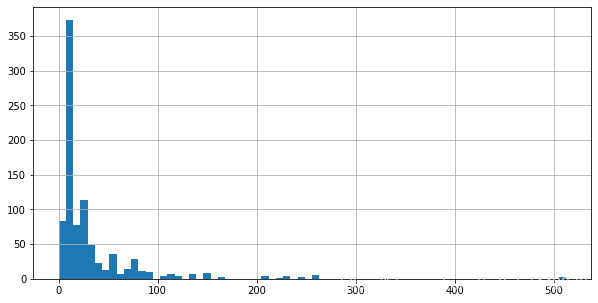

(8) Fare distribution vs. survival (Fare)

plt.figure(figsize=(10,5))

train_data['Fare'].hist(bins = 70)

train_data.boxplot(column='Fare', by='Pclass', showfliers=False)

plt.show()

train_data['Fare'].describe()

count 891.000000

mean 32.204208

std 49.693429

min 0.000000

25% 7.910400

50% 14.454200

75% 31.000000

max 512.329200

Name: Fare, dtype: float64

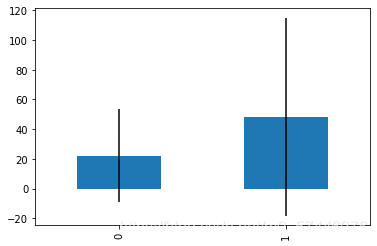

Plot the mean and standard deviation of the fare for non-survivors and survivors:

fare_not_survived = train_data['Fare'][train_data['Survived'] == 0]

fare_survived = train_data['Fare'][train_data['Survived'] == 1]

average_fare = pd.DataFrame([fare_not_survived.mean(), fare_survived.mean()])

average_fare

| | 0 |
|---|---|
| 0 | 22.117887 |
| 1 | 48.395408 |

std_fare = pd.DataFrame([fare_not_survived.std(), fare_survived.std()])

std_fare

| | 0 |
|---|---|
| 0 | 31.388207 |
| 1 | 66.596998 |

average_fare.plot(yerr=std_fare, kind='bar', legend=False)


The chart shows that fare is somewhat correlated with survival: the mean fare of survivors is higher than that of non-survivors.

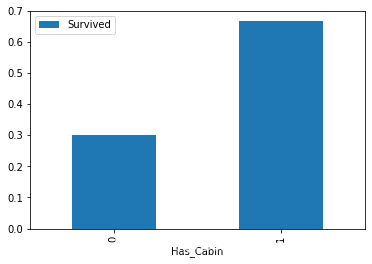

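(9) Cabin vs. survival (Cabin)

Cabin has too many missing values: only 204 entries in the training set are valid, which makes it hard to relate specific cabins to survival. The feature could therefore simply be dropped during feature engineering.

Still, we can analyze it here, treating all missing entries as one category.

First, simply split passengers by whether a Cabin value is recorded, and compare survival: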

# Replace missing values with "U0"

train_data.loc[train_data.Cabin.isnull(), 'Cabin'] = 'U0'

train_data['Has_Cabin'] = train_data['Cabin'].apply(lambda x: 0 if x == 'U0' else 1)

train_data[['Has_Cabin','Survived']].groupby(['Has_Cabin']).mean().plot.bar()


x = "U0"

re.compile("([a-zA-Z]+)").search(x).group()

'U'

train_data['Cabin']

0 U0

1 C85

2 U0

3 C123

4 U0

...

886 U0

887 B42

888 U0

889 C148

890 U0

Name: Cabin, Length: 891, dtype: object

train_data['Cabin'].map(lambda x: re.compile("([a-zA-Z]+)").search(x).group())

0 U

1 C

2 U

3 C

4 U

..

886 U

887 B

888 U

889 C

890 U

Name: Cabin, Length: 891, dtype: object

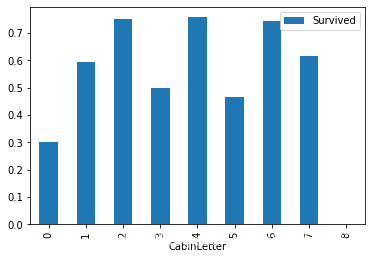

Next, analyze survival by cabin type (the leading letter of the cabin number):


train_data['CabinLetter'] = train_data['Cabin'].map(lambda x: re.compile("([a-zA-Z]+)").search(x).group())

train_data['CabinLetter']

0 U

1 C

2 U

3 C

4 U

..

886 U

887 B

888 U

889 C

890 U

Name: CabinLetter, Length: 891, dtype: object

pd.factorize(train_data['CabinLetter'])[0]

array([0, 1, 0, 1, 0, 0, 2, 0, 0, 0, 3, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 4,

0, 5, 0, 0, 0, 1, 0, 0, 0, 6, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 4, 0, 6, 1, 0, 0, 0, 0, 0, 6, 1, 0, 0, 0,

7, 0, 0, 0, 0, 0, 0, 0, 0, 7, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

1, 0, 0, 0, 2, 0, 0, 0, 5, 4, 0, 0, 0, 0, 4, 0, 0, 0, 0, 0, 0, 0,

1, 0, 0, 0, 0, 0, 0, 0, 6, 0, 0, 0, 0, 2, 4, 0, 0, 0, 7, 0, 0, 0,

0, 0, 0, 0, 4, 1, 0, 6, 0, 0, 0, 0, 0, 0, 0, 0, 7, 0, 0, 1, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 2, 0, 0, 0, 6, 0, 0, 0, 5, 0,

0, 1, 0, 0, 0, 0, 0, 7, 0, 5, 0, 0, 0, 0, 0, 0, 0, 7, 6, 6, 0, 0,

0, 0, 0, 0, 0, 0, 0, 3, 0, 0, 0, 5, 0, 0, 0, 0, 0, 4, 0, 0, 4, 0,

0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 1, 0, 0, 4, 0, 0, 3, 1, 0, 0, 0, 0, 6, 0, 0, 0, 0, 2, 6,

0, 0, 0, 0, 1, 1, 0, 0, 0, 1, 0, 4, 0, 0, 0, 0, 0, 0, 0, 0, 5, 0,

0, 0, 0, 0, 0, 6, 4, 0, 0, 0, 0, 1, 1, 6, 0, 0, 0, 2, 0, 1, 0, 1,

0, 2, 1, 6, 0, 0, 0, 0, 0, 0, 1, 2, 0, 0, 0, 0, 0, 1, 0, 4, 0, 6,

0, 1, 1, 0, 0, 0, 1, 2, 0, 8, 7, 1, 0, 0, 0, 7, 0, 0, 0, 0, 0, 1,

0, 0, 0, 0, 2, 0, 0, 0, 0, 0, 0, 0, 0, 0, 4, 0, 0, 6, 2, 0, 0, 0,

0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 6, 0, 0, 4, 3, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 2, 1, 0, 0, 0, 2, 6, 0, 0, 1, 0,

0, 0, 0, 0, 0, 5, 0, 0, 0, 1, 0, 0, 1, 1, 0, 0, 2, 4, 0, 0, 2, 0,

2, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 4, 0, 5, 0, 0, 0, 0, 0, 0, 0, 0,

6, 0, 1, 6, 0, 0, 0, 0, 1, 0, 0, 0, 4, 0, 1, 0, 0, 0, 0, 0, 6, 1,

0, 0, 0, 0, 0, 0, 2, 0, 0, 4, 7, 0, 0, 0, 6, 0, 0, 6, 0, 0, 0, 1,

0, 0, 0, 0, 0, 0, 0, 0, 6, 0, 0, 6, 6, 0, 0, 0, 1, 0, 0, 0, 0, 0,

1, 0, 0, 0, 0, 0, 5, 0, 2, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1,

2, 0, 0, 0, 0, 2, 0, 0, 0, 1, 0, 5, 0, 2, 0, 6, 0, 0, 0, 4, 0, 0,

0, 0, 0, 0, 0, 5, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0,

0, 0, 7, 0, 0, 4, 0, 0, 0, 4, 0, 4, 0, 0, 5, 0, 6, 0, 0, 0, 0, 0,

0, 0, 0, 6, 0, 0, 0, 4, 0, 5, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 4,

0, 0, 2, 0, 0, 0, 0, 0, 0, 1, 0, 6, 0, 0, 0, 0, 0, 0, 0, 6, 0, 4,

0, 0, 0, 0, 0, 0, 0, 6, 6, 0, 0, 0, 0, 0, 0, 0, 1, 7, 1, 2, 0, 0,

0, 0, 0, 2, 0, 0, 1, 1, 1, 0, 0, 7, 1, 2, 0, 0, 0, 0, 0, 0, 2, 0,

0, 0, 0, 0, 6, 0, 0, 0, 0, 0, 0, 6, 0, 0, 4, 1, 6, 0, 0, 6, 0, 0,

4, 0, 0, 2, 0, 0, 0, 0, 0, 0, 0, 6, 0, 0, 0, 6, 0, 4, 0, 0, 0, 0,

0, 0, 2, 0, 0, 0, 7, 0, 0, 6, 0, 6, 4, 0, 0, 0, 0, 0, 0, 6, 0, 0,

0, 0, 0, 0, 4, 0, 0, 0, 0, 0, 6, 0, 0, 0, 5, 0, 0, 2, 0, 0, 0, 0,

0, 6, 0, 0, 0, 0, 6, 0, 0, 2, 0, 0, 0, 0, 0, 6, 0, 0, 0, 0, 0, 2,

0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 4, 0, 0, 0, 2,

0, 0, 0, 0, 4, 0, 0, 0, 0, 5, 0, 0, 0, 4, 6, 0, 0, 0, 0, 0, 0, 1,

0, 0, 0, 0, 0, 0, 0, 6, 0, 1, 0], dtype=int64)

# create feature for the alphabetical part of the cabin number

train_data['CabinLetter'] = train_data['Cabin'].map(lambda x: re.compile("([a-zA-Z]+)").search(x).group()) # extract the leading letters of the cabin number with a regex

# convert the distinct cabin letters with incremental integer values

train_data['CabinLetter'] = pd.factorize(train_data['CabinLetter'])[0] # map each letter to an integer code (0, 1, 2, ... in order of first appearance)

train_data[['CabinLetter','Survived']].groupby(['CabinLetter']).mean().plot.bar()


Survival rates vary somewhat across cabin letters, but not by much, so this feature can be dropped during processing.

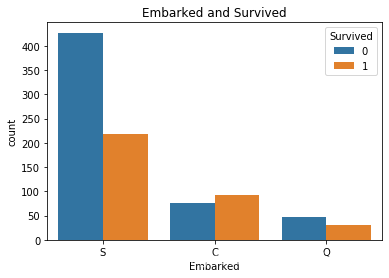

(10) Port of embarkation vs. survival (Embarked)

sns.countplot(x='Embarked', hue='Survived', data=train_data)

plt.title('Embarked and Survived')

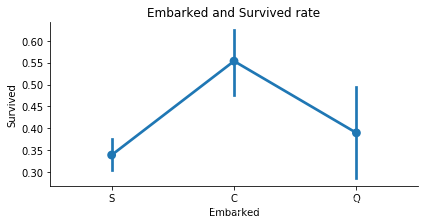

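sns.catplot(x='Embarked', y='Survived', data=train_data, kind='point', height=3, aspect=2)  # factorplot was renamed catplot (kind='point' was its default); size became height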

plt.title('Embarked and Survived rate')

plt.show()

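This shows that the survival rate differs by port of embarkation: highest for C, then Q, and lowest for S.

That concludes the analysis of how the given features relate to survival.

There were reportedly 2224 people aboard the Titanic (passengers and crew), while the training data covers only 891 passengers. If these 891 were drawn at random, the sample is large enough that, by the central limit theorem, statistics such as the survival rate are approximately normally distributed around their true values, and our analysis is representative. If the sample was not drawn at random, the conclusions may be much less reliable.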
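To give a rough sense of what "large enough" means, here is a worked example under the assumption that the 891 passengers are a simple random sample (finite-population correction ignored). With the observed survival share $\hat p = 0.3838$ (the 38.38% from the survival pie chart) and $n = 891$, the standard error of the survival rate is:

$$\mathrm{SE}(\hat p) = \sqrt{\frac{\hat p\,(1-\hat p)}{n}} = \sqrt{\frac{0.3838 \times 0.6162}{891}} \approx 0.0163$$

so an approximate 95% interval for the underlying survival rate is $0.3838 \pm 1.96 \times 0.0163 \approx [0.352,\ 0.416]$. This only quantifies random sampling error; it says nothing about bias from a non-random sample.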


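(11) Other features that may be related to survival

Beyond the information in the dataset, other factors could also affect the model, such as a passenger's nationality, height, weight, ability to swim, or occupation.

Two features in the dataset have not been fully analyzed: Ticket (ticket number) and Cabin (cabin number). Differences in these may reflect a passenger's location on the ship and therefore the order of evacuation. However, cabin numbers are mostly missing and ticket numbers have too many categories to reveal a clear pattern, so when fitting and combining models later, we leave it to the models to decide how important these features are.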


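4. Variable transformation

Variable transformation converts the data into a form the models can use. Different models accept different types of data, and scikit-learn requires all data to be numeric, so non-numeric raw data must be converted to numeric values.

The transformations below are introduced so that they can be used during feature engineering.

All variables fall into two types:

1. Quantitative variables can be ordered in some way; Age is a good example.

2. Qualitative variables describe some aspect of an object that cannot be expressed mathematically; Embarked is an example.

Qualitative transformations:

1. Dummy Variables

Dummy variables are binary (0/1) indicator variables, one per category. They suit qualitative variables that take only a few distinct, frequently occurring values. Take Embarked, which contains only the three values 'S', 'C' and 'Q'; the following code converts it into dummies: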

train_data['Embarked']

0 S

1 C

2 S

3 S

4 S

..

886 S

887 S

888 S

889 C

890 Q

Name: Embarked, Length: 891, dtype: object

pd.get_dummies(train_data['Embarked'])

| | C | Q | S |
|---|---|---|---|
| 0 | 0 | 0 | 1 |
| 1 | 1 | 0 | 0 |
| 2 | 0 | 0 | 1 |
| 3 | 0 | 0 | 1 |
| 4 | 0 | 0 | 1 |
| ... | ... | ... | ... |
| 886 | 0 | 0 | 1 |
| 887 | 0 | 0 | 1 |
| 888 | 0 | 0 | 1 |
| 889 | 1 | 0 | 0 |
| 890 | 0 | 1 | 0 |

891 rows × 3 columns

embark_dummies = pd.get_dummies(train_data['Embarked'])

train_data = train_data.join(embark_dummies)

train_data.drop(['Embarked'], axis=1,inplace=True)

embark_dummies = train_data[['S', 'C', 'Q']]

embark_dummies.head()

| | S | C | Q |
|---|---|---|---|
| 0 | 1 | 0 | 0 |
| 1 | 0 | 1 | 0 |
| 2 | 1 | 0 | 0 |
| 3 | 1 | 0 | 0 |
| 4 | 1 | 0 | 0 |

2. Factorizing

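Dummy variables do not suit nominal attributes such as Cabin (cabin number), which take many distinct values. For this case pandas provides factorize(), which maps each category to an integer ID. The mapping produces a single feature, instead of one column per category as with dummies.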
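A small illustration of how `pd.factorize` assigns codes, using hypothetical cabin letters. By default the codes follow order of first appearance rather than alphabetical order, which is why `U` gets 0, `C` gets 1 and `E` gets 2 in the CabinLetter output in this section. Passing `sort=True` orders the codes by the sorted categories instead.

```python
import pandas as pd

# Hypothetical cabin letters (U = unknown cabin)
cabin_letters = pd.Series(["U", "C", "U", "C", "E", "B"])

# Default: codes are assigned in order of first appearance
codes, uniques = pd.factorize(cabin_letters)
print(codes, list(uniques))

# sort=True: codes follow the alphabetical order of the categories
codes_sorted, uniques_sorted = pd.factorize(cabin_letters, sort=True)
print(codes_sorted, list(uniques_sorted))
```

Either way the integer codes imply an order that has no real meaning, which is worth keeping in mind for models sensitive to numeric magnitude.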

train_data['Cabin']

0 U0

1 C85

2 U0

3 C123

4 U0

...

886 U0

887 B42

888 U0

889 C148

890 U0

Name: Cabin, Length: 891, dtype: object

train_data['Cabin'].map( lambda x : re.compile("([a-zA-Z]+)").search(x).group())

0 U

1 C

2 U

3 C

4 U

..

886 U

887 B

888 U

889 C

890 U

Name: Cabin, Length: 891, dtype: object

# Replace missing values with "U0"

train_data.loc[train_data.Cabin.isnull(), 'Cabin'] = 'U0'

# create feature for the alphabetical part of the cabin number

train_data['CabinLetter'] = train_data['Cabin'].map( lambda x : re.compile("([a-zA-Z]+)").search(x).group())

# convert the distinct cabin letters with incremental integer values

train_data['CabinLetter'] = pd.factorize(train_data['CabinLetter'])[0]

train_data['Cabin'].head(10)

0 U0

1 C85

2 U0

3 C123

4 U0

5 U0

6 E46

7 U0

8 U0

9 U0

Name: Cabin, dtype: object

pd.factorize(train_data['CabinLetter'])[0][0:10]

array([0, 1, 0, 1, 0, 0, 2, 0, 0, 0], dtype=int64)

train_data['CabinLetter'].head(10)

0 0

1 1

2 0

3 1

4 0

5 0

6 2

7 0

8 0

9 0

Name: CabinLetter, dtype: int64

Quantitative transformations:

1. Scaling

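Scaling maps values spread over a wide range onto a small range (usually -1 to 1, or 0 to 1). In many cases numeric features need to be scaled to comparable ranges; otherwise features with large ranges receive higher weight. For example, Age may range only over 0-100, while income might range over 0-10,000,000, which affects the results of models that are sensitive to feature magnitude.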
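A hedged sketch contrasting the two kinds of target range mentioned above, using the first five Age values of the training set (22, 38, 26, 35, 35). `StandardScaler`, used in this section, centers each feature to mean 0 and unit variance with the population standard deviation, so its output is not bounded to a fixed range; `MinMaxScaler` maps values to [0, 1]. Comparing these two scalers is our choice for illustration.

```python
import numpy as np
from sklearn.preprocessing import StandardScaler, MinMaxScaler

# First five Age values from train.csv, as a column vector
ages = np.array([22.0, 38.0, 26.0, 35.0, 35.0]).reshape(-1, 1)

# z = (x - mean) / std, where std uses ddof=0 (population standard deviation)
standardized = StandardScaler().fit_transform(ages)
manual = (ages - ages.mean()) / ages.std()

# (x - min) / (max - min), bounded to [0, 1]
minmax = MinMaxScaler().fit_transform(ages)

print(standardized.ravel())
print(minmax.ravel())
```

In a real pipeline the scaler should be fitted on the training data only and then applied to the test data with `transform`.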

Now scale Age:

from sklearn import preprocessing

assert np.size(train_data['Age']) == 891

# StandardScaler will subtract the mean from each value then scale to the unit variance

scaler = preprocessing.StandardScaler()

train_data['Age_scaled'] = scaler.fit_transform(train_data['Age'].values.reshape(-1, 1))

train_data['Age_scaled']

0 -0.557438

1 0.607854

2 -0.266115

3 0.389361

4 0.389361

...

886 -0.193284

887 -0.775930

888 -0.320543

889 -0.266115

890 0.170869

Name: Age_scaled, Length: 891, dtype: float64

2. Binning

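Binning discretizes continuous data by looking at its "neighbors" (the surrounding values). The values are distributed into a number of "buckets" or "bins", just as the bins of a histogram divide the data into chunks. The following code bins Fare.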
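To make the difference between the two pandas binning helpers concrete, here is a sketch on ten illustrative fare values (the first five are the first five Fare values of train.csv, rounded; the rest are assumed). `pd.cut` makes bins of equal width, so skewed data such as Fare piles up in the lowest bin, while `pd.qcut`, used in this section, makes bins holding roughly equal numbers of rows.

```python
import pandas as pd

# Illustrative, right-skewed fare values
fares = pd.Series([7.25, 71.28, 7.93, 53.10, 8.05, 8.46, 51.86, 21.08, 11.13, 30.07])

equal_width = pd.cut(fares, bins=5)  # 5 intervals of equal width over [min, max]
equal_freq = pd.qcut(fares, q=5)     # 5 quantile-based intervals with ~equal counts

print(equal_width.value_counts(sort=False))
print(equal_freq.value_counts(sort=False))
```

For a heavily skewed feature like Fare, quantile binning usually gives more balanced groups, which is presumably why `qcut` is used here.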

train_data['Fare']

0 7.2500

1 71.2833

2 7.9250

3 53.1000

4 8.0500

...

886 13.0000

887 30.0000

888 23.4500

889 30.0000

890 7.7500

Name: Fare, Length: 891, dtype: float64

train_data['Fare_bin'] = pd.qcut(train_data['Fare'], 5)

train_data['Fare_bin'].head()

0 (-0.001, 7.854]

1 (39.688, 512.329]

2 (7.854, 10.5]

3 (39.688, 512.329]

4 (7.854, 10.5]

Name: Fare_bin, dtype: category

Categories (5, interval[float64]): [(-0.001, 7.854] < (7.854, 10.5] < (10.5, 21.679] < (21.679, 39.688] < (39.688, 512.329]]

After binning, either factorize the bins or convert them to dummies.

# factorize

train_data['Fare_bin_id'] = pd.factorize(train_data['Fare_bin'])[0]

# dummies

fare_bin_dummies_df = pd.get_dummies(train_data['Fare_bin']).rename(columns=lambda x: 'Fare_' + str(x))

train_data = pd.concat([train_data, fare_bin_dummies_df], axis=1)

train_data['Fare_bin_id']

0 0

1 1

2 2

3 1

4 2

..

886 3

887 4

888 4

889 4

890 0

Name: Fare_bin_id, Length: 891, dtype: int64

fare_bin_dummies_df

| Fare_bin | Fare_(-0.001, 7.854] | Fare_(7.854, 10.5] | Fare_(10.5, 21.679] | Fare_(21.679, 39.688] | Fare_(39.688, 512.329] |
|---|---|---|---|---|---|
| 0 | 1 | 0 | 0 | 0 | 0 |
| 1 | 0 | 0 | 0 | 0 | 1 |
| 2 | 0 | 1 | 0 | 0 | 0 |
| 3 | 0 | 0 | 0 | 0 | 1 |
| 4 | 0 | 1 | 0 | 0 | 0 |
| ... | ... | ... | ... | ... | ... |
| 886 | 0 | 0 | 1 | 0 | 0 |
| 887 | 0 | 0 | 0 | 1 | 0 |
| 888 | 0 | 0 | 0 | 1 | 0 |
| 889 | 0 | 0 | 0 | 1 | 0 |
| 890 | 1 | 0 | 0 | 0 | 0 |

891 rows × 5 columns

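5. Feature engineering

During feature engineering we must process not only the training data but also the test data together with it, so that both end up with the same data types and distributions.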
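A minimal sketch of the combine-then-split pattern, using tiny hypothetical stand-in frames instead of train.csv and test.csv. In this sketch the test rows get NaN in `Survived` rather than a placeholder value, `ignore_index=True` avoids duplicated index labels, and the frames are split back by row position after encoding, so both end up with identical dummy columns.

```python
import pandas as pd

# Hypothetical stand-ins for train.csv / test.csv
train_df = pd.DataFrame({"PassengerId": [1, 2, 3], "Survived": [0, 1, 1],
                         "Embarked": ["S", "C", None]})
test_df = pd.DataFrame({"PassengerId": [892, 893], "Embarked": ["Q", "S"]})

# Combine so every transformation sees the same categories and statistics
combined = pd.concat([train_df, test_df], ignore_index=True, sort=False)
combined["Embarked"] = combined["Embarked"].fillna(combined["Embarked"].mode().iloc[0])
combined = pd.get_dummies(combined, columns=["Embarked"], prefix="Embarked")

# Split back by position: the first len(train_df) rows are the training set
train_fe = combined.iloc[:len(train_df)]
test_fe = combined.iloc[len(train_df):].drop(columns=["Survived"])
print(train_fe)
print(test_fe)
```

Computing statistics such as the mode on the combined data is convenient; if strict separation is required, compute them on the training rows only and apply them to both.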

train_df_org = pd.read_csv('train.csv')

test_df_org = pd.read_csv('test.csv')

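test_df_org['Survived'] = 0  # placeholder so train and test have the same columns

combined_train_test = pd.concat([train_df_org, test_df_org], sort=True)  # DataFrame.append was removed in pandas 2.0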

PassengerId = test_df_org['PassengerId']

train_df_org.info()

<class 'pandas.core.frame.DataFrame'>

RangeIndex: 891 entries, 0 to 890

Data columns (total 12 columns):

PassengerId 891 non-null int64

Survived 891 non-null int64

Pclass 891 non-null int64

Name 891 non-null object

Sex 891 non-null object

Age 714 non-null float64

SibSp 891 non-null int64

Parch 891 non-null int64

Ticket 891 non-null object

Fare 891 non-null float64

Cabin 204 non-null object

Embarked 889 non-null object

dtypes: float64(2), int64(5), object(5)

memory usage: 83.7+ KB

test_df_org.info()

<class 'pandas.core.frame.DataFrame'>

RangeIndex: 418 entries, 0 to 417

Data columns (total 12 columns):

PassengerId 418 non-null int64

Pclass 418 non-null int64

Name 418 non-null object

Sex 418 non-null object

Age 332 non-null float64

SibSp 418 non-null int64

Parch 418 non-null int64

Ticket 418 non-null object

Fare 417 non-null float64

Cabin 91 non-null object

Embarked 418 non-null object

Survived 418 non-null int64

dtypes: float64(2), int64(5), object(5)

memory usage: 39.3+ KB

PassengerId

0 892

1 893

2 894

3 895

4 896

...

413 1305

414 1306

415 1307

416 1308

417 1309

Name: PassengerId, Length: 418, dtype: int64

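Feature engineering means extracting from the raw columns the features that influence the outcome, whether strongly or weakly. These features then serve as the basis for training the model.

We usually start with the features that contain missing values.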

combined_train_test.info()

<class 'pandas.core.frame.DataFrame'>

Int64Index: 1309 entries, 0 to 417

Data columns (total 12 columns):

Age 1046 non-null float64

Cabin 295 non-null object

Embarked 1307 non-null object

Fare 1308 non-null float64

Name 1309 non-null object

Parch 1309 non-null int64

PassengerId 1309 non-null int64

Pclass 1309 non-null int64

Sex 1309 non-null object

SibSp 1309 non-null int64

Survived 1309 non-null int64

Ticket 1309 non-null object

dtypes: float64(2), int64(5), object(5)

memory usage: 132.9+ KB

(1) Embarked

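Embarked has only a few missing values, so we fill them with the mode:

combined_train_test['Embarked'] = combined_train_test['Embarked'].fillna(combined_train_test['Embarked'].mode().iloc[0])

Embarked takes three values, one per port. As introduced above, there are two ways to convert such a feature to numbers: dummy (one-hot) encoding and factorizing. With only three ports, dummy encoding is a natural fit:

# Factorize Embarked first so that the integer column can be used in the feature analysis later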

combined_train_test['Embarked'] = pd.factorize(combined_train_test['Embarked'])[0]

combined_train_test['Embarked']

0 0

1 1

2 0

3 0

4 0

..

413 0

414 1

415 0

416 0

417 1

Name: Embarked, Length: 1309, dtype: int64

# Use pd.get_dummies to get the one-hot encoding

emb_dummies_df = pd.get_dummies(combined_train_test['Embarked'], prefix=combined_train_test[['Embarked']].columns[0])

combined_train_test = pd.concat([combined_train_test, emb_dummies_df], axis=1)

emb_dummies_df

| | Embarked_0 | Embarked_1 | Embarked_2 |
|---|---|---|---|
| 0 | 1 | 0 | 0 |
| 1 | 0 | 1 | 0 |
| 2 | 1 | 0 | 0 |
| 3 | 1 | 0 | 0 |
| 4 | 1 | 0 | 0 |
| ... | ... | ... | ... |
| 413 | 1 | 0 | 0 |
| 414 | 0 | 1 | 0 |
| 415 | 1 | 0 | 0 |
| 416 | 1 | 0 | 0 |
| 417 | 0 | 1 | 0 |

1309 rows × 3 columns

(2) Sex

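Sex is also one-hot encoded, i.e. converted into dummy columns.

Definition of one-hot encoding:

One-hot encoding (also called one-of-N encoding) uses an N-bit state register to encode N states. Each state has its own bit, and at any time exactly one bit is set.

For details see: https://blog.csdn.net/qq_15192373/article/details/89552498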
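The definition above can be shown directly with NumPy: row i of an N×N identity matrix is the one-hot code of state i.

This is a minimal sketch. The integer states below are made-up factorize codes for three ports.

```python
import numpy as np

# Illustrative factorize codes for N = 3 states (e.g. three ports)
states = np.array([0, 1, 0, 2])
n_states = 3

# Indexing the identity matrix by state picks the one-hot row for each sample
one_hot = np.eye(n_states, dtype=int)[states]
print(one_hot)
# [[1 0 0]
#  [0 1 0]
#  [1 0 0]
#  [0 0 1]]
```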

# As with Embarked, factorize Sex first so it can be used in the feature analysis later

combined_train_test['Sex'] = pd.factorize(combined_train_test['Sex'])[0]

sex_dummies_df = pd.get_dummies(combined_train_test['Sex'], prefix=combined_train_test[['Sex']].columns[0])

combined_train_test = pd.concat([combined_train_test, sex_dummies_df], axis=1)

(3) Name

First, extract the title from each name:

import re

# what is each person's title? (the text between ", " and the first ".")
combined_train_test['Title'] = combined_train_test['Name'].map(lambda x: re.compile(r", (.*?)\.").findall(x)[0])

combined_train_test['Name'].map(lambda x: re.compile(r", (.*?)\.").findall(x)[0])

0 Mr

1 Mrs

2 Miss

3 Mrs

4 Mr

...

413 Mr

414 Dona

415 Mr

416 Mr

417 Master

Name: Name, Length: 1309, dtype: object

Merge the many different titles into a few groups:

title_Dict = {}

title_Dict.update(dict.fromkeys(['Capt', 'Col', 'Major', 'Dr', 'Rev'], 'Officer'))

title_Dict.update(dict.fromkeys(['Don', 'Sir', 'the Countess', 'Dona', 'Lady'], 'Royalty'))

title_Dict.update(dict.fromkeys(['Mme', 'Ms', 'Mrs'], 'Mrs'))

title_Dict.update(dict.fromkeys(['Mlle', 'Miss'], 'Miss'))

title_Dict.update(dict.fromkeys(['Mr'], 'Mr'))

title_Dict.update(dict.fromkeys(['Master','Jonkheer'], 'Master'))

combined_train_test['Title'] = combined_train_test['Title'].map(title_Dict)

title_Dict

{'Capt': 'Officer',

'Col': 'Officer',

'Major': 'Officer',

'Dr': 'Officer',

'Rev': 'Officer',

'Don': 'Royalty',

'Sir': 'Royalty',

'the Countess': 'Royalty',

'Dona': 'Royalty',

'Lady': 'Royalty',

'Mme': 'Mrs',

'Ms': 'Mrs',

'Mrs': 'Mrs',

'Mlle': 'Miss',

'Miss': 'Miss',

'Mr': 'Mr',

'Master': 'Master',

'Jonkheer': 'Master'}
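This sketch runs the title regex and a subset of title_Dict on a few names. Four names come from the tables in this section; "Doe, Prof. Jane" is a hypothetical name added to show one caveat.

Series.map with a dict returns NaN for any title that is not a key of the dict. A title missing from title_Dict would therefore become NaN silently, not raise an error.

```python
import re
import pandas as pd

names = pd.Series(['Braund, Mr. Owen Harris',
                   'Heikkinen, Miss. Laina',
                   'Oliva y Ocana, Dona. Fermina',
                   'Peter, Master. Michael J',
                   'Doe, Prof. Jane'])  # hypothetical name with an unmapped title

# Same pattern as above: lazily match everything between ", " and the first "."
title_re = re.compile(r", (.*?)\.")
titles = names.map(lambda x: title_re.findall(x)[0])

# A subset of title_Dict, built the same way with dict.fromkeys
title_dict = {}
title_dict.update(dict.fromkeys(['Don', 'Sir', 'the Countess', 'Dona', 'Lady'], 'Royalty'))
title_dict.update(dict.fromkeys(['Mlle', 'Miss'], 'Miss'))
title_dict.update(dict.fromkeys(['Mr'], 'Mr'))
title_dict.update(dict.fromkeys(['Master', 'Jonkheer'], 'Master'))

grouped = titles.map(title_dict)  # 'Prof' is not a key, so it becomes NaN
print(titles.tolist())
print(grouped.tolist())
```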

Factorize the title groups, then split them into dummy columns:

pd.factorize(combined_train_test['Title'])

(array([0, 1, 2, ..., 0, 0, 3], dtype=int64),

Index(['Mr', 'Mrs', 'Miss', 'Master', 'Royalty', 'Officer'], dtype='object'))

# As before, factorize Title first so it can be used in the feature analysis later

combined_train_test['Title'] = pd.factorize(combined_train_test['Title'])[0]

title_dummies_df = pd.get_dummies(combined_train_test['Title'], prefix=combined_train_test[['Title']].columns[0])

combined_train_test = pd.concat([combined_train_test, title_dummies_df], axis=1)

Add a feature for the length of the name:

combined_train_test['Name_length'] = combined_train_test['Name'].apply(len)

combined_train_test.shape

(1309, 25)

(4) Fare

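The earlier analysis showed that Fare has one missing value in the test data, and this value needs to be filled.

We fill it with the mean fare of the passenger's class (1st, 2nd or 3rd).

Below, groupby(...).transform applies a function within each Pclass group and broadcasts the result back to every row of that group. The output therefore lines up row for row with the original data.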

combined_train_test[['Fare']]

| | Fare |
|---|---|
| 0 | 7.2500 |
| 1 | 71.2833 |
| 2 | 7.9250 |
| 3 | 53.1000 |
| 4 | 8.0500 |
| ... | ... |
| 413 | 8.0500 |
| 414 | 108.9000 |
| 415 | 7.2500 |
| 416 | 8.0500 |
| 417 | 22.3583 |

1309 rows × 1 columns

combined_train_test.groupby('Pclass')[['Fare']].count()

| Pclass | Fare |
|---|---|
| 1 | 323 |
| 2 | 277 |
| 3 | 708 |

combined_train_test.groupby('Pclass').transform(len)  # transform broadcasts a per-group result (here the group size) back to every row

| | Age | Cabin | Embarked | Fare | Name | Parch | PassengerId | Sex | SibSp | Survived | ... | Sex_0 | Sex_1 | Title | Title_0 | Title_1 | Title_2 | Title_3 | Title_4 | Title_5 | Name_length |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 709 | 709 | 709 | 709 | 709 | 709 | 709 | 709 | 709 | 709 | ... | 709 | 709 | 709 | 709 | 709 | 709 | 709 | 709 | 709 | 709 |
| 1 | 323 | 323 | 323 | 323 | 323 | 323 | 323 | 323 | 323 | 323 | ... | 323 | 323 | 323 | 323 | 323 | 323 | 323 | 323 | 323 | 323 |
| 2 | 709 | 709 | 709 | 709 | 709 | 709 | 709 | 709 | 709 | 709 | ... | 709 | 709 | 709 | 709 | 709 | 709 | 709 | 709 | 709 | 709 |
| 3 | 323 | 323 | 323 | 323 | 323 | 323 | 323 | 323 | 323 | 323 | ... | 323 | 323 | 323 | 323 | 323 | 323 | 323 | 323 | 323 | 323 |
| 4 | 709 | 709 | 709 | 709 | 709 | 709 | 709 | 709 | 709 | 709 | ... | 709 | 709 | 709 | 709 | 709 | 709 | 709 | 709 | 709 | 709 |
| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |
| 413 | 709 | 709 | 709 | 709 | 709 | 709 | 709 | 709 | 709 | 709 | ... | 709 | 709 | 709 | 709 | 709 | 709 | 709 | 709 | 709 | 709 |
| 414 | 323 | 323 | 323 | 323 | 323 | 323 | 323 | 323 | 323 | 323 | ... | 323 | 323 | 323 | 323 | 323 | 323 | 323 | 323 | 323 | 323 |
| 415 | 709 | 709 | 709 | 709 | 709 | 709 | 709 | 709 | 709 | 709 | ... | 709 | 709 | 709 | 709 | 709 | 709 | 709 | 709 | 709 | 709 |
| 416 | 709 | 709 | 709 | 709 | 709 | 709 | 709 | 709 | 709 | 709 | ... | 709 | 709 | 709 | 709 | 709 | 709 | 709 | 709 | 709 | 709 |
| 417 | 709 | 709 | 709 | 709 | 709 | 709 | 709 | 709 | 709 | 709 | ... | 709 | 709 | 709 | 709 | 709 | 709 | 709 | 709 | 709 | 709 |

1309 rows × 24 columns

combined_train_test[['Fare']].fillna(combined_train_test.groupby('Pclass')[['Fare']].transform('mean'))

| | Fare |
|---|---|
| 0 | 7.2500 |
| 1 | 71.2833 |
| 2 | 7.9250 |
| 3 | 53.1000 |
| 4 | 8.0500 |
| ... | ... |
| 413 | 8.0500 |
| 414 | 108.9000 |
| 415 | 7.2500 |
| 416 | 8.0500 |
| 417 | 22.3583 |

1309 rows × 1 columns

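# Select the Fare column before transform: taking the mean of every column fails on the text columns in recent pandas
combined_train_test['Fare'] = combined_train_test['Fare'].fillna(combined_train_test.groupby('Pclass')['Fare'].transform('mean'))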
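This minimal sketch applies the same class-mean fill to a small made-up frame. The frame has one missing fare in 3rd class.

transform('mean') returns a Series aligned with the original rows. The mean for each group skips the NaN, so the missing fare gets (8 + 10) / 2 = 9.0.

```python
import numpy as np
import pandas as pd

# Illustrative data: one missing fare in class 3
df = pd.DataFrame({'Pclass': [1, 1, 3, 3, 3],
                   'Fare': [80.0, 60.0, 8.0, 10.0, np.nan]})

# Each row receives the mean fare of its own class
class_mean = df.groupby('Pclass')['Fare'].transform('mean')
df['Fare'] = df['Fare'].fillna(class_mean)
print(df['Fare'].tolist())  # [80.0, 60.0, 8.0, 10.0, 9.0]
```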

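Some ticket numbers in Ticket appear more than once. Compare these tickets with the number of relatives, the names, the fares and the cabin classes. The comparison shows that some tickets were family or group tickets, and the listed fare covers everyone on such a ticket. So we divide each group ticket's fare evenly among its holders.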

combined_train_test['Group_Ticket'] = combined_train_test['Fare'].groupby(by=combined_train_test['Ticket']).transform('count')

combined_train_test['Fare'] = combined_train_test['Fare'] / combined_train_test['Group_Ticket']

# combined_train_test.drop(['Group_Ticket'], axis=1, inplace=True)

combined_train_test['Group_Ticket']

0 1

1 2

2 1

3 2

4 1

..

413 1

414 3

415 1

416 1

417 3

Name: Group_Ticket, Length: 1309, dtype: int64

combined_train_test.drop(['Group_Ticket'], axis=1, inplace=True)
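Here is a toy version of the group-ticket split. It makes the same assumption as the text above: the fare listed for a shared ticket is the total for everyone on it. The ticket ids and fares are made up.

```python
import pandas as pd

# Illustrative data: ticket 'T1' is shared by three passengers
df = pd.DataFrame({'Ticket': ['T1', 'T1', 'T1', 'T2'],
                   'Fare': [30.0, 30.0, 30.0, 8.0]})

# Number of passengers per ticket, broadcast back to each row
df['Group_Ticket'] = df.groupby('Ticket')['Fare'].transform('count')

# Per-person fare
df['Fare'] = df['Fare'] / df['Group_Ticket']
print(df['Fare'].tolist())  # [10.0, 10.0, 10.0, 8.0]
```

transform('count') counts only non-null fares. This is one reason the missing Fare is filled before this step.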

Use binning to split the fares into levels:

combined_train_test['Fare_bin'] = pd.qcut(combined_train_test['Fare'], 5)

As before, the 5 fare levels can be split into dummy columns:

combined_train_test['Fare_bin_id'] = pd.factorize(combined_train_test['Fare_bin'])[0]

fare_bin_dummies_df = pd.get_dummies(combined_train_test['Fare_bin_id']).rename(columns=lambda x: 'Fare_' + str(x))

combined_train_test = pd.concat([combined_train_test, fare_bin_dummies_df], axis=1)

combined_train_test.drop(['Fare_bin'], axis=1, inplace=True)

combined_train_test

| | Age | Cabin | Embarked | Fare | Name | Parch | PassengerId | Pclass | Sex | SibSp | ... | Title_3 | Title_4 | Title_5 | Name_length | Fare_bin_id | Fare_0 | Fare_1 | Fare_2 | Fare_3 | Fare_4 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 22.0 | NaN | 0 | 7.250000 | Braund, Mr. Owen Harris | 0 | 1 | 3 | 0 | 1 | ... | 0 | 0 | 0 | 23 | 0 | 1 | 0 | 0 | 0 | 0 |
| 1 | 38.0 | C85 | 1 | 35.641650 | Cumings, Mrs. John Bradley (Florence Briggs Th... | 0 | 2 | 1 | 1 | 1 | ... | 0 | 0 | 0 | 51 | 1 | 0 | 1 | 0 | 0 | 0 |
| 2 | 26.0 | NaN | 0 | 7.925000 | Heikkinen, Miss. Laina | 0 | 3 | 3 | 1 | 0 | ... | 0 | 0 | 0 | 22 | 2 | 0 | 0 | 1 | 0 | 0 |
| 3 | 35.0 | C123 | 0 | 26.550000 | Futrelle, Mrs. Jacques Heath (Lily May Peel) | 0 | 4 | 1 | 1 | 1 | ... | 0 | 0 | 0 | 44 | 1 | 0 | 1 | 0 | 0 | 0 |
| 4 | 35.0 | NaN | 0 | 8.050000 | Allen, Mr. William Henry | 0 | 5 | 3 | 0 | 0 | ... | 0 | 0 | 0 | 24 | 2 | 0 | 0 | 1 | 0 | 0 |
| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |
| 413 | NaN | NaN | 0 | 8.050000 | Spector, Mr. Woolf | 0 | 1305 | 3 | 0 | 0 | ... | 0 | 0 | 0 | 18 | 2 | 0 | 0 | 1 | 0 | 0 |
| 414 | 39.0 | C105 | 1 | 36.300000 | Oliva y Ocana, Dona. Fermina | 0 | 1306 | 1 | 1 | 0 | ... | 0 | 1 | 0 | 28 | 1 | 0 | 1 | 0 | 0 | 0 |
| 415 | 38.5 | NaN | 0 | 7.250000 | Saether, Mr. Simon Sivertsen | 0 | 1307 | 3 | 0 | 0 | ... | 0 | 0 | 0 | 28 | 0 | 1 | 0 | 0 | 0 | 0 |
| 416 | NaN | NaN | 0 | 8.050000 | Ware, Mr. Frederick | 0 | 1308 | 3 | 0 | 0 | ... | 0 | 0 | 0 | 19 | 2 | 0 | 0 | 1 | 0 | 0 |
| 417 | NaN | NaN | 1 | 7.452767 | Peter, Master. Michael J | 1 | 1309 | 3 | 0 | 1 | ... | 1 | 0 | 0 | 24 | 0 | 1 | 0 | 0 | 0 | 0 |

1309 rows × 31 columns
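pd.qcut places the cut points at quantiles, so each bin holds roughly the same number of rows. This is why it suits a skewed column like Fare.

This sketch uses ten made-up fares. It also shows labels=False, which numbers the bins from cheapest to most expensive. pd.factorize, used above, numbers them in order of first appearance instead.

```python
import pandas as pd

# Illustrative, skewed fare values
fares = pd.Series([5, 7, 8, 10, 12, 20, 30, 45, 60, 100])

# Equal-frequency bins: cut points are the 20%, 40%, 60% and 80% quantiles
bins = pd.qcut(fares, 5)
print(bins.value_counts().sort_index().tolist())  # [2, 2, 2, 2, 2]

# labels=False gives ordinal bin ids (0 = cheapest bin)
print(pd.qcut(fares, 5, labels=False).tolist())   # [0, 0, 1, 1, 2, 2, 3, 3, 4, 4]
```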

(5) Pclass

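Pclass itself needs no further processing. It only has to be converted to dummy form.

For a finer analysis, however, we assume that within each class the fare also reflects where the cabin was located. Cabin location may in turn relate to the order of evacuation. So within each class we separate high-fare passengers from low-fare passengers.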

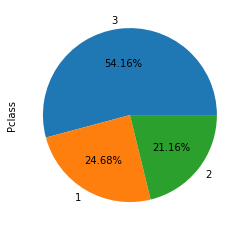

combined_train_test['Pclass'].value_counts().plot.pie(autopct = '%1.2f%%')

<matplotlib.axes._subplots.AxesSubplot at 0x1a2bba76748>

from sklearn.preprocessing import LabelEncoder

# Build the Pclass fare category: within each class, a fare at or below the class mean is "Low", otherwise "High"
def pclass_fare_category(df, pclass1_mean_fare, pclass2_mean_fare, pclass3_mean_fare):
    if df['Pclass'] == 1:
        if df['Fare'] <= pclass1_mean_fare:
            return 'Pclass1_Low'
        else:
            return 'Pclass1_High'
    elif df['Pclass'] == 2:
        if df['Fare'] <= pclass2_mean_fare:
            return 'Pclass2_Low'
        else:
            return 'Pclass2_High'
    elif df['Pclass'] == 3:
        if df['Fare'] <= pclass3_mean_fare:
            return 'Pclass3_Low'
        else:
            return 'Pclass3_High'

Pclass1_mean_fare = combined_train_test['Fare'].groupby(by=combined_train_test['Pclass']).mean().get([1]).values[0]

Pclass2_mean_fare = combined_train_test['Fare'].groupby(by=combined_train_test['Pclass']).mean().get([2]).values[0]

Pclass3_mean_fare = combined_train_test['Fare'].groupby(by=combined_train_test['Pclass']).mean().get([3]).values[0]

# Build the Pclass_Fare_Category column row by row

combined_train_test['Pclass_Fare_Category'] = combined_train_test.apply(pclass_fare_category, args=(Pclass1_mean_fare, Pclass2_mean_fare, Pclass3_mean_fare), axis=1)

pclass_level = LabelEncoder()

# Fit the encoder on all six category labels
pclass_level.fit(np.array(['Pclass1_Low', 'Pclass1_High', 'Pclass2_Low', 'Pclass2_High', 'Pclass3_Low', 'Pclass3_High']))

LabelEncoder()

# Convert the labels to integers

combined_train_test['Pclass_Fare_Category'] = pclass_level.transform(combined_train_test['Pclass_Fare_Category'])

# Dummy (one-hot) conversion

pclass_dummies_df = pd.get_dummies(combined_train_test['Pclass_Fare_Category']).rename(columns=lambda x: 'Pclass_' + str(x))

combined_train_test = pd.concat([combined_train_test, pclass_dummies_df], axis=1)
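The row-wise apply above can also be written in vectorized form. This is an alternative sketch, not the original author's code. It applies the same rule (a fare at or below its class mean is "Low") to a small made-up frame, using groupby().transform and np.where.

```python
import numpy as np
import pandas as pd

# Illustrative data
df = pd.DataFrame({'Pclass': [1, 1, 3, 3, 3],
                   'Fare': [80.0, 40.0, 7.0, 8.0, 12.0]})

# Mean fare of each row's own class (class 1: 60.0, class 3: 9.0)
class_mean = df.groupby('Pclass')['Fare'].transform('mean')

# Same rule as pclass_fare_category: <= class mean -> Low, otherwise High
level = pd.Series(np.where(df['Fare'] <= class_mean, 'Low', 'High'), index=df.index)
df['Pclass_Fare_Category'] = 'Pclass' + df['Pclass'].astype(str) + '_' + level
print(df['Pclass_Fare_Category'].tolist())
```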

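Tip: about one-hot encoding

Converting multi-valued categorical features to integers and to one-hot codes

Some models, such as those in scikit-learn, require every feature to be numeric. Text-valued features cannot be used for training as they are.

In that case the data must first be converted to numbers.

Here are several ways to do this in Python:

- Integer (label) encoding:
  a) use LabelEncoder() from sklearn to convert categories into integer features;
  b) use the pd.factorize() function.

- One-hot encoding: produces an (n_examples × n_classes) 0/1 matrix in which each sample has exactly one 1:
  a) use get_dummies in pandas;
  b) use OneHotEncoder() or LabelBinarizer() in sklearn (in older sklearn versions, OneHotEncoder accepted only integers, so string categories were first converted with LabelEncoder()).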
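The two integer encoders above assign codes in different orders. LabelEncoder sorts the classes alphabetically, while pd.factorize numbers values in order of first appearance.

This short sketch uses the family-size labels from this section on a made-up Series. It assumes scikit-learn is installed.

```python
import pandas as pd
from sklearn.preprocessing import LabelEncoder

sizes = pd.Series(['Small_Family', 'Single', 'Large_Family', 'Single'])

# LabelEncoder: classes_ are sorted, so codes follow alphabetical order
le = LabelEncoder().fit(['Single', 'Small_Family', 'Large_Family'])
print(list(le.classes_))             # ['Large_Family', 'Single', 'Small_Family']
print(le.transform(sizes).tolist())  # [2, 1, 0, 1]

# pd.factorize: codes follow the order of first appearance
codes, uniques = pd.factorize(sizes)
print(codes.tolist(), list(uniques))  # [0, 1, 2, 1] ['Small_Family', 'Single', 'Large_Family']
```

The alphabetical order explains the values in the correlation table further below. Single is encoded as 1 and Small_Family as 2 in Family_Size_Category, and Pclass3_Low becomes 5 in Pclass_Fare_Category.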

We also factorize the Pclass feature:

np.array(combined_train_test['Pclass'])

array([3, 1, 3, ..., 3, 3, 3], dtype=int64)

combined_train_test['Pclass'] = pd.factorize(combined_train_test['Pclass'])[0]

np.array(combined_train_test['Pclass'])

array([0, 1, 0, ..., 0, 0, 0], dtype=int64)

(6) Parch and SibSp

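The earlier analysis showed that having no relatives aboard, or too many, affects Survived. So we combine the two columns into a Family_Size feature and keep both original columns as well.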

def family_size_category(family_size):
    if family_size <= 1:
        return 'Single'
    elif family_size <= 4:
        return 'Small_Family'
    else:
        return 'Large_Family'

combined_train_test['Family_Size'] = combined_train_test['Parch'] + combined_train_test['SibSp'] + 1

combined_train_test['Family_Size_Category'] = combined_train_test['Family_Size'].map(family_size_category)

le_family = LabelEncoder()

# Fit the encoder on the three category labels

le_family.fit(np.array(['Single', 'Small_Family', 'Large_Family']))

combined_train_test['Family_Size_Category'] = le_family.transform(combined_train_test['Family_Size_Category'])

family_size_dummies_df = pd.get_dummies(combined_train_test['Family_Size_Category'],

prefix=combined_train_test[['Family_Size_Category']].columns[0])

combined_train_test = pd.concat([combined_train_test, family_size_dummies_df], axis=1)
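This sketch computes the family size and its category for a few made-up passengers. Here pd.cut replaces the family_size_category function as an alternative. The bins (0, 1], (1, 4] and (4, inf) reproduce the same thresholds.

```python
import pandas as pd

# Illustrative passengers
df = pd.DataFrame({'SibSp': [0, 1, 1, 4], 'Parch': [0, 0, 2, 2]})

# +1 counts the passenger themself
df['Family_Size'] = df['Parch'] + df['SibSp'] + 1

# Same thresholds as family_size_category: <=1 Single, 2-4 Small_Family, >=5 Large_Family
df['Family_Size_Category'] = pd.cut(df['Family_Size'], bins=[0, 1, 4, float('inf')],
                                    labels=['Single', 'Small_Family', 'Large_Family'])
print(df['Family_Size'].tolist())  # [1, 2, 4, 7]
print(df['Family_Size_Category'].astype(str).tolist())
```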

(7) Age

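Age has many missing values, so simply filling it with the mode or the mean of Age is not appropriate.

There are two common ways to fill Age. The first uses the mean age of each title group (Mr, Master, Miss, etc.). The second combines several features without missing values, such as Sex, Title and Pclass, and trains a machine learning model to predict Age.

Here we use the second approach. Age is the target: rows with a known Age form the training set, and rows with a missing Age form the test set.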

missing_age_df = pd.DataFrame(combined_train_test[

['Age', 'Embarked', 'Sex', 'Title', 'Name_length', 'Family_Size', 'Family_Size_Category','Fare', 'Fare_bin_id', 'Pclass']])

missing_age_train = missing_age_df[missing_age_df['Age'].notnull()]

missing_age_test = missing_age_df[missing_age_df['Age'].isnull()].copy()  # explicit copy: new columns are added to it below

missing_age_test.head()

| | Age | Embarked | Sex | Title | Name_length | Family_Size | Family_Size_Category | Fare | Fare_bin_id | Pclass |
|---|---|---|---|---|---|---|---|---|---|---|
| 5 | NaN | 2 | 0 | 0 | 16 | 1 | 1 | 8.4583 | 2 | 0 |
| 17 | NaN | 0 | 0 | 0 | 28 | 1 | 1 | 13.0000 | 3 | 2 |
| 19 | NaN | 1 | 1 | 1 | 23 | 1 | 1 | 7.2250 | 4 | 0 |
| 26 | NaN | 1 | 0 | 0 | 23 | 1 | 1 | 7.2250 | 4 | 0 |
| 28 | NaN | 2 | 1 | 2 | 29 | 1 | 1 | 7.8792 | 0 | 0 |

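To predict Age we can train several models and then combine their predictions to improve accuracy:

1) Bagging + decision trees = random forest

2) AdaBoost + decision trees = boosted trees

3) Gradient Boosting + decision trees = GBDT

Putting it together (the code below combines a GBDT and a random forest):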

import numpy as np
from sklearn import ensemble
from sklearn import model_selection
from sklearn.ensemble import GradientBoostingRegressor
from sklearn.ensemble import RandomForestRegressor

def fill_missing_age(missing_age_train, missing_age_test):
    missing_age_X_train = missing_age_train.drop(['Age'], axis=1)
    missing_age_Y_train = missing_age_train['Age']
    missing_age_X_test = missing_age_test.drop(['Age'], axis=1)

    # model 1 gbm
    gbm_reg = GradientBoostingRegressor(random_state=42)
    gbm_reg_param_grid = {'n_estimators': [2000], 'max_depth': [4], 'learning_rate': [0.01], 'max_features': [3]}
    gbm_reg_grid = model_selection.GridSearchCV(gbm_reg, gbm_reg_param_grid, cv=10, n_jobs=25, verbose=1, scoring='neg_mean_squared_error')
    gbm_reg_grid.fit(missing_age_X_train, missing_age_Y_train)
    print('Age feature Best GB Params:' + str(gbm_reg_grid.best_params_))
    print('Age feature Best GB Score:' + str(gbm_reg_grid.best_score_))
    print('GB Train Error for "Age" Feature Regressor:' + str(gbm_reg_grid.score(missing_age_X_train, missing_age_Y_train)))
    missing_age_test.loc[:, 'Age_GB'] = gbm_reg_grid.predict(missing_age_X_test)
    print(missing_age_test['Age_GB'][:4])

    # model 2 rf
    rf_reg = RandomForestRegressor()
    rf_reg_param_grid = {'n_estimators': [200], 'max_depth': [5], 'random_state': [0]}
    rf_reg_grid = model_selection.GridSearchCV(rf_reg, rf_reg_param_grid, cv=10, n_jobs=25, verbose=1, scoring='neg_mean_squared_error')
    rf_reg_grid.fit(missing_age_X_train, missing_age_Y_train)
    print('Age feature Best RF Params:' + str(rf_reg_grid.best_params_))
    print('Age feature Best RF Score:' + str(rf_reg_grid.best_score_))
    print('RF Train Error for "Age" Feature Regressor' + str(rf_reg_grid.score(missing_age_X_train, missing_age_Y_train)))
    missing_age_test.loc[:, 'Age_RF'] = rf_reg_grid.predict(missing_age_X_test)
    print(missing_age_test['Age_RF'][:4])

    # two models merge
    print('shape1', missing_age_test['Age'].shape, missing_age_test[['Age_GB', 'Age_RF']].mode(axis=1).shape)
    # missing_age_test['Age'] = missing_age_test[['Age_GB', 'Age_LR']].mode(axis=1)
    # Average the two models row by row; np.mean([...]) without an axis would collapse all predictions into one scalar
    missing_age_test.loc[:, 'Age'] = missing_age_test[['Age_GB', 'Age_RF']].mean(axis=1)
    print(missing_age_test['Age'][:4])
    missing_age_test.drop(['Age_GB', 'Age_RF'], axis=1, inplace=True)
    return missing_age_test

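Fill the missing Age values with the combined models' predictions:

# .values assigns by position: combined_train_test has duplicated index labels (train 0-890 and test 0-417), so label alignment is avoided
combined_train_test.loc[combined_train_test['Age'].isnull(), 'Age'] = fill_missing_age(missing_age_train, missing_age_test)['Age'].values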

Fitting 10 folds for each of 1 candidates, totalling 10 fits

[Parallel(n_jobs=25)]: Using backend LokyBackend with 25 concurrent workers.

[Parallel(n_jobs=25)]: Done 5 out of 10 | elapsed: 14.3s remaining: 14.3s

[Parallel(n_jobs=25)]: Done 10 out of 10 | elapsed: 17.1s finished

C:\ProgramData\Anaconda3\lib\site-packages\sklearn\model_selection\_search.py:814: DeprecationWarning: The default of the `iid` parameter will change from True to False in version 0.22 and will be removed in 0.24. This will change numeric results when test-set sizes are unequal.

DeprecationWarning)

Age feature Best GB Params:{'learning_rate': 0.01, 'max_depth': 4, 'max_features': 3, 'n_estimators': 2000}

Age feature Best GB Score:-130.2956775989383

GB Train Error for "Age" Feature Regressor:-64.65669617233556

5 35.773942

17 31.489153

19 34.113840

26 28.621281

Name: Age_GB, dtype: float64

Fitting 10 folds for each of 1 candidates, totalling 10 fits

[Parallel(n_jobs=25)]: Using backend LokyBackend with 25 concurrent workers.

[Parallel(n_jobs=25)]: Done 5 out of 10 | elapsed: 7.8s remaining: 7.8s

[Parallel(n_jobs=25)]: Done 10 out of 10 | elapsed: 10.1s finished

C:\ProgramData\Anaconda3\lib\site-packages\sklearn\model_selection\_search.py:814: DeprecationWarning: The default of the `iid` parameter will change from True to False in version 0.22 and will be removed in 0.24. This will change numeric results when test-set sizes are unequal.

DeprecationWarning)

Age feature Best RF Params:{'max_depth': 5, 'n_estimators': 200, 'random_state': 0}

Age feature Best RF Score:-119.09495605170706

RF Train Error for "Age" Feature Regressor-96.06031484477619

5 33.459421

17 33.076798

19 34.855942

26 28.146718

Name: Age_RF, dtype: float64

shape1 (263,) (263, 2)

5 30.000675

17 30.000675

19 30.000675

26 30.000675

Name: Age, dtype: float64
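Every filled Age printed above is the same value, 30.000675. The original merge line called np.mean([missing_age_test['Age_GB'], missing_age_test['Age_RF']]) without an axis. NumPy then flattens both prediction columns and returns a single scalar, and that scalar is assigned to every missing row.

The toy arrays below are illustrative and show the difference. Passing axis=0 here, or using DataFrame.mean(axis=1) on the two columns, averages each passenger's two predictions instead.

```python
import numpy as np

# Illustrative predictions of two models for two passengers
age_gb = np.array([36.0, 30.0])
age_rf = np.array([34.0, 32.0])

# No axis: the 2x2 stack is flattened and one number comes back
print(np.mean([age_gb, age_rf]))          # 33.0

# axis=0: one average per passenger
print(np.mean([age_gb, age_rf], axis=0))  # [35. 31.]
```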

missing_age_X_train = missing_age_train.drop(['Age'], axis=1)

missing_age_Y_train = missing_age_train['Age']

missing_age_X_test = missing_age_test.drop(['Age'], axis=1)

# model 1 gbm

gbm_reg = GradientBoostingRegressor(random_state=42)

gbm_reg_param_grid = {'n_estimators': [2000], 'max_depth': [4], 'learning_rate': [0.01], 'max_features': [3]}

gbm_reg_grid = model_selection.GridSearchCV(gbm_reg, gbm_reg_param_grid, cv=10, n_jobs=25, verbose=1, scoring='neg_mean_squared_error')

gbm_reg_grid

GridSearchCV(cv=10, error_score='raise-deprecating',

estimator=GradientBoostingRegressor(alpha=0.9,

criterion='friedman_mse',

init=None, learning_rate=0.1,

loss='ls', max_depth=3,

max_features=None,

max_leaf_nodes=None,

min_impurity_decrease=0.0,

min_impurity_split=None,

min_samples_leaf=1,

min_samples_split=2,

min_weight_fraction_leaf=0.0,

n_estimators=100,

n_iter_no_change=None,

presort='auto',

random_state=42, subsample=1.0,

tol=0.0001,

validation_fraction=0.1,

verbose=0, warm_start=False),

iid='warn', n_jobs=25,

param_grid={'learning_rate': [0.01], 'max_depth': [4],

'max_features': [3], 'n_estimators': [2000]},

pre_dispatch='2*n_jobs', refit=True, return_train_score=False,

scoring='neg_mean_squared_error', verbose=1)

(8) Ticket

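Ticket values come in two forms: some carry a letter prefix and some are purely numeric. The prefixes may largely indicate the cabin class or the cabin location, which could also affect Survived. So we extract the letter prefix of each ticket and put all purely numeric tickets into a single class (U0).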

combined_train_test['Ticket_Letter'] = combined_train_test['Ticket'].str.split().str[0]

combined_train_test['Ticket_Letter'].apply(lambda x: 'U0' if x.isnumeric() else x)

0 A/5

1 PC

2 STON/O2.

3 U0

4 U0

...

413 A.5.

414 PC

415 SOTON/O.Q.

416 U0

417 U0

Name: Ticket_Letter, Length: 1309, dtype: object

combined_train_test['Ticket_Letter'] = combined_train_test['Ticket'].str.split().str[0]

combined_train_test['Ticket_Letter'] = combined_train_test['Ticket_Letter'].apply(lambda x: 'U0' if x.isnumeric() else x)

# To extract the numeric part as well, you could do the following; here we simply treat all numeric tickets as one class.

# combined_train_test['Ticket_Number'] = combined_train_test['Ticket'].apply(lambda x: pd.to_numeric(x, errors='coerce'))

# combined_train_test['Ticket_Number'].fillna(0, inplace=True)

# Factorize Ticket_Letter

combined_train_test['Ticket_Letter'] = pd.factorize(combined_train_test['Ticket_Letter'])[0]
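This sketch runs the same prefix extraction on the first five Ticket values from the tables in this section. It reproduces the Ticket_Letter output shown above for those rows.

```python
import pandas as pd

tickets = pd.Series(['A/5 21171', 'PC 17599', 'STON/O2. 3101282', '113803', '373450'])

# First whitespace-separated token; purely numeric tickets have no prefix
letter = tickets.str.split().str[0]

# Collapse all purely numeric tickets into the single class 'U0'
letter = letter.apply(lambda x: 'U0' if x.isnumeric() else x)
print(letter.tolist())  # ['A/5', 'PC', 'STON/O2.', 'U0', 'U0']
```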

(9) Cabin

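Cabin has far too many missing values to analyse or predict reliably, so we could simply drop it. However, the earlier analysis showed that whether a Cabin value is present is itself related to the survival rate. So for now we keep the feature and reduce it to two classes: present and absent.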

combined_train_test.loc[combined_train_test.Cabin.isnull(), 'Cabin'] = 'U0'

combined_train_test['Cabin'] = combined_train_test['Cabin'].apply(lambda x: 0 if x == 'U0' else 1)

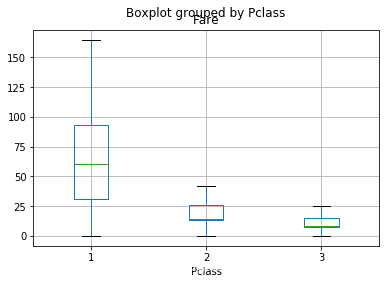

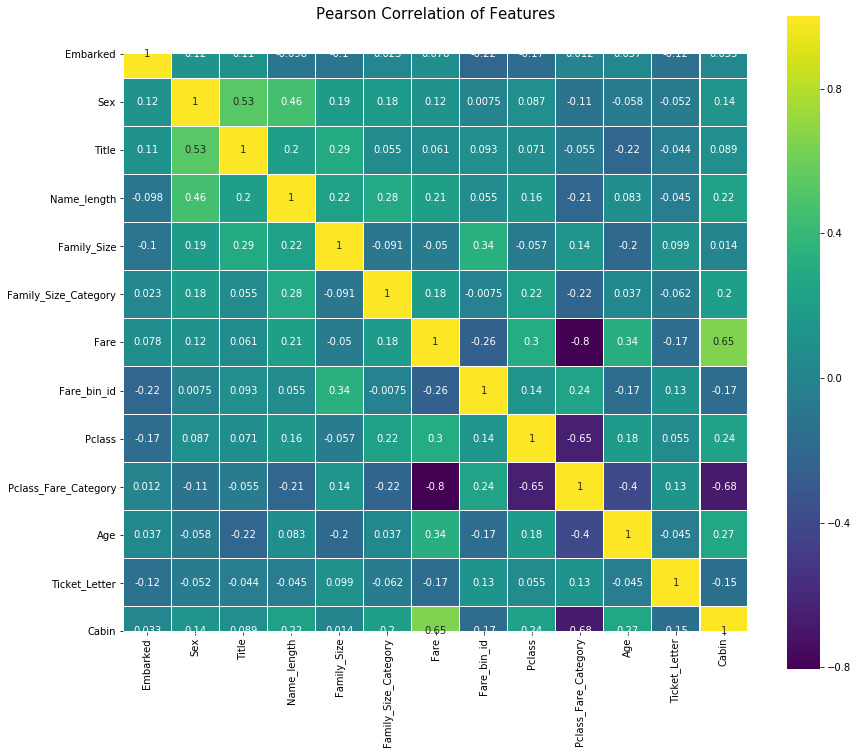

Correlation between features

We select some of the main features and plot their pairwise correlations:

Correlation = pd.DataFrame(combined_train_test[

['Embarked', 'Sex', 'Title', 'Name_length', 'Family_Size', 'Family_Size_Category','Fare', 'Fare_bin_id', 'Pclass',

'Pclass_Fare_Category', 'Age', 'Ticket_Letter', 'Cabin']])

Correlation

| | Embarked | Sex | Title | Name_length | Family_Size | Family_Size_Category | Fare | Fare_bin_id | Pclass | Pclass_Fare_Category | Age | Ticket_Letter | Cabin |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 0 | 0 | 0 | 23 | 2 | 2 | 7.250000 | 0 | 0 | 5 | 22.000000 | 0 | 0 |
| 1 | 1 | 1 | 1 | 51 | 2 | 2 | 35.641650 | 1 | 1 | 0 | 38.000000 | 1 | 1 |
| 2 | 0 | 1 | 2 | 22 | 1 | 1 | 7.925000 | 2 | 0 | 4 | 26.000000 | 2 | 0 |
| 3 | 0 | 1 | 1 | 44 | 2 | 2 | 26.550000 | 1 | 1 | 1 | 35.000000 | 3 | 1 |
| 4 | 0 | 0 | 0 | 24 | 1 | 1 | 8.050000 | 2 | 0 | 4 | 35.000000 | 3 | 0 |
| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |
| 413 | 0 | 0 | 0 | 18 | 1 | 1 | 8.050000 | 2 | 0 | 4 | 30.000675 | 24 | 0 |
| 414 | 1 | 1 | 4 | 28 | 1 | 1 | 36.300000 | 1 | 1 | 0 | 39.000000 | 1 | 1 |
| 415 | 0 | 0 | 0 | 28 | 1 | 1 | 7.250000 | 0 | 0 | 5 | 38.500000 | 21 | 0 |
| 416 | 0 | 0 | 0 | 19 | 1 | 1 | 8.050000 | 2 | 0 | 4 | 30.000675 | 3 | 0 |
| 417 | 1 | 0 | 3 | 24 | 3 | 2 | 7.452767 | 0 | 0 | 4 | 30.000675 | 3 | 0 |

1309 rows × 13 columns

colormap = plt.cm.viridis

plt.figure(figsize=(14,12))

plt.title('Pearson Correlation of Features', y=1.05, size=15)

sns.heatmap(Correlation.astype(float).corr(),linewidths=0.1,vmax=1.0, square=True, cmap=colormap, linecolor='white', annot=True)

<matplotlib.axes._subplots.AxesSubplot at 0x1a2ba56a888>

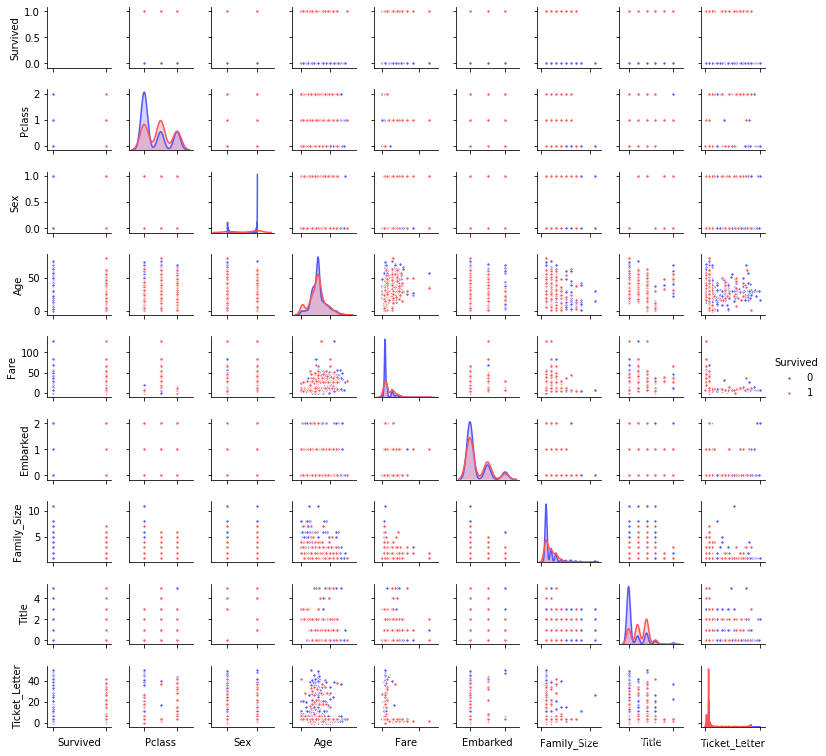

Pairwise distributions of the features

# seaborn >= 0.9 renamed size to height, and >= 0.11 replaced shade with fill in the KDE keyword arguments
g = sns.pairplot(combined_train_test[['Survived', 'Pclass', 'Sex', 'Age', 'Fare', 'Embarked',
                                      'Family_Size', 'Title', 'Ticket_Letter']], hue='Survived', palette='seismic', height=1.2, diag_kind='kde', diag_kws=dict(fill=True), plot_kws=dict(s=10))

g.set(xticklabels=[])

<seaborn.axisgrid.PairGrid at 0x1a2ba2c6508>

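Some processing before the data is fed into the models:

1. Standardize some of the features

Here we standardize Age, Fare and Name_length to zero mean and unit variance:

from sklearn import preprocessing

scale_age_fare = preprocessing.StandardScaler().fit(combined_train_test[['Age','Fare', 'Name_length']])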

scale_age_fare

StandardScaler(copy=True, with_mean=True, with_std=True)

combined_train_test[['Age','Fare', 'Name_length']] = scale_age_fare.transform(combined_train_test[['Age','Fare', 'Name_length']])

combined_train_test[['Age','Fare', 'Name_length']]

| | Age | Fare | Name_length |
|---|---|---|---|
| 0 | -0.613832 | -0.554177 | -0.434672 |
| 1 | 0.628562 | 1.541869 | 2.511806 |
| 2 | -0.303234 | -0.504344 | -0.539904 |
| 3 | 0.395613 | 0.870667 | 1.775186 |
| 4 | 0.395613 | -0.495116 | -0.329441 |
| ... | ... | ... | ... |
| 413 | 0.007417 | -0.495116 | -0.960829 |
| 414 | 0.706211 | 1.590472 | 0.091485 |
| 415 | 0.667387 | -0.554177 | 0.091485 |
| 416 | 0.007417 | -0.495116 | -0.855598 |
| 417 | 0.007417 | -0.539208 | -0.329441 |

1309 rows × 3 columns
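StandardScaler computes z = (x - mean) / std for each column, using the population standard deviation (ddof=0).

This minimal NumPy sketch reproduces that computation on four illustrative ages. It does not call sklearn.

```python
import numpy as np

# Illustrative ages
x = np.array([22.0, 38.0, 26.0, 35.0])

# z-score with the population standard deviation (ddof=0), as StandardScaler does
z = (x - x.mean()) / x.std()
print(z.round(3))

# After scaling, the column has mean ~0 and standard deviation ~1
print(z.mean(), z.std())
```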

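2. Drop unused features

The feature engineering above derived many new features from the original columns for use in the models. We now remove the original columns we no longer need, along with the non-numeric ones.

First, back up the data for later analysis. Plain assignment would only create a second name for the same DataFrame, so copy() is needed:

combined_data_backup = combined_train_test.copy()

pd.set_option('display.max_columns', 50)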

combined_train_test

| | Age | Cabin | Embarked | Fare | Name | Parch | PassengerId | Pclass | Sex | SibSp | Survived | Ticket | Embarked_0 | Embarked_1 | Embarked_2 | Sex_0 | Sex_1 | Title | Title_0 | Title_1 | Title_2 | Title_3 | Title_4 | Title_5 | Name_length | Fare_bin_id | Fare_0 | Fare_1 | Fare_2 | Fare_3 | Fare_4 | Pclass_Fare_Category | Pclass_0 | Pclass_1 | Pclass_2 | Pclass_3 | Pclass_4 | Pclass_5 | Family_Size | Family_Size_Category | Family_Size_Category_0 | Family_Size_Category_1 | Family_Size_Category_2 | Ticket_Letter |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | -0.613832 | 0 | 0 | -0.554177 | Braund, Mr. Owen Harris | 0 | 1 | 0 | 0 | 1 | 0 | A/5 21171 | 1 | 0 | 0 | 1 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | -0.434672 | 0 | 1 | 0 | 0 | 0 | 0 | 5 | 0 | 0 | 0 | 0 | 0 | 1 | 2 | 2 | 0 | 0 | 1 | 0 |
| 1 | 0.628562 | 1 | 1 | 1.541869 | Cumings, Mrs. John Bradley (Florence Briggs Th... | 0 | 2 | 1 | 1 | 1 | 1 | PC 17599 | 0 | 1 | 0 | 0 | 1 | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 2.511806 | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 2 | 2 | 0 | 0 | 1 | 1 |
| 2 | -0.303234 | 0 | 0 | -0.504344 | Heikkinen, Miss. Laina | 0 | 3 | 0 | 1 | 0 | 1 | STON/O2. 3101282 | 1 | 0 | 0 | 0 | 1 | 2 | 0 | 0 | 1 | 0 | 0 | 0 | -0.539904 | 2 | 0 | 0 | 1 | 0 | 0 | 4 | 0 | 0 | 0 | 0 | 1 | 0 | 1 | 1 | 0 | 1 | 0 | 2 |
| 3 | 0.395613 | 1 | 0 | 0.870667 | Futrelle, Mrs. Jacques Heath (Lily May Peel) | 0 | 4 | 1 | 1 | 1 | 1 | 113803 | 1 | 0 | 0 | 0 | 1 | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 1.775186 | 1 | 0 | 1 | 0 | 0 | 0 | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 2 | 2 | 0 | 0 | 1 | 3 |
| 4 | 0.395613 | 0 | 0 | -0.495116 | Allen, Mr. William Henry | 0 | 5 | 0 | 0 | 0 | 0 | 373450 | 1 | 0 | 0 | 1 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | -0.329441 | 2 | 0 | 0 | 1 | 0 | 0 | 4 | 0 | 0 | 0 | 0 | 1 | 0 | 1 | 1 | 0 | 1 | 0 | 3 |
| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |
| 413 | 0.007417 | 0 | 0 | -0.495116 | Spector, Mr. Woolf | 0 | 1305 | 0 | 0 | 0 | 0 | A.5. 3236 | 1 | 0 | 0 | 1 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | -0.960829 | 2 | 0 | 0 | 1 | 0 | 0 | 4 | 0 | 0 | 0 | 0 | 1 | 0 | 1 | 1 | 0 | 1 | 0 | 24 |
| 414 | 0.706211 | 1 | 1 | 1.590472 | Oliva y Ocana, Dona. Fermina | 0 | 1306 | 1 | 1 | 0 | 0 | PC 17758 | 0 | 1 | 0 | 0 | 1 | 4 | 0 | 0 | 0 | 0 | 1 | 0 | 0.091485 | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 1 | 1 | 0 | 1 | 0 | 1 |
| 415 | 0.667387 | 0 | 0 | -0.554177 | Saether, Mr. Simon Sivertsen | 0 | 1307 | 0 | 0 | 0 | 0 | SOTON/O.Q. 3101262 | 1 | 0 | 0 | 1 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0.091485 | 0 | 1 | 0 | 0 | 0 | 0 | 5 | 0 | 0 | 0 | 0 | 0 | 1 | 1 | 1 | 0 | 1 | 0 | 21 |
| 416 | 0.007417 | 0 | 0 | -0.495116 | Ware, Mr. Frederick | 0 | 1308 | 0 | 0 | 0 | 0 | 359309 | 1 | 0 | 0 | 1 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | -0.855598 | 2 | 0 | 0 | 1 | 0 | 0 | 4 | 0 | 0 | 0 | 0 | 1 | 0 | 1 | 1 | 0 | 1 | 0 | 3 |
| 417 | 0.007417 | 0 | 1 | -0.539208 | Peter, Master. Michael J | 1 | 1309 | 0 | 0 | 1 | 0 | 2668 | 0 | 1 | 0 | 1 | 0 | 3 | 0 | 0 | 0 | 1 | 0 | 0 | -0.329441 | 0 | 1 | 0 | 0 | 0 | 0 | 4 | 0 | 0 | 0 | 0 | 1 | 0 | 3 | 2 | 0 | 0 | 1 | 3 |

1309 rows × 44 columns

combined_train_test[['PassengerId', 'Embarked', 'Sex', 'Name', 'Title', 'Fare_bin_id', 'Pclass_Fare_Category',

'Parch', 'SibSp', 'Family_Size_Category', 'Ticket']]

| | PassengerId | Embarked | Sex | Name | Title | Fare_bin_id | Pclass_Fare_Category | Parch | SibSp | Family_Size_Category | Ticket |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 1 | 0 | 0 | Braund, Mr. Owen Harris | 0 | 0 | 5 | 0 | 1 | 2 | A/5 21171 |
| 1 | 2 | 1 | 1 | Cumings, Mrs. John Bradley (Florence Briggs Th... | 1 | 1 | 0 | 0 | 1 | 2 | PC 17599 |
| 2 | 3 | 0 | 1 | Heikkinen, Miss. Laina | 2 | 2 | 4 | 0 | 0 | 1 | STON/O2. 3101282 |
| 3 | 4 | 0 | 1 | Futrelle, Mrs. Jacques Heath (Lily May Peel) | 1 | 1 | 1 | 0 | 1 | 2 | 113803 |
| 4 | 5 | 0 | 0 | Allen, Mr. William Henry | 0 | 2 | 4 | 0 | 0 | 1 | 373450 |
| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |
| 413 | 1305 | 0 | 0 | Spector, Mr. Woolf | 0 | 2 | 4 | 0 | 0 | 1 | A.5. 3236 |
| 414 | 1306 | 1 | 1 | Oliva y Ocana, Dona. Fermina | 4 | 1 | 0 | 0 | 0 | 1 | PC 17758 |
| 415 | 1307 | 0 | 0 | Saether, Mr. Simon Sivertsen | 0 | 0 | 5 | 0 | 0 | 1 | SOTON/O.Q. 3101262 |
| 416 | 1308 | 0 | 0 | Ware, Mr. Frederick | 0 | 2 | 4 | 0 | 0 | 1 | 359309 |
| 417 | 1309 | 1 | 0 | Peter, Master. Michael J | 3 | 0 | 4 | 1 | 1 | 2 | 2668 |

1309 rows × 11 columns

combined_train_test.drop(['PassengerId', 'Embarked', 'Sex', 'Name', 'Title', 'Fare_bin_id', 'Pclass_Fare_Category',

'Parch', 'SibSp', 'Family_Size_Category', 'Ticket'],axis=1,inplace=True)
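An inplace drop also changes any "backup" that was made by plain assignment. Plain assignment binds a second name to the same object, while DataFrame.copy() creates an independent copy.

This is a minimal sketch with a made-up two-column frame.

```python
import pandas as pd

df = pd.DataFrame({'Age': [22, 38], 'Name': ['a', 'b']})

alias = df          # a second name for the same DataFrame object
backup = df.copy()  # an independent copy of the data

df.drop(['Name'], axis=1, inplace=True)
print(list(alias.columns))   # ['Age']: the alias lost the column too
print(list(backup.columns))  # ['Age', 'Name']
```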

3. Split the data back into training and test sets:

combined_train_test.iloc[:891]

| | Age | Cabin | Fare | Pclass | Survived | Embarked_0 | Embarked_1 | Embarked_2 | Sex_0 | Sex_1 | Title_0 | Title_1 | Title_2 | Title_3 | Title_4 | Title_5 | Name_length | Fare_0 | Fare_1 | Fare_2 | Fare_3 | Fare_4 | Pclass_0 | Pclass_1 | Pclass_2 | Pclass_3 | Pclass_4 | Pclass_5 | Family_Size | Family_Size_Category_0 | Family_Size_Category_1 | Family_Size_Category_2 | Ticket_Letter |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | -0.613832 | 0 | -0.554177 | 0 | 0 | 1 | 0 | 0 | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | -0.434672 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 2 | 0 | 0 | 1 | 0 |
| 1 | 0.628562 | 1 | 1.541869 | 1 | 1 | 0 | 1 | 0 | 0 | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 2.511806 | 0 | 1 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 2 | 0 | 0 | 1 | 1 |
| 2 | -0.303234 | 0 | -0.504344 | 0 | 1 | 1 | 0 | 0 | 0 | 1 | 0 | 0 | 1 | 0 | 0 | 0 | -0.539904 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 1 | 0 | 1 | 0 | 2 |
| 3 | 0.395613 | 1 | 0.870667 | 1 | 1 | 1 | 0 | 0 | 0 | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 1.775186 | 0 | 1 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 2 | 0 | 0 | 1 | 3 |
| 4 | 0.395613 | 0 | -0.495116 | 0 | 0 | 1 | 0 | 0 | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | -0.329441 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 1 | 0 | 1 | 0 | 3 |
| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |
| 886 | -0.225584 | 0 | -0.129677 | 2 | 0 | 1 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | -0.645135 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 1 | 0 | 1 | 0 | 3 |
| 887 | -0.846781 | 1 | 1.125368 | 1 | 1 | 1 | 0 | 0 | 0 | 1 | 0 | 0 | 1 | 0 | 0 | 0 | 0.091485 | 0 | 1 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 1 | 0 | 1 | 0 | 3 |
| 888 | 0.007417 | 0 | -0.656611 | 0 | 0 | 1 | 0 | 0 | 0 | 1 | 0 | 0 | 1 | 0 | 0 | 0 | 1.354261 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 1 | 4 | 0 | 0 | 1 | 15 |
| 889 | -0.303234 | 1 | 1.125368 | 1 | 1 | 0 | 1 | 0 | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | -0.645135 | 0 | 1 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 1 | 0 | 1 | 0 | 3 |
| 890 | 0.162664 | 0 | -0.517264 | 0 | 0 | 0 | 0 | 1 | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | -0.855598 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 1 | 0 | 1 | 0 | 3 |

891 rows × 33 columns

combined_train_test.shape

(1309, 33)

# .iloc slices by position; the index labels are duplicated (train 0-890, test 0-417)
train_data = combined_train_test.iloc[:891]

test_data = combined_train_test.iloc[891:]

titanic_train_data_X = train_data.drop(['Survived'],axis=1)

titanic_train_data_Y = train_data['Survived']

titanic_test_data_X = test_data.drop(['Survived'],axis=1)

titanic_train_data_X.shape

(891, 32)
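pd.concat keeps each frame's original index by default, so the combined data carries duplicated labels. Splitting by position with .iloc avoids any ambiguity from those labels.

The tiny frames below are made up for illustration.

```python
import pandas as pd

train = pd.DataFrame({'Survived': [0, 1, 1], 'Age': [22, 38, 26]})
test = pd.DataFrame({'Survived': [0, 0], 'Age': [35, 54]})

# The index is 0, 1, 2, 0, 1: labels 0 and 1 each occur twice
combined = pd.concat([train, test])

# Positional split back into the original parts
n_train = len(train)
train_part = combined.iloc[:n_train]
test_part = combined.iloc[n_train:]
print(combined.index.tolist())     # [0, 1, 2, 0, 1]
print(train_part['Age'].tolist())  # [22, 38, 26]
print(test_part['Age'].tolist())   # [35, 54]
```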

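6. Model Ensembling and Testing

Model ensembling is carried out in several steps.

(1) Use different models to screen the features and select the more important ones: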