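PaddlePaddle Pilot Team: AI Talent Creation Camp

Where and how to obtain datasets

Dataset sources

- AI Studio open-source datasets
- Kaggle, for interesting and popular datasets
- Tianchi
- DataFountain
- iFLYTEK official website
- COCO dataset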

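Overview of the complete workflow

Complete image processing workflow

Image data acquisition

Image data cleaning

- Get an initial understanding of the data and filter out unsuitable images.

Image data annotation

Image data preprocessing

- Standardization
  - Centering = mean subtraction (mean normalization)
    - Shifts each dimension so that its mean is 0.
    - Purpose: speeds up convergence and works better with some activation functions.
  - Scaling = dividing by the standard deviation
    - Gives each dimension unit variance, so most values fall in a small range around 0 (not strictly within [-1, 1]).
    - Purpose: improves convergence efficiency, evens out the influence of inputs with different ranges on what the model learns, and maps values into the range where activation functions have useful gradients.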
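The centering and scaling steps above can be sketched with NumPy. This is a minimal illustration, not the course's own code: the function name `standardize`, the `(N, H, W, C)` layout, and the small `eps` guard are assumptions made for the example.

```python
import numpy as np

def standardize(images, eps=1e-8):
    """Center each channel to zero mean and scale it to unit variance.

    images: float array of shape (N, H, W, C) (assumed layout).
    Returns the standardized array plus the per-channel mean and std.
    """
    mean = images.mean(axis=(0, 1, 2), keepdims=True)  # centering statistic
    std = images.std(axis=(0, 1, 2), keepdims=True)    # scaling statistic
    return (images - mean) / (std + eps), mean.squeeze(), std.squeeze()

rng = np.random.default_rng(0)
batch = rng.uniform(0, 255, size=(8, 32, 32, 3))  # synthetic stand-in for images
normed, mean, std = standardize(batch)
```

In practice the mean and standard deviation would be computed on the training set only and then reused for validation and test data.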

Image data preparation (training and testing stages)

- Split the data into training, validation, and test sets.
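A minimal sketch of such a split, using only the standard library. The 80/10/10 ratios, the fixed seed, and the file names are illustrative assumptions, not values from the course.

```python
import random

def split_dataset(items, val_ratio=0.1, test_ratio=0.1, seed=42):
    """Shuffle items reproducibly and split them into train/val/test lists."""
    items = list(items)
    random.Random(seed).shuffle(items)  # local RNG, global state untouched
    n_val = int(len(items) * val_ratio)
    n_test = int(len(items) * test_ratio)
    val = items[:n_val]
    test = items[n_val:n_val + n_test]
    train = items[n_val + n_test:]
    return train, val, test

files = [f"img_{i:04d}.jpg" for i in range(100)]  # hypothetical file names
train, val, test = split_dataset(files)
```

Shuffling before splitting matters when files are stored in class or time order; otherwise one split may miss whole categories.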

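Image data augmentation (training stage)

Common data augmentation methods in CV:

- Random rotation
- Random horizontal or vertical flipping
- Scaling
- Cropping
- Translation
- Adjusting brightness, contrast, saturation, hue, etc.
- Noise injection
- Learned augmentation, e.g. GAN-based data generation or searched policies such as AutoAugment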
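A few of the augmentations listed above (flip, crop, brightness, noise) can be sketched in plain NumPy. The function name `augment`, the crop size, and the brightness and noise ranges are assumptions chosen for illustration; real projects usually rely on a library's transform pipeline instead.

```python
import numpy as np

def augment(image, rng, crop=24):
    """Randomly flip, crop, rescale brightness, and add noise.

    image: uint8 array of shape (H, W, C); returns a uint8 (crop, crop, C) array.
    """
    out = image.astype(np.float32)
    if rng.random() < 0.5:
        out = out[:, ::-1, :]                          # horizontal flip
    h, w, _ = out.shape
    top = rng.integers(0, h - crop + 1)                # random crop origin
    left = rng.integers(0, w - crop + 1)
    out = out[top:top + crop, left:left + crop, :]
    out = out * rng.uniform(0.8, 1.2)                  # brightness scaling
    out = out + rng.normal(0.0, 5.0, size=out.shape)   # Gaussian noise
    return np.clip(out, 0, 255).astype(np.uint8)

rng = np.random.default_rng(0)
image = rng.integers(0, 256, size=(32, 32, 3), dtype=np.uint8)  # synthetic image
augmented = augment(image, rng)
```

For detection tasks, geometric transforms such as flips and crops must also be applied to the bounding boxes, which this sketch does not do.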

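Complete workflow for tabular (non-image) data:

- 1. Understand the data
  - Get an initial understanding of the data
  - Record the number of features and their names
  - Sample records to see what the values look like, and review descriptive statistics
  - Identify feature types
  - Combine with data from related knowledge domains (feature fusion)
- 2. Data cleaning
  - Convert data types
  - Handle missing data
  - Handle outliers
- 3. Feature transformation
  - Convert features to numeric values
  - Feature binarization
  - One-hot encoding
  - Feature discretization
  - Scaling
    - Interval (min-max) scaling
    - Standardization
    - Normalization
- 4. Feature selection
  - Wrapper methods
    - Sequential feature selection
    - Exhaustive feature selection
    - Recursive feature selection
  - Filter methods
  - Embedded methods
- 5. Feature extraction
  - Unsupervised feature extraction
    - Principal component analysis (PCA)
    - Factor analysis
  - Supervised feature extraction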
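Some of the cleaning and transformation steps in the list above can be illustrated on a tiny made-up table. The column names, the values, and the threshold of 40 are all hypothetical; the sketch uses NumPy only to stay self-contained.

```python
import numpy as np

# Toy data: one numeric feature with a missing value, one categorical feature.
ages = np.array([22.0, np.nan, 35.0, 58.0])
colors = ["red", "green", "red", "blue"]

# Data cleaning: replace missing values with the median of the observed ones.
ages_filled = np.where(np.isnan(ages), np.nanmedian(ages), ages)

# Binarization: 1 if the value exceeds a threshold, else 0.
is_over_40 = (ages_filled > 40).astype(int)

# One-hot encoding: one column per category, in sorted order.
categories = sorted(set(colors))
one_hot = np.array([[int(c == cat) for cat in categories] for c in colors])

# Interval (min-max) scaling to [0, 1].
ages_scaled = (ages_filled - ages_filled.min()) / (ages_filled.max() - ages_filled.min())
```

Libraries such as pandas and scikit-learn provide equivalent, more robust tools for each of these steps.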

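Side note:

The Pearson correlation coefficient is a statistic that measures how strongly two variables are linearly related. In machine learning it can be used to measure the relationship between a feature and the class label, i.e. to judge whether an extracted feature is positively correlated, negatively correlated, or uncorrelated with the class.

The Pearson coefficient takes values in [-1, 1]. A negative value indicates negative correlation and a positive value indicates positive correlation; the larger the absolute value, the stronger the correlation. For comparison, the Spearman coefficient equals +1 or -1 when the data contain no repeated values and the two variables are perfectly monotonically related. If two variables are independent, their correlation coefficient is 0, but the converse does not hold.

It can be computed with a `corr()` function (make sure both variables have the same number of rows).

The formula is:

$$\rho_{X, Y}=\frac{\operatorname{cov}(X, Y)}{\sigma_{X} \sigma_{Y}}=\frac{E\left(\left(X-\mu_{X}\right)\left(Y-\mu_{Y}\right)\right)}{\sigma_{X} \sigma_{Y}}=\frac{E(X Y)-E(X) E(Y)}{\sqrt{E\left(X^{2}\right)-E^{2}(X)} \sqrt{E\left(Y^{2}\right)-E^{2}(Y)}}$$

The coefficient is defined only when both standard deviations are non-zero. The Pearson correlation coefficient is appropriate when:

(1) The two variables are linearly related and both are continuous.

(2) The populations of both variables are normally distributed, or unimodal and close to normal.

(3) The observations are paired, and the pairs are independent of one another.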
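The last form of the formula above translates directly into code. The sketch below is illustrative (the function name `pearson` and the sample values are made up) and checks itself against NumPy's `np.corrcoef`.

```python
import numpy as np

def pearson(x, y):
    """Pearson correlation via (E[XY] - E[X]E[Y]) / (sigma_X * sigma_Y)."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    cov = np.mean(x * y) - np.mean(x) * np.mean(y)
    std_x = np.sqrt(np.mean(x ** 2) - np.mean(x) ** 2)
    std_y = np.sqrt(np.mean(y ** 2) - np.mean(y) ** 2)
    if std_x == 0 or std_y == 0:
        raise ValueError("correlation is undefined when a standard deviation is zero")
    return cov / (std_x * std_y)

x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [2.1, 3.9, 6.2, 8.1, 9.8]   # roughly 2 * x, so r should be close to 1
r = pearson(x, y)
reference = np.corrcoef(x, y)[0, 1]
```

The expectation-based form can lose precision when the values are large relative to their spread; library routines are the safer choice for real data.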

Data processing

Convert the officially provided data into VOC or COCO format

Introduction to the COCO 2017 dataset:

COCO is a dataset created and collected by Microsoft for detection, segmentation, localization, and captioning. These notes use the 2017 release, which contains roughly 25 GB of images and roughly 600 MB of annotation files.

COCO has 80 fine-grained categories:

['person', 'bicycle', 'car', 'motorcycle', 'airplane', 'bus', 'train', 'truck', 'boat', 'traffic light', 'fire hydrant', 'stop sign', 'parking meter', 'bench', 'bird', 'cat', 'dog', 'horse', 'sheep', 'cow', 'elephant', 'bear', 'zebra', 'giraffe', 'backpack', 'umbrella', 'handbag', 'tie', 'suitcase', 'frisbee', 'skis', 'snowboard', 'sports ball', 'kite', 'baseball bat', 'baseball glove', 'skateboard', 'surfboard', 'tennis racket', 'bottle', 'wine glass', 'cup', 'fork', 'knife', 'spoon', 'bowl', 'banana', 'apple', 'sandwich', 'orange', 'broccoli', 'carrot', 'hot dog', 'pizza', 'donut', 'cake', 'chair', 'couch', 'potted plant', 'bed', 'dining table', 'toilet', 'tv', 'laptop', 'mouse', 'remote', 'keyboard', 'cell phone', 'microwave', 'oven', 'toaster', 'sink', 'refrigerator', 'book', 'clock', 'vase', 'scissors', 'teddy bear', 'hair drier', 'toothbrush']

and 12 supercategories:

['appliance', 'food', 'indoor', 'accessory', 'electronic', 'furniture', 'vehicle', 'sports', 'animal', 'kitchen', 'person', 'outdoor']

COCO format, directory layout:

COCO_2017/
├── val2017 # full validation set
├── train2017 # full training set
├── annotations # COCO annotations
│ ├── instances_train2017.json # object instances: training-set annotations
│ ├── instances_val2017.json # object instances: validation-set annotations
│ ├── person_keypoints_train2017.json # object keypoints: training-set annotations for keypoint detection
│ ├── person_keypoints_val2017.json # object keypoints: validation-set annotations for keypoint detection
│ ├── captions_train2017.json # image captions: training-set annotations
│ ├── captions_val2017.json # image captions: validation-set annotations

VOC format, directory layout:

VOC_2017/
├── Annotations # annotation for each image, in XML format
├── ImageSets
│ ├── Main # file names of the images that contain each class
├── JPEGImages # all images used for training, validation, and testing
├── label_list.txt # list of class labels
├── train_val.txt # training set
├── val.txt # validation set

Annotation format for the Object Keypoint type:

{

"info": info,

"licenses": [license],

"images": [image],

"annotations": [annotation],

"categories": [category]

}

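The info, licenses, and images structures are identical across the different JSON files; their definitions are shared by object instances, object keypoints, and image captions. The annotation and category structures are not shared and differ between the JSON file types. The added keypoints field is an array of length 3 × k, where k is the total number of keypoints defined for the category. Each keypoint is a triple: the first and second elements are the x and y coordinates, and the third is a visibility flag v. v = 0 means the keypoint is not labeled (in which case x = y = v = 0), v = 1 means it is labeled but not visible (occluded), and v = 2 means it is labeled and visible.

num_keypoints is the number of labeled keypoints (v > 0) on the object; on small objects it may not be possible to label any keypoints.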
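The flat keypoints layout described above can be unpacked into (x, y, v) triples as follows. The helper name `parse_keypoints` is made up for this sketch; the array is the one from the sample person annotation in these notes, whose num_keypoints is 10.

```python
def parse_keypoints(flat):
    """Split a flat COCO keypoints array into (x, y, v) triples."""
    if len(flat) % 3 != 0:
        raise ValueError("keypoints length must be a multiple of 3")
    return [tuple(flat[i:i + 3]) for i in range(0, len(flat), 3)]

keypoints = [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,
             142, 309, 1, 177, 320, 2, 191, 398, 2, 237, 317, 2,
             233, 426, 2, 306, 233, 2, 92, 452, 2, 123, 468, 2,
             0, 0, 0, 251, 469, 2, 0, 0, 0, 162, 551, 2]

triples = parse_keypoints(keypoints)
num_labeled = sum(1 for _, _, v in triples if v > 0)   # labeled: v is 1 or 2
num_visible = sum(1 for _, _, v in triples if v == 2)  # labeled and visible
```

Here `num_labeled` matches the annotation's num_keypoints field, which is a quick consistency check worth running on converted data.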

annotation{

"keypoints": [x1,y1,v1,...],

"num_keypoints": int,

"id": int,

"image_id": int,

"category_id": int,

"segmentation": RLE or [polygon],

"area": float,

"bbox": [x,y,width,height],

"iscrowd": 0 or 1,

}

Example:

{

"segmentation": [[125.12,539.69,140.94,522.43,100.67,496.54,84.85,469.21,73.35,450.52,104.99,342.65,168.27,290.88,179.78,288,189.84,286.56,191.28,260.67,202.79,240.54,221.48,237.66,248.81,243.42,257.44,256.36,253.12,262.11,253.12,275.06,299.15,233.35,329.35,207.46,355.24,206.02,363.87,206.02,365.3,210.34,373.93,221.84,363.87,226.16,363.87,237.66,350.92,237.66,332.22,234.79,314.97,249.17,271.82,313.89,253.12,326.83,227.24,352.72,214.29,357.03,212.85,372.85,208.54,395.87,228.67,414.56,245.93,421.75,266.07,424.63,276.13,437.57,266.07,450.52,284.76,464.9,286.2,479.28,291.96,489.35,310.65,512.36,284.76,549.75,244.49,522.43,215.73,546.88,199.91,558.38,204.22,565.57,189.84,568.45,184.09,575.64,172.58,578.52,145.26,567.01,117.93,551.19,133.75,532.49]],

"num_keypoints": 10,

"area": 47803.27955,

"iscrowd": 0,

"keypoints": [0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,142,309,1,177,320,2,191,398,2,237,317,2,233,426,2,306,233,2,92,452,2,123,468,2,0,0,0,251,469,2,0,0,0,162,551,2],

"image_id": 425226,"bbox": [73.35,206.02,300.58,372.5],"category_id": 1,

"id": 183126},

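The categories field

Finally, compared with the category structure in Object Instance, each category structure here has two additional fields: keypoints is an array of length k containing the name of each keypoint, and skeleton defines how the keypoints are connected (for example, a person's left wrist and left elbow are connected, but the left wrist and right wrist are not).

Currently, COCO keypoints are annotated only for the person category.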

{

"id": int,

"name": str,

"supercategory": str,

"keypoints": [str],

"skeleton": [edge]

}

Example:

{

"supercategory": "person",

"id": 1,

"name": "person",

"keypoints": ["nose","left_eye","right_eye","left_ear","right_ear","left_shoulder","right_shoulder","left_elbow","right_elbow","left_wrist","right_wrist","left_hip","right_hip","left_knee","right_knee","left_ankle","right_ankle"],

"skeleton": [[16,14],[14,12],[17,15],[15,13],[12,13],[6,12],[7,13],[6,7],[6,8],[7,9],[8,10],[9,11],[2,3],[1,2],[1,3],[2,4],[3,5],[4,6],[5,7]]

}
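To read the skeleton field in the example above, each edge can be mapped back to keypoint names. The indices appear to be 1-based (they run from 1 to 17 for 17 keypoints), which the sketch below assumes; the helper name `skeleton_to_names` is hypothetical.

```python
KEYPOINTS = ["nose", "left_eye", "right_eye", "left_ear", "right_ear",
             "left_shoulder", "right_shoulder", "left_elbow", "right_elbow",
             "left_wrist", "right_wrist", "left_hip", "right_hip",
             "left_knee", "right_knee", "left_ankle", "right_ankle"]

SKELETON = [[16, 14], [14, 12], [17, 15], [15, 13], [12, 13], [6, 12], [7, 13],
            [6, 7], [6, 8], [7, 9], [8, 10], [9, 11], [2, 3], [1, 2], [1, 3],
            [2, 4], [3, 5], [4, 6], [5, 7]]

def skeleton_to_names(skeleton, keypoints):
    """Translate 1-based skeleton edges into pairs of keypoint names."""
    return [(keypoints[a - 1], keypoints[b - 1]) for a, b in skeleton]

edges = skeleton_to_names(SKELETON, KEYPOINTS)
```

With this mapping, the left elbow and left wrist form an edge, while the two wrists do not, matching the description of skeleton given earlier.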

Conversion script:

# Convert COCO annotations into VOC-style XML files
import os
import shutil
import xml.etree.ElementTree as ET
from shutil import move

import cv2
from pycocotools.coco import COCO
from tqdm import tqdm

# Output paths
savepath = "VOCData/"
img_dir = savepath + 'images/'        # all images are stored here
anno_dir = savepath + 'Annotations/'  # XML annotation files are stored here
datasets_list = ['train2017', 'val2017']
classes_names = ['person']

# Root of the COCO dataset: store annotations and train2017/val2017/... in this folder
dataDir = './'

# XML templates; the %s and %d placeholders are filled in when each file is written
headstr = """
<annotation>
    <folder>VOC</folder>
    <filename>%s</filename>
    <source>
        <database>My Database</database>
        <annotation>COCO</annotation>
        <image>flickr</image>
        <flickrid>NULL</flickrid>
    </source>
    <owner>
        <flickrid>NULL</flickrid>
        <name>company</name>
    </owner>
    <size>
        <width>%d</width>
        <height>%d</height>
        <depth>%d</depth>
    </size>
    <segmented>0</segmented>
"""

objstr = """
    <object>
        <name>%s</name>
        <pose>Unspecified</pose>
        <truncated>0</truncated>
        <difficult>0</difficult>
        <bndbox>
            <xmin>%d</xmin>
            <ymin>%d</ymin>
            <xmax>%d</xmax>
            <ymax>%d</ymax>
        </bndbox>
    </object>
"""

tailstr = '''
</annotation>
'''

def mkr(path):
    """Create the directory; if it already exists, delete it first."""
    if os.path.exists(path):
        shutil.rmtree(path)
    os.makedirs(path)  # also creates the parent directory if needed

mkr(img_dir)
mkr(anno_dir)

def id2name(coco):
    """Build a dict mapping each category id to its name."""
    classes = dict()
    for cls in coco.dataset['categories']:
        classes[cls['id']] = cls['name']
    return classes

def write_xml(anno_path, head, objs, tail):
    """Write the extracted data into the XML templates."""
    with open(anno_path, "w") as f:
        f.write(head)
        for obj in objs:
            f.write(objstr % (obj[0], obj[1], obj[2], obj[3], obj[4]))
        f.write(tail)

def save_annotations_and_imgs(coco, dataset, filename, objs):
    # e.g. COCO_train2014_000000196610.jpg --> COCO_train2014_000000196610.xml
    anno_path = anno_dir + os.path.splitext(filename)[0] + '.xml'
    img_path = dataDir + dataset + '/' + filename
    dst_imgpath = img_dir + filename
    img = cv2.imread(img_path)
    if img is None:  # cv2.imread returns None when the file cannot be read
        print(filename + " could not be read")
        return
    if img.ndim < 3 or img.shape[2] == 1:
        print(filename + " not a RGB image")
        return
    shutil.copy(img_path, dst_imgpath)
    head = headstr % (filename, img.shape[1], img.shape[0], img.shape[2])
    tail = tailstr
    write_xml(anno_path, head, objs, tail)

def showimg(coco, dataset, img, classes, cls_id, show=True):
    """Collect [class_name, xmin, ymin, xmax, ymax] for one image."""
    # Look up the annotations for this image by id
    annIds = coco.getAnnIds(imgIds=img['id'], catIds=cls_id, iscrowd=None)
    anns = coco.loadAnns(annIds)
    objs = []
    for ann in anns:
        class_name = classes[ann['category_id']]
        if class_name in classes_names:
            if 'bbox' in ann:
                bbox = ann['bbox']  # COCO bbox is [x, y, width, height]
                xmin = int(bbox[0])
                ymin = int(bbox[1])
                xmax = int(bbox[2] + bbox[0])
                ymax = int(bbox[3] + bbox[1])
                obj = [class_name, xmin, ymin, xmax, ymax]
                objs.append(obj)
    return objs

for dataset in datasets_list:
    # e.g. ./annotations/instances_train2017.json
    annFile = '{}/annotations/instances_{}.json'.format(dataDir, dataset)
    # Initialize the COCO API for the annotation data
    coco = COCO(annFile)
    # Once the COCO object has been created it prints:
    #   loading annotations into memory...
    #   Done (t=0.81s)
    #   creating index...
    #   index created!
    # At this point the JSON has been parsed and each image is linked to its annotations.

    # Show all classes in COCO
    classes = id2name(coco)
    print(classes)
    # Ids of the classes to keep, e.g. [1] for 'person'
    classes_ids = coco.getCatIds(catNms=classes_names)
    print(classes_ids)
    for cls in classes_names:
        # Get the id of this class
        cls_id = coco.getCatIds(catNms=[cls])
        img_ids = coco.getImgIds(catIds=cls_id)
        for imgId in tqdm(img_ids):
            img = coco.loadImgs(imgId)[0]
            filename = img['file_name']
            objs = showimg(coco, dataset, img, classes, classes_ids, show=False)
            save_annotations_and_imgs(coco, dataset, filename, objs)

# Keep only image/XML pairs. As written, the source and destination directories
# are the same, so this step changes nothing unless out_*_base is pointed elsewhere.
out_img_base = 'VOCData/images'
out_xml_base = 'VOCData/Annotations'
img_base = 'VOCData/images/'
xml_base = 'VOCData/Annotations/'
os.makedirs(out_img_base, exist_ok=True)
os.makedirs(out_xml_base, exist_ok=True)
for img in tqdm(os.listdir(img_base)):
    xml = img.replace('.jpg', '.xml')
    src_img = os.path.join(img_base, img)
    src_xml = os.path.join(xml_base, xml)
    dst_img = os.path.join(out_img_base, img)
    dst_xml = os.path.join(out_xml_base, xml)
    if os.path.exists(src_img) and os.path.exists(src_xml):
        move(src_img, dst_img)
        move(src_xml, dst_xml)

def extract_xml(infile):
    """Return the class names found in the <name> tags of one XML file."""
    with open(infile, 'r') as f:
        xml_text = f.read()
    root = ET.fromstring(xml_text)
    classes = []
    for obj in root.iter('object'):
        cls_ = obj.find('name').text
        classes.append(cls_)
    return classes

if __name__ == '__main__':
    # Count how many objects of each class ended up in the XML files
    base = 'VOCData/Annotations/'
    Xmls = [v for v in os.listdir(base) if v.endswith('.xml')]
    print('-[INFO] total:', len(Xmls))
    labels = {'person': 0}
    for xml in Xmls:
        infile = os.path.join(base, xml)
        cls_ = extract_xml(infile)
        for c in cls_:
            if c not in labels:
                print(infile, c)
                raise ValueError('unexpected class: ' + c)
            labels[c] += 1
    for k, v in labels.items():
        print('-[Count] {} total:{} per:{}'.format(k, v, v / max(len(Xmls), 1)))
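The core of the script above is the box conversion: COCO stores a box as [x, y, width, height], while VOC XML stores its corners. The standalone sketch below isolates that step; the function name `coco_bbox_to_voc` is made up, and the input is the bbox from the sample annotation shown earlier.

```python
def coco_bbox_to_voc(bbox):
    """Convert a COCO [x, y, width, height] box to VOC (xmin, ymin, xmax, ymax).

    Coordinates are truncated to integers, as in the conversion script.
    """
    x, y, w, h = bbox
    return int(x), int(y), int(x + w), int(y + h)

voc_box = coco_bbox_to_voc([73.35, 206.02, 300.58, 372.5])
```

Truncating with `int()` can shift a box edge by up to one pixel; rounding, or keeping floats, is an alternative if the training framework accepts it.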

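Training on a custom dataset

- Common annotation tools
  - labelimg
  - labelme
  - PPOCRLabel
- Building and splitting VOC- and COCO-format datasets:
  - Paddle tooling (PaddleX) can be used to convert the data into the desired format.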

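Image processing methods

The nature of images

- Bitmap:
  - Defined by pixels
  - Becomes blurry when enlarged
  - Larger file size
  - Rich, realistic color
- Vector graphics:
  - Defined by vectors
  - Stays sharp when enlarged
  - Smaller file size
  - Less expressive

Why do data augmentation?

Many deep learning models are highly complex and tend to overfit when little data is available, because they pick up on many irrelevant factors in the training set.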

模型训练及评估

-

详细调优见下次课

-

拓展介绍mAP:

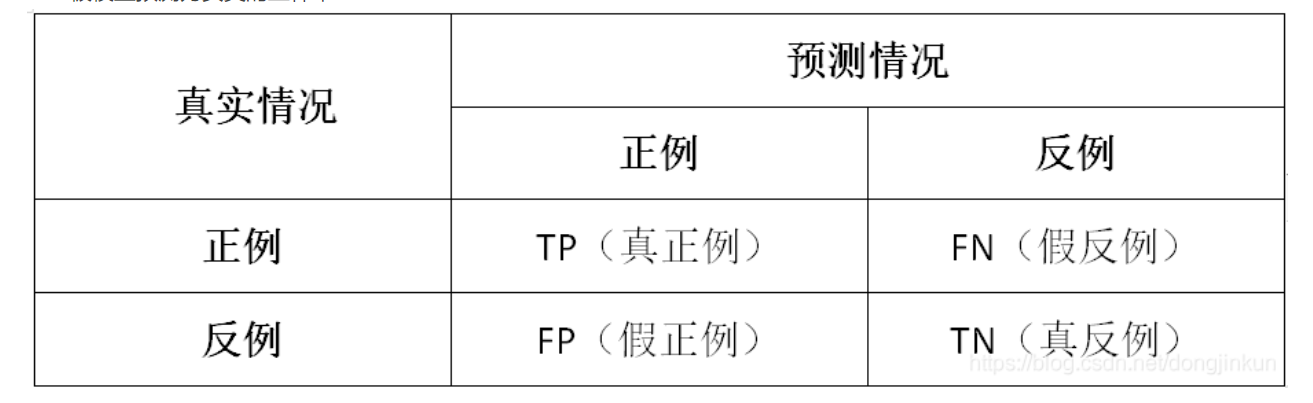

在机器学习领域中,用于评价一个模型的性能有多种指标,其中几项就是FP、FN、TP、TN、精确率(Precision)、召回率(Recall)、准确率(Accuracy)。

mean Average Precision, 即各类别AP的平均值,是AP:PR 曲线下面积。

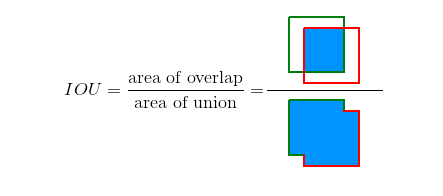

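First, the IoU criterion:

TP, FP, FN, TN

In this common notation, the first letter (T or F) says whether the prediction was correct, and the second letter (P or N) says whether the prediction was positive or negative.

- True Positive (TP): the number of detections with $\mathrm{IoU} > \mathrm{IoU}_{\text{threshold}}$ (the threshold is usually 0.5), counting each Ground Truth (GT) only once. A Ground Truth box can be understood as the true box, i.e. the reference answer.
- False Positive (FP): the number of detections with $\mathrm{IoU} < \mathrm{IoU}_{\text{threshold}}$. Extra detections of a GT that has already been matched are typically counted as FP as well.
- False Negative (FN): the number of GT boxes that were not detected.
- True Negative (TN): not used in mAP.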
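IoU (intersection over union) is the overlap area of two boxes divided by the area of their union. A minimal sketch, assuming boxes in [xmin, ymin, xmax, ymax] form; the function name `iou` and the sample boxes are illustrative.

```python
def iou(box_a, box_b):
    """Intersection over union of two [xmin, ymin, xmax, ymax] boxes."""
    ix1 = max(box_a[0], box_b[0])
    iy1 = max(box_a[1], box_b[1])
    ix2 = min(box_a[2], box_b[2])
    iy2 = min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)  # 0 if the boxes do not overlap
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

gt = [0, 0, 10, 10]
det = [5, 0, 15, 10]               # overlaps the right half of gt
score = iou(gt, det)               # intersection 50, union 150
is_true_positive = score > 0.5     # compare against the usual 0.5 threshold
```

In this example the detection covers half of the ground truth but still falls below the 0.5 threshold, so it would count as a false positive.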

Precision: $\text{Precision}=\frac{TP}{TP+FP}=\frac{TP}{\text{all detections}}$

Recall: $\text{Recall}=\frac{TP}{TP+FN}=\frac{TP}{\text{all ground truths}}$

Plotting the two against each other gives the P-R curve:

Precision (P) is on the vertical y-axis and recall (R) is on the horizontal x-axis, as shown in the figure.

AP is the area under the P-R curve, and mAP is the mean of AP over all classes.
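The area under the P-R curve can be approximated by sorting detections by confidence and accumulating precision times the change in recall. The sketch below uses this plain, non-interpolated form; the VOC and COCO evaluators apply additional interpolation, so their numbers can differ. The function name, scores, and per-class values are hypothetical.

```python
def average_precision(scores, is_tp, num_gt):
    """Non-interpolated AP: sum of precision * recall increment over ranked detections.

    scores: confidence of each detection
    is_tp:  whether each detection was matched to a ground truth (TP) or not (FP)
    num_gt: total number of ground-truth boxes for this class
    """
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    tp = fp = 0
    ap = 0.0
    prev_recall = 0.0
    for i in order:
        if is_tp[i]:
            tp += 1
        else:
            fp += 1
        precision = tp / (tp + fp)
        recall = tp / num_gt
        ap += precision * (recall - prev_recall)
        prev_recall = recall
    return ap

ap_person = average_precision([0.9, 0.8, 0.7, 0.6], [True, False, True, True], num_gt=4)
ap_other = average_precision([0.9, 0.8], [True, True], num_gt=2)
mean_ap = (ap_person + ap_other) / 2  # mAP: mean of the per-class AP values
```

Only true positives raise recall, so false positives lower the precision at later ranks without contributing any area of their own.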

Model inference and prediction

- Results can be visualized and saved with pdx.det.visualize.
