Study notes on P10 of 刘二大人's course 《PyTorch深度学习实践》 (PyTorch Deep Learning Practice) on Bilibili.

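The fully connected networks built from linear layers in the earlier lectures are a common classifier in deep learning. Because every input is connected to every output, the image has to be flattened into a vector first, so the relative spatial positions of the pixels are ignored and the extracted features tend to be redundant or to miss what matters.

It is therefore worthwhile to place a feature extractor in front of the fully connected layers, and a convolutional neural network (CNN) is a natural choice for that role on image data.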

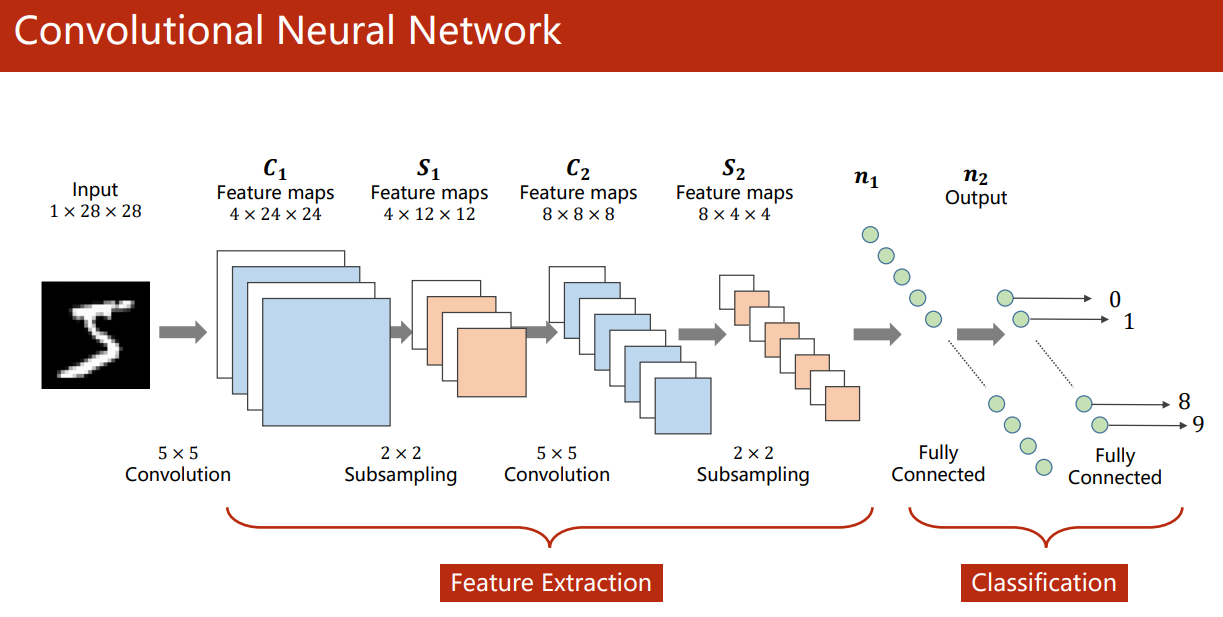

CNN

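For the input and output feature-map sizes of a convolutional network, and for how multi-channel, multi-batch features are processed, see the post "卷积神经网络的输入输出特征图大小计算" (computing the input and output feature-map sizes of a CNN).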

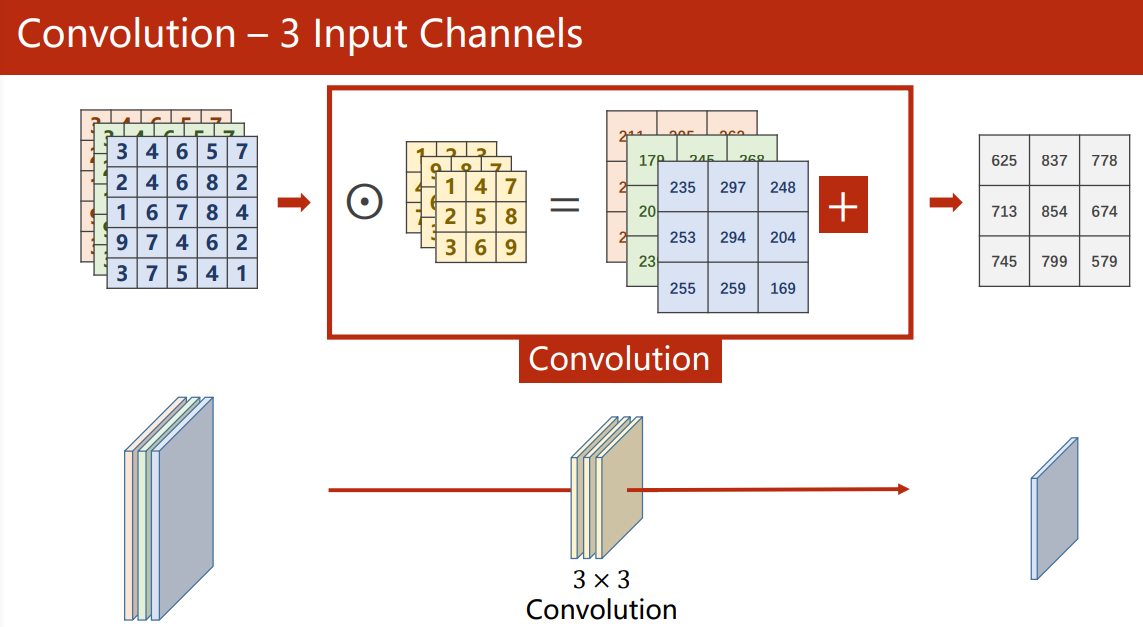

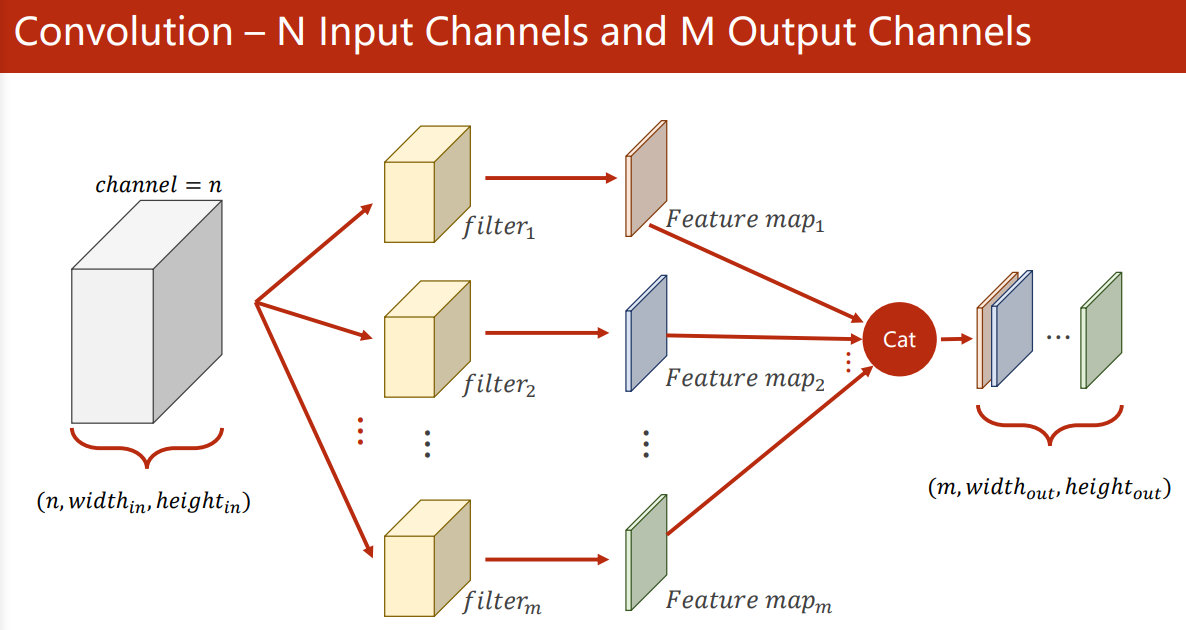

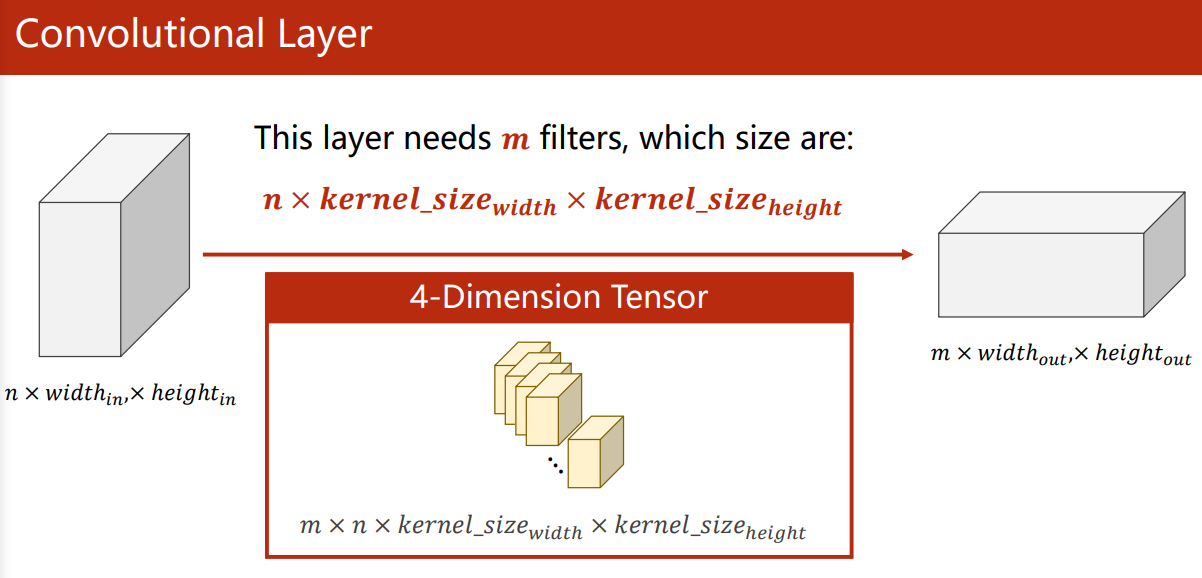

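Convolution kernel with a single output channel: each channel of the input image is convolved with its own 2D slice of the kernel. One convolution therefore produces as many intermediate feature maps as the input has channels, and these are summed element-wise into a single-channel feature map.

It follows that:

- Each kernel has the same number of channels as the input image, and one kernel outputs exactly one single-channel feature map.
- The number of kernels determines the number of output feature maps, which are stacked to form a multi-channel feature map.
- The kernel size is independent of the input image size. Kernel size and stride only need to be matched when feature maps from different convolutional layers are added together, because the maps being added must have the same size.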
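The three points above can be sketched in plain Python. This is only an illustrative toy (stride 1, no padding, square kernels, no bias), not how PyTorch implements convolution; the function names `conv2d_single` and `conv2d_multi` are made up for this example.

```python
def conv2d_single(image, kernel):
    """Slide one 2D kernel slice over one 2D channel (stride 1, no padding)."""
    k = len(kernel)
    out_h = len(image) - k + 1
    out_w = len(image[0]) - k + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj]
                for di in range(k) for dj in range(k))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def conv2d_multi(image, kernels):
    """image: [C_in][H][W]; kernels: [C_out][C_in][k][k] -> [C_out][H_out][W_out]."""
    output = []
    for kernel in kernels:  # one kernel per output channel
        assert len(kernel) == len(image), "kernel channels must match input channels"
        per_channel = [conv2d_single(ch, sl) for ch, sl in zip(image, kernel)]
        # Sum the per-channel maps element-wise into one single-channel map.
        summed = [
            [sum(m[i][j] for m in per_channel) for j in range(len(per_channel[0][0]))]
            for i in range(len(per_channel[0]))
        ]
        output.append(summed)  # stacking the maps gives the multi-channel output
    return output


# Toy input: 3 channels of 4x4 ones; 2 kernels, each with 3 channels of 3x3 ones.
image = [[[1] * 4 for _ in range(4)] for _ in range(3)]
kernels = [[[[1] * 3 for _ in range(3)] for _ in range(3)] for _ in range(2)]
out = conv2d_multi(image, kernels)
print(len(out), len(out[0]), len(out[0][0]))  # 2 2 2
print(out[0][0][0])  # 27
```

Each output value is 27 because every 3x3 window contributes 9 per channel and the three channel results are added together. Two kernels give two output channels.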

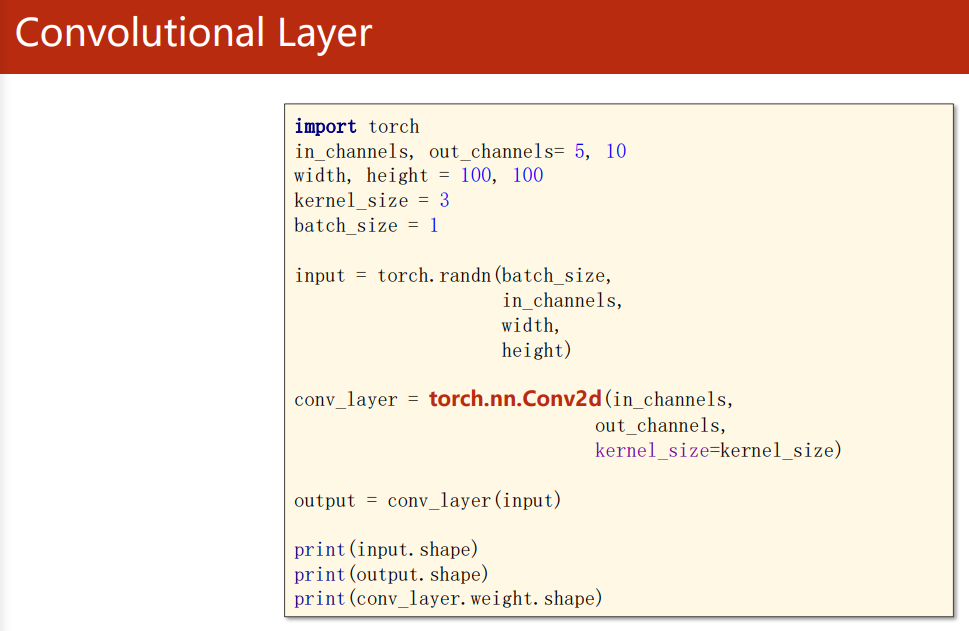

PyTorch convolution layers

Note that PyTorch's convolution layers are designed around mini-batch input in (N, C, H, W) layout, so when building a random Tensor as input, the first argument of torch.randn(batch_size, in_channels, height, width) has to be the batch size.

- torch.nn.Conv2d(in_channels, out_channels, kernel_size): these three arguments are required.
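As a quick check of the shapes involved, here is a minimal sketch. The concrete sizes (5 input channels, 10 output channels, a 100x100 input, a 3x3 kernel) are arbitrary values picked for illustration.

```python
import torch

batch_size, in_channels, out_channels = 1, 5, 10
height, width, kernel_size = 100, 100, 3

# Image batches are laid out as (N, C, H, W).
x = torch.randn(batch_size, in_channels, height, width)
conv = torch.nn.Conv2d(in_channels, out_channels, kernel_size=kernel_size)
y = conv(x)

print(x.shape)            # torch.Size([1, 5, 100, 100])
print(y.shape)            # torch.Size([1, 10, 98, 98])
print(conv.weight.shape)  # torch.Size([10, 5, 3, 3])
```

The weight shape reads as (out_channels, in_channels, kernel height, kernel width): 10 kernels, each with 5 channels.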

Padding, stride, and pooling are set in order to control the size of the output feature map. For a convolution, the output side length is:

$$
out_{\text{size}} = \left\lfloor \frac{in_{\text{size}} + 2 \cdot \text{padding} - \text{kernel\_size}}{\text{stride}} \right\rfloor + 1
$$
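The size calculation can be written as a small helper. This sketch assumes the standard relation out = floor((in + 2 * padding - kernel_size) / stride) + 1 with no dilation; `conv_out_size` is a name invented for this example.

```python
def conv_out_size(in_size, kernel_size, stride=1, padding=0):
    """Output side length of a convolution or pooling window (no dilation)."""
    return (in_size + 2 * padding - kernel_size) // stride + 1


print(conv_out_size(28, 5))                      # 24
print(conv_out_size(5, 3, padding=1))            # 5
print(conv_out_size(5, 3, stride=2, padding=1))  # 3
print(conv_out_size(24, 2, stride=2))            # 12
```

The last call treats a 2x2 max-pooling layer as a window with kernel size 2 and stride 2, which halves a 24-pixel side to 12.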

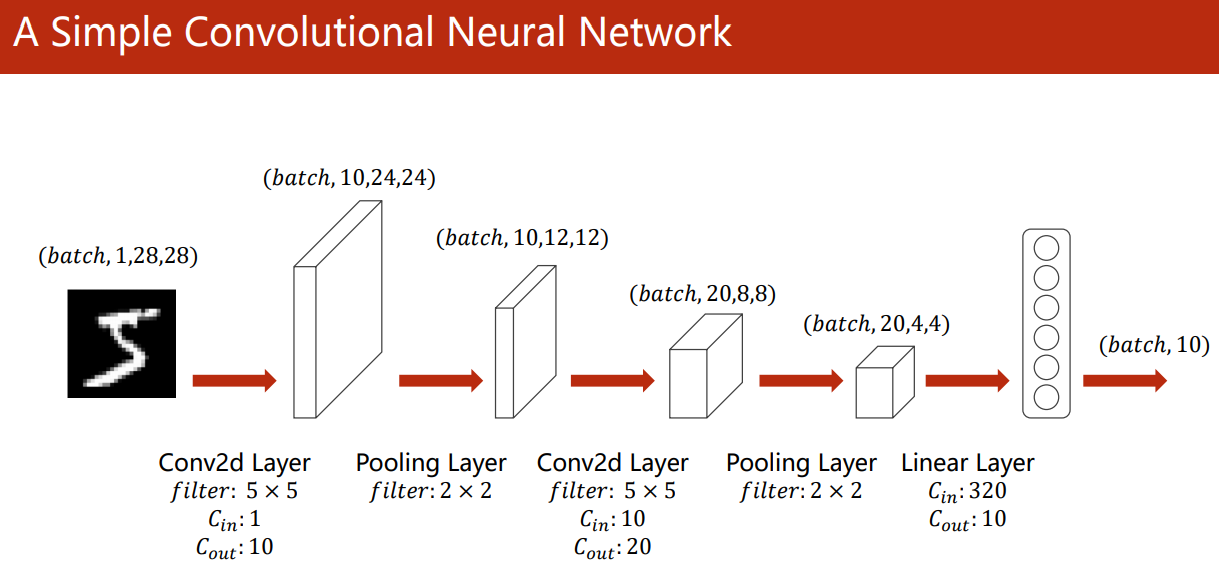

A simple CNN

Full code:

    import os
    import copy

    import numpy as np
    import torch
    from torch import nn, optim
    from torch.nn import functional as F
    from torch.utils.data import DataLoader
    from torchvision import datasets, transforms
    import matplotlib.pyplot as plt

    # Convert PIL images to tensors, then normalize with the MNIST mean and std.
    trans = transforms.Compose([
        transforms.ToTensor(),
        transforms.Normalize((0.1307,), (0.3081,))
    ])

    train_set = datasets.MNIST(root="../datasets/mnist",
                               train=True,
                               transform=trans,  # the raw samples are PIL Images
                               download=True)
    test_set = datasets.MNIST(root="../datasets/mnist",
                              train=False,
                              transform=trans,
                              download=True)

    train_loader = DataLoader(train_set, batch_size=64, shuffle=True)
    test_loader = DataLoader(test_set, batch_size=64, shuffle=True)

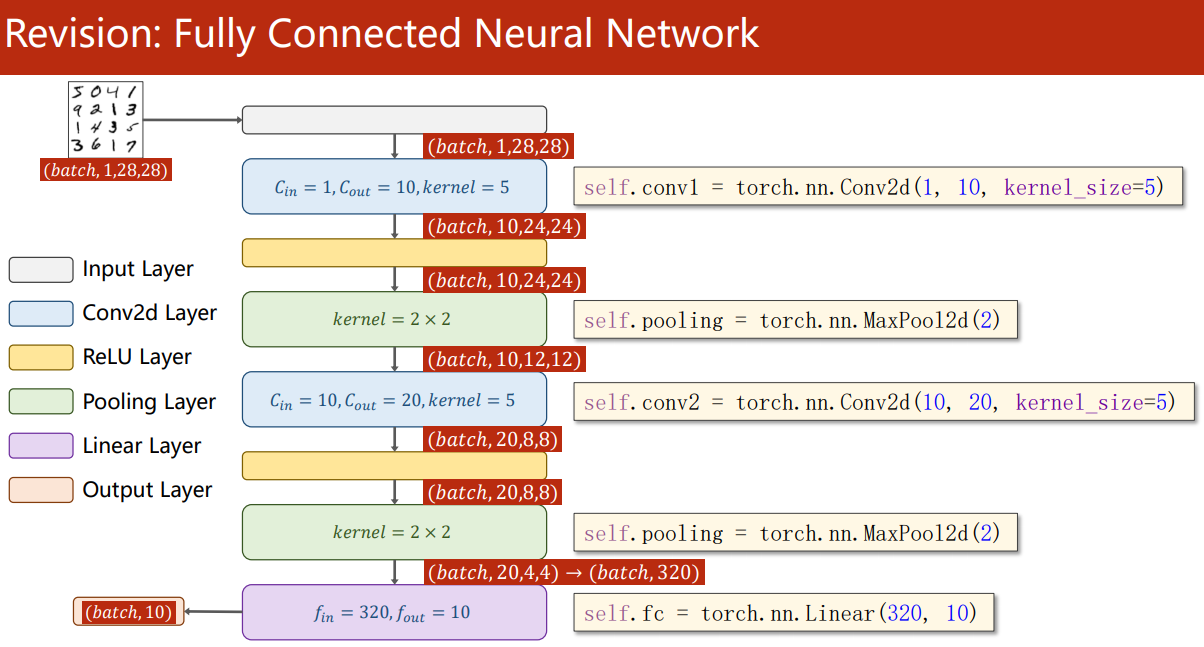

    class Model(nn.Module):
        def __init__(self):
            super(Model, self).__init__()
            self.conv1 = nn.Conv2d(1, 10, 5)
            self.conv2 = nn.Conv2d(10, 20, 5)
            self.linear = nn.Linear(320, 10)
            self.mp = nn.MaxPool2d(2)
            self.activate = nn.ReLU()

        def forward(self, x):
            # Keep the batch dimension and flatten the rest into 320 features for the linear layer.
            batch_size = x.size(0)
            x = self.activate(self.mp(self.conv1(x)))  # out: N,10,12,12
            x = self.activate(self.mp(self.conv2(x)))  # out: N,20,4,4
            x = x.view(batch_size, -1)  # out: N,320
            x = self.linear(x)
            return x

    model = Model()

    def train(model, train_loader, save_dst="./models"):
        criterion = nn.CrossEntropyLoss()  # applies softmax internally, so the model outputs raw scores
        # SGD is sensitive to the batch size; 64 worked best here, with lr=0.01 and momentum=0.5.
        optimizer = optim.SGD(model.parameters(), lr=0.01, momentum=0.5)
        optim_name = type(optimizer).__name__
        print("optimizer name:", optim_name)

        acc_lst = []
        for epoch in range(6):
            TP = 0  # correctly classified training samples in this epoch
            loss_lst = []
            for i, (imgs, labels) in enumerate(train_loader):
                y_pred = model(imgs)
                loss = criterion(y_pred, labels)
                loss_lst.append(loss.item())
                TP += (labels.flatten() == torch.argmax(y_pred, dim=1)).sum().item()

                optimizer.zero_grad()
                loss.backward()
                optimizer.step()

            acc = TP / len(train_loader.dataset)
            acc_lst.append(acc)
            print("epoch:", epoch, "loss:", np.mean(loss_lst), "acc:", round(acc, 3),
                  f"TP: {TP} / {len(train_loader.dataset)}")

        # Save the model (create the target directory first if it is missing)
        os.makedirs(save_dst, exist_ok=True)
        torch.save(model.state_dict(), os.path.join(save_dst, f"{optim_name}_acc_{round(acc, 2)}.pth"))
        print(f"model saved in {save_dst}")

        # Plot the accuracy curve
        plt.plot(np.arange(len(acc_lst)), acc_lst)
        plt.tight_layout()
        plt.show()

    def test(model, test_loader, load_dst="./models/SGD_acc_0.99.pth"):
        TP = 0
        model.load_state_dict(torch.load(load_dst))
        model.eval()
        with torch.no_grad():  # no gradients are needed for evaluation
            for imgs, labels in test_loader:
                y_pred = model(imgs)
                TP += (labels.flatten() == torch.argmax(y_pred, dim=1)).sum().item()

        acc = TP / len(test_loader.dataset)
        print("acc:", round(acc, 4), f"TP: {TP} / {len(test_loader.dataset)}")

    def draw(model, test_loader, load_dst="./models/SGD_acc_0.99.pth"):
        model.load_state_dict(torch.load(load_dst))
        # Take the first batch from the test loader.
        imgs, labels = next(iter(test_loader))
        with torch.no_grad():
            y_pred = model(imgs)

        # Show the first 30 images of the batch with their predicted labels.
        for i in range(30):
            plt.subplot(5, 6, i + 1)
            plt.tight_layout()
            plt.imshow(imgs[i][0], cmap='gray', interpolation='none')
            plt.title("p: {}".format(y_pred.argmax(dim=1)[i].item()))
            plt.xticks([])
            plt.yticks([])
        plt.show()

    if __name__ == '__main__':
        train(model, train_loader)
        # test(model, test_loader, load_dst="./models/Adam_acc_0.99.pth")
        # draw(model, test_loader, load_dst="./models/Adam_acc_0.99.pth")

Results

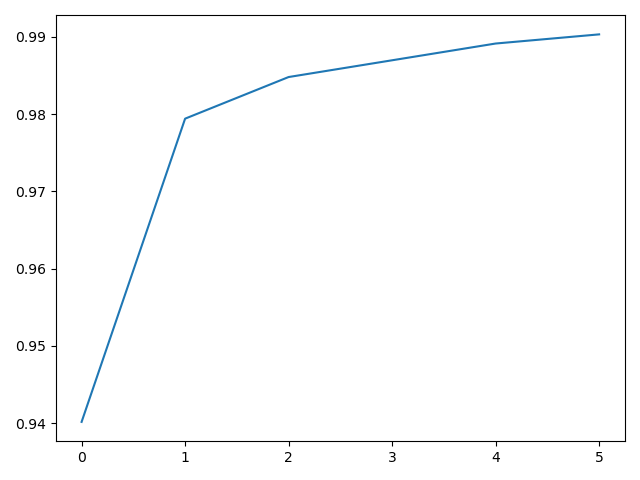

- Accuracy curve:

- Test results:

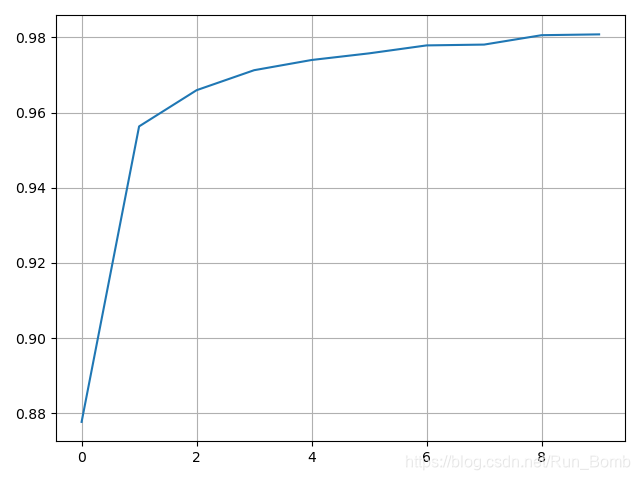

Homework

Build a slightly more complex CNN

    class Model(nn.Module):
        def __init__(self):
            super(Model, self).__init__()
            self.conv1 = nn.Conv2d(1, 24, 1)
            self.conv2 = nn.Conv2d(24, 16, 3)
            self.conv3 = nn.Conv2d(16, 8, 3)
            self.linear1 = nn.Linear(32, 64)
            self.linear2 = nn.Linear(64, 128)
            self.linear3 = nn.Linear(128, 10)
            self.mp = nn.MaxPool2d(2)
            self.activate = nn.ReLU()

        def forward(self, x):
            # Keep the batch dimension and flatten the rest into 32 features for the linear layers.
            batch_size = x.size(0)
            x = self.activate(self.mp(self.conv1(x)))  # out: N, 24, 14, 14
            x = self.activate(self.mp(self.conv2(x)))  # out: N, 16, 6, 6
            x = self.activate(self.mp(self.conv3(x)))  # out: N, 8, 2, 2
            x = x.view(batch_size, -1)  # N, 32
            x = self.linear1(x)
            x = self.linear2(x)
            x = self.linear3(x)
            return x
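To see where the 32 input features of the first linear layer come from, the side length can be traced layer by layer. This is a rough sketch in plain Python that assumes 28x28 MNIST input, no padding, and 2x2 pooling with stride 2; the helper names `conv_out` and `trace` are invented here.

```python
def conv_out(size, kernel_size, stride=1, padding=0):
    """Output side length of a convolution or pooling window (no dilation)."""
    return (size + 2 * padding - kernel_size) // stride + 1


def trace(in_size, layers):
    """layers: list of (name, kernel_size, stride). Returns (name, side length) pairs."""
    sizes = []
    size = in_size
    for name, kernel_size, stride in layers:
        size = conv_out(size, kernel_size, stride)
        sizes.append((name, size))
    return sizes


layers = [
    ("conv1", 1, 1), ("pool1", 2, 2),
    ("conv2", 3, 1), ("pool2", 2, 2),
    ("conv3", 3, 1), ("pool3", 2, 2),
]
sizes = trace(28, layers)
for name, size in sizes:
    print(name, size)

final_side = sizes[-1][1]
flat_features = 8 * final_side * final_side  # conv3 has 8 output channels
print(flat_features)  # 32
```

The side length goes 28, 14, 12, 6, 4, 2, so the final 8-channel 2x2 map flattens to 32 values.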

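The accuracy is currently lower than that of the previous network; a more complex network may simply need more training epochs. Note also that the three linear layers are stacked without an activation between them, so together they act as a single linear layer.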

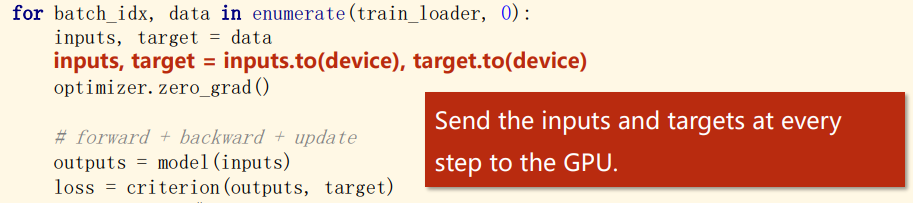

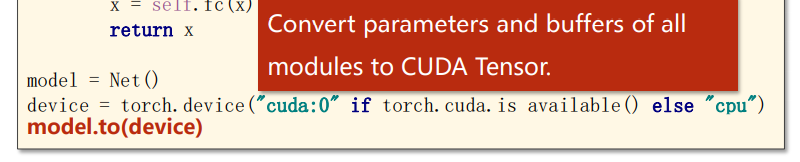

Using the GPU

Only two steps are needed:

- Move the model to the CUDA device

      device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")  # use the GPU if CUDA is available
      model.to(device)

- Move the training data to the CUDA device

      for batch_idx, data in enumerate(train_loader, 0):
          inputs, target = data
          inputs, target = inputs.to(device), target.to(device)
          outputs = model(inputs)
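The two steps fit together in one training step as sketched below. To keep the example self-contained it uses a small stand-in model and random tensors in place of MNIST, both of which are assumptions made only for illustration. It falls back to the CPU when CUDA is not available.

```python
import torch
from torch import nn, optim

device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

# Hypothetical stand-in model; any nn.Module is moved the same way.
model = nn.Sequential(nn.Flatten(), nn.Linear(28 * 28, 10)).to(device)
criterion = nn.CrossEntropyLoss()
optimizer = optim.SGD(model.parameters(), lr=0.01, momentum=0.5)

# Random tensors standing in for one MNIST mini-batch.
inputs = torch.randn(64, 1, 28, 28)
target = torch.randint(0, 10, (64,))

# Inputs and labels must be on the same device as the model's parameters.
inputs, target = inputs.to(device), target.to(device)

outputs = model(inputs)
loss = criterion(outputs, target)
optimizer.zero_grad()
loss.backward()
optimizer.step()

print(outputs.shape, outputs.device.type)
```

Creating the optimizer after the model has been moved keeps its parameter references on the target device. Scalars read with `.item()` come back as ordinary Python numbers, so accuracy bookkeeping needs no extra transfer code.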