Hardware limitations really are a pain point in machine learning, and this is only an entry-level project.

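This post classifies One Piece characters with a CNN and with VGG16. I built a VGG16 network by hand and compared it with a transfer-learning VGG16. I also describe a few odd problems that came up along the way.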

1. Import libraries

```python
import numpy as np
import tensorflow as tf
import matplotlib.pyplot as plt
import os, PIL, pathlib
from tensorflow import keras
from tensorflow.keras import layers, models, Sequential, Input
from tensorflow.keras.models import Model
from tensorflow.keras.layers import Conv2D, MaxPooling2D, Dense, Flatten, Dropout
```

2. Data processing

Dataset folder:

```python
data_dir = "E:/tmp/.keras/datasets/hzw_photos"
data_dir = pathlib.Path(data_dir)
```

Build an ImageDataGenerator to preprocess the images. It handles both normalization and data augmentation:

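```python
train_data_gen = tf.keras.preprocessing.image.ImageDataGenerator(
    rescale=1./255,        # normalize pixel values to [0, 1]
    rotation_range=45,     # random rotation of up to ±45 degrees
    shear_range=0.2,       # random shear (Keras interprets this value in degrees)
    zoom_range=0.2,        # random zoom between 0.8x and 1.2x
    validation_split=0.2,  # hold out 20% of the images (8:2 split)
    horizontal_flip=True   # random horizontal flip
)
```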
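The arguments above only define ranges. A new random transform is drawn for every image. The sketch below imitates that sampling in plain Python. `sample_augmentation` is a hypothetical helper, not a Keras function, and it assumes uniform sampling within each range as the Keras documentation describes. Note that Keras reads `shear_range` in degrees, so `0.2` produces only a very slight shear.

```python
import random

def sample_augmentation(rotation_range=45, shear_range=0.2, zoom_range=0.2,
                        horizontal_flip=True, rng=random):
    """Hypothetical helper: draw one set of transform parameters from the
    ranges passed to ImageDataGenerator (uniform sampling assumed)."""
    theta = rng.uniform(-rotation_range, rotation_range)  # rotation angle, degrees
    shear = rng.uniform(-shear_range, shear_range)        # shear angle, degrees
    zx = rng.uniform(1 - zoom_range, 1 + zoom_range)      # zoom factor along x
    zy = rng.uniform(1 - zoom_range, 1 + zoom_range)      # zoom factor along y
    flip = horizontal_flip and rng.random() < 0.5         # mirror about half the images
    return {"theta": theta, "shear": shear, "zx": zx, "zy": zy, "flip": flip}

rng = random.Random(0)
for _ in range(3):
    print(sample_augmentation(rng=rng))
```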

Split the data 8:2 into a training set and a validation set (named test_ds below):

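```python
# height, width and batch_size are set in section 3 (hyperparameters);
# define them before running this cell.
train_ds = train_data_gen.flow_from_directory(
    directory=data_dir,
    target_size=(height, width),
    batch_size=batch_size,
    shuffle=True,
    class_mode='categorical',
    subset='training'
)

# Note: the validation subset comes from the same generator, so it is also
# randomly augmented. A second generator that uses only rescale and
# validation_split would give an un-augmented validation set.
test_ds = train_data_gen.flow_from_directory(
    directory=data_dir,
    target_size=(height, width),
    batch_size=batch_size,
    shuffle=True,
    class_mode='categorical',
    subset='validation'
)
```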

Output:

```text
Found 499 images belonging to 7 classes.
Found 122 images belonging to 7 classes.
```
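The two sets total 499 + 122 = 621 images, but 20% of 621 would be about 124. My understanding of the Keras implementation, which the output above does not itself show, is that `flow_from_directory` applies `validation_split` to each class folder separately. It sends the first files in sorted order to validation and rounds down. `per_class_split` is a hypothetical helper, and the class sizes are made up for illustration.

```python
def per_class_split(class_counts, validation_split=0.2):
    """Hypothetical helper: split each class folder on its own, sending the first
    int(validation_split * n) files to validation and the rest to training."""
    val = [int(validation_split * n) for n in class_counts]
    train = [n - v for n, v in zip(class_counts, val)]
    return sum(train), sum(val)

# Made-up class sizes, only to show the per-class rounding
print(per_class_split([10, 12, 14]))  # (30, 6): 6 instead of 0.2 * 36 = 7.2
```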

The 7 classes are:

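```python
# '*' matches zero or more characters, so this lists every entry in data_dir;
# keep only the class subfolders, sorted the way flow_from_directory sorts them
all_images_paths = sorted(p for p in data_dir.glob('*') if p.is_dir())
# Use the folder name directly instead of splitting a Windows path on "\\"
all_label_names = [path.name for path in all_images_paths]
print(all_label_names)
```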

Output: ['lufei', 'luobin', 'namei', 'qiaoba', 'shanzhi', 'suolong', 'wusuopu'] (Luffy, Robin, Nami, Chopper, Sanji, Zoro and Usopp)
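`flow_from_directory` numbers the classes in alphanumeric order of the subfolder names. With `class_mode='categorical'`, each label becomes a one-hot vector of length 7. The real mapping is available as `train_ds.class_indices`. The sketch below rebuilds it in plain Python to show what the targets look like. `one_hot` is a hypothetical helper.

```python
# Class names as printed above (pinyin folder names)
class_names = ['lufei', 'luobin', 'namei', 'qiaoba', 'shanzhi', 'suolong', 'wusuopu']

# Assumption: classes are numbered in sorted order of the folder names
class_indices = {name: i for i, name in enumerate(sorted(class_names))}

def one_hot(name, class_indices):
    """Hypothetical helper: the target vector class_mode='categorical' yields."""
    vec = [0] * len(class_indices)
    vec[class_indices[name]] = 1
    return vec

print(class_indices)
print(one_hot('namei', class_indices))  # [0, 0, 1, 0, 0, 0, 0]
```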

3. Hyperparameters

```python
height = 224
width = 224
batch_size = 64
epochs = 20
```
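With only 499 training images, these settings produce few weight updates per epoch. The quick calculation below assumes the iterator counts the last partial batch as a full step, as Keras iterators do. `updates_per_epoch` is a hypothetical helper.

```python
import math

def updates_per_epoch(num_images, batch_size):
    # The last batch may be smaller than batch_size, so round up
    return math.ceil(num_images / batch_size)

train_images, val_images = 499, 122  # from the "Found ... images" output
for bs in (64, 32):
    print(f"batch_size={bs}: {updates_per_epoch(train_images, bs)} updates/epoch, "
          f"{updates_per_epoch(val_images, bs)} validation batches")
print("steps in 20 epochs at batch_size=64:", 20 * updates_per_epoch(train_images, 64))
```

At batch size 64, 20 epochs therefore add up to only 160 gradient steps.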

4. CNN

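The CNN has four convolution + pooling blocks, followed by Flatten and three fully connected layers. The first two blocks use max pooling and the last two use average pooling.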

```python
model = tf.keras.Sequential([
    tf.keras.layers.Conv2D(16, 3, padding="same", activation="relu", input_shape=(height, width, 3)),
    tf.keras.layers.MaxPooling2D(),
    tf.keras.layers.Conv2D(32, 3, padding="same", activation="relu"),
    tf.keras.layers.MaxPooling2D(),
    tf.keras.layers.Conv2D(64, 3, padding="same", activation="relu"),
    tf.keras.layers.AveragePooling2D(),
    tf.keras.layers.Conv2D(128, 3, padding="same", activation="relu"),
    tf.keras.layers.AveragePooling2D(),
    tf.keras.layers.Flatten(),
    tf.keras.layers.Dense(1024, activation="relu"),
    tf.keras.layers.Dense(512, activation="relu"),
    tf.keras.layers.Dense(7, activation="softmax")
])
```
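To see where this CNN's parameters sit, the sketch below traces the feature-map size through the four conv/pool blocks and counts weights and biases by hand. It is plain Python and the helper names are hypothetical. It assumes `padding="same"` keeps the spatial size and that every 2×2 pooling layer halves it.

```python
def conv_params(k, c_in, c_out):
    # k*k*c_in kernel weights per filter, plus one bias per filter
    return k * k * c_in * c_out + c_out

def dense_params(n_in, n_out):
    # weight matrix plus bias vector
    return n_in * n_out + n_out

size, channels, total = 224, 3, 0
for filters in (16, 32, 64, 128):   # the four Conv2D(3x3, padding="same") layers
    total += conv_params(3, channels, filters)
    channels = filters
    size //= 2                      # each 2x2 pooling layer halves height and width

n_in = size * size * channels       # length of the Flatten output
for n_out in (1024, 512, 7):        # the three Dense layers
    total += dense_params(n_in, n_out)
    n_in = n_out

print(size, size * size * channels, total)  # 14 25088 26316967
```

The first `Dense(1024)` layer holds over 97% of the roughly 26.3 million parameters. This is typical when `Flatten` feeds a wide dense layer.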

Compile with the Adam optimizer at its Keras default learning rate of 0.001:

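```python
model.compile(optimizer="adam",
              # Note: the model ends in a softmax over 7 one-hot classes, so
              # CategoricalCrossentropy() (from_logits=False) would be the usual
              # choice; this is the setting used for the results below.
              loss=tf.keras.losses.BinaryCrossentropy(from_logits=True),
              metrics=["acc"])
```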

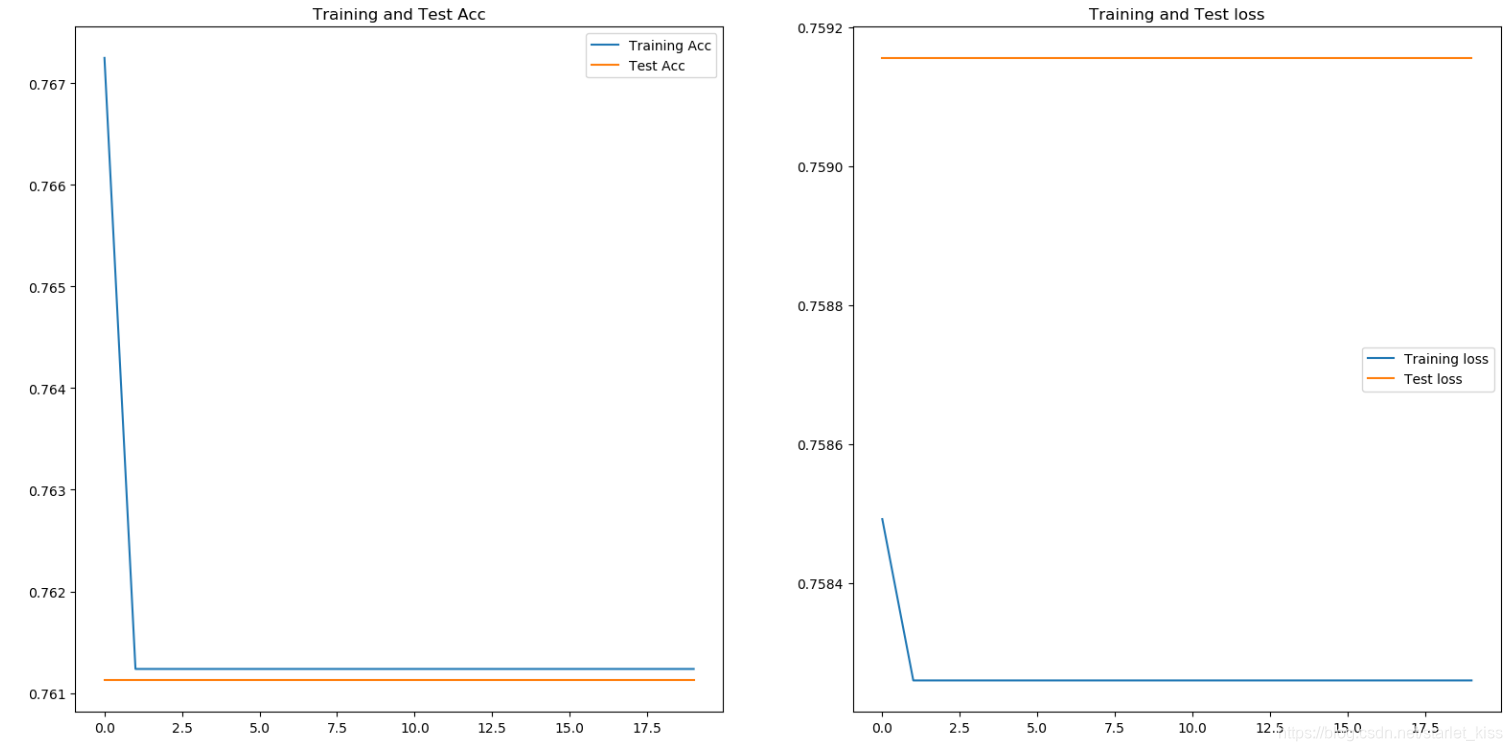

Results:

Honestly, I had never seen this before: after 20 epochs the model had learned almost nothing.
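One plausible contributor to the stalled training, not verified in this experiment, is the loss setup. The model already outputs softmax probabilities, but `BinaryCrossentropy(from_logits=True)` treats its input as logits and applies a sigmoid first. If the probabilities were squashed a second time, every output would land between 0.5 and about 0.73, and the loss could never fall much below ln 2. Newer TensorFlow versions may detect a softmax or sigmoid output, warn, and use the pre-activation values instead, so the exact effect depends on the version. The arithmetic in plain Python follows; the helpers are hypothetical.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def mean_bce(y_true, p):
    """Binary cross-entropy averaged over all outputs (p are probabilities)."""
    return -sum(t * math.log(q) + (1 - t) * math.log(1 - q)
                for t, q in zip(y_true, p)) / len(p)

def bce_from_logits(y_true, z):
    """What from_logits=True computes: a sigmoid first, then binary cross-entropy."""
    return mean_bce(y_true, [sigmoid(v) for v in z])

y_true = [1, 0, 0, 0, 0, 0, 0]   # one-hot target, 7 classes
perfect = [1, 0, 0, 0, 0, 0, 0]  # best possible softmax output
wrong = [0, 1, 0, 0, 0, 0, 0]    # confidently wrong softmax output

print(bce_from_logits(y_true, perfect))  # about 0.639, the lowest reachable value
print(bce_from_logits(y_true, wrong))    # about 0.782
```

Even a perfect prediction scores about 0.639, and a confidently wrong one scores about 0.782. The loss therefore barely separates good outputs from bad ones. Pairing the softmax output with `tf.keras.losses.CategoricalCrossentropy()` avoids this issue.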

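Following a blog post by a more experienced practitioner, I changed the optimizer by lowering Adam's learning rate to 1e-4: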

```python
opt = tf.keras.optimizers.Adam(learning_rate=1e-4)
model.compile(optimizer=opt,
              loss=tf.keras.losses.BinaryCrossentropy(from_logits=True),
              metrics=["acc"])
```

Results:

Both accuracy and loss improved.
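One possible reading of why the lower learning rate helped is that Adam's update size depends mainly on the learning rate, not on the gradient's magnitude. The sketch computes the textbook Adam update (Kingma and Ba) for the first step only. Keras's own implementation differs in small details, such as where epsilon enters. `adam_first_step` is a hypothetical helper.

```python
import math

def adam_first_step(grad, lr, beta1=0.9, beta2=0.999, eps=1e-7):
    """Size of Adam's very first parameter update (textbook form, step t = 1)."""
    m = (1 - beta1) * grad          # first-moment (mean) estimate
    v = (1 - beta2) * grad ** 2     # second-moment (uncentered variance) estimate
    m_hat = m / (1 - beta1)         # bias correction for t = 1
    v_hat = v / (1 - beta2)
    return lr * m_hat / (math.sqrt(v_hat) + eps)

for g in (0.5, 0.001):
    print(g, adam_first_step(g, 1e-3), adam_first_step(g, 1e-4))
```

Whatever the gradient, the first step is about `lr`. Going from the default 0.001 to 1e-4 therefore shrinks every early update tenfold, which may help a freshly initialized network avoid overshooting.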

5. VGG16

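VGG-16 is named for its 16 weight layers: 13 convolutional layers and 3 fully connected layers. Pooling layers have no weights and are not counted.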
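You can check the layer count against the standard VGG16 configuration, which is the same block1 to block5 layout used in the hand-built model later. The sketch below also counts the parameters of the 13 convolutional layers (3×3 kernels, weights plus biases). The names are illustrative.

```python
# Numbers are Conv2D filter counts (3x3, padding="same"); "M" is 2x2 max pooling
VGG16_CFG = [64, 64, "M", 128, 128, "M", 256, 256, 256, "M",
             512, 512, 512, "M", 512, 512, 512, "M"]
FC_LAYERS = 3  # fc1, fc2 and the prediction layer

conv_layers = sum(1 for x in VGG16_CFG if x != "M")
weight_layers = conv_layers + FC_LAYERS

c_in, conv_params = 3, 0
for x in VGG16_CFG:
    if x == "M":
        continue                           # pooling has no weights
    conv_params += 3 * 3 * c_in * x + x    # kernel weights + biases
    c_in = x

print(conv_layers, weight_layers, conv_params)  # 13 16 14714688
```

The 14,714,688 figure is the size of the convolutional base that `tf.keras.applications.VGG16(include_top=False)` loads. Setting `conv_base.trainable = False` freezes exactly these parameters in the transfer-learning setup.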

1. Transfer-learning VGG16

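```python
# Load VGG16 with ImageNet weights, without its fully connected top
conv_base = tf.keras.applications.VGG16(weights='imagenet', include_top=False)

# Freeze the convolutional base so only the new head is trained
conv_base.trainable = False

# Build the model
model = tf.keras.Sequential()
model.add(conv_base)
model.add(tf.keras.layers.GlobalAveragePooling2D())
model.add(tf.keras.layers.Dense(1024, activation='relu'))
model.add(tf.keras.layers.Dense(7, activation='sigmoid'))

opt = tf.keras.optimizers.Adam(learning_rate=1e-4)
# Note: the sigmoid output already gives probabilities, so from_logits=True does
# not match it; softmax + CategoricalCrossentropy() is the usual pairing for
# 7 mutually exclusive classes. Kept as in the original run.
model.compile(optimizer=opt,
              loss=tf.keras.losses.BinaryCrossentropy(from_logits=True),
              metrics=["acc"])

history = model.fit(
    train_ds,
    validation_data=test_ds,
    epochs=epochs
)
```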

For this run, epochs was set to 10 (instead of the 20 in section 3) and batch_size was set to 64.
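Because `conv_base` is frozen, only the new head is trained. The quick count below is plain Python, and `dense_params` is a hypothetical helper. It assumes `GlobalAveragePooling2D` reduces VGG16's 512-channel feature map to 512 values, whatever the input resolution.

```python
def dense_params(n_in, n_out):
    return n_in * n_out + n_out  # weight matrix + bias vector

# GlobalAveragePooling2D: (H, W, 512) feature map -> 512 values
head = dense_params(512, 1024) + dense_params(1024, 7)
print(head)  # 532487
```

So about 0.53 million parameters are trained on top of roughly 14.7 million frozen ones.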

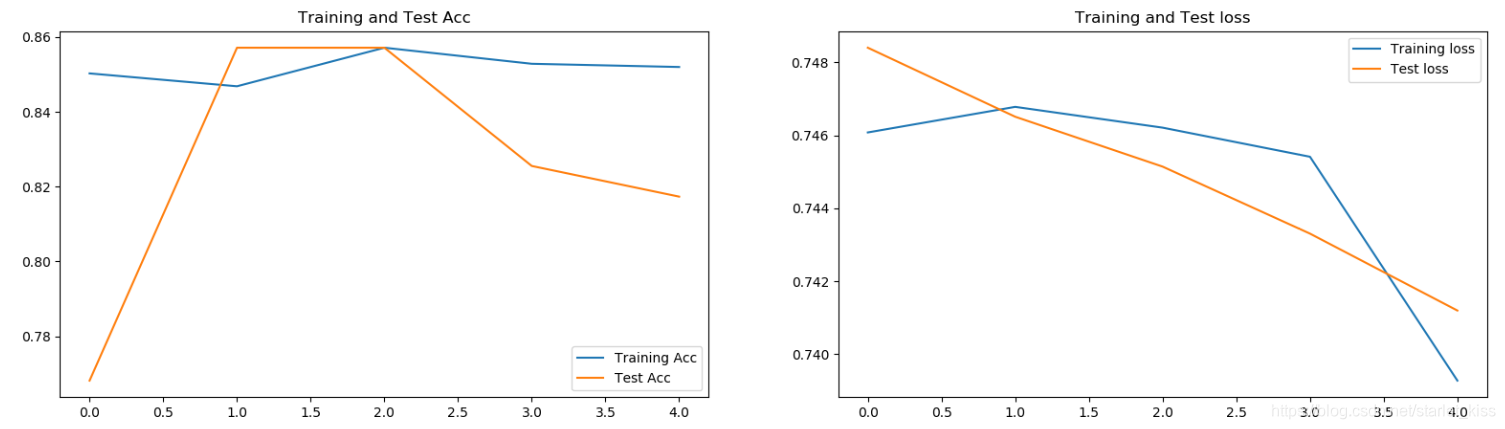

Results:

Accuracy stopped improving after the second epoch and stayed below the CNN's, although the loss did keep decreasing.
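One plausible reason the frozen VGG16 fell behind, not tested here, is an input mismatch. The ImageNet weights expect inputs prepared by `tf.keras.applications.vgg16.preprocess_input`, which converts RGB to BGR and subtracts per-channel ImageNet means without scaling. The generator in section 2 instead feeds values rescaled to [0, 1]. The sketch compares the two for a single pixel. The helper names are hypothetical, and the mean values are the ones Keras uses for its "caffe" preprocessing mode.

```python
# ImageNet channel means in B, G, R order ("caffe"-style preprocessing)
IMAGENET_BGR_MEAN = (103.939, 116.779, 123.68)

def rescale_like_generator(rgb):
    """What the ImageDataGenerator in section 2 feeds the network: RGB / 255."""
    return tuple(c / 255.0 for c in rgb)

def vgg16_caffe_preprocess(rgb):
    """Sketch of vgg16.preprocess_input for one pixel: RGB -> BGR, subtract means."""
    bgr = rgb[::-1]
    return tuple(c - m for c, m in zip(bgr, IMAGENET_BGR_MEAN))

pixel = (255, 128, 0)  # an orange pixel, RGB in 0-255
print(rescale_like_generator(pixel))  # values in [0, 1]
print(vgg16_caffe_preprocess(pixel))  # about (-103.939, 11.221, 131.32)
```

To match the pretrained weights, you could build the generator with `preprocessing_function=tf.keras.applications.vgg16.preprocess_input` instead of `rescale=1./255`.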

2. Hand-built VGG16

```python
def VGG16(nb_classes, input_shape):
    input_ten = Input(shape=input_shape)
    # Block 1
    x = Conv2D(64, (3, 3), activation='relu', padding='same', name='block1_conv1')(input_ten)
    x = Conv2D(64, (3, 3), activation='relu', padding='same', name='block1_conv2')(x)
    x = MaxPooling2D((2, 2), strides=(2, 2), name='block1_pool')(x)
    # Block 2
    x = Conv2D(128, (3, 3), activation='relu', padding='same', name='block2_conv1')(x)
    x = Conv2D(128, (3, 3), activation='relu', padding='same', name='block2_conv2')(x)
    x = MaxPooling2D((2, 2), strides=(2, 2), name='block2_pool')(x)
    # Block 3
    x = Conv2D(256, (3, 3), activation='relu', padding='same', name='block3_conv1')(x)
    x = Conv2D(256, (3, 3), activation='relu', padding='same', name='block3_conv2')(x)
    x = Conv2D(256, (3, 3), activation='relu', padding='same', name='block3_conv3')(x)
    x = MaxPooling2D((2, 2), strides=(2, 2), name='block3_pool')(x)
    # Block 4
    x = Conv2D(512, (3, 3), activation='relu', padding='same', name='block4_conv1')(x)
    x = Conv2D(512, (3, 3), activation='relu', padding='same', name='block4_conv2')(x)
    x = Conv2D(512, (3, 3), activation='relu', padding='same', name='block4_conv3')(x)
    x = MaxPooling2D((2, 2), strides=(2, 2), name='block4_pool')(x)
    # Block 5
    x = Conv2D(512, (3, 3), activation='relu', padding='same', name='block5_conv1')(x)
    x = Conv2D(512, (3, 3), activation='relu', padding='same', name='block5_conv2')(x)
    x = Conv2D(512, (3, 3), activation='relu', padding='same', name='block5_conv3')(x)
    x = MaxPooling2D((2, 2), strides=(2, 2), name='block5_pool')(x)
    # Block 6: fully connected classifier
    x = Flatten()(x)
    x = Dense(4096, activation='relu', name='fc1')(x)
    x = Dense(4096, activation='relu', name='fc2')(x)
    output_ten = Dense(nb_classes, activation='softmax', name='predictions')(x)
    model = Model(input_ten, output_ten)
    return model

model = VGG16(7, (height, width, 3))
model.summary()
```
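Trained from scratch, this network likely has far more parameters than 499 training images can constrain. It is also heavy on memory, which is consistent with the hardware limits that forced a small epoch count here. The rough count below is plain Python. It assumes 224×224 inputs and float32 weights, and it reuses the known 14,714,688 parameters of VGG16's 13 conv layers. `dense_params` is a hypothetical helper.

```python
def dense_params(n_in, n_out):
    return n_in * n_out + n_out  # weight matrix + bias vector

CONV_BASE_PARAMS = 14_714_688       # block1_conv1 .. block5_conv3

flat = 7 * 7 * 512                  # 224 halved by five 2x2 poolings -> 7x7x512
head = (dense_params(flat, 4096)    # fc1
        + dense_params(4096, 4096)  # fc2
        + dense_params(4096, 7))    # predictions
total = CONV_BASE_PARAMS + head

weights_mb = total * 4 / 1e6        # float32 = 4 bytes per parameter
adam_mb = 3 * weights_mb            # weights + Adam's two moment estimates

print(flat, head, total)            # 25088 119574535 134289223
print(round(weights_mb), round(adam_mb))  # 537 1611 (MB)
```

That works out to roughly 269,000 parameters per training image, with more than 100 million in `fc1` alone. If the layers are built as above, `model.summary()` should report the same total.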

Because of hardware limits, epochs was set to 5 and batch_size was set to 32.

I can't work out how this result came about. Corrections from anyone more experienced are very welcome.

To sum up:

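This experiment showed something I had not run into before. In earlier experiments the only problem was overfitting, and data augmentation improved that a lot. This time I used augmentation from the start, so there was no overfitting. Instead, accuracy stayed flat rather than rising with more epochs. Lowering the optimizer's learning rate fixed this.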

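In earlier experiments, VGG16 usually trained better than my own CNN, but not this time: its accuracy was lower than the CNN's. Of the two VGG16 variants, the transfer-learning one did better than the hand-built one.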

I suppose this is part of the charm of deep learning. As a beginner I still have a long way to go, so let's all keep at it.

Keep going!