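These are my notes and summary from working through the official PaddlePaddle tutorials. The dataset and code are adapted from those tutorials.

Official PaddlePaddle tutorial link:

飞桨 PaddlePaddle: an open-source deep learning platform rooted in industrial practice

1. Overview

As deep learning has progressed, the CNN architectures commonly used in computer vision have appeared roughly in the order LeNet → AlexNet → VGG → GoogLeNet → ResNet. This article applies each of these networks in turn to the same image-classification task and compares the results.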

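2. Dataset

iChallenge-PM is a medical dataset on pathologic myopia (PM). It was released for the iChallenge competition co-organized by Baidu Brain and the Zhongshan Ophthalmic Center of Sun Yat-sen University. It contains fundus (retina) images from 1,200 subjects, split into training, validation, and test sets of 400 images each.

Official dataset website: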

Baidu Research Open-Access Dataset - Introduction

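3. CNN Models

3.1 LeNet

LeNet repeatedly applies convolution kernels and pooling layers to the input. This extracts features from the data while reducing its size.

The table below shows the convolution steps of the network built in this program: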
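The output side length of each convolution or pooling layer is ⌊(n + 2p - k) / s⌋ + 1, where n is the input size, k the kernel size, p the padding, and s the stride. The plain-Python sketch below traces a 224×224 input through the LeNet layers defined in the code that follows. `conv_out` and `lenet_feature_shape` are hypothetical helper names, not Paddle APIs. The trace shows where fc1's `in_features=300000` comes from.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Output side length of a conv or pooling layer (floor division)."""
    return (size + 2 * padding - kernel) // stride + 1

def lenet_feature_shape(size=224):
    """Trace the spatial size through the LeNet layers used in this article."""
    size = conv_out(size, 5)            # conv1: 5x5 kernel, 6 output channels
    size = conv_out(size, 2, stride=2)  # max_pool1: 2x2, stride 2
    size = conv_out(size, 5)            # conv2: 5x5 kernel, 16 output channels
    size = conv_out(size, 2, stride=2)  # max_pool2: 2x2, stride 2
    size = conv_out(size, 4)            # conv3: 4x4 kernel, 120 output channels
    return (120, size, size)

c, h, w = lenet_feature_shape()
print((c, h, w), c * h * w)  # flattened length fed into fc1
```

The spatial size goes 224 → 220 → 110 → 106 → 53 → 50. Flattening [120, 50, 50] gives the 300000 features that fc1 expects.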

As before, the program follows the outline load images → extract data → build the network → configure training. Here is the code:

import cv2
import random
import numpy as np
import os
import paddle
from paddle.nn import Conv2D, MaxPool2D, Linear, Dropout
import paddle.nn.functional as F
import matplotlib.pyplot as plt

# Preprocess an image that has been read in
def transform_img(img):
    # Resize the image to 224x224
    img = cv2.resize(img, (224, 224))
    # cv2 reads images in [H, W, C] layout;
    # transpose them to [C, H, W]
    img = np.transpose(img, (2, 0, 1))  # (2, 0, 1) gives the new order of the axes
    img = img.astype('float32')
    # Pixel values are at most 255; rescale them to [-1.0, 1.0]
    img = img / 255.
    img = img * 2.0 - 1.0
    return img

# Data reader for the training set
def data_loader(datadir, batch_size=10, mode='train'):
    # List every file under datadir; each one will be read
    filenames = os.listdir(datadir)

    def reader():
        if mode == 'train':
            # Shuffle the sample order during training
            random.shuffle(filenames)
        batch_imgs = []
        batch_labels = []
        for name in filenames:
            filepath = os.path.join(datadir, name)
            img = cv2.imread(filepath)
            img = transform_img(img)
            if name[0] == 'H' or name[0] == 'N':
                # File names starting with H are high myopia, N are normal vision.
                # Neither is pathologic, so both are negative samples with label 0
                label = 0
            elif name[0] == 'P':
                # File names starting with P are pathologic myopia: positive samples, label 1
                label = 1
            else:
                raise ValueError('Unexpected file name: {}'.format(name))
            # Add each sample to the current batch
            batch_imgs.append(img)
            batch_labels.append(label)
            if len(batch_imgs) == batch_size:
                # When the batch reaches batch_size samples,
                # yield it as one mini-batch from the generator
                imgs_array = np.array(batch_imgs).astype('float32')
                labels_array = np.array(batch_labels).astype('float32').reshape(-1, 1)
                yield imgs_array, labels_array
                batch_imgs = []
                batch_labels = []
        if len(batch_imgs) > 0:
            # Pack the remaining samples (fewer than batch_size) into a last mini-batch
            imgs_array = np.array(batch_imgs).astype('float32')
            labels_array = np.array(batch_labels).astype('float32').reshape(-1, 1)
            yield imgs_array, labels_array

    return reader

# Data reader for the validation set
def valid_data_loader(datadir, csvfile, batch_size=64, mode='valid'):
    # Training labels come from the file names; validation labels are read from csvfile
    with open(csvfile, encoding='utf-8') as f:
        filelists = f.readlines()

    def reader():
        batch_imgs = []
        batch_labels = []
        for line in filelists[1:]:  # skip the header row
            line = line.strip().split(',')  # split the row into a list of fields
            name = line[1]
            label = int(line[2])
            # Load the image by file name and preprocess it
            filepath = os.path.join(datadir, name)
            img = cv2.imread(filepath)
            img = transform_img(img)
            # Add each sample to the current batch
            batch_imgs.append(img)
            batch_labels.append(label)
            if len(batch_imgs) == batch_size:
                # When the batch reaches batch_size samples,
                # yield it as one mini-batch from the generator
                imgs_array = np.array(batch_imgs).astype('float32')
                labels_array = np.array(batch_labels).astype('float32').reshape(-1, 1)
                yield imgs_array, labels_array
                batch_imgs = []
                batch_labels = []
        if len(batch_imgs) > 0:
            # Pack the remaining samples (fewer than batch_size) into a last mini-batch
            imgs_array = np.array(batch_imgs).astype('float32')
            labels_array = np.array(batch_labels).astype('float32').reshape(-1, 1)
            yield imgs_array, labels_array

    return reader

DATADIR = 'D:/Pycharm 2020/Data set/Eye disease recognition/train/PALM-Training400/'
DATADIR2 = 'D:/Pycharm 2020/Data set/Eye disease recognition/validation/PALM-Validation400/'
CSVFILE = 'D:/Pycharm 2020/Data set/Eye disease recognition/labels.csv'  # must be a UTF-8 encoded CSV file

# Training procedure
def train_pm(model, optimizer):
    # Train on GPU 0. To switch between GPU and CPU, use instead:
    # use_gpu = True
    # paddle.set_device('gpu:0') if use_gpu else paddle.set_device('cpu')
    paddle.set_device('gpu:0')
    print('start training ... ')
    model.train()
    epoch_num = 20
    iter = 0
    iters = []
    train_losses = []
    # Create the training and validation data readers
    train_loader = data_loader(DATADIR, batch_size=64, mode='train')
    valid_loader = valid_data_loader(DATADIR2, CSVFILE)
    for epoch in range(epoch_num):  # epochs 0 .. epoch_num-1, epoch_num in total
        for batch_id, data in enumerate(train_loader()):
            x_data, y_data = data
            img = paddle.to_tensor(x_data)
            label = paddle.to_tensor(y_data)
            # Forward pass to get the predictions
            logits = model(img)
            loss = F.binary_cross_entropy_with_logits(logits, label)
            avg_loss = paddle.mean(loss)
            if batch_id % 2 == 0:  # record the loss every 2 batches
                iters.append(iter)
                train_losses.append(avg_loss.numpy())
                iter = iter + 2
                print("epoch: {}, batch_id: {}, loss is: {}".format(epoch, batch_id, avg_loss.numpy()))
            # Backpropagate, update the weights, clear the gradients
            avg_loss.backward()
            optimizer.step()
            optimizer.clear_grad()

        model.eval()
        accuracies = []
        losses = []
        for batch_id, data in enumerate(valid_loader()):
            x_data, y_data = data
            img = paddle.to_tensor(x_data)
            label = paddle.to_tensor(y_data)
            # Forward pass to get the predictions
            logits = model(img)
            # Binary classification: the sigmoid output is split into two classes at 0.5
            # Compute the predicted probability with sigmoid, and the loss from the logits
            pred = F.sigmoid(logits)
            loss = F.binary_cross_entropy_with_logits(logits, label)
            # Probability of the other class (label 0): 1 - p
            pred2 = pred * (-1.0) + 1.0
            # Concatenate the two class probabilities along axis 1 (the class dimension)
            pred = paddle.concat([pred2, pred], axis=1)
            acc = paddle.metric.accuracy(pred, paddle.cast(label, dtype='int64'))
            accuracies.append(acc.numpy())
            # Average per batch, so that a smaller last batch can still be combined
            losses.append(paddle.mean(loss).numpy())
        print("[validation] accuracy/loss: {}/{}".format(np.mean(accuracies), np.mean(losses)))
        model.train()

        paddle.save(model.state_dict(), 'palm.pdparams')
        paddle.save(optimizer.state_dict(), 'palm.pdopt')
    return iters, train_losses

# Evaluation procedure
def evaluation(model, params_file_path):
    # Run prediction on GPU 0
    paddle.set_device('gpu:0')
    print('start evaluation .......')
    # Load the model parameters
    model_state_dict = paddle.load(params_file_path)
    model.load_dict(model_state_dict)
    model.eval()
    # Note: this evaluates on the training images (DATADIR), whose file names carry the labels
    eval_loader = data_loader(DATADIR, batch_size=10, mode='eval')
    acc_set = []
    avg_loss_set = []
    for batch_id, data in enumerate(eval_loader()):
        x_data, y_data = data
        img = paddle.to_tensor(x_data)
        label = paddle.to_tensor(y_data)
        y_data = y_data.astype(np.int64)
        label_64 = paddle.to_tensor(y_data)
        # Compute the predictions and the accuracy
        prediction, acc = model(img, label_64)
        # Compute the loss
        loss = F.binary_cross_entropy_with_logits(prediction, label)
        avg_loss = paddle.mean(loss)
        acc_set.append(float(acc.numpy()))
        avg_loss_set.append(float(avg_loss.numpy()))
    # Average over all batches
    acc_val_mean = np.array(acc_set).mean()
    avg_loss_val_mean = np.array(avg_loss_set).mean()
    print('loss={}, acc={}'.format(avg_loss_val_mean, acc_val_mean))

# LeNet network definition
class LeNet(paddle.nn.Layer):
    def __init__(self, num_classes=1):
        super(LeNet, self).__init__()
        # Convolution + pooling blocks: each conv layer uses a Sigmoid activation, followed by 2x2 max pooling
        self.conv1 = Conv2D(in_channels=3, out_channels=6, kernel_size=5)
        self.max_pool1 = MaxPool2D(kernel_size=2, stride=2)
        self.conv2 = Conv2D(in_channels=6, out_channels=16, kernel_size=5)
        self.max_pool2 = MaxPool2D(kernel_size=2, stride=2)
        # Third convolution layer
        self.conv3 = Conv2D(in_channels=16, out_channels=120, kernel_size=4)
        # Fully connected layers; the first one has 64 output neurons.
        # For a 224x224 input, conv3 outputs [120, 50, 50], i.e. 300000 features
        self.fc1 = Linear(in_features=300000, out_features=64)
        # The second FC layer outputs one value per class
        self.fc2 = Linear(in_features=64, out_features=num_classes)

    # Forward pass
    def forward(self, x, label=None):
        x = self.conv1(x)
        x = F.sigmoid(x)
        x = self.max_pool1(x)
        x = self.conv2(x)
        x = F.sigmoid(x)
        x = self.max_pool2(x)
        x = self.conv3(x)
        x = F.sigmoid(x)
        x = paddle.reshape(x, [x.shape[0], -1])  # flatten to [N, C*H*W]
        x = self.fc1(x)
        x = F.sigmoid(x)
        x = self.fc2(x)
        if label is not None:
            # With a single logit, build two-class probabilities [1 - p, p] before computing accuracy
            pred = F.sigmoid(x)
            pred = paddle.concat([1.0 - pred, pred], axis=1)
            acc = paddle.metric.accuracy(input=pred, label=label)
            return x, acc
        else:
            return x

# Create the model
model = LeNet(num_classes=1)
# Start training
opt = paddle.optimizer.Momentum(learning_rate=0.001, momentum=0.9, parameters=model.parameters())
iters, train_losses = train_pm(model, optimizer=opt)
evaluation(model, params_file_path="palm.pdparams")

# Plot the training loss curve
plt.figure()
plt.title("LeNet-train loss", fontsize=24)
plt.xlabel("iter", fontsize=14)
plt.ylabel("loss", fontsize=14)
plt.plot(iters, train_losses, color='red', label='train loss')
plt.grid()
plt.show()

3.2 AlexNet

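AlexNet improves on LeNet in four main ways: the network is deeper, it uses ReLU activations, it adds dropout layers, and it uses data augmentation. Data augmentation is not implemented in the code below.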
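Two of these changes are easy to show in NumPy. The sketch below is illustrative only; the helper names are my own and are not Paddle APIs. ReLU is max(x, 0) applied elementwise. Dropout is implemented here as "inverted" dropout: during training, each unit is zeroed with probability p and the survivors are scaled by 1/(1 - p), so nothing has to be rescaled at inference time. As far as I know, this matches the `upscale_in_train` mode that `paddle.nn.Dropout` uses by default.

```python
import numpy as np

def relu(x):
    """ReLU activation: max(x, 0) elementwise."""
    return np.maximum(x, 0.0)

def dropout_upscale_in_train(x, p, training, rng):
    """Inverted dropout: during training, zero each unit with probability p and
    scale the kept units by 1/(1-p); at inference, return x unchanged."""
    if not training or p == 0.0:
        return x
    keep = rng.random(x.shape) >= p  # True for units that are kept
    return x * keep / (1.0 - p)

rng = np.random.default_rng(0)
h = relu(np.array([[-1.0, 2.0, 3.0, -4.0]]))
print(h)
print(dropout_upscale_in_train(h, 0.5, True, rng))   # random units zeroed, rest doubled
print(dropout_upscale_in_train(h, 0.5, False, rng))  # unchanged at inference
```

The 1/(1 - p) scaling keeps the expected activation the same in training and inference.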

The table below shows the convolution steps of AlexNet:
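The same output-size formula, ⌊(n + 2p - k) / s⌋ + 1, explains fc1's `in_features=12544` in the AlexNet code below. This plain-Python trace uses hypothetical helper names. It follows the kernel, stride, and padding values of that code for a 224×224 input:

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Output side length of a conv or pooling layer (floor division)."""
    return (size + 2 * padding - kernel) // stride + 1

def alexnet_feature_shape(size=224):
    """Trace the spatial size through the AlexNet layers used in this article."""
    size = conv_out(size, 11, stride=4, padding=5)  # conv1 -> 56
    size = conv_out(size, 2, stride=2)              # max_pool1 -> 28
    size = conv_out(size, 5, padding=2)             # conv2 keeps 28
    size = conv_out(size, 2, stride=2)              # max_pool2 -> 14
    for _ in range(3):
        size = conv_out(size, 3, padding=1)         # conv3..conv5 keep 14
    size = conv_out(size, 2, stride=2)              # max_pool5 -> 7
    return (256, size, size)

c, h, w = alexnet_feature_shape()
print((c, h, w), c * h * w)
```

Flattening the final [256, 7, 7] feature map gives 12544 features.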

The code is as follows:

import cv2
import random
import numpy as np
import os
import paddle
from paddle.nn import Conv2D, MaxPool2D, Linear, Dropout
import paddle.nn.functional as F
import matplotlib.pyplot as plt

# Preprocess an image that has been read in
def transform_img(img):
    # Resize the image to 224x224
    img = cv2.resize(img, (224, 224))
    # cv2 reads images in [H, W, C] layout;
    # transpose them to [C, H, W]
    img = np.transpose(img, (2, 0, 1))  # (2, 0, 1) gives the new order of the axes
    img = img.astype('float32')
    # Rescale pixel values to [-1.0, 1.0]
    img = img / 255.
    img = img * 2.0 - 1.0
    return img

# Data reader for the training set
def data_loader(datadir, batch_size=10, mode='train'):
    # List every file under datadir; each one will be read
    filenames = os.listdir(datadir)

    def reader():
        if mode == 'train':
            # Shuffle the sample order during training
            random.shuffle(filenames)
        batch_imgs = []
        batch_labels = []
        for name in filenames:
            filepath = os.path.join(datadir, name)
            img = cv2.imread(filepath)
            img = transform_img(img)
            if name[0] == 'H' or name[0] == 'N':
                # File names starting with H are high myopia, N are normal vision.
                # Neither is pathologic, so both are negative samples with label 0
                label = 0
            elif name[0] == 'P':
                # File names starting with P are pathologic myopia: positive samples, label 1
                label = 1
            else:
                raise ValueError('Unexpected file name: {}'.format(name))
            # Add each sample to the current batch
            batch_imgs.append(img)
            batch_labels.append(label)
            if len(batch_imgs) == batch_size:
                # When the batch reaches batch_size samples,
                # yield it as one mini-batch from the generator
                imgs_array = np.array(batch_imgs).astype('float32')
                labels_array = np.array(batch_labels).astype('float32').reshape(-1, 1)
                yield imgs_array, labels_array
                batch_imgs = []
                batch_labels = []
        if len(batch_imgs) > 0:
            # Pack the remaining samples (fewer than batch_size) into a last mini-batch
            imgs_array = np.array(batch_imgs).astype('float32')
            labels_array = np.array(batch_labels).astype('float32').reshape(-1, 1)
            yield imgs_array, labels_array

    return reader

# Data reader for the validation set
def valid_data_loader(datadir, csvfile, batch_size=10, mode='valid'):
    # Training labels come from the file names; validation labels are read from csvfile
    with open(csvfile, encoding='utf-8') as f:
        filelists = f.readlines()

    def reader():
        batch_imgs = []
        batch_labels = []
        for line in filelists[1:]:  # skip the header row
            line = line.strip().split(',')  # split the row into a list of fields
            name = line[1]
            label = int(line[2])
            # Load the image by file name and preprocess it
            filepath = os.path.join(datadir, name)
            img = cv2.imread(filepath)
            img = transform_img(img)
            # Add each sample to the current batch
            batch_imgs.append(img)
            batch_labels.append(label)
            if len(batch_imgs) == batch_size:
                # When the batch reaches batch_size samples,
                # yield it as one mini-batch from the generator
                imgs_array = np.array(batch_imgs).astype('float32')
                labels_array = np.array(batch_labels).astype('float32').reshape(-1, 1)
                yield imgs_array, labels_array
                batch_imgs = []
                batch_labels = []
        if len(batch_imgs) > 0:
            # Pack the remaining samples (fewer than batch_size) into a last mini-batch
            imgs_array = np.array(batch_imgs).astype('float32')
            labels_array = np.array(batch_labels).astype('float32').reshape(-1, 1)
            yield imgs_array, labels_array

    return reader

DATADIR = 'D:/Pycharm 2020/Data set/Eye disease recognition/train/PALM-Training400/'
DATADIR2 = 'D:/Pycharm 2020/Data set/Eye disease recognition/validation/PALM-Validation400/'
CSVFILE = 'D:/Pycharm 2020/Data set/Eye disease recognition/labels.csv'  # must be a UTF-8 encoded CSV file

# Training procedure
def train_pm(model, optimizer):
    # Train on GPU 0. To switch between GPU and CPU, use instead:
    # use_gpu = True
    # paddle.set_device('gpu:0') if use_gpu else paddle.set_device('cpu')
    paddle.set_device('gpu:0')
    print('start training ... ')
    model.train()
    epoch_num = 20
    iter = 0
    iters = []
    train_losses = []
    # Create the training and validation data readers
    train_loader = data_loader(DATADIR, batch_size=64, mode='train')
    valid_loader = valid_data_loader(DATADIR2, CSVFILE, batch_size=64)
    for epoch in range(epoch_num):
        for batch_id, data in enumerate(train_loader()):
            x_data, y_data = data
            img = paddle.to_tensor(x_data)
            label = paddle.to_tensor(y_data)
            # Forward pass to get the predictions
            logits = model(img)
            loss = F.binary_cross_entropy_with_logits(logits, label)
            avg_loss = paddle.mean(loss)
            if batch_id % 2 == 0:  # record the loss every 2 batches
                iters.append(iter)
                train_losses.append(avg_loss.numpy())
                iter = iter + 2
                print("epoch: {}, batch_id: {}, loss is: {}".format(epoch, batch_id, avg_loss.numpy()))
            # Backpropagate, update the weights, clear the gradients
            avg_loss.backward()
            optimizer.step()
            optimizer.clear_grad()

        model.eval()
        accuracies = []
        losses = []
        for batch_id, data in enumerate(valid_loader()):
            x_data, y_data = data
            img = paddle.to_tensor(x_data)
            label = paddle.to_tensor(y_data)
            # Forward pass to get the predictions
            logits = model(img)
            # Binary classification: the sigmoid output is split into two classes at 0.5
            # Compute the predicted probability with sigmoid, and the loss from the logits
            pred = F.sigmoid(logits)
            loss = F.binary_cross_entropy_with_logits(logits, label)
            # Probability of the other class (label 0): 1 - p
            pred2 = pred * (-1.0) + 1.0
            # Concatenate the two class probabilities along axis 1 (the class dimension)
            pred = paddle.concat([pred2, pred], axis=1)
            acc = paddle.metric.accuracy(pred, paddle.cast(label, dtype='int64'))
            accuracies.append(acc.numpy())
            # Average per batch, so that a smaller last batch can still be combined
            losses.append(paddle.mean(loss).numpy())
        print("[validation] accuracy/loss: {}/{}".format(np.mean(accuracies), np.mean(losses)))
        model.train()

        paddle.save(model.state_dict(), 'palm.pdparams')
        paddle.save(optimizer.state_dict(), 'palm.pdopt')
    return iters, train_losses

# Evaluation procedure
def evaluation(model, params_file_path):
    # Run prediction on GPU 0
    use_gpu = True
    paddle.set_device('gpu:0') if use_gpu else paddle.set_device('cpu')
    print('start evaluation .......')
    # Load the model parameters
    model_state_dict = paddle.load(params_file_path)
    model.load_dict(model_state_dict)
    model.eval()
    # Note: this evaluates on the training images (DATADIR), whose file names carry the labels
    eval_loader = data_loader(DATADIR, batch_size=64, mode='eval')
    acc_set = []
    avg_loss_set = []
    for batch_id, data in enumerate(eval_loader()):
        x_data, y_data = data
        img = paddle.to_tensor(x_data)
        label = paddle.to_tensor(y_data)
        y_data = y_data.astype(np.int64)
        label_64 = paddle.to_tensor(y_data)
        # Compute the predictions and the accuracy
        prediction, acc = model(img, label_64)
        # Compute the loss
        loss = F.binary_cross_entropy_with_logits(prediction, label)
        avg_loss = paddle.mean(loss)
        acc_set.append(float(acc.numpy()))
        avg_loss_set.append(float(avg_loss.numpy()))
    # Average over all batches
    acc_val_mean = np.array(acc_set).mean()
    avg_loss_val_mean = np.array(avg_loss_set).mean()
    print('loss={}, acc={}'.format(avg_loss_val_mean, acc_val_mean))

# AlexNet network definition
class AlexNet(paddle.nn.Layer):
    def __init__(self, num_classes=1):
        super(AlexNet, self).__init__()
        # Like LeNet, AlexNet uses convolution and pooling layers to extract image features;
        # unlike LeNet, the activation function is ReLU
        self.conv1 = Conv2D(in_channels=3, out_channels=96, kernel_size=11, stride=4, padding=5)  # padding applies to both height and width (default 0)
        self.max_pool1 = MaxPool2D(kernel_size=2, stride=2)
        self.conv2 = Conv2D(in_channels=96, out_channels=256, kernel_size=5, stride=1, padding=2)
        self.max_pool2 = MaxPool2D(kernel_size=2, stride=2)
        self.conv3 = Conv2D(in_channels=256, out_channels=384, kernel_size=3, stride=1, padding=1)
        self.conv4 = Conv2D(in_channels=384, out_channels=384, kernel_size=3, stride=1, padding=1)
        self.conv5 = Conv2D(in_channels=384, out_channels=256, kernel_size=3, stride=1, padding=1)
        self.max_pool5 = MaxPool2D(kernel_size=2, stride=2)
        # For a 224x224 input, max_pool5 outputs [256, 7, 7], i.e. 12544 features
        self.fc1 = Linear(in_features=12544, out_features=4096)
        self.drop_ratio1 = 0.5
        self.drop1 = Dropout(self.drop_ratio1)
        self.fc2 = Linear(in_features=4096, out_features=4096)
        self.drop_ratio2 = 0.5
        self.drop2 = Dropout(self.drop_ratio2)
        self.fc3 = Linear(in_features=4096, out_features=num_classes)

    def forward(self, x, label=None):
        x = self.conv1(x)
        x = F.relu(x)
        x = self.max_pool1(x)
        x = self.conv2(x)
        x = F.relu(x)
        x = self.max_pool2(x)
        x = self.conv3(x)
        x = F.relu(x)
        x = self.conv4(x)
        x = F.relu(x)
        x = self.conv5(x)
        x = F.relu(x)
        x = self.max_pool5(x)
        x = paddle.reshape(x, [x.shape[0], -1])  # flatten to [N, C*H*W]
        x = self.fc1(x)
        x = F.relu(x)
        # Apply dropout after the fully connected layer to curb overfitting
        x = self.drop1(x)
        x = self.fc2(x)
        x = F.relu(x)
        # Apply dropout after the fully connected layer to curb overfitting
        x = self.drop2(x)
        x = self.fc3(x)
        if label is not None:
            # With a single logit, build two-class probabilities [1 - p, p] before computing accuracy
            pred = F.sigmoid(x)
            pred = paddle.concat([1.0 - pred, pred], axis=1)
            acc = paddle.metric.accuracy(input=pred, label=label)
            return x, acc
        else:
            return x

# Create the model
model = AlexNet()
# Start training
opt = paddle.optimizer.Adam(learning_rate=0.001, parameters=model.parameters())
iters, train_losses = train_pm(model, optimizer=opt)

# Plot the training loss curve
plt.figure()
plt.title("AlexNet-train loss", fontsize=24)
plt.xlabel("iter", fontsize=14)
plt.ylabel("loss", fontsize=14)
plt.plot(iters, train_losses, color='red', label='train loss')
plt.grid()
plt.show()

3.3 VGG

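The main idea of VGG is to stack many simple convolution layers with small kernels (e.g. 3×3). This makes the model very deep and enlarges the receptive field.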
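Stacking 3×3 convolutions enlarges the receptive field cheaply. Each extra stride-1 3×3 layer grows the receptive field by 2 pixels, so two layers cover a 5×5 region and three cover 7×7. The stack also uses fewer weights than a single 5×5 or 7×7 layer with the same channel count C: 18C² versus 25C², and 27C² versus 49C². The plain-Python sketch below ignores pooling and biases. Its helper names are illustrative.

```python
def stacked_receptive_field(num_layers, kernel=3):
    """Receptive field of num_layers stacked stride-1 convolutions of size kernel."""
    return 1 + num_layers * (kernel - 1)

def conv_weights(kernel, channels):
    """Weight count of one kernel x kernel conv mapping channels -> channels (no bias)."""
    return kernel * kernel * channels * channels

C = 256  # example channel count, chosen arbitrarily
for n, big in [(2, 5), (3, 7)]:
    print(n, "x 3x3 layers: receptive field", stacked_receptive_field(n),
          "| weights", n * conv_weights(3, C), "vs one", big, "x", big, "layer:", conv_weights(big, C))
```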

The table below shows the convolution steps of VGG:

The program is as follows:

import cv2
import random
import numpy as np
import os
import paddle
import paddle.nn.functional as F
from paddle.nn import Conv2D, MaxPool2D, BatchNorm2D, Linear, Dropout
import matplotlib.pyplot as plt

# Preprocess an image that has been read in
def transform_img(img):
    # Resize the image to 224x224
    img = cv2.resize(img, (224, 224))
    # cv2 reads images in [H, W, C] layout;
    # transpose them to [C, H, W]
    img = np.transpose(img, (2, 0, 1))  # (2, 0, 1) gives the new order of the axes
    img = img.astype('float32')
    # Rescale pixel values to [-1.0, 1.0]
    img = img / 255.
    img = img * 2.0 - 1.0
    return img

# Data reader for the training set
def data_loader(datadir, batch_size=10, mode='train'):
    # List every file under datadir; each one will be read
    filenames = os.listdir(datadir)

    def reader():
        if mode == 'train':
            # Shuffle the sample order during training
            random.shuffle(filenames)
        batch_imgs = []
        batch_labels = []
        for name in filenames:
            filepath = os.path.join(datadir, name)
            img = cv2.imread(filepath)
            img = transform_img(img)
            if name[0] == 'H' or name[0] == 'N':
                # File names starting with H are high myopia, N are normal vision.
                # Neither is pathologic, so both are negative samples with label 0
                label = 0
            elif name[0] == 'P':
                # File names starting with P are pathologic myopia: positive samples, label 1
                label = 1
            else:
                raise ValueError('Unexpected file name: {}'.format(name))
            # Add each sample to the current batch
            batch_imgs.append(img)
            batch_labels.append(label)
            if len(batch_imgs) == batch_size:
                # When the batch reaches batch_size samples,
                # yield it as one mini-batch from the generator
                imgs_array = np.array(batch_imgs).astype('float32')
                labels_array = np.array(batch_labels).astype('float32').reshape(-1, 1)
                yield imgs_array, labels_array
                batch_imgs = []
                batch_labels = []
        if len(batch_imgs) > 0:
            # Pack the remaining samples (fewer than batch_size) into a last mini-batch
            imgs_array = np.array(batch_imgs).astype('float32')
            labels_array = np.array(batch_labels).astype('float32').reshape(-1, 1)
            yield imgs_array, labels_array

    return reader

# Data reader for the validation set
def valid_data_loader(datadir, csvfile, batch_size=10, mode='valid'):
    # Training labels come from the file names; validation labels are read from csvfile
    with open(csvfile, encoding='utf-8') as f:
        filelists = f.readlines()

    def reader():
        batch_imgs = []
        batch_labels = []
        for line in filelists[1:]:  # skip the header row
            line = line.strip().split(',')  # split the row into a list of fields
            name = line[1]
            label = int(line[2])
            # Load the image by file name and preprocess it
            filepath = os.path.join(datadir, name)
            img = cv2.imread(filepath)
            img = transform_img(img)
            # Add each sample to the current batch
            batch_imgs.append(img)
            batch_labels.append(label)
            if len(batch_imgs) == batch_size:
                # When the batch reaches batch_size samples,
                # yield it as one mini-batch from the generator
                imgs_array = np.array(batch_imgs).astype('float32')
                labels_array = np.array(batch_labels).astype('float32').reshape(-1, 1)
                yield imgs_array, labels_array
                batch_imgs = []
                batch_labels = []
        if len(batch_imgs) > 0:
            # Pack the remaining samples (fewer than batch_size) into a last mini-batch
            imgs_array = np.array(batch_imgs).astype('float32')
            labels_array = np.array(batch_labels).astype('float32').reshape(-1, 1)
            yield imgs_array, labels_array

    return reader

DATADIR = 'D:/Pycharm 2020/Data set/Eye disease recognition/train/PALM-Training400/'
DATADIR2 = 'D:/Pycharm 2020/Data set/Eye disease recognition/validation/PALM-Validation400/'
CSVFILE = 'D:/Pycharm 2020/Data set/Eye disease recognition/labels.csv'  # must be a UTF-8 encoded CSV file

# Training procedure
def train_pm(model, optimizer):
    # Train on GPU 0
    paddle.set_device('gpu:0')
    print('start training ... ')
    model.train()
    epoch_num = 20
    iter = 0
    iters = []
    train_losses = []
    # Create the training and validation data readers
    train_loader = data_loader(DATADIR, batch_size=2, mode='train')
    valid_loader = valid_data_loader(DATADIR2, CSVFILE, batch_size=2)
    for epoch in range(epoch_num):
        for batch_id, data in enumerate(train_loader()):
            x_data, y_data = data
            img = paddle.to_tensor(x_data)
            label = paddle.to_tensor(y_data)
            # Forward pass to get the predictions
            logits = model(img)
            loss = F.binary_cross_entropy_with_logits(logits, label)
            avg_loss = paddle.mean(loss)
            if batch_id % 5 == 0:  # record the loss every 5 batches
                iters.append(iter)
                train_losses.append(avg_loss.numpy())
                iter = iter + 5
                print("epoch: {}, batch_id: {}, loss is: {}".format(epoch, batch_id, avg_loss.numpy()))
            # Backpropagate, update the weights, clear the gradients
            avg_loss.backward()
            optimizer.step()
            optimizer.clear_grad()

        model.eval()
        accuracies = []
        losses = []
        for batch_id, data in enumerate(valid_loader()):
            x_data, y_data = data
            img = paddle.to_tensor(x_data)
            label = paddle.to_tensor(y_data)
            # Forward pass to get the predictions
            logits = model(img)
            # Binary classification: the sigmoid output is split into two classes at 0.5
            # Compute the predicted probability with sigmoid, and the loss from the logits
            pred = F.sigmoid(logits)
            loss = F.binary_cross_entropy_with_logits(logits, label)
            # Probability of the other class (label 0): 1 - p
            pred2 = pred * (-1.0) + 1.0
            # Concatenate the two class probabilities along axis 1 (the class dimension)
            pred = paddle.concat([pred2, pred], axis=1)
            acc = paddle.metric.accuracy(pred, paddle.cast(label, dtype='int64'))
            accuracies.append(acc.numpy())
            # Average per batch, so that a smaller last batch can still be combined
            losses.append(paddle.mean(loss).numpy())
        print("[validation] accuracy/loss: {}/{}".format(np.mean(accuracies), np.mean(losses)))
        model.train()

        paddle.save(model.state_dict(), 'palm.pdparams')
        paddle.save(optimizer.state_dict(), 'palm.pdopt')
    return iters, train_losses

# VGG network definition
class VGG(paddle.nn.Layer):
    def __init__(self):
        super(VGG, self).__init__()
        in_channels = [3, 64, 128, 256, 512, 512]
        # Block 1: two convolutions
        self.conv1_1 = Conv2D(in_channels=in_channels[0], out_channels=in_channels[1], kernel_size=3, padding=1, stride=1)
        self.conv1_2 = Conv2D(in_channels=in_channels[1], out_channels=in_channels[1], kernel_size=3, padding=1, stride=1)
        # Block 2: two convolutions
        self.conv2_1 = Conv2D(in_channels=in_channels[1], out_channels=in_channels[2], kernel_size=3, padding=1, stride=1)
        self.conv2_2 = Conv2D(in_channels=in_channels[2], out_channels=in_channels[2], kernel_size=3, padding=1, stride=1)
        # Block 3: three convolutions
        self.conv3_1 = Conv2D(in_channels=in_channels[2], out_channels=in_channels[3], kernel_size=3, padding=1, stride=1)
        self.conv3_2 = Conv2D(in_channels=in_channels[3], out_channels=in_channels[3], kernel_size=3, padding=1, stride=1)
        self.conv3_3 = Conv2D(in_channels=in_channels[3], out_channels=in_channels[3], kernel_size=3, padding=1, stride=1)
        # Block 4: three convolutions
        self.conv4_1 = Conv2D(in_channels=in_channels[3], out_channels=in_channels[4], kernel_size=3, padding=1, stride=1)
        self.conv4_2 = Conv2D(in_channels=in_channels[4], out_channels=in_channels[4], kernel_size=3, padding=1, stride=1)
        self.conv4_3 = Conv2D(in_channels=in_channels[4], out_channels=in_channels[4], kernel_size=3, padding=1, stride=1)
        # Block 5: three convolutions
        self.conv5_1 = Conv2D(in_channels=in_channels[4], out_channels=in_channels[5], kernel_size=3, padding=1, stride=1)
        self.conv5_2 = Conv2D(in_channels=in_channels[5], out_channels=in_channels[5], kernel_size=3, padding=1, stride=1)
        self.conv5_3 = Conv2D(in_channels=in_channels[5], out_channels=in_channels[5], kernel_size=3, padding=1, stride=1)
        # Use Sequential to chain a fully connected layer and ReLU (fc + relu).
        # For a 224x224 input, the five conv blocks and pooling layers leave features of shape [512x7x7]
        self.fc1 = paddle.nn.Sequential(paddle.nn.Linear(512 * 7 * 7, 4096), paddle.nn.ReLU())
        self.drop1_ratio = 0.5
        self.dropout1 = paddle.nn.Dropout(self.drop1_ratio, mode='upscale_in_train')
        # Use Sequential to chain a fully connected layer and ReLU (fc + relu)
        self.fc2 = paddle.nn.Sequential(paddle.nn.Linear(4096, 4096), paddle.nn.ReLU())
        self.drop2_ratio = 0.5
        self.dropout2 = paddle.nn.Dropout(self.drop2_ratio, mode='upscale_in_train')
        self.fc3 = paddle.nn.Linear(4096, 1)
        self.relu = paddle.nn.ReLU()
        self.pool = MaxPool2D(stride=2, kernel_size=2)

    def forward(self, x):
        x = self.relu(self.conv1_1(x))
        x = self.relu(self.conv1_2(x))
        x = self.pool(x)
        x = self.relu(self.conv2_1(x))
        x = self.relu(self.conv2_2(x))
        x = self.pool(x)
        x = self.relu(self.conv3_1(x))
        x = self.relu(self.conv3_2(x))
        x = self.relu(self.conv3_3(x))
        x = self.pool(x)
        x = self.relu(self.conv4_1(x))
        x = self.relu(self.conv4_2(x))
        x = self.relu(self.conv4_3(x))
        x = self.pool(x)
        x = self.relu(self.conv5_1(x))
        x = self.relu(self.conv5_2(x))
        x = self.relu(self.conv5_3(x))
        x = self.pool(x)
        x = paddle.flatten(x, 1, -1)  # flatten to [N, 512*7*7]
        # fc1 and fc2 already end in ReLU, so no extra activation is applied here
        x = self.dropout1(self.fc1(x))
        x = self.dropout2(self.fc2(x))
        x = self.fc3(x)
        return x

# Create the model
model = VGG()
# Start training
opt = paddle.optimizer.Adam(learning_rate=0.001, parameters=model.parameters())
# opt = paddle.optimizer.Momentum(learning_rate=0.001, momentum=0.9, parameters=model.parameters())
iters, train_losses = train_pm(model, optimizer=opt)

# Plot the training loss curve
plt.figure()
plt.title("VGG-train loss", fontsize=24)
plt.xlabel("iter", fontsize=14)
plt.ylabel("loss", fontsize=14)
plt.plot(iters, train_losses, color='red', label='train loss')
plt.grid()
plt.show()

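3.4 GoogLeNet

In the models above, each convolution layer applies a single set of out_channels kernels, all the same size, to the whole input. GoogLeNet's idea, the Inception block, is different. It runs several convolution branches with different kernels in parallel on the same input, then concatenates their outputs along the channel dimension.

Put simply: in VGG, a [3×224×224] input might be convolved with 10 kernels of size [5×5]. In GoogLeNet, the same input is fed to several branches at once. For example, one branch could use 8 [5×5] kernels, another 16 [3×3] kernels followed by 18 [3×3] kernels, and a third 32 [5×5] kernels followed by 12 [3×3] kernels. The kernel shape, the number of conv layers, and the number of kernels all differ from branch to branch, while every branch sees all the input channels. These numbers are only illustrative. The actual Inception block in the code uses 1×1, 3×3, and 5×5 convolutions plus a 3×3 max-pooling branch.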
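The branches are concatenated along the channel axis, so the output channel count of an Inception block is c1 + c2[1] + c3[1] + c4 (using the parameter names of the `Inception` class in the code below). The plain-Python sketch below (`inception_out_channels` is a hypothetical helper) applies this sum to every block configuration in that code. It confirms that each block's output matches the next block's input c0, and that the last block feeds the 1024 features the final Linear layer expects.

```python
def inception_out_channels(c1, c2, c3, c4):
    """Channels after concatenating the four Inception branches along axis 1."""
    return c1 + c2[1] + c3[1] + c4

# (c1, c2, c3, c4) for each Inception block in the GoogLeNet code below
blocks = {
    'block3_1': (64, (96, 128), (16, 32), 32),
    'block3_2': (128, (128, 192), (32, 96), 64),
    'block4_1': (192, (96, 208), (16, 48), 64),
    'block4_2': (160, (112, 224), (24, 64), 64),
    'block4_3': (128, (128, 256), (24, 64), 64),
    'block4_4': (112, (144, 288), (32, 64), 64),
    'block4_5': (256, (160, 320), (32, 128), 128),
    'block5_1': (256, (160, 320), (32, 128), 128),
    'block5_2': (384, (192, 384), (48, 128), 128),
}

for name, cfg in blocks.items():
    print(name, inception_out_channels(*cfg))
```

Running it gives 256, 480, 512, 512, 512, 528, 832, 832, and 1024. These are the c0 values passed to the following blocks.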

I have roughly summarized GoogLeNet's convolution steps in the table below:

Source code:

import cv2

import random

import numpy as np

import os

import paddle

import paddle.nn.functional as F

from paddle.nn import Conv2D, MaxPool2D, AdaptiveAvgPool2D, Linear

import matplotlib.pyplot as plt

# 对读入的图像数据进行预处理

def transform_img(img):

# 将图片尺寸缩放道 224x224

img = cv2.resize(img, (224, 224))

# 读入的图像数据格式是[H, W, C]

# 使用转置操作将其变成[C, H, W]

img = np.transpose(img, (2,0,1)) # transpose作用是改变序列,(2,0,1)表示各个轴

img = img.astype('float32')

# 将数据范围调整到[-1.0, 1.0]之间

img = img / 255.

img = img * 2.0 - 1.0

return img

# 定义训练集数据读取器

def data_loader(datadir, batch_size=10, mode = 'train'):

# 将datadir目录下的文件列出来,每条文件都要读入

filenames = os.listdir(datadir)

def reader():

if mode == 'train':

# 训练时随机打乱数据顺序

random.shuffle(filenames)

batch_imgs = []

batch_labels = []

for name in filenames:

filepath = os.path.join(datadir, name)

img = cv2.imread(filepath)

img = transform_img(img)

if name[0] == 'H' or name[0] == 'N':

# H开头的文件名表示高度近似,N开头的文件名表示正常视力

# 高度近视和正常视力的样本,都不是病理性的,属于负样本,标签为0

label = 0

elif name[0] == 'P':

# P开头的是病理性近视,属于正样本,标签为1

label = 1

else:

raise('Not excepted file name')

# 每读取一个样本的数据,就将其放入数据列表中

batch_imgs.append(img)

batch_labels.append(label)

if len(batch_imgs) == batch_size:

# 当数据列表的长度等于batch_size的时候,

# 把这些数据当作一个mini-batch,并作为数据生成器的一个输出

imgs_array = np.array(batch_imgs).astype('float32')

labels_array = np.array(batch_labels).astype('float32').reshape(-1, 1)

yield imgs_array, labels_array

batch_imgs = []

batch_labels = []

if len(batch_imgs) > 0:

# 剩余样本数目不足一个batch_size的数据,一起打包成一个mini-batch

imgs_array = np.array(batch_imgs).astype('float32')

labels_array = np.array(batch_labels).astype('float32').reshape(-1, 1)

yield imgs_array, labels_array

return reader

# 定义验证集数据读取器

def valid_data_loader(datadir, csvfile, batch_size=10, mode='valid'):

# 训练集读取时通过文件名来确定样本标签,验证集则通过csvfile来读取每个图片对应的标签

filelists = open(csvfile).readlines()

def reader():

batch_imgs = []

batch_labels = []

for line in filelists[1:]:

line = line.strip().split(',') # 把每行的每个字符一个个分开,变成一个list

name = line[1]

label = int(line[2])

# 根据图片文件名加载图片,并对图像数据作预处理

filepath = os.path.join(datadir, name)

img = cv2.imread(filepath)

img = transform_img(img)

# 每读取一个样本的数据,就将其放入数据列表中

batch_imgs.append(img)

batch_labels.append(label)

if len(batch_imgs) == batch_size:

# 当数据列表的长度等于batch_size的时候,

# 把这些数据当作一个mini-batch,并作为数据生成器的一个输出

imgs_array = np.array(batch_imgs).astype('float32')

labels_array = np.array(batch_labels).astype('float32').reshape(-1, 1)

yield imgs_array, labels_array

batch_imgs = []

batch_labels = []

if len(batch_imgs) > 0:

# 剩余样本数目不足一个batch_size的数据,一起打包成一个mini-batch

imgs_array = np.array(batch_imgs).astype('float32')

labels_array = np.array(batch_labels).astype('float32').reshape(-1, 1)

yield imgs_array, labels_array

return reader

DATADIR = 'D:/Pycharm 2020/Data set/Eye disease recognition/train/PALM-Training400/'

DATADIR2 = 'D:/Pycharm 2020/Data set/Eye disease recognition/validation/PALM-Validation400/'

CSVFILE = 'D:/Pycharm 2020/Data set/Eye disease recognition/labels.csv' # 必须是UTF-8编码的CSV文件

# 定义训练过程

def train_pm(model, optimizer):

# 开启0号GPU训练

paddle.set_device('gpu:0')

# paddle.set_device('gpu:0')

print('start training ... ')

model.train()

epoch_num = 20

iter = 0

iters = []

train_losses = []

# 定义数据读取器,训练数据读取器和验证数据读取器

train_loader = data_loader(DATADIR, batch_size=10, mode='train')

valid_loader = valid_data_loader(DATADIR2, CSVFILE, batch_size=10)

for epoch in range(epoch_num):

for batch_id, data in enumerate(train_loader()):

x_data, y_data = data

img = paddle.to_tensor(x_data)

label = paddle.to_tensor(y_data)

# 运行模型前向计算,得到预测值

logits = model(img)

loss = F.binary_cross_entropy_with_logits(logits, label)

avg_loss = paddle.mean(loss)

if batch_id % 10 == 0:

iters.append(iter)

train_losses.append(avg_loss.numpy())

iter = iter + 10

print("epoch: {}, batch_id: {}, loss is: {}".format(epoch, batch_id, avg_loss.numpy()))

# 反向传播,更新权重,清除梯度

avg_loss.backward()

optimizer.step()

optimizer.clear_grad()

model.eval()

accuracies = []

losses = []

for batch_id, data in enumerate(valid_loader()):

x_data, y_data = data

img = paddle.to_tensor(x_data)

label = paddle.to_tensor(y_data)

# 运行模型前向计算,得到预测值

logits = model(img)

# 二分类,sigmoid计算后的结果以0.5为阈值分两个类别

# 计算sigmoid后的预测概率,进行loss计算

pred = F.sigmoid(logits)

loss = F.binary_cross_entropy_with_logits(logits, label)

# 计算预测概率小于0.5的类别

pred2 = pred * (-1.0) + 1.0

# 得到两个类别的预测概率,并沿第一个维度级联

pred = paddle.concat([pred2, pred], axis=1)

acc = paddle.metric.accuracy(pred, paddle.cast(label, dtype='int64'))

accuracies.append(acc.numpy())

losses.append(loss.numpy())

print("[validation] accuracy/loss: {}/{}".format(np.mean(accuracies), np.mean(losses)))

model.train()

paddle.save(model.state_dict(), 'palm.pdparams')

paddle.save(optimizer.state_dict(), 'palm.pdopt')

return iters, train_losses

# 定义Inception块

class Inception(paddle.nn.Layer):

def __init__(self, c0, c1, c2, c3, c4, **kwargs):

'''

Inception模块的实现代码,

c1,图(b)中第一条支路1x1卷积的输出通道数,数据类型是整数

c2,图(b)中第二条支路卷积的输出通道数,数据类型是tuple或list,

其中c2[0]是1x1卷积的输出通道数,c2[1]是3x3

c3,图(b)中第三条支路卷积的输出通道数,数据类型是tuple或list,

其中c3[0]是1x1卷积的输出通道数,c3[1]是3x3

c4,图(b)中第一条支路1x1卷积的输出通道数,数据类型是整数

'''

super(Inception, self).__init__()

# 依次创建Inception块每条支路上使用到的操作

self.p1_1 = Conv2D(in_channels=c0, out_channels=c1, kernel_size=1, stride=1)

self.p2_1 = Conv2D(in_channels=c0, out_channels=c2[0], kernel_size=1, stride=1)

self.p2_2 = Conv2D(in_channels=c2[0], out_channels=c2[1], kernel_size=3, padding=1, stride=1)

self.p3_1 = Conv2D(in_channels=c0, out_channels=c3[0], kernel_size=1, stride=1)

self.p3_2 = Conv2D(in_channels=c3[0], out_channels=c3[1], kernel_size=5, padding=2, stride=1)

self.p4_1 = MaxPool2D(kernel_size=3, stride=1, padding=1)

self.p4_2 = Conv2D(in_channels=c0, out_channels=c4, kernel_size=1, stride=1)

# # 新加一层batchnorm稳定收敛

# self.batchnorm = paddle.nn.BatchNorm2D(c1+c2[1]+c3[1]+c4)

def forward(self, x):

# 支路1只包含一个1x1卷积

p1 = F.relu(self.p1_1(x))

# 支路2包含 1x1卷积 + 3x3卷积

p2 = F.relu(self.p2_2(F.relu(self.p2_1(x))))

# 支路3包含 1x1卷积 + 5x5卷积

p3 = F.relu(self.p3_2(F.relu(self.p3_1(x))))

# 支路4包含 最大池化和1x1卷积

p4 = F.relu(self.p4_2(self.p4_1(x)))

# 将每个支路的输出特征图拼接在一起作为最终的输出结果

return paddle.concat([p1, p2, p3, p4], axis=1)

# return self.batchnorm()

class GoogLeNet(paddle.nn.Layer):
    def __init__(self):
        super(GoogLeNet, self).__init__()
        # GoogLeNet consists of five modules, each followed by a pooling layer
        # Module 1: one convolution layer
        self.conv1 = Conv2D(in_channels=3, out_channels=64, kernel_size=7, padding=3, stride=1)
        # 3x3 max pooling
        self.pool1 = MaxPool2D(kernel_size=3, stride=2, padding=1)
        # Module 2: two convolution layers
        self.conv2_1 = Conv2D(in_channels=64, out_channels=64, kernel_size=1, stride=1)
        self.conv2_2 = Conv2D(in_channels=64, out_channels=192, kernel_size=3, padding=1, stride=1)
        # 3x3 max pooling
        self.pool2 = MaxPool2D(kernel_size=3, stride=2, padding=1)
        # Module 3: two Inception blocks
        self.block3_1 = Inception(192, 64, (96, 128), (16, 32), 32)
        self.block3_2 = Inception(256, 128, (128, 192), (32, 96), 64)
        # 3x3 max pooling
        self.pool3 = MaxPool2D(kernel_size=3, stride=2, padding=1)
        # Module 4: five Inception blocks
        self.block4_1 = Inception(480, 192, (96, 208), (16, 48), 64)
        self.block4_2 = Inception(512, 160, (112, 224), (24, 64), 64)
        self.block4_3 = Inception(512, 128, (128, 256), (24, 64), 64)
        self.block4_4 = Inception(512, 112, (144, 288), (32, 64), 64)
        self.block4_5 = Inception(528, 256, (160, 320), (32, 128), 128)
        # 3x3 max pooling
        self.pool4 = MaxPool2D(kernel_size=3, stride=2, padding=1)
        # Module 5: two Inception blocks
        self.block5_1 = Inception(832, 256, (160, 320), (32, 128), 128)
        self.block5_2 = Inception(832, 384, (192, 384), (48, 128), 128)
        # Global average pooling: output_size=1 shrinks every feature map to 1x1,
        # so no pooling stride needs to be set
        self.pool5 = paddle.nn.AdaptiveAvgPool2D(output_size=1)
        self.fc = Linear(in_features=1024, out_features=1)

    def forward(self, x):
        x = self.pool1(F.relu(self.conv1(x)))
        x = self.pool2(F.relu(self.conv2_2(F.relu(self.conv2_1(x)))))
        x = self.pool3(self.block3_2(self.block3_1(x)))
        x = self.block4_3(self.block4_2(self.block4_1(x)))
        x = self.pool4(self.block4_5(self.block4_4(x)))
        x = self.pool5(self.block5_2(self.block5_1(x)))
        x = paddle.reshape(x, [x.shape[0], -1])
        x = self.fc(x)
        return x
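To see how a 224x224 input ends up as a 1024-dimensional vector, one can trace the spatial size through the convolution and pooling layers of GoogLeNet.forward (the Inception blocks keep the size, so they are skipped). This is a minimal sketch that assumes Paddle's default floor rounding for Conv2D and MaxPool2D (ceil_mode=False); the helper names are hypothetical:

```python
def conv_out(size, kernel, padding, stride):
    # Floor-mode output size of a convolution or pooling window
    return (size + 2 * padding - kernel) // stride + 1

def googlenet_spatial_trace(size=224):
    # (name, kernel, padding, stride) of the non-Inception layers, in forward order
    layers = [
        ("conv1", 7, 3, 1),
        ("pool1", 3, 1, 2),
        ("conv2_1", 1, 0, 1),
        ("conv2_2", 3, 1, 1),
        ("pool2", 3, 1, 2),
        ("pool3", 3, 1, 2),
        ("pool4", 3, 1, 2),
    ]
    trace = []
    for name, k, p, s in layers:
        size = conv_out(size, k, p, s)
        trace.append((name, size))
    # pool5 is AdaptiveAvgPool2D(output_size=1): always 1x1 whatever the input size
    trace.append(("pool5", 1))
    return trace

for name, size in googlenet_spatial_trace():
    print(name, size)
```

The trace goes 224, 112, 56, 28, 14 and finally 1, so reshaping the [B, 1024, 1, 1] output of pool5 gives [B, 1024].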

# Create the model
model = GoogLeNet()
# Number of parameter tensors (weights and biases), not the number of scalar parameters
print(len(model.parameters()))
opt = paddle.optimizer.Momentum(learning_rate=0.001, momentum=0.9, parameters=model.parameters(), weight_decay=0.001)
# Start training
iters, train_losses = train_pm(model, optimizer=opt)
# Plot the training loss curve
plt.figure()
plt.title("GoogleNet-train loss", fontsize=24)
plt.xlabel("iter", fontsize=14)
plt.ylabel("loss", fontsize=14)
plt.plot(iters, train_losses, color='red', label='train loss')
plt.legend()
plt.grid()
plt.show()

3.5 ResNet

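ResNet's main change is that it adds residual blocks to the network structure.

ResNet's convolution computations are similar to those of the networks above. The difference is that, in certain layers, the output of an earlier layer is added after the linear (convolution) part and before the nonlinear activation. The figure below contains 5 residual blocks in total.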
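In formula form, a residual block computes y = ReLU(F(x) + x), where F is the stack of convolutions on the main branch. If F learns to output zeros, the block reduces to an identity mapping (for non-negative inputs, as produced by a preceding ReLU). A tiny pure-Python sketch on plain lists illustrates this; toy_branch is a hypothetical stand-in for the convolutions, not part of the ResNet code:

```python
def relu(v):
    return [max(0.0, a) for a in v]

def residual_block(x, f):
    # y = ReLU(F(x) + x): the shortcut adds the input back after the residual branch
    fx = f(x)
    return relu([a + b for a, b in zip(fx, x)])

def zero_branch(x):
    # A residual branch whose weights are all zero
    return [0.0 for _ in x]

def toy_branch(x):
    # Hypothetical stand-in for the convolution stack F
    return [0.5 * a - 1.0 for a in x]

x = [0.0, 1.0, 2.0, 3.0]  # non-negative, as it would be after a previous ReLU
print(residual_block(x, zero_branch))  # [0.0, 1.0, 2.0, 3.0]
print(residual_block(x, toy_branch))   # [0.0, 0.5, 2.0, 3.5]
```

This is why adding more residual blocks should not make the network worse in principle: a block that is not useful can learn to pass its input through unchanged.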

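In the plainest terms, my understanding is that ResNet takes a network such as VGG and adds connections that skip across layers, so that an identity mapping is carried across them. For why this is done and how it works, see this blog post:

https://blog.csdn.net/dulingtingzi/article/details/79870486

This article explains it in simpler terms and can also deepen your understanding:

https://www.jianshu.com/p/b08e4724fcea

The code is as follows: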

import cv2
import random
import numpy as np
import os
import paddle
import paddle.nn.functional as F
import paddle.nn as nn
import matplotlib.pyplot as plt

# Preprocess the loaded image data
def transform_img(img):
    # Resize the image to 224x224
    img = cv2.resize(img, (224, 224))
    # The loaded image is in [H, W, C] layout;
    # transpose it to [C, H, W]
    img = np.transpose(img, (2, 0, 1))  # (2, 0, 1) is the new order of the axes
    img = img.astype('float32')
    # Scale the data range to [-1.0, 1.0]
    img = img / 255.
    img = img * 2.0 - 1.0
    return img
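The two array operations in transform_img can be checked without OpenCV or NumPy. The pure-Python sketch below (hypothetical helper names) reproduces the same arithmetic: the scaling maps pixel value 0 to -1.0 and 255 to 1.0, and np.transpose(img, (2, 0, 1)) moves the channel axis to the front:

```python
def normalize_pixel(v):
    # Same arithmetic as transform_img: [0, 255] -> [0, 1] -> [-1, 1]
    return v / 255.0 * 2.0 - 1.0

def hwc_to_chw(img):
    # out[c][h][w] = img[h][w][c], the effect of np.transpose(img, (2, 0, 1))
    H, W, C = len(img), len(img[0]), len(img[0][0])
    return [[[img[h][w][c] for w in range(W)] for h in range(H)] for c in range(C)]

# A 2x2 image with 3 channels, stored as [H][W][C]
img = [[[1, 2, 3], [4, 5, 6]],
       [[7, 8, 9], [10, 11, 12]]]
chw = hwc_to_chw(img)
print(len(chw), len(chw[0]), len(chw[0][0]))     # 3 2 2
print(chw[0])                                    # [[1, 4], [7, 10]]
print(normalize_pixel(0), normalize_pixel(255))  # -1.0 1.0
```

Note that cv2.imread returns channels in BGR order, so channel 0 after the transpose is the blue channel.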

# Define the training-set data reader
def data_loader(datadir, batch_size=10, mode='train'):
    # List every file in datadir; each file will be read
    filenames = os.listdir(datadir)
    def reader():
        if mode == 'train':
            # Shuffle the file order during training
            random.shuffle(filenames)
        batch_imgs = []
        batch_labels = []
        for name in filenames:
            filepath = os.path.join(datadir, name)
            img = cv2.imread(filepath)
            img = transform_img(img)
            if name[0] == 'H' or name[0] == 'N':
                # File names starting with H are high myopia, N are normal vision;
                # neither is pathologic, so both are negative samples with label 0
                label = 0
            elif name[0] == 'P':
                # File names starting with P are pathologic myopia: positive samples with label 1
                label = 1
            else:
                raise ValueError('Unexpected file name: {}'.format(name))
            # Append each sample to the batch lists as it is read
            batch_imgs.append(img)
            batch_labels.append(label)
            if len(batch_imgs) == batch_size:
                # Once the lists hold batch_size samples, treat them as one
                # mini-batch and yield it from the generator
                imgs_array = np.array(batch_imgs).astype('float32')
                labels_array = np.array(batch_labels).astype('float32').reshape(-1, 1)
                yield imgs_array, labels_array
                batch_imgs = []
                batch_labels = []
        if len(batch_imgs) > 0:
            # Pack the remaining samples (fewer than batch_size) into one last mini-batch
            imgs_array = np.array(batch_imgs).astype('float32')
            labels_array = np.array(batch_labels).astype('float32').reshape(-1, 1)
            yield imgs_array, labels_array
    return reader
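The reader applies two rules: the label comes from the first letter of the file name, and leftover samples form one final, smaller batch. Both rules can be sketched in plain Python. The file names below are hypothetical examples that only follow the H/N/P prefix convention:

```python
def label_from_name(name):
    # H (high myopia) and N (normal) -> 0; P (pathologic myopia) -> 1
    if name[0] in ('H', 'N'):
        return 0
    if name[0] == 'P':
        return 1
    raise ValueError('Unexpected file name: {}'.format(name))

def batches(items, batch_size):
    # Yield full batches, then whatever is left over as one smaller batch
    batch = []
    for item in items:
        batch.append(item)
        if len(batch) == batch_size:
            yield batch
            batch = []
    if batch:
        yield batch

names = ['H0001.jpg', 'N0002.jpg', 'P0003.jpg'] * 7 + ['P0022.jpg', 'N0023.jpg']
labels = [label_from_name(n) for n in names]
print([len(b) for b in batches(labels, 10)])  # [10, 10, 3]
```

With the 400 training images and batch_size=10 there is no remainder, so each epoch yields exactly 40 batches.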

# Define the validation-set data reader
def valid_data_loader(datadir, csvfile, batch_size=10, mode='valid'):
    # The training reader derives labels from file names; the validation reader
    # reads each image's label from csvfile
    with open(csvfile, encoding='utf-8') as f:
        filelists = f.readlines()
    def reader():
        batch_imgs = []
        batch_labels = []
        for line in filelists[1:]:  # skip the header row
            line = line.strip().split(',')  # split the row into a list of comma-separated fields
            name = line[1]
            label = int(line[2])
            # Load the image by file name and preprocess it
            filepath = os.path.join(datadir, name)
            img = cv2.imread(filepath)
            img = transform_img(img)
            # Append each sample to the batch lists as it is read
            batch_imgs.append(img)
            batch_labels.append(label)
            if len(batch_imgs) == batch_size:
                # Once the lists hold batch_size samples, treat them as one
                # mini-batch and yield it from the generator
                imgs_array = np.array(batch_imgs).astype('float32')
                labels_array = np.array(batch_labels).astype('float32').reshape(-1, 1)
                yield imgs_array, labels_array
                batch_imgs = []
                batch_labels = []
        if len(batch_imgs) > 0:
            # Pack the remaining samples (fewer than batch_size) into one last mini-batch
            imgs_array = np.array(batch_imgs).astype('float32')
            labels_array = np.array(batch_labels).astype('float32').reshape(-1, 1)
            yield imgs_array, labels_array
    return reader
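The validation reader skips the header row and takes the file name from column 1 and the label from column 2. The sketch below does the same parsing with Python's csv module, which also copes with quoted fields that a plain split(',') would break. The sample rows are made up and only mimic that column layout; the header names are assumptions, not copied from the real labels.csv:

```python
import csv
import io

# Hypothetical stand-in for labels.csv: a header row, then ID, file name, label
SAMPLE = """ID,imgName,Label
1,V0001.jpg,0
2,V0002.jpg,1
3,V0003.jpg,1
"""

def read_labels(f):
    # Skip the header, then map file name (column 1) -> integer label (column 2)
    rows = csv.reader(f)
    next(rows)
    return {row[1]: int(row[2]) for row in rows if row}

labels = read_labels(io.StringIO(SAMPLE))
print(labels)  # {'V0001.jpg': 0, 'V0002.jpg': 1, 'V0003.jpg': 1}
```

For the real file, one would pass open(CSVFILE, newline='', encoding='utf-8') instead of the io.StringIO object.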

DATADIR = 'D:/Pycharm 2020/Data set/Eye disease recognition/train/PALM-Training400/'
DATADIR2 = 'D:/Pycharm 2020/Data set/Eye disease recognition/validation/PALM-Validation400/'
CSVFILE = 'D:/Pycharm 2020/Data set/Eye disease recognition/labels.csv'  # must be a UTF-8 encoded CSV file

# Define the training procedure
def train_pm(model, optimizer):
    # Train on GPU 0 (set use_gpu = False to train on the CPU)
    use_gpu = True
    paddle.set_device('gpu:0') if use_gpu else paddle.set_device('cpu')
    print('start training ... ')
    model.train()
    epoch_num = 20
    iter = 0
    iters = []
    train_losses = []
    # Define the data readers for training and validation
    train_loader = data_loader(DATADIR, batch_size=10, mode='train')
    valid_loader = valid_data_loader(DATADIR2, CSVFILE)
    for epoch in range(epoch_num):
        for batch_id, data in enumerate(train_loader()):
            x_data, y_data = data
            img = paddle.to_tensor(x_data)
            label = paddle.to_tensor(y_data)
            # Forward pass: compute the predicted logits
            logits = model(img)
            loss = F.binary_cross_entropy_with_logits(logits, label)
            avg_loss = paddle.mean(loss)
            if batch_id % 10 == 0:
                iters.append(iter)
                train_losses.append(avg_loss.numpy())
                iter = iter + 10
                print("epoch: {}, batch_id: {}, loss is: {}".format(epoch, batch_id, avg_loss.numpy()))
            # Backpropagate, update the weights, then clear the gradients
            avg_loss.backward()
            optimizer.step()
            optimizer.clear_grad()

        # Evaluate on the validation set after every epoch
        model.eval()
        accuracies = []
        losses = []
        for batch_id, data in enumerate(valid_loader()):
            x_data, y_data = data
            img = paddle.to_tensor(x_data)
            label = paddle.to_tensor(y_data)
            # Forward pass: compute the predicted logits
            logits = model(img)
            # Binary classification: the sigmoid output is split into two classes at 0.5
            # sigmoid gives the predicted probability of class 1; the loss uses the raw logits
            pred = F.sigmoid(logits)
            loss = F.binary_cross_entropy_with_logits(logits, label)
            # Probability of class 0 is 1 - p
            pred2 = pred * (-1.0) + 1.0
            # Concatenate the two class probabilities along axis 1 (the class axis) -> shape [N, 2]
            pred = paddle.concat([pred2, pred], axis=1)
            acc = paddle.metric.accuracy(pred, paddle.cast(label, dtype='int64'))
            accuracies.append(acc.numpy())
            losses.append(loss.numpy())
        print("[validation] accuracy/loss: {}/{}".format(np.mean(accuracies), np.mean(losses)))
        model.train()

        paddle.save(model.state_dict(), 'palm.pdparams')
        paddle.save(optimizer.state_dict(), 'palm.pdopt')
    return iters, train_losses
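The validation step turns each logit z into a two-class probability row [1 - sigmoid(z), sigmoid(z)], and paddle.metric.accuracy counts a hit when the index of the larger entry equals the label. The pure-Python sketch below reproduces that computation together with the standard numerically stable form of binary cross-entropy on logits, max(z, 0) - z*y + log(1 + exp(-|z|)). It illustrates the math only, not Paddle's internal implementation, and the logits and labels are made-up numbers:

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def bce_with_logits(z, y):
    # Stable binary cross-entropy for a raw logit z and a label y in {0, 1}
    return max(z, 0.0) - z * y + math.log1p(math.exp(-abs(z)))

def accuracy(logits, labels):
    # Build [1 - p, p] per sample and compare the argmax index with the label
    hits = 0
    for z, y in zip(logits, labels):
        p = sigmoid(z)
        row = [1.0 - p, p]
        pred = row.index(max(row))
        hits += int(pred == y)
    return hits / len(labels)

logits = [2.0, -1.5, 0.3, -0.2]
labels = [1, 0, 0, 0]
print(accuracy(logits, labels))  # 0.75
print(sum(bce_with_logits(z, y) for z, y in zip(logits, labels)) / len(labels))
```

The third sample (logit 0.3, label 0) is the only miss, because sigmoid(0.3) is about 0.57 and therefore lands in class 1.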

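# ResNet uses BatchNorm: a BatchNorm layer after each convolution improves numerical stability
# Define the convolution + batch-normalization block
class ConvBNLayer(paddle.nn.Layer):
    def __init__(self, num_channels, num_filters, filter_size, stride=1, groups=1, act=None):
        """
        num_channels: number of input channels of the convolution
        num_filters: number of output channels of the convolution
        filter_size: size of the convolution kernel
        stride: stride of the convolution
        groups: number of groups for grouped convolution; the default groups=1 means no grouping
        act: activation applied after BatchNorm: 'relu', 'leaky', or None for no activation
        """
        super(ConvBNLayer, self).__init__()
        # Convolution layer; padding=(filter_size - 1) // 2 keeps the spatial size for odd
        # kernels at stride 1, and no bias is used because BatchNorm's shift makes it redundant
        self._conv = nn.Conv2D(in_channels=num_channels, out_channels=num_filters, kernel_size=filter_size, stride=stride, padding=(filter_size - 1) // 2, groups=groups, bias_attr=False)
        # BatchNorm layer
        self._batch_norm = paddle.nn.BatchNorm2D(num_filters)
        self.act = act

    def forward(self, inputs):
        y = self._conv(inputs)
        y = self._batch_norm(y)
        if self.act == 'leaky':
            y = F.leaky_relu(x=y, negative_slope=0.1)
        elif self.act == 'relu':
            y = F.relu(x=y)
        return y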
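What self._batch_norm does to one channel in training mode can be written out by hand: normalize with the batch mean and the biased batch variance, then scale by gamma and shift by beta. The pure-Python sketch below handles a single channel. It assumes epsilon = 1e-5 (Paddle's default for BatchNorm2D) and the initial values gamma = 1, beta = 0, and it omits the running averages that are used in eval mode:

```python
import math

def batch_norm_channel(values, gamma=1.0, beta=0.0, eps=1e-5):
    # values: every activation of one channel across the batch and all spatial positions
    n = len(values)
    mean = sum(values) / n
    var = sum((v - mean) ** 2 for v in values) / n  # biased variance, as in training mode
    return [gamma * (v - mean) / math.sqrt(var + eps) + beta for v in values]

out = batch_norm_channel([1.0, 2.0, 3.0, 4.0])
mean = sum(out) / len(out)
var = sum((o - mean) ** 2 for o in out) / len(out)
# The mean is (numerically) 0 and the variance is just under 1 because of eps
print(mean, var)
```

In the real layer, gamma and beta are learned per channel, which lets the network undo the normalization where that helps.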

# Define the residual (bottleneck) block
# Each residual block applies three convolutions to its input feature map and then adds the input back through a shortcut
# If the output of the third convolution differs in shape from the input, a 1x1 convolution is applied to the input to match it
class BottleneckBlock(paddle.nn.Layer):
    def __init__(self, num_channels, num_filters, stride, shortcut=True):
        super(BottleneckBlock, self).__init__()
        # First convolution: 1x1
        self.conv0 = ConvBNLayer(num_channels=num_channels, num_filters=num_filters, filter_size=1, act='relu')
        # Second convolution: 3x3
        self.conv1 = ConvBNLayer(num_channels=num_filters, num_filters=num_filters, filter_size=3, stride=stride, act='relu')
        # Third convolution: 1x1, with 4x the output channels
        self.conv2 = ConvBNLayer(num_channels=num_filters, num_filters=num_filters * 4, filter_size=1, act=None)
        # If conv2's output has the same shape as this block's input, shortcut=True;
        # otherwise shortcut=False and a 1x1 convolution on the input reshapes it to match conv2
        if not shortcut:
            self.short = ConvBNLayer(num_channels=num_channels, num_filters=num_filters * 4, filter_size=1, stride=stride)
        self.shortcut = shortcut
        self._num_channels_out = num_filters * 4

    def forward(self, inputs):
        y = self.conv0(inputs)
        conv1 = self.conv1(y)
        conv2 = self.conv2(conv1)
        # If shortcut=True, add inputs directly to conv2's output;
        # otherwise convolve inputs first so its shape matches conv2's output
        if self.shortcut:
            short = inputs
        else:
            short = self.short(inputs)
        y = paddle.add(x=short, y=conv2)
        y = F.relu(y)
        return y
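Whether a BottleneckBlock needs the 1x1 projection on its shortcut follows from shapes alone: a plain addition only works if the input already has num_filters * 4 channels and the block's stride is 1. The pure-Python sketch below (hypothetical helper names) walks the same stage schedule that the ResNet class builds and checks that exactly the first block of each stage needs the projection, which is what the shortcut=False flag encodes:

```python
def needs_projection(in_channels, num_filters, stride):
    # The shortcut can be added directly only if channels and spatial size both match
    return in_channels != num_filters * 4 or stride != 1

def block_schedule(depth=(3, 4, 6, 3), num_filters=(64, 128, 256, 512)):
    # Mirror the ResNet construction loop: yield (stage, index, in_channels, stride, needs_projection)
    in_channels = 64
    for stage, (d, f) in enumerate(zip(depth, num_filters)):
        for i in range(d):
            stride = 2 if i == 0 and stage != 0 else 1
            yield stage, i, in_channels, stride, needs_projection(in_channels, f, stride)
            in_channels = f * 4

for stage, i, c_in, stride, proj in block_schedule():
    # In the ResNet code, shortcut=False exactly when i == 0
    assert proj == (i == 0)
    if proj:
        print("stage", stage, "block", i, "in_channels", c_in, "stride", stride)
```

Stage 0 needs a projection only because of the channel change (64 to 256); stages 1 to 3 change both the channel count and the spatial size.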

# Define the ResNet model
class ResNet(paddle.nn.Layer):
    def __init__(self, layers=50, class_dim=1):
        """
        layers: number of network layers, one of 50, 101 or 152
        class_dim: number of output classes
        """
        super(ResNet, self).__init__()
        self.layers = layers
        supported_layers = [50, 101, 152]
        assert layers in supported_layers, \
            "supported layers are {} but input layer is {}".format(supported_layers, layers)
        if layers == 50:
            # ResNet50: modules 2 to 5 contain 3, 4, 6 and 3 residual blocks respectively
            depth = [3, 4, 6, 3]
        elif layers == 101:
            # ResNet101: modules 2 to 5 contain 3, 4, 23 and 3 residual blocks respectively
            depth = [3, 4, 23, 3]
        elif layers == 152:
            # ResNet152: modules 2 to 5 contain 3, 8, 36 and 3 residual blocks respectively
            depth = [3, 8, 36, 3]
        # Output channels of the convolutions used in the residual blocks
        num_filters = [64, 128, 256, 512]
        # Module 1 of ResNet: one 7x7 convolution followed by one max-pooling layer
        self.conv = ConvBNLayer(num_channels=3, num_filters=64, filter_size=7, stride=2, act='relu')
        self.pool2d_max = nn.MaxPool2D(kernel_size=3, stride=2, padding=1)
        # Modules 2 to 5 of ResNet: c2, c3, c4, c5
        self.bottleneck_block_list = []
        num_channels = 64
        for block in range(len(depth)):
            shortcut = False
            for i in range(depth[block]):
                bottleneck_block = self.add_sublayer(
                    'bb_%d_%d' % (block, i),
                    BottleneckBlock(
                        num_channels=num_channels,
                        num_filters=num_filters[block],
                        stride=2 if i == 0 and block != 0 else 1,  # the first block of c3, c4, c5 uses stride=2; all other blocks use stride=1
                        shortcut=shortcut))
                num_channels = bottleneck_block._num_channels_out
                self.bottleneck_block_list.append(bottleneck_block)
                shortcut = True
        # Global average pooling on the c5 output feature map
        self.pool2d_avg = paddle.nn.AdaptiveAvgPool2D(output_size=1)
        # stdv is the bound of the uniform distribution used to initialize the fully connected weights
        import math
        stdv = 1.0 / math.sqrt(2048 * 1.0)
        # Fully connected layer whose output size is the number of classes. After the residual
        # convolutions and global pooling the features have shape [B, 2048, 1, 1],
        # so the input size of this last layer is 2048
        self.out = nn.Linear(in_features=2048, out_features=class_dim,
                             weight_attr=paddle.ParamAttr(
                                 initializer=paddle.nn.initializer.Uniform(-stdv, stdv)))

    def forward(self, inputs):
        y = self.conv(inputs)
        y = self.pool2d_max(y)
        for bottleneck_block in self.bottleneck_block_list:
            y = bottleneck_block(y)
        y = self.pool2d_avg(y)
        y = paddle.reshape(y, [y.shape[0], -1])
        y = self.out(y)
        return y
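The names ResNet50/101/152 count weighted layers: the 7x7 stem convolution, three convolutions per bottleneck block, and the final fully connected layer (the 1x1 shortcut projections are not counted). The sketch below checks that arithmetic for the three depth lists above and traces a 224x224 input to the c5 output, assuming floor rounding as in Paddle's defaults; the helper names are hypothetical:

```python
DEPTHS = {50: [3, 4, 6, 3], 101: [3, 4, 23, 3], 152: [3, 8, 36, 3]}

def weighted_layers(depth):
    # 1 stem convolution + 3 convolutions per bottleneck block + 1 fully connected layer
    return 1 + 3 * sum(depth) + 1

def c5_size(size=224):
    def out(n, k, p, s):
        # Floor-mode output size of a convolution or pooling window
        return (n + 2 * p - k) // s + 1
    size = out(size, 7, 3, 2)  # 7x7 stem convolution, stride 2
    size = out(size, 3, 1, 2)  # 3x3 max pooling, stride 2
    for _ in range(3):         # the first 3x3 convolution of c3, c4 and c5 has stride 2
        size = out(size, 3, 1, 2)
    return size

for layers, depth in DEPTHS.items():
    print(layers, weighted_layers(depth))
print("c5:", c5_size(), "x", c5_size(), "with", 512 * 4, "channels")
```

The c5 output is 7x7 with 2048 channels, which AdaptiveAvgPool2D reduces to [B, 2048, 1, 1] before the fully connected layer.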

# Create the model
model = ResNet()
# Define the optimizer
opt = paddle.optimizer.Momentum(learning_rate=0.001, momentum=0.9, parameters=model.parameters(), weight_decay=0.001)
# Start training
iters, train_losses = train_pm(model, optimizer=opt)
# Plot the training loss curve
plt.figure()
plt.title("ResNet-train loss", fontsize=24)
plt.xlabel("iter", fontsize=14)
plt.ylabel("loss", fontsize=14)
plt.plot(iters, train_losses, color='red', label='train loss')
plt.legend()
plt.grid()
plt.show()

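IV. Comparison of the Training Results of the CNNs

Note: these results are for reference only. Each model was trained for just 20 to 40 epochs, and training had not fully stabilized. VGG's poor result is most likely due to my NVIDIA GTX 1050 Ti not being powerful enough: the model only ran after reducing batch_size to 1, which made VGG train very poorly. In theory, VGG's accuracy should exceed 90%.

The loss curves recorded while training each model are shown in the figures below:

The statistics are as follows: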

| Model | Loss | Accuracy |
| --- | --- | --- |
| LeNet | 0.691962 | 0.524554 |
| AlexNet | 0.166790 | 0.941964 |
| VGG | 0.692041 | 0.527500 |
| GoogleNet | 0.132089 | 0.955000 |
| ResNet | 0.113273 | 0.970000 |

V. Testing the Model on a Real Image

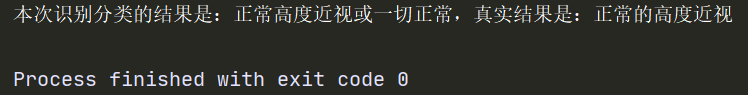

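The evaluation results above look good, but can the model classify a specific input image correctly? To test this, take an image of high myopia that is not pathologic as an example. The code is below (simply append it after the code above):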

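# Define the inference procedure
# (palm.pdparams must have been saved by training this same AlexNet model)
model = AlexNet()
params_file_path = 'D:/Pycharm 2020/Projects/PaddlePaddle 2.1/眼疾图片识别/palm.pdparams'  # the path must not end with '/'
img_path = 'D:/Pycharm 2020/Data set/Eye disease recognition/verify/Severe myopia.jpg'
# Load the model parameters
param_dict = paddle.load(params_file_path)
model.load_dict(param_dict)
# Feed in the data
model.eval()
img = cv2.imread(img_path)
img_transform = transform_img(img)
img_array = np.array(img_transform).astype('float32')
img_array = img_array.reshape((1, 3, 224, 224))
# The model outputs a logit (training used binary_cross_entropy_with_logits);
# sigmoid converts it into the probability of pathologic myopia
results = model(paddle.to_tensor(img_array))
print(results)
Predicted_result = F.sigmoid(results).numpy()[0][0]
# Classify with a probability threshold of 0.5
if Predicted_result > 0.5:
    print("Predicted: pathologic myopia; ground truth: high myopia (non-pathologic)")
elif Predicted_result < 0.5:
    print("Predicted: high myopia (non-pathologic) or normal; ground truth: high myopia (non-pathologic)")
else:
    print('Unable to decide this time; the network needs more training')

The result shows the image was classified correctly. This article uses only a single example, which is not convincing on its own; a mature model still needs to be tested on many more examples.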
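Because the network is trained with binary_cross_entropy_with_logits, its raw output is a logit rather than a probability. Thresholding sigmoid(z) at 0.5 is the same as checking z > 0, whereas picking whichever of 0 and 1 is closer to the raw logit puts the decision boundary at z = 0.5. The small pure-Python sketch below shows where the two rules disagree; the logits are made-up values and the function names are hypothetical:

```python
import math

def by_probability(z):
    # Class 1 (pathologic myopia) if sigmoid(z) > 0.5, i.e. if z > 0
    return int(1.0 / (1.0 + math.exp(-z)) > 0.5)

def by_distance(z):
    # Treat the raw logit as if it were a probability: pick the closer of 0 and 1
    return int(abs(z - 1) < abs(z - 0))

for z in [-2.0, 0.2, 0.4, 0.6, 3.0]:
    print(z, by_probability(z), by_distance(z))
```

For logits between 0 and 0.5 (here 0.2 and 0.4) the model is actually leaning towards pathologic myopia, yet the distance rule reports the negative class.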