Contents

- I. The Concept of Loss Functions

- II. Loss Functions

- 1.nn.CrossEntropyLoss

- 2. nn.NLLLoss

- 3. nn.BCELoss

- 4. nn.BCEWithLogitsLoss

- 5.nn.L1Loss

- 6. nn.MSELoss

- 7. SmoothL1Loss

- 8.PoissonNLLLoss

- 9.KLDivLoss

- 10.MarginRankingLoss

- 11.MultiLabelMarginLoss

- 12.SoftMarginLoss

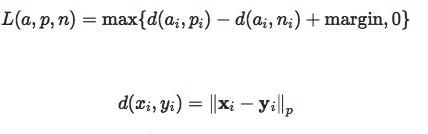

- 13.MultiLabelSoftMarginLoss

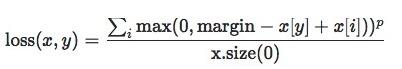

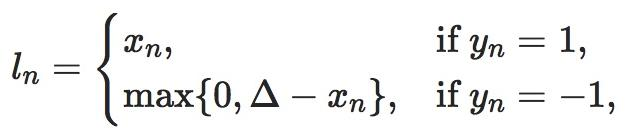

- 14.MultiMarginLoss

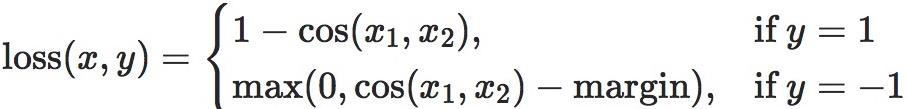

- 15.TripletMarginLoss

- 16.HingeEmbeddingLoss

- 17.CosineEmbeddingLoss

- 18.CTCLoss

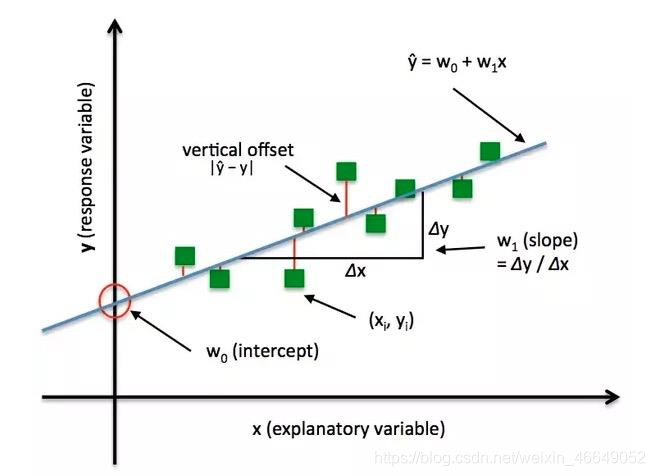

I. The Concept of Loss Functions

Loss function: measures the difference between the model output and the ground-truth label.

Loss function:

$$\text{Loss} = f(\hat{y}, y)$$

Note: the loss function is computed for a single sample.

Cost function:

$$\text{Cost} = \frac{1}{N}\sum_{i=1}^{N} f(\hat{y}_i, y_i)$$

Note: it is computed over the whole sample set and then averaged.

Objective function:

$$\text{Obj} = \text{Cost} + \text{Regularization}$$

Note: a smaller cost is not always better, because driving it too low tends to make the model overfit; a regularization term is therefore added to control model complexity.
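To make the three definitions concrete, here is a minimal sketch with made-up values: `reduction='none'` yields one loss per sample, their mean is the cost, and the objective adds an L2 penalty. The tensor `w` and the coefficient `lam` are hypothetical stand-ins for model weights and a regularization strength.

```python
import torch
import torch.nn as nn

# Toy predictions and labels (illustrative values only)
pred = torch.tensor([1.0, 2.0, 3.0])
target = torch.tensor([1.5, 2.0, 2.0])

# Loss: one value per sample
per_sample_loss = nn.MSELoss(reduction='none')(pred, target)  # [0.25, 0.0, 1.0]

# Cost: the average of the per-sample losses over the whole set
cost = per_sample_loss.mean()

# Objective: cost + regularization. `w` and `lam` are hypothetical model
# weights and regularization strength, used here for an L2 penalty
w = torch.tensor([0.5, -1.0])
lam = 0.1
objective = cost + lam * (w ** 2).sum()

print(per_sample_loss, cost, objective)
```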

The losses in PyTorch are built on the base class `_Loss`; its source is:

class _Loss(Module):

    # _Loss subclasses Module, so it inherits Module's 8 internal dicts

    # size_average and reduce are being deprecated; use reduction instead

    def __init__(self, size_average=None, reduce=None, reduction='mean'):

        super(_Loss, self).__init__()

        if size_average is not None or reduce is not None:

            self.reduction = _Reduction.legacy_get_string(

                size_average, reduce)

        else:

            self.reduction = reduction

II. Loss Functions

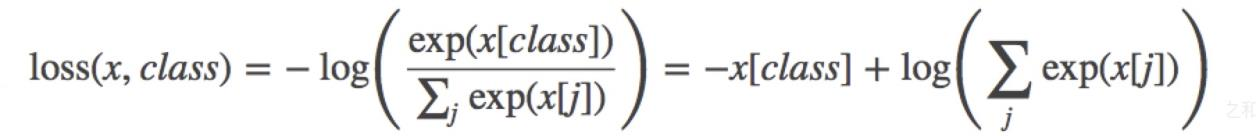

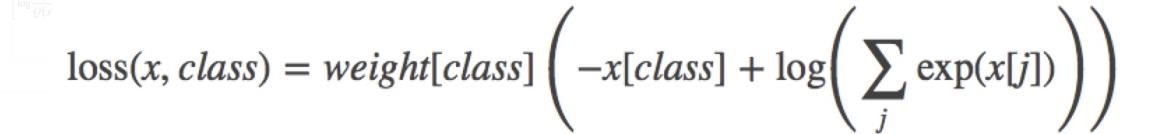

1.nn.CrossEntropyLoss

Source code:

def cross_entropy(input, target, weight=None, size_average=None, ignore_index=-100,

                  reduce=None, reduction='mean'):

    # type: (Tensor, Tensor, Optional[Tensor], Optional[bool], int, Optional[bool], str) -> Tensor

    r"""This criterion combines `log_softmax` and `nll_loss` in a single

    function.

    See :class:`~torch.nn.CrossEntropyLoss` for details.

    Args:

        input (Tensor) : :math:`(N, C)` where `C = number of classes` or :math:`(N, C, H, W)`

            in case of 2D Loss, or :math:`(N, C, d_1, d_2, ..., d_K)` where :math:`K \geq 1`

            in the case of K-dimensional loss.

        target (Tensor) : :math:`(N)` where each value is :math:`0 \leq \text{targets}[i] \leq C-1`,

            or :math:`(N, d_1, d_2, ..., d_K)` where :math:`K \geq 1` for

            K-dimensional loss.

        weight (Tensor, optional): a manual rescaling weight given to each

            class. If given, has to be a Tensor of size `C`

        size_average (bool, optional): Deprecated (see :attr:`reduction`). By default,

            the losses are averaged over each loss element in the batch. Note that for

            some losses, there multiple elements per sample. If the field :attr:`size_average`

            is set to ``False``, the losses are instead summed for each minibatch. Ignored

            when reduce is ``False``. Default: ``True``

        ignore_index (int, optional): Specifies a target value that is ignored

            and does not contribute to the input gradient. When :attr:`size_average` is

            ``True``, the loss is averaged over non-ignored targets. Default: -100

        reduce (bool, optional): Deprecated (see :attr:`reduction`). By default, the

            losses are averaged or summed over observations for each minibatch depending

            on :attr:`size_average`. When :attr:`reduce` is ``False``, returns a loss per

            batch element instead and ignores :attr:`size_average`. Default: ``True``

        reduction (string, optional): Specifies the reduction to apply to the output:

            ``'none'`` | ``'mean'`` | ``'sum'``. ``'none'``: no reduction will be applied,

            ``'mean'``: the sum of the output will be divided by the number of

            elements in the output, ``'sum'``: the output will be summed. Note: :attr:`size_average`

            and :attr:`reduce` are in the process of being deprecated, and in the meantime,

            specifying either of those two args will override :attr:`reduction`. Default: ``'mean'``

    Examples::

        >>> input = torch.randn(3, 5, requires_grad=True)

        >>> target = torch.randint(5, (3,), dtype=torch.int64)

        >>> loss = F.cross_entropy(input, target)

        >>> loss.backward()

    """

    if size_average is not None or reduce is not None:

        reduction = _Reduction.legacy_get_string(size_average, reduce)

    # combine log_softmax with nll_loss to compute the cross entropy

    return nll_loss(log_softmax(input, 1), target, weight, None, ignore_index, None, reduction)

nn.CrossEntropyLoss(weight=None,

size_average=None,

ignore_index=-100,

reduce=None,

reduction='mean')

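Purpose: combines nn.LogSoftmax() and nn.NLLLoss() to compute the cross entropy.

nn.LogSoftmax() applies softmax, which normalizes the outputs into probabilities between 0 and 1, and then takes their logarithm; nn.NLLLoss() then takes the negative log-probability of the target class, which gives the cross entropy.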

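Main parameters:

- weight: per-class weights for the loss

- ignore_index: a target class to ignore

- reduction: reduction mode, one of none/sum/mean

none - compute element by element

sum - sum all elements, returns a scalar

mean - weighted average, returns a scalar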
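A quick check of the description above, using the same fake data as this section's example: applying `nn.LogSoftmax(dim=1)` and then `nn.NLLLoss` should reproduce `nn.CrossEntropyLoss` on the raw scores. This is a minimal sketch, not the library's internal code path.

```python
import torch
import torch.nn as nn

inputs = torch.tensor([[1, 2], [1, 3], [1, 3]], dtype=torch.float)
target = torch.tensor([0, 1, 1], dtype=torch.long)

# CrossEntropyLoss applied directly to the raw scores (logits)
ce = nn.CrossEntropyLoss(reduction='none')(inputs, target)

# The same result in two steps: LogSoftmax over the class dimension, then NLLLoss
log_probs = nn.LogSoftmax(dim=1)(inputs)
nll = nn.NLLLoss(reduction='none')(log_probs, target)

# NLLLoss picks -log_probs[i, target[i]] for each sample
manual = -log_probs[torch.arange(len(target)), target]
print(ce, nll, manual)
```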

Cross entropy = information entropy + relative entropy (KL divergence):

$$H(P, Q) = H(P) + D_{KL}(P \,\|\, Q)$$

Cross entropy:

$$H(P, Q) = -\sum_{i=1}^{N} P(x_i)\log Q(x_i)$$

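Here $P$ is the true probability distribution and $Q$ is the probability distribution output by the model. Since $H(P)$ is a constant that does not depend on the model, minimizing the cross entropy in machine learning is equivalent to minimizing the relative entropy.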
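The decomposition can be checked numerically. The sketch below uses two arbitrary 3-outcome distributions (assumed values, chosen so that no probability is zero) and computes each term directly from its definition.

```python
import torch

# Two discrete distributions over 3 outcomes (illustrative values)
P = torch.tensor([0.7, 0.2, 0.1])  # "true" distribution
Q = torch.tensor([0.5, 0.3, 0.2])  # model distribution

cross_entropy = -(P * Q.log()).sum()      # H(P, Q)
entropy = -(P * P.log()).sum()            # H(P)
kl = (P * (P.log() - Q.log())).sum()      # D_KL(P || Q)

print(cross_entropy, entropy + kl)
```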

import torch

import torch.nn as nn

import torch.nn.functional as F

import numpy as np

# fake data

inputs = torch.tensor([[1, 2], [1, 3], [1, 3]], dtype=torch.float)

target = torch.tensor([0, 1, 1], dtype=torch.long)

# def loss function

loss_f_none = nn.CrossEntropyLoss(weight=None, reduction='none')

loss_f_sum = nn.CrossEntropyLoss(weight=None, reduction='sum')

loss_f_mean = nn.CrossEntropyLoss(weight=None, reduction='mean')

# forward

loss_none = loss_f_none(inputs, target)

loss_sum = loss_f_sum(inputs, target)

loss_mean = loss_f_mean(inputs, target)

# view

print("Cross Entropy Loss:\n ", loss_none, loss_sum, loss_mean)

Cross Entropy Loss:

tensor([1.3133, 0.1269, 0.1269]) tensor(1.5671) tensor(0.5224)

With the weight parameter:

import torch

import torch.nn as nn

import torch.nn.functional as F

import numpy as np

# fake data

inputs = torch.tensor([[1, 2], [1, 3], [1, 3]], dtype=torch.float)

target = torch.tensor([0, 1, 1], dtype=torch.long)

# def loss function

weights = torch.tensor([1, 2], dtype=torch.float)

# weights = torch.tensor([0.7, 0.3], dtype=torch.float)

loss_f_none_w = nn.CrossEntropyLoss(weight=weights, reduction='none')

loss_f_sum = nn.CrossEntropyLoss(weight=weights, reduction='sum')

loss_f_mean = nn.CrossEntropyLoss(weight=weights, reduction='mean')

# forward

loss_none_w = loss_f_none_w(inputs, target)

loss_sum = loss_f_sum(inputs, target)

loss_mean = loss_f_mean(inputs, target)

# view

print("\nweights: ", weights)

print(loss_none_w, loss_sum, loss_mean)

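# With weights, the losses of the 2nd and 3rd samples (target class 1) are multiplied by 2; 'mean' divides the sum by the total weight 1 + 2 + 2 = 5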

weights: tensor([1., 2.])

tensor([1.3133, 0.2539, 0.2539]) tensor(1.8210) tensor(0.3642)
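Why is the weighted `mean` 0.3642 rather than 1.8210 / 3? A minimal sketch, assuming the documented behaviour of `CrossEntropyLoss` with `weight`: the mean divides by the sum of the weights picked up by each sample's target class, here 1 + 2 + 2 = 5.

```python
import torch
import torch.nn as nn

inputs = torch.tensor([[1, 2], [1, 3], [1, 3]], dtype=torch.float)
target = torch.tensor([0, 1, 1], dtype=torch.long)
weights = torch.tensor([1, 2], dtype=torch.float)

loss_none_w = nn.CrossEntropyLoss(weight=weights, reduction='none')(inputs, target)
loss_mean = nn.CrossEntropyLoss(weight=weights, reduction='mean')(inputs, target)

# Each sample gets the weight of its target class: [1, 2, 2]
sample_weights = weights[target]
# Weighted mean = sum of weighted losses / sum of those weights (not / 3)
manual_mean = loss_none_w.sum() / sample_weights.sum()
print(loss_mean, manual_mean)
```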

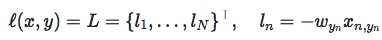

2. nn.NLLLoss

nn.NLLLoss(weight=None,

size_average=None,

ignore_index=-100,

reduce=None,

reduction='mean')

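Purpose: implements the negative sign of the negative log-likelihood: for each sample it takes the input value at the target class and negates it. The input is expected to contain log-probabilities (for example the output of nn.LogSoftmax()).

Main parameters:

- weight: per-class weights for the loss

- ignore_index: a target class to ignore

- reduction: reduction mode, one of none/sum/mean

none - compute element by element

sum - sum all elements, returns a scalar

mean - weighted average, returns a scalar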

import torch

import torch.nn as nn

import torch.nn.functional as F

import numpy as np

# fake data

inputs = torch.tensor([[1, 2], [1, 3], [1, 3]], dtype=torch.float)

target = torch.tensor([0, 1, 1], dtype=torch.long)

# weights

weights = torch.tensor([1, 1], dtype=torch.float)

loss_f_none_w = nn.NLLLoss(weight=weights, reduction='none')

loss_f_sum = nn.NLLLoss(weight=weights, reduction='sum')

loss_f_mean = nn.NLLLoss(weight=weights, reduction='mean')

# forward

loss_none_w = loss_f_none_w(inputs, target)

loss_sum = loss_f_sum(inputs, target)

loss_mean = loss_f_mean(inputs, target)

# view

print("\nweights: ", weights)

print("NLL Loss", loss_none_w, loss_sum, loss_mean)

NLL Loss tensor([-1., -3., -3.]) tensor(-7.) tensor(-2.3333)
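Because NLLLoss only indexes and negates, its result can be reproduced with plain tensor indexing. A minimal sketch on the same fake data; the inputs are raw values rather than log-probabilities, which is why the losses come out negative.

```python
import torch
import torch.nn as nn

inputs = torch.tensor([[1, 2], [1, 3], [1, 3]], dtype=torch.float)
target = torch.tensor([0, 1, 1], dtype=torch.long)

loss_none = nn.NLLLoss(reduction='none')(inputs, target)

# NLLLoss applies no log or softmax itself: it takes the entry at the
# target index of each row and flips its sign
manual = -inputs[torch.arange(inputs.size(0)), target]
print(loss_none, manual)
```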

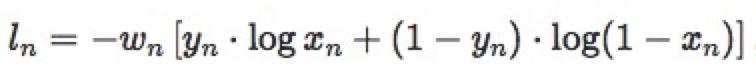

3. nn.BCELoss

nn.BCELoss(weight=None,

size_average=None,

reduce=None,

reduction='mean')

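Purpose: binary cross entropy, computed separately for each output neuron. Note: the input values must lie in [0, 1], e.g. after a sigmoid.

Main parameters:

- weight: rescaling weight for the loss, broadcast against the input (here one weight per output column)

- reduction: reduction mode, one of none/sum/mean

none - compute element by element

sum - sum all elements, returns a scalar

mean - average over all elements, returns a scalar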

import torch

import torch.nn as nn

import torch.nn.functional as F

import numpy as np

inputs = torch.tensor([[1, 2], [2, 2], [3, 4], [4, 5]], dtype=torch.float)

target = torch.tensor([[1, 0], [1, 0], [0, 1], [0, 1]], dtype=torch.float)

target_bce = target

# squash the inputs into (0, 1) with a sigmoid

inputs = torch.sigmoid(inputs)

weights = torch.tensor([1, 1], dtype=torch.float)

loss_f_none_w = nn.BCELoss(weight=weights, reduction='none')

loss_f_sum = nn.BCELoss(weight=weights, reduction='sum')

loss_f_mean = nn.BCELoss(weight=weights, reduction='mean')

# forward

loss_none_w = loss_f_none_w(inputs, target_bce)

loss_sum = loss_f_sum(inputs, target_bce)

loss_mean = loss_f_mean(inputs, target_bce)

# view

print("\nweights: ", weights)

print("BCE Loss", loss_none_w, loss_sum, loss_mean)

weights: tensor([1., 1.])

BCE Loss tensor([[0.3133, 2.1269],

[0.1269, 2.1269],

[3.0486, 0.0181],

[4.0181, 0.0067]]) tensor(11.7856) tensor(1.4732)
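BCELoss follows the element-wise formula $-[y\log x + (1-y)\log(1-x)]$. The sketch below recomputes it by hand on the same data (omitting `weight`, which is equivalent to the all-ones weights used above) to confirm the printed matrix.

```python
import torch
import torch.nn as nn

logits = torch.tensor([[1, 2], [2, 2], [3, 4], [4, 5]], dtype=torch.float)
target = torch.tensor([[1, 0], [1, 0], [0, 1], [0, 1]], dtype=torch.float)
probs = torch.sigmoid(logits)  # BCELoss expects probabilities in [0, 1]

loss_none = nn.BCELoss(reduction='none')(probs, target)

# Element-wise binary cross entropy: -[y*log(x) + (1-y)*log(1-x)]
manual = -(target * probs.log() + (1 - target) * (1 - probs).log())
print(torch.allclose(loss_none, manual, atol=1e-5))
```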

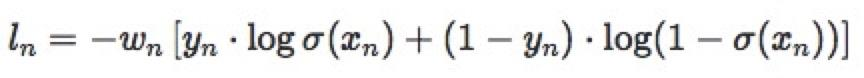

4. nn.BCEWithLogitsLoss

nn.BCEWithLogitsLoss(weight=None,

size_average=None,

reduce=None, reduction='mean',

pos_weight=None)

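Purpose: combines Sigmoid with binary cross entropy. Note: do not put a sigmoid at the end of the network, because this loss applies it internally; compared with the BCELoss formula, a sigmoid is wrapped around $x_n$.

Main parameters:

- pos_weight: weight for positive samples, making the loss pay more attention to them

- weight: rescaling weight for the loss, broadcast against the input

- reduction: reduction mode, one of none/sum/mean

none - compute element by element

sum - sum all elements, returns a scalar

mean - average over all elements, returns a scalar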

import torch

import torch.nn as nn

import torch.nn.functional as F

import numpy as np

inputs = torch.tensor([[1, 2], [2, 2], [3, 4], [4, 5]], dtype=torch.float)

target = torch.tensor([[1, 0], [1, 0], [0, 1], [0, 1]], dtype=torch.float)

target_bce = target

weights = torch.tensor([1, 1], dtype=torch.float)

loss_f_none_w = nn.BCEWithLogitsLoss(weight=weights, reduction='none')

loss_f_sum = nn.BCEWithLogitsLoss(weight=weights, reduction='sum')

loss_f_mean = nn.BCEWithLogitsLoss(weight=weights, reduction='mean')

# forward

loss_none_w = loss_f_none_w(inputs, target_bce)

loss_sum = loss_f_sum(inputs, target_bce)

loss_mean = loss_f_mean(inputs, target_bce)

# view

print("\nweights: ", weights)

print(loss_none_w, loss_sum, loss_mean)

weights: tensor([1., 1.])

tensor([[0.3133, 2.1269],

[0.1269, 2.1269],

[3.0486, 0.0181],

[4.0181, 0.0067]]) tensor(11.7856) tensor(1.4732)
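A sketch checking that `BCEWithLogitsLoss` on raw logits equals `BCELoss` after an explicit `torch.sigmoid`, on the same data as above. The PyTorch documentation notes that the fused version is more numerically stable than the two separate steps.

```python
import torch
import torch.nn as nn

logits = torch.tensor([[1, 2], [2, 2], [3, 4], [4, 5]], dtype=torch.float)
target = torch.tensor([[1, 0], [1, 0], [0, 1], [0, 1]], dtype=torch.float)

with_logits = nn.BCEWithLogitsLoss(reduction='none')(logits, target)
sigmoid_then_bce = nn.BCELoss(reduction='none')(torch.sigmoid(logits), target)

# Same values up to float rounding; the fused loss avoids computing
# log(sigmoid(x)) through an explicit sigmoid
print(torch.allclose(with_logits, sigmoid_then_bce, atol=1e-5))
```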

下面实验pos_weight参数:

import torch

import torch.nn as nn

import torch.nn.functional as F

import numpy as np

inputs = torch.tensor([[1, 2], [2, 2], [3, 4], [4, 5]], dtype=torch.float)

target = torch.tensor([[1, 0], [1, 0], [0, 1], [0, 1]], dtype=torch.float)

target_bce = target

weights = torch.tensor([1], dtype=torch.float)

# multiply the loss of elements whose target is positive (y = 1) by 3

pos_w = torch.tensor([3], dtype=torch.float) # 3

loss_f_none_w = nn.BCEWithLogitsLoss(weight=weights, reduction='none', pos_weight=pos_w)

loss_f_sum = nn.BCEWithLogitsLoss(weight=weights, reduction='sum', pos_weight=pos_w)

loss_f_mean = nn.BCEWithLogitsLoss(weight=weights, reduction='mean', pos_weight=pos_w)

# forward

loss_none_w = loss_f_none_w(inputs, target_bce)

loss_sum = loss_f_sum(inputs, target_bce)

loss_mean = loss_f_mean(inputs, target_bce)

# view

print("\npos_weights: ", pos_w)

print(loss_none_w, loss_sum, loss_mean)

pos_weights: tensor([3.])

tensor([[0.9398, 2.1269],

[0.3808, 2.1269],

[3.0486, 0.0544],

[4.0181, 0.0201]]) tensor(12.7158) tensor(1.5895)
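`pos_weight` multiplies only the term for positive targets, which is why only the positions with y = 1 were tripled above. A minimal manual reconstruction, using `F.logsigmoid` for the two log terms:

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

logits = torch.tensor([[1, 2], [2, 2], [3, 4], [4, 5]], dtype=torch.float)
target = torch.tensor([[1, 0], [1, 0], [0, 1], [0, 1]], dtype=torch.float)
pos_w = torch.tensor([3], dtype=torch.float)

loss_none = nn.BCEWithLogitsLoss(reduction='none', pos_weight=pos_w)(logits, target)

# pos_weight scales only the positive term:
# -[pos_weight * y * log(sigmoid(x)) + (1 - y) * log(1 - sigmoid(x))]
# F.logsigmoid(-x) equals log(1 - sigmoid(x))
manual = -(pos_w * target * F.logsigmoid(logits) + (1 - target) * F.logsigmoid(-logits))
print(loss_none)
```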

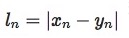

5.nn.L1Loss

nn.L1Loss(size_average=None,

reduce=None,

reduction='mean')

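Purpose: computes the absolute difference between inputs and target.

Main parameters:

- reduction: reduction mode, one of none/sum/mean

none - compute element by element

sum - sum all elements, returns a scalar

mean - average over all elements, returns a scalar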

import torch

import random

import torch.nn as nn

import torch.nn.functional as F

import matplotlib.pyplot as plt

import numpy as np

def set_seed(seed=1):

    random.seed(seed)

    np.random.seed(seed)

    torch.manual_seed(seed)

    torch.cuda.manual_seed(seed)

set_seed(1)  # set the random seed

inputs = torch.ones((2, 2))

target = torch.ones((2, 2)) * 3

loss_f = nn.L1Loss(reduction='none')

loss = loss_f(inputs, target)

print("input:{}\ntarget:{}\nL1 loss:{}".format(inputs, target, loss))

input:tensor([[1., 1.],

[1., 1.]])

target:tensor([[3., 3.],

[3., 3.]])

L1 loss:tensor([[2., 2.],

[2., 2.]])

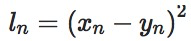

6. nn.MSELoss

nn.MSELoss(size_average=None,

reduce=None,

reduction='mean')

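Purpose: computes the squared difference between inputs and target.

Main parameters:

- reduction: reduction mode, one of none/sum/mean

none - compute element by element

sum - sum all elements, returns a scalar

mean - average over all elements, returns a scalar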

import torch

import random

import torch.nn as nn

import torch.nn.functional as F

import matplotlib.pyplot as plt

import numpy as np

def set_seed(seed=1):

    random.seed(seed)

    np.random.seed(seed)

    torch.manual_seed(seed)

    torch.cuda.manual_seed(seed)

set_seed(1)  # set the random seed

inputs = torch.ones((2, 2))

target = torch.ones((2, 2)) * 3

loss_f_mse = nn.MSELoss(reduction='none')

loss_mse = loss_f_mse(inputs, target)

print("MSE loss:{}".format(loss_mse))
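The `none` output of MSELoss and the effect of `mean` can be checked directly. The sketch below reuses the same 2×2 tensors (every element differs by 2) and also compares with L1Loss.

```python
import torch
import torch.nn as nn

inputs = torch.ones((2, 2))
target = torch.ones((2, 2)) * 3

mse_none = nn.MSELoss(reduction='none')(inputs, target)  # (1 - 3)^2 = 4 per element
mse_mean = nn.MSELoss(reduction='mean')(inputs, target)  # average of the four 4s
l1_mean = nn.L1Loss(reduction='mean')(inputs, target)    # average of |1 - 3| = 2
print(mse_none, mse_mean, l1_mean)
```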

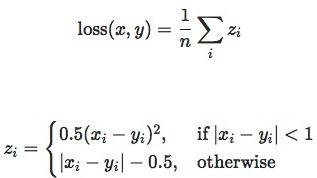

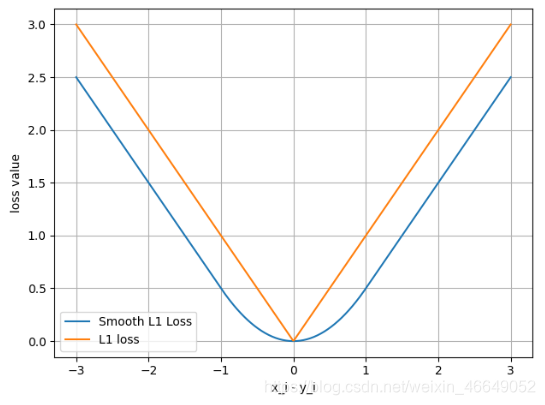

7. SmoothL1Loss

nn.SmoothL1Loss(size_average=None,

reduce=None,

reduction='mean')

Purpose: a smoothed L1 loss; unlike L1Loss, it is differentiable everywhere, including at 0.

import torch

import random

import torch.nn as nn

import torch.nn.functional as F

import matplotlib.pyplot as plt

import numpy as np

def set_seed(seed=1):

    random.seed(seed)

    np.random.seed(seed)

    torch.manual_seed(seed)

    torch.cuda.manual_seed(seed)

set_seed(1)  # set the random seed

inputs = torch.linspace(-3, 3, steps=500)

target = torch.zeros_like(inputs)

loss_f = nn.SmoothL1Loss(reduction='none')

loss_smooth = loss_f(inputs, target)

loss_l1 = np.abs(inputs.numpy())

plt.plot(inputs.numpy(), loss_smooth.numpy(), label='Smooth L1 Loss')

plt.plot(inputs.numpy(), loss_l1, label='L1 loss')

plt.xlabel('x_i - y_i')

plt.ylabel('loss value')

plt.legend()

plt.show()
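With the default threshold of 1 (exposed as the `beta` argument in recent PyTorch versions), SmoothL1Loss is $0.5x^2$ for $|x| < 1$ and $|x| - 0.5$ otherwise. A small sketch comparing that piecewise definition with the built-in on a coarse grid:

```python
import torch
import torch.nn as nn

x = torch.linspace(-3, 3, steps=13)  # differences x_i - y_i
target = torch.zeros_like(x)

smooth = nn.SmoothL1Loss(reduction='none')(x, target)

# Piecewise definition with threshold 1:
# 0.5 * x^2 when |x| < 1, otherwise |x| - 0.5
manual = torch.where(x.abs() < 1, 0.5 * x ** 2, x.abs() - 0.5)
print(torch.allclose(smooth, manual))
```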

8.PoissonNLLLoss

nn.PoissonNLLLoss(log_input=True,

full=False,

size_average=None,

eps=1e-08,

reduce=None,

reduction='mean')

Purpose: negative log-likelihood loss for a target that follows a Poisson distribution.

Main parameters:

- log_input: whether the input is given in log form; this decides the formula

log_input = True: $loss(input, target) = \exp(input) - target \cdot input$

log_input = False: $loss(input, target) = input - target \cdot \log(input + eps)$

- full: whether to compute the full loss, i.e. add the Stirling approximation term; default False

- eps: a correction term that keeps log(input) from producing nan when log_input = False

import torch

import random

import torch.nn as nn

import torch.nn.functional as F

import matplotlib.pyplot as plt

import numpy as np

def set_seed(seed=1):

    random.seed(seed)

    np.random.seed(seed)

    torch.manual_seed(seed)

    torch.cuda.manual_seed(seed)

set_seed(1)  # set the random seed

inputs = torch.randn((2, 2))

target = torch.randn((2, 2))

loss_f = nn.PoissonNLLLoss(log_input=True, full=False, reduction='none')

loss = loss_f(inputs, target)

print("input:{}\ntarget:{}\nPoisson NLL loss:{}".format(inputs, target, loss))

input:tensor([[0.6614, 0.2669],

[0.0617, 0.6213]])

target:tensor([[-0.4519, -0.1661],

[-1.5228, 0.3817]])

Poisson NLL loss:tensor([[2.2363, 1.3503],

[1.1575, 1.6242]])
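With `log_input=True` the loss is $\exp(\text{input}) - \text{target}\cdot\text{input}$. The sketch below plugs in the rounded values printed above, so agreement with the printed loss is only to about 3 decimals. (The targets in this demo are random normal numbers; real Poisson targets would be non-negative counts.)

```python
import torch
import torch.nn as nn

# Rounded values copied from the printed output above
inputs = torch.tensor([[0.6614, 0.2669], [0.0617, 0.6213]])
target = torch.tensor([[-0.4519, -0.1661], [-1.5228, 0.3817]])

loss = nn.PoissonNLLLoss(log_input=True, full=False, reduction='none')(inputs, target)

# log_input=True: loss = exp(input) - target * input
manual = torch.exp(inputs) - target * inputs
print(loss)
```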

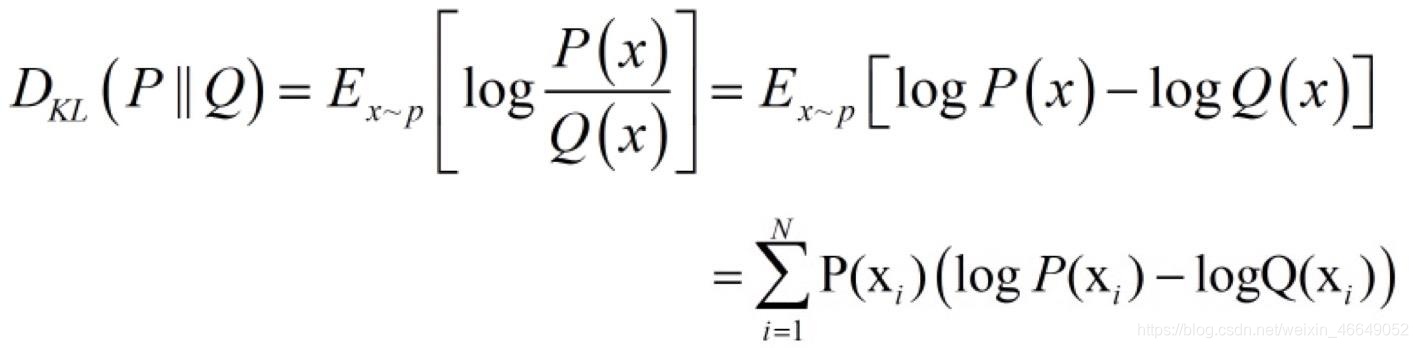

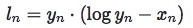

9.KLDivLoss

nn.KLDivLoss(size_average=None,

reduce=None,

reduction='mean')

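Purpose: computes the KLD (Kullback-Leibler divergence), also called KL divergence or relative entropy.

Note: the input must already be log-probabilities, e.g. computed with nn.LogSoftmax().

Main parameters:

- reduction: none/sum/mean/batchmean

batchmean - average over the batch dimension (the divisor is the batch size, not the number of elements)

none - compute element by element

sum - sum all elements, returns a scalar

mean - average over all elements, returns a scalar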

import torch

import random

import torch.nn as nn

import torch.nn.functional as F

import matplotlib.pyplot as plt

import numpy as np

def set_seed(seed=1):

    random.seed(seed)

    np.random.seed(seed)

    torch.manual_seed(seed)

    torch.cuda.manual_seed(seed)

set_seed(1)  # set the random seed

inputs = torch.tensor([[0.5, 0.3, 0.2], [0.2, 0.3, 0.5]])

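# PyTorch does not use the textbook formula directly: it assumes the input is already log-probabilities, so it skips the log(input) step

# The definition contains log(y_i / x_i) = log(y_i) - log(x_i); in PyTorch this becomes log(y_i) - x_i, where x_i is already a log-probability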

inputs = F.log_softmax(inputs, 1)

target = torch.tensor([[0.9, 0.05, 0.05], [0.1, 0.7, 0.2]], dtype=torch.float)

loss_f_none = nn.KLDivLoss(reduction='none')

loss_f_mean = nn.KLDivLoss(reduction='mean')

loss_f_bs_mean = nn.KLDivLoss(reduction='batchmean')

loss_none = loss_f_none(inputs, target)

loss_mean = loss_f_mean(inputs, target)

loss_bs_mean = loss_f_bs_mean(inputs, target)

print("loss_none:\n{}\nloss_mean:\n{}\nloss_bs_mean:\n{}".format(loss_none, loss_mean, loss_bs_mean))

loss_none:

tensor([[ 0.7510, -0.0928, -0.0878],

[-0.1063, 0.5482, -0.1339]])

loss_mean:

0.1464076191186905

loss_bs_mean:

0.43922287225723267
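PyTorch's element-wise KLDivLoss is `target * (log(target) - input)`, with `input` already in log space. A sketch reproducing the `none` and `batchmean` results above:

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

inputs = F.log_softmax(torch.tensor([[0.5, 0.3, 0.2], [0.2, 0.3, 0.5]]), dim=1)
target = torch.tensor([[0.9, 0.05, 0.05], [0.1, 0.7, 0.2]])

loss_none = nn.KLDivLoss(reduction='none')(inputs, target)
loss_bs_mean = nn.KLDivLoss(reduction='batchmean')(inputs, target)

# Element-wise: target * (log(target) - input), input being a log-probability
manual = target * (target.log() - inputs)
# 'batchmean' divides the total by the batch size (2); 'mean' would divide by 6 elements
manual_bs_mean = manual.sum() / inputs.size(0)
print(loss_bs_mean, manual_bs_mean)
```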

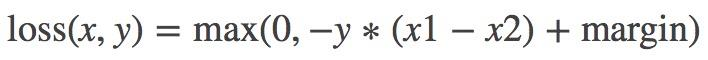

10.MarginRankingLoss

nn.MarginRankingLoss(margin=0.0,

size_average=None,

reduce=None,

reduction='mean')

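Purpose: computes a ranking loss between two inputs, i.e. whether x1 should be ranked above x2; used for ranking tasks.

Special note: in the example below, x1 and x2 have shape (3, 1) while target has shape (3,), so the tensors are broadcast against each other and an n×n loss matrix is returned; only its diagonal holds the intended per-pair losses. Recent PyTorch versions check that all inputs have the same number of dimensions and raise an error for this case, so pass 1-D tensors of shape (N,) instead.

Main parameters:

- margin: the margin, i.e. the required gap between x1 and x2

- reduction: reduction mode, one of none/sum/mean

When y = 1, x1 is expected to be larger than x2: no loss is produced when x1 - x2 ≥ margin (with margin = 0, when x1 ≥ x2)

When y = -1, x2 is expected to be larger than x1: no loss is produced when x2 - x1 ≥ margin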

import torch

import random

import torch.nn as nn

import torch.nn.functional as F

import matplotlib.pyplot as plt

import numpy as np

def set_seed(seed=1):

    random.seed(seed)

    np.random.seed(seed)

    torch.manual_seed(seed)

    torch.cuda.manual_seed(seed)

set_seed(1)  # set the random seed

x1 = torch.tensor([[1], [2], [3]], dtype=torch.float)

x2 = torch.tensor([[2], [2], [2]], dtype=torch.float)

target = torch.tensor([1, 1, -1], dtype=torch.float)

loss_f_none = nn.MarginRankingLoss(margin=0, reduction='none')

loss = loss_f_none(x1, x2, target)

print(loss)

tensor([[1., 1., 0.],

[0., 0., 0.],

[0., 0., 1.]])
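The per-pair loss is $\max(0, -y(x_1 - x_2) + \text{margin})$. A minimal sketch of the same example with 1-D tensors of shape (N,), which returns one loss per pair (the diagonal of the matrix above):

```python
import torch
import torch.nn as nn

x1 = torch.tensor([1, 2, 3], dtype=torch.float)
x2 = torch.tensor([2, 2, 2], dtype=torch.float)
target = torch.tensor([1, 1, -1], dtype=torch.float)
margin = 0.0

loss = nn.MarginRankingLoss(margin=margin, reduction='none')(x1, x2, target)

# Per pair: max(0, -y * (x1 - x2) + margin)
manual = torch.clamp(-target * (x1 - x2) + margin, min=0)
print(loss)
```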

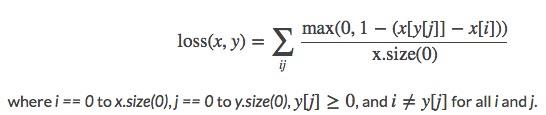

11.MultiLabelMarginLoss

nn.MultiLabelMarginLoss(size_average=None,

reduce=None,

reduction='mean')

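Purpose: multi-label margin loss. Multi-label classification is not the same as multi-class classification: in multi-label classification, one sample can have several labels.

Example: in a 4-class task where sample x belongs to classes 0 and 3, the label is written as [0, 3, -1, -1] (the class indices, padded with -1), not [1, 0, 0, 1].

Main parameters:

- reduction: reduction mode, one of none/sum/mean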

import torch

import random

import torch.nn as nn

import torch.nn.functional as F

import matplotlib.pyplot as plt

import numpy as np

def set_seed(seed=1):

    random.seed(seed)

    np.random.seed(seed)

    torch.manual_seed(seed)

    torch.cuda.manual_seed(seed)

set_seed(1)  # set the random seed

x = torch.tensor([[0.1, 0.2, 0.4, 0.8]])

y = torch.tensor([[0, 3, -1, -1]], dtype=torch.long)

loss_f = nn.MultiLabelMarginLoss(reduction='none')

loss = loss_f(x, y)

print(loss)

tensor([0.8500])
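The 0.85 above can be reproduced by hand: sum the hinge $\max(0, 1 - (x_j - x_i))$ over every (target class $j$, non-target class $i$) pair and divide by the number of classes. A small sketch that hard-codes the target set {0, 3} read from the label:

```python
import torch
import torch.nn as nn

x = torch.tensor([[0.1, 0.2, 0.4, 0.8]])
y = torch.tensor([[0, 3, -1, -1]], dtype=torch.long)
loss = nn.MultiLabelMarginLoss(reduction='none')(x, y)

# Target classes are read from y up to the first -1: {0, 3}; non-targets: {1, 2}
scores = x[0]
targets = [0, 3]
others = [1, 2]
# Sum max(0, 1 - (x[j] - x[i])) over all (target, non-target) pairs, divide by #classes
total = sum(max(0.0, 1 - (scores[j] - scores[i]).item()) for j in targets for i in others)
manual = total / x.size(1)
print(loss, manual)
```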

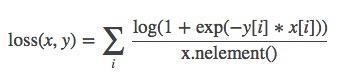

12.SoftMarginLoss

nn.SoftMarginLoss(size_average=None,

reduce=None,

reduction='mean')

Purpose: computes the two-class logistic loss.

Main parameters:

- reduction: reduction mode, one of none/sum/mean

import torch

import random

import torch.nn as nn

import torch.nn.functional as F

import matplotlib.pyplot as plt

import numpy as np

def set_seed(seed=1):

    random.seed(seed)

    np.random.seed(seed)

    torch.manual_seed(seed)

    torch.cuda.manual_seed(seed)

set_seed(1)  # set the random seed

inputs = torch.tensor([[0.3, 0.7], [0.5, 0.5]])

target = torch.tensor([[-1, 1], [1, -1]], dtype=torch.float)

loss_f = nn.SoftMarginLoss(reduction='none')

loss = loss_f(inputs, target)

print("SoftMargin: ", loss)

SoftMargin: tensor([[0.8544, 0.4032],

[0.4741, 0.9741]])
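SoftMarginLoss is the element-wise $\log(1 + e^{-yx})$; with `reduction='mean'` it is averaged over all elements. A quick check against the output above:

```python
import torch
import torch.nn as nn

inputs = torch.tensor([[0.3, 0.7], [0.5, 0.5]])
target = torch.tensor([[-1, 1], [1, -1]], dtype=torch.float)
loss = nn.SoftMarginLoss(reduction='none')(inputs, target)

# Element-wise logistic loss: log(1 + exp(-y * x))
manual = torch.log(1 + torch.exp(-target * inputs))
print(loss, manual)
```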

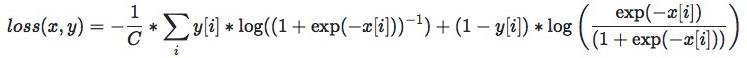

13.MultiLabelSoftMarginLoss

nn.MultiLabelSoftMarginLoss(weight=None,

size_average=None,

reduce=None,

reduction='mean')

Purpose: multi-label version of SoftMarginLoss.

Main parameters:

- weight: per-class weights for the loss

- reduction: reduction mode, one of none/sum/mean

import torch

import random

import torch.nn as nn

import torch.nn.functional as F

import matplotlib.pyplot as plt

import numpy as np

def set_seed(seed=1):

    random.seed(seed)

    np.random.seed(seed)

    torch.manual_seed(seed)

    torch.cuda.manual_seed(seed)

set_seed(1)  # set the random seed

inputs = torch.tensor([[0.3, 0.7, 0.8]])

target = torch.tensor([[0, 1, 1]], dtype=torch.float)

loss_f = nn.MultiLabelSoftMarginLoss(reduction='none')

loss = loss_f(inputs, target)

print("MultiLabel SoftMargin: ", loss)

MultiLabel SoftMargin: tensor([0.5429])
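The per-sample value is the mean over classes of the binary cross entropy on $\sigma(x)$. A sketch reproducing 0.5429:

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

inputs = torch.tensor([[0.3, 0.7, 0.8]])
target = torch.tensor([[0, 1, 1]], dtype=torch.float)
loss = nn.MultiLabelSoftMarginLoss(reduction='none')(inputs, target)

# Per sample: -(1/C) * sum_i [y_i*log(sigmoid(x_i)) + (1-y_i)*log(1-sigmoid(x_i))]
per_class = target * F.logsigmoid(inputs) + (1 - target) * F.logsigmoid(-inputs)
manual = -per_class.mean(dim=1)
print(loss, manual)
```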

14.MultiMarginLoss

nn.MultiMarginLoss(p=1,

margin=1.0,

weight=None,

size_average=None,

reduce=None,

reduction='mean')

Purpose: computes the multi-class hinge loss.

Main parameters:

- p: either 1 or 2

- weight: per-class weights for the loss

- margin: the margin

- reduction: reduction mode, one of none/sum/mean

import torch

import random

import torch.nn as nn

import torch.nn.functional as F

import matplotlib.pyplot as plt

import numpy as np

def set_seed(seed=1):

    random.seed(seed)

    np.random.seed(seed)

    torch.manual_seed(seed)

    torch.cuda.manual_seed(seed)

set_seed(1)  # set the random seed

x = torch.tensor([[0.1, 0.2, 0.7], [0.2, 0.5, 0.3]])

y = torch.tensor([1, 2], dtype=torch.long)

loss_f = nn.MultiMarginLoss(reduction='none')

loss = loss_f(x, y)

print("Multi Margin Loss: ", loss)

Multi Margin Loss: tensor([0.8000, 0.7000])
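For each sample, MultiMarginLoss sums $\max(0, \text{margin} - x_y + x_i)^p$ over the non-target classes $i$ and divides by the number of classes. The helper `multi_margin` below is a hypothetical name used only for this check:

```python
import torch
import torch.nn as nn

x = torch.tensor([[0.1, 0.2, 0.7], [0.2, 0.5, 0.3]])
y = torch.tensor([1, 2], dtype=torch.long)
loss = nn.MultiMarginLoss(reduction='none')(x, y)  # defaults: p=1, margin=1.0

def multi_margin(row, label, margin=1.0, p=1):
    # Sum over i != label of max(0, margin - x[label] + x[i])^p, divided by #classes
    terms = [max(0.0, margin - row[label].item() + row[i].item()) ** p
             for i in range(row.numel()) if i != label]
    return sum(terms) / row.numel()

manual = torch.tensor([multi_margin(x[n], y[n].item()) for n in range(x.size(0))])
print(loss, manual)
```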

15.TripletMarginLoss

nn.TripletMarginLoss(margin=1.0,

p=2.0,

eps=1e-06,

swap=False,

size_average=None,

reduce=None,

reduction='mean')

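Purpose: computes the triplet loss, commonly used in face verification.

Main parameters:

- p: order of the norm, default 2

- margin: the margin

- reduction: reduction mode, one of none/sum/mean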

import torch

import random

import torch.nn as nn

import torch.nn.functional as F

import matplotlib.pyplot as plt

import numpy as np

def set_seed(seed=1):

    random.seed(seed)

    np.random.seed(seed)

    torch.manual_seed(seed)

    torch.cuda.manual_seed(seed)

set_seed(1)  # set the random seed

anchor = torch.tensor([[1.]])

pos = torch.tensor([[2.]])

neg = torch.tensor([[0.5]])

loss_f = nn.TripletMarginLoss(margin=1.0, p=1)

loss = loss_f(anchor, pos, neg)

print("Triplet Margin Loss", loss)

Triplet Margin Loss tensor(1.5000)
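Triplet loss is $\max(d(a,p) - d(a,n) + \text{margin}, 0)$; with `p=1` the distance is the L1 norm. A manual check; PyTorch adds a small `eps` inside the distance, so the two values can differ by about 1e-6.

```python
import torch
import torch.nn as nn

anchor = torch.tensor([[1.]])
pos = torch.tensor([[2.]])
neg = torch.tensor([[0.5]])
loss = nn.TripletMarginLoss(margin=1.0, p=1)(anchor, pos, neg)

# max(d(a, p) - d(a, n) + margin, 0) with the L1 distance (p=1)
d_ap = (anchor - pos).abs().sum()  # 1.0
d_an = (anchor - neg).abs().sum()  # 0.5
manual = torch.clamp(d_ap - d_an + 1.0, min=0)
print(loss, manual)
```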

16.HingeEmbeddingLoss

nn.HingeEmbeddingLoss(margin=1.0,

size_average=None,

reduce=None,

reduction='mean')

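Purpose: measures whether two inputs are similar; commonly used for nonlinear embeddings and semi-supervised learning.

- Δ in the formula is the margin (default 1.0)

Important: the input $x$ should be the absolute difference (a distance) between the two inputs, and it must be computed manually beforehand.

Main parameters:

- margin: the margin

- reduction: reduction mode, one of none/sum/mean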

import torch

import random

import torch.nn as nn

import torch.nn.functional as F

import matplotlib.pyplot as plt

import numpy as np

def set_seed(seed=1):

    random.seed(seed)

    np.random.seed(seed)

    torch.manual_seed(seed)

    torch.cuda.manual_seed(seed)

set_seed(1)  # set the random seed

inputs = torch.tensor([[1., 0.8, 0.5]])

target = torch.tensor([[1, 1, -1]])

loss_f = nn.HingeEmbeddingLoss(margin=1, reduction='none')

loss = loss_f(inputs, target)

print("Hinge Embedding Loss", loss)

Hinge Embedding Loss tensor([[1.0000, 0.8000, 0.5000]])
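HingeEmbeddingLoss returns $x$ where $y = 1$ and $\max(0, \Delta - x)$ where $y = -1$. A sketch reproducing the output above, using a float target:

```python
import torch
import torch.nn as nn

inputs = torch.tensor([[1., 0.8, 0.5]])
target = torch.tensor([[1, 1, -1]], dtype=torch.float)
margin = 1.0
loss = nn.HingeEmbeddingLoss(margin=margin, reduction='none')(inputs, target)

# y = 1: loss = x;  y = -1: loss = max(0, margin - x)
manual = torch.where(target == 1, inputs, torch.clamp(margin - inputs, min=0))
print(loss, manual)
```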

17.CosineEmbeddingLoss

nn.CosineEmbeddingLoss(margin=0.0,

size_average=None,

reduce=None,

reduction='mean')

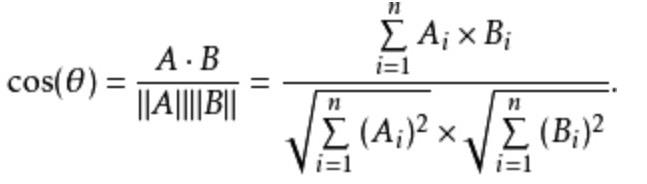

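Purpose: measures the similarity of two inputs using cosine similarity.

Main parameters:

- margin: can take values in [-1, 1]; [0, 0.5] is recommended

- reduction: reduction mode, one of none/sum/mean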

import torch

import random

import torch.nn as nn

import torch.nn.functional as F

import matplotlib.pyplot as plt

import numpy as np

def set_seed(seed=1):

    random.seed(seed)

    np.random.seed(seed)

    torch.manual_seed(seed)

    torch.cuda.manual_seed(seed)

set_seed(1)  # set the random seed

x1 = torch.tensor([[0.3, 0.5, 0.7], [0.3, 0.5, 0.7]])

x2 = torch.tensor([[0.1, 0.3, 0.5], [0.1, 0.3, 0.5]])

target = torch.tensor([1, -1], dtype=torch.float)  # 1-D target: one label per row of x1/x2

loss_f = nn.CosineEmbeddingLoss(margin=0., reduction='none')

loss = loss_f(x1, x2, target)

print("Cosine Embedding Loss", loss)

Cosine Embedding Loss tensor([0.0167, 0.9833])
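CosineEmbeddingLoss uses $1 - \cos(x_1, x_2)$ for $y = 1$ and $\max(0, \cos(x_1, x_2) - \text{margin})$ for $y = -1$. A sketch with a 1-D target (one label per row), checked against `F.cosine_similarity`:

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

x1 = torch.tensor([[0.3, 0.5, 0.7], [0.3, 0.5, 0.7]])
x2 = torch.tensor([[0.1, 0.3, 0.5], [0.1, 0.3, 0.5]])
target = torch.tensor([1, -1], dtype=torch.float)  # one label per row
margin = 0.0
loss = nn.CosineEmbeddingLoss(margin=margin, reduction='none')(x1, x2, target)

cos = F.cosine_similarity(x1, x2, dim=1)
# y = 1: 1 - cos;  y = -1: max(0, cos - margin)
manual = torch.where(target == 1, 1 - cos, torch.clamp(cos - margin, min=0))
print(loss, manual)
```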

18.CTCLoss

torch.nn.CTCLoss(blank=0,

reduction='mean',

zero_infinity=False)

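Purpose: computes the CTC loss, used for classifying sequential (temporal) data.

Connectionist Temporal Classification

Main parameters:

- blank: index of the blank label

- zero_infinity: whether to set infinite losses and the associated gradients to 0

- reduction: reduction mode, one of none/sum/mean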

import torch

import random

import torch.nn as nn

import torch.nn.functional as F

import matplotlib.pyplot as plt

import numpy as np

def set_seed(seed=1):

    random.seed(seed)

    np.random.seed(seed)

    torch.manual_seed(seed)

    torch.cuda.manual_seed(seed)

set_seed(1)  # set the random seed

T = 50 # Input sequence length

C = 20 # Number of classes (including blank)

N = 16 # Batch size

S = 30 # Target sequence length of longest target in batch

S_min = 10 # Minimum target length, for demonstration purposes

# Initialize random batch of input vectors, for *size = (T,N,C)

inputs = torch.randn(T, N, C).log_softmax(2).detach().requires_grad_()

# Initialize random batch of targets (0 = blank, 1:C = classes)

target = torch.randint(low=1, high=C, size=(N, S), dtype=torch.long)

input_lengths = torch.full(size=(N,), fill_value=T, dtype=torch.long)

target_lengths = torch.randint(low=S_min, high=S, size=(N,), dtype=torch.long)

ctc_loss = nn.CTCLoss()

loss = ctc_loss(inputs, target, input_lengths, target_lengths)

print("CTC loss: ", loss)

CTC loss: tensor(7.5385, grad_fn=<MeanBackward0>)
