A Simple PyTorch Project Template

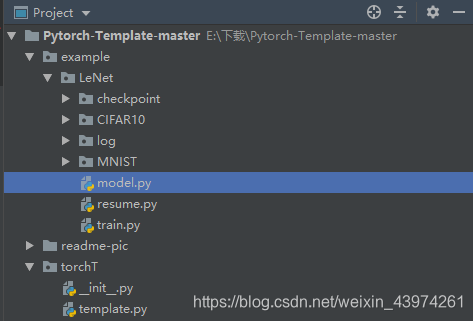

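When I started learning PyTorch, I did not know how a complete project should be organized. Most templates I found online were too complex for me to follow, until I came across one that suits beginners. It covers data loading, model definition, training, checkpoint saving, tracking the best model, and plotting for analysis. Template: https://github.com/KinglittleQ/Pytorch-Template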

PyTorch project workflow

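A deep learning project written in PyTorch generally consists of the following parts:

Model definition

Data processing and loading

Training

Testing

Visualization of the training process

The complete template comes first; the individual parts are explained afterwards: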

    import os
    import os.path as osp

    import torch


    class TemplateModel():

        def __init__(self):
            self.writer = None
            self.train_logger = None  # not necessary
            self.eval_logger = None  # not necessary
            self.args = None  # not necessary

            self.step = 0
            self.epoch = 0
            # self.sum_loss=0
            self.correct = 0  # correctly classified training samples in the current epoch
            self.total = 0  # training samples seen in the current epoch
            self.best_acc = 0.0  # accuracy is higher-is-better, so start from the lowest value

            self.model = None
            self.optimizer = None
            self.criterion = None
            self.metric = None

            self.train_loader = None
            self.test_loader = None

            self.device = None
            self.ckpt_dir = None
            self.log_per_step = None  # log the loss once every this many steps
            # self.eval_per_epoch = None

        def check_init(self):  # make sure every required attribute has been set
            assert self.model
            assert self.optimizer
            assert self.criterion
            assert self.metric
            assert self.train_loader
            assert self.test_loader
            assert self.device
            assert self.ckpt_dir
            assert self.log_per_step

            # create the checkpoint directory if it does not exist yet
            os.makedirs(self.ckpt_dir, exist_ok=True)

        def load_state(self, fname, optim=True):  # load model parameters from a checkpoint
            # map_location lets a checkpoint saved on one device load onto the current one
            state = torch.load(fname, map_location=self.device)

            # a DataParallel wrapper keeps the real model in .module
            if isinstance(self.model, torch.nn.DataParallel):
                self.model.module.load_state_dict(state['model'])
            else:
                self.model.load_state_dict(state['model'])

            if optim and 'optimizer' in state:
                self.optimizer.load_state_dict(state['optimizer'])
            self.step = state['step']
            self.epoch = state['epoch']
            self.best_acc = state['best_acc']
            print('load model from {}'.format(fname))

        def save_state(self, fname, optim=True):  # save model parameters to a checkpoint
            state = {}

            # a DataParallel wrapper keeps the real model in .module
            if isinstance(self.model, torch.nn.DataParallel):
                state['model'] = self.model.module.state_dict()
            else:
                state['model'] = self.model.state_dict()

            if optim:
                state['optimizer'] = self.optimizer.state_dict()
            state['step'] = self.step
            state['epoch'] = self.epoch
            state['best_acc'] = self.best_acc
            torch.save(state, fname)
            print('save model at {}'.format(fname))

        def train(self):  # train for one epoch
            self.model.train()
            self.epoch += 1
            # reset the running accuracy counters at the start of each epoch
            self.correct = 0
            self.total = 0
            for batch in self.train_loader:
                self.step += 1
                self.optimizer.zero_grad()

                loss, acc = self.train_loss(batch)
                loss.backward()
                self.optimizer.step()
                # self.sum_loss+=loss.item()

                if self.step % self.log_per_step == 0:
                    self.writer.add_scalar('loss', loss.item(), self.step)
                    print('train epoch {}\tstep {}\tloss {:.3}\tacc {:.3}'.format(self.epoch, self.step, loss.item(), acc))
                    if self.train_logger:
                        self.train_logger(self.writer, acc)

        def train_loss(self, batch):
            x, y = batch
            x = x.to(self.device)
            y = y.to(self.device)
            pred = self.model(x)
            loss = self.criterion(pred, y)

            # running training accuracy over the current epoch
            predicted = pred.argmax(dim=1)
            # .item() turns the count into a Python int, so acc below is a plain float
            self.correct += predicted.eq(y).sum().item()
            self.total += y.size(0)
            acc = self.correct / self.total

            return loss, acc

        def eval(self):
            self.model.eval()
            acc, others = self.eval_acc()

            # keep a separate copy of the best model seen so far (weights only)
            if acc > self.best_acc:
                self.best_acc = acc
                self.save_state(osp.join(self.ckpt_dir, 'best.pth.tar'), False)
            # also save a full checkpoint for every evaluated epoch
            self.save_state(osp.join(self.ckpt_dir, '{}.pth.tar'.format(self.epoch)))
            self.writer.add_scalar('acc', acc, self.epoch)
            print('epoch {}\tacc {:.3}'.format(self.epoch, acc))

            if self.eval_logger:
                self.eval_logger(self.writer, others)

            return acc

        def eval_acc(self):
            xs, ys, preds = [], [], []
            # no gradients are needed for evaluation
            with torch.no_grad():
                for batch in self.test_loader:
                    x, y = batch
                    x = x.to(self.device)
                    y = y.to(self.device)
                    pred = self.model(x)

                    xs.append(x.cpu())
                    ys.append(y.cpu())
                    preds.append(pred.cpu())

            # concatenate all batches, then score the whole test set at once
            xs = torch.cat(xs, dim=0)
            ys = torch.cat(ys, dim=0)
            preds = torch.cat(preds, dim=0)
            acc = self.metric(preds, ys)

            return acc, None

        def inference(self, x):
            x = x.to(self.device)
            return self.model(x)

        def num_parameters(self):
            # total number of elements across all model parameters
            return sum(p.numel() for p in self.model.parameters())
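The best-model bookkeeping in eval() depends on which direction counts as "better" for the tracked metric. Below is a minimal sketch of that logic in plain Python, with no PyTorch involved. The class name BestTracker and its higher_is_better flag are hypothetical and are not part of the template; they only illustrate the comparison.

```python
class BestTracker:
    """Remembers the best metric value seen so far (hypothetical helper)."""

    def __init__(self, higher_is_better=True):
        self.higher_is_better = higher_is_better
        # Start from the worst possible value so the first result always wins.
        self.best = float('-inf') if higher_is_better else float('inf')

    def update(self, value):
        """Return True when `value` beats the best so far (time to save)."""
        if self.higher_is_better:
            improved = value > self.best
        else:
            improved = value < self.best
        if improved:
            self.best = value
        return improved


# Accuracy per epoch: higher is better.
tracker = BestTracker(higher_is_better=True)
saved = [tracker.update(acc) for acc in [0.41, 0.55, 0.52, 0.60]]
print(saved)         # True marks the epochs that would overwrite the best checkpoint
print(tracker.best)
```

With an accuracy, the starting value has to sit at the low end; a starting value of positive infinity only suits a metric such as an error rate, where lower is better.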

Model definition

The model is something you have to implement yourself. Mine is defined in the model module (model.py):

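    import torch
    import torch.nn as nn
    import torch.nn.functional as F


    class LeNet(nn.Module):
        def __init__(self, act='relu'):  # note: act is accepted but not used in this version
            # Python 2-style super() call; it still works in Python 3, where super().__init__() is equivalent
            super(LeNet, self).__init__()
            self.conv1 = nn.Conv2d(3, 6, 5)  # first convolution: 3 input channels, 6 output channels, 5x5 kernel
            self.pool = nn.MaxPool2d(2, 2)  # 2x2 max pooling with stride 2
            self.conv2 = nn.Conv2d(6, 16, 5)  # second convolution: 6 -> 16 channels, 5x5 kernel
            self.fc1 = nn.Linear(16 * 5 * 5, 120)  # followed by three fully connected layers
            self.fc2 = nn.Linear(120, 84)
            self.fc3 = nn.Linear(84, 10)

        def forward(self, x):
            x = self.pool(F.relu(self.conv1(x)))
            x = self.pool(F.relu(self.conv2(x)))
            # .view() changes a tensor's shape while keeping the total number of elements the same.
            # -1 means "infer this dimension from the others": with the element count fixed,
            # fixing the number of columns determines the number of rows (here, the batch size).
            # Only the column count matters because the fully connected layer is a matrix multiplication:
            # fc1 expects 16*5*5 inputs per sample, so x must be flattened to that width first.
            x = x.view(-1, 16 * 5 * 5)
            x = F.relu(self.fc1(x))
            x = F.relu(self.fc2(x))
            x = self.fc3(x)
            return x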
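To see where the 16 * 5 * 5 in fc1 and in the view() call comes from, the spatial size can be traced layer by layer. The sketch below uses the standard output-size formula for convolution and pooling layers on a square input. The helper name conv_out is hypothetical, and the 32x32 input size is the CIFAR-10 image size.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Output side length of a conv/pool layer applied to a square input."""
    return (size + 2 * padding - kernel) // stride + 1


size = 32                       # CIFAR-10 images are 32x32
size = conv_out(size, 5)        # conv1, 5x5 kernel        -> 28
size = conv_out(size, 2, 2)     # 2x2 max pool, stride 2   -> 14
size = conv_out(size, 5)        # conv2, 5x5 kernel        -> 10
size = conv_out(size, 2, 2)     # 2x2 max pool, stride 2   -> 5

flat_features = 16 * size * size  # 16 channels come out of conv2
print(size, flat_features)        # 5 400
```

The same arithmetic for a 28x28 input ends at a side length of 4, so the flattened width would be 16 * 4 * 4 and both fc1 and the view() call would need to change accordingly.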

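Once the model is defined, you use it by assigning it when the template is initialized.

Below is the code in train.py; with these two files you can start training the model.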

    import torch
    import torch.optim as optim
    import torchvision
    import torchvision.transforms as tfs
    from torch.utils.data import DataLoader
    import tensorboardX as tX

    from example.LeNet.model import LeNet
    from torchT import TemplateModel

    lr = 0.001
    batch_size = 128
    n_epochs = 130
    eval_per_epoch = 1
    log_per_step = 100
    log_dir = 'log'
    ckpt_dir = 'checkpoint'
    # use the first GPU when available, otherwise fall back to the CPU
    device = torch.device('cuda:0' if torch.cuda.is_available() else 'cpu')

    class Model(TemplateModel):

        def __init__(self, args=None):
            super().__init__()

            self.writer = tX.SummaryWriter(log_dir=log_dir, comment='LeNet')
            self.train_logger = None
            self.eval_logger = None
            self.args = args

            self.step = 0
            self.epoch = 0
            self.best_acc = 0.0  # accuracy is higher-is-better, so start from the lowest value

            self.device = device

            self.model = LeNet().to(self.device)
            self.optimizer = optim.Adam(self.model.parameters(), lr=lr)
            self.criterion = torch.nn.CrossEntropyLoss()
            self.metric = metric

            train_transform = tfs.Compose([
                # tfs.RandomRotation(degrees=15),  # rotate the image randomly between -15 and 15 degrees
                # tfs.ColorJitter(brightness=0.5, contrast=0.5, saturation=0.5, hue=0.5),  # jitter brightness, contrast, saturation and hue
                # # transforms.RandomResizedCrop( scale=(0.1, 1), ratio=(0.5, 2)),
                # tfs.RandomHorizontalFlip(),
                tfs.ToTensor(),
                tfs.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5)),
            ])
            # the same transform is reused for the test set because no augmentation is enabled
            train_dataset = torchvision.datasets.CIFAR10(root='CIFAR10', train=True, transform=train_transform, download=True)
            train_loader = DataLoader(train_dataset, batch_size=batch_size, shuffle=True)
            test_dataset = torchvision.datasets.CIFAR10(root='CIFAR10', train=False, transform=train_transform, download=True)
            test_loader = DataLoader(test_dataset, batch_size=batch_size, shuffle=False)

            self.train_loader = train_loader
            self.test_loader = test_loader

            self.ckpt_dir = ckpt_dir
            self.log_per_step = log_per_step
            # self.eval_per_epoch = None

            self.check_init()

    def metric(pred, target):
        # predicted class = index of the largest score in each row
        pred = torch.argmax(pred, dim=1)
        correct_num = torch.sum(pred == target).item()
        total_num = target.size(0)
        accuracy = correct_num / total_num
        # return 1. - accuracy
        return accuracy

    def main():
        model = Model()

        for i in range(n_epochs):
            model.train()
            if model.epoch % eval_per_epoch == 0:
                print('Waiting Test!')
                with torch.no_grad():
                    model.eval()

        print('Done!!!')


    if __name__ == '__main__':
        print(torch.__version__)
        print(torch.cuda.is_available())
        main()

The network is plugged in inside Model.__init__, at the line self.model = LeNet().to(self.device).

Everything else stays much the same for other projects: you only need to override a few of the template's attributes.
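The override pattern can be sketched without PyTorch. In the sketch below, TemplateSketch is a hypothetical stand-in for TemplateModel that keeps only the attribute check, and the strings are placeholders for real model, optimizer, and loss objects. Unlike the bare assert statements in the template, this variant of check_init reports which attribute was forgotten; that behavior is an assumption added for illustration.

```python
class TemplateSketch:
    """Hypothetical stand-in for TemplateModel, reduced to the attribute check."""

    required = ('model', 'optimizer', 'criterion', 'train_loader')

    def __init__(self):
        # Every required attribute starts out unset, as in the template.
        for name in self.required:
            setattr(self, name, None)

    def check_init(self):
        missing = [name for name in self.required if getattr(self, name) is None]
        if missing:
            raise ValueError('not set: ' + ', '.join(missing))


class MyProject(TemplateSketch):
    def __init__(self):
        super().__init__()
        # A project overrides only the attributes it needs.
        self.model = 'model goes here'
        self.optimizer = 'optimizer goes here'
        self.criterion = 'loss goes here'
        # train_loader is deliberately left unset to trigger the check.


try:
    MyProject().check_init()
    message = 'all set'
except ValueError as err:
    message = str(err)
print(message)
```

Calling the check at the end of the subclass constructor, as train.py does, surfaces a forgotten attribute before any training starts.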

I modified parts of the code: I added accuracy computation during training, and changed the evaluation metric from error rate to accuracy.
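Both changes come down to counting. The following plain-Python sketch works on lists of class scores instead of tensors, so it runs without PyTorch. The names RunningAccuracy and argmax are hypothetical and only mirror what the correct and total counters do during an epoch.

```python
def argmax(row):
    """Index of the largest score in a list of class scores."""
    return max(range(len(row)), key=row.__getitem__)


class RunningAccuracy:
    """Accumulates correct/total counts across batches (hypothetical helper)."""

    def __init__(self):
        self.correct = 0
        self.total = 0

    def update(self, scores, labels):
        preds = [argmax(row) for row in scores]
        self.correct += sum(int(p == y) for p, y in zip(preds, labels))
        self.total += len(labels)
        return self.correct / self.total  # accuracy over everything seen so far


meter = RunningAccuracy()
acc_after_batch1 = meter.update([[0.1, 0.9], [0.8, 0.2]], [1, 1])  # 1 of 2 correct
acc_after_batch2 = meter.update([[0.3, 0.7], [0.6, 0.4]], [1, 0])  # 2 of 2 correct
error_rate = 1 - acc_after_batch2  # the quantity reported before the change
print(acc_after_batch1, acc_after_batch2, error_rate)  # 0.5 0.75 0.25
```

Because the counters accumulate, the value printed during training is the accuracy over all batches seen so far in the epoch, not the accuracy of the latest batch alone.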