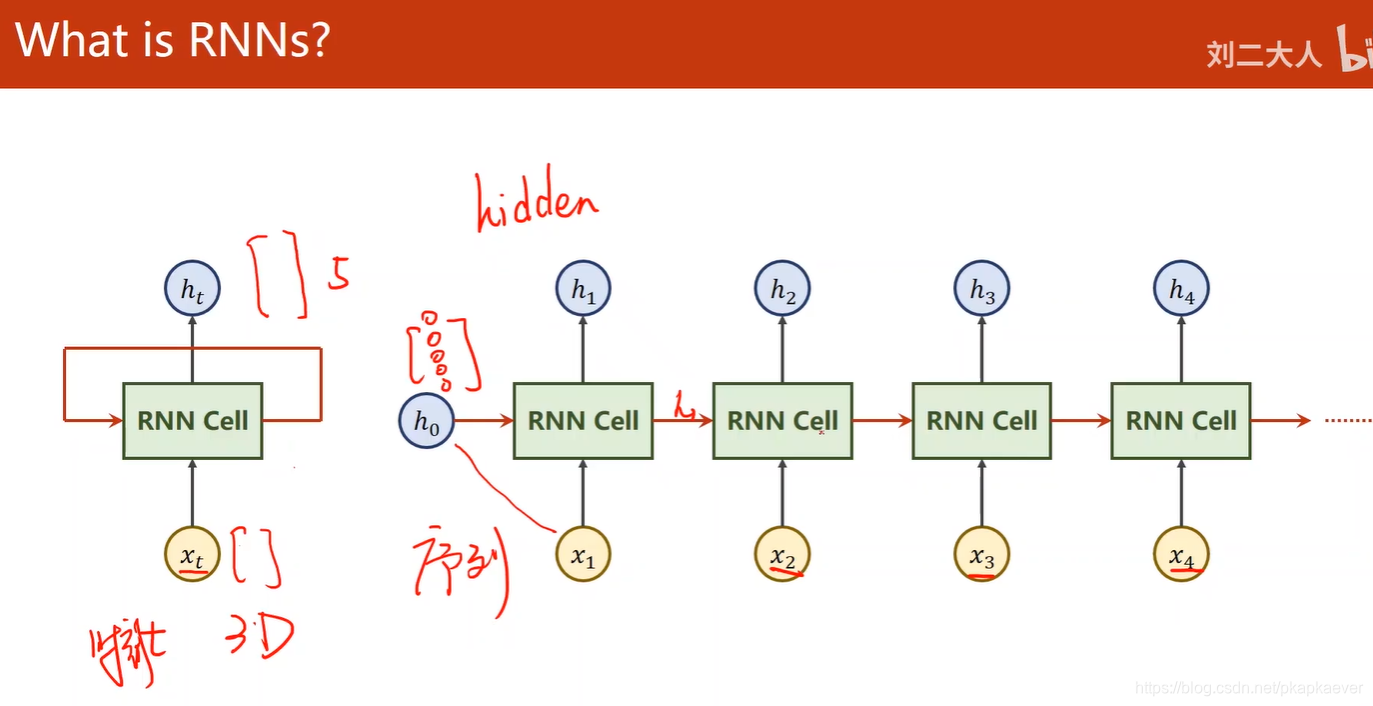

RNNs are mainly used to process data with sequential dependencies, natural language being the typical example.

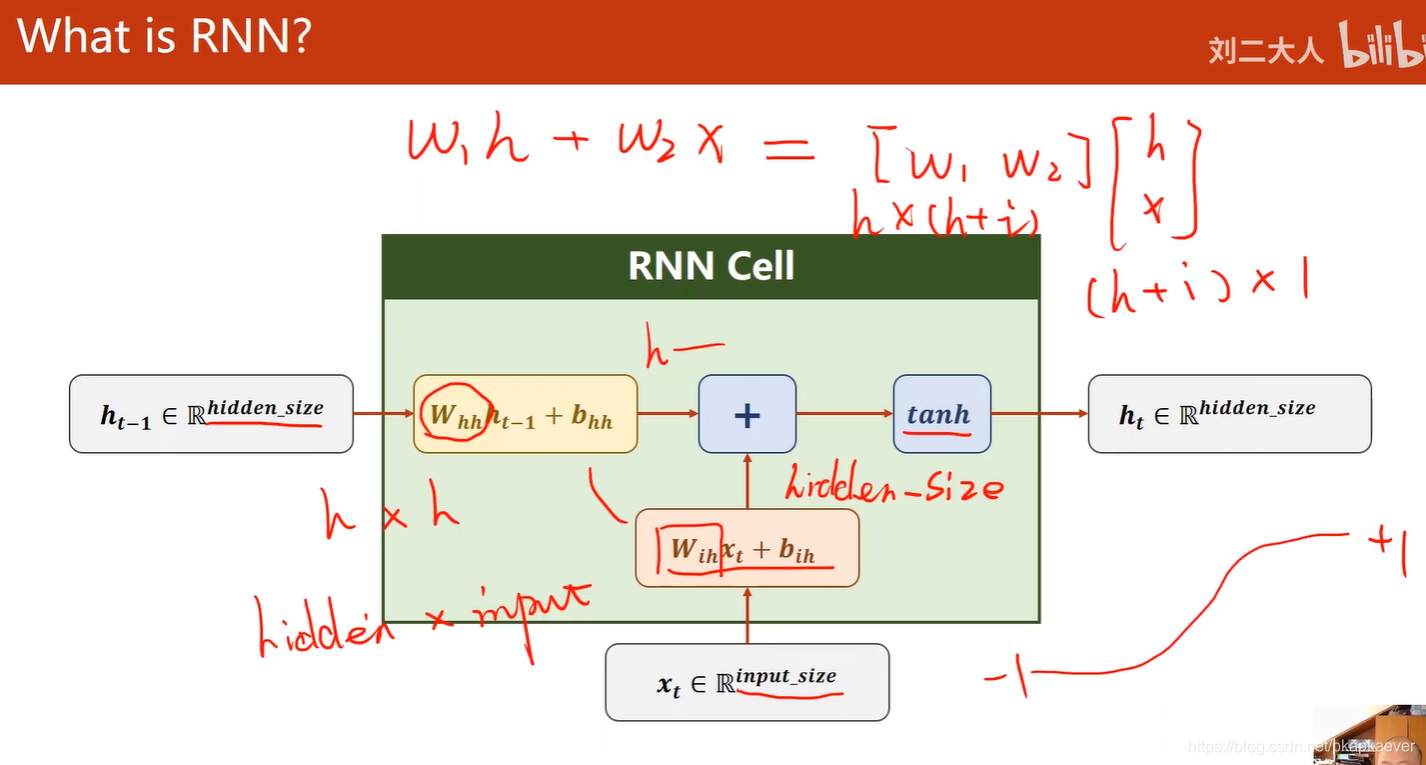

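The RNN cell is essentially a linear layer (a `Linear`) that is applied over and over, once per time step, with the same weights shared across all steps.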

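1. The input x_t is linearly transformed. W_ih is a (hidden_size × input_size) matrix that maps x_t from input_size to hidden_size dimensions.

2. The hidden state from the previous time step, h_{t-1}, is also linearly transformed, this time by W_hh, a (hidden_size × hidden_size) matrix.

3. The two resulting vectors, each of length hidden_size, are added together (along with their bias terms). This fuses the information from the current input with the history.

4. Finally, a tanh activation is applied; the result is the new hidden state h_t.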

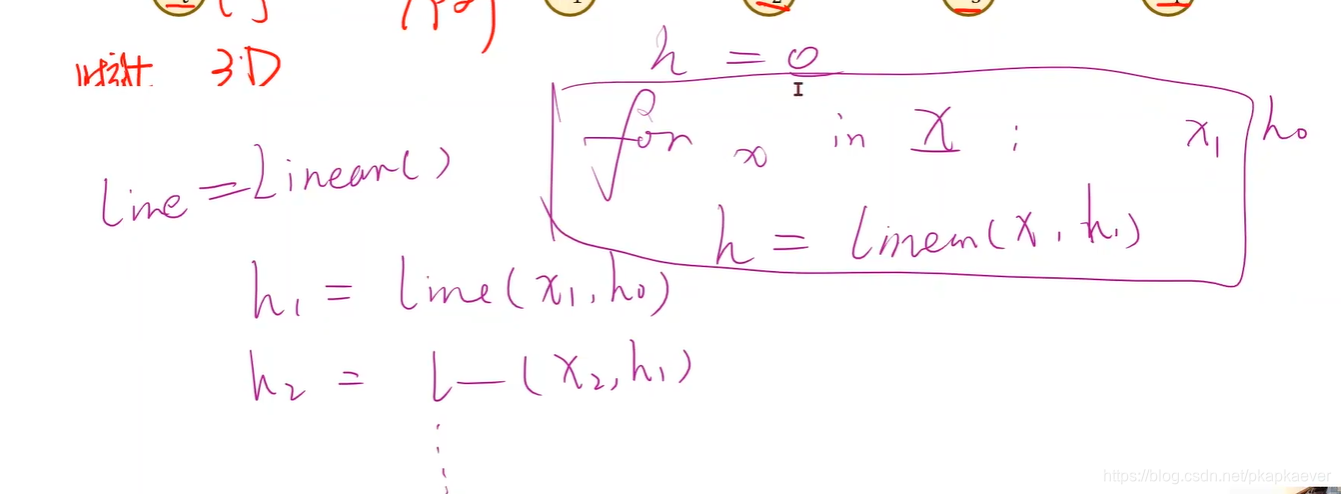

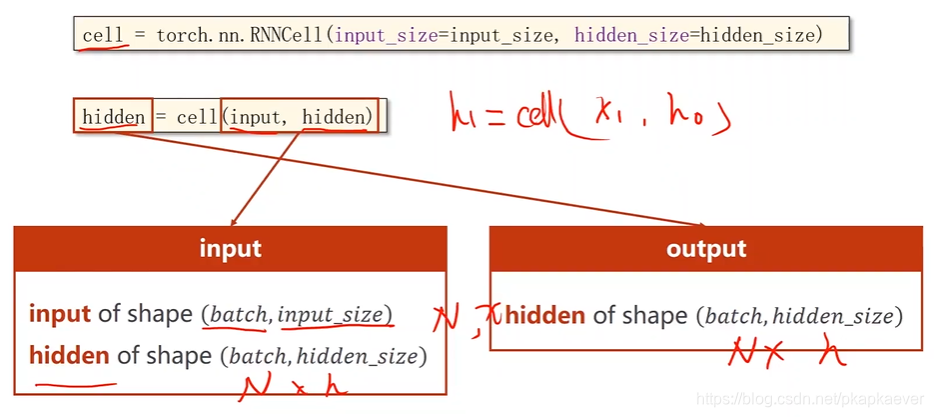
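The four steps above amount to `h_t = tanh(W_ih x_t + b_ih + W_hh h_{t-1} + b_hh)`, which is the update `torch.nn.RNNCell` computes with its default tanh nonlinearity. The following sketch recomputes one step by hand from the cell's own parameters and compares the result with the cell's output. The sizes are arbitrary and only for illustration.

```python
import torch

torch.manual_seed(0)
input_size, hidden_size, batch_size = 4, 3, 2  # arbitrary sizes for illustration

cell = torch.nn.RNNCell(input_size=input_size, hidden_size=hidden_size)  # default nonlinearity: tanh
x_t = torch.randn(batch_size, input_size)       # input at time step t
h_prev = torch.randn(batch_size, hidden_size)   # hidden state h_{t-1}

# Steps 1-4 by hand. Rows are samples, so W @ x is written as x @ W.T.
manual = torch.tanh(
    x_t @ cell.weight_ih.T + cell.bias_ih         # step 1: W_ih x_t + b_ih
    + h_prev @ cell.weight_hh.T + cell.bias_hh    # step 2: W_hh h_{t-1} + b_hh; step 3: the sum
)                                                 # step 4: tanh

h_t = cell(x_t, h_prev)
print(tuple(h_t.shape))                          # (2, 3)
print(torch.allclose(manual, h_t, atol=1e-6))    # True
```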

An RNN cell is fully specified by two values: input_size and hidden_size.

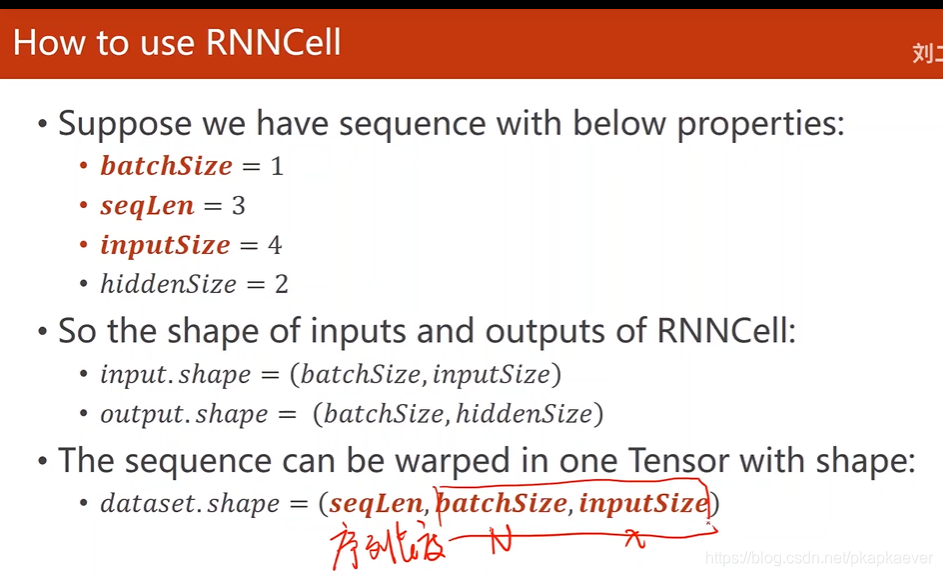
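Every learnable tensor in the cell has a shape that depends only on those two values. A quick check is sketched below. The sizes are arbitrary, and the parameter names are the attribute names that `torch.nn.RNNCell` exposes.

```python
import torch

input_size, hidden_size = 4, 2  # arbitrary example sizes

cell = torch.nn.RNNCell(input_size=input_size, hidden_size=hidden_size)

# Each parameter's shape is determined by input_size and hidden_size alone
for name, p in cell.named_parameters():
    print(name, tuple(p.shape))
# weight_ih (2, 4)
# weight_hh (2, 2)
# bias_ih (2,)
# bias_hh (2,)

n_params = sum(p.numel() for p in cell.parameters())
expected = hidden_size * input_size + hidden_size * hidden_size + 2 * hidden_size
print(n_params, expected)  # 16 16
```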

How to use RNNCell

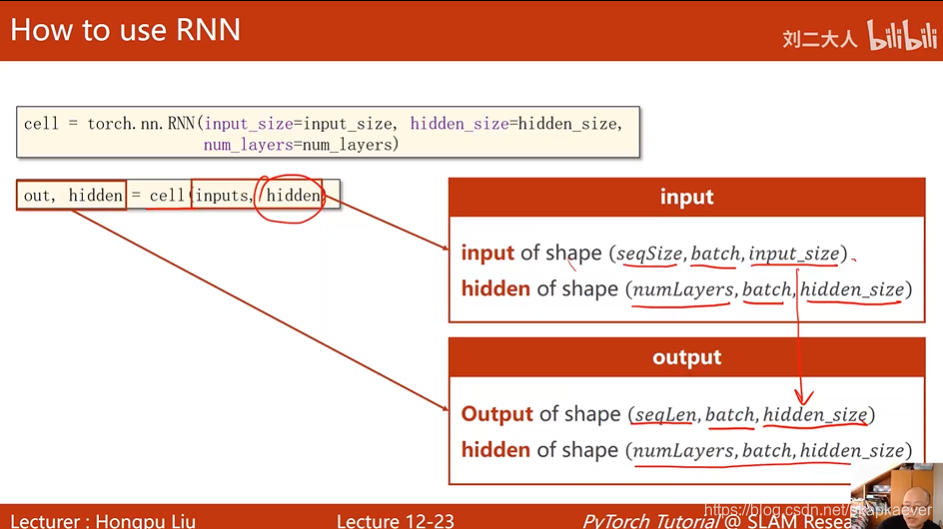

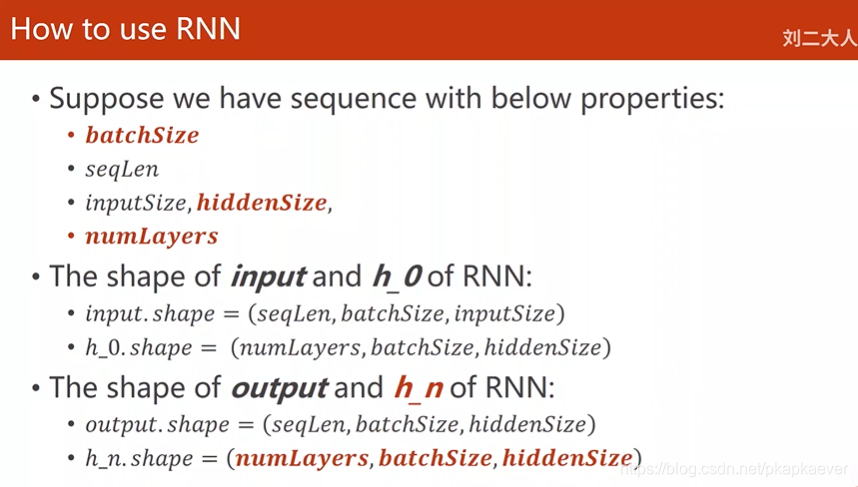

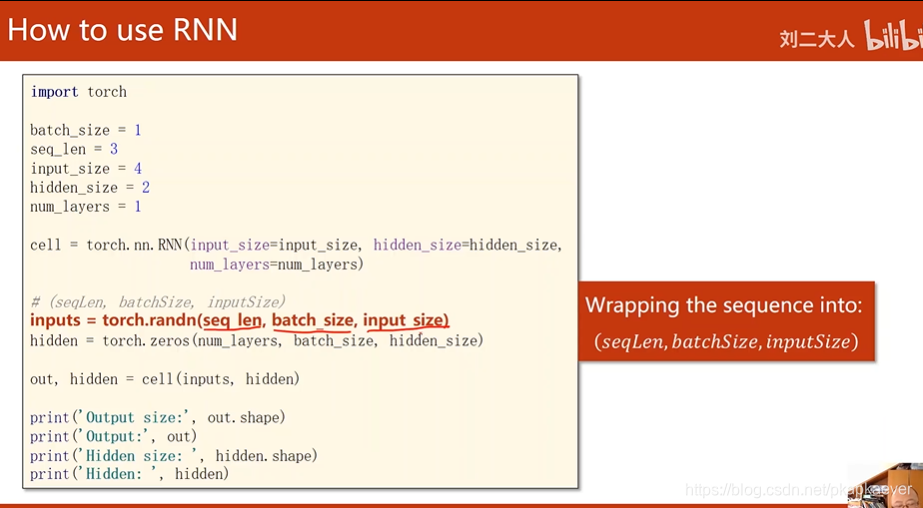

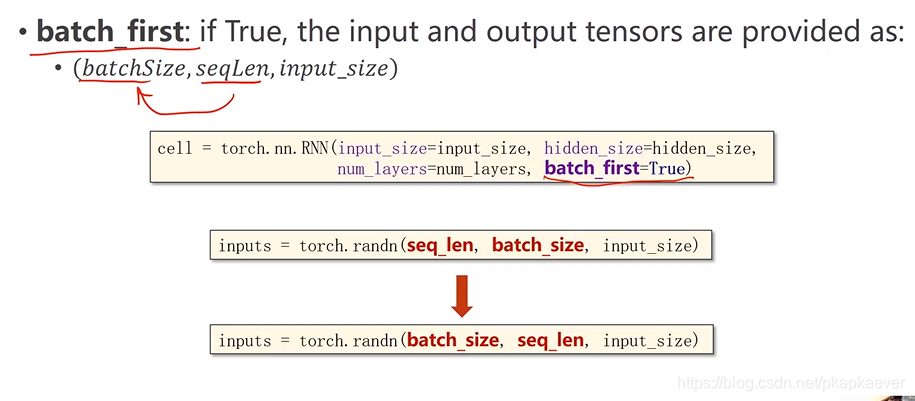
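This usage pattern matches the later example. The sequence is stored as a tensor of shape (seq_len, batch_size, input_size), and computation starts from an h0 of shape (batch_size, hidden_size). The cell is called once per time step, and each output is fed back in as the next hidden state. Below is a minimal sketch with arbitrary sizes.

```python
import torch

batch_size, seq_len, input_size, hidden_size = 1, 3, 4, 2  # arbitrary sizes

cell = torch.nn.RNNCell(input_size=input_size, hidden_size=hidden_size)

dataset = torch.randn(seq_len, batch_size, input_size)  # sequence dimension first
hidden = torch.zeros(batch_size, hidden_size)           # h0

outputs = []
for idx, x_t in enumerate(dataset):  # iterating over dim 0 yields one time step
    # x_t: (batch_size, input_size) -> hidden: (batch_size, hidden_size)
    hidden = cell(x_t, hidden)
    outputs.append(hidden)
    print('step', idx, 'input', tuple(x_t.shape), 'hidden', tuple(hidden.shape))

outputs = torch.stack(outputs)  # (seq_len, batch_size, hidden_size)
```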

How to use RNN

With `torch.nn.RNN` you no longer have to write the loop over time steps yourself.

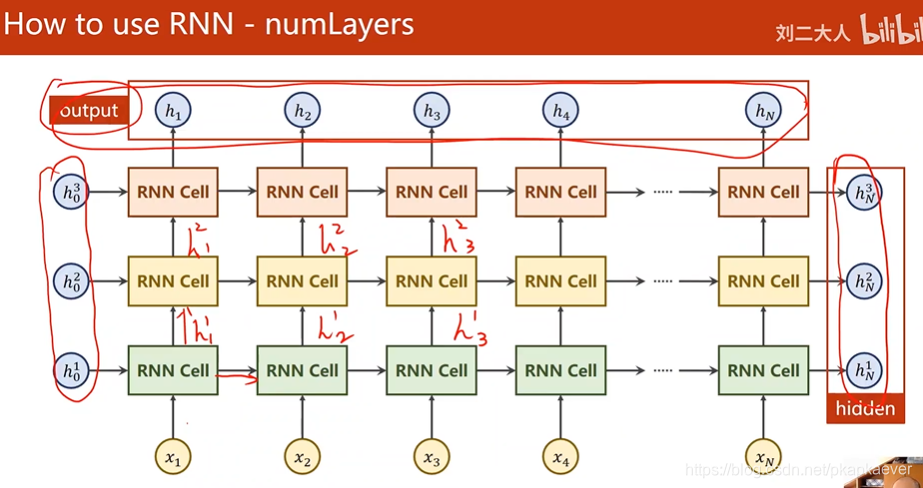
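`torch.nn.RNN` takes the whole sequence, of shape (seq_len, batch_size, input_size), together with h0, of shape (num_layers, batch_size, hidden_size). It returns two tensors. `out` holds the top layer's hidden state at every step. `hn` holds the final hidden state of each layer. The sketch below uses arbitrary sizes. It also checks that a hand-written `RNNCell` loop gives the same final state once the cell is loaded with the RNN's layer-0 weights.

```python
import torch

torch.manual_seed(0)
batch_size, seq_len, input_size, hidden_size = 2, 5, 4, 3  # arbitrary sizes

rnn = torch.nn.RNN(input_size=input_size, hidden_size=hidden_size, num_layers=1)
inputs = torch.randn(seq_len, batch_size, input_size)
h0 = torch.zeros(1, batch_size, hidden_size)  # (num_layers, batch_size, hidden_size)

out, hn = rnn(inputs, h0)
print(tuple(out.shape), tuple(hn.shape))  # (5, 2, 3) (1, 2, 3)

# The same computation with an RNNCell that shares the RNN's layer-0 weights
cell = torch.nn.RNNCell(input_size=input_size, hidden_size=hidden_size)
with torch.no_grad():
    cell.weight_ih.copy_(rnn.weight_ih_l0)
    cell.weight_hh.copy_(rnn.weight_hh_l0)
    cell.bias_ih.copy_(rnn.bias_ih_l0)
    cell.bias_hh.copy_(rnn.bias_hh_l0)

h = h0[0]
for x_t in inputs:  # the loop that nn.RNN runs internally
    h = cell(x_t, h)

print(torch.allclose(h, hn[0], atol=1e-5))  # True
```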

Using num_layers (stacked RNN layers)

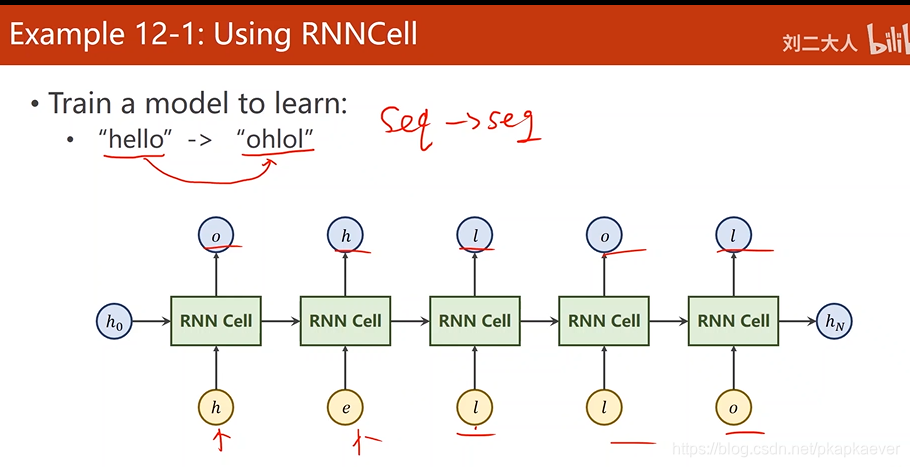
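When num_layers > 1, the RNN stacks cells so that the hidden states of layer k become the inputs of layer k+1. In that case h0 and `hn` carry one slice per layer, while `out` still contains only the top layer's states. Below is a sketch with num_layers = 3; the other sizes are arbitrary.

```python
import torch

batch_size, seq_len, input_size, hidden_size, num_layers = 1, 5, 4, 2, 3

rnn = torch.nn.RNN(input_size=input_size, hidden_size=hidden_size, num_layers=num_layers)
inputs = torch.randn(seq_len, batch_size, input_size)
h0 = torch.zeros(num_layers, batch_size, hidden_size)  # one initial state per layer

out, hn = rnn(inputs, h0)
print(tuple(out.shape))  # (5, 1, 2): top layer, every time step
print(tuple(hn.shape))   # (3, 1, 2): last time step, every layer

# The last time step of `out` is the final state of the top layer
print(torch.allclose(out[-1], hn[-1]))  # True

# Only layer 0 receives input_size features; higher layers receive hidden_size features
print(tuple(rnn.weight_ih_l0.shape), tuple(rnn.weight_ih_l1.shape))  # (2, 4) (2, 2)
```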

Example: train a model to turn the sequence "hello" into "ohlol"

Step 1

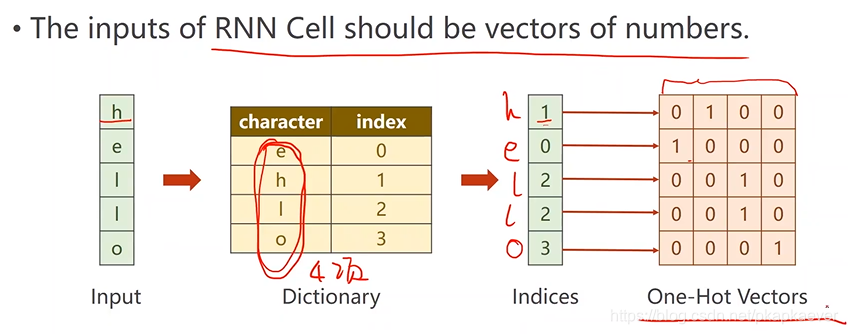

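Build a dictionary (vocabulary) of the characters. Each character is then represented as a one-hot vector whose length equals the vocabulary size, so each vector has as many columns as there are entries in the dictionary. These vectors are the inputs, which gives input_size = 4.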

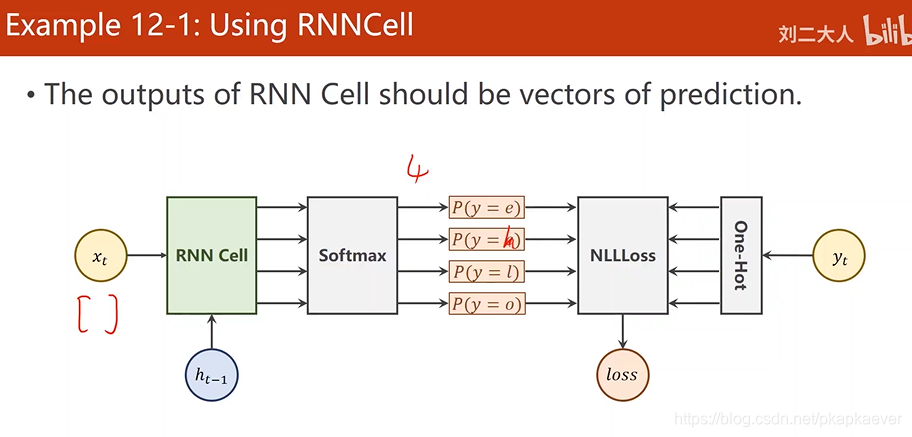
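The example code builds its one-hot vectors with a hand-written `one_hot_lookup` table. As an alternative, not the method the original code uses, `torch.nn.functional.one_hot` produces the same encoding. In the sketch below, `char2idx` is a helper introduced only for illustration.

```python
import torch
import torch.nn.functional as F

idx2char = ['e', 'h', 'l', 'o']                      # vocabulary, so input_size = 4
char2idx = {c: i for i, c in enumerate(idx2char)}    # hypothetical helper for illustration

x_data = [char2idx[c] for c in 'hello']  # [1, 0, 2, 2, 3]
one_hot = F.one_hot(torch.tensor(x_data), num_classes=len(idx2char)).float()
# one row per character: h, e, l, l, o

inputs = one_hot.view(-1, 1, len(idx2char))  # (seq_len, batch_size=1, input_size)
print(tuple(inputs.shape))  # (5, 1, 4)
```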

The model here is built with RNNCell:

import torch

input_size = 4

hidden_size = 4

batch_size = 1

##准备数据

idx2char = ['e','h','l','o']

x_data = [1,0,2,2,3]

#hello

y_data = [3,1,2,3,2]

#ohlol

one_hot_lookup = [[1,0,0,0],

[0,1,0,0],

[0,0,1,0],

[0,0,0,1]]

x_one_hot = [one_hot_lookup[x] for x in x_data]

#这一步是创造出x这个矩阵,用one_hot_lookup表示

inputs = torch.Tensor(x_one_hot).view(-1,batch_size,input_size)

#这个-1 因为有一个序列长度

labels = torch.LongTensor(y_data).view(-1,1)

#一个N维的矩阵

class Model(torch.nn.Module):

def __init__(self,input_size,hidden_size,batch_size):

super(Model, self).__init__()

self.batch_size = batch_size

self.input_size = input_size

self.hidden_size = hidden_size

self.rnncell = torch.nn.RNNCell(input_size = self.input_size,

hidden_size =self.hidden_size)

def forward(self,input,hidden):

hidden = self.rnncell(input,hidden)

#做了一次

return hidden

def init_hidden(self):

#构造初始层,构造h0时会用到

return torch.zeros(self.batch_size,self.hidden_size)

net =Model(input_size,hidden_size,batch_size)

criterion = torch.nn.CrossEntropyLoss()#交叉熵size_average=False

optimizer = torch.optim.Adam(net.parameters(), lr = 0.05)#, momentum=0.5

#Adam也就一个基于随机梯度下降的优化器

for epoch in range(15):

loss = 0

optimizer.zero_grad()

hidden= net.init_hidden()

#初始值

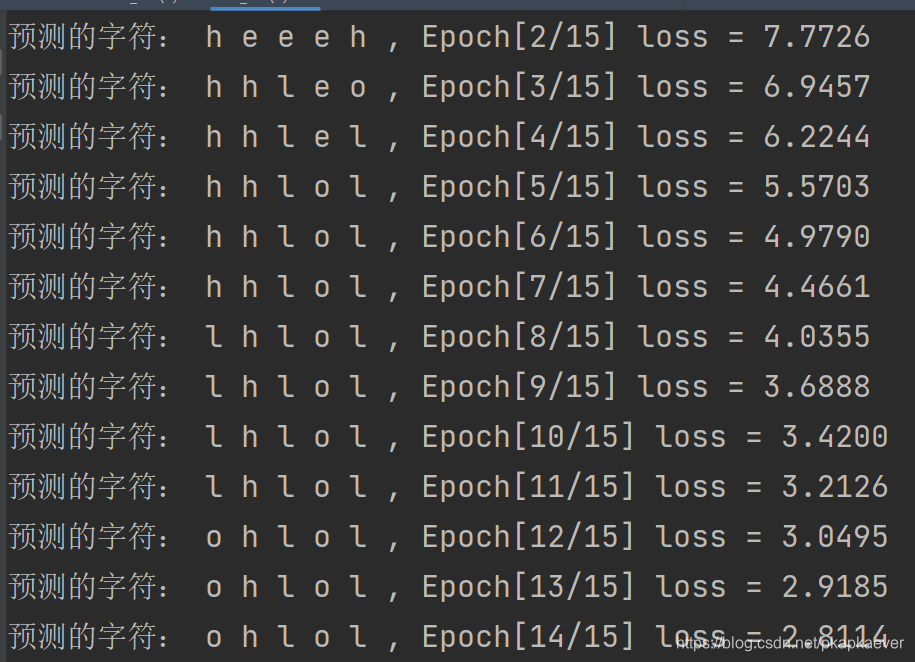

print('预测的字符:',end = ' ')

for input,label in zip(inputs,labels):

#实际遍历的时候,拿到的是不同batch的

hidden = net(input,hidden)

loss+=criterion(hidden,label)

#每一步损失相加

_,idx = hidden.max(dim= 1)

#返回的是 值和下标 这里idx接收下标

print(idx2char[idx.item()],end = ' ')

loss.backward()

optimizer.step()

print(', Epoch[%d/15] loss = %.4f' % (epoch+1,loss.item()))

Output:

Here is the same task using `torch.nn.RNN`:

import torch

input_size = 4

hidden_size = 4

batch_size = 1

seq_len = 5

num_layers = 1

##准备数据

idx2char = ['e','h','l','o']

x_data = [1,0,2,2,3]

#hello

y_data = [3,1,2,3,2]

#ohlol

one_hot_lookup = [[1,0,0,0],

[0,1,0,0],

[0,0,1,0],

[0,0,0,1]]

x_one_hot = [one_hot_lookup[x] for x in x_data]

#这一步是创造出x这个矩阵,用one_hot_lookup表示

#inputs = torch.Tensor(x_one_hot).view(-1,batch_size,input_size)

inputs = torch.Tensor(x_one_hot).view(seq_len,batch_size,input_size)

#这个-1 因为有一个序列长度

#labels = torch.LongTensor(y_data).view(-1,1)

labels = torch.LongTensor(y_data)

#我读调整为seq*batchsize,1

#一个N维的矩阵

class Model(torch.nn.Module):

def __init__(self,input_size,hidden_size,batch_size):

super(Model, self).__init__()

self.batch_size = batch_size

self.input_size = input_size

self.hidden_size = hidden_size

self.rnncell = torch.nn.RNNCell(input_size = self.input_size,

hidden_size =self.hidden_size)

def forward(self,input,hidden):

hidden = self.rnncell(input,hidden)

#做了一次

return hidden

def init_hidden(self):

#构造初始层,构造h0时会用到

return torch.zeros(self.batch_size,self.hidden_size)

class RNNModel(torch.nn.Module):

def __init__(self,input_size,hidden_size,batch_size,num_layers = 1):

super(RNNModel, self).__init__()

self.num_layers = num_layers

self.batch_size = batch_size

self.input_size = input_size

self.hidden_size = hidden_size

self.rnn = torch.nn.RNN(input_size = self.input_size,

hidden_size = self.hidden_size,

num_layers= num_layers)

def forward(self,input):

#构造h0

hidden = torch.zeros(self.num_layers,

self.batch_size,

self.hidden_size)

out,_ = self.rnn(input,hidden)

return out.view(-1,self.hidden_size)

#输出变成2维的(seq*Batch,1)

#net =Model(input_size,hidden_size,batch_size)

net = RNNModel(input_size,hidden_size,batch_size,num_layers)

criterion = torch.nn.CrossEntropyLoss()#交叉熵size_average=False

optimizer = torch.optim.Adam(net.parameters(), lr = 0.05)#, momentum=0.5

#Adam也就一个基于随机梯度下降的优化器

for epoch in range(25):

optimizer.zero_grad()

outputs = net(inputs)

loss = criterion(outputs,labels)

loss.backward()

optimizer.step()

_,idx = outputs.max(dim = 1)

idx = idx.data.numpy()

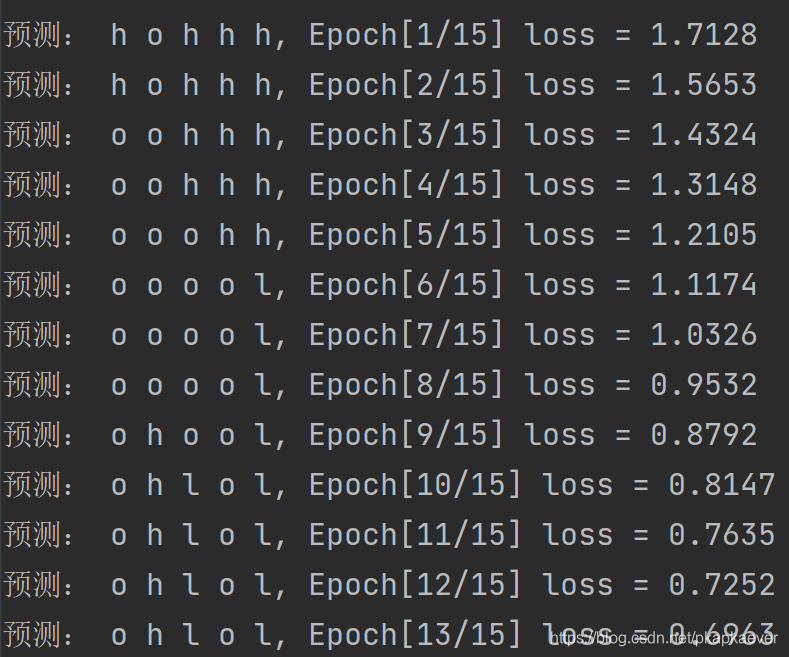

print('预测:',' '.join([idx2char[x] for x in idx]),end = '')

print(', Epoch[%d/15] loss = %.4f' % (epoch+1,loss.item()))

Output:

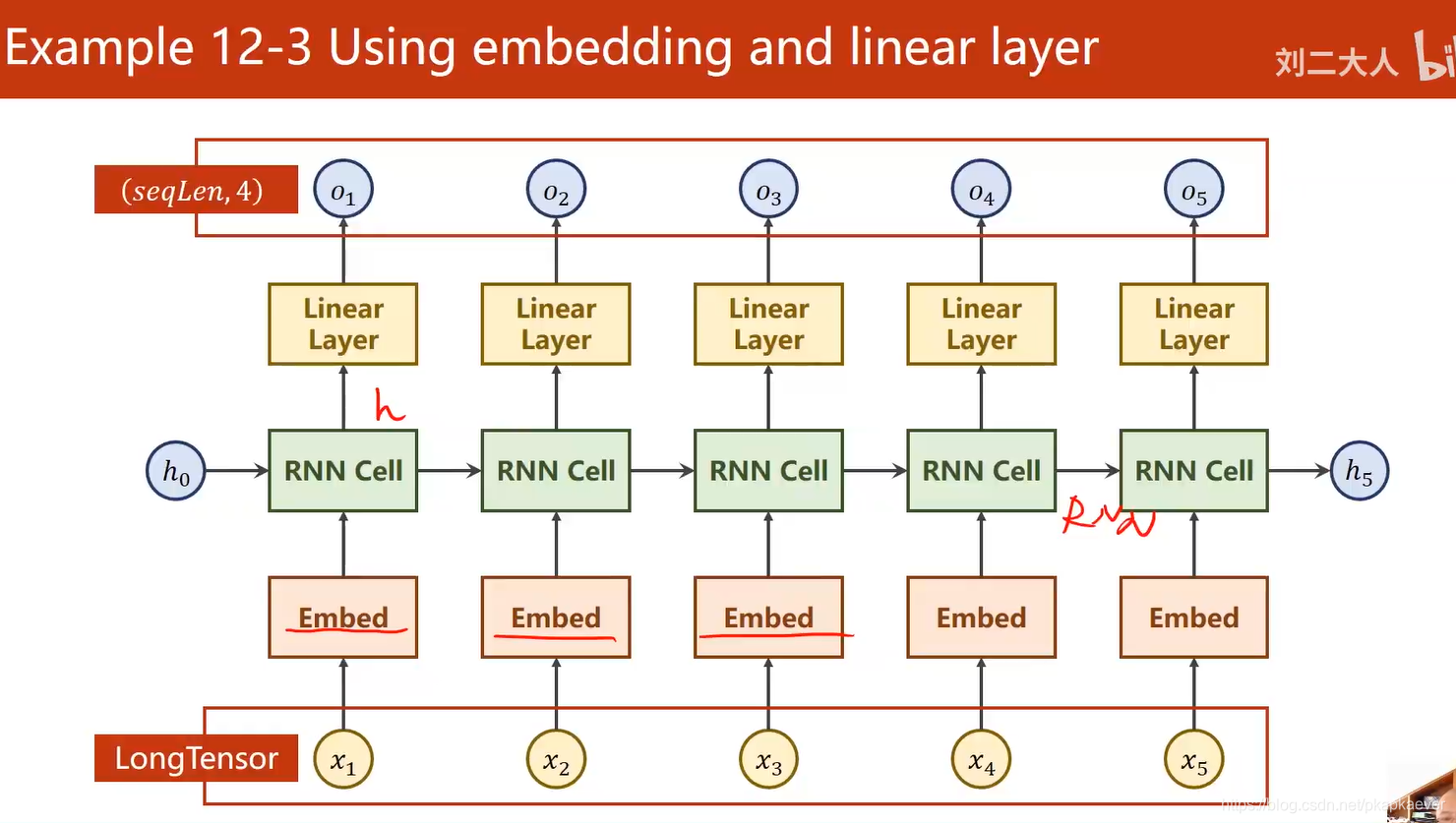

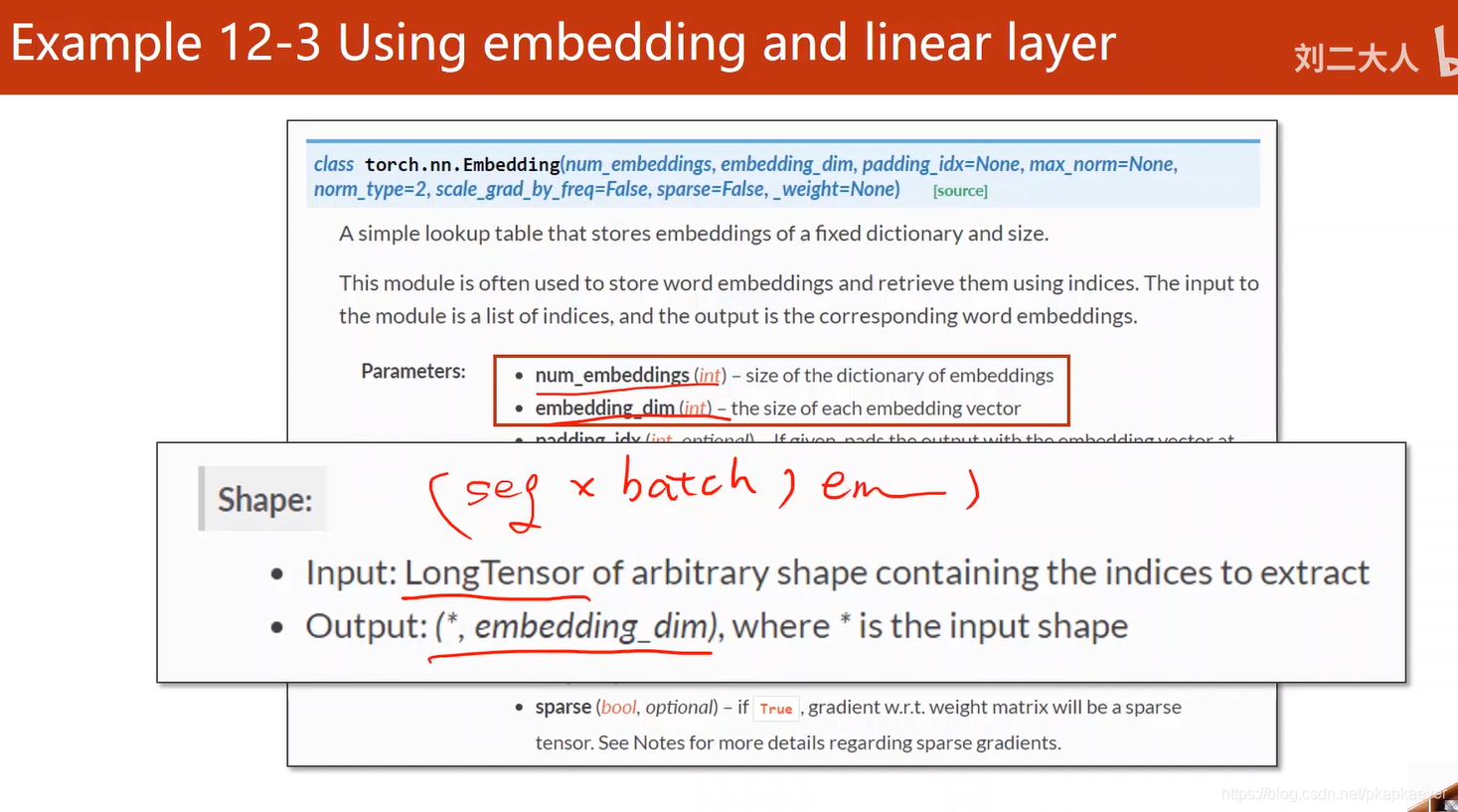

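Problem: when the character set is large, one-hot vectors become very high-dimensional and sparse. The fix is to reduce their dimensionality with an embedding layer (`torch.nn.Embedding`).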
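An embedding layer takes integer indices and returns the corresponding rows of a learnable (num_embeddings, embedding_dim) weight matrix. Looking up row i is equivalent to multiplying a one-hot vector by that matrix, but it avoids ever building the sparse vector. The sketch below checks this equivalence using the example's sizes (4 characters, embedding_size = 10).

```python
import torch
import torch.nn.functional as F

num_chars, embedding_size = 4, 10
emb = torch.nn.Embedding(num_chars, embedding_size)

x = torch.tensor([[1, 0, 2, 2, 3]])  # "hello" as indices, shape (batch=1, seq_len=5)
dense = emb(x)
print(tuple(dense.shape))  # (1, 5, 10)

# Equivalent to one-hot encoding followed by a matrix multiply with emb.weight
via_one_hot = F.one_hot(x, num_classes=num_chars).float() @ emb.weight
print(torch.allclose(dense, via_one_hot))  # True
```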

```python
import torch

num_class = 4        # number of characters / output classes
input_size = 4       # vocabulary size = number of rows in the embedding table
hidden_size = 4
embedding_size = 10
num_layers = 1
batch_size = 1

# Prepare the data
idx2char = ['e', 'h', 'l', 'o']
x_data = [[1, 0, 2, 2, 3]]  # "hello" as indices, shape (batch_size, seq_len)
y_data = [3, 1, 2, 3, 2]    # "ohlol"

# Embedding takes integer indices, not one-hot vectors
inputs = torch.tensor(x_data, dtype=torch.long)  # (batch_size, seq_len)
labels = torch.tensor(y_data, dtype=torch.long)  # (batch_size * seq_len,)


class RNN_EMB_Model(torch.nn.Module):
    def __init__(self, input_size, hidden_size, batch_size, num_layers=1):
        super(RNN_EMB_Model, self).__init__()
        self.batch_size = batch_size
        self.hidden_size = hidden_size
        self.num_layers = num_layers
        # Maps each of the input_size indices to a dense embedding_size vector
        self.emb = torch.nn.Embedding(input_size, embedding_size)
        self.rnn = torch.nn.RNN(input_size=embedding_size,
                                hidden_size=hidden_size,
                                num_layers=num_layers,
                                batch_first=True)
        # Projects hidden states to class scores, so hidden_size need not equal num_class
        self.fc = torch.nn.Linear(hidden_size, num_class)

    def forward(self, x):
        # h0: (num_layers, batch, hidden_size); batch_first does not change h0's layout
        hidden = torch.zeros(self.num_layers, x.size(0), self.hidden_size)
        x = self.emb(x)             # (batch, seq_len, embedding_size)
        x, _ = self.rnn(x, hidden)  # (batch, seq_len, hidden_size)
        x = self.fc(x)              # (batch, seq_len, num_class)
        return x.reshape(-1, num_class)  # (batch * seq_len, num_class)


net = RNN_EMB_Model(input_size, hidden_size, batch_size, num_layers)

criterion = torch.nn.CrossEntropyLoss()
# Adam: a stochastic-gradient-based optimizer with adaptive per-parameter learning rates
optimizer = torch.optim.Adam(net.parameters(), lr=0.05)

for epoch in range(25):
    optimizer.zero_grad()
    outputs = net(inputs)
    loss = criterion(outputs, labels)
    loss.backward()
    optimizer.step()

    _, idx = outputs.max(dim=1)  # predicted class index at each time step
    idx = idx.numpy()
    print('Predicted:', ' '.join([idx2char[x] for x in idx]), end='')
    print(', Epoch [%d/25] loss = %.4f' % (epoch + 1, loss.item()))
```