Coursera CNN: Problems Encountered in the Programming Assignments

The first week

First assignment

Exercise 3 - conv_forward

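When computing vert_start, vert_end, horiz_start and horiz_end, remember to multiply by stride. Otherwise the code effectively assumes stride=1, and the grader reports an error saying that a global variable was used.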
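A minimal NumPy sketch of where the stride enters the window corners. The name conv_forward_sketch is hypothetical and this is a naive reference loop, not the graded solution; it assumes the layouts used in this exercise: A_prev of shape (m, n_H_prev, n_W_prev, n_C_prev), W of shape (f, f, n_C_prev, n_C), b of shape (1, 1, 1, n_C), and a hparameters dict with "stride" and "pad".

```python
import numpy as np

def conv_forward_sketch(A_prev, W, b, hparameters):
    """Naive convolution forward pass (hypothetical helper, not the graded function)."""
    m, n_H_prev, n_W_prev, n_C_prev = A_prev.shape
    f, _, _, n_C = W.shape
    stride = hparameters["stride"]
    pad = hparameters["pad"]

    # Output size: floor((n + 2*pad - f) / stride) + 1
    n_H = (n_H_prev + 2 * pad - f) // stride + 1
    n_W = (n_W_prev + 2 * pad - f) // stride + 1
    Z = np.zeros((m, n_H, n_W, n_C))

    # Zero-pad only the height and width axes
    A_prev_pad = np.pad(A_prev, ((0, 0), (pad, pad), (pad, pad), (0, 0)))

    for i in range(m):
        a_prev_pad = A_prev_pad[i]
        for h in range(n_H):
            vert_start = h * stride          # multiply by stride; plain h assumes stride=1
            vert_end = vert_start + f
            for w in range(n_W):
                horiz_start = w * stride
                horiz_end = horiz_start + f
                a_slice = a_prev_pad[vert_start:vert_end, horiz_start:horiz_end, :]
                for c in range(n_C):
                    Z[i, h, w, c] = np.sum(a_slice * W[:, :, :, c]) + b[0, 0, 0, c]
    return Z

# A 1x1 all-ones filter with stride 2 just samples every other pixel,
# which makes a forgotten "* stride" easy to spot.
A = np.arange(25, dtype=float).reshape(1, 5, 5, 1)
Z = conv_forward_sketch(A, np.ones((1, 1, 1, 1)), np.zeros((1, 1, 1, 1)), {"stride": 2, "pad": 0})
print(Z[0, :, :, 0])
# [[ 0.  2.  4.]
#  [10. 12. 14.]
#  [20. 22. 24.]]
```

If vert_start were written as h instead of h * stride, the same call would read overlapping neighbours (0, 1, 2, ...) instead of every second pixel.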

4.1 - Forward Pooling

The outermost loop should come with the hint a_prev = None:

    # for i in range(None): # loop over the training examples
    #     a_prev = None
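A minimal NumPy sketch of forward pooling, assuming the hint is meant to be filled with a_prev = A_prev[i] (the i-th training example) and that hparameters holds "f" and "stride". The name pool_forward_sketch is hypothetical; this is an illustration, not the graded function.

```python
import numpy as np

def pool_forward_sketch(A_prev, hparameters, mode="max"):
    """Naive max/average pooling over A_prev of shape (m, n_H_prev, n_W_prev, n_C)."""
    m, n_H_prev, n_W_prev, n_C = A_prev.shape
    f = hparameters["f"]
    stride = hparameters["stride"]
    n_H = (n_H_prev - f) // stride + 1      # no padding in pooling
    n_W = (n_W_prev - f) // stride + 1
    A = np.zeros((m, n_H, n_W, n_C))

    for i in range(m):                      # loop over the training examples
        a_prev = A_prev[i]                  # the line the hint "a_prev = None" stands for
        for h in range(n_H):
            vert_start = h * stride
            vert_end = vert_start + f
            for w in range(n_W):
                horiz_start = w * stride
                horiz_end = horiz_start + f
                for c in range(n_C):        # pooling acts on each channel separately
                    a_slice = a_prev[vert_start:vert_end, horiz_start:horiz_end, c]
                    if mode == "max":
                        A[i, h, w, c] = np.max(a_slice)
                    elif mode == "average":
                        A[i, h, w, c] = np.mean(a_slice)
                    else:
                        raise ValueError(f"unknown mode: {mode}")
    return A

A_in = np.arange(16, dtype=float).reshape(1, 4, 4, 1)
print(pool_forward_sketch(A_in, {"f": 2, "stride": 2})[0, :, :, 0])
# [[ 5.  7.]
#  [13. 15.]]
```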

Second assignment

Exercise 1 - happyModel

    # Imports as in the notebook's setup cell
    import tensorflow as tf
    from tensorflow.keras import layers as tfl

    # GRADED FUNCTION: happyModel

    def happyModel():
        """
        Implements the forward propagation for the binary classification model:
        ZEROPAD2D -> CONV2D -> BATCHNORM -> RELU -> MAXPOOL -> FLATTEN -> DENSE

        Note that for simplicity and grading purposes, you'll hard-code all the values
        such as the stride and kernel (filter) sizes.
        Normally, functions should take these values as function parameters.

        Arguments:
        None

        Returns:
        model -- TF Keras model (object containing the information for the entire training process)
        """
        model = tf.keras.Sequential([
            ## ZeroPadding2D with padding 3, input shape of 64 x 64 x 3
            ## Conv2D with 32 7x7 filters and stride of 1
            ## BatchNormalization for axis 3
            ## ReLU
            ## Max Pooling 2D with default parameters
            ## Flatten layer
            ## Dense layer with 1 unit for output & 'sigmoid' activation
            # YOUR CODE STARTS HERE
            tfl.ZeroPadding2D(padding=3, input_shape=(64, 64, 3)),
            tfl.Conv2D(32, 7, strides=(1, 1)),
            tfl.BatchNormalization(axis=3),
            tfl.ReLU(),
            tfl.MaxPool2D(),
            tfl.Flatten(),
            tfl.Dense(1, activation='sigmoid'),
            # YOUR CODE ENDS HERE
        ])

        return model
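The layer sizes in happyModel can be traced by hand. This plain-Python sketch (out_size is a hypothetical helper) follows the spatial size through the stack and counts parameters, assuming Keras defaults: MaxPool2D() uses pool_size=2 with strides equal to the pool size and "valid" padding, and BatchNormalization keeps four values per channel (gamma, beta, moving mean, moving variance). The totals should agree with model.summary().

```python
def out_size(n, f, stride=1):
    """Spatial output size of a 'valid' conv/pool window: floor((n - f) / stride) + 1."""
    return (n - f) // stride + 1

padded = 64 + 2 * 3                        # ZeroPadding2D(padding=3): 70
conv = out_size(padded, f=7, stride=1)     # Conv2D(32, 7): 64
pooled = out_size(conv, f=2, stride=2)     # MaxPool2D() defaults: 32
flat = pooled * pooled * 32                # Flatten: 32 * 32 * 32 = 32768

conv_params = 7 * 7 * 3 * 32 + 32          # weights + biases = 4736
bn_params = 4 * 32                         # 4 values per channel = 128
dense_params = flat * 1 + 1                # weights + bias = 32769
total = conv_params + bn_params + dense_params
print(padded, conv, pooled, flat, total)   # 70 64 32 32768 37633
```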

The second week

Quiz

Depthwise convolution

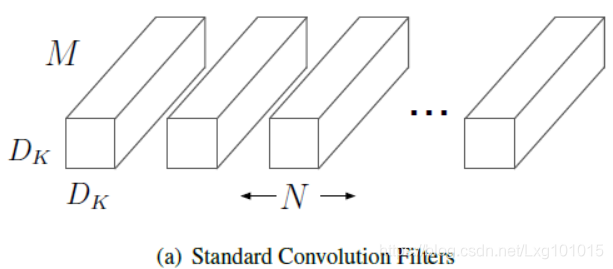

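Suppose a standard convolution kernel (spatial size D_K × D_K, M input channels, N output channels) has shape: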

$(D_K, D_K, M, N)$

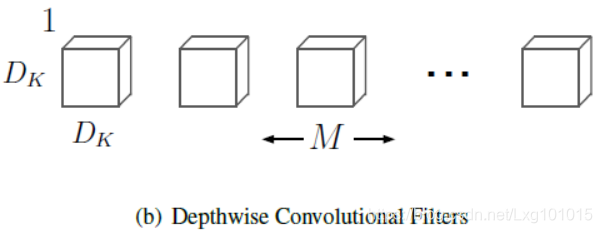

Split the standard kernel into a depthwise convolution kernel:

$(D_K, D_K, 1, M)$

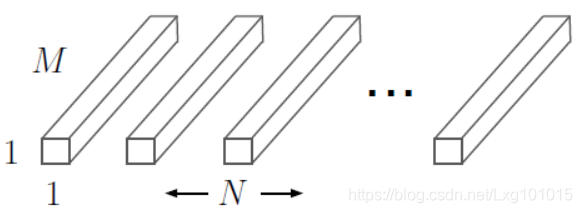

and a pointwise convolution kernel:

$(1, 1, M, N)$

The standard convolution is thus split into two steps:

- The first step calculates an intermediate result by convolving on each of the channels independently. This is the depthwise convolution.

- In the second step, another convolution merges the outputs of the previous step into one. This gets a single result from a single feature at a time, and then is applied to all the filters in the output layer. This is the pointwise convolution.

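The depthwise layer only changes the spatial size of the feature map, not the number of channels. The pointwise layer does the opposite: it only changes the number of channels, not the spatial size. What a standard convolution does in one go (changing both size and channel count) is thus split into two steps.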
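The two steps can be sketched in NumPy, assuming stride 1 and no padding, and storing the depthwise kernel as (D_K, D_K, M), i.e. the (D_K, D_K, 1, M) shape with the singleton axis dropped. The function names are hypothetical. The final check shows that depthwise followed by pointwise equals one standard convolution whose kernel factorizes as K[:, :, m, n] = dw[:, :, m] * pw[m, n], so the separable version is a restricted, cheaper special case of a standard convolution.

```python
import numpy as np

def depthwise_conv(x, dw):
    """x: (H, W, M); dw: (D_K, D_K, M). Each channel gets its own filter; channels never mix."""
    H, W, M = x.shape
    k = dw.shape[0]
    out = np.zeros((H - k + 1, W - k + 1, M))
    for h in range(out.shape[0]):
        for w in range(out.shape[1]):
            window = x[h:h + k, w:w + k, :]                  # (D_K, D_K, M)
            out[h, w, :] = np.sum(window * dw, axis=(0, 1))  # sum over space only
    return out

def pointwise_conv(x, pw):
    """x: (H, W, M); pw: (M, N). A 1x1 convolution: mixes channels, keeps H and W."""
    return x @ pw                                            # (H, W, M) @ (M, N) -> (H, W, N)

def standard_conv(x, K):
    """x: (H, W, M); K: (D_K, D_K, M, N). Ordinary 'valid' convolution, stride 1."""
    H, W, M = x.shape
    k, _, _, N = K.shape
    out = np.zeros((H - k + 1, W - k + 1, N))
    for h in range(out.shape[0]):
        for w in range(out.shape[1]):
            window = x[h:h + k, w:w + k, :]
            out[h, w, :] = np.tensordot(window, K, axes=([0, 1, 2], [0, 1, 2]))
    return out

rng = np.random.default_rng(0)
x = rng.standard_normal((6, 6, 3))     # M = 3 input channels
dw = rng.standard_normal((3, 3, 3))    # D_K = 3
pw = rng.standard_normal((3, 8))       # N = 8 output channels

y = pointwise_conv(depthwise_conv(x, dw), pw)
print(y.shape)                         # (4, 4, 8)

K = dw[:, :, :, None] * pw[None, None, :, :]   # equivalent (D_K, D_K, M, N) kernel
print(np.allclose(y, standard_conv(x, K)))     # True
```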

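The benefit is a large reduction in both parameters and computation at the cost of only a small loss in accuracy. However, although depthwise separable convolution has fewer parameters, in my own tests on a GPU it used a lot of memory during training and was much slower than an ordinary 3×3 convolution.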
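The size of the reduction can be checked with a quick count. Biases are ignored, the depthwise step is assumed to keep the D_F × D_F output size (stride 1, "same" padding), and the sample values D_K=3, M=32, N=64, D_F=56 are illustrative. The separable-to-standard ratio works out to 1/N + 1/D_K², here 73/576, roughly 1/8.

```python
from fractions import Fraction

def standard_conv_cost(D_K, M, N, D_F):
    """Weights and multiplications for one standard conv layer (biases ignored)."""
    params = D_K * D_K * M * N
    mults = params * D_F * D_F            # each weight is used once per output position
    return params, mults

def separable_conv_cost(D_K, M, N, D_F):
    """Depthwise (D_K, D_K, 1, M) followed by pointwise (1, 1, M, N)."""
    params = D_K * D_K * M + M * N
    mults = params * D_F * D_F
    return params, mults

std = standard_conv_cost(D_K=3, M=32, N=64, D_F=56)
sep = separable_conv_cost(D_K=3, M=32, N=64, D_F=56)
print(std, sep)                           # (18432, 57802752) (2336, 7325696)

ratio = Fraction(sep[0], std[0])
print(ratio, ratio == Fraction(1, 64) + Fraction(1, 9))  # 73/576 True
```

The count says nothing about memory traffic or kernel efficiency on a GPU, which is consistent with the observation above that fewer parameters did not translate into faster training.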

First assignment

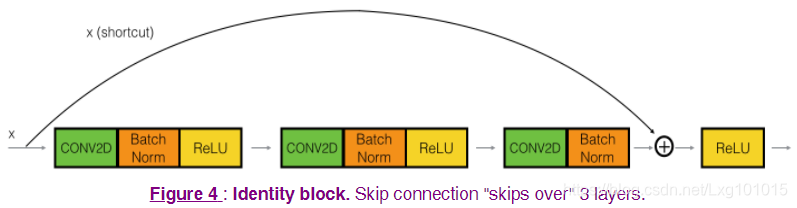

Identity Block

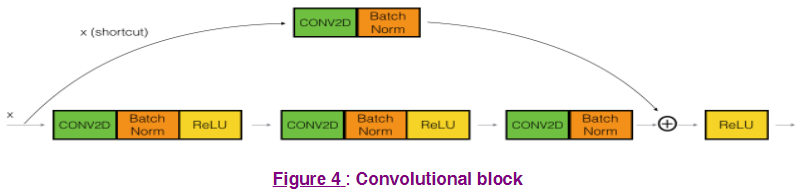

Convolutional Block

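The convolutional block is a transition block introduced for the case where the block's input and output dimensions differ, so the input cannot be added to the main path's output directly.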
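A NumPy sketch of why the convolutional block needs an extra layer on the shortcut. To stay short, it assumes every convolution is 1×1 (a matrix product over channels, with stride implemented as subsampling) and omits BatchNorm; the actual ResNet blocks use a 1×1, f×f, 1×1 main path with BatchNorm. All names are hypothetical.

```python
import numpy as np

def relu(x):
    return np.maximum(x, 0)

def conv1x1(x, w, stride=1):
    """1x1 convolution: x (H, W, C_in), w (C_in, C_out); stride subsamples H and W."""
    return x[::stride, ::stride, :] @ w

def identity_block(x, w1, w2):
    """Main path preserves (H, W, C), so the input is added back unchanged."""
    h = relu(conv1x1(x, w1))
    h = conv1x1(h, w2)                          # must map back to x.shape[-1] channels
    return relu(h + x)

def convolutional_block(x, w1, w2, w_shortcut, stride=2):
    """Main path changes H, W and C, so the shortcut gets its own 1x1 conv to match."""
    h = relu(conv1x1(x, w1, stride))
    h = conv1x1(h, w2)
    shortcut = conv1x1(x, w_shortcut, stride)   # projection: same stride, same output channels
    return relu(h + shortcut)

rng = np.random.default_rng(0)
x = rng.standard_normal((8, 8, 16))
y1 = identity_block(x, rng.standard_normal((16, 4)), rng.standard_normal((4, 16)))
y2 = convolutional_block(x, rng.standard_normal((16, 8)), rng.standard_normal((8, 32)),
                         rng.standard_normal((16, 32)))
print(y1.shape, y2.shape)  # (8, 8, 16) (4, 4, 32)
```

Without w_shortcut, the convolutional block would try to add an (8, 8, 16) input to a (4, 4, 32) output, which cannot broadcast.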

Second assignment

Transfer learning with MobileNetV2

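An idea: a pretrained model has already been trained on a large dataset; MobileNetV2, for example, was trained on ImageNet. When we apply it to our own small dataset, most images share similar low-level features and differ mainly in their high-level features. So can we freeze the bottom layers of the pretrained model (make them untrainable), train only the upper layers on our own dataset, updating their parameters, and then make predictions?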
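A minimal Keras sketch of this freezing idea, assuming a binary classifier on 160×160 RGB images. The cutoff of 120 frozen layers, the pooling/dropout/dense head, and the name build_transfer_model are illustrative assumptions, not prescriptions. Input pixels are assumed to be in [0, 255] and are rescaled to [-1, 1], the range MobileNetV2 expects.

```python
import tensorflow as tf

def build_transfer_model(input_shape=(160, 160, 3), fine_tune_at=120, weights="imagenet"):
    """Freeze the bottom `fine_tune_at` layers of MobileNetV2; train the rest plus a new head."""
    base_model = tf.keras.applications.MobileNetV2(
        input_shape=input_shape, include_top=False, weights=weights)
    base_model.trainable = True
    for layer in base_model.layers[:fine_tune_at]:
        layer.trainable = False              # low-level feature extractors stay fixed

    inputs = tf.keras.Input(shape=input_shape)
    x = tf.keras.layers.Rescaling(1.0 / 127.5, offset=-1.0)(inputs)  # [0, 255] -> [-1, 1]
    x = base_model(x, training=False)        # keep BatchNorm statistics in inference mode
    x = tf.keras.layers.GlobalAveragePooling2D()(x)
    x = tf.keras.layers.Dropout(0.2)(x)
    outputs = tf.keras.layers.Dense(1)(x)    # one logit for a binary task
    return tf.keras.Model(inputs, outputs), base_model
```

Typical use would be model, base_model = build_transfer_model() (which downloads the ImageNet weights), then model.compile(...) with a small learning rate and BinaryCrossentropy(from_logits=True), then model.fit(...) on the small dataset. Passing weights=None builds the same architecture without pretrained weights, which is only useful for checking shapes.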

The third week

Quiz

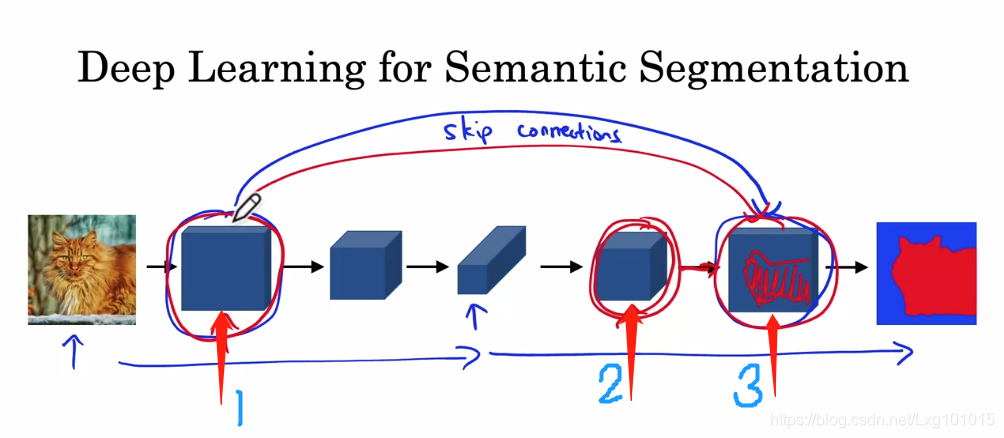

semantic segmentation

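In the figure, (3) combines the property of (2), low resolution but high-level semantic information, with that of (1), low-level information but more detailed texture, which helps decide more precisely whether a given pixel belongs to the cat.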
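Assuming the figure depicts a U-Net-style skip connection, the merge can be sketched in NumPy: the deep map (low resolution, many channels) is upsampled to the shallow map's size, and the two are concatenated along the channel axis so later layers see both kinds of information at every pixel. Nearest-neighbour upsampling stands in for U-Net's transposed convolution, and all sizes are made up.

```python
import numpy as np

def upsample_nearest(x, factor=2):
    """Nearest-neighbour upsampling of an (H, W, C) map by repeating rows and columns."""
    return x.repeat(factor, axis=0).repeat(factor, axis=1)

rng = np.random.default_rng(0)
shallow = rng.standard_normal((64, 64, 32))   # like (1): high resolution, low-level detail
deep = rng.standard_normal((32, 32, 128))     # like (2): low resolution, high-level semantics

# Skip connection: bring the deep map to the shallow map's size, then stack channels
merged = np.concatenate([upsample_nearest(deep), shallow], axis=-1)
print(merged.shape)  # (64, 64, 160)
```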

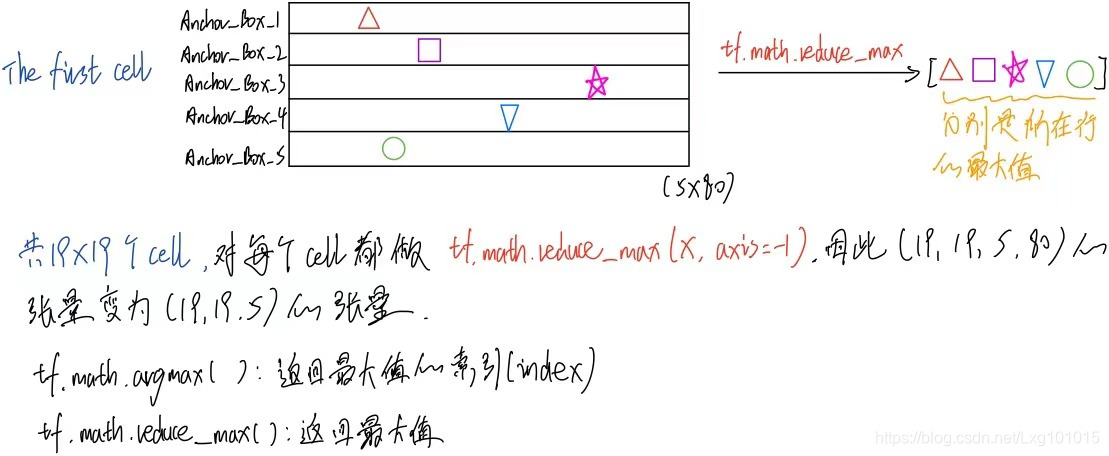

First assignment

tf.math.reduce_max:

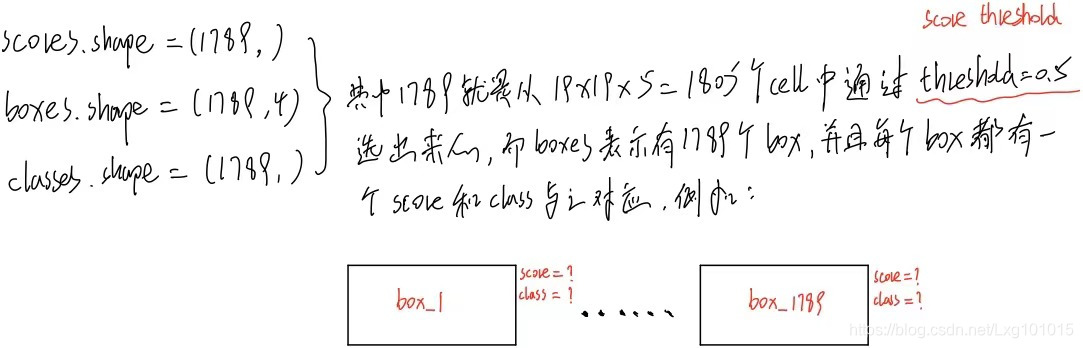
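A small sketch of how tf.math.reduce_max and tf.math.argmax pick each box's best class, using toy tensors (2 boxes, 3 classes, hypothetical values) in place of the assignment's real box_confidence and box_class_probs. The last lines show the NumPy counterpart: np.max also takes the axis as its second positional argument, so two separate values have to be wrapped in a list.

```python
import numpy as np
import tensorflow as tf

box_confidence = tf.constant([[0.9], [0.5]])                 # (2, 1): objectness per box
box_class_probs = tf.constant([[0.1, 0.7, 0.2],
                               [0.6, 0.3, 0.1]])             # (2, 3): class probabilities

box_scores = box_confidence * box_class_probs                # broadcast to (2, 3)
box_classes = tf.math.argmax(box_scores, axis=-1)            # best class index per box
box_class_scores = tf.math.reduce_max(box_scores, axis=-1)   # score of that best class

print(box_classes.numpy())        # [1 0]
print(box_class_scores.numpy())   # approximately 0.63 and 0.3

# np.max(a, b) would pass b as the axis argument instead of comparing it with a.
a, b = 0.2, 0.5
print(np.max([a, b]))             # 0.5
```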

np.max([]): note that there are square brackets inside the parentheses.

score threshold:

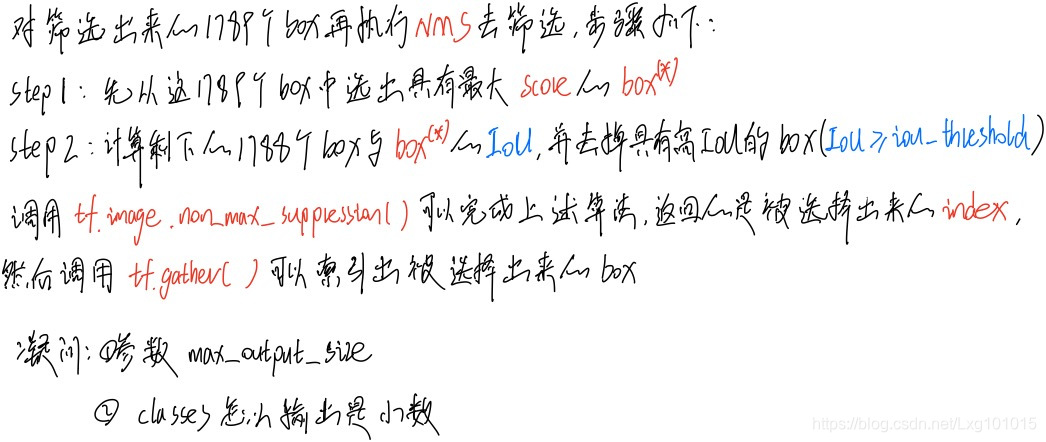
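A minimal sketch of the score-threshold step with tf.boolean_mask, assuming each box already has its best class score and class index, and that boxes scoring at least the threshold are kept. The helper name filter_by_score, the threshold 0.6 and the toy values are hypothetical.

```python
import tensorflow as tf

def filter_by_score(box_class_scores, boxes, box_classes, threshold=0.6):
    """Keep only boxes whose best class score is >= threshold."""
    filtering_mask = box_class_scores >= threshold            # boolean, one entry per box
    scores = tf.boolean_mask(box_class_scores, filtering_mask)
    boxes = tf.boolean_mask(boxes, filtering_mask)            # (num_boxes, 4) -> (num_kept, 4)
    classes = tf.boolean_mask(box_classes, filtering_mask)
    return scores, boxes, classes

scores, kept_boxes, classes = filter_by_score(
    tf.constant([0.9, 0.3, 0.7]),
    tf.constant([[0., 0., 1., 1.], [0., 0., 2., 2.], [1., 1., 3., 3.]]),
    tf.constant([2, 0, 1]),
)
print(classes.numpy())  # [2 1]
```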

NMS: