len(preds)=9

preds:

preds

Out[1]:

defaultdict(list,

{'person': [['002824.jpg', 0.9683060646057129, 12, 23, 490, 433],

['000358.jpg', 0.5757755041122437, 18, 30, 130, 284],

['003385.jpg', 0.8857487440109253, 160, 69, 317, 332],

['003494.jpg', 0.4133218228816986, 53, 217, 118, 375],

['003494.jpg', 0.13586369156837463, 418, 230, 494, 365],

['007962.jpg', 0.2744079530239105, 139, 160, 377, 383],

['003583.jpg', 0.4448939561843872, 176, 10, 290, 296],

['003583.jpg', 0.20204538106918335, 458, 177, 484, 263],

['003583.jpg', 0.1746450513601303, 453, 172, 479, 297]],

'aeroplane': [['000473.jpg',

0.4259612560272217,

422,

120,

460,

154],

['000473.jpg', 0.25087976455688477, 420, 126, 450, 147],

['003494.jpg', 0.3544943332672119, 16, 22, 499, 268]],

'car': [['000358.jpg', 0.662514865398407, 56, 97, 398, 282],

['003385.jpg', 0.38660484552383423, 147, 90, 236, 132],

['003385.jpg', 0.36484041810035706, 335, 88, 431, 138],

['003385.jpg', 0.25925055146217346, 372, 99, 436, 140],

['003385.jpg', 0.1610412746667862, 426, 111, 458, 133],

['003385.jpg', 0.1278880089521408, 328, 135, 515, 321],

['003385.jpg', 0.11028455942869186, 392, 108, 422, 132],

['003494.jpg', 0.39372316002845764, 20, 207, 40, 233]],

'cat': [['006052.jpg', 0.3838433027267456, 131, 53, 497, 367]],

'dog': [['004758.jpg', 0.2594014108181, 45, 41, 147, 319],

['007962.jpg', 0.13827747106552124, 5, 135, 240, 378]],

'bus': [['003385.jpg', 0.3594896197319031, -4, 60, 193, 332]],

'chair': [['002128.jpg', 0.2410588562488556, 244, 18, 504, 226]],

'sofa': [['007962.jpg', 0.10128864645957947, 21, 22, 491, 259]],

'horse': [['003583.jpg', 0.39006292819976807, 7, 121, 379, 309],

['003583.jpg', 0.255479633808136, 45, 88, 182, 331]]})

len(target)=15

target:

target

Out[2]:

defaultdict(list,

{('002824.jpg', 'person'): [[1, 13, 500, 431]],

('000473.jpg', 'aeroplane'): [[415, 120, 460, 153]],

('000358.jpg', 'car'): [[89, 100, 387, 284]],

('000358.jpg', 'person'): [[23, 33, 110, 287]],

('006052.jpg', 'cat'): [[129, 51, 497, 374]],

('004758.jpg', 'dog'): [[44, 49, 129, 308]],

('003385.jpg', 'car'): [[150, 77, 235, 145],

[350, 88, 448, 137],

[284, 129, 500, 332],

[1, 87, 190, 331]],

('003385.jpg', 'person'): [[139, 70, 352, 331]],

('003494.jpg', 'aeroplane'): [[1, 22, 500, 260]],

('003494.jpg', 'person'): [[58, 206, 118, 375],

[417, 206, 500, 375]],

('002128.jpg', 'bicycle'): [[42, 14, 455, 291]],

('007962.jpg', 'dog'): [[72, 163, 397, 375]],

('007962.jpg', 'person'): [[184, 177, 304, 375]],

('003583.jpg', 'horse'): [[59, 105, 388, 318]],

('003583.jpg', 'person'): [[178, 4, 287, 318],

[459, 181, 480, 292]]})

The program first takes the entries whose class is aeroplane out of the prediction results,

pred:

[['000473.jpg', 0.4259612560272217, 422, 120, 460, 154],
 ['000473.jpg', 0.25087976455688477, 420, 126, 450, 147],
 ['003494.jpg', 0.3544943332672119, 16, 22, 499, 268]]

Next, image_ids stores the image names:

['000473.jpg', '000473.jpg', '003494.jpg']

confidence stores the confidence score of each prediction:

array([0.42596126, 0.25087976, 0.35449433])

BB stores the coordinates of the predicted boxes:

array([[422, 120, 460, 154],
       [420, 126, 450, 147],
       [ 16,  22, 499, 268]])
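The split into three parallel containers can be reproduced in isolation. The following is a minimal sketch that copies the three aeroplane rows from the dump above and applies the same list comprehensions used in voc_eval:

```python
import numpy as np

# Predictions for one class, copied from the dump above.
# Each row is [image_id, confidence, x1, y1, x2, y2].
pred = [
    ['000473.jpg', 0.4259612560272217, 422, 120, 460, 154],
    ['000473.jpg', 0.25087976455688477, 420, 126, 450, 147],
    ['003494.jpg', 0.3544943332672119, 16, 22, 499, 268],
]

image_ids = [row[0] for row in pred]                    # image names
confidence = np.array([float(row[1]) for row in pred])  # scores
BB = np.array([row[2:] for row in pred])                # boxes, shape (3, 4)

print(image_ids)
print(confidence)
print(BB)
```

All three containers share the same row order, which is what allows a single index array to reorder them together in the next step.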

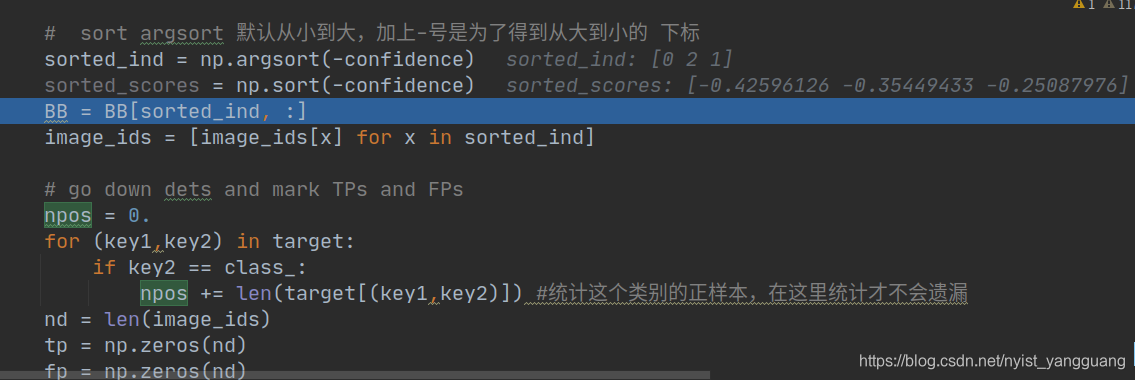

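Moving on.

Both np.argsort() and np.sort() sort in ascending order by default.

To rank the confidences from highest to lowest, the array is negated before calling np.argsort(), which yields the indices of the elements in descending order of confidence,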

sorted_ind:

array([0, 2, 1])

The predicted boxes in BB are then reordered to follow the same descending-confidence order.

BB:

array([[422, 120, 460, 154],
       [ 16,  22, 499, 268],
       [420, 126, 450, 147]])

The image names, of course, have to be reordered in the same way.

image_ids:

['000473.jpg', '003494.jpg', '000473.jpg']
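The sorting step can be checked on its own. This sketch uses the rounded values printed above; the names BB_sorted and image_ids_sorted are introduced here only for illustration (the original code overwrites BB and image_ids in place):

```python
import numpy as np

confidence = np.array([0.42596126, 0.25087976, 0.35449433])
image_ids = ['000473.jpg', '000473.jpg', '003494.jpg']
BB = np.array([[422, 120, 460, 154],
               [420, 126, 450, 147],
               [16, 22, 499, 268]])

# argsort is ascending, so sorting the negated scores gives the
# indices of the scores in descending order.
sorted_ind = np.argsort(-confidence)

# Apply the same permutation to the boxes and the image names.
BB_sorted = BB[sorted_ind, :]
image_ids_sorted = [image_ids[i] for i in sorted_ind]

print(sorted_ind)
print(BB_sorted)
print(image_ids_sorted)
```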

At this point, every box predicted as aeroplane across all images has been sorted by confidence, from highest to lowest.

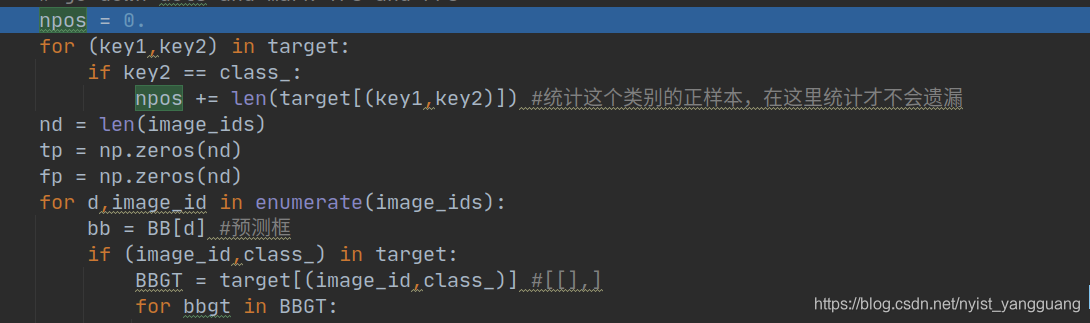

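Moving on: target, as we know, holds the ground truth (GT), in the form (image name, class): box coordinates.

By iterating over target and checking key2 == class_, we can count how many GT boxes belong to the class aeroplane,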

npos:

2.0

So there are two. Next, count how many predicted boxes have the class aeroplane.

nd:

3

There are 3, so tp and fp are each created as an array of 3 zeros. They start out identical, so only one is shown.

tp, fp:

array([0., 0., 0.])
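The counting step can be sketched as follows. The target dictionary here is a hand-picked subset of the GT dump above (two aeroplane entries plus one person entry), chosen only to show that other classes are ignored:

```python
import numpy as np

# Subset of the GT dictionary: (image name, class) -> list of boxes.
target = {
    ('000473.jpg', 'aeroplane'): [[415, 120, 460, 153]],
    ('003494.jpg', 'aeroplane'): [[1, 22, 500, 260]],
    ('003494.jpg', 'person'): [[58, 206, 118, 375], [417, 206, 500, 375]],
}
class_ = 'aeroplane'

# npos: total number of GT boxes of this class over all images.
npos = 0.0
for (key1, key2) in target:
    if key2 == class_:
        npos += len(target[(key1, key2)])

# nd: number of predictions of this class (3 in the walkthrough).
nd = 3
tp = np.zeros(nd)
fp = np.zeros(nd)

print(npos, nd, tp, fp)
```

Counting npos from target rather than from the predictions means GT boxes in images with no prediction at all still count toward recall.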

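Next comes the loop over image_ids. Note that image_ids has already been sorted by confidence in descending order.

On the first iteration, BB[d] gives the coordinates of the first predicted box,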

bb:

array([422, 120, 460, 154])

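The lookup target[(image_id, class_)] fetches the GT boxes that correspond to the prediction (same image, same class). Some entries in target hold more than one box, which is why the code iterates with for bbgt in BBGT.

Now let's see what bbgt holds,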

bbgt:

[415, 120, 460, 153]

There is just this one, and the prediction is clearly very close to it.

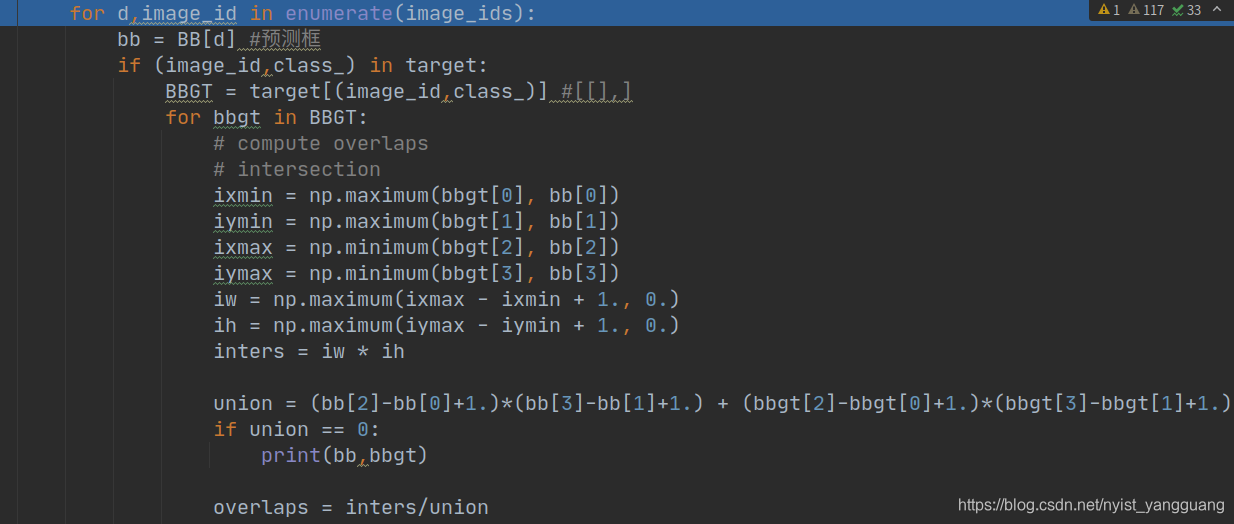

Next comes the IoU computation between the predicted box and the GT box.
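As a sketch of that computation, the helper below (the name iou is introduced here for illustration; the original code computes this inline) follows the same pixel-inclusive "+1" convention as voc_eval and is applied to the first predicted box and its GT box:

```python
def iou(bb, bbgt):
    """IoU of two [x1, y1, x2, y2] boxes with pixel-inclusive coordinates."""
    # Corners of the intersection rectangle.
    ixmin = max(bbgt[0], bb[0])
    iymin = max(bbgt[1], bb[1])
    ixmax = min(bbgt[2], bb[2])
    iymax = min(bbgt[3], bb[3])
    # Width and height are clamped at 0 when the boxes do not overlap.
    iw = max(ixmax - ixmin + 1.0, 0.0)
    ih = max(iymax - iymin + 1.0, 0.0)
    inters = iw * ih
    union = ((bb[2] - bb[0] + 1.0) * (bb[3] - bb[1] + 1.0)
             + (bbgt[2] - bbgt[0] + 1.0) * (bbgt[3] - bbgt[1] + 1.0)
             - inters)
    return inters / union

bb = [422, 120, 460, 154]    # first predicted box
bbgt = [415, 120, 460, 153]  # its GT box
print(iou(bb, bbgt))         # 1326 / 1603, roughly 0.827
```

The intersection is 39 x 34 = 1326 pixels and the union is 1365 + 1564 - 1326 = 1603, so the overlap is well above the 0.5 threshold.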

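The author sets a threshold of 0.5. Only when the IoU is greater than 0.5 is tp[0] set to 1. The matched box is then removed from BBGT, and once every box under this (image_id, class_) key has been matched, the key is deleted from target.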

target[(image_id,class_)]:

[]

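Clearly, the author only requires that one GT box of the same class in the same image satisfies overlaps > threshold, because the inner loop breaks at the first match.

The loop for d, image_id in enumerate(image_ids) runs three times.

On each iteration, if the IoU exceeds the 0.5 threshold we record tp[d] = 1, and fp[d] is 1 - tp[d]; for example, fp[0] = 1 - tp[0] = 1 - 1 = 0.

Look closely at when the line fp[d] = 1 - tp[d] is actually reached: only while (image_id, class_) is still a key in target, that is, while that image still has unmatched GT boxes of this class. Each matched GT box is removed, and when the last one is gone the key is deleted (deleting a key removes the key together with its value), so any later prediction for the same image and class falls into the else branch and gets fp[d] = 1. This also shows why the author sorts the predictions by confidence first: each GT box can be matched only once, so the most confident prediction should get the first chance. Note that the ordering is by confidence, not by IoU.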

After the three iterations we get:

fp:

array([0., 0., 1.])

tp:

array([1., 1., 0.])
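The three iterations can be replayed with a small self-contained sketch. It mirrors the matching loop of voc_eval on the sorted aeroplane predictions; the helper iou and the variable key are introduced here for readability and are not part of the original code:

```python
import numpy as np

def iou(bb, bbgt):
    """IoU of two [x1, y1, x2, y2] boxes, pixel-inclusive (+1) convention."""
    iw = max(min(bb[2], bbgt[2]) - max(bb[0], bbgt[0]) + 1.0, 0.0)
    ih = max(min(bb[3], bbgt[3]) - max(bb[1], bbgt[1]) + 1.0, 0.0)
    inters = iw * ih
    union = ((bb[2] - bb[0] + 1.0) * (bb[3] - bb[1] + 1.0)
             + (bbgt[2] - bbgt[0] + 1.0) * (bbgt[3] - bbgt[1] + 1.0)
             - inters)
    return inters / union

# Predictions already sorted by descending confidence.
image_ids = ['000473.jpg', '003494.jpg', '000473.jpg']
BB = [[422, 120, 460, 154], [16, 22, 499, 268], [420, 126, 450, 147]]
target = {
    ('000473.jpg', 'aeroplane'): [[415, 120, 460, 153]],
    ('003494.jpg', 'aeroplane'): [[1, 22, 500, 260]],
}
class_, threshold = 'aeroplane', 0.5

tp = np.zeros(len(image_ids))
fp = np.zeros(len(image_ids))
for d, image_id in enumerate(image_ids):
    key = (image_id, class_)
    if key in target:
        for bbgt in target[key]:
            if iou(BB[d], bbgt) > threshold:
                tp[d] = 1
                target[key].remove(bbgt)  # a GT box is matched at most once
                if len(target[key]) == 0:
                    del target[key]       # no unmatched GT left for this key
                break
        fp[d] = 1 - tp[d]
    else:
        fp[d] = 1  # no unmatched GT of this class in this image

print(tp, fp)
```

The third prediction is the second box for 000473.jpg. Its only GT box was consumed by the first, more confident prediction, so it lands in the else branch and is counted as a false positive.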

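Then np.cumsum() accumulates the elements along the default axis. fp keeps the same values (the cumulative sum of [0, 0, 1] is still [0, 0, 1]), while tp becomes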

tp:

array([1., 2., 2.])

Because npos is 2, recall is tp / npos,

rec:

array([0.5, 1. , 1. ])

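FP: the number of negatives predicted as positive. TP: the number of positives predicted as positive. Precision = TP / (TP + FP).

Precision: of the samples predicted as positive, how many are truly positive. Here prec = tp / (tp + fp) = [1/1, 2/2, 2/3].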
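The cumulative sums and the two curves can be reproduced with a short sketch, starting from the per-prediction tp and fp flags obtained above. The names tp_cum and fp_cum are introduced here for clarity; the original code reassigns tp and fp:

```python
import numpy as np

tp = np.array([1.0, 1.0, 0.0])  # per-prediction true-positive flags
fp = np.array([0.0, 0.0, 1.0])  # per-prediction false-positive flags
npos = 2.0                      # number of GT aeroplane boxes

# Running totals after each prediction, in descending confidence order.
tp_cum = np.cumsum(tp)
fp_cum = np.cumsum(fp)

rec = tp_cum / npos
# The eps guard avoids a division by zero, as in the original code.
prec = tp_cum / np.maximum(tp_cum + fp_cum, np.finfo(np.float64).eps)

print(rec)
print(prec)
```

Each position d describes the detector if only the d + 1 most confident predictions were kept, which is what turns a single ranked list into a precision-recall curve.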

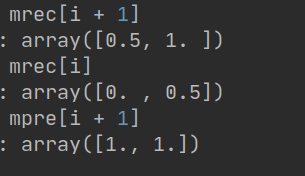

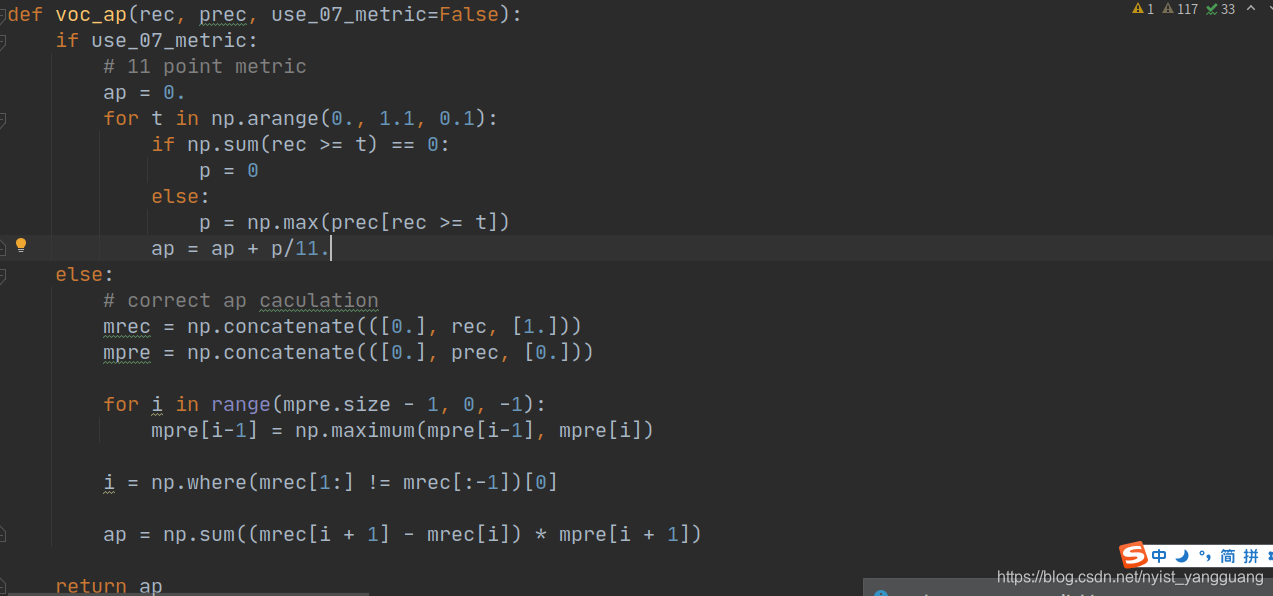

Now look at the voc_ap function,

mrec:

array([0. , 0.5, 1. , 1. , 1. ])

mpre:

array([0.        , 1.        , 1.        , 0.66666667, 0.        ])

mpre.size - 1:

4

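This for loop walks backwards through mpre and replaces each mpre[i-1] with the maximum of itself and mpre[i], so every entry ends up as the largest precision found at that position or anywhere to its right (a non-increasing precision envelope). Writing it as an explicit loop is rather verbose,

mpre:

array([1.        , 1.        , 1.        , 0.66666667, 0.        ])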

mrec is unchanged. The indices at which the recall value changes are

i:

array([0, 1])

ap:

1.0
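For comparison, the envelope can be written without an explicit loop. The sketch below is not the author's code: the function name ap_all_points is hypothetical, and np.maximum.accumulate over the reversed array is swapped in for the backward for loop. The rest follows the non-07 branch of voc_ap:

```python
import numpy as np

def ap_all_points(rec, prec):
    """Area under the precision-recall curve using the precision envelope."""
    # Sentinel values at both ends, as in voc_ap.
    mrec = np.concatenate(([0.0], rec, [1.0]))
    mpre = np.concatenate(([0.0], prec, [0.0]))
    # Running maximum from right to left: each entry becomes the largest
    # precision at that position or to its right.
    mpre = np.maximum.accumulate(mpre[::-1])[::-1]
    # Indices where recall changes; only those steps contribute area.
    i = np.where(mrec[1:] != mrec[:-1])[0]
    return float(np.sum((mrec[i + 1] - mrec[i]) * mpre[i + 1]))

rec = np.array([0.5, 1.0, 1.0])
prec = np.array([1.0, 1.0, 2.0 / 3.0])
ap = ap_all_points(rec, prec)
print(ap)  # 1.0
```

With the walkthrough values the two recall steps are each 0.5 wide and the envelope is 1.0 at both, giving 0.5 * 1.0 + 0.5 * 1.0 = 1.0. The false positive at the end does not lower the AP because recall had already reached 1.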

Code:

# -*- coding: utf-8 -*-

"""

@Time : 2020/08/12 18:30

@Author : Bryce

@File : eval_voc.py

@Notice :

@Modification :

@Author :

@Time :

@Detail :

"""

import os

os.environ["CUDA_VISIBLE_DEVICES"] = "0"

import numpy as np

VOC_CLASSES = ( # always index 0

'aeroplane', 'bicycle', 'bird', 'boat',

'bottle', 'bus', 'car', 'cat', 'chair',

'cow', 'diningtable', 'dog', 'horse',

'motorbike', 'person', 'pottedplant',

'sheep', 'sofa', 'train', 'tvmonitor')

Color = [

[0, 0, 0],

[128, 0, 0],

[0, 128, 0],

[128, 128, 0],

[0, 0, 128],

[128, 0, 128],

[0, 128, 128],

[128, 128, 128],

[64, 0, 0],

[192, 0, 0],

[64, 128, 0],

[192, 128, 0],

[64, 0, 128],

[192, 0, 128],

[64, 128, 128],

[192, 128, 128],

[0, 64, 0],

[128, 64, 0],

[0, 192, 0],

[128, 192, 0],

[0, 64, 128]

]

def voc_ap(rec, prec, use_07_metric=False):
    if use_07_metric:
        # 11 point metric
        ap = 0.
        for t in np.arange(0., 1.1, 0.1):
            if np.sum(rec >= t) == 0:
                p = 0
            else:
                p = np.max(prec[rec >= t])
            ap = ap + p/11.
    else:
        # correct ap calculation
        mrec = np.concatenate(([0.], rec, [1.]))
        mpre = np.concatenate(([0.], prec, [0.]))
        # make precision non-increasing, scanning from right to left
        for i in range(mpre.size - 1, 0, -1):
            mpre[i-1] = np.maximum(mpre[i-1], mpre[i])
        # indices where recall changes value
        i = np.where(mrec[1:] != mrec[:-1])[0]
        ap = np.sum((mrec[i + 1] - mrec[i]) * mpre[i + 1])
    return ap

def voc_eval(preds, target, VOC_CLASSES=VOC_CLASSES, threshold=0.5, use_07_metric=False,):
    '''
    preds {'cat':[[image_id,confidence,x1,y1,x2,y2],...],'dog':[[],...]}
    target {(image_id,class):[[],]}
    '''
    aps = []
    for i,class_ in enumerate(VOC_CLASSES):
        pred = preds[class_] # [[image_id,confidence,x1,y1,x2,y2],...]
        if len(pred) == 0: # abnormal case: nothing was detected for this class
            ap = -1 # sentinel value; it is still included in the mean below
            print('---class {} ap {}---'.format(class_,ap))
            aps += [ap]
            continue # skip this class and go on with the remaining ones
        #print(pred)
        image_ids = [x[0] for x in pred]
        confidence = np.array([float(x[1]) for x in pred])
        BB = np.array([x[2:] for x in pred])
        # sort by confidence
        # sort/argsort are ascending by default; negating gives descending indices
        sorted_ind = np.argsort(-confidence)
        sorted_scores = np.sort(-confidence)
        BB = BB[sorted_ind, :]
        image_ids = [image_ids[x] for x in sorted_ind]

        # go down dets and mark TPs and FPs
        npos = 0.
        for (key1,key2) in target:
            if key2 == class_:
                npos += len(target[(key1,key2)]) # count the positives of this class here so none are missed
        nd = len(image_ids)
        tp = np.zeros(nd)
        fp = np.zeros(nd)
        for d,image_id in enumerate(image_ids):
            bb = BB[d] # predicted box
            if (image_id,class_) in target:
                BBGT = target[(image_id,class_)] #[[],]
                for bbgt in BBGT:
                    # compute overlaps
                    # intersection
                    ixmin = np.maximum(bbgt[0], bb[0])
                    iymin = np.maximum(bbgt[1], bb[1])
                    ixmax = np.minimum(bbgt[2], bb[2])
                    iymax = np.minimum(bbgt[3], bb[3])
                    iw = np.maximum(ixmax - ixmin + 1., 0.)
                    ih = np.maximum(iymax - iymin + 1., 0.)
                    inters = iw * ih

                    union = (bb[2]-bb[0]+1.)*(bb[3]-bb[1]+1.) + (bbgt[2]-bbgt[0]+1.)*(bbgt[3]-bbgt[1]+1.) - inters
                    if union == 0:
                        print(bb,bbgt)

                    overlaps = inters/union
                    if overlaps > threshold:
                        tp[d] = 1
                        BBGT.remove(bbgt) # this GT box is matched and cannot be matched again
                        if len(BBGT) == 0:
                            del target[(image_id,class_)] # delete keys that have no boxes left
                        break
                fp[d] = 1-tp[d]
            else:
                fp[d] = 1
        fp = np.cumsum(fp)
        tp = np.cumsum(tp)
        # npos: total number of GT boxes of this class over all images
        rec = tp/float(npos)
        prec = tp/np.maximum(tp + fp, np.finfo(np.float64).eps)
        #print(rec,prec)
        ap = voc_ap(rec, prec, use_07_metric)
        print('---class {} ap {}---'.format(class_,ap))
        aps += [ap]
    print('---map {}---'.format(np.mean(aps)))

def test_eval():
    preds = {'cat':[['image01',0.9,20,20,40,40],['image01',0.8,20,20,50,50],['image02',0.8,30,30,50,50]],'dog':[['image01',0.78,60,60,90,90]]}
    target = {('image01','cat'):[[20,20,41,41]],('image01','dog'):[[60,60,91,91]],('image02','cat'):[[30,30,51,51]]}
    voc_eval(preds,target,VOC_CLASSES=['cat','dog'])

if __name__ == '__main__':
    #test_eval()
    from predict import *
    from collections import defaultdict
    from tqdm import tqdm

    target = defaultdict(list)
    preds = defaultdict(list)
    image_list = [] #image path list

    f = open('datasets/voc2007test.txt')
    lines = f.readlines()
    file_list = []
    for line in lines:
        splited = line.strip().split()
        file_list.append(splited)
    f.close()
    print('---prepare target---')
    ii=0
    for index,image_file in enumerate(file_list):
        image_id = image_file[0]
        image_list.append(image_id)
        num_obj = (len(image_file) - 1) // 5
        for i in range(num_obj):
            x1 = int(image_file[1+5*i])
            y1 = int(image_file[2+5*i])
            x2 = int(image_file[3+5*i])
            y2 = int(image_file[4+5*i])
            c = int(image_file[5+5*i])
            class_name = VOC_CLASSES[c]
            target[(image_id,class_name)].append([x1,y1,x2,y2])
    #
    #start test
    #
    print('---start test---')
    # model = vgg16_bn(pretrained=False)
    model = resnet50()
    # model.classifier = nn.Sequential(
    #             nn.Linear(512 * 7 * 7, 4096),
    #             nn.ReLU(True),
    #             nn.Dropout(),
    #             #nn.Linear(4096, 4096),
    #             #nn.ReLU(True),
    #             #nn.Dropout(),
    #             nn.Linear(4096, 1470),
    #         )
    model.load_state_dict(torch.load('checkpoints/best.pth'))
    model.eval()
    model.cuda()
    count = 0
    for image_path in tqdm(image_list):
        result = predict_gpu(model,image_path,root_path='datasets/images/') #result[[left_up,right_bottom,class_name,image_path],]
        for (x1,y1),(x2,y2),class_name,image_id,prob in result: #image_id is actually image_path
            preds[class_name].append([image_id,prob,x1,y1,x2,y2])
        # print(image_path)
        # image = cv2.imread('/home/xzh/data/VOCdevkit/VOC2012/allimgs/'+image_path)
        # for left_up,right_bottom,class_name,_,prob in result:
        #     color = Color[VOC_CLASSES.index(class_name)]
        #     cv2.rectangle(image,left_up,right_bottom,color,2)
        #     label = class_name+str(round(prob,2))
        #     text_size, baseline = cv2.getTextSize(label, cv2.FONT_HERSHEY_SIMPLEX, 0.4, 1)
        #     p1 = (left_up[0], left_up[1]- text_size[1])
        #     cv2.rectangle(image, (p1[0] - 2//2, p1[1] - 2 - baseline), (p1[0] + text_size[0], p1[1] + text_size[1]), color, -1)
        #     cv2.putText(image, label, (p1[0], p1[1] + baseline), cv2.FONT_HERSHEY_SIMPLEX, 0.4, (255,255,255), 1, 8)
        # cv2.imwrite('testimg/'+image_path,image)
        # count += 1
        # if count == 100:
        #     break

    print('---start evaluate---')
    voc_eval(preds,target,VOC_CLASSES=VOC_CLASSES)
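The target-building loop in the script implies a particular layout for each line of the list file: an image name followed by groups of five integers (x1, y1, x2, y2, class index). The sketch below parses one such line. The sample line and the helper name parse_annotation_line are made up for illustration and are not taken from the actual datasets/voc2007test.txt:

```python
from collections import defaultdict

VOC_CLASSES = (
    'aeroplane', 'bicycle', 'bird', 'boat',
    'bottle', 'bus', 'car', 'cat', 'chair',
    'cow', 'diningtable', 'dog', 'horse',
    'motorbike', 'person', 'pottedplant',
    'sheep', 'sofa', 'train', 'tvmonitor')

def parse_annotation_line(line, target):
    """Add the boxes of one 'name x1 y1 x2 y2 c ...' line to target."""
    fields = line.strip().split()
    image_id = fields[0]
    num_obj = (len(fields) - 1) // 5  # five numbers per object
    for i in range(num_obj):
        x1, y1, x2, y2, c = (int(v) for v in fields[1 + 5 * i: 6 + 5 * i])
        target[(image_id, VOC_CLASSES[c])].append([x1, y1, x2, y2])
    return image_id

# Hypothetical sample line with two objects (class 11 = dog, 14 = person).
target = defaultdict(list)
line = 'example.jpg 48 240 195 371 11 8 12 352 498 14\n'
image_id = parse_annotation_line(line, target)
print(image_id, dict(target))
```

Keying target by (image name, class) is what lets voc_eval look up the candidate GT boxes for a prediction with a single dictionary access.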