Memory-Associated Differential Learning: Paper and Code Walkthrough

Paper source:

Paper PDF:

Memory-Associated Differential Learning paper

Paper code:

Memory-Associated Differential Learning code

Paper walkthrough:

1. Abstract

Conventional Supervised Learning approaches focus on the mapping from input features to output labels. After training, the learnt models alone are applied to testing features to predict testing labels in isolation, so the training data are wasted and their associations are ignored. To take full advantage of the vast amount of training data and their associations, we propose a novel learning paradigm called Memory-Associated Differential (MAD) Learning. We first introduce an additional component called Memory to memorize all the training data. Then we learn the differences of labels as well as the associations of features through the combination of a differential equation and some sampling methods. Finally, in the evaluation phase, we predict unknown labels by inferring from the memorized facts plus the learnt differences and associations in a geometrically meaningful manner. We build this theory step by step in unary situations and apply it to Image Recognition, then extend it to Link Prediction as a binary situation, in which our method outperforms strong state-of-the-art baselines on three citation networks and the ogbl-ddi dataset.

2. Introduction

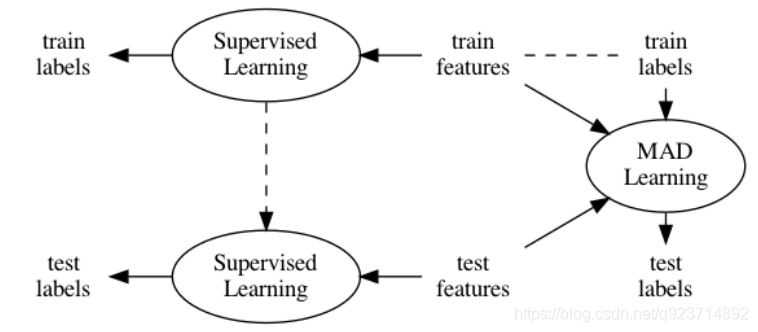

Figure 1: The difference between Conventional Supervised Learning and MAD Learning. The former learns the mapping from features to labels in the training data and applies this mapping to the testing data, while the latter learns the differences and associations among data and infers testing labels from the memorized training data.

3. Related Works

Instead of treating External Memory as a way to add more learnable parameters that store uninterpretable hidden states, we memorize the facts as they are and then learn the differences and associations between them.

Most of the experiments in this article address the Link Prediction problem: predicting whether a pair of nodes in a graph is likely to be connected, how much weight their edge bears, or what attributes their edge should have.

Although our method is derived from a different perspective, we point out that Matrix Factorization can be seen as a simplification of MAD Learning with no memory and no sampling.

4. Proposed Approach

4.1 Memory-Associated Differential Learning

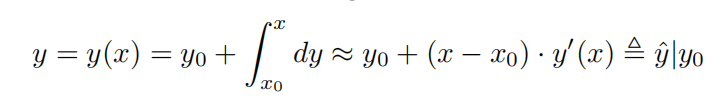

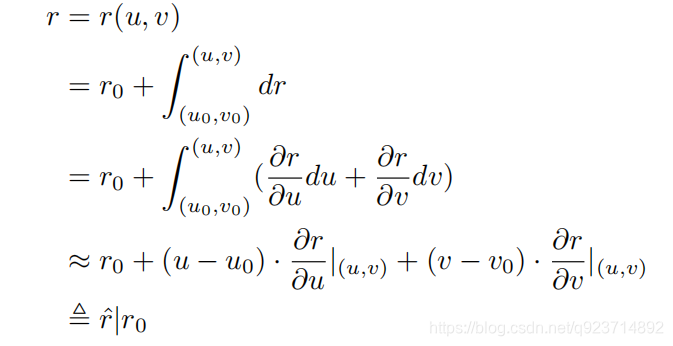

By applying the Mean Value Theorem for Definite Integrals [Comenetz, 2002], we can estimate the unknown y from a known y0 if x0 is close enough to x:
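Written out, the argument runs as follows. This assumes y is scalar with a continuous derivative. Replacing y'(ξ) by y'(x) is the approximation that needs x0 to be close to x, and the result is the first-order form y ≈ y0 + Δx · y'(x) quoted in Figure 2(a).

```latex
y = y_0 + \int_{x_0}^{x} y'(t)\,dt
  = y_0 + (x - x_0)\,y'(\xi) \quad \text{for some } \xi \text{ between } x_0 \text{ and } x,
\qquad
\hat{y}|_{y_0} = y_0 + (x - x_0)\,y'(x) \approx y
```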

In this way, we connect the current prediction task y to the past fact y0, which can be stored in external memory. This converts learning the target function y(x) into learning the differential function y'(x), which is generally easier to learn than the former.

4.2 Inferencing from Multiple References

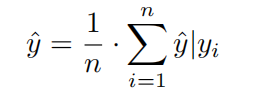

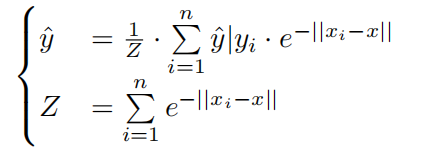

To get a stable and accurate estimate of y, we can sample n references x1, x2, …, xn to obtain n estimations ŷ|y1, ŷ|y2, …, ŷ|yn and combine them with an aggregator such as the mean:

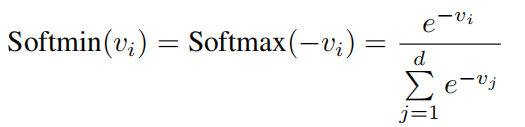
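The mean aggregation can be sketched in a few lines for scalar inputs. This is a minimal sketch under stated assumptions: the gradient function is passed in by hand, whereas MAD Learning would learn it. `mad_estimate_mean` is a hypothetical name.

```python
from statistics import mean
from typing import Callable, Sequence, Tuple


def mad_estimate_mean(
    x: float,
    memory: Sequence[Tuple[float, float]],
    grad: Callable[[float], float],
) -> float:
    """Estimate y(x) from memorized (x_i, y_i) pairs.

    Each reference gives y_hat|y_i = y_i + (x - x_i) * y'(x); the n
    estimations are then combined with the mean aggregator.
    """
    estimations = [y_i + (x - x_i) * grad(x) for x_i, y_i in memory]
    return mean(estimations)


# Toy example: y(x) = 3x + 1, so y'(x) = 3 and every estimation is exact.
memory = [(0.0, 1.0), (1.0, 4.0)]
print(mad_estimate_mean(2.0, memory, grad=lambda x: 3.0))  # 7.0
```

For a curved target such as y = x² with the exact gradient 2x, a single reference at x_i is off by (x − x_i)². This shows why close references matter.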

Here we adopt the function Softmin, derived from Softmax. It rescales the input d-dimensional array v so that every element lies in the range [0, 1] and all elements sum to 1:

$$\mathrm{Softmin}(v)_i = \frac{e^{-v_i}}{\sum_{j=1}^{d} e^{-v_j}}$$
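Softmin is Softmax applied to −v, so smaller distances receive larger weights. The sketch below implements it in plain Python together with a hypothetical helper, `softmin_aggregate`. The helper weights each reference's estimation by Softmin over the distances, as in the softmin experimental setting.

```python
import math
from typing import List, Sequence


def softmin(v: Sequence[float]) -> List[float]:
    """Softmin(v)_i = exp(-v_i) / sum_j exp(-v_j)."""
    # Shift by min(v) for numerical stability; the factor cancels in the ratio.
    m = min(v)
    exps = [math.exp(-(x - m)) for x in v]
    total = sum(exps)
    return [e / total for e in exps]


def softmin_aggregate(estimations: Sequence[float], distances: Sequence[float]) -> float:
    """Sum the estimations weighted by Softmin applied to their distances."""
    weights = softmin(distances)
    return sum(w * e for w, e in zip(weights, estimations))


print(softmin([0.0, 0.0]))                          # [0.5, 0.5]
print(softmin_aggregate([1.0, 3.0], [0.0, 0.0]))    # equal distances -> plain mean
```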

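By applying Softmin to the distances d_1, …, d_n between x and the n references, we get the aggregated estimation:

$$\hat{y} = \sum_{i=1}^{n} \mathrm{Softmin}(d)_i \, \hat{y}|_{y_i}$$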

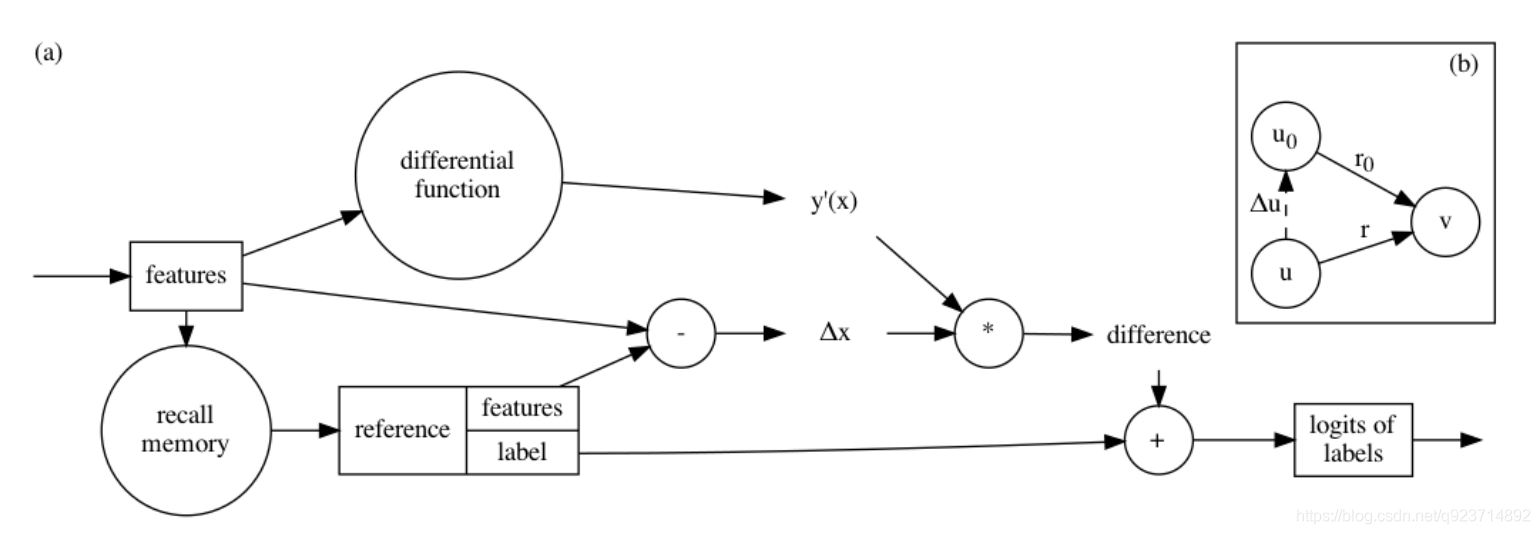

Figure 2: (a) Memory-Associated Differential Learning infers labels from memorized ones following the first-order Taylor series approximation y ≈ y0 + Δx · y'(x). (b) In binary MAD Learning, when v = v0 holds, ∂r/∂u|(u,v) simplifies to ∂r/∂u|v, since it is the change of r after moving u slightly to u0 while v is fixed.

4.3 Soft Sentinels and Uncertainty

We introduce a mechanism on top of Softmin named Soft Sentinel. A Soft Sentinel is a dummy element mixed into the array of estimations. It carries no information (e.g. its logit is 0) but has a set distance (e.g. 0).

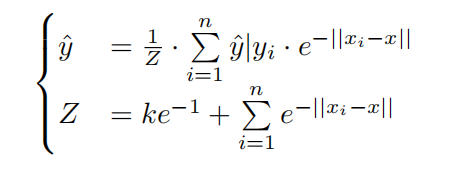

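The estimation after adding k Soft Sentinels at distance 1 is:

$$\hat{y} = \frac{\sum_{i=1}^{n} e^{-d_i}\,\hat{y}|_{y_i}}{\sum_{i=1}^{n} e^{-d_i} + k\,e^{-1}}$$

Here d_i is the distance to the i-th reference. Each sentinel adds e^{-1} to the Softmin denominator but contributes a zero logit to the numerator.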

When Soft Sentinels are involved, only estimations given by close-enough references retain most of their impact on the final result, so unreliable estimations are suppressed.
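The suppression effect can be checked with a small sketch. It assumes each sentinel's estimation is 0 and its distance is fixed (defaulting here to k = 8 at distance 1, as in the sentinel setting). `sentinel_aggregate` and its parameter names are hypothetical.

```python
import math
from typing import Sequence


def sentinel_aggregate(
    estimations: Sequence[float],
    distances: Sequence[float],
    k: int = 8,
    sentinel_distance: float = 1.0,
    sentinel_value: float = 0.0,
) -> float:
    """Softmin aggregation with k Soft Sentinels mixed into the array.

    Each sentinel carries no information (value 0 by default) but sits at a
    fixed distance, so it still takes a share of the Softmin weight.
    """
    all_est = list(estimations) + [sentinel_value] * k
    all_dist = list(distances) + [sentinel_distance] * k
    m = min(all_dist)  # stability shift; cancels in the ratio
    weights = [math.exp(-(d - m)) for d in all_dist]
    return sum(e * w for e, w in zip(all_est, weights)) / sum(weights)


near = sentinel_aggregate([2.0], [0.0], k=1)  # close reference keeps most of its value
far = sentinel_aggregate([2.0], [5.0], k=1)   # distant reference is pulled toward 0
print(near, far)
```

With k = 0 the function reduces to plain Softmin aggregation. The sentinels therefore only matter when every real reference is farther away than they are.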

4.4 Other Details

For the sake of flexibility and performance, we usually do not use the input features x directly, but first convert x into a position f(x).

To adapt to this situation, we generally wrap the memory with an adaptor function m, such as a one-layer MLP, getting $\hat{y}|_{y_0} = m(y_0) + (f(x) - f(x_0)) \cdot g(x)$, where g(x) stands for the gradient.
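The adaptor form can be sketched with hand-written stand-ins for the learned parts. This is an illustration under stated assumptions: `f`, `g` and `m` are hypothetical toy functions (m is a single affine layer on a scalar label), and `estimate_with_adaptor` is a hypothetical name.

```python
from typing import Callable, List

Vector = List[float]


def estimate_with_adaptor(
    x: float,
    x0: float,
    y0: float,
    f: Callable[[float], Vector],
    g: Callable[[float], Vector],
    m: Callable[[float], float],
) -> float:
    """y_hat|y0 = m(y0) + (f(x) - f(x0)) . g(x)."""
    pos_diff = [a - b for a, b in zip(f(x), f(x0))]
    grad = g(x)
    if len(pos_diff) != len(grad):
        raise ValueError("position and gradient must have the same dimension")
    return m(y0) + sum(d * gi for d, gi in zip(pos_diff, grad))


# Hypothetical toy components standing in for learned networks.
f = lambda x: [x, x * x]        # position encoder
g = lambda x: [1.0, 0.5]        # gradient network
m = lambda y: 2.0 * y + 1.0     # adaptor: one affine layer

print(estimate_with_adaptor(2.0, 1.0, 3.0, f, g, m))  # 9.5
```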

When the encodings of nodes are dynamic and no features are provided, we usually adopt Random Mode in the training phase for efficiency and Dynamic NN Mode in the evaluation phase for performance.
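This excerpt does not define the two modes, so the sketch below rests on assumptions. It assumes Random Mode draws references uniformly at random, and Dynamic NN Mode takes the n references nearest to the query in the current (changing) position space. `pick_references` and the mode strings are hypothetical.

```python
import math
import random
from typing import List, Optional, Sequence


def pick_references(
    query_pos: Sequence[float],
    memory_pos: Sequence[Sequence[float]],
    n: int,
    mode: str,
    rng: Optional[random.Random] = None,
) -> List[int]:
    """Return indices of n memorized items to use as references."""
    if mode == "random":
        # Cheap: no distance computation over the whole memory.
        rng = rng or random.Random()
        return rng.sample(range(len(memory_pos)), n)
    if mode == "nn":
        # Costlier: distances to every memorized position, recomputed because
        # the encodings keep changing.
        dists = [math.dist(query_pos, p) for p in memory_pos]
        return sorted(range(len(memory_pos)), key=lambda i: dists[i])[:n]
    raise ValueError(f"unknown mode: {mode}")


mem = [[0.0, 0.0], [1.0, 0.0], [5.0, 5.0], [0.2, 0.1]]
print(pick_references([0.0, 0.0], mem, 2, "nn"))  # [0, 3]
```

Under these assumptions, the trade-off in the text is plausible. Random sampling costs O(n) per query, while nearest-neighbour search scans the whole memory but yields closer, more reliable references.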

4.5 Binary MAD Learning

We model the relationship between a pair of nodes in a graph by extending MAD Learning to binary situations.

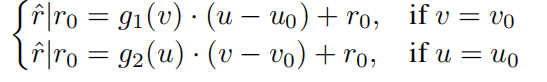

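Therefore, we may further assume ∂r/∂u|(u,v) = g1(v) if v = v0 and ∂r/∂v|(u,v) = g2(u) if u = u0, making

$$\hat{r}|_{r(u_0, v)} = r(u_0, v) + (u - u_0) \cdot g_1(v), \qquad \hat{r}|_{r(u, v_0)} = r(u, v_0) + (v - v_0) \cdot g_2(u)$$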

Here g1(·) is the destination differential function and g2(·) is the source differential function. If the edges are undirected, these two functions can be shared.
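The binary estimation can be sketched with the memory held as a dense matrix, which is the storage the conclusion mentions for Link Prediction. For a checkable toy, the memory is filled with inner products of fixed node positions, r(u, v) = f(u) · f(v). This is an assumption: real link-prediction memory holds observed edges. It makes g1(v) = f(v) and g2(u) = f(u) (shared, since this toy graph is undirected), so every first-order estimate is exact. The toy echoes the Matrix Factorization connection noted in Related Works. All names are hypothetical, and in practice positions and gradients would be learned.

```python
from typing import Callable, List, Sequence

Vector = List[float]


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    if len(a) != len(b):
        raise ValueError("position and gradient dimensions must match")
    return sum(x * y for x, y in zip(a, b))


def binary_mad_estimate(
    u: int,
    v: int,
    memory: List[List[float]],
    pos: List[Vector],
    g1: Callable[[int], Vector],
    g2: Callable[[int], Vector],
    src_refs: Sequence[int],
    dst_refs: Sequence[int],
) -> float:
    """Average first-order estimates of r(u, v) from memorized neighbours."""
    estimations = []
    for u0 in src_refs:
        # v fixed: r(u, v) ~= r(u0, v) + (f(u) - f(u0)) . g1(v)
        delta = [a - b for a, b in zip(pos[u], pos[u0])]
        estimations.append(memory[u0][v] + dot(delta, g1(v)))
    for v0 in dst_refs:
        # u fixed: r(u, v) ~= r(u, v0) + (f(v) - f(v0)) . g2(u)
        delta = [a - b for a, b in zip(pos[v], pos[v0])]
        estimations.append(memory[u][v0] + dot(delta, g2(u)))
    return sum(estimations) / len(estimations)


pos = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [2.0, 1.0]]
memory = [[dot(a, b) for b in pos] for a in pos]  # dense "adjacency" memory
memory[0][3] = memory[3][0] = float("nan")        # hide the pair to predict
print(binary_mad_estimate(0, 3, memory, pos, lambda v: pos[v], lambda u: pos[u], [1, 2], [1, 2]))
```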

5. Experiments

In the training phase, we sample arbitrary pairs of nodes to construct negative samples [Grover and Leskovec, 2016] and compare the scores of connected pairs and negative samples, using Cross-Entropy as the loss function:

Here y is the number of positive samples and n the number of negative samples; py(i) is the predicted probability of the i-th positive sample and pn(i) that of the i-th negative sample. In the evaluation phase, we record the scores in both Dynamic NN Mode and Random Mode.
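The loss equation itself is not reproduced in this excerpt. The sketch below assumes the common form that averages −log p over the y positive samples and −log(1 − p) over the n negative samples separately. `eps` is an added guard against log(0), and `link_prediction_loss` is a hypothetical name.

```python
import math
from typing import Sequence


def link_prediction_loss(
    pos_probs: Sequence[float], neg_probs: Sequence[float], eps: float = 1e-15
) -> float:
    """-(1/y) sum log p_y(i) - (1/n) sum log(1 - p_n(i))."""
    pos_term = -sum(math.log(p + eps) for p in pos_probs) / len(pos_probs)
    neg_term = -sum(math.log(1.0 - p + eps) for p in neg_probs) / len(neg_probs)
    return pos_term + neg_term


print(link_prediction_loss([0.5], [0.5]))  # 2 * ln 2, an uninformative model
```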

We use the following three experimental settings to examine the contribution of Softmin and Soft Sentinels:

mean. Estimations are aggregated by the mean function.

softmin. Estimations given by different references are summed with weights obtained by applying Softmin to their distances.

sentinel. The softmin setting with 8 Soft Sentinels at distance 1 added.

As shown in Figure 4(b), there is not much difference between mean and softmin. With Soft Sentinels mixed in, however, MAD Learning performs better and converges faster.

We stress again that MAD Learning does not predict directly. From another point of view, this experiment implies that indirect references can be as beneficial as direct information.

6. Discussion

By extending the edge value r from a scalar to a vector, MAD Learning can be used for graphs with featured edges.

We also point out that MAD Learning can learn relations in heterogeneous graphs, where nodes belong to different types (usually represented by encodings of different lengths). The only requirement is that the positions of the source nodes match the gradients of the destination nodes, and vice versa.
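The dimension requirement can be illustrated with a small sketch under two assumptions not spelled out in this excerpt. First, for a d_e-dimensional edge vector, the destination gradient becomes a d_s × d_e matrix, where d_s is the source position length. Second, destination positions may have a different length altogether. `vector_edge_estimate` is a hypothetical function; with d_e = 1 it reduces to the scalar dot-product form.

```python
from typing import List, Sequence


def vector_edge_estimate(
    r0: Sequence[float],
    pos_u: Sequence[float],
    pos_u0: Sequence[float],
    grad_v: Sequence[Sequence[float]],
) -> List[float]:
    """r_hat = r(u0, v) + (f(u) - f(u0))^T G(v), with G(v) of shape d_s x d_e."""
    if len(pos_u) != len(pos_u0) or len(pos_u) != len(grad_v):
        raise ValueError("source positions must match the destination gradient rows")
    if any(len(row) != len(r0) for row in grad_v):
        raise ValueError("gradient columns must match the edge feature length")
    delta = [a - b for a, b in zip(pos_u, pos_u0)]
    return [
        r0[j] + sum(delta[i] * grad_v[i][j] for i in range(len(delta)))
        for j in range(len(r0))
    ]


# Source type: 3-dim positions; edges: 2-dim features; G(v) is 3 x 2.
G = [[1.0, 0.0], [0.0, 2.0], [5.0, 5.0]]
print(vector_edge_estimate([1.0, 0.0], [1.0, 2.0, 0.0], [0.0, 1.0, 0.0], G))  # [2.0, 2.0]
```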

7. Conclusion

In this work, we explore a novel learning paradigm that is flexible, effective and interpretable. The outstanding results, especially on Link Prediction, open the door to several research directions:

- The most important part of MAD Learning is memory. However, MAD Learning has to index the whole training data for random access. In Link Prediction, we implement memory as a dense adjacency matrix, which occupies a huge amount of space. Ways to shrink the memory and improve space utilization should be investigated in the future.

- Based on memory as the ground truth, MAD Learning appends a difference as the second part. We implement this difference simply as the product of distance and differential function, but we believe there exist other ways to model it.

- The third part of MAD Learning is the similarity, which is used to assign weights to estimations given by different references. We reuse distance to compute the similarity, but decoupling it through other embeddings and other measurements, such as the inner product, is also worth exploring.

- In this work, we deliberately do not combine direct information, in order to focus on MAD Learning alone. Since MAD Learning takes a separate, parallel route to prediction, we believe integrating MAD Learning with Conventional Supervised Learning is also a promising direction.