Dataset

Extraction code: 1234

I have not covered how to use the json folder that comes with the dataset.

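Generative adversarial network in PyTorch: generating curves

Recurrent neural network in PyTorch: handwritten digit recognition

Convolutional neural network in PyTorch: handwritten digit recognition

Reinforcement learning in PyTorch: balancing a pole (CartPole)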

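Project approach

- Download the dataset.

- Lay out the dataset paths.

- Load the training and validation sets with datasets.ImageFolder, then wrap them in DataLoader so that they yield batches of (data, label) pairs.

- Choose between GPU and CPU.

- Load the pretrained model and initialize it with initialize_model. set_parameter_requires_grad freezes every pretrained parameter (requires_grad = False, so no gradients are computed for them); only the newly added fully connected output layer is updated.

- Define the loss function and the optimizer (Adam).

- Define the training function. It takes the pretrained model, the data wrapped by DataLoader, the loss function, and the optimizer.

For both the training set and the validation set, the accuracy and the loss are computed.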

Let's get straight to the code.

First, import the required packages

import os
import time
import copy

import matplotlib.pyplot as plt
import numpy as np
import torch
from torch import nn
import torch.optim as optim
import torchvision
# torchvision.models provides the pretrained models
from torchvision import transforms, models, datasets
from PIL import Image

The os module

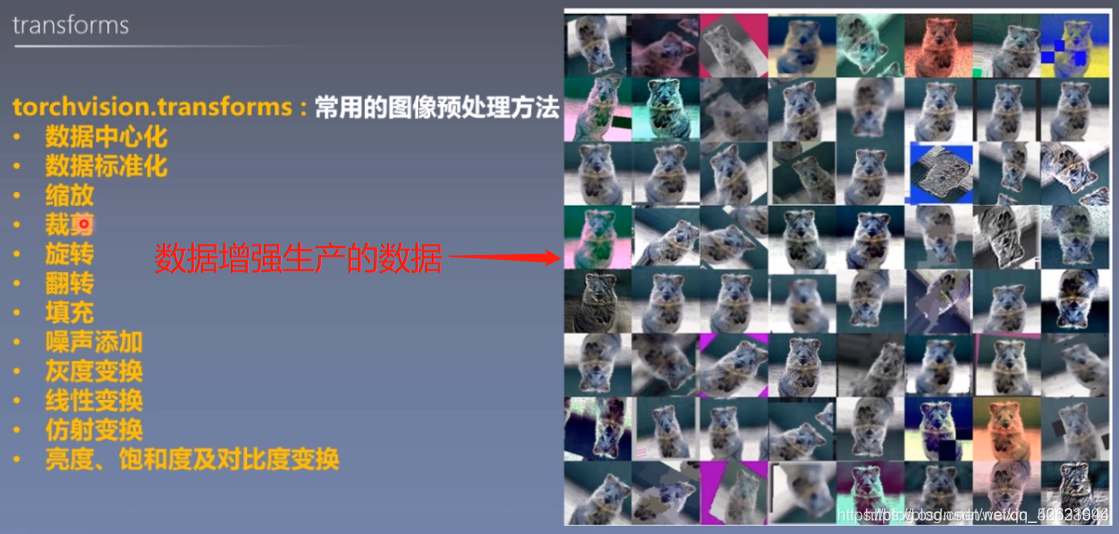

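Many PyTorch-based toolkits are very handy, for example torchtext for natural language, torchaudio for audio, and torchvision for images and video.

torchvision.transforms

For example, transforms.ToPILImage converts a Tensor of shape (C, H, W) or a numpy.ndarray of shape (H, W, C) into a PIL.Image.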

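Set up the data paths

data_dir = './flower_data/'
# os.path.join avoids the doubled slash that plain string concatenation produces here
train_dir = os.path.join(data_dir, 'train')
valid_dir = os.path.join(data_dir, 'valid')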

Apply data augmentation

data_transforms = {
    'train': transforms.Compose([
        transforms.RandomRotation(45),  # rotate by a random angle between -45 and 45 degrees
        transforms.CenterCrop(224),  # crop a 224x224 patch from the center
        transforms.RandomHorizontalFlip(p=0.5),  # flip horizontally with probability 0.5
        transforms.RandomVerticalFlip(p=0.5),  # flip vertically with probability 0.5
        # arguments: brightness, contrast, saturation, hue
        transforms.ColorJitter(brightness=0.2, contrast=0.1, saturation=0.1, hue=0.1),
        transforms.RandomGrayscale(p=0.025),  # convert to grayscale with probability 0.025; the 3 channels then satisfy R=G=B
        transforms.ToTensor(),
        transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225]),  # per-channel mean, standard deviation
    ]),
    'valid': transforms.Compose([
        transforms.Resize(256),
        transforms.CenterCrop(224),
        transforms.ToTensor(),
        transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225]),
    ]),
}
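As a rough illustration of what the Normalize step does to each channel, the sketch below reproduces the arithmetic (x - mean) / std by hand with plain torch. The tiny 2x2 "image" is placeholder data I made up for the example.

```python
import torch

mean = torch.tensor([0.485, 0.456, 0.406]).view(3, 1, 1)
std = torch.tensor([0.229, 0.224, 0.225]).view(3, 1, 1)

# Placeholder image: every pixel of each channel equals that channel's mean
img = mean.expand(3, 2, 2).clone()

# The per-channel computation that Normalize performs
normalized = (img - mean) / std
print(normalized.abs().max().item())

# A pixel one standard deviation above the mean maps to 1
brighter = (img + std - mean) / std
print(brighter.mean().item())
```

After this step a pixel at the channel mean sits at 0, which is the input range the pretrained weights were trained on.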

Turn the data into a form that can be used for training

batch_size = 8

# Load the datasets; ImageFolder takes each label from the sub-folder name
image_datasets = {x: datasets.ImageFolder(os.path.join(data_dir, x), data_transforms[x])
                  for x in ['train', 'valid']}

# Wrap the datasets in DataLoaders that yield shuffled batches
dataloaders = {x: torch.utils.data.DataLoader(image_datasets[x], batch_size=batch_size, shuffle=True)
               for x in ['train', 'valid']}

dataset_sizes = {x: len(image_datasets[x]) for x in ['train', 'valid']}
class_names = image_datasets['train'].classes
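To see what a DataLoader actually hands to the training loop, here is a small sketch that swaps the flower images for a TensorDataset of random tensors (an assumption made so the example runs without any files on disk).

```python
import torch
from torch.utils.data import DataLoader, TensorDataset

# 20 fake "images" (3x8x8) with labels 0..4; stands in for an ImageFolder dataset
images = torch.randn(20, 3, 8, 8)
labels = torch.arange(20) % 5
dataset = TensorDataset(images, labels)

loader = DataLoader(dataset, batch_size=8, shuffle=True)

batch_sizes = []
for inputs, targets in loader:
    # inputs has shape (batch, 3, 8, 8) and targets has shape (batch,)
    batch_sizes.append(inputs.size(0))

print(batch_sizes)          # the last batch holds the remainder
print(len(loader.dataset))  # the same quantity the post stores in dataset_sizes
```

Because the last batch can be smaller than batch_size, the training loop later weights each batch loss by inputs.size(0) instead of assuming a fixed size.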

Choose the pretrained model

model_name = 'resnet'

# Whether to reuse the pretrained features as they are (that is, freeze them)
feature_extract = True

Select the device

train_on_gpu = torch.cuda.is_available()

# Report whether the GPU will be used
if not train_on_gpu:
    print('CUDA is not available. Training on CPU ...')
else:
    print('CUDA is available! Training on GPU ...')

device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
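A short sketch of how that device object is then used: both the data and the model have to be moved to it before they can be combined. The layer and tensor here are throwaway placeholders.

```python
import torch

device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

x = torch.ones(2, 3)                      # created on the CPU by default
x = x.to(device)                          # .to() returns a tensor on the target device
layer = torch.nn.Linear(3, 1).to(device)  # modules are moved in place and returned

y = layer(x)                              # works because both live on the same device
print(y.device)
```

The same code runs unchanged on a machine without a GPU, since the device falls back to "cpu".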

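Initialize the pretrained model

# initialize_model is defined in the next section; define it before running this line
model_ft, input_size = initialize_model(model_name, feature_extract, use_pretrained=True)

The initialization functions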

# Freeze the pretrained feature layers; only the new fully connected (output) layer gets updated
def set_parameter_requires_grad(model, feature_extracting):
    if feature_extracting:
        for param in model.parameters():
            param.requires_grad = False

def initialize_model(model_name, feature_extract, use_pretrained=True):
    # Pick the model; each architecture is initialized slightly differently
    model_ft = None
    input_size = 0

    if model_name == "resnet":
        # ResNet-152
        # Note: newer torchvision releases deprecate pretrained= in favor of weights=
        model_ft = models.resnet152(pretrained=use_pretrained)
        set_parameter_requires_grad(model_ft, feature_extract)
        # Number of input features of the fully connected layer
        num_ftrs = model_ft.fc.in_features
        # Replace the fully connected layer with one that has 102 output classes.
        # The output has shape (batch, classes); dim=1 normalizes over the classes,
        # so the exponentiated values in each row sum to 1
        model_ft.fc = nn.Sequential(nn.Linear(num_ftrs, 102),
                                    nn.LogSoftmax(dim=1))
        input_size = 224

    return model_ft, input_size
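The freeze-then-replace pattern is easier to check on a toy network than on ResNet-152. The sketch below uses a hypothetical TinyNet (my own stand-in, not part of the project) so it runs without downloading any weights, and it also verifies what dim=1 means for LogSoftmax.

```python
import torch
from torch import nn

# Tiny stand-in for a pretrained network: a "backbone" plus an fc head
class TinyNet(nn.Module):
    def __init__(self):
        super().__init__()
        self.backbone = nn.Sequential(nn.Linear(10, 16), nn.ReLU())
        self.fc = nn.Linear(16, 1000)

    def forward(self, x):
        return self.fc(self.backbone(x))

model = TinyNet()

# Freeze everything, as set_parameter_requires_grad does when feature_extracting is True
for param in model.parameters():
    param.requires_grad = False

# A freshly created layer has requires_grad=True by default
model.fc = nn.Sequential(nn.Linear(model.fc.in_features, 102), nn.LogSoftmax(dim=1))

trainable = [name for name, p in model.named_parameters() if p.requires_grad]
print(trainable)

# dim=1 normalizes over classes: exp(log-probabilities) sums to 1 in every row
log_probs = model(torch.randn(4, 10))
row_sums = log_probs.exp().sum(dim=1)
print(row_sums)
```

Only the parameters of the new head show up as trainable, which is why the order matters: freeze first, then swap in the new layer.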

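The difference between model.state_dict() and model.parameters()

- model.state_dict() returns a dictionary that maps each parameter's name (including its layer) to its tensor, whereas model.parameters() returns a generator that yields only the parameters, without names.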
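A quick way to see the difference is to inspect both on a single small layer. This is only an illustrative sketch; nn.Linear(2, 3) is an arbitrary choice.

```python
import inspect
from torch import nn

layer = nn.Linear(2, 3)

# state_dict(): name -> tensor mapping
state = layer.state_dict()
print(list(state.keys()))
print(state['weight'].shape)

# parameters(): a generator of tensors, with no names attached
params = layer.parameters()
print(inspect.isgenerator(params))
shapes = [tuple(p.shape) for p in params]
print(shapes)
```

If you need names and parameters together while iterating, named_parameters() gives both.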

Steps

# Move the model to the selected device (GPU if available)
model_ft = model_ft.to(device)

# File that the best checkpoint is saved to
filename = 'checkpoint.pth'

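Define the optimizer and the loss function

# Collect the parameters to train; with feature_extract=True only the new fc layer qualifies
params_to_update = [p for p in model_ft.parameters() if p.requires_grad]
print("Params to learn:")
for name, param in model_ft.named_parameters():
    if param.requires_grad:
        print("\t", name)

# Define the optimizer
optimizer_ft = optim.Adam(params_to_update, lr=1e-2)
# Multiply the learning rate by 0.1 every 7 epochs
scheduler = optim.lr_scheduler.StepLR(optimizer_ft, step_size=7, gamma=0.1)

# The last layer already applies LogSoftmax, so use nn.NLLLoss instead of nn.CrossEntropyLoss;
# nn.CrossEntropyLoss is equivalent to LogSoftmax followed by nn.NLLLoss
criterion = nn.NLLLoss()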
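The claim that CrossEntropyLoss combines LogSoftmax and NLLLoss can be checked numerically. The logits and targets below are random placeholder values, not outputs of the flower model.

```python
import torch
from torch import nn

torch.manual_seed(0)
logits = torch.randn(4, 102)             # made-up raw scores for 4 samples
targets = torch.tensor([0, 5, 17, 101])  # made-up class indices

# Route 1: LogSoftmax in the model, NLLLoss as the criterion (what this post does)
loss_a = nn.NLLLoss()(nn.LogSoftmax(dim=1)(logits), targets)

# Route 2: raw logits straight into CrossEntropyLoss
loss_b = nn.CrossEntropyLoss()(logits, targets)

print(loss_a.item(), loss_b.item())
```

Applying CrossEntropyLoss on top of a model that already ends in LogSoftmax would apply the log-softmax twice, which is the mistake the comment above warns about.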

Define the training function

def train_model(model, dataloaders, criterion, optimizer, num_epochs=25, filename=filename):
    # Record the start time so the elapsed time can be reported
    since = time.time()
    best_acc = 0
    """
    Optional: resume from a saved checkpoint
    checkpoint = torch.load(filename)
    best_acc = checkpoint['best_acc']
    model.load_state_dict(checkpoint['state_dict'])
    optimizer.load_state_dict(checkpoint['optimizer'])
    model.class_to_idx = checkpoint['mapping']  (needs a 'mapping' entry, which the checkpoint saved below does not contain)
    """
    # Run on the selected GPU or CPU
    model.to(device)

    val_acc_history = []
    train_acc_history = []
    train_losses = []
    valid_losses = []
    # optimizer.param_groups is a list with one dict per parameter group (a single group here).
    # Each dict holds 'params' plus hyperparameters such as 'lr', 'betas', 'eps', 'weight_decay', 'amsgrad'.
    # Reading 'lr' from it tracks the learning rate as the scheduler changes it
    LRs = [optimizer.param_groups[0]['lr']]

    best_model_wts = copy.deepcopy(model.state_dict())

    for epoch in range(num_epochs):
        print('Epoch {}/{}'.format(epoch, num_epochs - 1))
        print('-' * 10)

        # Each epoch has a training phase and a validation phase
        for phase in ['train', 'valid']:
            if phase == 'train':
                print("Start")
                model.train()  # training mode
            else:
                model.eval()  # evaluation mode

            running_loss = 0.0
            running_corrects = 0

            # Go through all the data once
            for inputs, labels in dataloaders[phase]:
                # Move the batch to the selected device (CPU or GPU)
                inputs = inputs.to(device)
                labels = labels.to(device)

                # Zero the gradients
                optimizer.zero_grad()
                # Track gradients only while training. Under torch.set_grad_enabled(False), tensors
                # produced by the forward pass are not differentiable, so validation cannot update the weights
                with torch.set_grad_enabled(phase == 'train'):
                    outputs = model(inputs)
                    loss = criterion(outputs, labels)
                    # Index of the largest log-probability, i.e. the predicted class
                    _, preds = torch.max(outputs, 1)

                    # Update the weights only in the training phase
                    if phase == 'train':
                        loss.backward()
                        optimizer.step()

                # Accumulate the loss and the number of correct predictions
                running_loss += loss.item() * inputs.size(0)
                running_corrects += torch.sum(preds == labels)

            # Average loss over the epoch
            epoch_loss = running_loss / len(dataloaders[phase].dataset)
            # Accuracy over the epoch
            epoch_acc = running_corrects.double() / len(dataloaders[phase].dataset)

            # Elapsed time
            time_elapsed = time.time() - since
            print('Time elapsed {:.0f}m {:.0f}s'.format(time_elapsed // 60, time_elapsed % 60))
            print('{} Loss: {:.4f} Acc: {:.4f}'.format(phase, epoch_loss, epoch_acc))

            # Keep the best model seen so far
            if phase == 'valid' and epoch_acc > best_acc:
                best_acc = epoch_acc
                # Copy the current weights into best_model_wts
                best_model_wts = copy.deepcopy(model.state_dict())
                state = {
                    'state_dict': model.state_dict(),
                    'best_acc': best_acc,
                    'optimizer': optimizer.state_dict(),
                }
                torch.save(state, filename)
            if phase == 'valid':
                val_acc_history.append(epoch_acc)
                valid_losses.append(epoch_loss)
                # StepLR takes no metric (passing the loss is for ReduceLROnPlateau);
                # this uses the module-level scheduler defined earlier
                scheduler.step()
            if phase == 'train':
                train_acc_history.append(epoch_acc)
                train_losses.append(epoch_loss)

        print('Optimizer learning rate : {:.7f}'.format(optimizer.param_groups[0]['lr']))
        LRs.append(optimizer.param_groups[0]['lr'])
        print()

    time_elapsed = time.time() - since
    print('Training complete in {:.0f}m {:.0f}s'.format(time_elapsed // 60, time_elapsed % 60))
    print('Best val Acc: {:.4f}'.format(best_acc))

    # After training, use the best weights as the final model
    model.load_state_dict(best_model_wts)
    return model, val_acc_history, train_acc_history, valid_losses, train_losses, LRs
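The torch.set_grad_enabled context used inside train_model is the piece that separates the two phases. Here is a minimal sketch of its effect, using a throwaway nn.Linear model and random input rather than the real network.

```python
import torch
from torch import nn

model = nn.Linear(3, 2)
x = torch.randn(5, 3)

results = {}
for phase in ['train', 'valid']:
    with torch.set_grad_enabled(phase == 'train'):
        out = model(x)
    # Outputs computed with gradients disabled are not attached to the autograd graph
    results[phase] = out.requires_grad

print(results)
```

Besides protecting the weights, skipping the graph during validation also saves memory and time.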

Run the training

# model_ft is the fine-tuned pretrained model returned by train_model
model_ft, val_acc_history, train_acc_history, valid_losses, train_losses, LRs = train_model(
    model_ft, dataloaders, criterion, optimizer_ft, num_epochs=20)
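The checkpoint that train_model writes can later be used to resume, as the commented-out block inside the function hints. The following is a sketch of that round trip under simplifying assumptions: a tiny nn.Linear model, an in-memory buffer in place of 'checkpoint.pth', and a placeholder best_acc value.

```python
import io
import torch
from torch import nn, optim

model = nn.Linear(4, 2)
optimizer = optim.Adam(model.parameters(), lr=1e-2)

# Same three keys that train_model stores; best_acc is a placeholder value
state = {
    'state_dict': model.state_dict(),
    'best_acc': 0.5,
    'optimizer': optimizer.state_dict(),
}

buffer = io.BytesIO()  # in-memory stand-in for 'checkpoint.pth'
torch.save(state, buffer)
buffer.seek(0)

checkpoint = torch.load(buffer)

# Restore into freshly built objects, as a resumed run would
restored = nn.Linear(4, 2)
restored.load_state_dict(checkpoint['state_dict'])
restored_opt = optim.Adam(restored.parameters(), lr=1e-3)
restored_opt.load_state_dict(checkpoint['optimizer'])  # also restores the saved lr

print(checkpoint['best_acc'], restored_opt.param_groups[0]['lr'])
```

Loading the optimizer state matters when resuming, because it carries the learning rate and Adam's running statistics along with it.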

Code I had not used before

# Apply transforms to the images to achieve data augmentation
data_transforms = {
    'train': transforms.Compose([
        transforms.RandomRotation(45),  # rotate by a random angle between -45 and 45 degrees
        transforms.CenterCrop(224),  # crop a 224x224 patch from the center
        transforms.RandomHorizontalFlip(p=0.5),  # flip horizontally with probability 0.5
        transforms.RandomVerticalFlip(p=0.5),  # flip vertically with probability 0.5
        # arguments: brightness, contrast, saturation, hue
        transforms.ColorJitter(brightness=0.2, contrast=0.1, saturation=0.1, hue=0.1),
        transforms.RandomGrayscale(p=0.025),  # convert to grayscale with probability 0.025; the 3 channels then satisfy R=G=B
        transforms.ToTensor(),
        transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225]),  # per-channel mean, standard deviation
    ]),
    'valid': transforms.Compose([
        transforms.Resize(256),
        transforms.CenterCrop(224),
        transforms.ToTensor(),
        transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225]),
    ]),
}

# I did not have a GPU before, so I had never thought about device placement
train_on_gpu = torch.cuda.is_available()

# Report whether the GPU will be used
if not train_on_gpu:
    print('CUDA is not available. Training on CPU ...')
else:
    print('CUDA is available! Training on GPU ...')

device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

# Multiply the learning rate by 0.1 every 7 epochs
scheduler = optim.lr_scheduler.StepLR(optimizer_ft, step_size=7, gamma=0.1)
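To see the schedule that StepLR produces with these settings, the sketch below steps a scheduler for 15 pretend epochs and records the learning rate at the start of each. The one-layer model exists only to give the optimizer something to hold.

```python
import torch
from torch import nn, optim

model = nn.Linear(2, 1)
optimizer = optim.Adam(model.parameters(), lr=1e-2)
scheduler = optim.lr_scheduler.StepLR(optimizer, step_size=7, gamma=0.1)

lrs = []
for epoch in range(15):
    lrs.append(optimizer.param_groups[0]['lr'])
    optimizer.step()   # stands in for one epoch of training
    scheduler.step()   # StepLR.step() is called without a metric

print(lrs[0], lrs[7], lrs[14])
```

The rate stays at its initial value for epochs 0 to 6, drops by a factor of 10 at epoch 7, and drops again at epoch 14.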