Building LeNet5 with PyTorch

1. Introduction to the LeNet Neural Network

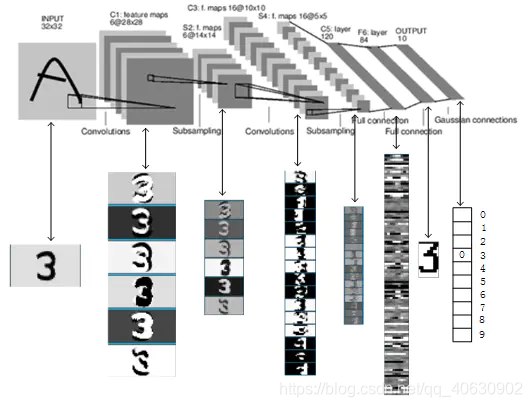

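LeNet was proposed by Yann LeCun, one of the three "godfathers" of deep learning, who is also known as the father of convolutional neural networks (CNN, Convolutional Neural Networks). LeNet was mainly used to recognize and classify handwritten characters, and it was put into production in banks in the United States. LeNet established the basic structure of the CNN: many components of today's neural networks can already be seen in its architecture, such as convolutional layers, pooling layers, and nonlinear activation layers (the original used tanh-style squashing functions, whereas modern networks, including the code below, typically use ReLU). Although LeNet was proposed back in the 1990s, the lack of large-scale training data and the limited computing hardware of the time meant that it did not perform well on complex problems. Its structure is fairly simple, which makes it a good fit for getting started with neural networks.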

2. LeNet Network Architecture
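The feature-map sizes in LeNet5 follow from the standard output-size formula for convolution and pooling, out = floor((in + 2·padding − kernel) / stride) + 1. The short sketch below traces a 1x32x32 input through the layers built in section 3 (5x5 convolutions with stride 1, 2x2 pooling with stride 2, no padding). The helper `conv_out` and the `layers` table are illustrative names introduced here, not part of the original code.

```python
def conv_out(size: int, kernel: int, stride: int = 1, padding: int = 0) -> int:
    """Spatial output size of a convolution or pooling window (floor division)."""
    return (size + 2 * padding - kernel) // stride + 1

# (layer name, output channels, kernel size, stride), mirroring the section 3 code
layers = [
    ("C1 conv 5x5", 6, 5, 1),
    ("S2 pool 2x2", 6, 2, 2),
    ("C3 conv 5x5", 16, 5, 1),
    ("S4 pool 2x2", 16, 2, 2),
    ("C5 conv 5x5", 120, 5, 1),
]

size = 32  # the input is a single-channel 32x32 image
trace = []
for name, channels, kernel, stride in layers:
    size = conv_out(size, kernel, stride)
    trace.append((name, channels, size))
    print(f"{name}: {channels}@{size}x{size}")

# C5 yields 120@1x1, which is flattened to a 120-d vector;
# F6 then maps 120 -> 84 and the output layer maps 84 -> 10.
```

This reproduces the shape comments in the code: 6@28x28, 6@14x14, 16@10x10, 16@5x5, and 120@1x1. Because C5's output is exactly 1x1, flattening it gives the 120-dimensional vector that the first fully connected layer expects.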

3. Building LeNet5 with PyTorch

import torch
import torch.nn as nn
from collections import OrderedDict

# Build each layer with torch.nn.Sequential and collections.OrderedDict,
# so that every sub-layer gets a readable name

class C1(nn.Module):
    def __init__(self):
        super(C1, self).__init__()
        self.c1 = nn.Sequential(OrderedDict([
            # 1 input channel, 6 output channels, 5x5 kernel; stride defaults to 1
            ('c1', nn.Conv2d(1, 6, 5)),  # 6@28x28
            ('relu1', nn.ReLU()),
            # the two 2s are the pooling window size and the stride; channel count is unchanged
            ('s2', nn.MaxPool2d(2, 2))  # 6@14x14
        ]))

    def forward(self, img):
        output = self.c1(img)  # input is 1x32x32
        return output

class C3(nn.Module):
    def __init__(self):
        super(C3, self).__init__()
        self.c3 = nn.Sequential(OrderedDict([
            ('c3', nn.Conv2d(6, 16, 5)),  # 16@10x10
            ('relu2', nn.ReLU()),
            ('s4', nn.MaxPool2d(2, 2))  # 16@5x5
        ]))

    def forward(self, img):
        output = self.c3(img)
        return output

class C5(nn.Module):
    def __init__(self):
        super(C5, self).__init__()
        self.c5 = nn.Sequential(OrderedDict([
            ('c5', nn.Conv2d(16, 120, 5)),  # 120@1x1
            ('relu3', nn.ReLU())
        ]))

    def forward(self, img):
        output = self.c5(img)
        return output

class F6(nn.Module):
    def __init__(self):
        super(F6, self).__init__()
        self.f6 = nn.Sequential(OrderedDict([
            ('f4', nn.Linear(120, 84)),  # fully connected: 120 -> 84
            ('relu4', nn.ReLU())
        ]))

    def forward(self, img):
        output = self.f6(img)
        return output

class F7(nn.Module):
    def __init__(self):
        super(F7, self).__init__()
        self.f7 = nn.Sequential(OrderedDict([
            ('f7', nn.Linear(84, 10)),  # fully connected: 84 -> 10 classes
            ('sig8', nn.LogSoftmax(dim=-1))  # outputs log-probabilities
        ]))

    def forward(self, img):
        output = self.f7(img)
        return output

class LeNet5(nn.Module):
    """
    Input - 1x32x32
    Output - 10
    """
    def __init__(self):
        super(LeNet5, self).__init__()
        self.c1 = C1()
        self.c3_1 = C3()
        self.c3_2 = C3()
        self.c5 = C5()
        self.f6 = F6()
        self.f7 = F7()

    def forward(self, img):
        output = self.c1(img)
        # two parallel C3 branches see the same input; their outputs are summed
        x = self.c3_1(output)
        output = self.c3_2(output)
        output += x
        output = self.c5(output)
        output = output.view(img.size(0), -1)  # flatten the conv output into a 120-d vector
        output = self.f6(output)  # fully connected layer: 120 -> 84
        output = self.f7(output)  # fully connected layer: 84 -> 10, the per-class predictions
        return output

if __name__ == '__main__':
    model = LeNet5()  # instantiate the network
    print(model)
    in_put = torch.rand(1, 1, 32, 32)  # a random single-channel 32x32 input image
    out = model(in_put)
    print(out)  # the index with the largest value is the predicted class

Running the script prints the network structure followed by the output for the random input:

LeNet5(
  (c1): C1(
    (c1): Sequential(
      (c1): Conv2d(1, 6, kernel_size=(5, 5), stride=(1, 1))
      (relu1): ReLU()
      (s2): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
    )
  )
  (c3_1): C3(
    (c3): Sequential(
      (c3): Conv2d(6, 16, kernel_size=(5, 5), stride=(1, 1))
      (relu2): ReLU()
      (s4): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
    )
  )
  (c3_2): C3(
    (c3): Sequential(
      (c3): Conv2d(6, 16, kernel_size=(5, 5), stride=(1, 1))
      (relu2): ReLU()
      (s4): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
    )
  )
  (c5): C5(
    (c5): Sequential(
      (c5): Conv2d(16, 120, kernel_size=(5, 5), stride=(1, 1))
      (relu3): ReLU()
    )
  )
  (f6): F6(
    (f6): Sequential(
      (f4): Linear(in_features=120, out_features=84, bias=True)
      (relu4): ReLU()
    )
  )
  (f7): F7(
    (f7): Sequential(
      (f7): Linear(in_features=84, out_features=10, bias=True)
      (sig8): LogSoftmax()
    )
  )
)
tensor([[-2.2676, -2.2554, -2.3434, -2.3523, -2.4532, -2.2200, -2.1823, -2.3595,
         -2.3212, -2.2984]], grad_fn=<LogSoftmaxBackward>)

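Since the last layer is `LogSoftmax`, the printed values are log-probabilities, and the loss that pairs with them in PyTorch is `nn.NLLLoss`. The sketch below runs one SGD training step and then reads off predictions with `argmax`. To stay self-contained it uses a compact `nn.Sequential` stand-in with the same layer sizes as the `LeNet5` class above but a single C3 branch (no summed `c3_1`/`c3_2` pair); the random batch, labels, batch size of 4, and learning rate 0.01 are placeholders, not values from the article.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Simplified stand-in for the LeNet5 class above: same layer sizes, single C3 branch
model = nn.Sequential(
    nn.Conv2d(1, 6, 5), nn.ReLU(), nn.MaxPool2d(2, 2),   # 6@14x14
    nn.Conv2d(6, 16, 5), nn.ReLU(), nn.MaxPool2d(2, 2),  # 16@5x5
    nn.Conv2d(16, 120, 5), nn.ReLU(),                    # 120@1x1
    nn.Flatten(),                                        # 120-d vector
    nn.Linear(120, 84), nn.ReLU(),                       # 84
    nn.Linear(84, 10), nn.LogSoftmax(dim=-1),            # 10 log-probabilities
)

# Placeholder batch: 4 random single-channel 32x32 images with random labels 0-9
images = torch.rand(4, 1, 32, 32)
labels = torch.randint(0, 10, (4,))

criterion = nn.NLLLoss()  # expects log-probabilities, matching the LogSoftmax output
optimizer = torch.optim.SGD(model.parameters(), lr=0.01)

# One training step: forward, loss, backward, parameter update
log_probs = model(images)
loss = criterion(log_probs, labels)
optimizer.zero_grad()
loss.backward()
optimizer.step()

probs = log_probs.exp()          # convert back to probabilities; each row sums to 1
preds = log_probs.argmax(dim=1)  # index of the largest value = predicted class
n_params = sum(p.numel() for p in model.parameters())
print(f"loss={loss.item():.4f} preds={preds.tolist()} params={n_params}")
```

Using `LogSoftmax` followed by `NLLLoss` computes the same loss as dropping the final `LogSoftmax` and applying `nn.CrossEntropyLoss` to the raw scores, so either pairing works as long as the two are not mixed.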