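- Read the data

- Build the stop-word list

- Load a custom segmentation dictionary

- Segment the text, then drop single-character tokens, numbers, and stop words

- Save the words and their frequencies to a local file, cipin.csv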

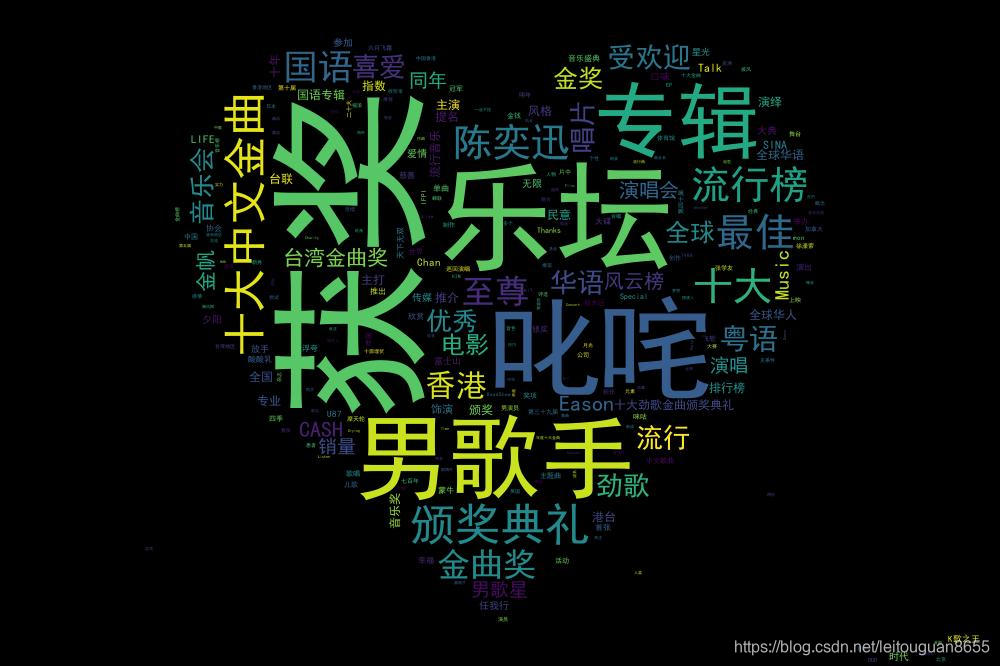

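- Visualize the top keywords as a horizontal bar chart, with keywords on the vertical axis and their frequencies on the horizontal axis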

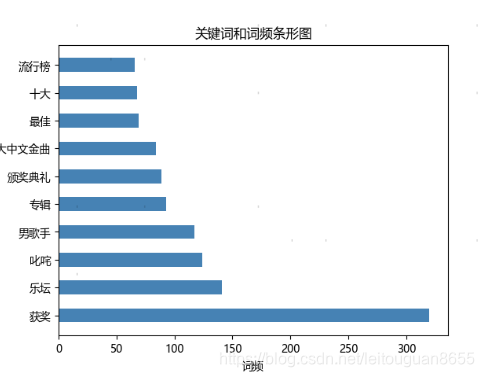

- Draw the word cloud
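The three filtering rules in the segmentation step can be sketched on their own, without jieba. This is a minimal illustration only: `keep_token`, `sample_tokens`, and `sample_stopwords` are hypothetical names and data, standing in for the segmenter's output and the contents of stopwords.txt.

```python
def keep_token(token, stopwords):
    """Return True if the token survives all three filters."""
    if len(token) <= 1:   # drop single-character tokens
        return False
    if token.isdigit():   # drop purely numeric tokens
        return False
    return token not in stopwords  # drop stop words


# Hypothetical sample data (not from the original data.txt or stopwords.txt)
sample_tokens = ["我们", "的", "2023", "音乐", "喜欢", "音乐", "但是"]
sample_stopwords = {"我们", "但是"}

filtered = [t for t in sample_tokens if keep_token(t, sample_stopwords)]
print(filtered)
```

Holding the stop words in a `set` keeps each membership test cheap, which may matter when the stop-word file is long.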

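#!/usr/bin/python
# -*- coding: utf-8 -*-
"""
@author:
@contact:
@time:
@context: python nlp series - drawing a word cloud (Chinese)
Read the data
Build the stop-word list
Load a custom segmentation dictionary
Segment the text, then drop single-character tokens, numbers, and stop words
Save the words and their frequencies to a local file, cipin.csv
Visualize the top keywords as a horizontal bar chart
Draw the word cloud
"""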

from wordcloud import WordCloud
import jieba
import jieba.posseg as jp
import matplotlib.pyplot as plt
from pandas import DataFrame

# Use a font that can render Chinese characters in the plots
plt.rcParams['font.sans-serif'] = ['Microsoft YaHei']

# Read the data
with open('data.txt', encoding='utf-8', errors='ignore') as f:
    text = f.read()

# Build the stop-word list
def get_custom_stopwords(stop_words_file):
    # Read one stop word per line, skipping blank lines
    with open(stop_words_file, encoding='utf-8') as f:
        stopwords = f.read()
    stopwords_list = stopwords.split('\n')
    custom_stopwords_list = [i.strip() for i in stopwords_list if i.strip()]
    return custom_stopwords_list

stop_words_file = "stopwords.txt"
stopwords = get_custom_stopwords(stop_words_file)

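# Load the custom segmentation dictionary (one word per line)
with open('fenci_name.txt', encoding='utf-8', errors='ignore') as f:
    fenci_name = f.read().split('\n')
for line in fenci_name:
    if line.strip():  # skip blank lines
        jieba.add_word(line.strip())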

# Segment the text; drop single-character tokens, numbers, and stop words
words = [word.word for word in jp.cut(text) if len(word.word) > 1
         and not word.word.isdigit() and word.word not in stopwords]

# wordslist holds the distinct words
wordslist = list(set(words))
# cipin holds the frequency of each word in wordslist
cipin = []
for item in wordslist:
    cipin.append(words.count(item))

# Combine the words and their frequencies
data = {"words": wordslist, "cipin": cipin}
df = DataFrame(data)
# Sort by frequency, descending
df = df.sort_values(by="cipin", ascending=False)
# Save the words and frequencies to a local file, cipin.csv
df.to_csv('cipin.csv')

# Visualize the 10 most frequent keywords as a horizontal bar chart:
# keywords on the vertical axis, frequencies on the horizontal axis
top10 = df.iloc[:10]
p1 = plt.barh(top10['words'], top10['cipin'], height=0.5, color='steelblue')
plt.title('关键词和词频条形图')  # set the title and axis labels
plt.xlabel('词频')
plt.ylabel('关键词')
plt.show()

# Draw the word cloud
result = " ".join(words)  # join the words with spaces
wc = WordCloud(
    font_path='simhei.ttf',
    max_words=20000,
    collocations=False,
    mask=plt.imread('4.jpg')  # background (mask) image
)
wc.generate(result)
wc.to_file('2.jpg')  # save the image

plt.figure('陈奕迅')  # show the image; the argument is the figure window's name
plt.imshow(wc)
plt.axis('off')  # hide the axes
plt.show()
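The script counts frequencies by calling `words.count` once per distinct word, which rescans the whole list each time. As an alternative, and not the method used in the script, `collections.Counter` from the standard library does the counting in a single pass. The sketch below uses a hypothetical `sample_words` list in place of the real segmented text.

```python
from collections import Counter

# Hypothetical filtered tokens (stand-in for the script's `words` list)
sample_words = ["音乐", "喜欢", "音乐", "演唱会", "喜欢", "音乐"]

freq = Counter(sample_words)   # maps each word to its number of occurrences
top = freq.most_common(2)      # (word, count) pairs, most frequent first
print(top)
```

A mapping like `freq` could probably also be handed to `WordCloud.generate_from_frequencies` instead of re-joining the words into one string, though the script above does not do this.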

Keyword frequency chart:

Word cloud: