Lesson 4

Overview

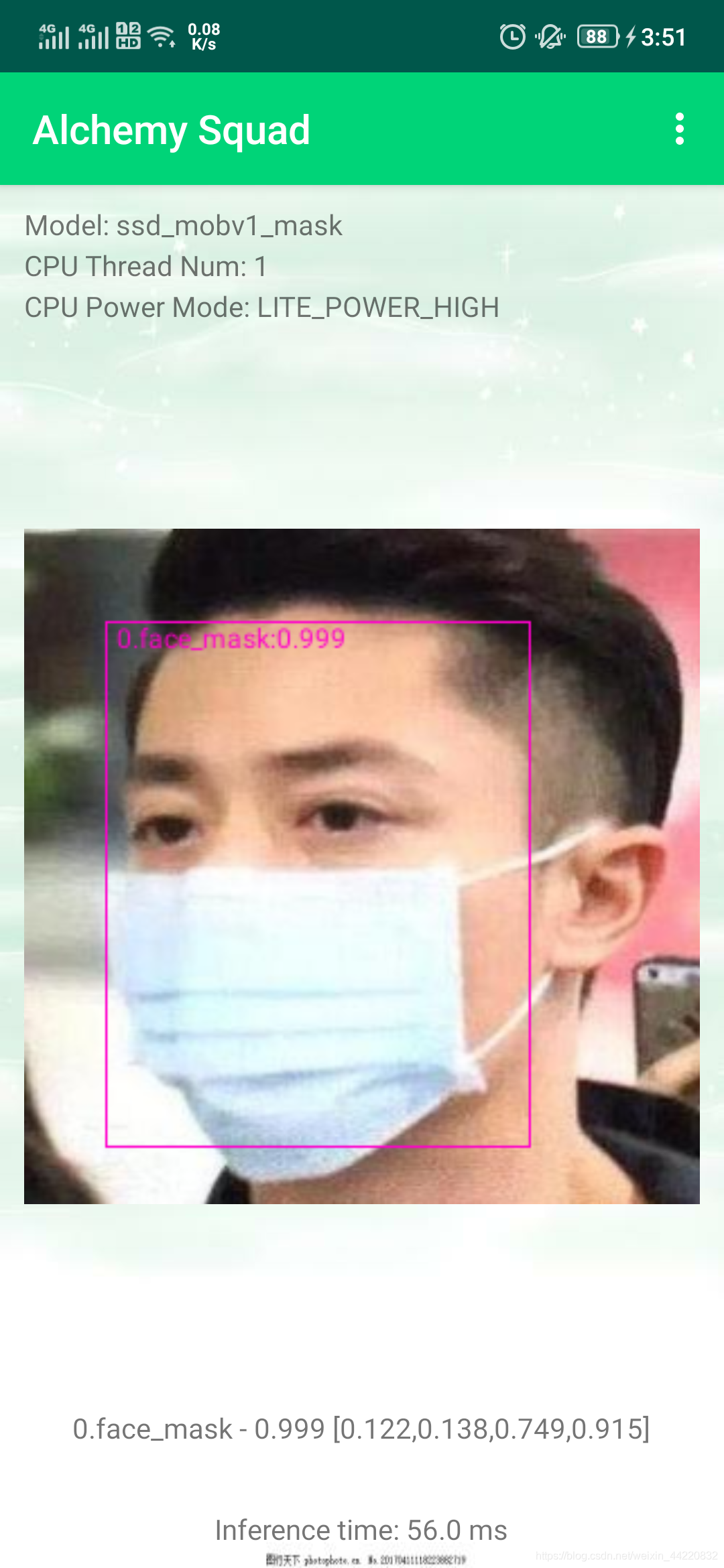

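Lesson 4 covers deploying a model to Android with Paddle Lite, using Android Studio.

Dataset preparation

This lesson uses a face-mask object detection model as its deployment example.

Building the dataset starts with data annotation; see the notes for Lesson 2.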

Dataset splitting

Core command:

```
!paddlex --split_dataset --format VOC --dataset_dir masks/VOC_MASK --val_value 0.2 --test_value 0.1
```

This splits the dataset into training, validation and test sets in a 7:2:1 ratio.
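For intuition, the 7:2:1 split can be sketched in plain Python. This is not PaddleX's implementation: the function name `split_samples`, the fixed shuffle seed, and the rounding rule (validation and test sizes rounded down, the remainder going to training) are assumptions made for illustration.

```python
import random


def split_samples(names, val_ratio=0.2, test_ratio=0.1, seed=0):
    """Shuffle sample names and split them into train/val/test lists.

    Hypothetical helper: val and test sizes are rounded down and every
    remaining sample goes to the training set.
    """
    names = list(names)
    random.Random(seed).shuffle(names)  # fixed seed -> reproducible split
    n_val = int(len(names) * val_ratio)
    n_test = int(len(names) * test_ratio)
    val = names[:n_val]
    test = names[n_val:n_val + n_test]
    train = names[n_val + n_test:]
    return train, val, test


if __name__ == "__main__":
    samples = ["img_{:03d}".format(i) for i in range(100)]
    train, val, test = split_samples(samples)
    print(len(train), len(val), len(test))  # 70 20 10
```

With 100 samples this yields 70/20/10, matching `--val_value 0.2 --test_value 0.1`.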

Model training

See the notes for Lesson 3.

Generating the .nb model file with Paddle Lite

```
# Install the Paddle Lite dependency
!pip install paddlelite

# Convert the model for Paddle Lite deployment
# --valid_targets: use arm for ordinary phones, or npu,arm for Huawei phones with an NPU
!paddle_lite_opt \
    --model_file=inference/ssd_mobilenet_v1_300_120e_voc/model.pdmodel \
    --param_file=inference/ssd_mobilenet_v1_300_120e_voc/model.pdiparams \
    --optimize_out=./inference/ssd_mobilenet_v1_300_120e_voc \
    --optimize_out_type=naive_buffer \
    --valid_targets=arm
# For Huawei NPU phones: --valid_targets=npu,arm
```
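If you would rather drive the conversion from a Python script than from a notebook cell, the same command can be assembled as an argument list and launched with `subprocess`. A sketch, assuming `paddle_lite_opt` is on your PATH after `pip install paddlelite`; the helper name `build_opt_command` is hypothetical, and the flags are exactly the ones used above.

```python
def build_opt_command(model_dir, use_npu=False):
    """Assemble the paddle_lite_opt call used above (hypothetical helper).

    model_dir holds model.pdmodel / model.pdiparams. With naive_buffer output,
    opt appends ".nb" to --optimize_out, so the result is written as <model_dir>.nb.
    """
    targets = "npu,arm" if use_npu else "arm"
    return [
        "paddle_lite_opt",
        "--model_file={}/model.pdmodel".format(model_dir),
        "--param_file={}/model.pdiparams".format(model_dir),
        "--optimize_out={}".format(model_dir),
        "--optimize_out_type=naive_buffer",
        "--valid_targets={}".format(targets),
    ]


if __name__ == "__main__":
    cmd = build_opt_command("inference/ssd_mobilenet_v1_300_120e_voc")
    print(" ".join(cmd))
    # To actually run it: import subprocess; subprocess.run(cmd, check=True)
```

Passing a list (rather than one shell string) avoids quoting problems if a path contains spaces.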

Android deployment

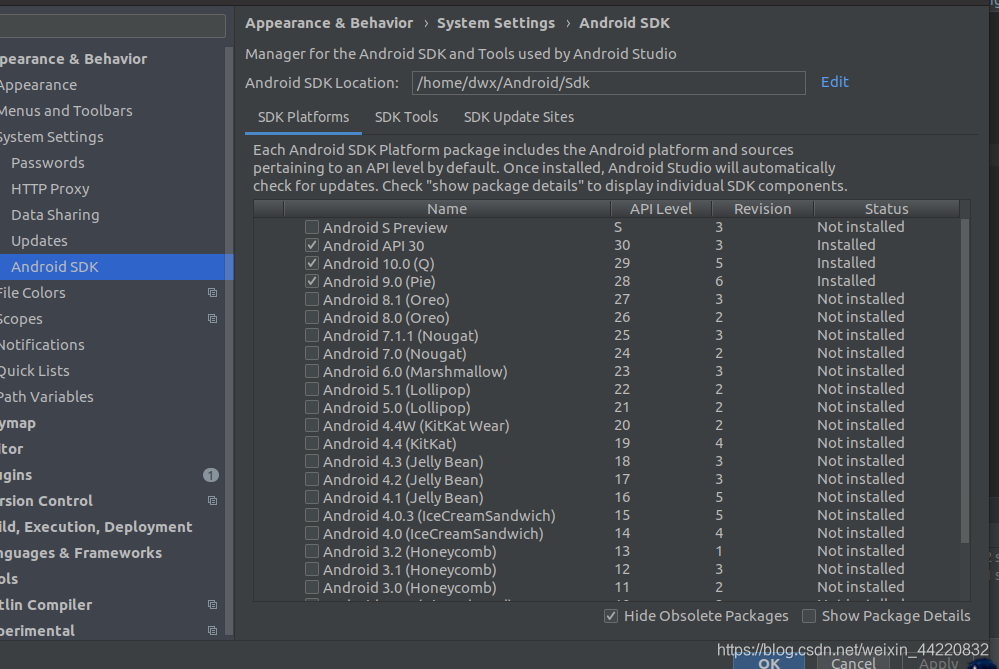

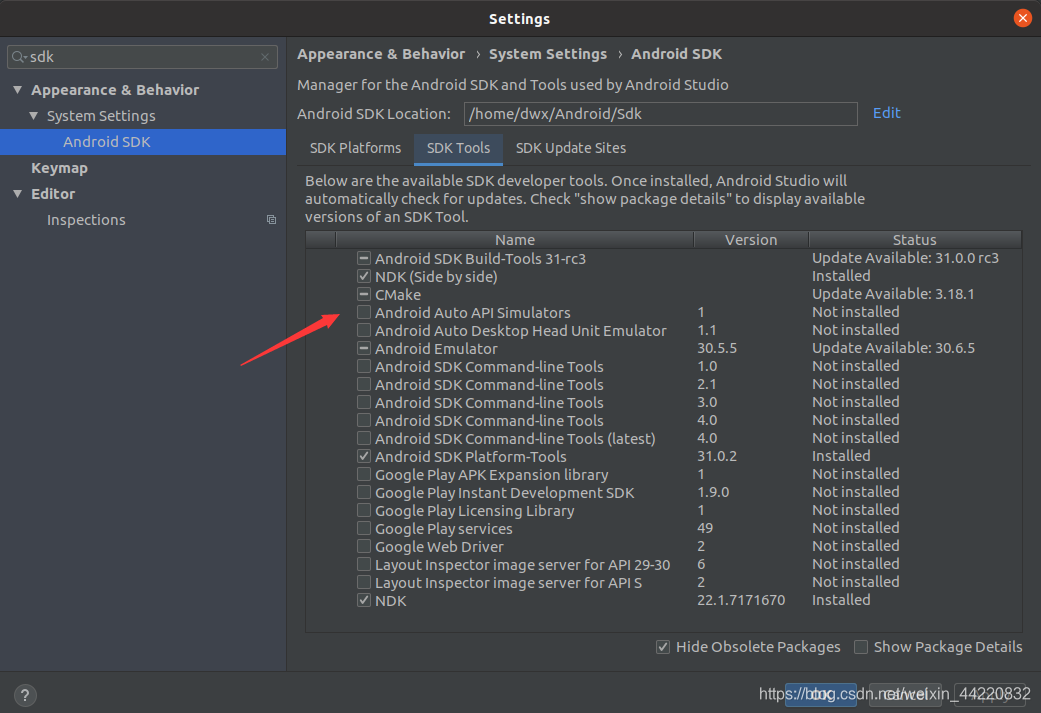

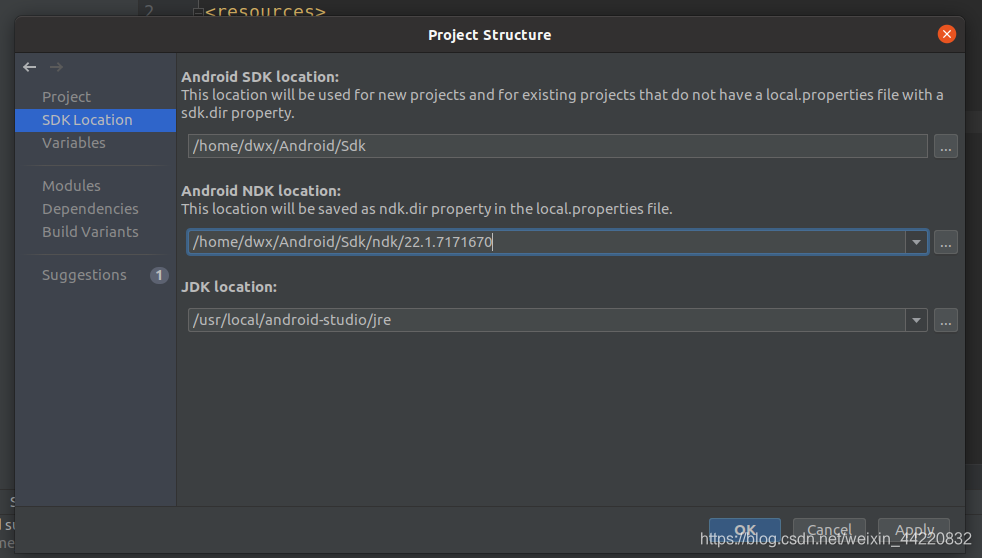

Setting up the Android Studio environment

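```bash
# Install the 32-bit libraries required by Android Studio on 64-bit Ubuntu
sudo apt-get install libc6:i386 libncurses5:i386 libstdc++6:i386 lib32z1 libbz2-1.0:i386

# Check the Java version (JDK 8 only accepts the single-dash form)
java -version

# Install OpenJDK 8
sudo apt-get install openjdk-8-jdk

# If several Java versions are installed, enter the number of the desired option to switch
# (not needed on a first install)
sudo update-alternatives --config java
```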
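OpenJDK 8 reports its version in the old `1.8.0_xxx` form, while Java 9 and later report `11.0.x`, `17` and so on, so a script that checks for Java 8 has to handle both. A small sketch; the function name `java_major_version` is hypothetical, and it expects the banner text that `java -version` prints.

```python
import re


def java_major_version(version_output):
    """Extract the Java major version from `java -version` output."""
    match = re.search(r'version "([^"]+)"', version_output)
    if match is None:
        raise ValueError("no version string found")
    parts = match.group(1).split(".")
    # Java 8 and earlier use "1.<major>..."; Java 9+ start with the major number.
    if parts[0] == "1" and len(parts) > 1:
        return int(parts[1])
    return int(parts[0].split("-")[0])  # strip suffixes such as "9-ea"


if __name__ == "__main__":
    print(java_major_version('openjdk version "1.8.0_292"'))  # 8
    print(java_major_version('openjdk version "11.0.11" 2021-04-20'))  # 11
```

To check the installed JDK, pass it the `stderr` of `subprocess.run(["java", "-version"], capture_output=True, text=True)`; `java -version` writes its banner to stderr, not stdout.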

```bash
# Install cmake and ninja

# Steps 1 and 2 must run as root: enter a root shell first
sudo su

# 1. Install basic software (per the official docs; run as root)
apt update
apt-get install -y --no-install-recommends \
    gcc g++ git make wget python unzip adb curl

# 2. Install cmake 3.10 or above (per the official docs; run as root)
wget -c https://mms-res.cdn.bcebos.com/cmake-3.10.3-Linux-x86_64.tar.gz && \
    tar xzf cmake-3.10.3-Linux-x86_64.tar.gz && \
    mv cmake-3.10.3-Linux-x86_64 /opt/cmake-3.10 && \
    ln -s /opt/cmake-3.10/bin/cmake /usr/bin/cmake && \
    ln -s /opt/cmake-3.10/bin/ccmake /usr/bin/ccmake

# Leave the root shell before step 3
exit

# 3. Install ninja-build (as a normal user, via sudo)
sudo apt-get install ninja-build
```
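The steps above require CMake 3.10 or newer plus ninja. A quick sanity check, sketched in Python under the assumption that the first line of `cmake --version` looks like `cmake version 3.10.3`; `cmake_version_ok` is a hypothetical helper.

```python
import re
import shutil


def cmake_version_ok(version_output, minimum=(3, 10)):
    """Return True if `cmake --version` output reports at least `minimum`."""
    match = re.search(r"cmake version (\d+)\.(\d+)", version_output)
    if match is None:
        return False
    return (int(match.group(1)), int(match.group(2))) >= minimum


if __name__ == "__main__":
    print(cmake_version_ok("cmake version 3.10.3"))  # True
    print(cmake_version_ok("cmake version 3.5.1"))   # False
    # shutil.which returns None when the tool is not on PATH
    for tool in ("cmake", "ninja"):
        print(tool, "found at", shutil.which(tool))
```

Comparing `(major, minor)` tuples avoids the classic string-comparison bug where `"3.5" > "3.10"`.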

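An Ubuntu virtual machine is used mainly because, if the environment gets broken or something else goes wrong, you can simply delete the VM and reinstall it.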

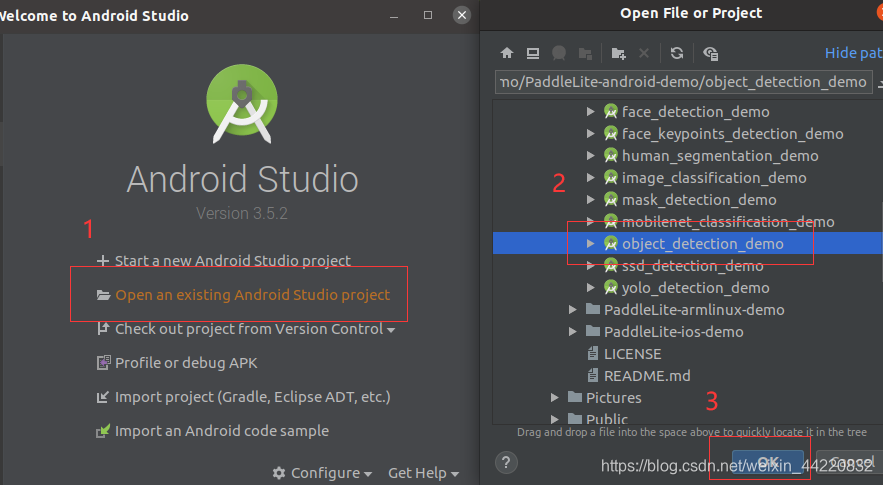

Importing Paddle-Lite-Demo

```bash
# Clone the demo repository
git clone https://gitee.com/paddlepaddle/Paddle-Lite-Demo.git
```

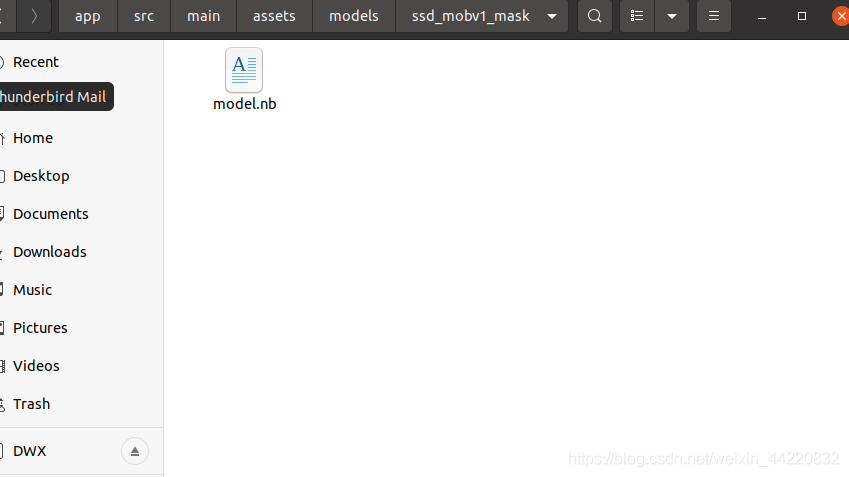

Copying the model: in the PaddleLite-android-demo/object_detection_demo/app/src/main/assets/models directory, create a folder named ssd_mobv1_mask, copy the .nb file generated earlier into it, and rename it to model.nb, as shown below.
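The copy-and-rename step can also be scripted. A minimal sketch, assuming the conversion above wrote `inference/ssd_mobilenet_v1_300_120e_voc.nb` and the demo lives at the path given in the text; `install_nb_model` is a hypothetical helper name.

```python
import shutil
from pathlib import Path


def install_nb_model(nb_file, models_dir, folder="ssd_mobv1_mask"):
    """Copy the converted .nb file to <models_dir>/<folder>/model.nb."""
    target_dir = Path(models_dir) / folder
    target_dir.mkdir(parents=True, exist_ok=True)  # create ssd_mobv1_mask if missing
    target = target_dir / "model.nb"
    shutil.copyfile(nb_file, target)
    return target


if __name__ == "__main__":
    src = Path("inference/ssd_mobilenet_v1_300_120e_voc.nb")
    models = Path("PaddleLite-android-demo/object_detection_demo/app/src/main/assets/models")
    if src.exists():
        print("copied to", install_nb_model(src, models))
```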

Initial project screen

Lesson 5

Overview

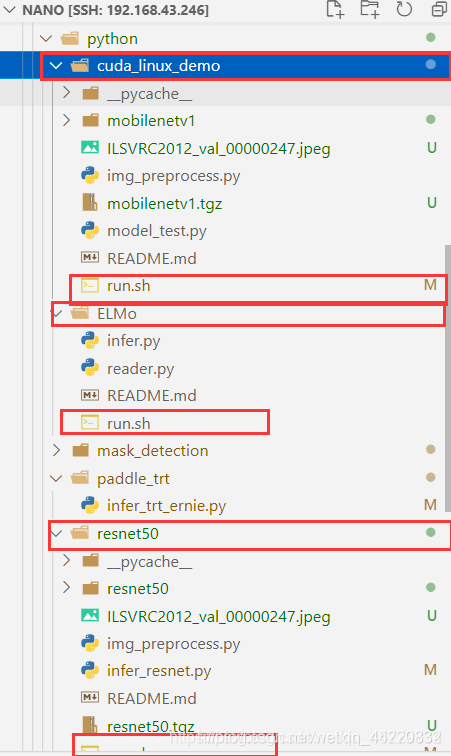

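Lesson 5 covers deployment on Horizon Robotics hardware and the NVIDIA Jetson Nano.

For Jetson Nano setup, see the configuration guide.

Download the official prebuilt inference library for the Jetson Nano directly.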

Install the wheel:

```bash
pip3 install paddlepaddle_gpu-2.1.1-cp36-cp36m-linux_aarch64.whl
```

Then start python3 and run the installation check:

```python
import paddle

paddle.fluid.install_check.run_check()
```

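Testing Paddle Inference

(1) Clone Paddle-Inference-Demo:

```bash
git clone https://github.com/PaddlePaddle/Paddle-Inference-Demo.git
```

(2) Run the GPU inference demos to confirm they work.

Note: in every subfolder, the `python` in the final command of run.sh must be changed to `python3`.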
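Editing every run.sh by hand is tedious. A sketch that patches them all in one pass, assuming the command to change starts at the beginning of a line (e.g. `python xxx.py ...`); `patch_run_scripts` is a hypothetical helper, and lines already using `python3` are left alone.

```python
import re
from pathlib import Path

# A line-initial `python` followed by whitespace (so `python3` is not matched).
PYTHON_CMD = re.compile(r"^python(?=\s)", flags=re.MULTILINE)


def patch_run_scripts(root):
    """Replace a leading `python` with `python3` in every run.sh under root.

    Returns the list of files that were changed.
    """
    root = Path(root)
    if not root.is_dir():
        return []
    changed = []
    for script in root.rglob("run.sh"):
        text = script.read_text()
        new_text = PYTHON_CMD.sub("python3", text)
        if new_text != text:
            script.write_text(new_text)
            changed.append(script)
    return changed


if __name__ == "__main__":
    for path in patch_run_scripts("Paddle-Inference-Demo"):
        print("patched", path)
```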

Deploying your own object detection model

```python
import time

import cv2
import numpy as np
import yaml
from paddle.inference import Config, PrecisionType, create_predictor


# ---------------- Image preprocessing ---------------- #
def resize(img, target_size):
    """Resize the image to target_size x target_size."""
    if not isinstance(img, np.ndarray):
        raise TypeError('image type is not numpy.')
    im_shape = img.shape
    im_scale_x = float(target_size) / float(im_shape[1])
    im_scale_y = float(target_size) / float(im_shape[0])
    return cv2.resize(img, None, None, fx=im_scale_x, fy=im_scale_y)


def normalize(img, mean, std):
    img = img / 255.0
    mean = np.array(mean)[np.newaxis, np.newaxis, :]
    std = np.array(std)[np.newaxis, np.newaxis, :]
    img -= mean
    img /= std
    return img


def preprocess(img, img_size):
    mean = [0.485, 0.456, 0.406]
    std = [0.229, 0.224, 0.225]
    img = resize(img, img_size)
    img = img[:, :, ::-1].astype('float32')  # BGR -> RGB
    img = normalize(img, mean, std)
    img = img.transpose((2, 0, 1))  # HWC -> CHW
    return img[np.newaxis, :]  # add the batch dimension: NCHW


# ---------------- Model configuration and prediction ---------------- #
def predict_config(model_file, params_file):
    """Create a predictor from a model structure file and a model parameter file."""
    config = Config()
    config.set_prog_file(model_file)
    config.set_params_file(params_file)
    # Config uses the CPU by default; enable the GPU with 500 MB initial memory on GPU 0.
    config.enable_use_gpu(500, 0)
    # Enable IR optimization and memory optimization.
    config.switch_ir_optim()
    config.enable_memory_optim()
    config.enable_tensorrt_engine(workspace_size=1 << 30, precision_mode=PrecisionType.Float32,
                                  max_batch_size=1, min_subgraph_size=5,
                                  use_static=False, use_calib_mode=False)
    return create_predictor(config)


def predict(predictor, inputs):
    """Feed inputs (in the order of predictor.get_input_names()) and return all outputs."""
    for i, name in enumerate(predictor.get_input_names()):
        input_tensor = predictor.get_input_handle(name)
        input_tensor.reshape(inputs[i].shape)
        input_tensor.copy_from_cpu(inputs[i].copy())
    predictor.run()
    results = []
    for name in predictor.get_output_names():
        output_tensor = predictor.get_output_handle(name)
        results.append(output_tensor.copy_to_cpu())
    return results


# ---------------- Postprocessing ---------------- #
def draw_bbox_image(frame, result, label_list, threshold=0.5):
    for res in result:
        cat_id, score, bbox = res[0], res[1], res[2:]
        if score < threshold:
            continue
        # OpenCV drawing functions need integer pixel coordinates
        xmin, ymin, xmax, ymax = [int(v) for v in bbox]
        cv2.rectangle(frame, (xmin, ymin), (xmax, ymax), (255, 0, 255), 2)
        print('category id is {}, bbox is {}'.format(cat_id, bbox))
        try:
            label_id = label_list[int(cat_id)]
            # cv2.putText(image, text, (x, y), font, scale, (b, g, r), thickness)
            cv2.putText(frame, label_id, (xmin, ymin - 2), cv2.FONT_HERSHEY_SIMPLEX, 0.5, (255, 0, 0), 2)
            cv2.putText(frame, str(round(float(score), 2)), (xmin - 35, ymin - 2),
                        cv2.FONT_HERSHEY_SIMPLEX, 0.5, (0, 255, 0), 2)
        except IndexError:
            # class id outside label_list: skip the text labels
            pass


if __name__ == '__main__':
    # Read the label list from infer_cfg.yml
    with open('yolov3_r50vd_dcn_270e_coco/infer_cfg.yml') as f:
        yaml_reader = yaml.safe_load(f)
    label_list = yaml_reader['label_list']
    print(label_list)

    # Model file paths
    model_file = "./yolov3_r50vd_dcn_270e_coco/model.pdmodel"
    params_file = "./yolov3_r50vd_dcn_270e_coco/model.pdiparams"
    # Initialize the predictor
    predictor = predict_config(model_file, params_file)

    cap = cv2.VideoCapture(0)
    # Initialize the image-size parameters from the first frame
    ret, img = cap.read()
    if not ret:
        raise RuntimeError('cannot read from camera 0')
    im_size = 224
    scale_factor = np.array([im_size * 1. / img.shape[0], im_size * 1. / img.shape[1]]).reshape((1, 2)).astype(np.float32)
    im_shape = np.array([im_size, im_size]).reshape((1, 2)).astype(np.float32)

    while True:
        ret, frame = cap.read()
        if not ret:
            break
        data = preprocess(frame, im_size)
        time_start = time.time()
        # Assumes get_input_names() reports the inputs as im_shape, image, scale_factor
        result = predict(predictor, [im_shape, data, scale_factor])
        print('Time Cost: {} s'.format(time.time() - time_start))
        draw_bbox_image(frame, result[0], label_list, threshold=0.1)
        cv2.imshow("frame", frame)
        if cv2.waitKey(1) & 0xFF == ord('q'):
            break

    cap.release()
    cv2.destroyAllWindows()
```
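The tensors around the predictor can be sanity-checked without a camera or GPU. The pure-Python sketch below mirrors two pieces of the script: how `im_shape` and `scale_factor` are derived from the frame size, and how `draw_bbox_image` reads each output row as `[class_id, score, xmin, ymin, xmax, ymax]` and keeps rows at or above the threshold. The helper names are hypothetical, and the row layout is the one the script assumes, not something verified against every exported model.

```python
def make_scale_inputs(frame_h, frame_w, im_size):
    """Mirror of the script: im_shape is the network input size and
    scale_factor is (input height / frame height, input width / frame width)."""
    im_shape = [float(im_size), float(im_size)]
    scale_factor = [im_size / frame_h, im_size / frame_w]
    return im_shape, scale_factor


def filter_detections(rows, threshold):
    """Keep rows [class_id, score, xmin, ymin, xmax, ymax] with score >= threshold.

    Coordinates are truncated to int, as OpenCV drawing calls require.
    """
    kept = []
    for row in rows:
        cat_id, score, bbox = row[0], row[1], row[2:]
        if score < threshold:
            continue
        kept.append((int(cat_id), score, tuple(int(v) for v in bbox)))
    return kept


if __name__ == "__main__":
    print(make_scale_inputs(480, 640, 224))
    rows = [[0, 0.9, 10.7, 20.2, 100.9, 200.5], [1, 0.05, 0.0, 0.0, 5.0, 5.0]]
    print(filter_detections(rows, threshold=0.1))  # [(0, 0.9, (10, 20, 100, 200))]
```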

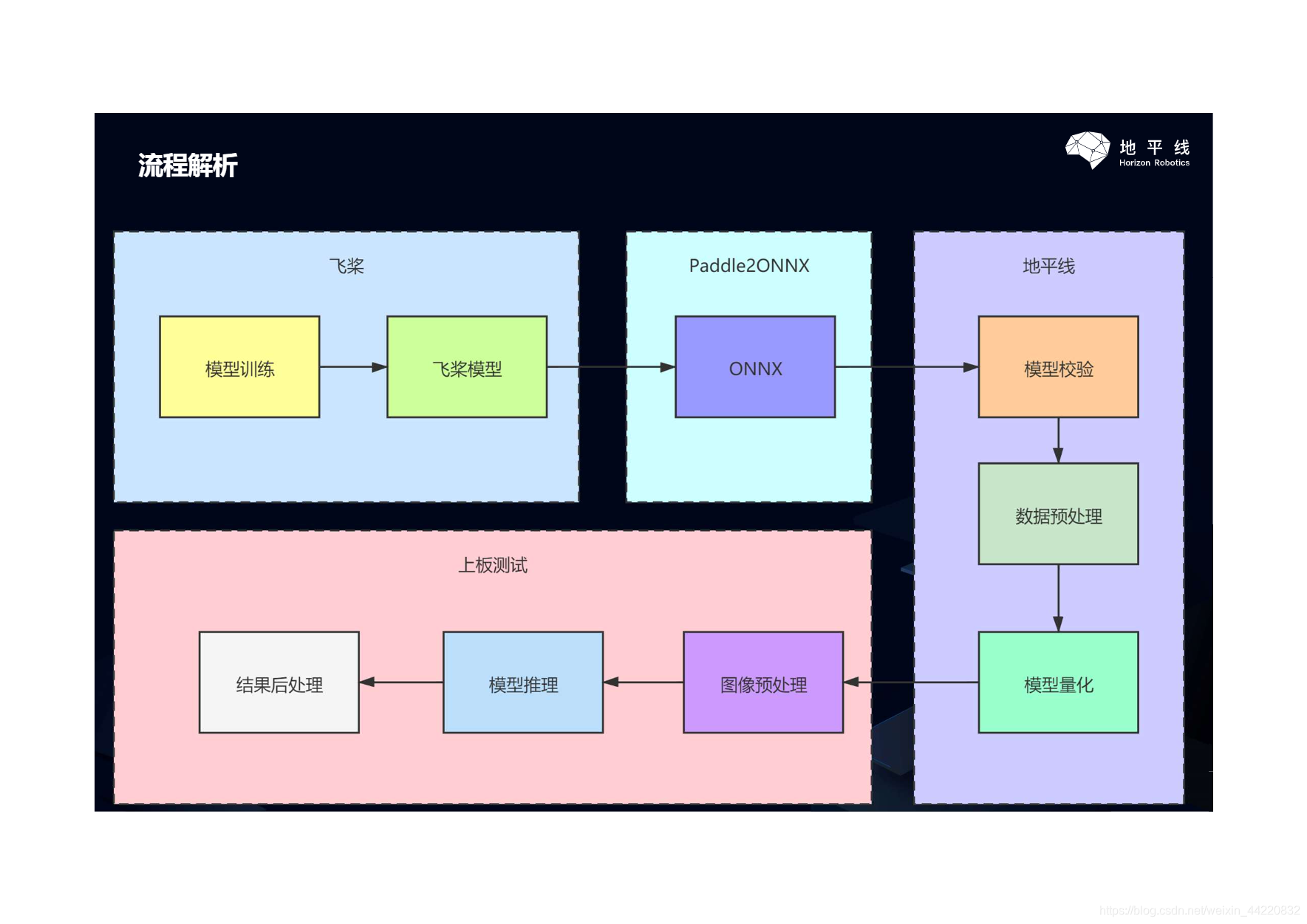

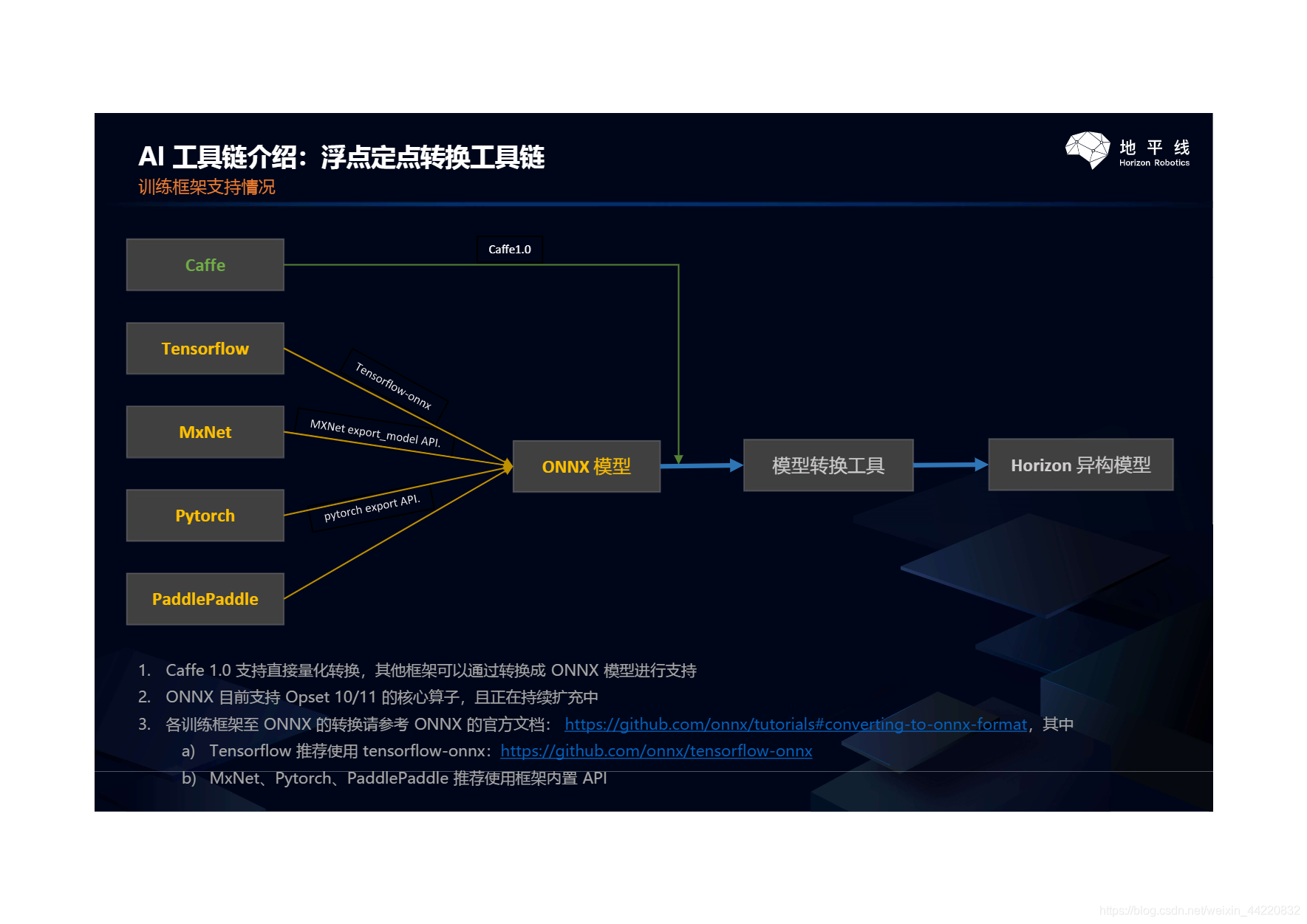

Horizon Robotics deployment

Lesson 6

Overview

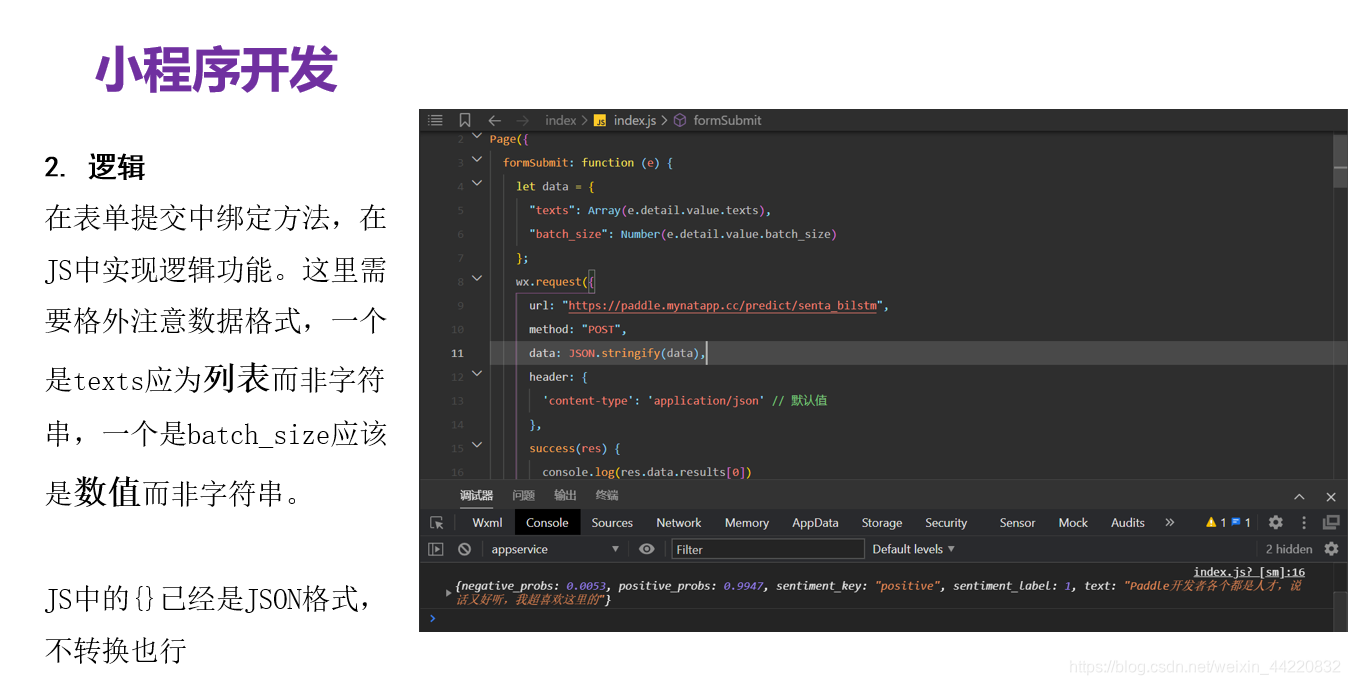

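Lesson 6 covers deployment to mini programs.

Reference links

Final thoughts:

This is getting pretty hard; I'm struggling to keep up.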