Recurrent Neural Networks (RNN)

Disclaimer: the theory and code in these notes come from the open-source book TensorFlow深度学习 (Deep Learning with TensorFlow).

Repository: https://github.com/jiajunhua/dragen1860-Deep-Learning-with-TensorFlow-book

1. Simple RNN implementations in PyTorch

1.1 Single-layer RNNCell

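Shapes are written as [s, b, n]. Here s is the sentence length (number of time steps), b is the number of sentences (batch size) and n is the word-vector length. h denotes the hidden-state length.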
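The time dimension comes first in this [s, b, n] layout. A plain Python `for` loop over the tensor therefore walks through it one time step at a time, and the cell loops below rely on this. Here is a quick check with the same sizes as the examples. The name `steps` is only for illustration.

```python
import torch

x = torch.randn(80, 4, 100)   # [s, b, n]
steps = list(x)               # iterating a tensor walks its first dimension
print(len(steps), steps[0].shape)   # 80 torch.Size([4, 100])
print(torch.equal(steps[5], x[5]))  # True: step t is simply x[t]
```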

import torch
from torch import nn
from torch import optim
from torch.nn import functional as F

# [s, b, n]  s: sentence length, b: number of sentences, n: word-vector length
x = torch.randn(80, 4, 100)

# param 1: input_size | param 2: hidden_size
cell1 = nn.RNNCell(100, 64)

# initial hidden state: [batch, hidden_size]
h1 = torch.zeros(4, 64)

# xt: [batch, input_size], one time step per iteration
for xt in x:
    h1 = cell1(xt, h1)

print(h1.shape)  # torch.Size([4, 64])
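You can check by hand what the cell computes at each step. The sketch below assumes the default `nonlinearity='tanh'`. It recomputes h_t = tanh(x_t W_ih^T + b_ih + h_{t-1} W_hh^T + b_hh) from the cell's own `weight_ih`, `weight_hh`, `bias_ih` and `bias_hh` parameters. The seed and the name `h_manual` are only for illustration.

```python
import torch
from torch import nn

torch.manual_seed(0)

cell = nn.RNNCell(100, 64)     # default nonlinearity is tanh
x = torch.randn(80, 4, 100)    # [s, b, n]
h = torch.zeros(4, 64)         # state updated by the module
h_manual = torch.zeros(4, 64)  # state updated by the explicit formula

for xt in x:
    h = cell(xt, h)
    # h_t = tanh(x_t W_ih^T + b_ih + h_{t-1} W_hh^T + b_hh)
    h_manual = torch.tanh(
        xt @ cell.weight_ih.T + cell.bias_ih
        + h_manual @ cell.weight_hh.T + cell.bias_hh
    )

print(cell.weight_ih.shape, cell.weight_hh.shape)  # torch.Size([64, 100]) torch.Size([64, 64])
print(torch.allclose(h, h_manual, atol=1e-5))      # True
```

The weight shapes show that the cell maps each 100-dimensional input and each 64-dimensional previous state into the same 64-dimensional space before the tanh.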

1.2 Multi-layer RNNCell

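x = torch.randn(80, 4, 100)

cell1 = nn.RNNCell(100, 64)   # layer 1: 100 -> 64
cell2 = nn.RNNCell(64, 20)    # layer 2: 64 -> 20
h1 = torch.zeros(4, 64)
h2 = torch.zeros(4, 20)

for xt in x:
    h1 = cell1(xt, h1)   # layer 1 reads the input at step t
    h2 = cell2(h1, h2)   # layer 2 reads layer 1's output at step t

print(h2.shape)  # torch.Size([4, 20])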
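Stacking cells this way is what `nn.RNN` computes when `num_layers > 1`. Here is one way to see it, as a sketch:

1. Copy the per-layer weights of a two-layer `nn.RNN` (`weight_ih_l0`, `weight_hh_l1`, and so on) into two `RNNCell`s.
2. Compare the final states.

`nn.RNN` uses the same `hidden_size` in every layer. This check therefore uses 64 for both layers instead of the 64/20 split above.

```python
import torch
from torch import nn

torch.manual_seed(0)

rnn = nn.RNN(input_size=100, hidden_size=64, num_layers=2)
cell1 = nn.RNNCell(100, 64)
cell2 = nn.RNNCell(64, 64)

# copy the layer-k parameters of nn.RNN into the matching cell
with torch.no_grad():
    for k, cell in enumerate([cell1, cell2]):
        cell.weight_ih.copy_(getattr(rnn, f"weight_ih_l{k}"))
        cell.weight_hh.copy_(getattr(rnn, f"weight_hh_l{k}"))
        cell.bias_ih.copy_(getattr(rnn, f"bias_ih_l{k}"))
        cell.bias_hh.copy_(getattr(rnn, f"bias_hh_l{k}"))

x = torch.randn(80, 4, 100)
h1 = torch.zeros(4, 64)
h2 = torch.zeros(4, 64)
for xt in x:
    h1 = cell1(xt, h1)   # layer 1 reads the input
    h2 = cell2(h1, h2)   # layer 2 reads layer 1's output at the same step

out, h = rnn(x)          # initial state omitted, so it defaults to zeros
print(torch.allclose(h[0], h1, atol=1e-5), torch.allclose(h[1], h2, atol=1e-5))  # True True
```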

1.3 RNN

# single layer
rnn = nn.RNN(input_size=100, hidden_size=64, num_layers=1)
x = torch.randn(80, 4, 100)
# initial state: [num_layers, batch, hidden_size]
out, h = rnn(x, torch.zeros(1, 4, 64))
print(out.shape, h.shape)  # torch.Size([80, 4, 64]) torch.Size([1, 4, 64])

# multi-layer
rnn = nn.RNN(input_size=100, hidden_size=64, num_layers=3)
x = torch.randn(80, 4, 100)
out, h = rnn(x, torch.zeros(3, 4, 64))
print(out.shape, h.shape)  # torch.Size([80, 4, 64]) torch.Size([3, 4, 64])
# print(vars(rnn))
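The two return values overlap:

- `out` holds the last layer's hidden state at every time step.
- `h` holds every layer's hidden state at the final step.

So `out[-1]` and `h[-1]` contain the same values. The sketch below checks this. It also shows that `batch_first=True`, used in the next section, only swaps the first two dimensions of the input and output. It does not change the layout of `h`. Sharing weights through `load_state_dict` is just a convenience for the comparison.

```python
import torch
from torch import nn

torch.manual_seed(0)

rnn = nn.RNN(input_size=100, hidden_size=64, num_layers=3)
x = torch.randn(80, 4, 100)              # [s, b, n]
out, h = rnn(x)                          # initial state omitted -> zeros

# out: last layer, every step [s, b, h]; h: every layer, last step [num_layers, b, h]
print(torch.allclose(out[-1], h[-1]))    # True

# batch_first=True: input/output become [b, s, ...], h stays [num_layers, b, h]
rnn_bf = nn.RNN(input_size=100, hidden_size=64, num_layers=3, batch_first=True)
rnn_bf.load_state_dict(rnn.state_dict())  # same weights as rnn
out_bf, h_bf = rnn_bf(x.transpose(0, 1))  # input [4, 80, 100]
print(out_bf.shape, h_bf.shape)           # torch.Size([4, 80, 64]) torch.Size([3, 4, 64])
print(torch.allclose(out_bf.transpose(0, 1), out, atol=1e-5))  # True
```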

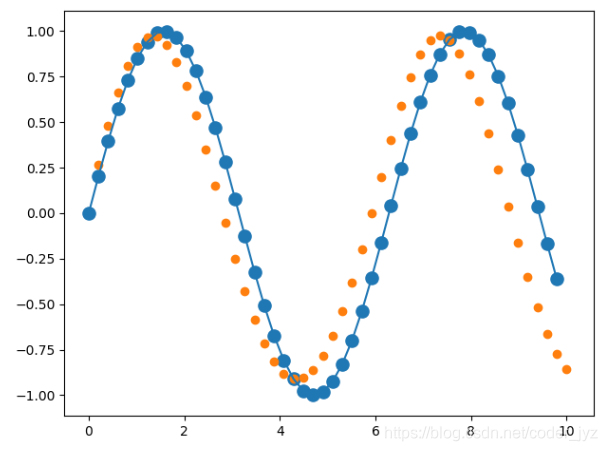

2. RNN time-series prediction

import numpy as np
import torch
import torch.nn as nn
import torch.optim as optim
from matplotlib import pyplot as plt

num_time_steps = 50
input_size = 1
hidden_size = 16
output_size = 1
lr = 0.01

class Net(nn.Module):

    def __init__(self):
        super(Net, self).__init__()
        # with batch_first=True, input and output are (batch, seq, feature)
        self.rnn = nn.RNN(
            input_size=input_size,
            hidden_size=hidden_size,
            num_layers=1,
            batch_first=True,
        )
        for p in self.rnn.parameters():
            nn.init.normal_(p, mean=0.0, std=0.001)
        self.linear = nn.Linear(hidden_size, output_size)

    def forward(self, x, hidden_prev):
        out, hidden_prev = self.rnn(x, hidden_prev)
        # [b, seq, h] -> [b*seq, h]
        out = out.view(-1, hidden_size)
        out = self.linear(out)       # [b*seq, 1]
        out = out.unsqueeze(dim=0)   # [1, b*seq, 1]; matches the target shape only when b = 1
        return out, hidden_prev

model = Net()
criterion = nn.MSELoss()
optimizer = optim.Adam(model.parameters(), lr)

# [num_layers, batch, hidden]
hidden_prev = torch.zeros(1, 1, hidden_size)

for step in range(6000):
    # a random window of sin(t): 50 points on [start, start + 10]
    start = np.random.randint(3, size=1)[0]
    time_steps = np.linspace(start, start + 10, num_time_steps)
    data = np.sin(time_steps)
    data = data.reshape(num_time_steps, 1)
    # input is points 0..48, target is points 1..49 (the next value at each step)
    x = torch.tensor(data[:-1]).float().view(1, num_time_steps - 1, 1)
    y = torch.tensor(data[1:]).float().view(1, num_time_steps - 1, 1)

    output, hidden_prev = model(x, hidden_prev)
    # keep the state for the next window but cut the graph, so gradients stay within one window
    hidden_prev = hidden_prev.detach()

    loss = criterion(output, y)
    model.zero_grad()
    loss.backward()
    # for p in model.parameters():
    #     print(p.grad.norm())
    # torch.nn.utils.clip_grad_norm_(p, 10)
    optimizer.step()

    if step % 100 == 0:
        print("Iteration: {} loss {}".format(step, loss.item()))

# test on a new random window
start = np.random.randint(3, size=1)[0]
time_steps = np.linspace(start, start + 10, num_time_steps)
data = np.sin(time_steps)
data = data.reshape(num_time_steps, 1)
x = torch.tensor(data[:-1]).float().view(1, num_time_steps - 1, 1)
y = torch.tensor(data[1:]).float().view(1, num_time_steps - 1, 1)

predictions = []
inp = x[:, 0, :]   # only the first point is given
with torch.no_grad():
    for _ in range(x.shape[1]):
        inp = inp.view(1, 1, 1)   # [b=1, seq=1, feature=1]
        pred, hidden_prev = model(inp, hidden_prev)
        inp = pred                # feed the prediction back in as the next input
        # ravel flattens to a 1-D array
        predictions.append(pred.detach().numpy().ravel()[0])

x = x.data.numpy().ravel()
y = y.data.numpy()
plt.scatter(time_steps[:-1], x.ravel(), s=90)
plt.plot(time_steps[:-1], x.ravel())
plt.scatter(time_steps[1:], predictions)
plt.show()

I found this code a bit confusing myself and only just managed to follow it.
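The part that usually causes the confusion is how inputs and targets are built. Each window of 50 samples of sin(t) is split in two:

- the input is points 0..48;
- the target is points 1..49.

So at every time step the network is trained to predict the next value. The sketch below fixes `start = 1` instead of drawing it at random, purely so the result is reproducible. At test time the same idea runs as a loop. Only the first point is given, and each prediction is fed back in as the next input.

```python
import numpy as np
import torch

num_time_steps = 50

# 50 evenly spaced samples of sin(t) on [start, start + 10]; start fixed here for reproducibility
start = 1
time_steps = np.linspace(start, start + 10, num_time_steps)
data = np.sin(time_steps).reshape(num_time_steps, 1)

# input: points 0..48, target: points 1..49 (each target is the next value)
x = torch.tensor(data[:-1]).float().view(1, num_time_steps - 1, 1)  # [b=1, seq=49, feature=1]
y = torch.tensor(data[1:]).float().view(1, num_time_steps - 1, 1)

print(x.shape, y.shape)                 # torch.Size([1, 49, 1]) torch.Size([1, 49, 1])
print(torch.equal(x[0, 1:], y[0, :-1]))  # True: y is x shifted by one step
```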

3. LSTM

3.1 LSTMCell

print('one layer lstm')
x = torch.randn(80, 4, 100)
cell = nn.LSTMCell(input_size=100, hidden_size=64)
h = torch.zeros(4, 64)   # hidden state
c = torch.zeros(4, 64)   # cell (memory) state

for xt in x:
    # the state is passed as a tuple (h, c) and returned the same way
    h, c = cell(xt, (h, c))

print(h.shape, c.shape)  # torch.Size([4, 64]) torch.Size([4, 64])
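Inside `LSTMCell`, the four gates are computed with one matrix product and then split. The sketch below recomputes one step by hand. It assumes PyTorch's documented gate order in `weight_ih`/`weight_hh`: input i, forget f, cell candidate g, output o. This is also why `weight_ih` has 4 × 64 = 256 rows. The random initial state and the names `h_ref`/`h_new` are only for illustration.

```python
import torch
from torch import nn

torch.manual_seed(0)

cell = nn.LSTMCell(input_size=100, hidden_size=64)
xt = torch.randn(4, 100)
h = torch.randn(4, 64)
c = torch.randn(4, 64)

h_ref, c_ref = cell(xt, (h, c))

# all four gates from one affine map, stacked in the order i, f, g, o
gates = xt @ cell.weight_ih.T + cell.bias_ih + h @ cell.weight_hh.T + cell.bias_hh
i, f, g, o = gates.chunk(4, dim=1)
i, f, g, o = torch.sigmoid(i), torch.sigmoid(f), torch.tanh(g), torch.sigmoid(o)

c_new = f * c + i * g          # forget part of the old memory, write new content
h_new = o * torch.tanh(c_new)  # expose a gated view of the memory

print(cell.weight_ih.shape)    # torch.Size([256, 100])
print(torch.allclose(h_new, h_ref, atol=1e-5), torch.allclose(c_new, c_ref, atol=1e-5))  # True True
```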

print('two layer lstm')
x = torch.randn(80, 4, 100)
cell1 = nn.LSTMCell(input_size=100, hidden_size=64)
cell2 = nn.LSTMCell(input_size=64, hidden_size=20)
h1 = torch.zeros(4, 64)
c1 = torch.zeros(4, 64)
h2 = torch.zeros(4, 20)
c2 = torch.zeros(4, 20)

for xt in x:
    h1, c1 = cell1(xt, (h1, c1))
    h2, c2 = cell2(h1, (h2, c2))   # layer 2 takes layer 1's h as its input

print(h2.shape, c2.shape)  # torch.Size([4, 20]) torch.Size([4, 20])

3.2 LSTM

print('Lstm')
x = torch.randn(80, 4, 100)
lstm = nn.LSTM(input_size=100, hidden_size=64, num_layers=3)
print(lstm)
# initial (h0, c0) omitted, so both default to zeros
out, (h, c) = lstm(x)
print(out.shape, h.shape, c.shape)  # torch.Size([80, 4, 64]) torch.Size([3, 4, 64]) torch.Size([3, 4, 64])
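When the initial state is omitted, as in the call above, `nn.LSTM` starts from zeros. An explicit state must be passed as a tuple `(h0, c0)`, each of shape [num_layers, batch, hidden_size]. As with `nn.RNN`, `out[-1]` matches the last layer's final hidden state. Here is a small check, as a sketch:

```python
import torch
from torch import nn

torch.manual_seed(0)

lstm = nn.LSTM(input_size=100, hidden_size=64, num_layers=3)
x = torch.randn(80, 4, 100)

out1, (h1, c1) = lstm(x)                  # state omitted
h0 = torch.zeros(3, 4, 64)                # [num_layers, b, h]
c0 = torch.zeros(3, 4, 64)
out2, (h2, c2) = lstm(x, (h0, c0))        # explicit zero state, passed as a tuple

print(torch.allclose(out1, out2), torch.allclose(c1, c2))  # True True
print(torch.allclose(out1[-1], h1[-1]))                    # True
```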

4. GRU

4.1 GRUCell

Single layer

rnn = nn.GRUCell(100, 64)
x = torch.randn(80, 4, 100)
hx = torch.randn(4, 64)   # random initial hidden state
out = []
for xt in x:
    hx = rnn(xt, hx)
    out.append(hx)        # keep the hidden state of every step

print(hx.shape)  # torch.Size([4, 64])
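`GRUCell` uses three weight blocks instead of four: a reset gate r, an update gate z and a candidate state n. The sketch below recomputes one step by hand, following PyTorch's documented order (r, z, n) and formula. In that formula the reset gate multiplies the whole hidden-side term (W_hn h + b_hn), and the new state is h' = (1 - z) * n + z * h. Variable names are only for illustration.

```python
import torch
from torch import nn

torch.manual_seed(0)

cell = nn.GRUCell(100, 64)
xt = torch.randn(4, 100)
h = torch.randn(4, 64)

h_ref = cell(xt, h)

# input-side and hidden-side terms, each stacked in the order r, z, n
gi = xt @ cell.weight_ih.T + cell.bias_ih    # [4, 192]
gh = h @ cell.weight_hh.T + cell.bias_hh     # [4, 192]
i_r, i_z, i_n = gi.chunk(3, dim=1)
h_r, h_z, h_n = gh.chunk(3, dim=1)

r = torch.sigmoid(i_r + h_r)     # reset gate
z = torch.sigmoid(i_z + h_z)     # update gate
n = torch.tanh(i_n + r * h_n)    # candidate; r scales the hidden term including b_hn
h_new = (1 - z) * n + z * h      # z close to 1 keeps the old state

print(torch.allclose(h_new, h_ref, atol=1e-5))  # True
```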

Multi-layer

x = torch.randn(80, 4, 100)
cell1 = nn.GRUCell(100, 64)
cell2 = nn.GRUCell(64, 20)
h1 = torch.zeros(4, 64)
h2 = torch.zeros(4, 20)

for xt in x:
    h1 = cell1(xt, h1)   # layer 1 reads the input at step t
    h2 = cell2(h1, h2)   # layer 2 reads layer 1's output at step t

print(h1.shape, h2.shape)  # torch.Size([4, 64]) torch.Size([4, 20])

4.2 GRU

Multi-layer

# arguments: input_size, hidden_size, num_layers
rnn = nn.GRU(100, 64, 3)
# [sequence_length, batch_size, input_size]
input = torch.randn(80, 4, 100)
# [num_layers, batch_size, hidden_size]
h0 = torch.randn(3, 4, 64)
out, hn = rnn(input, h0)
print(out.shape, hn.shape)  # torch.Size([80, 4, 64]) torch.Size([3, 4, 64])
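The gate structure also explains the model sizes. Each layer of a plain RNN has one block of weights (h × n_in + h × h) plus two biases of length h. An LSTM has four such blocks and a GRU has three. The sketch below checks this with the sizes used in these notes: input 100, hidden 64, 3 layers. Layers after the first take the previous layer's hidden state as input, so their n_in is h. `count` and `expected` are helper names introduced here for illustration.

```python
from torch import nn


def count(m: nn.Module) -> int:
    """Total number of trainable scalars in a module."""
    return sum(p.numel() for p in m.parameters())


def expected(n: int, h: int, layers: int, blocks: int) -> int:
    """blocks * (h*n_in + h*h + 2*h) per layer; layers after the first see n_in = h."""
    total = 0
    for k in range(layers):
        n_in = n if k == 0 else h
        total += blocks * (h * n_in + h * h + 2 * h)
    return total


for name, cls, blocks in [("RNN", nn.RNN, 1), ("GRU", nn.GRU, 3), ("LSTM", nn.LSTM, 4)]:
    m = cls(100, 64, 3)
    print(name, count(m), count(m) == expected(100, 64, 3, blocks))
# RNN 27264 True
# GRU 81792 True
# LSTM 109056 True
```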

There seem to be fewer PyTorch examples online than TensorFlow 2 ones; I'll add more when I get the chance.