Introduction

To get a better understanding of PyTorch's CrossEntropyLoss, I decided to write a simple implementation of it.

Official documentation:

https://pytorch.org/docs/stable/nn.html?highlight=crossentropyloss#torch.nn.CrossEntropyLoss

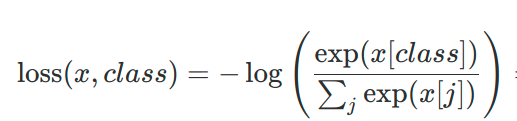

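The loss formula given in the official docs:

$$\text{loss}(x, \text{class}) = -\log\left(\frac{\exp(x[\text{class}])}{\sum_j \exp(x[j])}\right) = -x[\text{class}] + \log\left(\sum_j \exp(x[j])\right)$$

or, when the weight argument is specified:

$$\text{loss}(x, \text{class}) = \text{weight}[\text{class}]\left(-x[\text{class}] + \log\left(\sum_j \exp(x[j])\right)\right)$$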

x has shape (batch_size, C)

class has shape (batch_size,)

(C here is the number of classes)
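To make the formula concrete, here is a small NumPy check (my own sketch, not from the original post) that evaluates both forms of the per-sample loss for a single sample: the first row of the example input used later, with target class 2. The variable names are illustrative only.

```python
import numpy as np

# One sample: first row of the example input, target class 2
x = np.array([-1.5616, -0.7906, 1.4143, -0.0957, 0.1657])
cls = 2

# Form 1: negative log of the softmax probability of the target class
form1 = -np.log(np.exp(x[cls]) / np.sum(np.exp(x)))

# Form 2: -x[class] + log(sum_j exp(x[j]))
form2 = -x[cls] + np.log(np.sum(np.exp(x)))

print(form1, form2)
```

The two forms agree up to floating-point rounding; the second one is the shape that the log-sum-exp trick builds on.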


import numpy as np

import torch

import torch.nn as nn

class CrossEntropyLoss():

def __init__(self, weight=None, size_average=True):

"""

初始化参数,因为要实现 torch.nn.CrossEntropyLoss 的两个比较重要的参数

:param weight: 给予每个类别不同的权重

:param size_average: 是否要对 loss 求平均

"""

self.weight = weight

self.size_average = size_average

def __call__(self, input, target):

"""

计算损失

这个方法让类的实例表现的像函数一样,像函数一样可以调用

:param input: (batch_size, C),C是类别的总数

:param target: (batch_size, 1)

:return: 损失

"""

batch_loss = 0.

for i in range(input.shape[0]):

# print('***',input[i, target[i]],i,target[i],np.exp(input[i, :]))

numerator = np.exp(input[i, target[i]]) # 分子

denominator = np.sum(np.exp(input[i, :])) # 分母

# 计算单个损失

loss = -np.log(numerator / denominator)

if self.weight:

loss = self.weight[target[i]] * loss

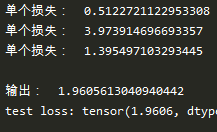

print("单个损失: ",loss)

# 损失累加

batch_loss += loss

# 整个 batch 的总损失是否要求平均

if self.size_average == True:

batch_loss /= input.shape[0]

return batch_loss

if __name__ == "__main__":

input = np.array([[-1.5616, -0.7906, 1.4143, -0.0957, 0.1657],

[-1.4285, 0.3045, 1.5844, -2.1508, 1.8181],

[ 1.0205, -1.3493, -1.2965, 0.1715, -1.2118]])

target = np.array([2, 0, 3])

criterion = CrossEntropyLoss()

# 类中实现了 __call__,所以类实例可以像函数一样可以调用

loss = criterion(input, target)

print()

print("输出: ", loss)

#torch.nn中库函数

test_loss = nn.CrossEntropyLoss()

test_input = torch.from_numpy(input)

test_target = torch.from_numpy(target).long()

test_out = test_loss(test_input,test_target)

print('test loss:',test_out)
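The loop above exponentiates the raw scores directly, so a logit above roughly 709 makes `np.exp` overflow in float64. Below is a minimal vectorized sketch, not the author's code, that uses the log-sum-exp shift instead and follows the weighted-average rule from the PyTorch docs, $\sum_i w_{y_i} \ell_i / \sum_i w_{y_i}$. The function name `cross_entropy` is hypothetical, and its `reduction` argument is only modelled on PyTorch's `reduction` (which replaced the deprecated `size_average`).

```python
import numpy as np


def cross_entropy(logits, target, weight=None, reduction="mean"):
    """Hypothetical vectorized cross-entropy, a sketch mirroring torch.nn.CrossEntropyLoss.

    logits: (N, C) array of raw scores
    target: (N,) array of integer class indices in [0, C)
    weight: optional (C,) array of per-class weights
    reduction: "none", "mean" or "sum"
    """
    logits = np.asarray(logits, dtype=np.float64)
    target = np.asarray(target, dtype=np.int64)
    n = logits.shape[0]

    # Log-sum-exp trick: subtract each row's max before exponentiating,
    # so large logits cannot overflow np.exp
    row_max = logits.max(axis=1, keepdims=True)
    log_sum_exp = row_max[:, 0] + np.log(np.sum(np.exp(logits - row_max), axis=1))

    # Per-sample loss: -x[class] + log(sum_j exp(x[j]))
    losses = log_sum_exp - logits[np.arange(n), target]

    if weight is not None:
        w = np.asarray(weight, dtype=np.float64)[target]  # weight[class_i] per sample
        losses = w * losses
    else:
        w = np.ones(n)

    if reduction == "none":
        return losses
    if reduction == "sum":
        return losses.sum()
    if reduction == "mean":
        # Weighted average: divide by the sum of the target weights, not by N
        return losses.sum() / w.sum()
    raise ValueError(f"unknown reduction: {reduction!r}")


if __name__ == "__main__":
    x = np.array([[-1.5616, -0.7906, 1.4143, -0.0957, 0.1657],
                  [-1.4285, 0.3045, 1.5844, -2.1508, 1.8181],
                  [1.0205, -1.3493, -1.2965, 0.1715, -1.2118]])
    y = np.array([2, 0, 3])
    print(cross_entropy(x, y))
    print(cross_entropy(x, y, weight=[1.0, 2.0, 0.5, 1.0, 1.0]))
    # Large logits: a naive np.exp(1000.0) would overflow to inf
    print(cross_entropy([[1000.0, 0.0]], [0]))
```

Without weights, the "mean" result should match `nn.CrossEntropyLoss()` on the same input, which can be checked the same way as in the script above. Replacing the Python loop with array indexing also keeps the cost in NumPy rather than in the interpreter.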

Reference: https://blog.csdn.net/qq_41805511/article/details/99438838