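Introduction

As demand grows for deploying convolutional neural networks on edge devices, many researchers have begun studying how to reduce their computational cost. One approach compresses a network that has already been trained, for example through model pruning, low-bit quantization, or knowledge distillation. The other approach designs efficient network architectures from the start, such as the MobileNet v1-v3 series and ShuffleNet.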

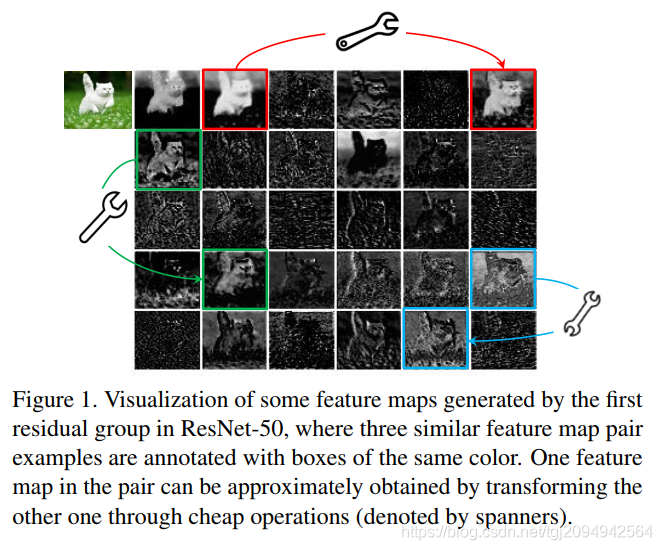

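Feature redundancy is an important property of convolutional neural networks. Some lightweight-network work exploits exactly this redundancy, making the model lighter by pruning away part of the redundant features.

Unlike that work, this paper does not deliberately prune redundant features. Instead, it generates them with a method cheaper than a conventional convolution layer. By combining "a small amount of conventional convolution" with "a lightweight redundant-feature generator", it reduces the network's overall computation while preserving its accuracy.

In the figure above, the feature maps linked by the two wrenches are very similar, so one map of each pair can be obtained from the other with a very simple operation.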
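The idea of deriving similar feature maps with a simple operation can be illustrated with a short PyTorch sketch. It is an illustration only, under assumptions: the tensor sizes and the random 3x3 per-channel filters are made up, and in a real network the filters would be learned.

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)

# Assumption: 4 "intrinsic" feature maps of size 8x8 from an ordinary convolution.
intrinsic = torch.randn(1, 4, 8, 8)

# One 3x3 filter per channel (depthwise), weight shape (channels, 1, 3, 3).
# Random here for illustration; learned in practice.
cheap_filters = torch.randn(4, 1, 3, 3)

# groups=4 makes each filter act on its own channel only, which is what keeps it cheap.
ghosts = F.conv2d(intrinsic, cheap_filters, padding=1, groups=4)

# The layer output is the intrinsic maps plus their ghosts.
features = torch.cat([intrinsic, ghosts], dim=1)
print(features.shape)  # torch.Size([1, 8, 8, 8])
```

Half of the output channels come from the inexpensive per-channel filters rather than from a full convolution.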

For a detailed introduction to GhostNet, see here.

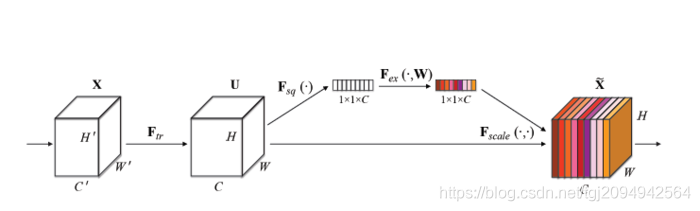

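The paper also uses the SENet structure.

For the SENet structure, see here.

In the figure, Ftr is a conventional convolution structure, and X and U are its input (C'×H'×W') and output (C×H×W); all of this already exists in earlier architectures. What SENet adds is the part after U: it first applies Global Average Pooling to U (Fsq(.) in the figure, which the authors call the Squeeze step), then passes the resulting 1×1×C vector through two fully connected layers (Fex(.) in the figure, the Excitation step), and finally uses a sigmoid (the self-gating mechanism in the paper) to restrict the values to [0, 1]. These values are multiplied as scales onto the C channels of U, and the result is the input to the next stage. The idea is to control the scales so that important features are strengthened and unimportant ones weakened, making the extracted features more discriminative. Some details of SENet follow: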

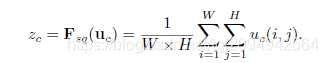

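First, the Squeeze part. Global pooling can be computed in many ways; the authors use the simplest one, averaging (Equation 1), which collapses the information at all spatial positions of a channel into a single value. This is done because the final scale acts on an entire channel, so it has to be computed from the channel's overall information. In addition, the authors want to exploit correlations between channels rather than correlations in the spatial distribution, so using GAP to mask out the spatial information makes the scale computation more accurate.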
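For reference, the averaging step described above is usually written as follows in the notation of the SENet paper. Here u_c denotes the c-th channel of U and z_c the resulting per-channel value; the symbols z_c, i, and j are taken from that paper and are not defined elsewhere in this post.

$$
z_c = F_{sq}(u_c) = \frac{1}{H \times W} \sum_{i=1}^{H} \sum_{j=1}^{W} u_c(i, j)
$$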

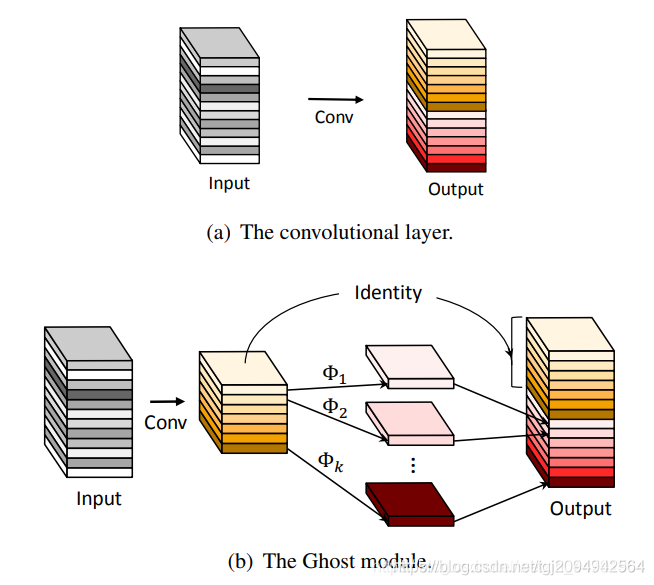
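The Squeeze, Excitation, and scale steps described above can be sketched in PyTorch as follows. This is a minimal sketch, not the SENet authors' code: the class name `SEBlock`, the `reduction` factor of 4, and the ReLU between the two fully connected layers are assumptions made for illustration.

```python
import torch
import torch.nn as nn


class SEBlock(nn.Module):
    """Hypothetical minimal SE block: squeeze -> excitation -> channel-wise scale."""

    def __init__(self, channels, reduction=4):
        super().__init__()
        hidden = max(1, channels // reduction)  # assumed bottleneck width
        self.fc1 = nn.Linear(channels, hidden)
        self.fc2 = nn.Linear(hidden, channels)

    def forward(self, u):
        b, c, _, _ = u.shape
        z = u.mean(dim=(2, 3))                # Squeeze: global average pooling -> (B, C)
        s = torch.relu(self.fc1(z))           # Excitation: first fully connected layer
        s = torch.sigmoid(self.fc2(s))        # second layer + sigmoid, values in [0, 1]
        return u * s.view(b, c, 1, 1)         # scale each channel of U


u = torch.randn(2, 16, 7, 7)
se = SEBlock(16)
out = se(u)
print(out.shape)  # torch.Size([2, 16, 7, 7])
```

Because the two linear layers are registered in `__init__`, their weights are trained together with the rest of the network and move with the model when it is transferred to another device.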

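The core of the GhostNet model is the design of the Ghost bottleneck (G-bneck).

The Ghost Module splits an ordinary convolution into two parts. It first applies an ordinary 1x1 convolution with fewer output channels: where a normal layer would use a 32-channel convolution, for example, this one uses 16 channels. This 1x1 convolution acts as a feature-integration step and produces a condensed version of the input feature layer.

Next comes a depthwise separable convolution, applied channel by channel; this is what the paper calls the Cheap Operations. It uses the condensed features from the previous step to generate the Ghost feature maps.

Viewed as a whole, then, the Ghost Module simply combines two ideas:

1. Use a 1x1 convolution to obtain the necessary condensed features of the input.

2. Use a depthwise separable convolution to obtain feature maps similar to the condensed features (the Ghosts).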
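To get a feel for the savings, the two steps above can be compared with an ordinary convolution by counting multiply-accumulate operations. The sketch below follows the cost formulas in the GhostNet paper under stated assumptions: bias and BatchNorm are ignored, the cheap operation is a pure d x d per-channel filter, and the layer sizes (16 input channels, 32 output channels, a 56x56 output, 3x3 kernels) are made-up example values. The function names are hypothetical.

```python
def conv_flops(c_in, n_out, h_out, w_out, k):
    """Multiply-accumulates of an ordinary k x k convolution."""
    return n_out * h_out * w_out * c_in * k * k


def ghost_flops(c_in, n_out, h_out, w_out, k, d=3, s=2):
    """Multiply-accumulates of a Ghost module with ratio s and d x d cheap filters."""
    m = n_out // s                                   # intrinsic maps from the primary conv
    primary = conv_flops(c_in, m, h_out, w_out, k)   # the small ordinary convolution
    cheap = (s - 1) * m * h_out * w_out * d * d      # per-channel filters for the ghosts
    return primary + cheap


ordinary = conv_flops(16, 32, 56, 56, 3)
ghost = ghost_flops(16, 32, 56, 56, 3)
print(ordinary, ghost, round(ordinary / ghost, 2))  # 14450688 7676928 1.88
```

With ratio s = 2 the saving approaches a factor of 2 as the number of input channels grows, because the cheap per-channel term becomes negligible next to the primary convolution.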

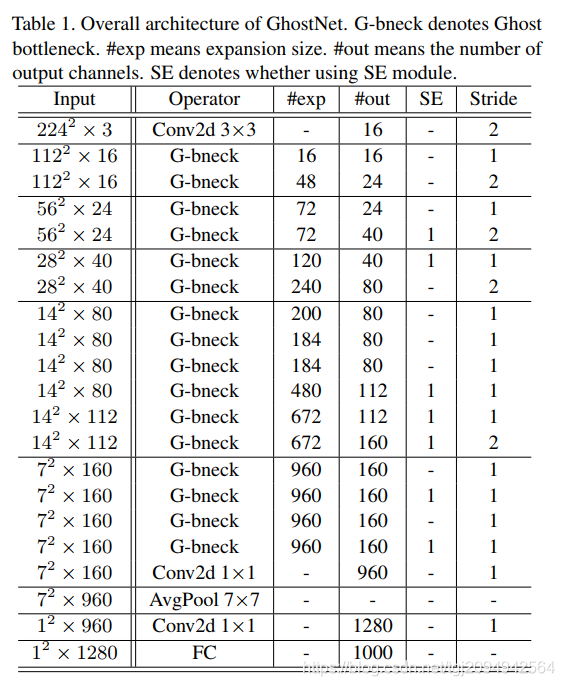

The overall structure of the model is as follows.

The implementation is as follows.

    import math

    import torch
    import torch.nn as nn
    import torch.nn.functional as F

    INPUT_SIZE = 224  # expected input resolution (224x224 RGB)


    class Ghostnet(nn.Module):
        def __init__(self):
            '''
            Bottlenecks built with is_squeeze=True include an SE block.
            '''
            super(Ghostnet, self).__init__()
            self.conv1 = _Conv_block(in_channels=3, out_channels=16, kernel_size=3, padding=1, stride=2)
            self.ghost1_1 = _ghost_bottlenect(in_channels=16, out_channels=16, exp=16, ratio=2, kernel_size=3, padding=1, stride=1)
            self.ghost1_2 = _ghost_bottlenect(in_channels=16, out_channels=24, exp=48, ratio=2, kernel_size=3, padding=1, stride=2)
            self.ghost2_1 = _ghost_bottlenect(in_channels=24, out_channels=24, exp=72, ratio=2, kernel_size=3, padding=1, stride=1)
            self.ghost2_2 = _ghost_bottlenect(in_channels=24, out_channels=40, exp=72, ratio=2, kernel_size=3, padding=1, stride=2, is_squeeze=True)
            self.ghost3_1 = _ghost_bottlenect(in_channels=40, out_channels=40, exp=120, ratio=2, kernel_size=3, padding=1, stride=1, is_squeeze=True)
            self.ghost3_2 = _ghost_bottlenect(in_channels=40, out_channels=80, exp=240, ratio=2, kernel_size=3, padding=1, stride=2)
            self.ghost4_1 = _ghost_bottlenect(in_channels=80, out_channels=80, exp=200, ratio=2, kernel_size=3, padding=1, stride=1)
            self.ghost4_2 = _ghost_bottlenect(in_channels=80, out_channels=80, exp=184, ratio=2, kernel_size=3, padding=1, stride=1)
            self.ghost4_3 = _ghost_bottlenect(in_channels=80, out_channels=80, exp=184, ratio=2, kernel_size=3, padding=1, stride=1)
            self.ghost4_4 = _ghost_bottlenect(in_channels=80, out_channels=112, exp=480, ratio=2, kernel_size=3, padding=1, stride=1, is_squeeze=True)
            self.ghost4_5 = _ghost_bottlenect(in_channels=112, out_channels=112, exp=672, ratio=2, kernel_size=3, padding=1, stride=1, is_squeeze=True)
            self.ghost4_6 = _ghost_bottlenect(in_channels=112, out_channels=160, exp=672, ratio=2, kernel_size=3, padding=1, stride=2, is_squeeze=True)
            self.ghost5_1 = _ghost_bottlenect(in_channels=160, out_channels=160, exp=960, ratio=2, kernel_size=3, padding=1, stride=1)
            self.ghost5_2 = _ghost_bottlenect(in_channels=160, out_channels=160, exp=960, ratio=2, kernel_size=3, padding=1, stride=1, is_squeeze=True)
            self.ghost5_3 = _ghost_bottlenect(in_channels=160, out_channels=160, exp=960, ratio=2, kernel_size=3, padding=1, stride=1)
            self.ghost5_4 = _ghost_bottlenect(in_channels=160, out_channels=160, exp=960, ratio=2, kernel_size=3, padding=1, stride=1, is_squeeze=True)
            self.Conv5_5 = _Conv_block(in_channels=160, out_channels=960, stride=1, kernel_size=1)
            # Global average pooling; equals the original 7x7 AvgPool2d for 224x224 inputs.
            self.avgpool6 = nn.AdaptiveAvgPool2d(1)
            self.Conv_7 = nn.Conv2d(in_channels=960, out_channels=1280, kernel_size=1, padding=0, stride=1)
            self.FC = nn.Linear(in_features=1280, out_features=1000)

        def forward(self, x):
            x = self.conv1(x)
            x = self.ghost1_1(x)
            x = self.ghost1_2(x)
            x = self.ghost2_1(x)
            x = self.ghost2_2(x)
            x = self.ghost3_1(x)
            feat1 = x  # stride-8 feature map, 40 channels
            x = self.ghost3_2(x)
            x = self.ghost4_1(x)
            x = self.ghost4_2(x)
            x = self.ghost4_3(x)
            x = self.ghost4_4(x)
            x = self.ghost4_5(x)
            feat2 = x  # stride-16 feature map, 112 channels
            x = self.ghost4_6(x)
            x = self.ghost5_1(x)
            x = self.ghost5_2(x)
            x = self.ghost5_3(x)
            x = self.ghost5_4(x)
            feat3 = x  # stride-32 feature map, 160 channels
            x = self.Conv5_5(x)
            x = self.avgpool6(x)
            x = self.Conv_7(x)
            x = torch.flatten(x, 1)
            x = self.FC(x)
            # Returns class probabilities; use the raw logits with nn.CrossEntropyLoss.
            return [feat1, feat2, feat3], F.softmax(x, dim=1)


    class _ghost_bottlenect(nn.Module):
        def __init__(self, in_channels, out_channels, exp, ratio, kernel_size, stride, padding=0, is_squeeze=False):
            super(_ghost_bottlenect, self).__init__()
            # First Ghost module expands the channels to `exp`.
            self.ghost1 = _ghost_module(in_channels=in_channels, exp=exp, ratio=ratio,
                                        kernel_size=kernel_size, padding=padding)
            # The strided convolution is only needed when the block downsamples.
            self._dpwise_conv = _depwise_conv(in_channels=exp, out_channels=exp, kernel_size=kernel_size,
                                              stride=stride, padding=padding) if stride > 1 else None
            # The SE block is a registered module so that its weights are trained.
            self.se = _squeeze(channels=exp, ratio=2) if is_squeeze else None
            # Second Ghost module projects back down to `out_channels`.
            self.ghost2 = _ghost_module(in_channels=exp, exp=out_channels, ratio=ratio,
                                        kernel_size=kernel_size, padding=padding)
            # Convolutional shortcut that matches the channel count and stride of the main path.
            self.Conv = _Conv_block(in_channels=in_channels, out_channels=out_channels, stride=stride,
                                    kernel_size=kernel_size, padding=padding)

        def forward(self, inputs):
            x = self.ghost1(inputs)
            if self._dpwise_conv is not None:
                x = self._dpwise_conv(x)
            if self.se is not None:
                x = self.se(x)
            x = self.ghost2(x)
            return x + self.Conv(inputs)


    class _ghost_module(nn.Module):
        def __init__(self, in_channels, exp, ratio, kernel_size, padding=0):
            super(_ghost_module, self).__init__()
            out_channels = math.ceil(exp * 1.0 / ratio)  # number of intrinsic feature maps
            self.exp = exp
            # Primary 1x1 convolution, as described in the text above.
            self.conv = _Conv_block(in_channels=in_channels, out_channels=out_channels,
                                    kernel_size=1, padding=0, stride=1)
            # Cheap operation: generates the remaining (ratio - 1) * out_channels ghost maps.
            self.dw_conv = _depwise_conv(in_channels=out_channels, out_channels=out_channels * (ratio - 1),
                                         kernel_size=kernel_size, stride=1, padding=padding)

        def forward(self, x):
            x = self.conv(x)
            x_s = self.dw_conv(x)
            # Concatenate intrinsic and ghost maps, then trim to exactly `exp` channels.
            return torch.cat([x, x_s], dim=1)[:, :self.exp, :, :]


    class _depwise_conv(nn.Module):
        def __init__(self, in_channels, out_channels, kernel_size, stride=1, padding=0, use_bias=False):
            super(_depwise_conv, self).__init__()
            # Depthwise step: groups=in_channels gives one filter per input channel.
            self._conv1 = _Conv_block(in_channels=in_channels, out_channels=in_channels, kernel_size=kernel_size,
                                      stride=stride, padding=padding, use_bias=use_bias, groups=in_channels)
            # Pointwise step: a 1x1 convolution that mixes the channels.
            self._conv2 = _Conv_block(in_channels=in_channels, out_channels=out_channels, kernel_size=1, stride=1,
                                      padding=0, use_bias=use_bias)

        def forward(self, x):
            return self._conv2(self._conv1(x))


    class _Conv_block(nn.Module):
        # Convolution -> BatchNorm -> ReLU
        def __init__(self, in_channels, out_channels, kernel_size, stride=1, padding=0, use_bias=False, groups=1):
            super(_Conv_block, self).__init__()
            self.conv = nn.Conv2d(in_channels=in_channels, out_channels=out_channels, kernel_size=kernel_size,
                                  stride=stride, padding=padding, bias=use_bias, groups=groups)
            self.norm = nn.BatchNorm2d(num_features=out_channels)
            self.activation = nn.ReLU()

        def forward(self, x):
            return self.activation(self.norm(self.conv(x)))


    class _squeeze(nn.Module):
        # SE block. The linear layers are created once here; building them inside
        # forward() would re-initialise them randomly on every call and never train them.
        def __init__(self, channels, ratio):
            super(_squeeze, self).__init__()
            hidden = math.ceil(channels / ratio)
            self.fc1 = nn.Linear(in_features=channels, out_features=hidden)
            self.fc2 = nn.Linear(in_features=hidden, out_features=channels)

        def forward(self, x):
            _, C, _, _ = x.size()
            x_s = F.adaptive_avg_pool2d(x, 1).view(-1, C)  # Squeeze: global average pooling
            x_s = F.relu6(self.fc1(x_s))                   # Excitation: two fully connected layers
            x_s = torch.sigmoid(self.fc2(x_s))             # per-channel scale in [0, 1]
            return x_s.view(-1, C, 1, 1) * x


    def Activation(activation='relu6'):
        # Look up an activation layer by name (helper; not used by the network above).
        activation = {
            'relu': nn.ReLU(),
            'relu6': nn.ReLU6(),
            'softmax': nn.Softmax(dim=1),
        }[activation]
        return activation


    if __name__ == "__main__":
        # Smoke test: run one random image through the network and print the output shapes.
        x = torch.randn((1, 3, INPUT_SIZE, INPUT_SIZE))
        net = Ghostnet()
        net.eval()
        with torch.no_grad():
            [feat1, feat2, feat3], result = net(x)
        print(feat1.size(), feat2.size(), feat3.size())
        print("=" * 20)
        print(result.size())

If you find any problems with the code or the article, please contact me. I sincerely welcome your criticism.