Deploying Libtorch on Visual Studio 2019

Deploying YOLOv5 with Libtorch (notes for my own future review)

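Libtorch is PyTorch's C++ frontend. Its C++ API for tensor operations is fairly friendly: most PyTorch operations have a counterpart, either as a function in the torch:: namespace or as a tensor method (in-place variants end with an underscore, e.g. add_). I also wanted to learn C++, so I used this as practice.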

Installing the libraries

1. Libtorch

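Libtorch can be downloaded directly from the official website, but pay attention when using it: the PyTorch version used to train and export your model must match the Libtorch version (I used torch 1.8.1+cu111 and the Libtorch 1.8.1 GPU build). Also note that Libtorch for Windows comes in two builds, Release and Debug. As the names suggest, the Debug build is meant for debugging (it also seems to be larger); the build you link against must match your Visual Studio configuration (Release or Debug), because mixing them leads to link or runtime errors. Download page: PyTorch official website.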

Test code to confirm the installation works:

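```cpp
#include <torch/torch.h>
#include <torch/script.h>
#include <iostream>

int main()
{
    // Create a 3x2 tensor of random numbers; if it prints, Libtorch is set up correctly
    torch::Tensor output = torch::randn({ 3, 2 });
    std::cout << output << std::endl;
    return 0;
}
```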

2. OpenCV and CMake

OpenCV and CMake can likewise be downloaded from their official websites.

Environment variables and the Visual Studio 2019 settings for include directories, library directories, and linker dependencies

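Refer to this blog post.

Following it step by step should work without problems. The one thing I think needs attention is the include paths.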

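The two most commonly used headers, and the include paths they live under, are:

```cpp
#include "torch/script.h"  // under libtorch\include
#include "torch/torch.h"   // under libtorch\include\torch\csrc\api\include
```

Most guides do not say where the second header, torch/torch.h, is located. If libtorch\include\torch\csrc\api\include is missing from the include directories, the compiler cannot find many definitions.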

Model conversion (PyTorch → TorchScript model)

Refer to [this Zhihu article](https://zhuanlan.zhihu.com/p/146453159).

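It explains things in detail. Here I used the latest official YOLOv5 v5.0 code and weights. The official script exports on the CPU by default. It seems that a model exported on the CPU can be loaded by the Libtorch GPU build, whereas a model exported on the GPU cannot be used with the Libtorch CPU build (presumably because tracing on the GPU records CUDA device placements in the saved graph).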

```python
import argparse
import sys
import time
from pathlib import Path

import torch
import torch.nn as nn
from torch.utils.mobile_optimizer import optimize_for_mobile

FILE = Path(__file__).absolute()
sys.path.append(FILE.parents[0].as_posix())  # add yolov5/ to path

from models.common import Conv
from models.yolo import Detect
from models.experimental import attempt_load
from utils.activations import Hardswish, SiLU
from utils.general import colorstr, check_img_size, check_requirements, file_size, set_logging
from utils.torch_utils import select_device


def run(weights='./last6.9.pt',  # weights path
        img_size=(640, 640),  # image (height, width)
        batch_size=1,  # batch size
        device='cpu',  # cuda device, i.e. 0 or 0,1,2,3 or cpu (a string, as select_device expects)
        include=('torchscript', 'onnx', 'coreml'),  # include formats (ONNX/CoreML code is commented out below)
        half=False,  # FP16 half-precision export
        inplace=False,  # set YOLOv5 Detect() inplace=True
        train=False,  # model.train() mode
        optimize=False,  # TorchScript: optimize for mobile
        dynamic=False,  # ONNX: dynamic axes
        simplify=False,  # ONNX: simplify model
        opset_version=12,  # ONNX: opset version
        ):
    t = time.time()
    include = [x.lower() for x in include]
    img_size *= 2 if len(img_size) == 1 else 1  # expand

    # Load PyTorch model
    device = select_device(device)
    assert not (device.type == 'cpu' and half), '--half only compatible with GPU export, i.e. use --device 0'
    model = attempt_load(weights, map_location=device)  # load FP32 model
    labels = model.names

    # Input
    gs = int(max(model.stride))  # grid size (max stride)
    img_size = [check_img_size(x, gs) for x in img_size]  # verify img_size are gs-multiples
    img = torch.zeros(batch_size, 3, *img_size).to(device)  # image size(1,3,320,192) iDetection

    # Update model
    if half:
        img, model = img.half(), model.half()  # to FP16
    model.train() if train else model.eval()  # training mode = no Detect() layer grid construction
    for k, m in model.named_modules():
        m._non_persistent_buffers_set = set()  # pytorch 1.6.0 compatibility
        if isinstance(m, Conv):  # assign export-friendly activations
            if isinstance(m.act, nn.Hardswish):
                m.act = Hardswish()
            elif isinstance(m.act, nn.SiLU):
                m.act = SiLU()
        elif isinstance(m, Detect):
            m.inplace = inplace
            m.onnx_dynamic = dynamic
            # m.forward = m.forward_export  # assign forward (optional)

    for _ in range(2):
        y = model(img)  # dry runs
    print(f"\n{colorstr('PyTorch:')} starting from {weights} ({file_size(weights):.1f} MB)")

    # TorchScript export -----------------------------------------------------------------------------------------------
    if 'torchscript' in include or 'coreml' in include:
        prefix = colorstr('TorchScript:')
        try:
            print(f'\n{prefix} starting export with torch {torch.__version__}...')
            f = weights.replace('.pt', '.torchscript.pt')  # filename
            ts = torch.jit.trace(model, img, strict=False)
            # Sanity check: traced module and original model should print the same output.
            # ones_like keeps the tracing input's shape, dtype and device (CPU or GPU).
            test_img = torch.ones_like(img)
            output1 = ts(test_img)
            output2 = model(test_img)
            print(output1)
            print(output2)
            (optimize_for_mobile(ts) if optimize else ts).save(f)
            print(f'{prefix} export success, saved as {f} ({file_size(f):.1f} MB)')
        except Exception as e:
            print(f'{prefix} export failure: {e}')

    # # ONNX export ------------------------------------------------------------------------------------------------------
    # if 'onnx' in include:
    #     prefix = colorstr('ONNX:')
    #     try:
    #         import onnx
    #
    #         print(f'{prefix} starting export with onnx {onnx.__version__}...')
    #         f = weights.replace('.pt', '.onnx')  # filename
    #         torch.onnx.export(model, img, f, verbose=False, opset_version=opset_version,
    #                           training=torch.onnx.TrainingMode.TRAINING if train else torch.onnx.TrainingMode.EVAL,
    #                           do_constant_folding=not train,
    #                           input_names=['images'],
    #                           output_names=['output'],
    #                           dynamic_axes={'images': {0: 'batch', 2: 'height', 3: 'width'},  # shape(1,3,640,640)
    #                                         'output': {0: 'batch', 1: 'anchors'}  # shape(1,25200,85)
    #                                         } if dynamic else None)
    #
    #         # Checks
    #         model_onnx = onnx.load(f)  # load onnx model
    #         onnx.checker.check_model(model_onnx)  # check onnx model
    #         # print(onnx.helper.printable_graph(model_onnx.graph))  # print
    #
    #         # Simplify
    #         if simplify:
    #             try:
    #                 check_requirements(['onnx-simplifier'])
    #                 import onnxsim
    #
    #                 print(f'{prefix} simplifying with onnx-simplifier {onnxsim.__version__}...')
    #                 model_onnx, check = onnxsim.simplify(
    #                     model_onnx,
    #                     dynamic_input_shape=dynamic,
    #                     input_shapes={'images': list(img.shape)} if dynamic else None)
    #                 assert check, 'assert check failed'
    #                 onnx.save(model_onnx, f)
    #             except Exception as e:
    #                 print(f'{prefix} simplifier failure: {e}')
    #         print(f'{prefix} export success, saved as {f} ({file_size(f):.1f} MB)')
    #     except Exception as e:
    #         print(f'{prefix} export failure: {e}')
    #
    # # CoreML export ----------------------------------------------------------------------------------------------------
    # if 'coreml' in include:
    #     prefix = colorstr('CoreML:')
    #     try:
    #         import coremltools as ct
    #
    #         print(f'{prefix} starting export with coremltools {ct.__version__}...')
    #         assert train, 'CoreML exports should be placed in model.train() mode with `python export.py --train`'
    #         model = ct.convert(ts, inputs=[ct.ImageType('image', shape=img.shape, scale=1 / 255.0, bias=[0, 0, 0])])
    #         f = weights.replace('.pt', '.mlmodel')  # filename
    #         model.save(f)
    #         print(f'{prefix} export success, saved as {f} ({file_size(f):.1f} MB)')
    #     except Exception as e:
    #         print(f'{prefix} export failure: {e}')
    #
    # # Finish
    # print(f'\nExport complete ({time.time() - t:.2f}s). Visualize with https://github.com/lutzroeder/netron.')


def parse_opt():
    parser = argparse.ArgumentParser()
    parser.add_argument('--weights', type=str, default='./last6.9.pt', help='weights path')
    parser.add_argument('--img-size', nargs='+', type=int, default=[640, 640], help='image (height, width)')
    parser.add_argument('--batch-size', type=int, default=1, help='batch size')
    parser.add_argument('--device', default='cpu', help='cuda device, i.e. 0 or 0,1,2,3 or cpu')
    parser.add_argument('--include', nargs='+', default=['torchscript', 'onnx', 'coreml'], help='include formats')
    parser.add_argument('--half', action='store_true', help='FP16 half-precision export')
    parser.add_argument('--inplace', action='store_true', help='set YOLOv5 Detect() inplace=True')
    parser.add_argument('--train', action='store_true', help='model.train() mode')
    parser.add_argument('--optimize', action='store_true', help='TorchScript: optimize for mobile')
    parser.add_argument('--dynamic', action='store_true', help='ONNX: dynamic axes')
    parser.add_argument('--simplify', action='store_true', help='ONNX: simplify model')
    parser.add_argument('--opset-version', type=int, default=12, help='ONNX: opset version')
    opt = parser.parse_args()
    return opt


def main(opt):
    set_logging()
    print(colorstr('export: ') + ', '.join(f'{k}={v}' for k, v in vars(opt).items()))
    run(**vars(opt))


if __name__ == "__main__":
    opt = parse_opt()
    main(opt)
```

Deploying with Libtorch in Visual Studio 2019

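I used the code from this blog post.

The original uses yolov5x.torchscript.pt. I modified it to run on a single image only (my version loads yolov5s.torchscript.pt), but there are still some problems.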

```cpp
#include <opencv2/opencv.hpp>
#include <torch/script.h>
#include <torch/torch.h>

#include <algorithm>  // std::max / std::min
#include <cstdint>
#include <fstream>    // std::ifstream
#include <iostream>
#include <string>
#include <tuple>
#include <vector>

using namespace std;

// preds: [batch, num_boxes, 5 + num_classes]; each row = (cx, cy, w, h, objectness, class scores...)
// Returns one [N, 6] tensor per image: (left, top, right, bottom, score, class_id).
// Note: suppression is class-agnostic (boxes of different classes also suppress each other).
vector<torch::Tensor> non_max_suppression(torch::Tensor preds, float score_thresh = 0.25, float iou_thresh = 0.45)
{
    std::vector<torch::Tensor> output;
    for (int64_t i = 0; i < preds.sizes()[0]; ++i)
    {
        torch::Tensor pred = preds.select(0, i);
        // GPU inference results live on the CUDA device; move them to the CPU before NMS, otherwise it errors
        pred = pred.to(at::kCPU);

        // Filter by scores: score = objectness * best class probability.
        // select(dim, index) takes one index along a dimension; slice(dim, start, end) takes a range.
        torch::Tensor scores = pred.select(1, 4) * std::get<0>(torch::max(pred.slice(1, 5, pred.sizes()[1]), 1));
        // index_select keeps the chosen rows along dimension 0
        pred = torch::index_select(pred, 0, torch::nonzero(scores > score_thresh).select(1, 0));
        if (pred.sizes()[0] == 0) continue;

        // (center_x, center_y, w, h) to (left, top, right, bottom); assigning to a select() view writes into pred
        pred.select(1, 0) = pred.select(1, 0) - pred.select(1, 2) / 2;
        pred.select(1, 1) = pred.select(1, 1) - pred.select(1, 3) / 2;
        pred.select(1, 2) = pred.select(1, 0) + pred.select(1, 2);  // left is already updated
        pred.select(1, 3) = pred.select(1, 1) + pred.select(1, 3);  // top is already updated

        // Computing scores and classes: max over the class columns returns (values, indices)
        std::tuple<torch::Tensor, torch::Tensor> max_tuple = torch::max(pred.slice(1, 5, pred.sizes()[1]), 1);
        pred.select(1, 4) = pred.select(1, 4) * std::get<0>(max_tuple);
        pred.select(1, 5) = std::get<1>(max_tuple);

        torch::Tensor dets = pred.slice(1, 0, 6);
        torch::Tensor keep = torch::empty({ dets.sizes()[0] });
        torch::Tensor areas = (dets.select(1, 3) - dets.select(1, 1)) * (dets.select(1, 2) - dets.select(1, 0));
        // Sort by score, descending
        std::tuple<torch::Tensor, torch::Tensor> indexes_tuple = torch::sort(dets.select(1, 4), 0, 1);
        torch::Tensor v = std::get<0>(indexes_tuple);
        torch::Tensor indexes = std::get<1>(indexes_tuple);
        int count = 0;
        while (indexes.sizes()[0] > 0)
        {
            keep[count] = (indexes[0].item().toInt());
            count += 1;

            // Computing overlaps between the best remaining box and all the others
            torch::Tensor lefts = torch::empty(indexes.sizes()[0] - 1);
            torch::Tensor tops = torch::empty(indexes.sizes()[0] - 1);
            torch::Tensor rights = torch::empty(indexes.sizes()[0] - 1);
            torch::Tensor bottoms = torch::empty(indexes.sizes()[0] - 1);
            torch::Tensor widths = torch::empty(indexes.sizes()[0] - 1);
            torch::Tensor heights = torch::empty(indexes.sizes()[0] - 1);
            for (int64_t j = 0; j < indexes.sizes()[0] - 1; ++j)
            {
                lefts[j] = std::max(dets[indexes[0]][0].item().toFloat(), dets[indexes[j + 1]][0].item().toFloat());
                tops[j] = std::max(dets[indexes[0]][1].item().toFloat(), dets[indexes[j + 1]][1].item().toFloat());
                rights[j] = std::min(dets[indexes[0]][2].item().toFloat(), dets[indexes[j + 1]][2].item().toFloat());
                bottoms[j] = std::min(dets[indexes[0]][3].item().toFloat(), dets[indexes[j + 1]][3].item().toFloat());
                widths[j] = std::max(float(0), rights[j].item().toFloat() - lefts[j].item().toFloat());
                heights[j] = std::max(float(0), bottoms[j].item().toFloat() - tops[j].item().toFloat());
            }
            torch::Tensor overlaps = widths * heights;

            // Filter by IoUs: keep only boxes that overlap the kept box at most iou_thresh
            torch::Tensor ious = overlaps / (areas.select(0, indexes[0].item().toInt()) + torch::index_select(areas, 0, indexes.slice(0, 1, indexes.sizes()[0])) - overlaps);
            indexes = torch::index_select(indexes, 0, torch::nonzero(ious <= iou_thresh).select(1, 0) + 1);
        }
        keep = keep.toType(torch::kInt64);
        output.push_back(torch::index_select(dets, 0, keep.slice(0, 0, count)));
    }
    return output;
}

int main()
{
    std::cout << "cuda::is_available():" << torch::cuda::is_available() << std::endl;
    std::cout << "cudnn::is_available():" << torch::cuda::cudnn_is_available() << std::endl;
    torch::DeviceType device_type = at::kCPU;  // device type
    if (torch::cuda::is_available())
        device_type = at::kCUDA;

    // Loading Module (best.torchscript3.pt / yolov5x.torchscript.pt also tried)
    const std::string model_path = "E:\\Project2\\yolov5s.torchscript.pt";
    torch::jit::script::Module module;
    try
    {
        module = torch::jit::load(model_path);
    }
    catch (const c10::Error& e)
    {
        std::cerr << "error loading the model: " << e.what() << std::endl;
        return -1;
    }
    module.to(device_type);  // move the model to the GPU if available
    module.eval();

    std::vector<std::string> classnames;
    std::ifstream f("coco.names");  // relative to the working directory
    if (!f.is_open())
        std::cerr << "warning: coco.names not found, class names will be missing" << std::endl;
    std::string name = "";
    while (std::getline(f, name))  // one class name per line
    {
        classnames.push_back(name);
    }

    double t_start, t_end, t_cost;
    t_start = cv::getTickCount();

    std::string image_path = "E:\\Project2\\bicycle.jpg";
    cv::Mat frame, img;
    frame = cv::imread(image_path);
    if (frame.empty())
    {
        std::cerr << "cannot read image: " << image_path << std::endl;
        return -1;
    }
    int width = frame.size().width;
    int height = frame.size().height;
    int count = 0;

    // Preparing input tensor
    cv::resize(frame, img, cv::Size(640, 640));
    // Alternative: build a uint8 tensor and convert it in Libtorch
    // cv::cvtColor(img, img, cv::COLOR_BGR2RGB);
    // torch::Tensor imgTensor = torch::from_blob(img.data, {img.rows, img.cols, 3}, torch::kByte);
    // imgTensor = imgTensor.permute({2, 0, 1});
    // imgTensor = imgTensor.toType(torch::kFloat);
    // imgTensor = imgTensor.div(255);
    // imgTensor = imgTensor.unsqueeze(0);
    // imgTensor = imgTensor.to(device_type);
    cv::cvtColor(img, img, cv::COLOR_BGR2RGB);   // BGR -> RGB
    img.convertTo(img, CV_32FC3, 1.0f / 255.0f);  // normalization 1/255
    auto imgTensor = torch::from_blob(img.data, { 1, img.rows, img.cols, img.channels() }).to(device_type);
    imgTensor = imgTensor.permute({ 0, 3, 1, 2 }).contiguous();  // BHWC -> BCHW (Batch, Channel, Height, Width)
    std::vector<torch::jit::IValue> inputs;
    inputs.emplace_back(imgTensor);

    // preds: [batch, 25200, 5 + num_classes] for a 640x640 input
    torch::NoGradGuard no_grad;  // inference only: do not record autograd history
    torch::jit::IValue output = module.forward(inputs);
    auto preds = output.toTuple()->elements()[0].toTensor();
    std::vector<torch::Tensor> dets = non_max_suppression(preds, 0.35, 0.5);
    std::cout << "Detections:" << std::endl;
    for (const auto& d : dets)
        std::cout << d << std::endl;

    t_end = cv::getTickCount();
    t_cost = (t_end - t_start) / cv::getTickFrequency();
    std::cout << "time cost: " << t_cost << " s" << std::endl;

    if (!dets.empty())
    {
        // Visualize result: scale boxes from the 640x640 input back to the original image
        for (int64_t i = 0; i < dets[0].sizes()[0]; ++i)
        {
            float left = dets[0][i][0].item().toFloat() * frame.cols / 640;
            float top = dets[0][i][1].item().toFloat() * frame.rows / 640;
            float right = dets[0][i][2].item().toFloat() * frame.cols / 640;
            float bottom = dets[0][i][3].item().toFloat() * frame.rows / 640;
            float score = dets[0][i][4].item().toFloat();
            int classID = dets[0][i][5].item().toInt();
            cv::rectangle(frame, cv::Rect(left, top, (right - left), (bottom - top)), cv::Scalar(0, 255, 0), 2);
            // Guard the lookup: classnames is empty if coco.names was not found
            std::string label = (classID >= 0 && classID < static_cast<int>(classnames.size()))
                ? classnames[classID] : std::to_string(classID);
            cv::putText(frame, label + ": " + cv::format("%.2f", score), cv::Point(left, top),
                        cv::FONT_HERSHEY_SIMPLEX, (right - left) / 200, cv::Scalar(0, 255, 0), 2);
        }
    }

    std::cout << "-[INFO] Frame:" << std::to_string(count) << std::endl;
    cv::imshow("result", frame);
    // cv::imwrite("../images/" + cv::format("%06d", count) + ".jpg", frame);
    cv::resize(frame, frame, cv::Size(width, height));
    cv::waitKey(0);
    return 0;
}
```
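The non_max_suppression function above computes score = objectness × best class probability, drops rows below score_thresh, converts (cx, cy, w, h) to corners, sorts by score, and then greedily keeps the top box while discarding the remaining boxes whose IoU with it exceeds iou_thresh. It ignores the class column when suppressing, so overlapping boxes of different classes also suppress each other, whereas YOLOv5's Python NMS is per-class by default. The same greedy procedure can be sketched in plain C++ without Libtorch, which makes it easy to test in isolation. This is a minimal sketch that assumes boxes are already in (left, top, right, bottom) form; Box, iou and nms are hypothetical names, not part of any library.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical box type: corner coordinates plus a confidence score.
struct Box {
    float left, top, right, bottom, score;
};

// Intersection-over-union of two corner-format boxes.
float iou(const Box& a, const Box& b) {
    const float w = std::max(0.0f, std::min(a.right, b.right) - std::max(a.left, b.left));
    const float h = std::max(0.0f, std::min(a.bottom, b.bottom) - std::max(a.top, b.top));
    const float inter = w * h;
    const float area_a = (a.right - a.left) * (a.bottom - a.top);
    const float area_b = (b.right - b.left) * (b.bottom - b.top);
    const float uni = area_a + area_b - inter;
    return uni > 0.0f ? inter / uni : 0.0f;
}

// Greedy, class-agnostic NMS: returns indices of kept boxes, highest score first.
std::vector<std::size_t> nms(const std::vector<Box>& boxes, float score_thresh, float iou_thresh) {
    assert(iou_thresh >= 0.0f && iou_thresh <= 1.0f);

    // Candidates that pass the score threshold.
    std::vector<std::size_t> order;
    for (std::size_t i = 0; i < boxes.size(); ++i)
        if (boxes[i].score > score_thresh) order.push_back(i);

    // Sort candidates by descending score.
    std::sort(order.begin(), order.end(),
              [&](std::size_t a, std::size_t b) { return boxes[a].score > boxes[b].score; });

    std::vector<std::size_t> keep;
    std::vector<bool> suppressed(boxes.size(), false);
    for (std::size_t i = 0; i < order.size(); ++i) {
        const std::size_t cur = order[i];
        if (suppressed[cur]) continue;
        keep.push_back(cur);
        // Suppress lower-scored boxes that overlap the kept box by more than iou_thresh.
        for (std::size_t j = i + 1; j < order.size(); ++j)
            if (iou(boxes[cur], boxes[order[j]]) > iou_thresh) suppressed[order[j]] = true;
    }
    return keep;
}
```

The nested loop is O(n²), which is fine for the few hundred boxes that survive score filtering. To make it per-class, YOLOv5's Python code offsets every box by class_id times a large constant before calling a class-agnostic NMS, so boxes of different classes can never overlap; the same trick would work here.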

(1) This part of the code kept raising an error, and I did not manage to fix it:

```cpp
/* cv::putText(frame,
               classnames[classID] + ": " + cv::format("%.2f", score),
               cv::Point(left, top),
               cv::FONT_HERSHEY_SIMPLEX, (right - left) / 200, cv::Scalar(0, 255, 0), 2); */
```
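The error message is not shown, so this is only a guess: std::ifstream f("coco.names") is resolved relative to the working directory (in Visual Studio, the project directory by default, not the folder containing the .exe). If the file is not found, classnames stays empty and classnames[classID] reads out of range, which crashes at the putText call; the same happens if the model predicts more classes than the names file lists. A minimal defensive sketch using only the standard library; load_class_names and make_label are hypothetical helpers.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdio>
#include <fstream>
#include <iostream>
#include <string>
#include <vector>

// Hypothetical helper: read one class name per line; warn if the file is missing.
std::vector<std::string> load_class_names(const std::string& path) {
    std::vector<std::string> names;
    std::ifstream in(path);
    if (!in.is_open()) {
        // Relative paths are resolved against the working directory.
        std::cerr << "warning: cannot open class-name file " << path << std::endl;
        return names;
    }
    std::string line;
    while (std::getline(in, line)) {
        // Strip a trailing '\r' in case CRLF line endings were not translated.
        if (!line.empty() && line.back() == '\r') line.pop_back();
        names.push_back(line);
    }
    return names;
}

// Hypothetical helper: "name: score" text, falling back to the numeric ID
// when class_id is outside the loaded name list.
std::string make_label(const std::vector<std::string>& names, int class_id, float score) {
    char buf[32];
    std::snprintf(buf, sizeof(buf), "%.2f", score);
    const bool known = class_id >= 0 && static_cast<std::size_t>(class_id) < names.size();
    const std::string name = known ? names[static_cast<std::size_t>(class_id)]
                                   : "class " + std::to_string(class_id);
    return name + ": " + buf;
}
```

Inside the drawing loop, the putText call would then take make_label(classnames, classID, score) as its text argument.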

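(2) I also changed this part of his code, because it kept throwing a c10::Error. He passed the weights path to the load call directly; I first store the path in a variable and then load it. (In standard C++ a string literal cannot initialize a plain char*, so the variable should be const char* or std::string.)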

```cpp
const char* model_path = "E:\\Project2\\yolov5s.torchscript.pt";
torch::jit::script::Module module = torch::jit::load(model_path);  // best.torchscript3.pt // yolov5x.torchscript.pt
```
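torch::jit::load throws a c10::Error when it cannot open or deserialize the file, for example because the path is wrong or the model was exported with a newer PyTorch than the Libtorch in use. Checking the file first separates those two cases. A minimal sketch; model_file_ok is a hypothetical helper, and it only checks that the file can be opened and is non-empty, not that it is a valid TorchScript archive.

```cpp
#include <cassert>
#include <fstream>
#include <ios>
#include <iostream>
#include <string>

// Hypothetical helper: true if the file exists, can be opened, and is non-empty.
bool model_file_ok(const std::string& path) {
    std::ifstream in(path, std::ios::binary | std::ios::ate);  // open positioned at the end
    if (!in.is_open()) {
        std::cerr << "cannot open model file: " << path << std::endl;
        return false;
    }
    if (static_cast<std::streamoff>(in.tellg()) <= 0) {  // position at end == file size
        std::cerr << "model file is empty: " << path << std::endl;
        return false;
    }
    return true;
}
```

In main, it would be called before loading (if (!model_file_ok(model_path)) return -1;), with torch::jit::load still wrapped in try { ... } catch (const c10::Error& e) { ... } as in the PyTorch C++ loading tutorial, so that version mismatches are reported instead of aborting the program.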

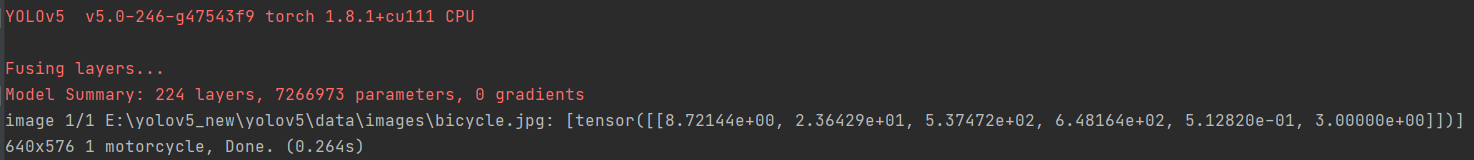

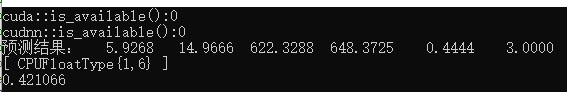

Results

Because I never fixed the cv::putText error, this is all I have: bounding boxes without class labels.

Comparing PyTorch and Libtorch on the CPU

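Libtorch CPU inference: 421 ms. (I could not actually get the GPU working and will deal with that later; I gave up on it for now! The results also look a bit off, but never mind.) A commonly reported cause of torch::cuda::is_available() returning false with Libtorch 1.8 on Windows is that the linker drops torch_cuda.dll because no symbol from it is referenced directly; adding /INCLUDE:?warp_size@cuda@at@@YAHXZ under Linker > Command Line is the usual workaround.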
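One possible reason the results look off (an assumption, not verified here): the C++ code stretches the image to 640×640 with cv::resize, while YOLOv5's detect.py uses letterbox resizing, which keeps the aspect ratio and pads the rest with gray (114). The sketch below computes the letterbox scale and padding for a fixed square input and maps coordinates from the network input back to the original image. Letterbox, compute_letterbox and the unletterbox helpers are hypothetical names, and it always pads to the full square, whereas YOLOv5's own letterbox can also pad only up to a stride multiple.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Hypothetical letterbox parameters: one uniform scale plus padding.
struct Letterbox {
    float scale;  // resize factor applied to both width and height
    int pad_x;    // left padding in pixels
    int pad_y;    // top padding in pixels
    int new_w;    // resized width before padding
    int new_h;    // resized height before padding
};

// Fit a src_w x src_h image into a dst x dst square without distortion,
// centered, with the remaining border left for padding.
Letterbox compute_letterbox(int src_w, int src_h, int dst) {
    assert(src_w > 0 && src_h > 0 && dst > 0);
    const float scale = std::min(static_cast<float>(dst) / src_w, static_cast<float>(dst) / src_h);
    const int new_w = static_cast<int>(std::round(src_w * scale));
    const int new_h = static_cast<int>(std::round(src_h * scale));
    return {scale, (dst - new_w) / 2, (dst - new_h) / 2, new_w, new_h};
}

// Map coordinates from network-input space back to the original image.
float unletterbox_x(const Letterbox& lb, float x) { return (x - lb.pad_x) / lb.scale; }
float unletterbox_y(const Letterbox& lb, float y) { return (y - lb.pad_y) / lb.scale; }
```

On the OpenCV side this corresponds to cv::resize to (new_w, new_h) followed by cv::copyMakeBorder with cv::BORDER_CONSTANT and cv::Scalar(114, 114, 114), padding pad_y on top, pad_x on the left, and the remainder on the bottom and right. Detected boxes are then mapped back with the two helpers instead of being multiplied by frame.cols / 640 and frame.rows / 640.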

PyTorch CPU inference: 264 ms. (I have heard that C++ being slower than PyTorch on the CPU is normal?)
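The two numbers probably do not measure the same thing. In the C++ program the timer starts before cv::imread, covers a single, first call to module.forward (the first calls of a TorchScript module are generally slower while the JIT profiles and optimizes the graph), and also includes the NMS, whose inner loop makes many small .item() calls. A fairer comparison warms up first and then averages several runs of exactly the same step. A minimal sketch using std::chrono; average_ms is a hypothetical helper.

```cpp
#include <cassert>
#include <chrono>
#include <functional>

// Hypothetical helper: run fn `warmup` times untimed, then return the mean
// wall-clock time of `iters` timed runs in milliseconds.
double average_ms(const std::function<void()>& fn, int warmup, int iters) {
    assert(iters > 0 && warmup >= 0);
    for (int i = 0; i < warmup; ++i) fn();  // warm-up runs (JIT optimization, caches, allocator)
    const auto start = std::chrono::steady_clock::now();
    for (int i = 0; i < iters; ++i) fn();
    const auto end = std::chrono::steady_clock::now();
    const std::chrono::duration<double, std::milli> total = end - start;
    return total.count() / iters;
}
```

For the model this would be used as torch::NoGradGuard no_grad; double ms = average_ms([&] { module.forward(inputs); }, 3, 20);, with the Python side timed the same way (warm-up, several runs, under torch.no_grad()).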