Click here for the complete project code. If it helps your learning, a star would be appreciated, thanks~

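I. The AlexNet Network Model

AlexNet is another classic model that came after LeNet-5. It was proposed by Alex Krizhevsky et al. of the University of Toronto in the paper "ImageNet Classification with Deep Convolutional Neural Networks", and it won the 2012 ImageNet competition (ILSVRC). For a while it was hugely popular.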

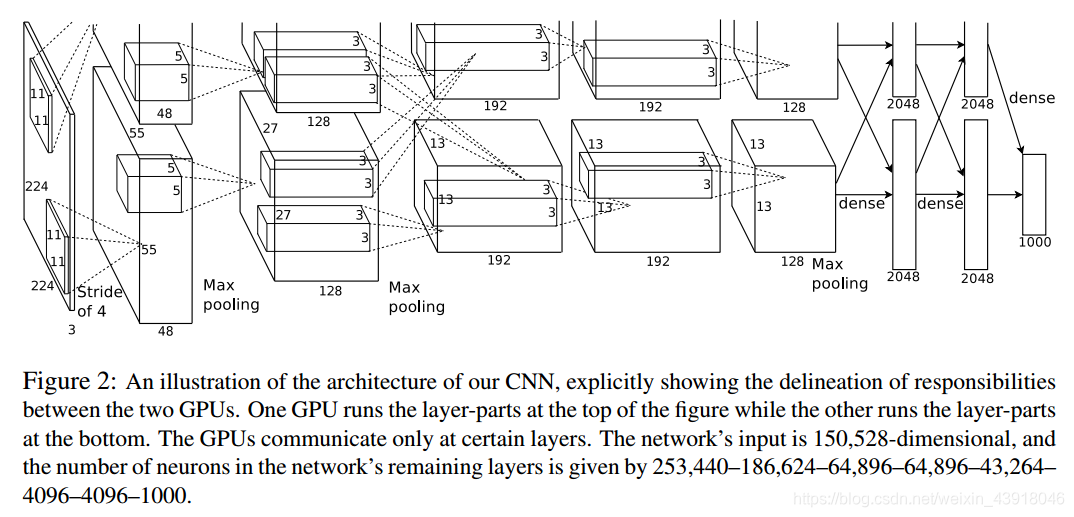

下图为AlexNet网络模型,来源于原始论文:

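Strictly speaking, the original AlexNet was split across two GPUs, which communicate only in certain layers. In practice, we can follow the authors' description of the model and run the whole network on a single GPU, and the results are still very good.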
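The two-GPU layout amounts to a grouped convolution. According to the paper, the kernels of layers 2, 4 and 5 connect only to the kernel maps of the previous layer that reside on the same GPU. Layer 3 and the fully connected layers see everything. The pure-Python sketch below counts weights plus biases with and without that split; the helper `conv_params` is ours, for illustration only. The split roughly halves the parameters of those layers. Recent versions of Keras provide a `groups` argument on `Conv2D` for the same connectivity on one device. The single-GPU model in this post does not use it.

```python
def conv_params(k, c_in, c_out, groups=1):
    """Weights + biases of a k x k convolution whose channels are split into `groups` groups.

    Each group sees only c_in / groups input channels, so the kernel tensor
    shrinks by a factor of `groups`; the number of biases stays c_out.
    """
    assert c_in % groups == 0 and c_out % groups == 0
    return k * k * (c_in // groups) * c_out + c_out


# (kernel size, input channels, output channels) of the layers the paper splits per GPU
SPLIT_LAYERS = {"conv2": (5, 96, 256), "conv4": (3, 384, 384), "conv5": (3, 384, 256)}

for name, (k, c_in, c_out) in SPLIT_LAYERS.items():
    single = conv_params(k, c_in, c_out)
    two_gpu = conv_params(k, c_in, c_out, groups=2)
    print(f"{name}: one GPU {single:,} params, two-GPU split {two_gpu:,} params")
```

For layer 2, for example, this gives 614,656 parameters on a single GPU versus 307,456 with the two-GPU split.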

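Note that LeNet-5, as described earlier, targets single-channel grayscale images, whereas AlexNet was designed from the start for three-channel (color) images. You can still change the model's input shape to train AlexNet on single-channel grayscale images. Likewise, LeNet-5 can be trained on three-channel images: its authors simply targeted grayscale images, and changing the input shape is all that is needed.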
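Single-channel images can be fed to this model in two common ways. The first is to add a channel axis and build the model with `input_shape=(227, 227, 1)`. The second is to repeat the gray channel three times and keep `input_shape=(227, 227, 3)`. The NumPy sketch below shows both on a made-up random batch, which stands in for real data. The hypothetical helper `first_layer_params` shows how the choice affects the size of layer 1.

```python
import numpy as np

# Made-up batch of 8 grayscale images, shape (N, H, W), standing in for real data
rng = np.random.default_rng(0)
gray = rng.random((8, 227, 227), dtype=np.float32)

# Option 1: add a channel axis and build the model with input_shape=(227, 227, 1)
gray_1ch = gray[..., np.newaxis]            # (8, 227, 227, 1)

# Option 2: copy the gray channel three times and keep input_shape=(227, 227, 3)
gray_3ch = np.repeat(gray_1ch, 3, axis=-1)  # (8, 227, 227, 3)


def first_layer_params(channels):
    """Parameters of the first Conv2D (96 kernels of 11x11) for a given input depth."""
    return 11 * 11 * channels * 96 + 96


print(gray_1ch.shape, gray_3ch.shape)
print(first_layer_params(1), first_layer_params(3))  # 11712 34944
```

Option 1 shrinks layer 1 from 34,944 to 11,712 parameters. Option 2 leaves the architecture unchanged, at the cost of three times the input memory.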

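The parameters of each layer are described in detail below:

Layer 1 is a convolutional layer with 96 kernels; in the original paper each of the two GPUs computes 48 of them. The kernels are 11×11 with a stride of 4×4, and the border is not padded (padding = valid). In the paper the output then goes through LRN, and a max-pooling layer with 3×3 windows and a stride of 2×2 follows. The input image size is 227×227×3.

Layer 2 is a convolutional layer with 256 kernels (128 per GPU in the original paper). The kernel size is 5×5, with padding = 2 and a stride of 1×1. It is followed by LRN (local response normalization) and then by a max-pooling layer with 3×3 windows and a stride of 2×2.

Layer 3 is a convolutional layer with 384 kernels of size 3×3, a stride of 1×1 and padding = 1. It has neither LRN nor pooling.

Layer 4 is a convolutional layer with 384 kernels of size 3×3, a stride of 1×1 and padding = 1. It also has neither LRN nor pooling.

Layer 5 is a convolutional layer with 256 kernels of size 3×3, a stride of 1×1 and padding = 1. It is followed by a max-pooling layer with 3×3 windows and a stride of 2.

Layers 6 and 7 are fully connected layers with 4096 neurons each. The paper applies dropout with probability 0.5 to these two layers.

Layer 8 is the output layer. It has one neuron per class (1000 for ImageNet in the paper) and uses softmax to map the outputs to class probabilities.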
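The feature-map sizes implied by these settings follow from the usual formula floor((W − K + 2P) / S) + 1. The sketch below traces a 227×227 input through the five convolutional and three pooling layers; the helper names `out_size` and `trace` are ours. It also suggests why 227 is used here. The paper's text states a 224×224×3 input, but (227 − 11) / 4 + 1 = 55 works out exactly. By contrast, 224 does not divide evenly and gives 54 after flooring.

```python
def out_size(w, k, s, p=0):
    """Output side length of a conv/pool layer: floor((W - K + 2P) / S) + 1."""
    return (w - k + 2 * p) // s + 1


# (layer, kernel size, stride, padding, output channels), as described above
LAYERS = [
    ("conv1", 11, 4, 0, 96),
    ("pool1", 3, 2, 0, 96),
    ("conv2", 5, 1, 2, 256),
    ("pool2", 3, 2, 0, 256),
    ("conv3", 3, 1, 1, 384),
    ("conv4", 3, 1, 1, 384),
    ("conv5", 3, 1, 1, 256),
    ("pool5", 3, 2, 0, 256),
]


def trace(side, layers):
    """Return (layer, side, channels) after each layer for a square input."""
    sizes = []
    for name, k, s, p, c in layers:
        side = out_size(side, k, s, p)
        sizes.append((name, side, c))
    return sizes


for name, side, c in trace(227, LAYERS):
    print(f"{name}: {side} x {side} x {c}")
_, side, c = trace(227, LAYERS)[-1]
print("flatten:", side * side * c)  # input size of the first 4096-unit layer
```

The feature maps shrink 55 → 27 → 27 → 13 → 13 → 13 → 13 → 6. The final 6 × 6 × 256 = 9216 values are the input to layer 6.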

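All hidden layers of the model use the ReLU activation function.

Common activation functions include Sigmoid, tanh (hyperbolic tangent), ReLU (rectified linear unit) and Leaky ReLU.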
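For reference, the four activation functions listed above can be written in a few lines of NumPy. The `alpha=0.01` slope for Leaky ReLU is an assumed, commonly used value; frameworks choose their own defaults.

```python
import numpy as np


def sigmoid(x):
    """Squashes inputs into (0, 1)."""
    return 1.0 / (1.0 + np.exp(-x))


def tanh(x):
    """Hyperbolic tangent: squashes inputs into (-1, 1)."""
    return np.tanh(x)


def relu(x):
    """Rectified linear unit: max(0, x)."""
    return np.maximum(0.0, x)


def leaky_relu(x, alpha=0.01):
    """Like ReLU, but keeps a small slope alpha for negative inputs (0.01 assumed here)."""
    return np.where(x > 0, x, alpha * x)


x = np.array([-2.0, 0.0, 3.0])
for f in (sigmoid, tanh, relu, leaky_relu):
    print(f.__name__, f(x))
```

ReLU is cheap to compute and does not saturate for positive inputs. Leaky ReLU additionally keeps a small gradient for negative inputs.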

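II. Implementing AlexNet in Keras

The model can be built directly from the layer descriptions above. Unlike the original paper, this version adds a BatchNormalization layer after every convolutional and hidden fully connected layer, plus Dropout(0.5) at the end of each of these blocks. The paper itself uses dropout only in the two 4096-unit layers.

PS: In the paper, LRN (local response normalization) is applied after the first two convolutional layers. The VGG authors later showed through repeated experiments that it does not improve performance on ImageNet while increasing memory use and computation time, so it is dropped here.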
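For reference, the LRN that is dropped here divides each activation by the summed squares of its neighbours across n adjacent channels at the same position. The NumPy sketch below follows the formula in the AlexNet paper with its hyperparameters k = 2, n = 5, α = 10⁻⁴ and β = 0.75; the function name `lrn` is ours. TensorFlow's `tf.nn.local_response_normalization` implements the same idea, with its `depth_radius` corresponding to n // 2.

```python
import numpy as np


def lrn(a, k=2.0, n=5, alpha=1e-4, beta=0.75):
    """Across-channel local response normalization as written in the AlexNet paper.

    a has shape (H, W, C). Each activation a[..., i] is divided by
    (k + alpha * sum of a[..., j]**2 over the n channels centred on i) ** beta,
    with the window clipped at the first and last channel.
    """
    a = np.asarray(a, dtype=np.float64)
    num_channels = a.shape[-1]
    squared = a ** 2
    out = np.empty_like(a)
    for i in range(num_channels):
        lo = max(0, i - n // 2)
        hi = min(num_channels - 1, i + n // 2)
        denom = (k + alpha * squared[..., lo:hi + 1].sum(axis=-1)) ** beta
        out[..., i] = a[..., i] / denom
    return out


feature_map = np.random.default_rng(0).random((13, 13, 256))
print(lrn(feature_map).shape)  # (13, 13, 256)
```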

```python
from keras.layers import BatchNormalization, Conv2D, Dense, Dropout, Flatten, MaxPooling2D
from keras.models import Sequential

input_shape = (227, 227, 3)  # 3-channel image data
num_class = 10  # number of classes

model = Sequential([
    # Layer 1: input images are 227x227x3
    Conv2D(96, (11, 11), padding='valid', strides=(4, 4), activation='relu', input_shape=input_shape),
    BatchNormalization(),  # batch normalization to help optimization
    # followed directly by a pooling layer
    MaxPooling2D(pool_size=(3, 3), strides=(2, 2)),
    Dropout(0.5),  # dropout to reduce overfitting

    # Layer 2 ('same' padding with a 5x5 kernel and stride 1 equals padding = 2)
    Conv2D(256, (5, 5), padding='same', strides=(1, 1), activation='relu'),
    BatchNormalization(),
    # followed directly by a pooling layer
    MaxPooling2D(pool_size=(3, 3), strides=(2, 2)),
    Dropout(0.5),

    # Layer 3
    Conv2D(384, (3, 3), padding='same', strides=(1, 1), activation='relu'),
    BatchNormalization(),
    Dropout(0.5),

    # Layer 4
    Conv2D(384, (3, 3), padding='same', strides=(1, 1), activation='relu'),
    BatchNormalization(),
    Dropout(0.5),

    # Layer 5
    Conv2D(256, (3, 3), padding='same', strides=(1, 1), activation='relu'),
    BatchNormalization(),
    # followed directly by a pooling layer
    MaxPooling2D(pool_size=(3, 3), strides=(2, 2)),
    Dropout(0.5),

    # Flatten the 2D feature maps into a 1D vector for the fully connected layers
    Flatten(),

    # Layer 6: fully connected, 4096 units
    Dense(4096, activation='relu'),
    BatchNormalization(),
    Dropout(0.5),

    # Layer 7: fully connected, 4096 units
    Dense(4096, activation='relu'),
    BatchNormalization(),
    Dropout(0.5),

    # Layer 8: output layer with softmax activation
    Dense(num_class, activation='softmax'),
])

model.summary()  # print the model structure
```

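The printed model summary is shown below. Note that its spatial sizes (35×35 after the first convolution, 2304 values after Flatten) do not match input_shape=(227,227,3). A 227×227×3 input gives 55×55, 27×27, 13×13 and 6×6 feature maps and 9216 values after Flatten. The summary therefore appears to have been generated with a smaller input, and the dense_1 and total parameter counts differ accordingly.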

Model: "sequential_1"

| Layer (type) | Output Shape | Param # |
|---|---|---|
| conv2d_1 (Conv2D) | (None, 35, 35, 96) | 34944 |
| batch_normalization_1 (BatchNormalization) | (None, 35, 35, 96) | 384 |
| max_pooling2d_1 (MaxPooling2D) | (None, 17, 17, 96) | 0 |
| dropout_1 (Dropout) | (None, 17, 17, 96) | 0 |
| conv2d_2 (Conv2D) | (None, 17, 17, 256) | 614656 |
| batch_normalization_2 (BatchNormalization) | (None, 17, 17, 256) | 1024 |
| max_pooling2d_2 (MaxPooling2D) | (None, 8, 8, 256) | 0 |
| dropout_2 (Dropout) | (None, 8, 8, 256) | 0 |
| conv2d_3 (Conv2D) | (None, 8, 8, 384) | 885120 |
| batch_normalization_3 (BatchNormalization) | (None, 8, 8, 384) | 1536 |
| dropout_3 (Dropout) | (None, 8, 8, 384) | 0 |
| conv2d_4 (Conv2D) | (None, 8, 8, 384) | 1327488 |
| batch_normalization_4 (BatchNormalization) | (None, 8, 8, 384) | 1536 |
| dropout_4 (Dropout) | (None, 8, 8, 384) | 0 |
| conv2d_5 (Conv2D) | (None, 8, 8, 256) | 884992 |
| batch_normalization_5 (BatchNormalization) | (None, 8, 8, 256) | 1024 |
| max_pooling2d_3 (MaxPooling2D) | (None, 3, 3, 256) | 0 |
| dropout_5 (Dropout) | (None, 3, 3, 256) | 0 |
| flatten_1 (Flatten) | (None, 2304) | 0 |
| dense_1 (Dense) | (None, 4096) | 9441280 |
| batch_normalization_6 (BatchNormalization) | (None, 4096) | 16384 |
| dropout_6 (Dropout) | (None, 4096) | 0 |
| dense_2 (Dense) | (None, 4096) | 16781312 |
| batch_normalization_7 (BatchNormalization) | (None, 4096) | 16384 |
| dropout_7 (Dropout) | (None, 4096) | 0 |
| dense_3 (Dense) | (None, 10) | 40970 |

Total params: 30,049,034

Trainable params: 30,029,898

Non-trainable params: 19,136
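To see which input size these numbers belong to, the parameter count can be reproduced by hand. The pure-Python sketch below mirrors the Sequential model above. `keras_alexnet_params` is a hypothetical helper, not a Keras API, and it assumes a square input. Each BatchNormalization layer holds four values per channel, of which the two moving statistics are non-trainable. Dropout, pooling and Flatten add no weights.

```python
def out_size(w, k, s, p=0):
    """Output side length of a conv/pool layer: floor((W - K + 2P) / S) + 1."""
    return (w - k + 2 * p) // s + 1


def keras_alexnet_params(side, num_class, channels=3):
    """Return (total, non_trainable) parameters of the Sequential model above.

    Assumes a square side x side input and the same layer list: every Conv2D and
    hidden Dense layer is followed by BatchNormalization (Dropout adds no weights).
    """
    total = non_trainable = 0
    c, w = channels, side
    # (filters, kernel, stride, padding, followed by 3x3/2 max pooling?)
    convs = [(96, 11, 4, 0, True), (256, 5, 1, 2, True),
             (384, 3, 1, 1, False), (384, 3, 1, 1, False), (256, 3, 1, 1, True)]
    for f, k, s, p, pool in convs:
        total += k * k * c * f + f  # Conv2D kernel + bias
        total += 4 * f              # BatchNormalization: gamma, beta, moving mean, moving variance
        non_trainable += 2 * f      # the moving statistics are not trained
        c, w = f, out_size(w, k, s, p)
        if pool:
            w = out_size(w, 3, 2)   # MaxPooling2D(3x3, stride 2), 'valid' padding
    units = w * w * c               # size of the Flatten output
    for f in (4096, 4096):          # two hidden Dense layers, each followed by BatchNormalization
        total += units * f + f + 4 * f
        non_trainable += 2 * f
        units = f
    total += units * num_class + num_class  # softmax output layer
    return total, non_trainable


print(keras_alexnet_params(147, 10))  # (30049034, 19136)
print(keras_alexnet_params(227, 10))  # (58360586, 19136)
```

Any input side from 147 to 150 reproduces the printed totals. With the 227×227×3 input set in the code, the same model has 58,360,586 parameters. Almost all of the increase comes from dense_1, which then has 9216 × 4096 weights instead of 2304 × 4096.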

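III. Training AlexNet on the GTSRB Traffic-Sign Dataset

About the dataset: GTSRB (German Traffic Sign Recognition Benchmark) is a German traffic-sign dataset. Its images are also three-channel color images, and the dataset is large and varied, which makes it well suited to training a model. Download link: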

https://sid.erda.dk/public/archives/daaeac0d7ce1152aea9b61d9f1e19370/published-archive.html

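Find the corresponding archive on that page and click to download it.

There are 43 classes in total, and each subfolder contains the images of one kind of traffic sign.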
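The training code loads every image into memory at 227×227×3, which needs a lot of RAM. A first step toward loading images batch by batch is to collect only file paths and labels. The sketch below assumes the folder layout described above: one subfolder per class, named by its numeric id such as `00000`. It also assumes each folder contains an annotation file whose name starts with `GT`, which the training code likewise skips. The function name `list_gtsrb` is hypothetical.

```python
import os


def list_gtsrb(root):
    """Return (image_path, class_id) pairs for a GTSRB-style training folder.

    Assumes one subfolder per class named by its numeric id (e.g. '00000'),
    each holding the images plus an annotation file whose name starts with 'GT'.
    """
    samples = []
    for class_dir in sorted(os.listdir(root)):
        class_path = os.path.join(root, class_dir)
        if not os.path.isdir(class_path):
            continue  # ignore stray files next to the class folders
        label = int(class_dir)  # folder name -> class id
        for name in sorted(os.listdir(class_path)):
            if name.startswith("GT"):
                continue  # skip the per-class CSV annotation file
            samples.append((os.path.join(class_path, name), label))
    return samples


if __name__ == "__main__":
    root = "Dataset/GTSRB"  # same path as in the training code
    if os.path.isdir(root):
        samples = list_gtsrb(root)
        print(len(samples), "images in", len({label for _, label in samples}), "classes")
```

The resulting list of (path, label) pairs can be split with `train_test_split` before any image is decoded. Each batch can then be read and resized only when it is needed.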

AlexNet training code:

from unicodedata import normalize

import numpy as np

import matplotlib.pyplot as plt

import pandas as pd

from collections import Counter

from sklearn.preprocessing import MinMaxScaler

from sklearn.model_selection import train_test_split

from sklearn import preprocessing

from sklearn.metrics import accuracy_score

from keras.models import Sequential

from keras.layers import Dense, Dropout, Flatten

from keras.layers.convolutional import Conv2D, MaxPooling2D

import keras

from keras.layers import Dense,MaxPool2D,Conv2D,Flatten,Dropout,BatchNormalization,Activation,Add,\

Input,ZeroPadding2D,MaxPooling2D,AveragePooling2D

from keras.initializers import glorot_uniform

from keras.models import Sequential,Model

from keras.optimizers import Adam

import os

from tqdm import tqdm

from input_data import load_cifar10#导入加载cifat数据的函数

import cv2

X_data = []

Y_data = []

input_shape=(227,227,3)#3通道图像数据

num_class = 43#数据类别数目

path='Dataset/GTSRB'

for file in tqdm(os.listdir(path)):

lab=int(file)

for photo_file in os.listdir(path+'/'+file):

if photo_file[0]=='G':

continue

photo_file_path=path+'/'+file+'/'+photo_file

img = cv2.imread(photo_file_path,1)

img = cv2.resize(img,(input_shape[0],input_shape[1]))

X_data.append(img)

Y_data.append(lab)

print(len(X_data))

print(Counter(Y_data))

X_data=np.array(X_data)

X_data=X_data/255.0

Y_data=np.array(Y_data)

# 对训练集进行切割,然后进行训练

train_x,test_x,train_y,test_y = train_test_split(X_data,Y_data,test_size=0.2)

lb=preprocessing.LabelBinarizer().fit(np.array(range(num_class)))#对标签进行ont_hot编码

train_y=lb.transform(train_y)#因为是多分类任务,必须进行编码处理

test_y=lb.transform(test_y)

model = Sequential([

#第一层

Conv2D(96,(11,11),padding='valid',strides=(4,4),activation='relu',input_shape=input_shape),#输入图像尺寸为32x32

BatchNormalization(),#加入批标准化优化模型

#后面直接跟着池化层

MaxPooling2D(pool_size=(3,3),strides=(2,2)),

Dropout(0.5),#加入dropout防止过拟合

#第二层

Conv2D(256,(5,5),padding='same',strides=(1,1),activation='relu'),

BatchNormalization(),

#后面直接跟着池化层

MaxPooling2D(pool_size=(3,3),strides=(2,2)),

Dropout(0.5),#加入dropout防止过拟合

#第三层

Conv2D(384,(3,3),padding='same',strides=(1,1),activation='relu'),

BatchNormalization(),

Dropout(0.5),#加入dropout防止过拟合

#第四层

Conv2D(384,(3,3),padding='same',strides=(1,1),activation='relu'),

BatchNormalization(),

Dropout(0.5),#加入dropout防止过拟合

#第五层

Conv2D(256,(3,3),padding='same',strides=(1,1),activation='relu'),

BatchNormalization(),

#后面直接跟着池化层

MaxPooling2D(pool_size=(3,3),strides=(2,2)),

Dropout(0.5),#加入dropout防止过拟合

#展开所有二维数据矩阵为一维数据核全连接层对接

Flatten(),

#第六层为全连接层,4096个节点

Dense(4096,activation='relu'),

BatchNormalization(),

Dropout(0.5),#加入dropout防止过拟合

#第七层为全连接层,4096个节点;

Dense(4096,activation='relu'),

BatchNormalization(),

Dropout(0.5),#加入dropout防止过拟合

#第八层为输出层,激活函数为softmax。

Dense(num_class,activation='softmax')

])

model.summary()#显示模型结构

#编译模型,定义损失函数loss,采用的优化器optimizer为Adam

model.compile(optimizer='adam',loss='categorical_crossentropy',metrics=['accuracy'])

#开始训练模型

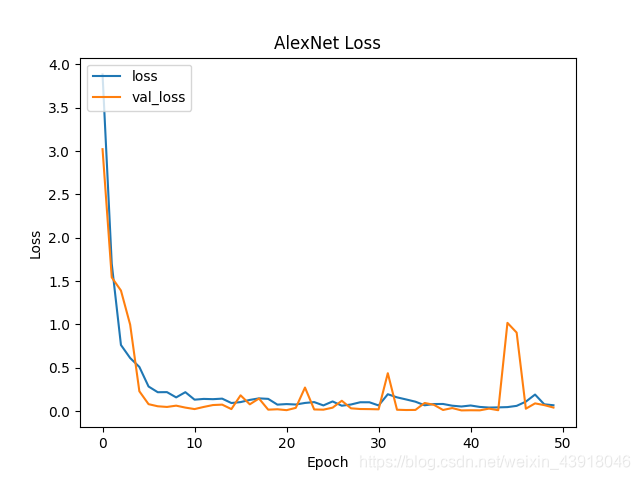

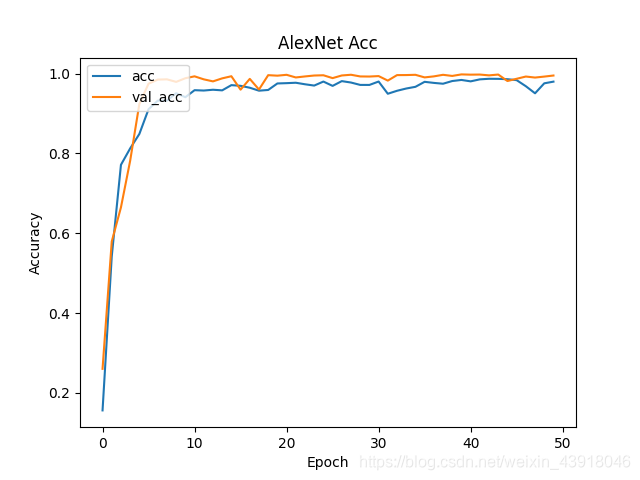

history=model.fit(train_x,train_y,batch_size = 128,epochs=50,validation_data=(test_x, test_y))#训练1000个批次,每个批次数据量为126

#绘制训练过程的acc和loss

plt.plot(history.history['accuracy'])

plt.plot(history.history['val_accuracy'])

plt.legend(['acc', 'val_acc'], loc='upper left')

plt.title('AlexNet Acc')

plt.xlabel("Epoch")#横坐标名

plt.ylabel("Accuracy")#纵坐标名

plt.show()

#图像保存方法

plt.savefig('AlexNet acc.png')

plt.figure()

plt.plot(history.history['loss'])

plt.plot(history.history['val_loss'])

plt.legend(['loss', 'val_loss'], loc='upper left')

plt.title('AlexNet Loss')

plt.xlabel("Epoch")#横坐标名

plt.ylabel("Loss")#纵坐标名

plt.show()

#图像保存方法

plt.savefig('AlexNet loss.png')

Training results:

I hope this post helps with your learning. If you find any problems, please point them out. Thanks~