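Lecture 5: Handling Multi-Dimensional Feature Input (Multiple Dimension Input)

PyTorch study video, Bilibili link: 《PyTorch深度学习实践》完结合集_哔哩哔哩_bilibili

Below are my notes on the video and the source code for the small exercises. The notes reflect my own understanding; if you spot a mistake, corrections are welcome.

1. Multi-dimensional logistic regression model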

-

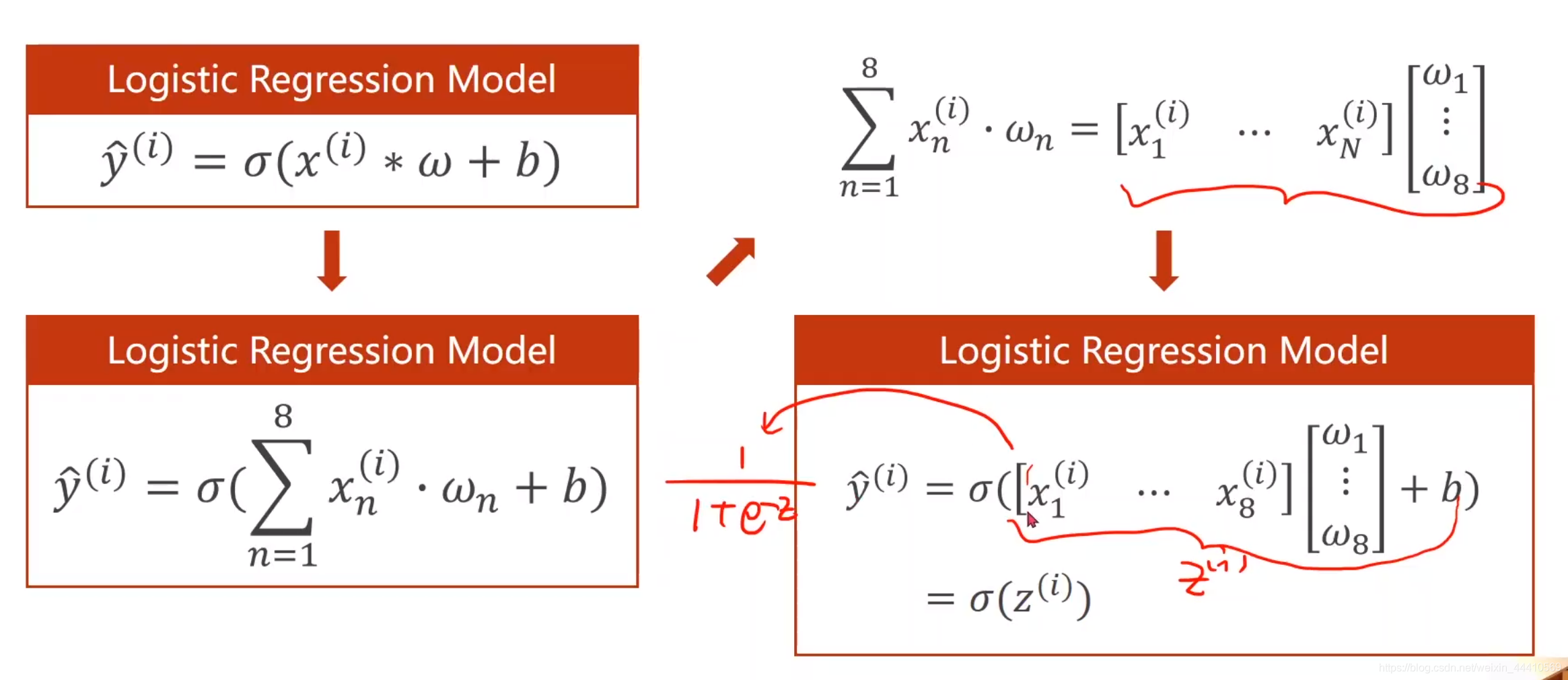

模型的变化

输入x有多个维度,此时的权重 w n w_n wn?也有多个维度,x与w相乘(内积)后得到的是一个标量Z,Z加上偏置值b,再代入logistic 函数 σ ( x ) = 1 1 + e ? x \sigma(x)=\frac{1}{1+e^{-x}} σ(x)=1+e?x1?

公式如图所示:
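The computation described above can be traced by hand for a single sample. The following is only a minimal sketch: the feature values, weights, and bias are made up for illustration (3 features instead of 8 to keep it short), and `logistic` is a helper name introduced here.

```python
import math

def logistic(z):
    """Logistic (sigmoid) function: 1 / (1 + e^(-z))."""
    return 1.0 / (1.0 + math.exp(-z))

# Made-up example values: one sample with 3 features
x = [1.0, 2.0, 3.0]
w = [0.5, -0.25, 0.0]
b = 0.0

# The inner product of x and w gives a scalar; adding the bias gives z
z = sum(xi * wi for xi, wi in zip(x, w)) + b

# The logistic function squashes z into a value between 0 and 1
y_hat = logistic(z)
print(z, y_hat)  # 0.0 0.5
```

With these values the inner product is exactly zero, so the prediction sits at 0.5, the midpoint of the logistic curve.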

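- Converting to a matrix computation

PyTorch operates on whole tensors. Instead of feeding one sample at a time in a for loop and computing each output separately, stack the samples into a matrix so that all outputs are computed in a single vectorized operation, as shown in the figure:

(The input x has 8 dimensions; the output z has 1 dimension.)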
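To make the loop-versus-matrix point concrete, here is a small sketch comparing the two forms with `torch.nn.Linear(8, 1)`. The batch size of 5 and the random inputs are assumptions chosen only for the demonstration.

```python
import torch

torch.manual_seed(0)
N = 5                            # made-up number of samples
x = torch.randn(N, 8)            # N samples, 8 features each
linear = torch.nn.Linear(8, 1)   # maps 8 input dimensions to 1 output

# Loop form: feed one sample at a time and collect the outputs
z_loop = torch.stack([linear(x[i]) for i in range(N)])

# Matrix form: pass the whole N x 8 matrix in one call
z_batch = linear(x)

print(z_batch.shape)             # torch.Size([5, 1])
print(torch.allclose(z_loop, z_batch, atol=1e-5))
```

Both forms should give the same values up to floating-point rounding; the matrix form simply avoids the Python-level loop.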

2. 神经网络的形成

-

激活函数

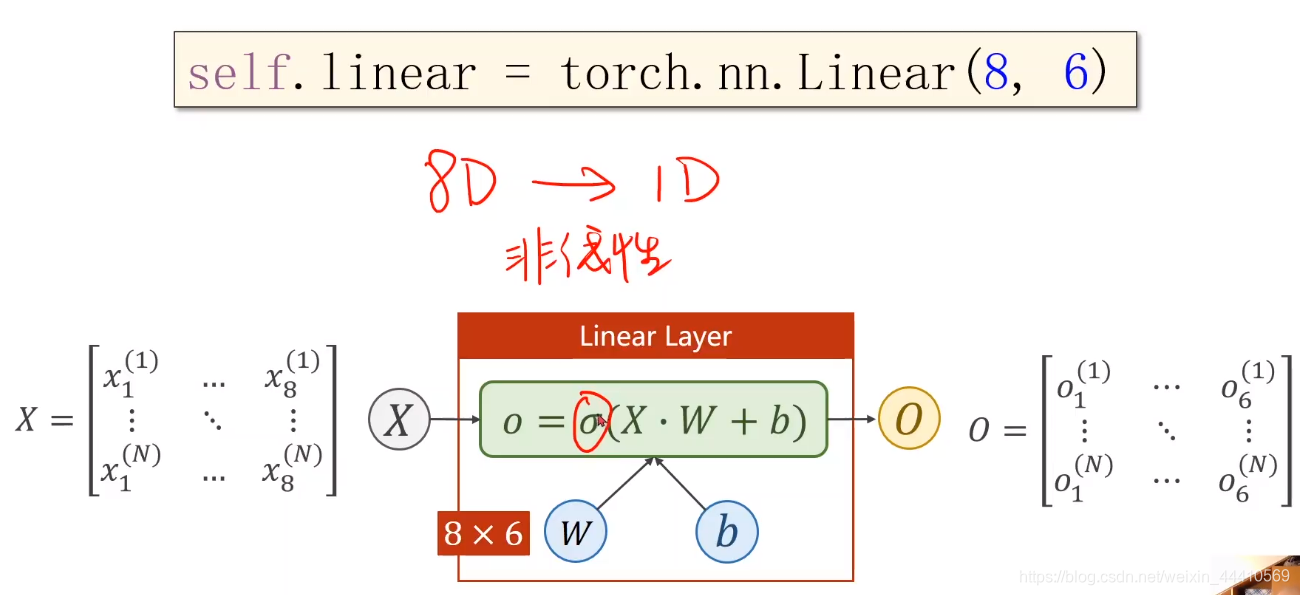

矩阵可以看成是一种空间变换函数——线性映射。

多个线性变换层组合起来模拟非线性变换,而神经网络就是在寻找一种最优的非线性空间变换函数。

如图所示,linear空间变换能做到空间维度变换,多个linear层组合成神经网络,但是每一层都是纯线性变换,所以在每一层线性变换上加上一个 σ \sigma σ函数(非线性因子),即激活函数,这样就将线性变换转换成非线性变换。
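The need for an activation function can be checked numerically. The sketch below, using made-up random inputs, shows that two `Linear` layers with nothing in between are equivalent to one linear map with weight `W2 @ W1` and bias `W2 @ b1 + b2`, while inserting a sigmoid breaks that equivalence. The variable names are introduced here for illustration.

```python
import torch

torch.manual_seed(0)
x = torch.randn(4, 8)            # made-up batch: 4 samples, 8 features

l1 = torch.nn.Linear(8, 6)
l2 = torch.nn.Linear(6, 4)

with torch.no_grad():
    # Two linear layers with no activation in between
    stacked = l2(l1(x))

    # Equivalent single linear map: W = W2 @ W1, b = W2 @ b1 + b2
    W = l2.weight @ l1.weight            # shape (4, 8)
    b = l2.weight @ l1.bias + l2.bias    # shape (4,)
    single = x @ W.T + b

    # With a sigmoid in between, the result is no longer that linear map
    nonlinear = l2(torch.sigmoid(l1(x)))

print(torch.allclose(stacked, single, atol=1e-5))
print(torch.allclose(nonlinear, single, atol=1e-5))
```

The first comparison should hold up to rounding error, and the second should not, which is the reason a nonlinearity is placed after every linear layer.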

-

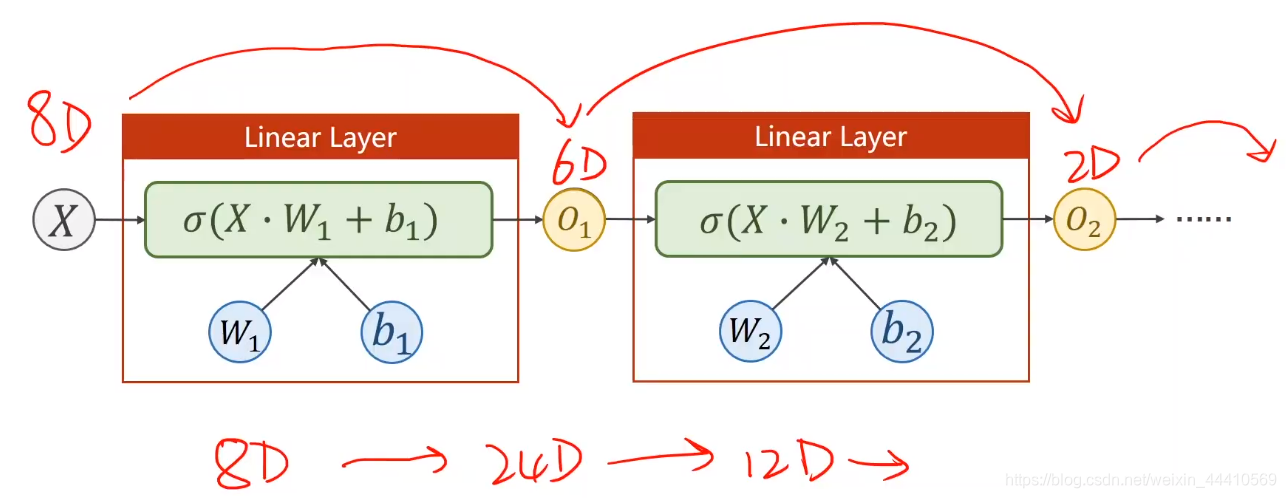

多层神经网络

将多个linear加上激活函数后,组合到一个网络中形成如图所示的多层网络:

注:一般来说,中间层数越多,网络对非线性映射的拟合程度越好,学习能力越强,但是学习能力太强可能会将输入的噪声也学习进去,所以网络学习能力太强也不好,学习应该具有泛化能力。

(插播一条心得:学习新知识,提高泛化能力——学会读文档+计算机系统基本架构理解)

- For example

A multi-layer network with layer shapes (8, 6) → (6, 4) → (4, 1)

This is the 3-layer network shown in the figure.
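The shape changes in this 3-layer network can be traced layer by layer. The sketch below builds it with `torch.nn.Sequential` (one possible way to write it, not necessarily how the lecture does) and records the output shape after each linear layer; the batch of 10 random samples is made up.

```python
import torch

net = torch.nn.Sequential(
    torch.nn.Linear(8, 6), torch.nn.Sigmoid(),
    torch.nn.Linear(6, 4), torch.nn.Sigmoid(),
    torch.nn.Linear(4, 1), torch.nn.Sigmoid(),
)

x = torch.randn(10, 8)   # made-up batch: 10 samples, 8 features
shapes = []
h = x
for layer in net:
    h = layer(h)
    # Record the shape only after linear layers; sigmoid keeps the shape
    if isinstance(layer, torch.nn.Linear):
        shapes.append(tuple(h.shape))

print(shapes)            # [(10, 6), (10, 4), (10, 1)]
```

Each layer's input size has to match the previous layer's output size, which is why the shapes chain as 8 → 6 → 4 → 1.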


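3. Code implementation

Problem: the input x has 8 dimensions (8 features), and the output is 0 or 1.

Scenario: x holds a patient's measurements, and y indicates whether the patient's condition worsens over the following year (1 means it worsens, 0 means it does not).

Source: multiple_dimension_linear.py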

import numpy as np
import torch

# Load the dataset; values are comma-separated
xy = np.loadtxt('diabetes.csv.gz', delimiter=',', dtype=np.float32)

# torch.from_numpy converts the NumPy slices into tensors.
# x: all rows, every column except the last. In xy[:, :-1] the part
# before the comma selects rows and the part after it selects columns.
x_data = torch.from_numpy(xy[:, :-1])
# y: the last column of every row. Indexing with the list [-1] keeps
# the result 2-D (an N x 1 matrix instead of a vector).
y_data = torch.from_numpy(xy[:, [-1]])
print(x_data.shape)


class Model(torch.nn.Module):
    def __init__(self):
        super(Model, self).__init__()
        self.linear1 = torch.nn.Linear(8, 6)
        self.linear2 = torch.nn.Linear(6, 4)
        self.linear3 = torch.nn.Linear(4, 1)
        self.sigmoid = torch.nn.Sigmoid()

    def forward(self, x):
        # This version uses sigmoid after every layer
        x = self.sigmoid(self.linear1(x))
        x = self.sigmoid(self.linear2(x))
        x = self.sigmoid(self.linear3(x))
        return x


model = Model()
# reduction='mean' replaces the deprecated size_average=True
criterion = torch.nn.BCELoss(reduction='mean')
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)

for epoch in range(100):
    # Forward
    y_pred = model(x_data)
    loss = criterion(y_pred, y_data)
    print(epoch, loss.item())

    # Backward
    optimizer.zero_grad()
    loss.backward()

    # Update
    optimizer.step()

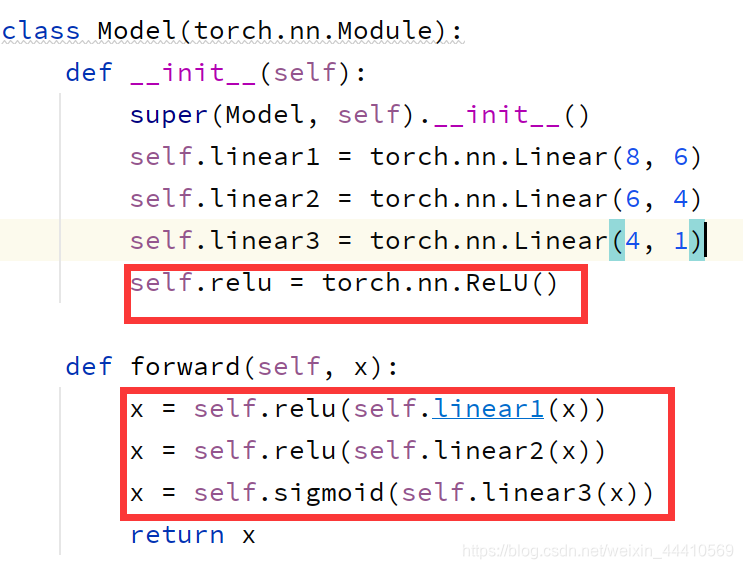
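The difference between `xy[:, -1]` and `xy[:, [-1]]` in the data-loading step is easy to miss. The sketch below uses a made-up 3 x 4 array in place of the real dataset to show the resulting shapes; `y_vec` is a name introduced here for comparison only.

```python
import numpy as np
import torch

# Made-up 3 x 4 array standing in for the loaded dataset (last column = label)
xy = np.array([[0.1, 0.2, 0.3, 1.0],
               [0.4, 0.5, 0.6, 0.0],
               [0.7, 0.8, 0.9, 1.0]], dtype=np.float32)

x_data = torch.from_numpy(xy[:, :-1])    # all columns except the last
y_vec = torch.from_numpy(xy[:, -1])      # plain -1: collapses to a 1-D vector
y_data = torch.from_numpy(xy[:, [-1]])   # list [-1]: stays 2-D (N x 1)

print(x_data.shape, y_vec.shape, y_data.shape)
# torch.Size([3, 3]) torch.Size([3]) torch.Size([3, 1])
```

Keeping y as an N x 1 matrix matters because the model's output has shape (N, 1), and `BCELoss` expects the prediction and the target to have the same shape.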

- Swapping in a different activation function

For example, to use the ReLU function, change the following two places:

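# In __init__: add the ReLU module
self.relu = torch.nn.ReLU()

# In forward: use ReLU for the hidden layers, keep sigmoid on the output
x = self.relu(self.linear1(x))
x = self.relu(self.linear2(x))
x = self.sigmoid(self.linear3(x))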

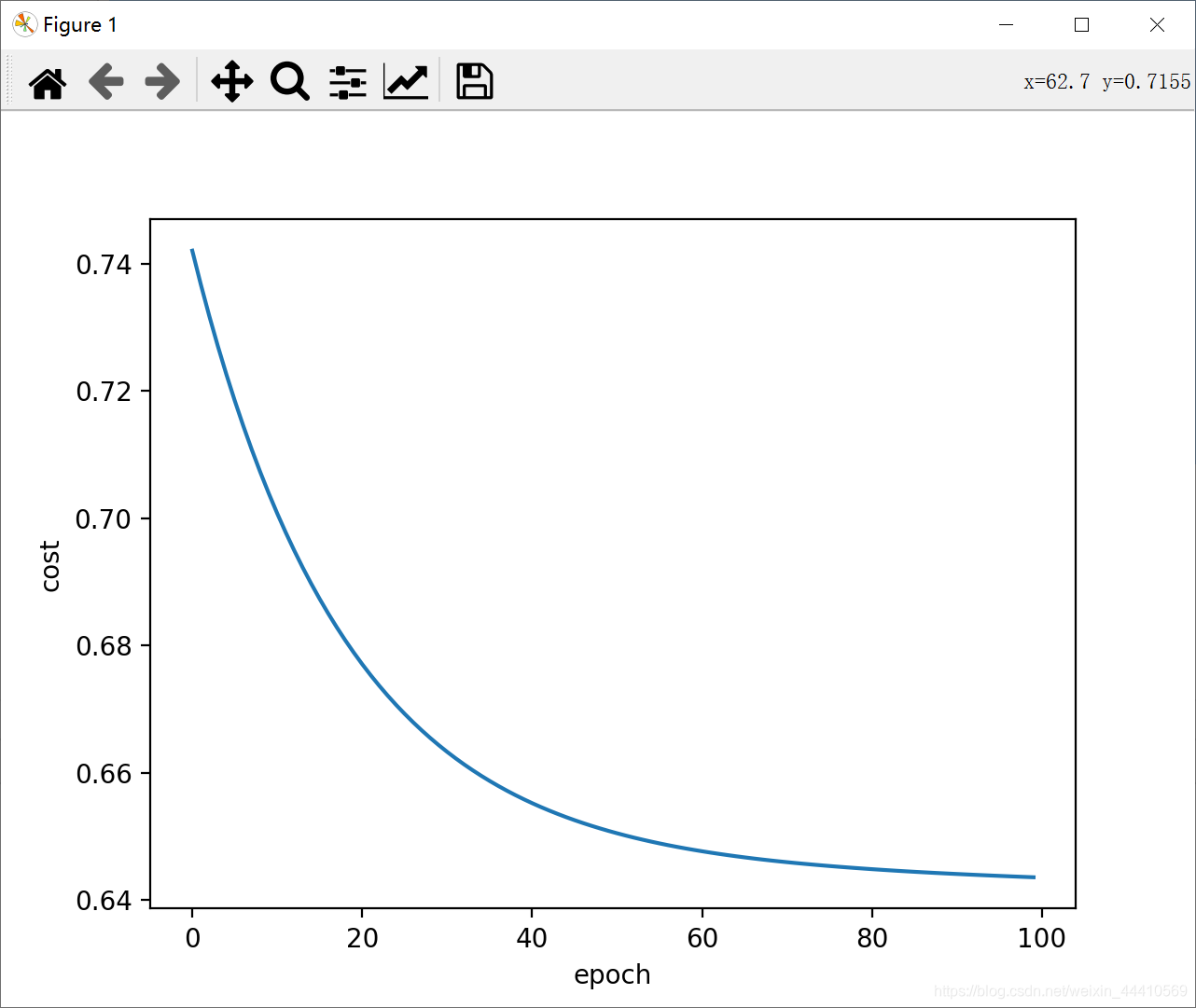

As shown in the figure above.
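If you expect to try several activation functions, one option is to pass the activation in as a constructor argument rather than editing the class each time. This is only a sketch of that idea: `FlexibleModel` and its `activation` parameter are hypothetical names, not part of the lecture code.

```python
import torch

class FlexibleModel(torch.nn.Module):
    """Hypothetical variant of Model; `activation` is an assumed parameter."""

    def __init__(self, activation=None):
        super().__init__()
        self.linear1 = torch.nn.Linear(8, 6)
        self.linear2 = torch.nn.Linear(6, 4)
        self.linear3 = torch.nn.Linear(4, 1)
        # Hidden-layer activation; defaults to ReLU when none is given
        self.activation = activation if activation is not None else torch.nn.ReLU()
        # The output layer keeps sigmoid so BCELoss receives values in [0, 1]
        self.sigmoid = torch.nn.Sigmoid()

    def forward(self, x):
        x = self.activation(self.linear1(x))
        x = self.activation(self.linear2(x))
        return self.sigmoid(self.linear3(x))

x = torch.randn(5, 8)                          # made-up batch
out_relu = FlexibleModel()(x)                  # ReLU hidden layers
out_tanh = FlexibleModel(torch.nn.Tanh())(x)   # Tanh hidden layers
print(out_relu.shape, out_tanh.shape)
```

Whatever is used in the hidden layers, the last layer stays a sigmoid, because `BCELoss` requires its input to lie between 0 and 1.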

Source: relu.py

import matplotlib.pyplot as plt
import numpy as np
import torch

# Load the dataset; values are comma-separated
xy = np.loadtxt('diabetes.csv.gz', delimiter=',', dtype=np.float32)

# torch.from_numpy converts the NumPy slices into tensors.
# x: all rows, every column except the last. In xy[:, :-1] the part
# before the comma selects rows and the part after it selects columns.
x_data = torch.from_numpy(xy[:, :-1])
# y: the last column of every row. Indexing with the list [-1] keeps
# the result 2-D (an N x 1 matrix instead of a vector).
y_data = torch.from_numpy(xy[:, [-1]])
print(x_data.shape)


class Model(torch.nn.Module):
    def __init__(self):
        super(Model, self).__init__()
        self.linear1 = torch.nn.Linear(8, 6)
        self.linear2 = torch.nn.Linear(6, 4)
        self.linear3 = torch.nn.Linear(4, 1)
        self.sigmoid = torch.nn.Sigmoid()
        self.relu = torch.nn.ReLU()

    def forward(self, x):
        # Sigmoid-only version, kept for comparison:
        # x = self.sigmoid(self.linear1(x))
        # x = self.sigmoid(self.linear2(x))
        # x = self.sigmoid(self.linear3(x))
        # ReLU on the hidden layers, sigmoid on the output layer
        x = self.relu(self.linear1(x))
        x = self.relu(self.linear2(x))
        x = self.sigmoid(self.linear3(x))
        return x


model = Model()
# reduction='mean' replaces the deprecated size_average=True
criterion = torch.nn.BCELoss(reduction='mean')
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)

loss_list = []
for epoch in range(100):
    # Forward
    y_pred = model(x_data)
    loss = criterion(y_pred, y_data)
    print(epoch, loss.item())
    loss_list.append(loss.item())

    # Backward
    optimizer.zero_grad()
    loss.backward()

    # Update
    optimizer.step()

# Plot the loss for each epoch
plt.plot(range(100), loss_list)
plt.xlabel('epoch')
plt.ylabel('cost')
plt.show()

- Result:

With either activation function, the loss has not really converged after 100 epochs.
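The loss value alone can be hard to interpret, so a rough complementary check is to threshold the predicted probabilities and compare them with the labels. The sketch below uses made-up tensors in place of the real `y_pred` and `y_data`, and the 0.5 cut-off is an assumption, not something fixed by the lecture.

```python
import torch

# Made-up predictions and labels, shaped (N, 1) like the tensors in the scripts
y_pred = torch.tensor([[0.9], [0.2], [0.6], [0.4]])
y_data = torch.tensor([[1.0], [0.0], [0.0], [0.0]])

# Threshold the probabilities at 0.5 (assumed cut-off) to get 0/1 labels
y_label = (y_pred >= 0.5).float()

# Fraction of samples whose predicted label matches the true label
accuracy = (y_label == y_data).float().mean().item()
print(accuracy)  # 0.75
```

In the training scripts the same three lines could be applied to the model output after the loop, ideally inside `torch.no_grad()` since no gradients are needed for evaluation.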