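1. Preface

This article reproduces the convolutional neural network ResNet with PaddlePaddle. The code here is laid out more plainly than PaddlePaddle's official built-in ResNet implementation, so it is a useful reference for understanding how a ResNet model is assembled.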

2. ResNet

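In 2013, Lei Jimmy Ba and Rich Caruana analyzed deep neural networks in Do Deep Nets Really Need to be Deep?; in their experiments, deep convolutional networks trained directly on the labels reached higher recognition accuracy than shallow ones.

If the layers added to a deeper network are turned into identity mappings while the weights of the original shallow layers are left unchanged, the deeper network achieves the same performance as the shallow one. In other words, the solution space of the shallow network is a subset of the deeper network's solution space, so the deeper network's solution space contains at least one solution that is no worse than the shallow network's.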
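The identity-layer construction can be made concrete with a toy example. The sketch below is illustrative only: `shallow_net`, `identity_layer` and `deep_net` are hypothetical names, and plain Python functions stand in for trained network layers.

```python
def shallow_net(x):
    """Stand-in for a trained shallow network with fixed weights."""
    hidden = [max(0.0, 2.0 * v - 1.0) for v in x]  # linear map followed by ReLU
    return sum(hidden)                             # single output unit


def identity_layer(x):
    """An added layer whose weights realise the identity mapping."""
    return list(x)


def deep_net(x, extra_layers=3):
    """Deeper network: added identity layers plus the unchanged shallow layers."""
    for _ in range(extra_layers):
        x = identity_layer(x)
    return shallow_net(x)


sample = [0.2, 0.9, 1.5]
# Both networks compute the same function, so the deeper one is at least as good.
print(shallow_net(sample) == deep_net(sample))
```

The deeper model therefore always has a setting of its weights that matches the shallow model; the difficulty described next is that an optimizer may not find it.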

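In 2015, Kaiming He and his co-authors observed that as more layers are stacked onto a plain network, a comparatively shallow network outperforms its deeper counterpart on both the training set and the test set, and does so at every stage of training. In other words, stacking more layers makes performance degrade instead of improve.

Because performance also drops on the training set, overfitting can be ruled out, and the introduction of Batch Normalization had already largely resolved vanishing and exploding gradients in plain networks. This "degradation" shows that models with similar structure but different depth are not equally hard to optimize: the difficulty does not grow linearly with depth, and the deeper the model, the harder it is to optimize.

There are two ways to approach the degradation problem. One is to change the solver, for example with better initialization or a better gradient descent algorithm. The other is to change the model structure so that the model is easier to optimize (changing the structure in effect changes the shape of the error surface).

ResNet won the 2015 ImageNet competition, bringing the top-5 classification error on ImageNet down to 3.57%, a result that even surpasses typical human accuracy on this task. ResNet tackles degradation from the model-structure side.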

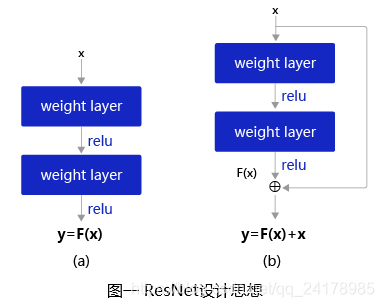

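Call a stack of several layers a Block. Let $F(x)$ denote the function a given Block can represent, and let $H(x)$ denote the underlying mapping the Block is expected to fit. Rather than having $F(x)$ learn $H(x)$ directly, as in Figure 1(a), ResNet has it learn the residual $H(x) - x$, as in Figure 1(b); that is, $F(x)$ is defined as $H(x) - x$. The forward path then becomes $F(x) + x$, and $F(x) + x$ is used to fit $H(x)$. Kaiming He and his co-authors argue that this makes the model easier to optimize, because driving $F(x) \to 0$ is easier than making $F(x)$ learn an identity mapping. During training, if passing through some convolutional layers does not improve performance (or even hurts it because of degradation), the network tends to update its weights so that $F(x)$ approaches 0. The output of those layers is then approximately the input $x$, which amounts to the computation "skipping over" them, and this cross-layer connection is how ResNet mitigates degradation.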
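A minimal numerical sketch of the $F(x) + x$ forward path is given below. It is not the network code used later in this article: `residual_branch` is a hypothetical one-weight stand-in for $F(x)$, chosen only to show that the block output approaches its input as the branch weights approach 0.

```python
def residual_branch(x, weight):
    """Toy residual function F(x): a single scalar weight applied element-wise."""
    return [weight * v for v in x]


def residual_block(x, weight):
    """Forward path of a residual block: F(x) + x."""
    fx = residual_branch(x, weight)
    return [f + v for f, v in zip(fx, x)]


x = [1.0, -2.0, 3.0]
# With the branch weight at exactly 0, F(x) = 0 and the block returns its input.
print(residual_block(x, 0.0))
# With a small weight, the output stays close to x.
print(residual_block(x, 1e-3))
```

Making the block an identity only requires pushing one set of weights toward zero, which is the intuition behind the claim that $F(x) \to 0$ is easier to learn than an explicit identity mapping.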

2.1 Residual Block

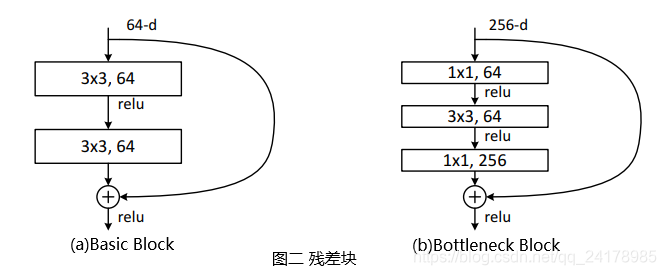

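The residual block is the basic unit of a residual network (ResNet): a ResNet is a chain of many similar residual blocks. As Figure 2 shows, a residual block has two paths, $F(x)$ and $x$. The $F(x)$ path fits the residual and is called the residual path; the $x$ path is an identity mapping and is called the shortcut. Through this cross-layer connection, the input $x$ lets data propagate forward, and gradients propagate backward, more quickly.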

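There are two kinds of residual block. The first, shown in Figure 2(b), contains a bottleneck structure (Bottleneck), whose main purpose is to reduce computational cost: the input first passes through a 1×1 convolution that reduces the number of channels, then through a 3×3 convolution that extracts features, and finally through a 1×1 convolution that restores the number of channels. The structure is narrow in the middle and wide at both ends, like a bottleneck, hence the name. The second, shown in Figure 2(a), has no bottleneck and is called the Basic Block; it consists of two 3×3 convolutions. Bottleneck Blocks are used in ResNet50, ResNet101 and ResNet152, while Basic Blocks are used in ResNet18 and ResNet34.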
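The saving from the bottleneck can be estimated by counting convolution weights. The sketch below is a back-of-the-envelope comparison under stated assumptions: both designs are evaluated at a width of 256 channels (a value chosen purely for illustration), biases are omitted, and `conv_params` is a hypothetical helper.

```python
def conv_params(in_channels, out_channels, kernel_size):
    """Weights in a bias-free 2-D convolution: k * k * C_in * C_out."""
    return kernel_size * kernel_size * in_channels * out_channels


channels = 256  # assumed width, for illustration only

# Two 3x3 convolutions applied directly at full width (Basic Block style).
basic = 2 * conv_params(channels, channels, 3)

# 1x1 reduce to a quarter of the width, 3x3 at the reduced width,
# then 1x1 restore to full width (Bottleneck style).
bottleneck = (conv_params(channels, channels // 4, 1)
              + conv_params(channels // 4, channels // 4, 3)
              + conv_params(channels // 4, channels, 1))

print(basic, bottleneck)  # 1179648 69632
```

At equal input and output width, the bottleneck needs roughly one seventeenth of the weights, which is why it is the block of choice for the deeper configurations.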

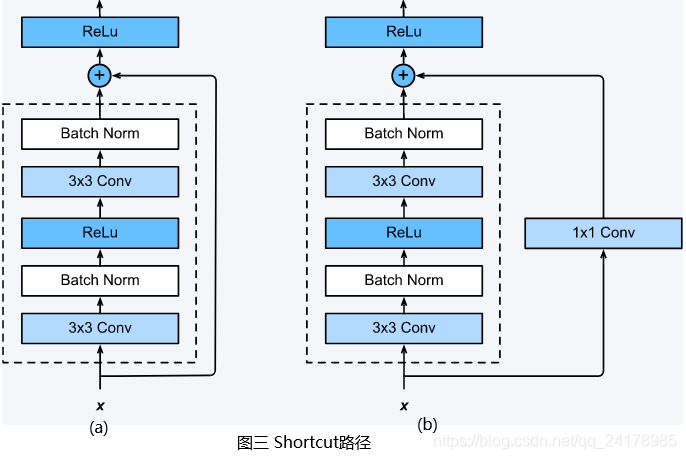

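The shortcut path also comes in two kinds. As shown in panel (a) of the figure below, when the output of the residual path has the same number of channels and the same feature-map size as the input $x$, the shortcut passes $x$ through unchanged. When the number of channels or the feature-map size differs, the shortcut applies a 1×1 convolution to $x$ to downsample it, so that the shortcut output matches the residual-path output in both channel count and feature-map size.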

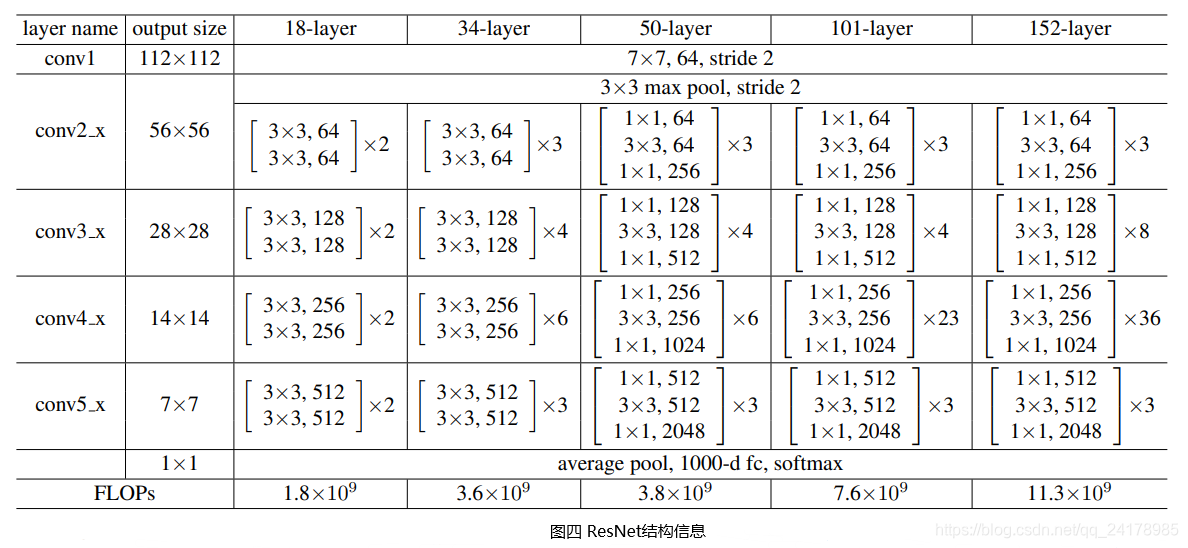
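The shape-matching requirement on the shortcut can be checked with the standard convolution output-size formula. The sketch below assumes no dilation; `conv_out_size` is a hypothetical helper, and the 56×56 input is just an example size.

```python
def conv_out_size(size, kernel_size, stride, padding):
    """Spatial output size of a convolution:
    floor((size + 2 * padding - kernel_size) / stride) + 1."""
    return (size + 2 * padding - kernel_size) // stride + 1


size = 56
# Residual path: a 3x3 convolution with stride 2 and padding 1 halves the map.
residual = conv_out_size(size, kernel_size=3, stride=2, padding=1)
# Shortcut: a 1x1 convolution with the same stride and no padding.
shortcut = conv_out_size(size, kernel_size=1, stride=2, padding=0)
print(residual, shortcut)  # 28 28
```

Because both paths use the same stride, their outputs have the same spatial size and can be added element-wise once the 1×1 convolution has also matched the channel count.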

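2.2 ResNet Architecture

A ResNet is built by chaining many residual blocks. Its cross-layer connections let the network skip some layers when adding depth no longer improves performance. This greatly alleviates the degradation of deep networks, makes networks with hundreds or even a thousand layers trainable, and substantially improves the performance of deep neural networks.

From the ResNet architecture summary above, every depth configuration shares the same "head" and "tail". The network opens with a 7×7 convolution that extracts texture detail from the input image, and ends with global average pooling (GAP, which reduces each feature map to 1×1) followed by a fully connected layer (which maps the features to the number of classes). What distinguishes the configurations is the type and number of residual blocks they contain: ResNet18 and ResNet34 use Basic Blocks, while ResNet50, ResNet101 and ResNet152 use Bottleneck Blocks.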

3. Reproducing the ResNet Model

To reproduce ResNet with PaddlePaddle, first define the BasicBlock and BottleneckBlock modules, both of which inherit from paddle.nn.Layer:

# -*- coding: utf-8 -*-
# @Time : 2021/8/19 19:11
# @Author : He Ruizhi
# @File : resnet.py
# @Software: PyCharm
import paddle


class BasicBlock(paddle.nn.Layer):
    """
    Residual block used by resnet18 and resnet34

    Args:
        input_channels (int): number of input channels of this block
        output_channels (int): number of output channels of this block
        stride (int): stride of the first convolution in the block; a stride of 2 halves the output feature-map size
    """
    def __init__(self, input_channels, output_channels, stride):
        super(BasicBlock, self).__init__()
        self.input_channels = input_channels
        self.output_channels = output_channels
        self.stride = stride

        self.conv_bn_block1 = paddle.nn.Sequential(
            paddle.nn.Conv2D(in_channels=input_channels, out_channels=output_channels, kernel_size=3,
                             stride=stride, padding=1, bias_attr=False),
            # BatchNorm2D normalizes each channel of a batch separately, so the channel count must be given
            paddle.nn.BatchNorm2D(output_channels),
            paddle.nn.ReLU()
        )

        self.conv_bn_block2 = paddle.nn.Sequential(
            paddle.nn.Conv2D(in_channels=output_channels, out_channels=output_channels, kernel_size=3,
                             stride=1, padding=1, bias_attr=False),
            paddle.nn.BatchNorm2D(output_channels)
        )

        # When stride is not 1, or the block's input and output channel counts differ,
        # the block input must be transformed before the addition
        if stride != 1 or input_channels != output_channels:
            self.down_sample_block = paddle.nn.Sequential(
                paddle.nn.Conv2D(in_channels=input_channels, out_channels=output_channels, kernel_size=1,
                                 stride=stride, bias_attr=False),
                paddle.nn.BatchNorm2D(output_channels)
            )

        self.relu_out = paddle.nn.ReLU()

    def forward(self, inputs):
        x = self.conv_bn_block1(inputs)
        x = self.conv_bn_block2(x)

        # If the shapes of inputs and x differ, adjust inputs
        if self.stride != 1 or self.input_channels != self.output_channels:
            inputs = self.down_sample_block(inputs)

        outputs = paddle.add(inputs, x)
        outputs = self.relu_out(outputs)
        return outputs


class BottleneckBlock(paddle.nn.Layer):
    """
    Residual block used by resnet50, resnet101 and resnet152

    Args:
        input_channels (int): number of input channels of this block
        output_channels (int): number of output channels of this block
        stride (int): stride of the 3x3 convolution in the block; a stride of 2 halves the output feature-map size
    """
    def __init__(self, input_channels, output_channels, stride):
        super(BottleneckBlock, self).__init__()
        self.input_channels = input_channels
        self.output_channels = output_channels
        self.stride = stride

        self.conv_bn_block1 = paddle.nn.Sequential(
            paddle.nn.Conv2D(in_channels=input_channels, out_channels=output_channels // 4, kernel_size=1,
                             stride=1, bias_attr=False),
            paddle.nn.BatchNorm2D(output_channels // 4),
            paddle.nn.ReLU()
        )

        self.conv_bn_block2 = paddle.nn.Sequential(
            paddle.nn.Conv2D(in_channels=output_channels // 4, out_channels=output_channels // 4, kernel_size=3,
                             stride=stride, padding=1, bias_attr=False),
            paddle.nn.BatchNorm2D(output_channels // 4),
            paddle.nn.ReLU()
        )

        self.conv_bn_block3 = paddle.nn.Sequential(
            paddle.nn.Conv2D(in_channels=output_channels // 4, out_channels=output_channels, kernel_size=1,
                             stride=1, bias_attr=False),
            paddle.nn.BatchNorm2D(output_channels)
        )

        # If the shape of the input differs from the shape of the output of the three conv_bn_blocks,
        # apply a 1x1 convolution to the input so that the two shapes match
        if stride != 1 or input_channels != output_channels:
            self.down_sample_block = paddle.nn.Sequential(
                paddle.nn.Conv2D(in_channels=input_channels, out_channels=output_channels, kernel_size=1,
                                 stride=stride, bias_attr=False),
                paddle.nn.BatchNorm2D(output_channels)
            )

        self.relu_out = paddle.nn.ReLU()

    def forward(self, inputs):
        x = self.conv_bn_block1(inputs)
        x = self.conv_bn_block2(x)
        x = self.conv_bn_block3(x)

        # If the shapes of inputs and x differ, adjust inputs
        if self.stride != 1 or self.input_channels != self.output_channels:
            inputs = self.down_sample_block(inputs)

        outputs = paddle.add(inputs, x)
        outputs = self.relu_out(outputs)
        return outputs

Instantiate a BasicBlock with input_channels=64, output_channels=128 and stride=2, and inspect its structure with paddle.summary:

basic_block = BasicBlock(64, 128, 2)
paddle.summary(basic_block, input_size=(None, 64, 224, 224))

The printed BasicBlock structure is:

---------------------------------------------------------------------------
Layer (type)     Input Shape             Output Shape          Param #
===========================================================================
Conv2D-1         [[1, 64, 224, 224]]     [1, 128, 112, 112]    73,728
BatchNorm2D-1    [[1, 128, 112, 112]]    [1, 128, 112, 112]    512
ReLU-1           [[1, 128, 112, 112]]    [1, 128, 112, 112]    0
Conv2D-2         [[1, 128, 112, 112]]    [1, 128, 112, 112]    147,456
BatchNorm2D-2    [[1, 128, 112, 112]]    [1, 128, 112, 112]    512
Conv2D-3         [[1, 64, 224, 224]]     [1, 128, 112, 112]    8,192
BatchNorm2D-3    [[1, 128, 112, 112]]    [1, 128, 112, 112]    512
ReLU-2           [[1, 128, 112, 112]]    [1, 128, 112, 112]    0
===========================================================================
Total params: 230,912
Trainable params: 229,376
Non-trainable params: 1,536
---------------------------------------------------------------------------
Input size (MB): 12.25
Forward/backward pass size (MB): 98.00
Params size (MB): 0.88
Estimated Total Size (MB): 111.13
---------------------------------------------------------------------------
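The parameter counts in the BasicBlock summary can be cross-checked by hand. The sketch below is plain arithmetic, not a PaddlePaddle call; `conv_weights` and `bn_entries` are hypothetical helpers, and the factor of 4 per channel for batch normalization (scale, shift, running mean, running variance) is an assumption that happens to agree with the 512 reported for 128 channels.

```python
def conv_weights(c_in, c_out, k):
    """Weights of a bias-free k x k convolution."""
    return k * k * c_in * c_out


def bn_entries(channels):
    """Assumed per-layer count for batch normalization: 4 values per channel."""
    return 4 * channels


conv_total = (conv_weights(64, 128, 3)     # Conv2D-1: first 3x3, stride 2
              + conv_weights(128, 128, 3)  # Conv2D-2: second 3x3
              + conv_weights(64, 128, 1))  # Conv2D-3: 1x1 on the shortcut
bn_total = 3 * bn_entries(128)             # BatchNorm2D-1 to BatchNorm2D-3

print(conv_total, bn_total, conv_total + bn_total)  # 229376 1536 230912
```

The three figures agree with the Trainable, Non-trainable and Total lines of the summary.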

Instantiate a BottleneckBlock with input_channels=64, output_channels=128 and stride=2, and inspect its structure with paddle.summary:

bottleneck_block = BottleneckBlock(64, 128, 2)
paddle.summary(bottleneck_block, input_size=(None, 64, 224, 224))

The printed BottleneckBlock structure is:

---------------------------------------------------------------------------
Layer (type)     Input Shape             Output Shape          Param #
===========================================================================
Conv2D-1         [[1, 64, 224, 224]]     [1, 32, 224, 224]     2,048
BatchNorm2D-1    [[1, 32, 224, 224]]     [1, 32, 224, 224]     128
ReLU-1           [[1, 32, 224, 224]]     [1, 32, 224, 224]     0
Conv2D-2         [[1, 32, 224, 224]]     [1, 32, 112, 112]     9,216
BatchNorm2D-2    [[1, 32, 112, 112]]     [1, 32, 112, 112]     128
ReLU-2           [[1, 32, 112, 112]]     [1, 32, 112, 112]     0
Conv2D-3         [[1, 32, 112, 112]]     [1, 128, 112, 112]    4,096
BatchNorm2D-3    [[1, 128, 112, 112]]    [1, 128, 112, 112]    512
Conv2D-4         [[1, 64, 224, 224]]     [1, 128, 112, 112]    8,192
BatchNorm2D-4    [[1, 128, 112, 112]]    [1, 128, 112, 112]    512
ReLU-3           [[1, 128, 112, 112]]    [1, 128, 112, 112]    0
===========================================================================
Total params: 24,832
Trainable params: 23,552
Non-trainable params: 1,280
---------------------------------------------------------------------------
Input size (MB): 12.25
Forward/backward pass size (MB): 107.19
Params size (MB): 0.09
Estimated Total Size (MB): 119.53
---------------------------------------------------------------------------

Next, define a ResNet class that inherits from paddle.nn.Layer. The submodules are created in __init__, and the forward pass of the network is implemented in forward:

class ResNet(paddle.nn.Layer):
    """
    Build a ResNet

    Args:
        layers (int): depth of the ResNet to build; supported values are [18, 34, 50, 101, 152]
        num_classes (int): number of output classes
    """
    def __init__(self, layers, num_classes=1000):
        super(ResNet, self).__init__()
        supported_layers = [18, 34, 50, 101, 152]
        assert layers in supported_layers, \
            'Supported layers are {}, but input layer is {}.'.format(supported_layers, layers)

        # Residual block type, number of residual blocks per stage, and output channels per stage
        layers_config = {
            18: {'block_type': BasicBlock, 'num_blocks': [2, 2, 2, 2], 'out_channels': [64, 128, 256, 512]},
            34: {'block_type': BasicBlock, 'num_blocks': [3, 4, 6, 3], 'out_channels': [64, 128, 256, 512]},
            50: {'block_type': BottleneckBlock, 'num_blocks': [3, 4, 6, 3], 'out_channels': [256, 512, 1024, 2048]},
            101: {'block_type': BottleneckBlock, 'num_blocks': [3, 4, 23, 3], 'out_channels': [256, 512, 1024, 2048]},
            152: {'block_type': BottleneckBlock, 'num_blocks': [3, 8, 36, 3], 'out_channels': [256, 512, 1024, 2048]}
        }

        # First module of ResNet: 7x7 convolution with stride 2 and 64 channels + BN + 3x3 max pooling with stride 2
        self.conv = paddle.nn.Conv2D(in_channels=3, out_channels=64, kernel_size=7, stride=2,
                                     padding=3, bias_attr=False)
        self.bn = paddle.nn.BatchNorm2D(64)
        self.relu = paddle.nn.ReLU()
        self.max_pool = paddle.nn.MaxPool2D(kernel_size=3, stride=2, padding=1)

        # Number of channels entering each residual block
        input_channels = 64
        block_list = []
        for i, block_num in enumerate(layers_config[layers]['num_blocks']):
            for order in range(block_num):
                # The first block of every stage except the first uses stride 2
                block_list.append(layers_config[layers]['block_type'](
                    input_channels,
                    layers_config[layers]['out_channels'][i],
                    2 if order == 0 and i != 0 else 1))
                input_channels = layers_config[layers]['out_channels'][i]

        # Pack all residual blocks into one sequential module
        self.residual_block = paddle.nn.Sequential(*block_list)

        # Global average pooling
        self.avg_pool = paddle.nn.AdaptiveAvgPool2D(output_size=1)
        self.flatten = paddle.nn.Flatten()

        # Output layer
        self.fc = paddle.nn.Linear(in_features=layers_config[layers]['out_channels'][-1], out_features=num_classes)

    def forward(self, x):
        x = self.conv(x)
        x = self.bn(x)
        x = self.relu(x)
        x = self.max_pool(x)
        x = self.residual_block(x)
        x = self.avg_pool(x)
        x = self.flatten(x)
        x = self.fc(x)
        return x
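The nested loop that assembles the residual blocks is easier to follow when its result is listed explicitly. The sketch below re-implements only that scheduling logic in plain Python, without PaddlePaddle; `block_schedule` is a hypothetical helper, and the 224×224 input size is an assumption matching the summaries in this article.

```python
def block_schedule(num_blocks, out_channels, stem_channels=64):
    """Return (in_channels, out_channels, stride) for every residual block, in order."""
    schedule = []
    in_ch = stem_channels
    for stage, (count, out_ch) in enumerate(zip(num_blocks, out_channels)):
        for order in range(count):
            # Only the first block of stages 2 to 4 downsamples.
            stride = 2 if order == 0 and stage != 0 else 1
            schedule.append((in_ch, out_ch, stride))
            in_ch = out_ch
    return schedule


resnet50 = block_schedule([3, 4, 6, 3], [256, 512, 1024, 2048])
print(len(resnet50))             # 16
print(resnet50[0], resnet50[3])  # (64, 256, 1) (256, 512, 2)

# Trace the feature-map size: the stem halves it twice (stride-2 conv, stride-2 pooling).
size = 224 // 2 // 2
for _, _, stride in resnet50:
    size //= stride
print(size)                      # 7
```

The first stage keeps stride 1 because the max pooling in the stem has already downsampled the input; each later stage halves the feature map once while its channel count doubles.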

Instantiate a ResNet50 model with layers=50 and inspect its structure with paddle.summary:

if __name__ == '__main__':
    # basic_block = BasicBlock(64, 128, 2)
    # paddle.summary(basic_block, input_size=(None, 64, 224, 224))
    # bottleneck_block = BottleneckBlock(64, 128, 2)
    # paddle.summary(bottleneck_block, input_size=(None, 64, 224, 224))

    resnet50 = ResNet(50)
    paddle.summary(resnet50, input_size=(None, 3, 224, 224))

The printed ResNet50 structure is:

-------------------------------------------------------------------------------
Layer (type)         Input Shape           Output Shape        Param #
===============================================================================
Conv2D-1             [[1, 3, 224, 224]]    [1, 64, 112, 112]   9,408
BatchNorm2D-1        [[1, 64, 112, 112]]   [1, 64, 112, 112]   256
ReLU-1               [[1, 64, 112, 112]]   [1, 64, 112, 112]   0
MaxPool2D-1          [[1, 64, 112, 112]]   [1, 64, 56, 56]     0
Conv2D-2             [[1, 64, 56, 56]]     [1, 64, 56, 56]     4,096
BatchNorm2D-2        [[1, 64, 56, 56]]     [1, 64, 56, 56]     256
ReLU-2               [[1, 64, 56, 56]]     [1, 64, 56, 56]     0
Conv2D-3             [[1, 64, 56, 56]]     [1, 64, 56, 56]     36,864
BatchNorm2D-3        [[1, 64, 56, 56]]     [1, 64, 56, 56]     256
ReLU-3               [[1, 64, 56, 56]]     [1, 64, 56, 56]     0
Conv2D-4             [[1, 64, 56, 56]]     [1, 256, 56, 56]    16,384
BatchNorm2D-4        [[1, 256, 56, 56]]    [1, 256, 56, 56]    1,024
Conv2D-5             [[1, 64, 56, 56]]     [1, 256, 56, 56]    16,384
BatchNorm2D-5        [[1, 256, 56, 56]]    [1, 256, 56, 56]    1,024
ReLU-4               [[1, 256, 56, 56]]    [1, 256, 56, 56]    0
BottleneckBlock-1    [[1, 64, 56, 56]]     [1, 256, 56, 56]    0
Conv2D-6             [[1, 256, 56, 56]]    [1, 64, 56, 56]     16,384
BatchNorm2D-6        [[1, 64, 56, 56]]     [1, 64, 56, 56]     256
ReLU-5               [[1, 64, 56, 56]]     [1, 64, 56, 56]     0
Conv2D-7             [[1, 64, 56, 56]]     [1, 64, 56, 56]     36,864
BatchNorm2D-7        [[1, 64, 56, 56]]     [1, 64, 56, 56]     256
ReLU-6               [[1, 64, 56, 56]]     [1, 64, 56, 56]     0
Conv2D-8             [[1, 64, 56, 56]]     [1, 256, 56, 56]    16,384
BatchNorm2D-8        [[1, 256, 56, 56]]    [1, 256, 56, 56]    1,024
ReLU-7               [[1, 256, 56, 56]]    [1, 256, 56, 56]    0
BottleneckBlock-2    [[1, 256, 56, 56]]    [1, 256, 56, 56]    0
Conv2D-9             [[1, 256, 56, 56]]    [1, 64, 56, 56]     16,384
BatchNorm2D-9        [[1, 64, 56, 56]]     [1, 64, 56, 56]     256
ReLU-8               [[1, 64, 56, 56]]     [1, 64, 56, 56]     0
Conv2D-10            [[1, 64, 56, 56]]     [1, 64, 56, 56]     36,864
BatchNorm2D-10       [[1, 64, 56, 56]]     [1, 64, 56, 56]     256
ReLU-9               [[1, 64, 56, 56]]     [1, 64, 56, 56]     0
Conv2D-11            [[1, 64, 56, 56]]     [1, 256, 56, 56]    16,384
BatchNorm2D-11       [[1, 256, 56, 56]]    [1, 256, 56, 56]    1,024
ReLU-10              [[1, 256, 56, 56]]    [1, 256, 56, 56]    0
BottleneckBlock-3    [[1, 256, 56, 56]]    [1, 256, 56, 56]    0
Conv2D-12            [[1, 256, 56, 56]]    [1, 128, 56, 56]    32,768
BatchNorm2D-12       [[1, 128, 56, 56]]    [1, 128, 56, 56]    512
ReLU-11              [[1, 128, 56, 56]]    [1, 128, 56, 56]    0
Conv2D-13            [[1, 128, 56, 56]]    [1, 128, 28, 28]    147,456
BatchNorm2D-13       [[1, 128, 28, 28]]    [1, 128, 28, 28]    512
ReLU-12              [[1, 128, 28, 28]]    [1, 128, 28, 28]    0
Conv2D-14            [[1, 128, 28, 28]]    [1, 512, 28, 28]    65,536
BatchNorm2D-14       [[1, 512, 28, 28]]    [1, 512, 28, 28]    2,048
Conv2D-15            [[1, 256, 56, 56]]    [1, 512, 28, 28]    131,072
BatchNorm2D-15       [[1, 512, 28, 28]]    [1, 512, 28, 28]    2,048
ReLU-13              [[1, 512, 28, 28]]    [1, 512, 28, 28]    0
BottleneckBlock-4    [[1, 256, 56, 56]]    [1, 512, 28, 28]    0
Conv2D-16            [[1, 512, 28, 28]]    [1, 128, 28, 28]    65,536
BatchNorm2D-16       [[1, 128, 28, 28]]    [1, 128, 28, 28]    512
ReLU-14              [[1, 128, 28, 28]]    [1, 128, 28, 28]    0
Conv2D-17            [[1, 128, 28, 28]]    [1, 128, 28, 28]    147,456
BatchNorm2D-17       [[1, 128, 28, 28]]    [1, 128, 28, 28]    512
ReLU-15              [[1, 128, 28, 28]]    [1, 128, 28, 28]    0
Conv2D-18            [[1, 128, 28, 28]]    [1, 512, 28, 28]    65,536
BatchNorm2D-18       [[1, 512, 28, 28]]    [1, 512, 28, 28]    2,048
ReLU-16              [[1, 512, 28, 28]]    [1, 512, 28, 28]    0
BottleneckBlock-5    [[1, 512, 28, 28]]    [1, 512, 28, 28]    0
Conv2D-19            [[1, 512, 28, 28]]    [1, 128, 28, 28]    65,536
BatchNorm2D-19       [[1, 128, 28, 28]]    [1, 128, 28, 28]    512
ReLU-17              [[1, 128, 28, 28]]    [1, 128, 28, 28]    0
Conv2D-20            [[1, 128, 28, 28]]    [1, 128, 28, 28]    147,456
BatchNorm2D-20       [[1, 128, 28, 28]]    [1, 128, 28, 28]    512
ReLU-18              [[1, 128, 28, 28]]    [1, 128, 28, 28]    0
Conv2D-21            [[1, 128, 28, 28]]    [1, 512, 28, 28]    65,536
BatchNorm2D-21       [[1, 512, 28, 28]]    [1, 512, 28, 28]    2,048
ReLU-19              [[1, 512, 28, 28]]    [1, 512, 28, 28]    0
BottleneckBlock-6    [[1, 512, 28, 28]]    [1, 512, 28, 28]    0
Conv2D-22            [[1, 512, 28, 28]]    [1, 128, 28, 28]    65,536
BatchNorm2D-22       [[1, 128, 28, 28]]    [1, 128, 28, 28]    512
ReLU-20              [[1, 128, 28, 28]]    [1, 128, 28, 28]    0
Conv2D-23            [[1, 128, 28, 28]]    [1, 128, 28, 28]    147,456
BatchNorm2D-23       [[1, 128, 28, 28]]    [1, 128, 28, 28]    512
ReLU-21              [[1, 128, 28, 28]]    [1, 128, 28, 28]    0
Conv2D-24            [[1, 128, 28, 28]]    [1, 512, 28, 28]    65,536
BatchNorm2D-24       [[1, 512, 28, 28]]    [1, 512, 28, 28]    2,048
ReLU-22              [[1, 512, 28, 28]]    [1, 512, 28, 28]    0
BottleneckBlock-7    [[1, 512, 28, 28]]    [1, 512, 28, 28]    0
Conv2D-25            [[1, 512, 28, 28]]    [1, 256, 28, 28]    131,072
BatchNorm2D-25       [[1, 256, 28, 28]]    [1, 256, 28, 28]    1,024
ReLU-23              [[1, 256, 28, 28]]    [1, 256, 28, 28]    0
Conv2D-26            [[1, 256, 28, 28]]    [1, 256, 14, 14]    589,824
BatchNorm2D-26       [[1, 256, 14, 14]]    [1, 256, 14, 14]    1,024
ReLU-24              [[1, 256, 14, 14]]    [1, 256, 14, 14]    0
Conv2D-27            [[1, 256, 14, 14]]    [1, 1024, 14, 14]   262,144
BatchNorm2D-27       [[1, 1024, 14, 14]]   [1, 1024, 14, 14]   4,096
Conv2D-28            [[1, 512, 28, 28]]    [1, 1024, 14, 14]   524,288
BatchNorm2D-28       [[1, 1024, 14, 14]]   [1, 1024, 14, 14]   4,096
ReLU-25              [[1, 1024, 14, 14]]   [1, 1024, 14, 14]   0
BottleneckBlock-8    [[1, 512, 28, 28]]    [1, 1024, 14, 14]   0
Conv2D-29            [[1, 1024, 14, 14]]   [1, 256, 14, 14]    262,144
BatchNorm2D-29       [[1, 256, 14, 14]]    [1, 256, 14, 14]    1,024
ReLU-26              [[1, 256, 14, 14]]    [1, 256, 14, 14]    0
Conv2D-30            [[1, 256, 14, 14]]    [1, 256, 14, 14]    589,824
BatchNorm2D-30       [[1, 256, 14, 14]]    [1, 256, 14, 14]    1,024
ReLU-27              [[1, 256, 14, 14]]    [1, 256, 14, 14]    0
Conv2D-31            [[1, 256, 14, 14]]    [1, 1024, 14, 14]   262,144
BatchNorm2D-31       [[1, 1024, 14, 14]]   [1, 1024, 14, 14]   4,096
ReLU-28              [[1, 1024, 14, 14]]   [1, 1024, 14, 14]   0
BottleneckBlock-9    [[1, 1024, 14, 14]]   [1, 1024, 14, 14]   0
Conv2D-32            [[1, 1024, 14, 14]]   [1, 256, 14, 14]    262,144
BatchNorm2D-32       [[1, 256, 14, 14]]    [1, 256, 14, 14]    1,024
ReLU-29              [[1, 256, 14, 14]]    [1, 256, 14, 14]    0
Conv2D-33            [[1, 256, 14, 14]]    [1, 256, 14, 14]    589,824
BatchNorm2D-33       [[1, 256, 14, 14]]    [1, 256, 14, 14]    1,024
ReLU-30              [[1, 256, 14, 14]]    [1, 256, 14, 14]    0
Conv2D-34            [[1, 256, 14, 14]]    [1, 1024, 14, 14]   262,144
BatchNorm2D-34       [[1, 1024, 14, 14]]   [1, 1024, 14, 14]   4,096
ReLU-31              [[1, 1024, 14, 14]]   [1, 1024, 14, 14]   0
BottleneckBlock-10   [[1, 1024, 14, 14]]   [1, 1024, 14, 14]   0
Conv2D-35            [[1, 1024, 14, 14]]   [1, 256, 14, 14]    262,144
BatchNorm2D-35       [[1, 256, 14, 14]]    [1, 256, 14, 14]    1,024
ReLU-32              [[1, 256, 14, 14]]    [1, 256, 14, 14]    0
Conv2D-36            [[1, 256, 14, 14]]    [1, 256, 14, 14]    589,824
BatchNorm2D-36       [[1, 256, 14, 14]]    [1, 256, 14, 14]    1,024
ReLU-33              [[1, 256, 14, 14]]    [1, 256, 14, 14]    0
Conv2D-37            [[1, 256, 14, 14]]    [1, 1024, 14, 14]   262,144
BatchNorm2D-37       [[1, 1024, 14, 14]]   [1, 1024, 14, 14]   4,096
ReLU-34              [[1, 1024, 14, 14]]   [1, 1024, 14, 14]   0
BottleneckBlock-11   [[1, 1024, 14, 14]]   [1, 1024, 14, 14]   0
Conv2D-38            [[1, 1024, 14, 14]]   [1, 256, 14, 14]    262,144
BatchNorm2D-38       [[1, 256, 14, 14]]    [1, 256, 14, 14]    1,024
ReLU-35              [[1, 256, 14, 14]]    [1, 256, 14, 14]    0
Conv2D-39            [[1, 256, 14, 14]]    [1, 256, 14, 14]    589,824
BatchNorm2D-39       [[1, 256, 14, 14]]    [1, 256, 14, 14]    1,024
ReLU-36              [[1, 256, 14, 14]]    [1, 256, 14, 14]    0
Conv2D-40            [[1, 256, 14, 14]]    [1, 1024, 14, 14]   262,144
BatchNorm2D-40       [[1, 1024, 14, 14]]   [1, 1024, 14, 14]   4,096
ReLU-37              [[1, 1024, 14, 14]]   [1, 1024, 14, 14]   0
BottleneckBlock-12   [[1, 1024, 14, 14]]   [1, 1024, 14, 14]   0
Conv2D-41            [[1, 1024, 14, 14]]   [1, 256, 14, 14]    262,144
BatchNorm2D-41       [[1, 256, 14, 14]]    [1, 256, 14, 14]    1,024
ReLU-38              [[1, 256, 14, 14]]    [1, 256, 14, 14]    0
Conv2D-42            [[1, 256, 14, 14]]    [1, 256, 14, 14]    589,824
BatchNorm2D-42       [[1, 256, 14, 14]]    [1, 256, 14, 14]    1,024
ReLU-39              [[1, 256, 14, 14]]    [1, 256, 14, 14]    0
Conv2D-43            [[1, 256, 14, 14]]    [1, 1024, 14, 14]   262,144
BatchNorm2D-43       [[1, 1024, 14, 14]]   [1, 1024, 14, 14]   4,096
ReLU-40              [[1, 1024, 14, 14]]   [1, 1024, 14, 14]   0
BottleneckBlock-13   [[1, 1024, 14, 14]]   [1, 1024, 14, 14]   0
Conv2D-44            [[1, 1024, 14, 14]]   [1, 512, 14, 14]    524,288
BatchNorm2D-44       [[1, 512, 14, 14]]    [1, 512, 14, 14]    2,048
ReLU-41              [[1, 512, 14, 14]]    [1, 512, 14, 14]    0
Conv2D-45            [[1, 512, 14, 14]]    [1, 512, 7, 7]      2,359,296
BatchNorm2D-45       [[1, 512, 7, 7]]      [1, 512, 7, 7]      2,048
ReLU-42              [[1, 512, 7, 7]]      [1, 512, 7, 7]      0
Conv2D-46            [[1, 512, 7, 7]]      [1, 2048, 7, 7]     1,048,576
BatchNorm2D-46       [[1, 2048, 7, 7]]     [1, 2048, 7, 7]     8,192
Conv2D-47            [[1, 1024, 14, 14]]   [1, 2048, 7, 7]     2,097,152
BatchNorm2D-47       [[1, 2048, 7, 7]]     [1, 2048, 7, 7]     8,192
ReLU-43              [[1, 2048, 7, 7]]     [1, 2048, 7, 7]     0
BottleneckBlock-14   [[1, 1024, 14, 14]]   [1, 2048, 7, 7]     0
Conv2D-48            [[1, 2048, 7, 7]]     [1, 512, 7, 7]      1,048,576
BatchNorm2D-48       [[1, 512, 7, 7]]      [1, 512, 7, 7]      2,048
ReLU-44              [[1, 512, 7, 7]]      [1, 512, 7, 7]      0
Conv2D-49            [[1, 512, 7, 7]]      [1, 512, 7, 7]      2,359,296
BatchNorm2D-49       [[1, 512, 7, 7]]      [1, 512, 7, 7]      2,048
ReLU-45              [[1, 512, 7, 7]]      [1, 512, 7, 7]      0
Conv2D-50            [[1, 512, 7, 7]]      [1, 2048, 7, 7]     1,048,576
BatchNorm2D-50       [[1, 2048, 7, 7]]     [1, 2048, 7, 7]     8,192
ReLU-46              [[1, 2048, 7, 7]]     [1, 2048, 7, 7]     0
BottleneckBlock-15   [[1, 2048, 7, 7]]     [1, 2048, 7, 7]     0
Conv2D-51            [[1, 2048, 7, 7]]     [1, 512, 7, 7]      1,048,576
BatchNorm2D-51       [[1, 512, 7, 7]]      [1, 512, 7, 7]      2,048
ReLU-47              [[1, 512, 7, 7]]      [1, 512, 7, 7]      0
Conv2D-52            [[1, 512, 7, 7]]      [1, 512, 7, 7]      2,359,296
BatchNorm2D-52       [[1, 512, 7, 7]]      [1, 512, 7, 7]      2,048
ReLU-48              [[1, 512, 7, 7]]      [1, 512, 7, 7]      0
Conv2D-53            [[1, 512, 7, 7]]      [1, 2048, 7, 7]     1,048,576
BatchNorm2D-53       [[1, 2048, 7, 7]]     [1, 2048, 7, 7]     8,192
ReLU-49              [[1, 2048, 7, 7]]     [1, 2048, 7, 7]     0
BottleneckBlock-16   [[1, 2048, 7, 7]]     [1, 2048, 7, 7]     0
AdaptiveAvgPool2D-1  [[1, 2048, 7, 7]]     [1, 2048, 1, 1]     0
Flatten-1            [[1, 2048, 1, 1]]     [1, 2048]           0
Linear-1             [[1, 2048]]           [1, 1000]           2,049,000
===============================================================================
Total params: 25,610,152
Trainable params: 25,503,912
Non-trainable params: 106,240
-------------------------------------------------------------------------------
Input size (MB): 0.57
Forward/backward pass size (MB): 286.57
Params size (MB): 97.69
Estimated Total Size (MB): 384.84
-------------------------------------------------------------------------------

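ResNet50 contains four groups of residual blocks, labelled A, B, C and D, with 3, 4, 6 and 3 blocks respectively. Each residual block has three convolutional layers, so the residual blocks contribute (3+4+6+3)×3 = 48 layers. Adding the 7×7 convolution at the start and the fully connected layer at the end gives 50 layers in total, which is why the network is called ResNet50.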
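The same counting rule reproduces the name of every configuration supported by the ResNet class. The sketch below is plain arithmetic; `CONFIGS` and `weighted_layers` are hypothetical names, the block counts are copied from `layers_config`, and the 1×1 convolutions on the shortcut paths are not counted, in line with the calculation above.

```python
# Depth -> (residual blocks per stage, convolutions on the residual path of one block)
CONFIGS = {
    18: ([2, 2, 2, 2], 2),    # Basic Block: two 3x3 convolutions
    34: ([3, 4, 6, 3], 2),
    50: ([3, 4, 6, 3], 3),    # Bottleneck Block: 1x1, 3x3, 1x1
    101: ([3, 4, 23, 3], 3),
    152: ([3, 8, 36, 3], 3),
}


def weighted_layers(num_blocks, convs_per_block):
    """Convolutions inside the residual blocks, plus the 7x7 stem convolution
    and the final fully connected layer."""
    return sum(num_blocks) * convs_per_block + 2


for depth, (num_blocks, convs) in CONFIGS.items():
    print(depth, weighted_layers(num_blocks, convs))
```

Each printed pair shows the nominal depth next to the computed layer count, and the two agree for all five configurations.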

4. References

- https://arxiv.org/pdf/1312.6184v1.pdf

- https://arxiv.org/pdf/1512.03385.pdf

- https://aistudio.baidu.com/aistudio/projectdetail/2299651

- https://aistudio.baidu.com/aistudio/projectdetail/2270457

- https://www.cnblogs.com/shine-lee/p/12363488.html

- https://github.com/PaddlePaddle/Paddle/blob/release/2.1/python/paddle/vision/models/resnet.py