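Preface

I was lucky enough to join the HIT-SCIR NLP lab at Harbin Institute of Technology, where I am gradually learning NLP. This paper is one of the assigned readings in the lab's reading program. This post is not a translation. It only summarizes the key points of each section. I hope that writing blog posts like this will consolidate what I learn and let me share it with others.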

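Overview

The paper trains convolutional neural networks (CNNs) on top of word vectors pre-trained with word2vec for sentence-level classification tasks. A simple CNN with static (frozen) word vectors already achieves strong results on several benchmarks, and letting the model fine-tune the word vectors for the task improves performance further.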

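Introduction

The introduction notes that deep learning models have achieved remarkable results in computer vision and speech recognition. Much of the deep learning work in NLP relies on word vector representations learned by neural language models.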

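Word vectors project words from sparse one-hot vectors into a low-dimensional dense representation. In this representation, semantically similar words lie close to each other.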
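A minimal NumPy sketch of the idea. The 4-word vocabulary and the random 3-dimensional embedding matrix `E` are made up for illustration: multiplying a one-hot vector by `E` is the same as picking one row of `E`, which is why embedding layers are implemented as table lookups. Cosine similarity is one common way to measure how close two word vectors are; since `E` here is random, the similarity values carry no meaning, whereas in trained vectors such as word2vec similar words would score high.

```python
import numpy as np

vocab = {"good": 0, "great": 1, "bad": 2, "movie": 3}  # hypothetical toy vocabulary
V, k = len(vocab), 3                                   # vocabulary size, embedding dimension
rng = np.random.default_rng(0)
E = rng.normal(size=(V, k))                            # embedding matrix, one row per word


def one_hot(index, size):
    """Sparse representation: all zeros except a single 1."""
    v = np.zeros(size)
    v[index] = 1.0
    return v


def cosine(a, b):
    """Cosine similarity between two dense vectors."""
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))


# Sparse V-dimensional one-hot vector -> dense k-dimensional word vector.
x_sparse = one_hot(vocab["great"], V)
x_dense = x_sparse @ E
assert np.allclose(x_dense, E[vocab["great"]])         # identical to a row lookup
print(x_dense.shape)                                   # (3,)
print(cosine(E[vocab["good"]], E[vocab["great"]]))
```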

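CNNs apply convolving filters to extract local features. They were first used in computer vision and have gradually produced good results in NLP as well.

In the present work, the authors train a simple CNN with a single convolutional layer on top of the word vectors. At first the word vectors are kept static and only the other parameters are learned. Despite its simplicity, the model achieves good results.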

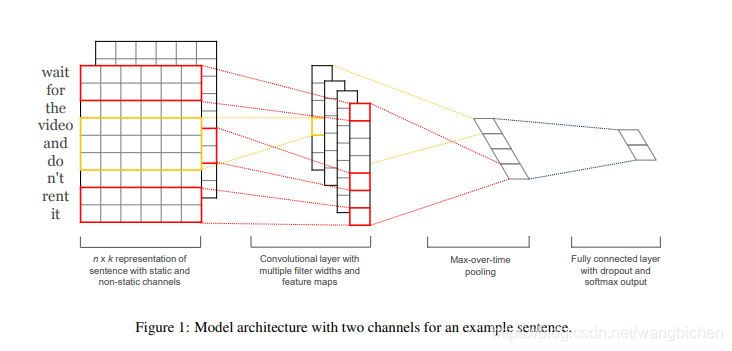

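Model

Let $\mathbf{x}_i \in \mathbb{R}^k$ be the $k$-dimensional word vector of the $i$-th word in a sentence. A sentence of length $n$ is represented as

$$\mathbf{x}_{1:n} = \mathbf{x}_1 \oplus \mathbf{x}_2 \oplus \dots \oplus \mathbf{x}_n,$$

where $\oplus$ denotes vector concatenation. More generally, $\mathbf{x}_{i:i+j}$ denotes the concatenation of $\mathbf{x}_i, \mathbf{x}_{i+1}, \dots, \mathbf{x}_{i+j}$.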

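A feature $c_i$ is generated from a window of $h$ words by a filter (convolution kernel) $\mathbf{w} \in \mathbb{R}^{hk}$:

$$c_i = f(\mathbf{w} \cdot \mathbf{x}_{i:i+h-1} + b),$$

where $h$ is the window size, $b \in \mathbb{R}$ is a bias term and $f$ is a non-linear function (the paper's experiments use ReLU). In other words, the vectors $\mathbf{x}_i, \dots, \mathbf{x}_{i+h-1}$ are concatenated end to end and their dot product with $\mathbf{w}$ gives a single number. This is equivalent to applying a convolution kernel covering $h$ words and all $k$ embedding dimensions at position $i$.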
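To make the equivalence concrete, here is a small NumPy check with arbitrary sizes ($n=6$, $k=4$, $h=3$), random values, and ReLU assumed for $f$: the dot product of $\mathbf{w}$ with the concatenated window equals an element-wise multiply-and-sum of an $h \times k$ kernel over the $h$ rows of the sentence matrix, which is exactly one step of a convolution.

```python
import numpy as np

rng = np.random.default_rng(0)
n, k, h = 6, 4, 3                     # sentence length, embedding size, window size
X = rng.normal(size=(n, k))           # sentence matrix: row i is the word vector x_i
w = rng.normal(size=h * k)            # filter w in R^{hk}
b = 0.1                               # bias


def relu(t):
    """f, assumed to be ReLU in this sketch."""
    return np.maximum(t, 0.0)


i = 2                                 # 0-based start of the window x_{i:i+h-1}
window = np.concatenate(X[i:i + h])   # x_i ⊕ ... ⊕ x_{i+h-1}, shape (h*k,)
c_concat = relu(w @ window + b)

# The same feature seen as a convolution: reshape w into an h x k kernel
# and multiply it element-wise with the h rows covered by the window.
kernel = w.reshape(h, k)
c_conv = relu(np.sum(kernel * X[i:i + h]) + b)

print(np.isclose(c_concat, c_conv))   # True
```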

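Applying the filter to every possible window $\{\mathbf{x}_{1:h}, \mathbf{x}_{2:h+1}, \dots, \mathbf{x}_{n-h+1:n}\}$ and collecting the results gives a feature map

$$\mathbf{c} = [c_1, c_2, \dots, c_{n-h+1}], \qquad \mathbf{c} \in \mathbb{R}^{n-h+1}.$$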
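A sketch of the full feature map for one filter, again in NumPy with ReLU assumed for $f$ (`feature_map` is a hypothetical helper name). With $n$ words and window size $h$ there are $n-h+1$ windows, so the output has $n-h+1$ entries.

```python
import numpy as np


def feature_map(X, w, b, h):
    """Apply one filter w (length h*k) to every window of h consecutive rows of X (n x k)."""
    n = X.shape[0]
    return np.array([
        max(0.0, float(w @ np.concatenate(X[i:i + h])) + b)  # c_i = ReLU(w · x_{i:i+h-1} + b)
        for i in range(n - h + 1)
    ])


rng = np.random.default_rng(1)
n, k, h = 7, 5, 3
X = rng.normal(size=(n, k))
w = rng.normal(size=h * k)
c = feature_map(X, w, b=0.0, h=h)
print(c.shape)   # (5,), i.e. n - h + 1
```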

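Max-over-time pooling then takes $\hat{c} = \max\{\mathbf{c}\}$ as the feature for this filter, in order to capture the most important (highest-valued) feature. Because each filter yields exactly one value, this pooling also handles sentences of different lengths naturally.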
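Max-over-time pooling takes only a few lines of NumPy. The two feature maps below are made-up numbers standing in for sentences of different lengths; whatever the length, each filter contributes exactly one value to the next layer.

```python
import numpy as np


def max_over_time(c):
    """ĉ = max{c}: keep only the largest value of a feature map."""
    return float(np.max(c))


short_map = np.array([0.2, 1.3, 0.0])                 # from a short sentence
long_map = np.array([0.5, 0.1, 2.4, 0.9, 0.0, 1.1])   # from a longer sentence
print(max_over_time(short_map), max_over_time(long_map))   # 1.3 2.4
```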

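The process above uses a single filter. The model uses multiple filters (with varying window sizes) to obtain multiple feature maps. The pooled features from all filters form the penultimate layer, which is passed to a fully connected softmax layer that outputs a probability distribution over the labels.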
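Putting the pieces together for a whole bank of filters. This sketch assumes the paper's reported setting of window sizes 3, 4 and 5 with 100 filters each, plus an untrained softmax layer; all weights, the sentence matrix and the 2-class output are random placeholders, and `pooled_features` is a hypothetical helper. Each filter contributes one pooled value, so the penultimate vector $\mathbf{z}$ has 300 entries regardless of sentence length.

```python
import numpy as np

rng = np.random.default_rng(0)
n, k = 20, 50                                    # sentence length, embedding size (placeholders)
window_sizes, filters_per_size, n_classes = (3, 4, 5), 100, 2
X = rng.normal(size=(n, k))                      # sentence matrix


def pooled_features(X, W, b, h):
    """W: (m, h*k) bank of m filters of width h -> m max-pooled ReLU features."""
    windows = np.stack([np.concatenate(X[i:i + h]) for i in range(X.shape[0] - h + 1)])
    C = np.maximum(windows @ W.T + b, 0.0)       # (n-h+1, m): one feature map per column
    return C.max(axis=0)                         # max over time -> (m,)


def softmax(v):
    e = np.exp(v - v.max())                      # subtract the max for numerical stability
    return e / e.sum()


# Penultimate layer: concatenate the pooled features of all filter banks.
z = np.concatenate([
    pooled_features(X, 0.1 * rng.normal(size=(filters_per_size, h * k)),
                    np.zeros(filters_per_size), h)
    for h in window_sizes
])
W_out = 0.1 * rng.normal(size=(n_classes, z.size))
b_out = np.zeros(n_classes)
probs = softmax(W_out @ z + b_out)               # class probabilities
print(z.shape)                                   # (300,)
```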

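The paper also experiments with a multichannel input: there are two channels of word vectors, one kept static and one fine-tuned by backpropagation. Each filter is applied to both channels, and the two results are added to compute $c_i$.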
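A small NumPy sketch of how one filter sees both channels, with ReLU assumed for $f$ and random placeholder vectors. Both channels start from the same vectors; during training only the non-static copy would receive gradient updates, which is simulated here by adding a little noise (an assumption purely for illustration). The same filter is applied to each channel and the two results are summed before the bias and non-linearity.

```python
import numpy as np

rng = np.random.default_rng(0)
n, k, h = 8, 4, 3
X_static = rng.normal(size=(n, k))              # channel 1: frozen vectors
X_dynamic = X_static.copy()                     # channel 2: starts identical
X_dynamic += 0.05 * rng.normal(size=(n, k))     # pretend fine-tuning has changed it slightly
w, b = rng.normal(size=h * k), 0.1              # one filter shared by both channels


def multichannel_feature(i):
    """c_i = ReLU(w · x^static_{i:i+h-1} + w · x^dynamic_{i:i+h-1} + b)."""
    s = w @ np.concatenate(X_static[i:i + h])
    d = w @ np.concatenate(X_dynamic[i:i + h])
    return max(0.0, float(s + d) + b)


c = np.array([multichannel_feature(i) for i in range(n - h + 1)])
print(c.shape)   # (6,)
```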

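Regularization

The paper regularizes the model with dropout on the penultimate layer plus a constraint on the $l_2$ norms of the weight vectors. This is a max-norm constraint rather than an $l_2$ penalty added to the loss: whenever $\|\mathbf{w}\|_2 > s$ after a gradient descent step, $\mathbf{w}$ is rescaled so that $\|\mathbf{w}\|_2 = s$.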
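The $l_2$-norm constraint can be sketched as a projection applied after each gradient step. `max_norm_` is a hypothetical helper operating on a plain NumPy matrix whose rows are weight vectors, and $s = 3$ is the value the paper reports; in a real training loop the same rule would be applied to the constrained weights right after the optimizer step.

```python
import numpy as np


def max_norm_(W, s=3.0):
    """In place: rescale every row w of W with ||w||_2 > s so that ||w||_2 == s."""
    norms = np.linalg.norm(W, axis=1, keepdims=True)
    scale = np.minimum(1.0, s / np.maximum(norms, 1e-12))  # 1.0 for rows already within the limit
    W *= scale
    return W


W = np.array([[3.0, 4.0],     # norm 5 > 3: rescaled to norm 3
              [0.6, 0.8]])    # norm 1 <= 3: left unchanged
max_norm_(W, s=3.0)
print(W)
```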

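Dropout is used to prevent overfitting (more precisely, co-adaptation of hidden units): during training, each neuron is randomly deactivated with a certain probability.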

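Concretely, let the penultimate layer be $\mathbf{z} = [\hat{c}_1, \dots, \hat{c}_m]$, one pooled feature for each of the $m$ filters. During training, instead of computing the output as

$$y = \mathbf{w} \cdot \mathbf{z} + b,$$

the model uses

$$y = \mathbf{w} \cdot (\mathbf{z} \circ \mathbf{r}) + b,$$

where $\circ$ is element-wise multiplication and $\mathbf{r} \in \mathbb{R}^m$ is a masking vector of Bernoulli random variables, each equal to 1 with probability $p$ (the paper's dropout parameter $p$). Gradients are backpropagated only through the unmasked units.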
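The training-time computation written out in NumPy. The sizes, weights and seed are arbitrary placeholders. The mask is drawn with `rng.random(m) < p`, which makes each entry 1 with probability $p$, matching the definition above. Dropped entries of $\mathbf{z} \circ \mathbf{r}$ are zero, so they contribute nothing to $y$ and receive no gradient.

```python
import numpy as np

rng = np.random.default_rng(0)
m, p = 300, 0.5                       # size of z (number of filters), probability of r_j = 1
z = rng.normal(size=m)                # penultimate layer [ĉ_1, ..., ĉ_m]
w, b = rng.normal(size=m), 0.0        # weights of one output unit

r = (rng.random(m) < p).astype(z.dtype)   # Bernoulli masking vector
y_train = w @ (z * r) + b                 # y = w · (z ∘ r) + b

dropped = r == 0
print(int(dropped.sum()), "of", m, "units dropped in this forward pass")
```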

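At test time, no units are dropped. Instead, the learned weight vectors are scaled by $p$, i.e. $\hat{\mathbf{w}} = p\mathbf{w}$, and $\hat{\mathbf{w}}$ is used to score unseen sentences.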
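Why scaling by $p$ makes sense: since $\mathbb{E}[r_j] = p$, the expected training-time output is $\mathbb{E}[y] = \mathbf{w} \cdot (p\,\mathbf{z}) + b = (p\mathbf{w}) \cdot \mathbf{z} + b$. The NumPy sketch below (arbitrary sizes and seed) checks this numerically by averaging many randomly masked forward passes and comparing the result with a single unmasked pass using $\hat{\mathbf{w}} = p\mathbf{w}$.

```python
import numpy as np

rng = np.random.default_rng(0)
m, p, b = 100, 0.5, 0.2
z = rng.normal(size=m)
w = rng.normal(size=m)

# Training time: many independent Bernoulli masks, each r_j = 1 with probability p.
masks = (rng.random((20000, m)) < p).astype(float)
y_train_mean = ((masks * z) @ w + b).mean()

# Test time: no mask, weights scaled once by p.
w_hat = p * w
y_test = w_hat @ z + b

print(round(y_train_mean, 2), round(y_test, 2))   # approximately equal
```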

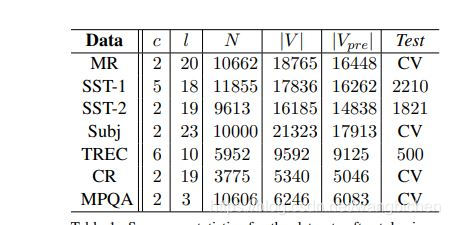

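Results

I won't go through the experimental results in detail.

Finally, here is my own code.

It does not use pre-trained word vectors, since it was only meant for learning the architecture, and its final F1 score, around 72, did not reach the level reported in the paper.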

```python
# model.py (imported by the training script as `model`)
import torch
import torch.nn as nn
import torch.nn.functional as F


class MyModel(nn.Module):
    def __init__(self, embeddings, n_features, n_classes, batch_size, dropout_prob=0.5):
        super().__init__()
        self.n_features = n_features      # maximum sentence length (not used by the layers)
        self.n_classes = n_classes
        self.dropout_prob = dropout_prob
        self.batch_size = batch_size
        self.embed_size = embeddings.shape[1]

        # Embedding layer initialised from the given matrix and fine-tuned during training.
        # Input:  [batch_size, seq_len] word ids, one sentence per row
        # Output: [batch_size, seq_len, embed_size]
        self.my_embeddings = nn.Embedding.from_pretrained(
            torch.tensor(embeddings, dtype=torch.float32), freeze=False)

        # Conv1d slides over the last dimension, so it expects [batch_size, embed_size, seq_len].
        # A bank of 100 filters of width h outputs [batch_size, 100, seq_len - h + 1].
        # Window sizes 3, 4 and 5, as in the paper.
        self.convs = nn.ModuleList([
            nn.Conv1d(in_channels=self.embed_size, out_channels=100, kernel_size=h)
            for h in (3, 4, 5)
        ])

        # After max-over-time pooling and concatenation: [batch_size, 300].
        # The paper feeds this vector directly into the softmax layer; the two
        # hidden layers below are an extra choice of this implementation.
        self.fc3 = nn.Linear(in_features=300, out_features=100)
        self.fc4 = nn.Linear(in_features=100, out_features=50)
        self.fc5 = nn.Linear(in_features=50, out_features=n_classes)
        self.dropout = nn.Dropout(dropout_prob)

    def forward(self, x):
        x = self.my_embeddings(x.long())        # [B, L, E]
        x = x.permute(0, 2, 1)                  # [B, E, L]
        pooled = []
        for conv in self.convs:
            c = F.relu(conv(x))                 # [B, 100, L - h + 1]
            # Max over the whole time axis: [B, 100, 1] -> [B, 100]
            pooled.append(F.max_pool1d(c, kernel_size=c.shape[2]).squeeze(2))
        x = torch.cat(pooled, dim=1)            # [B, 300]
        x = self.dropout(F.relu(self.fc3(x)))
        x = self.dropout(F.relu(self.fc4(x)))
        # Return raw logits: nn.CrossEntropyLoss applies log-softmax internally,
        # so adding a softmax here would apply it twice.
        return self.fc5(x)
```

Training process

```python
import numpy as np
import torch
import torch.optim as optim
import matplotlib.pyplot as plt
from sklearn import metrics
from torch.utils.data import DataLoader
from tqdm import tqdm

import model
import dataget

mydataset = dataget.MyDataSet()
mymodel = model.MyModel(embeddings=mydataset.word2vec, n_features=mydataset.maxlen,
                        n_classes=2, batch_size=64, dropout_prob=0.5)
# Reshuffle the training batches every epoch; the test set needs no shuffling.
train_loader = DataLoader(dataset=mydataset, batch_size=64, shuffle=True)
test_loader = DataLoader(dataset=dataget.MytestSet(mydataset), batch_size=64)

loss_function = torch.nn.CrossEntropyLoss()   # expects raw logits, not probabilities
optimizer = optim.Adam(mymodel.parameters(), lr=0.0001)
history_loss = []

for epoch in range(100):
    # ---- training ----
    mymodel.train()
    tr_loss, nb_tr_steps = 0.0, 0
    train_preds, train_labels = [], []
    for data, mask, labels in tqdm(train_loader):   # mask is not used by this model
        labels = labels.long()
        optimizer.zero_grad()
        logits = mymodel(data)
        loss = loss_function(logits, labels)
        loss.backward()
        optimizer.step()

        tr_loss += loss.item()
        nb_tr_steps += 1
        train_preds += logits.argmax(dim=1).tolist()
        train_labels += labels.tolist()

    train_loss = tr_loss / nb_tr_steps               # mean loss per batch
    train_accuracy = metrics.accuracy_score(train_labels, train_preds)
    train_w_f1 = metrics.f1_score(train_labels, train_preds, average='weighted')

    # ---- evaluation ----
    mymodel.eval()                                   # disables dropout
    tv_loss, nb_tv_steps = 0.0, 0
    valid_preds, valid_labels = [], []
    with torch.no_grad():                            # no gradients needed for evaluation
        for data, mask, labels in tqdm(test_loader):
            labels = labels.long()
            logits = mymodel(data)
            tv_loss += loss_function(logits, labels).item()
            nb_tv_steps += 1
            valid_preds += logits.argmax(dim=1).tolist()
            valid_labels += labels.tolist()

    valid_loss = tv_loss / nb_tv_steps
    valid_accuracy = metrics.accuracy_score(valid_labels, valid_preds)
    valid_w_f1 = metrics.f1_score(valid_labels, valid_preds, average='weighted')
    history_loss.append(valid_loss)

    print('\nEpoch %d, train_loss=%.5f, train_acc=%.2f, train_w_f1=%.2f, '
          'valid_loss=%.5f, valid_acc=%.2f, valid_w_f1=%.2f'
          % (epoch, train_loss, train_accuracy * 100, train_w_f1 * 100,
             valid_loss, valid_accuracy * 100, valid_w_f1 * 100))

# Plot the validation loss curve.
plt.figure(num=1, figsize=(4, 4))
plt.plot(np.arange(len(history_loss)), history_loss)
plt.xlabel('epoch')
plt.ylabel('validation loss')
plt.show()
```

Data processing

```python
# dataget.py (imported by the training script as `dataget`)
import random

import numpy as np
from torch.utils.data import Dataset
from tqdm import tqdm

NEG_PATH = 'E:\\python_project\\dataset\\rt-polaritydata\\rt-polarity.neg'
POS_PATH = 'E:\\python_project\\dataset\\rt-polaritydata\\rt-polarity.pos'
TRAIN_SIZE = 8000   # first 8000 shuffled sentences for training, the rest for testing


def get_word2id(data_paths):
    """Build a vocabulary mapping each word to an integer id."""
    print('loading vocabulary...')
    word2id = {'PAD': 0}   # id 0 is reserved for padding
    for path in data_paths:
        with open(path, encoding="Windows-1252") as f:
            for line in tqdm(f):
                for word in line.strip().split():
                    if word not in word2id:
                        word2id[word] = len(word2id)
    return word2id


def get_word2vec(word2id):
    # Random 50-dim vectors in [-1, 1); no pre-trained vectors are used here.
    # Pre-trained word2vec vectors could be loaded with gensim instead, e.g.
    # gensim.models.KeyedVectors.load_word2vec_format("GoogleNews-vectors-negative300.bin.gz", binary=True)
    return np.random.uniform(-1., 1., [len(word2id) + 1, 50])


def read_sentences(path, label, word2id):
    """Read one polarity file; every non-empty line is a sentence with the given label."""
    contents, labels = [], []
    with open(path, encoding="Windows-1252") as f:
        for line in tqdm(f):
            words = line.strip().split()
            if not words:
                continue
            contents.append([word2id[word] for word in words])
            labels.append(label)
    return contents, labels


def get_corpus(word2id):
    print('loading corpus...')
    neg, neg_labels = read_sentences(NEG_PATH, 0, word2id)
    pos, pos_labels = read_sentences(POS_PATH, 1, word2id)
    contents, labels = neg + pos, neg_labels + pos_labels
    maxlen = max(len(c) for c in contents)

    # Pad every sentence with PAD (id 0) up to maxlen; the mask marks real tokens with 1.
    masks = [[1] * len(c) + [0] * (maxlen - len(c)) for c in contents]
    contents = [c + [0] * (maxlen - len(c)) for c in contents]

    # Shuffle contents, masks and labels together so they stay aligned.
    cc = list(zip(contents, masks, labels))
    random.shuffle(cc)
    contents, masks, labels = map(list, zip(*cc))
    return contents, masks, labels, maxlen


class MyDataSet(Dataset):
    """Training split: the first TRAIN_SIZE shuffled sentences."""

    def __init__(self):
        self.word2id = get_word2id(data_paths=[POS_PATH, NEG_PATH])
        self.corpus = get_corpus(self.word2id)      # (contents, masks, labels, maxlen)
        self.word2vec = get_word2vec(self.word2id)
        self.maxlen = self.corpus[3]

    def __len__(self):
        return TRAIN_SIZE

    def __getitem__(self, index):
        """Return (word ids, mask, label) for one sentence."""
        contents, masks, labels, _ = self.corpus
        return np.array(contents[index]), np.array(masks[index]), np.array(labels[index])


class MytestSet(Dataset):
    """Test split: every sentence after the first TRAIN_SIZE, sharing the training vocabulary."""

    def __init__(self, train_set):
        self.mydataset = train_set

    def __len__(self):
        return len(self.mydataset.corpus[0]) - TRAIN_SIZE

    def __getitem__(self, index):
        """Return (word ids, mask, label) for one test sentence."""
        contents, masks, labels, _ = self.mydataset.corpus
        i = index + TRAIN_SIZE
        return np.array(contents[i]), np.array(masks[i]), np.array(labels[i])
```