1. Introduction to the tf.nn.conv2d() function

The signature of tf.nn.conv2d() is as follows:

tf.nn.conv2d(

    input,

    filters,

    strides,

    padding,

    data_format='NHWC',

    dilations=None,

    name=None

)

For parameter descriptions, see: https://tensorflow.google.cn/api_docs/python/tf/nn/conv2d

| Argument | Description |
|---|---|
| input | A Tensor. Must be one of the following types: half, bfloat16, float32, float64. A Tensor of rank at least 4. The dimension order is interpreted according to the value of data_format, with the all-but-inner-3 dimensions acting as batch dimensions. See below for details. |
| filters | A Tensor. Must have the same type as input. A 4-D tensor of shape [filter_height, filter_width, in_channels, out_channels]. |
| strides | An int or list of ints that has length 1, 2 or 4. The stride of the sliding window for each dimension of input. If a single value is given, it is replicated in the H and W dimensions. By default the N and C dimensions are set to 1. The dimension order is determined by the value of data_format; see below for details. |
| padding | Either the string "SAME" or "VALID", indicating the type of padding algorithm to use, or a list indicating the explicit paddings at the start and end of each dimension. When explicit padding is used and data_format is "NHWC", this should be in the form [[0, 0], [pad_top, pad_bottom], [pad_left, pad_right], [0, 0]]. When explicit padding is used and data_format is "NCHW", this should be in the form [[0, 0], [0, 0], [pad_top, pad_bottom], [pad_left, pad_right]]. |
| data_format | An optional string from: "NHWC", "NCHW". Defaults to "NHWC". Specifies the data format of the input and output data. With the default format "NHWC", the data is stored in the order batch_shape + [height, width, channels]. With "NCHW", the data is stored in the order batch_shape + [channels, height, width]. |
| dilations | An int or list of ints that has length 1, 2 or 4; defaults to 1. The dilation factor for each dimension of input. If a single value is given, it is replicated in the H and W dimensions. By default the N and C dimensions are set to 1. If set to k > 1, there will be k-1 skipped cells between each filter element on that dimension. The dimension order is determined by the value of data_format; see above for details. For a 4-D tensor, dilations in the batch and depth dimensions must be 1. |
| name | A name for the operation (optional). |
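The way strides and dilations accept an int or a list of length 1, 2 or 4 can be illustrated with a small pure-Python sketch. The helper name expand_spatial_arg is hypothetical and is not part of TensorFlow; it only mirrors the rule described in the table above.

```python
def expand_spatial_arg(value, data_format="NHWC"):
    """Expand an int or a list of length 1, 2 or 4 to a 4-element list.

    Hypothetical helper for illustration only; not a TensorFlow function.
    """
    if isinstance(value, int):
        value = [value]
    value = list(value)
    if len(value) == 4:
        return value                      # already one entry per dimension
    if len(value) == 1:
        value = value * 2                 # replicate the value for H and W
    if len(value) != 2:
        raise ValueError("expected an int or a list of length 1, 2 or 4")
    h, w = value
    if data_format == "NHWC":
        return [1, h, w, 1]               # N and C entries are set to 1
    if data_format == "NCHW":
        return [1, 1, h, w]
    raise ValueError("data_format must be 'NHWC' or 'NCHW'")


print(expand_spatial_arg(2))               # [1, 2, 2, 1]
print(expand_spatial_arg([2, 3], "NCHW"))  # [1, 1, 2, 3]
print(expand_spatial_arg([1, 2, 2, 1]))    # [1, 2, 2, 1]
```

Under this reading, strides=2 and strides=[1, 2, 2, 1] describe the same window movement for "NHWC" data.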

2. Convolution with tf.nn.conv2d()

2.1 Data used

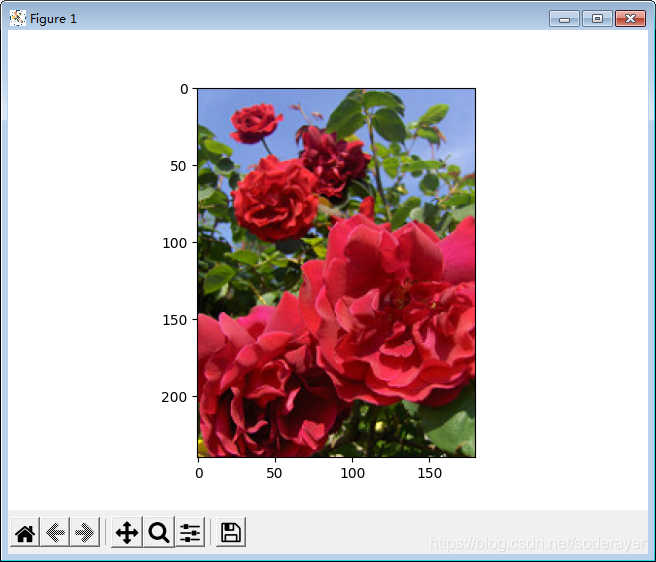

A picture of a rose selected from the flower_photos dataset.

2.2 Implementing the convolution in code

2.2.1 Imports

import numpy as np

import matplotlib.pyplot as plt

from PIL import Image

import tensorflow as tf

2.2.2 Reading and displaying the image

data = Image.open("roses_483444865_65962cea07_m.jpg")  # returns a PIL Image object

plt.imshow(data)

plt.show()

2.2.3 Reshaping the image

Convert the image to the layout required by the input argument, namely:

[batch_size, in_height, in_width, in_channels]

Here that is [1, 240, 180, 3].
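The reshape used in this step hard-codes the image size, so it only works for a 240x180 picture. A minimal sketch of an alternative that adds the batch axis without knowing the size, using a synthetic array as a stand-in for the photo (the random data is an assumption made so the snippet runs without the image file):

```python
import numpy as np

# Synthetic stand-in for np.array(Image.open(...)): height 240, width 180, RGB.
img = np.random.randint(0, 256, size=(240, 180, 3), dtype=np.uint8)

x = img.astype(np.float32) / 255.0   # scale pixel values to [0, 1]
x = np.expand_dims(x, axis=0)        # prepend the batch axis -> (1, H, W, C)

print(x.shape)  # (1, 240, 180, 3)
```

The same two lines work unchanged for an image of any height and width.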

x=np.array(data)

x=x/255

x=x.reshape(1,240,180,3)

print(x.shape)

2.2.4 Converting the array to a tensor

image_tensor=tf.convert_to_tensor(x)

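x_input = tf.cast(image_tensor,tf.float32)

2.2.5 Setting the filter argument

The kernel (the filter) is named kernel_in here. Its shape is [3, 2, 1, 3], in the order [filter_height, filter_width, in_channels, out_channels]. out_channels is set to 3, the same as the last dimension of input, so that the output keeps three channels and can be displayed as an RGB image. Note that in_channels is 1 here while the input has 3 channels. A standard convolution expects in_channels to equal the input's channel count; a smaller value that divides it is handled as a grouped convolution, which may not be supported on every device or TensorFlow version.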

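padding accepts "SAME" or "VALID". With "SAME" and a stride of 1, the output keeps the spatial dimensions of the input. With "VALID", no padding is added, so the output size depends on the kernel size and the stride.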
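The effect of padding on the output size can be worked out with a few lines of arithmetic. The sketch below uses the usual formulas for "SAME" and "VALID" with a dilation of 1; the function name conv_output_size is a hypothetical helper, not a TensorFlow API.

```python
import math

def conv_output_size(in_size, kernel_size, stride, padding):
    """Output length along one spatial dimension (dilation 1 assumed)."""
    if padding == "SAME":
        return math.ceil(in_size / stride)
    if padding == "VALID":
        return math.ceil((in_size - kernel_size + 1) / stride)
    raise ValueError("padding must be 'SAME' or 'VALID'")

# Image of height 240 and width 180 with a 3x2 kernel.
for padding in ("SAME", "VALID"):
    for stride in (1, 2):
        h = conv_output_size(240, 3, stride, padding)
        w = conv_output_size(180, 2, stride, padding)
        print(padding, stride, (h, w))
# SAME 1 (240, 180)
# SAME 2 (120, 90)
# VALID 1 (238, 179)
# VALID 2 (119, 90)
```

Only "SAME" with stride 1 gives back 240x180, which is why the later reshape to (240, 180, 3) works for the call used in this article.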

kernel_in = np.array([

[[[1, 1, 1]], [[1, 1, 1]]],

[[[1, 1, 1]], [[1, 1, 1]]],

[[[1, 1, 1]], [[1, 1, 1]]]])

kernel= tf.constant(kernel_in, dtype=tf.float32)
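To make explicit what a 2-D convolution over NHWC data computes, here is a naive NumPy reference, offered as a sketch rather than as TensorFlow's implementation. It assumes stride 1, no padding ("VALID"), and a kernel whose in_channels equals the input's channel count; like tf.nn.conv2d, it slides the kernel without flipping it (strictly a cross-correlation). The name conv2d_valid is hypothetical.

```python
import numpy as np

def conv2d_valid(x, k):
    """Naive NHWC convolution with stride 1 and no padding.

    x: (batch, height, width, in_channels)
    k: (filter_height, filter_width, in_channels, out_channels)
    """
    n, h, w, c_in = x.shape
    kh, kw, k_in, c_out = k.shape
    assert c_in == k_in, "kernel in_channels must match the input channels"
    out = np.zeros((n, h - kh + 1, w - kw + 1, c_out), dtype=x.dtype)
    for i in range(out.shape[1]):
        for j in range(out.shape[2]):
            patch = x[:, i:i + kh, j:j + kw, :]   # (n, kh, kw, c_in)
            # Multiply the patch by the kernel and sum over kh, kw and c_in.
            out[:, i, j, :] = np.tensordot(patch, k, axes=([1, 2, 3], [0, 1, 2]))
    return out

x = np.arange(16, dtype=np.float32).reshape(1, 4, 4, 1)   # values 0..15
k = np.ones((3, 2, 1, 1), dtype=np.float32)               # 3x2 kernel of ones
y = conv2d_valid(x, k)
print(y.shape)        # (1, 2, 3, 1)
print(y[0, :, :, 0])  # [[27. 33. 39.] [51. 57. 63.]]
```

Each output value is the sum of a 3x2 window of the input, which is what an all-ones kernel of that size does to every channel it is applied to.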

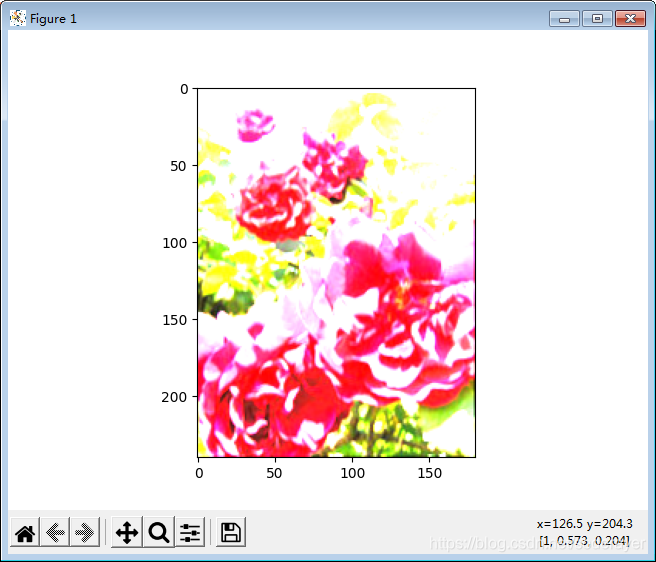

z=tf.nn.conv2d(x_input, kernel, strides=[1,1,1,1], padding='SAME')

2.2.6 Displaying the result

x_conv2d=np.array(z)

x_conv2d=x_conv2d.reshape(240,180,3)

#x_conv2d=np.array(x_conv2d,dtype=float)

print(x_conv2d)

plt.imshow(x_conv2d)

plt.show()

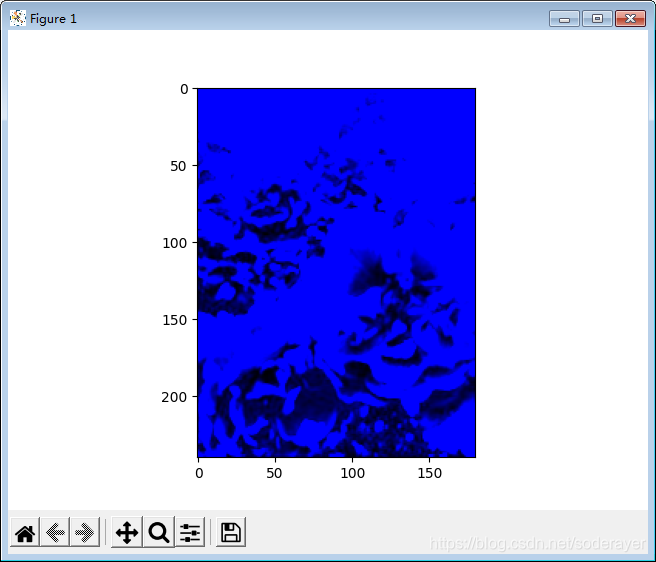
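With the all-ones 3x2 kernel, every output value is a sum of six inputs in [0, 1], so it can reach 6, while plt.imshow clips floating-point RGB data to [0, 1]. One possible way to keep the full range visible is to rescale before plotting. The sketch below is an assumption about how one might do that; rescale_for_display is a hypothetical helper and the random array stands in for the convolution output.

```python
import numpy as np

def rescale_for_display(a):
    """Linearly map an array to [0, 1]; a constant array maps to zeros."""
    a = np.asarray(a, dtype=np.float32)
    lo, hi = a.min(), a.max()
    if hi == lo:
        return np.zeros_like(a)
    return (a - lo) / (hi - lo)

# Synthetic stand-in for the convolution output, with values between 0 and 6.
rng = np.random.default_rng(0)
fake_output = rng.uniform(0.0, 6.0, size=(240, 180, 3))

shown = rescale_for_display(fake_output)
print(shown.min(), shown.max())  # 0.0 1.0
```

The rescaled array can then be passed to plt.imshow in place of x_conv2d.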

2.2.7 Results with a different kernel

Change the kernel:

kernel_in = np.array([

[[[-1, 0, 1]],[[-2, 0, 2]],[[-1, 0, 1]]],

[[[-1, 0, 1]],[[-2, 0, 2]],[[-1, 0, 1]]],

[[[-1, 0, 1]],[[-2, 0, 2]],[[-1, 0, 1]]]])
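In the kernel above, the innermost lists ([-1, 0, 1] and so on) run along the out_channels axis, so each output channel receives a filter whose coefficients all share one sign or are all zero. If a horizontal-gradient (Sobel) filter is wanted, the coefficients would need to vary along the filter_width axis instead. A minimal sketch of one possible layout, assuming a [3, 3, 3, 3] kernel that applies the same Sobel matrix to each channel independently:

```python
import numpy as np

# Sobel matrix indexed as (filter_height, filter_width).
sobel_x = np.array([[-1, 0, 1],
                    [-2, 0, 2],
                    [-1, 0, 1]], dtype=np.float32)

channels = 3
# kernel[h, w, i, o] = sobel_x[h, w] when i == o, otherwise 0,
# so each output channel only sees the matching input channel.
kernel_in = sobel_x[:, :, None, None] * np.eye(channels, dtype=np.float32)

print(kernel_in.shape)        # (3, 3, 3, 3)
print(kernel_in[:, :, 0, 0])  # the Sobel matrix
print(kernel_in[:, :, 0, 1])  # all zeros
```

Because in_channels is 3 here, this kernel matches the input's channel count and does not rely on grouped-convolution support.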

3. Complete code

import numpy as np

import matplotlib.pyplot as plt

from PIL import Image

import tensorflow as tf

data = Image.open("roses_483444865_65962cea07_m.jpg")  # returns a PIL Image object

plt.imshow(data)

plt.show()

x=np.array(data)

x=x/255

x=x.reshape(1,240,180,3)

image_tensor=tf.convert_to_tensor(x)

x_input = tf.cast(image_tensor,tf.float32)

print("x_in {}".format(x_input.shape))

kernel_in = np.array([

[[[-1, 0, 1]],[[-2, 0, 2]],[[-1, 0, 1]]],

[[[-1, 0, 1]],[[-2, 0, 2]],[[-1, 0, 1]]],

[[[-1, 0, 1]],[[-2, 0, 2]],[[-1, 0, 1]]]])

kernel = tf.constant(kernel_in, dtype=tf.float32)

z=tf.nn.conv2d(x_input, kernel, strides=[1,1,1,1], padding='SAME')

x_conv2d=np.array(z)

x_conv2d=x_conv2d.reshape(240,180,3)

plt.imshow(x_conv2d)

plt.show()
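The complete script above depends on a local image file. For a quick check of tf.nn.conv2d without that file, the following sketch uses a small random tensor and a 3x3 averaging kernel with in_channels equal to the input's channel count. The data and the kernel are assumptions chosen for illustration, and the snippet assumes TensorFlow 2.x with eager execution.

```python
import numpy as np
import tensorflow as tf

# Synthetic stand-in for the photo: one 8x6 RGB image with values in [0, 1].
x_input = tf.constant(np.random.rand(1, 8, 6, 3).astype(np.float32))

# 3x3 averaging kernel applied to each channel independently.
# Shape: [filter_height, filter_width, in_channels, out_channels] = [3, 3, 3, 3].
box = np.full((3, 3), 1.0 / 9.0, dtype=np.float32)
kernel = tf.constant(box[:, :, None, None] * np.eye(3, dtype=np.float32))

same = tf.nn.conv2d(x_input, kernel, strides=[1, 1, 1, 1], padding="SAME")
valid = tf.nn.conv2d(x_input, kernel, strides=[1, 1, 1, 1], padding="VALID")

print(same.shape)   # (1, 8, 6, 3)
print(valid.shape)  # (1, 6, 4, 3)
```

"SAME" keeps the 8x6 spatial size, while "VALID" shrinks it by the kernel size minus one in each direction.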