九、过拟合

内容参考来自https://github.com/dragen1860/Deep-Learning-with-TensorFlow-book开源书籍《TensorFlow2深度学习》,这只是我做的简单的学习笔记,方便以后复习

1.模型的容量

通俗地讲,模型的容量或表达能力,是指模型拟合复杂函数的能力。

2.过拟合与欠拟合

过拟合(Overfitting):当模型的容量过大时,网络模型除了学习到训练集数据的模态之外,还把额外的观测

误差也学习进来,导致学习的模型在训练集上面表现较好,但是在未见的样本上表现不

佳,也就是模型泛化能力偏弱。

欠拟合(Underfitting):当模型的容量过小时,模型不能够很好地学习到训练集数据的模态,导致训练集上表现不佳,同时在未见的样本上表现也不佳。

3.正则化

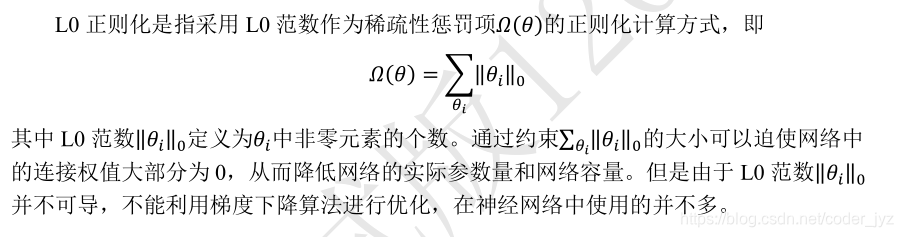

常用的正则化方式有 L0、L1、L2 正则化。

实例

import tensorflow as tf

from tensorflow.keras import datasets, layers, optimizers, Sequential, metrics

def preprocess(x, y):

x = tf.cast(x, dtype=tf.float32) / 255.

y = tf.cast(y, dtype=tf.int32)

return x,y

batchsz = 128

(x, y), (x_val, y_val) = datasets.mnist.load_data()

print('datasets:', x.shape, y.shape, x.min(), x.max())

db = tf.data.Dataset.from_tensor_slices((x,y))

db = db.map(preprocess).shuffle(60000).batch(batchsz).repeat(10)

ds_val = tf.data.Dataset.from_tensor_slices((x_val, y_val))

ds_val = ds_val.map(preprocess).batch(batchsz)

network = Sequential([layers.Dense(256, activation='relu'),

layers.Dense(128, activation='relu'),

layers.Dense(64, activation='relu'),

layers.Dense(32, activation='relu'),

layers.Dense(10)])

network.build(input_shape=(None, 28*28))

network.summary()

optimizer = optimizers.Adam(lr=0.01)

for step, (x,y) in enumerate(db):

with tf.GradientTape() as tape:

# [b, 28, 28] => [b, 784]

x = tf.reshape(x, (-1, 28*28))

# [b, 784] => [b, 10]

out = network(x)

# [b] => [b, 10]

y_onehot = tf.one_hot(y, depth=10)

# [b]

loss = tf.reduce_mean(tf.losses.categorical_crossentropy(y_onehot, out, from_logits=True))

loss_regularization = []

for p in network.trainable_variables:

loss_regularization.append(tf.nn.l2_loss(p))

loss_regularization = tf.reduce_sum(tf.stack(loss_regularization))

loss = loss + 0.0001 * loss_regularization

grads = tape.gradient(loss, network.trainable_variables)

optimizer.apply_gradients(zip(grads, network.trainable_variables))

if step % 100 == 0:

print(step, 'loss:', float(loss), 'loss_regularization:', float(loss_regularization))

# evaluate

if step % 500 == 0:

total, total_correct = 0., 0

for step, (x, y) in enumerate(ds_val):

# [b, 28, 28] => [b, 784]

x = tf.reshape(x, (-1, 28*28))

# [b, 784] => [b, 10]

out = network(x)

# [b, 10] => [b]

pred = tf.argmax(out, axis=1)

pred = tf.cast(pred, dtype=tf.int32)

# bool type

correct = tf.equal(pred, y)

# bool tensor => int tensor => numpy

total_correct += tf.reduce_sum(tf.cast(correct, dtype=tf.int32)).numpy()

total += x.shape[0]

print(step, 'Evaluate Acc:', total_correct/total)

4.Dropout

Dropout 通过随机断开神经网络的连接,减少每次训练时实际参与计算的模型的参数量;但是在测试时,Dropout 会恢

复所有的连接,保证模型测试时获得最好的性能。

# 添加 dropout 操作,断开概率为 0.5

x = tf.nn.dropout(x, rate=0.5)

# 添加 Dropout 层,断开概率为 0.5

model.add(layers.Dropout(rate=0.5))

5.数据增强

数据增强(DataAugmentation)是指在维持样本标签不变的条件下,根据先验知识改变样本的特征,使得新产生的样本也符合或者近似符合数据的真实分布。

旋转

# 图片逆时针旋转 180 度

x = tf.image.rot90(x,2)

翻转

# 随机水平翻转

x = tf.image.random_flip_left_right(x)

# 随机竖直翻转

x = tf.image.random_flip_up_down(x)

裁剪

# 图片先缩放到稍大尺寸

x = tf.image.resize(x, [244, 244])

# 再随机裁剪到合适尺寸

x = tf.image.random_crop(x, [224,224,3])

6.过拟合问题实战

import matplotlib.pyplot as plt

# 导入数据集生成工具

import numpy as np

import seaborn as sns

from sklearn.datasets import make_moons

from sklearn.model_selection import train_test_split

from tensorflow.keras import layers, Sequential, regularizers

from mpl_toolkits.mplot3d import Axes3D

plt.rcParams['font.size'] = 16

plt.rcParams['font.family'] = ['STKaiti']

plt.rcParams['axes.unicode_minus'] = False

OUTPUT_DIR = 'output_dir'

N_EPOCHS = 500

def load_dataset():

# 采样点数

N_SAMPLES = 1000

# 测试数量比率

TEST_SIZE = None

# 从 moon 分布中随机采样 1000 个点,并切分为训练集-测试集

X, y = make_moons(n_samples=N_SAMPLES, noise=0.25, random_state=100)

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=TEST_SIZE, random_state=42)

return X, y, X_train, X_test, y_train, y_test

def make_plot(X, y, plot_name, file_name, XX=None, YY=None, preds=None, dark=False, output_dir=OUTPUT_DIR):

# 绘制数据集的分布, X 为 2D 坐标, y 为数据点的标签

if dark:

plt.style.use('dark_background')

else:

sns.set_style("whitegrid")

axes = plt.gca()

axes.set_xlim([-2, 3])

axes.set_ylim([-1.5, 2])

axes.set(xlabel="$x_1$", ylabel="$x_2$")

plt.title(plot_name, fontsize=20, fontproperties='SimHei')

plt.subplots_adjust(left=0.20)

plt.subplots_adjust(right=0.80)

if XX is not None and YY is not None and preds is not None:

plt.contourf(XX, YY, preds.reshape(XX.shape), 25, alpha=0.08, cmap=plt.cm.Spectral)

plt.contour(XX, YY, preds.reshape(XX.shape), levels=[.5], cmap="Greys", vmin=0, vmax=.6)

# 绘制散点图,根据标签区分颜色m=markers

markers = ['o' if i == 1 else 's' for i in y.ravel()]

mscatter(X[:, 0], X[:, 1], c=y.ravel(), s=20, cmap=plt.cm.Spectral, edgecolors='none', m=markers, ax=axes)

# 保存矢量图

plt.savefig(output_dir + '/' + file_name)

plt.show()

plt.close()

def mscatter(x, y, ax=None, m=None, **kw):

import matplotlib.markers as mmarkers

if not ax: ax = plt.gca()

sc = ax.scatter(x, y, **kw)

if (m is not None) and (len(m) == len(x)):

paths = []

for marker in m:

if isinstance(marker, mmarkers.MarkerStyle):

marker_obj = marker

else:

marker_obj = mmarkers.MarkerStyle(marker)

path = marker_obj.get_path().transformed(

marker_obj.get_transform())

paths.append(path)

sc.set_paths(paths)

return sc

def network_layers_influence(X_train, y_train):

# 构建 5 种不同层数的网络

for n in range(5):

# 创建容器

model = Sequential()

# 创建第一层

model.add(layers.Dense(8, input_dim=2, activation='relu'))

# 添加 n 层,共 n+2 层

for _ in range(n):

model.add(layers.Dense(32, activation='relu'))

# 创建最末层

model.add(layers.Dense(1, activation='sigmoid'))

# 模型装配与训练

model.compile(loss='binary_crossentropy', optimizer='adam', metrics=['accuracy'])

model.fit(X_train, y_train, epochs=N_EPOCHS, verbose=1)

# 绘制不同层数的网络决策边界曲线

# 可视化的 x 坐标范围为[-2, 3]

xx = np.arange(-2, 3, 0.01)

# 可视化的 y 坐标范围为[-1.5, 2]

yy = np.arange(-1.5, 2, 0.01)

# 生成 x-y 平面采样网格点,方便可视化

XX, YY = np.meshgrid(xx, yy)

preds = model.predict_classes(np.c_[XX.ravel(), YY.ravel()])

title = "网络层数:{0}".format(2 + n)

file = "网络容量_%i.png" % (2 + n)

make_plot(X_train, y_train, title, file, XX, YY, preds, output_dir=OUTPUT_DIR + '/network_layers')

def dropout_influence(X_train, y_train):

# 构建 5 种不同数量 Dropout 层的网络

for n in range(5):

# 创建容器

model = Sequential()

# 创建第一层

model.add(layers.Dense(8, input_dim=2, activation='relu'))

counter = 0

# 网络层数固定为 5

for _ in range(5):

model.add(layers.Dense(64, activation='relu'))

# 添加 n 个 Dropout 层

if counter < n:

counter += 1

model.add(layers.Dropout(rate=0.5))

# 输出层

model.add(layers.Dense(1, activation='sigmoid'))

# 模型装配

model.compile(loss='binary_crossentropy', optimizer='adam', metrics=['accuracy'])

# 训练

model.fit(X_train, y_train, epochs=N_EPOCHS, verbose=1)

# 绘制不同 Dropout 层数的决策边界曲线

# 可视化的 x 坐标范围为[-2, 3]

xx = np.arange(-2, 3, 0.01)

# 可视化的 y 坐标范围为[-1.5, 2]

yy = np.arange(-1.5, 2, 0.01)

# 生成 x-y 平面采样网格点,方便可视化

XX, YY = np.meshgrid(xx, yy)

preds = model.predict_classes(np.c_[XX.ravel(), YY.ravel()])

title = "无Dropout层" if n == 0 else "{0}层 Dropout层".format(n)

file = "Dropout_%i.png" % n

make_plot(X_train, y_train, title, file, XX, YY, preds, output_dir=OUTPUT_DIR + '/dropout')

def build_model_with_regularization(_lambda):

# 创建带正则化项的神经网络

model = Sequential()

model.add(layers.Dense(8, input_dim=2, activation='relu')) # 不带正则化项

# 2-4层均是带 L2 正则化项

model.add(layers.Dense(256, activation='relu', kernel_regularizer=regularizers.l2(_lambda)))

model.add(layers.Dense(256, activation='relu', kernel_regularizer=regularizers.l2(_lambda)))

model.add(layers.Dense(256, activation='relu', kernel_regularizer=regularizers.l2(_lambda)))

# 输出层

model.add(layers.Dense(1, activation='sigmoid'))

model.compile(loss='binary_crossentropy', optimizer='adam', metrics=['accuracy']) # 模型装配

return model

def plot_weights_matrix(model, layer_index, plot_name, file_name, output_dir=OUTPUT_DIR):

# 绘制权值范围函数

# 提取指定层的权值矩阵

weights = model.layers[layer_index].get_weights()[0]

shape = weights.shape

# 生成和权值矩阵等大小的网格坐标

X = np.array(range(shape[1]))

Y = np.array(range(shape[0]))

X, Y = np.meshgrid(X, Y)

# 绘制3D图

fig = plt.figure()

ax = fig.gca(projection='3d')

ax.xaxis.set_pane_color((1.0, 1.0, 1.0, 0.0))

ax.yaxis.set_pane_color((1.0, 1.0, 1.0, 0.0))

ax.zaxis.set_pane_color((1.0, 1.0, 1.0, 0.0))

plt.title(plot_name, fontsize=20, fontproperties='SimHei')

# 绘制权值矩阵范围

ax.plot_surface(X, Y, weights, cmap=plt.get_cmap('rainbow'), linewidth=0)

# 设置坐标轴名

ax.set_xlabel('网格x坐标', fontsize=16, rotation=0, fontproperties='SimHei')

ax.set_ylabel('网格y坐标', fontsize=16, rotation=0, fontproperties='SimHei')

ax.set_zlabel('权值', fontsize=16, rotation=90, fontproperties='SimHei')

# 保存矩阵范围图

plt.savefig(output_dir + "/" + file_name + ".svg")

plt.close(fig)

def regularizers_influence(X_train, y_train):

for _lambda in [1e-5, 1e-3, 1e-1, 0.12, 0.13]: # 设置不同的正则化系数

# 创建带正则化项的模型

model = build_model_with_regularization(_lambda)

# 模型训练

model.fit(X_train, y_train, epochs=N_EPOCHS, verbose=1)

# 绘制权值范围

layer_index = 2

plot_title = "正则化系数:{}".format(_lambda)

file_name = "正则化网络权值_" + str(_lambda)

# 绘制网络权值范围图

plot_weights_matrix(model, layer_index, plot_title, file_name, output_dir=OUTPUT_DIR + '/regularizers')

# 绘制不同正则化系数的决策边界线

# 可视化的 x 坐标范围为[-2, 3]

xx = np.arange(-2, 3, 0.01)

# 可视化的 y 坐标范围为[-1.5, 2]

yy = np.arange(-1.5, 2, 0.01)

# 生成 x-y 平面采样网格点,方便可视化

XX, YY = np.meshgrid(xx, yy)

preds = model.predict_classes(np.c_[XX.ravel(), YY.ravel()])

title = "正则化系数:{}".format(_lambda)

file = "正则化_%g.svg" % _lambda

make_plot(X_train, y_train, title, file, XX, YY, preds, output_dir=OUTPUT_DIR + '/regularizers')

def main():

X, y, X_train, X_test, y_train, y_test = load_dataset()

# 绘制数据集分布

make_plot(X, y, None, "月牙形状二分类数据集分布.svg")

# 网络层数的影响

network_layers_influence(X_train, y_train)

# Dropout的影响

dropout_influence(X_train, y_train)

# 正则化的影响

regularizers_influence(X_train, y_train)

if __name__ == '__main__':

main()

图太多就不展示了,可以自己试试

欢迎关注我的微信公众号,同步更新,嘻嘻