1. Regression:

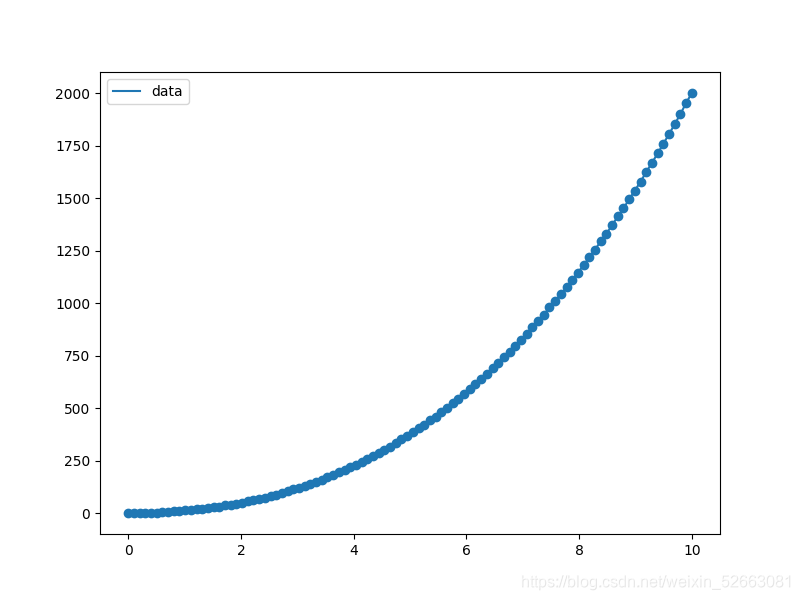

Assume we already know the data follows a cubic relationship:

import statsmodels.api as sm

import matplotlib.pyplot as plt

import pandas as pd

import numpy as np

nsample = 100

# values of x

x = np.linspace(0, 10, nsample)

# build the columns of the design matrix: x, x^2, x^3

X = np.column_stack((x, x**2,x**3))

# add a constant (intercept) column to X

X = sm.add_constant(X)

# coefficients of the simulated equation (constant, x, x^2, x^3)

beta = np.array([1,0.1,10,1])

# noise term

e = np.random.normal(size=nsample)

# generate y from the equation

y = np.dot(X, beta) + e

## call OLS(...).fit() to fit the curve

model = sm.OLS(y,X).fit()

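fig, ax = plt.subplots(figsize=(8,6))

# scatter the simulated data and overlay the fitted cubic curve

ax.scatter(x, y, label='data')

ax.plot(x, model.fittedvalues, 'r-', label='OLS fit')

ax.legend(loc='best')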

plt.show()
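To check that the fit recovers the simulated coefficients, the estimates can be compared with `beta`. The following is a minimal sketch that repeats the simulation with plain NumPy least squares rather than statsmodels, so it runs with NumPy alone; the fixed seed and the variable name `coef` are assumptions added here for repeatability and are not part of the original script.

```python
import numpy as np

# Assumption: a fixed seed so the run is repeatable (the original noise is unseeded).
rng = np.random.default_rng(0)

nsample = 100
x = np.linspace(0, 10, nsample)

# Design matrix with columns 1, x, x^2, x^3, the same layout as sm.add_constant(X).
X = np.column_stack((np.ones(nsample), x, x**2, x**3))
beta = np.array([1, 0.1, 10, 1])
y = X @ beta + rng.normal(size=nsample)

# Ordinary least squares via NumPy; coef holds the estimates of beta.
coef, residuals, rank, _ = np.linalg.lstsq(X, y, rcond=None)

for name, true, est in zip(["const", "x", "x^2", "x^3"], beta, coef):
    print(f"{name:>5}: true={true:<5} estimated={est:.3f}")
```

With statsmodels, the same estimates are available as `model.params`, and `model.summary()` prints them together with their standard errors. The higher-order coefficients are typically recovered much more tightly than the constant and linear terms.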

2. Classification

Train several classifiers on the iris dataset and compare them:

import pandas as pd

from sklearn.model_selection import train_test_split

from sklearn.ensemble import RandomForestClassifier

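# HistGradientBoostingClassifier is importable from sklearn.ensemble in scikit-learn >= 1.0

from sklearn.ensemble import GradientBoostingClassifier, HistGradientBoostingClassifier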

from sklearn.datasets import load_iris

iris = load_iris()

## x is the feature matrix

x = iris["data"]

## y is the label vector

y = iris["target"]

## shuffle the samples and split them into training and test sets for x and y

xtrain,xtest,ytrain,ytest = train_test_split(x,y,test_size=0.3,random_state=0)

######## Random forest

## define a classifier

rfc = RandomForestClassifier(max_depth=5,random_state=0)

rfc.fit(xtrain,ytrain) ## fit the classifier on the training set

y_pred = rfc.predict(xtest) # predict labels for the test set

######## Gradient boosting

gtc = GradientBoostingClassifier(max_depth=5,random_state=0)

gtc.fit(xtrain,ytrain)

gtc_pred = gtc.predict(xtest)

######## Histogram-based gradient boosting

hbc = HistGradientBoostingClassifier(max_depth=5,random_state=0)

hbc.fit(xtrain,ytrain)

hbc_pred = hbc.predict(xtest)

## Random forest

rf = pd.DataFrame(list(zip(ytest,y_pred)),columns=['real','pred'])

rf["num"] = rf.apply(lambda r:1 if r["real"] == r["pred"] else 0,axis=1)

##### check the accuracy

print(sum(rf["num"])/len(rf["real"]))

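## Gradient boosting

gtcf = pd.DataFrame(list(zip(ytest,gtc_pred)),columns=['real','pred'])

# compare within gtcf itself (not rf), otherwise the random forest accuracy is printed again

gtcf["num"] = gtcf.apply(lambda r:1 if r["real"] == r["pred"] else 0,axis=1)

print(sum(gtcf["num"])/len(gtcf["real"]))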

##### Increase the number of trees (n_estimators) and watch the accuracy

from sklearn.ensemble import RandomForestClassifier

import pandas as pd

import matplotlib.pyplot as plt

from sklearn.datasets import load_iris

from sklearn.model_selection import train_test_split

iris = load_iris()

x = iris["data"]

y = iris["target"]

x_train,x_test,y_train,y_test = train_test_split(x,y,test_size=0.3,random_state=0)

for i in range(40):

    # random forest with i+1 trees

    clf = RandomForestClassifier(max_depth=5,n_estimators=i+1)

    clf.fit(x_train,y_train)

    pred = clf.predict(x_test)

    rf = pd.DataFrame(list(zip(y_test,pred)),columns=["test","pred"])

    rf["num"] = rf.apply(lambda r:1 if r["test"] == r["pred"] else 0,axis=1)

    print(sum(rf["num"])/len(rf["test"]))

    # one point per forest size

    plt.scatter(i,sum(rf["num"])/len(rf["test"]))

plt.show()

0.9777777777777777

0.9777777777777777
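Because the loop above does not fix `random_state`, the accuracy for a given number of trees can change between runs. A minimal sketch of a repeatable variant follows; it collects the accuracies in a list using the classifier's own `score` method. Setting `random_state=0` is an assumption added here, and `n_trees` and `accuracies` are illustrative names.

```python
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split

iris = load_iris()
x_train, x_test, y_train, y_test = train_test_split(
    iris["data"], iris["target"], test_size=0.3, random_state=0
)

n_trees = list(range(1, 41))
accuracies = []
for n in n_trees:
    # Assumption: random_state=0 is added so every run builds the same forests.
    clf = RandomForestClassifier(max_depth=5, n_estimators=n, random_state=0)
    clf.fit(x_train, y_train)
    # score() returns the mean accuracy on the given test data.
    accuracies.append(clf.score(x_test, y_test))

best = max(accuracies)
print(f"best accuracy {best:.4f}, first reached with {n_trees[accuracies.index(best)]} trees")
```

The two lists can then be drawn as a single curve with `plt.plot(n_trees, accuracies)` instead of one `plt.scatter` call per iteration.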