GoogLeNet

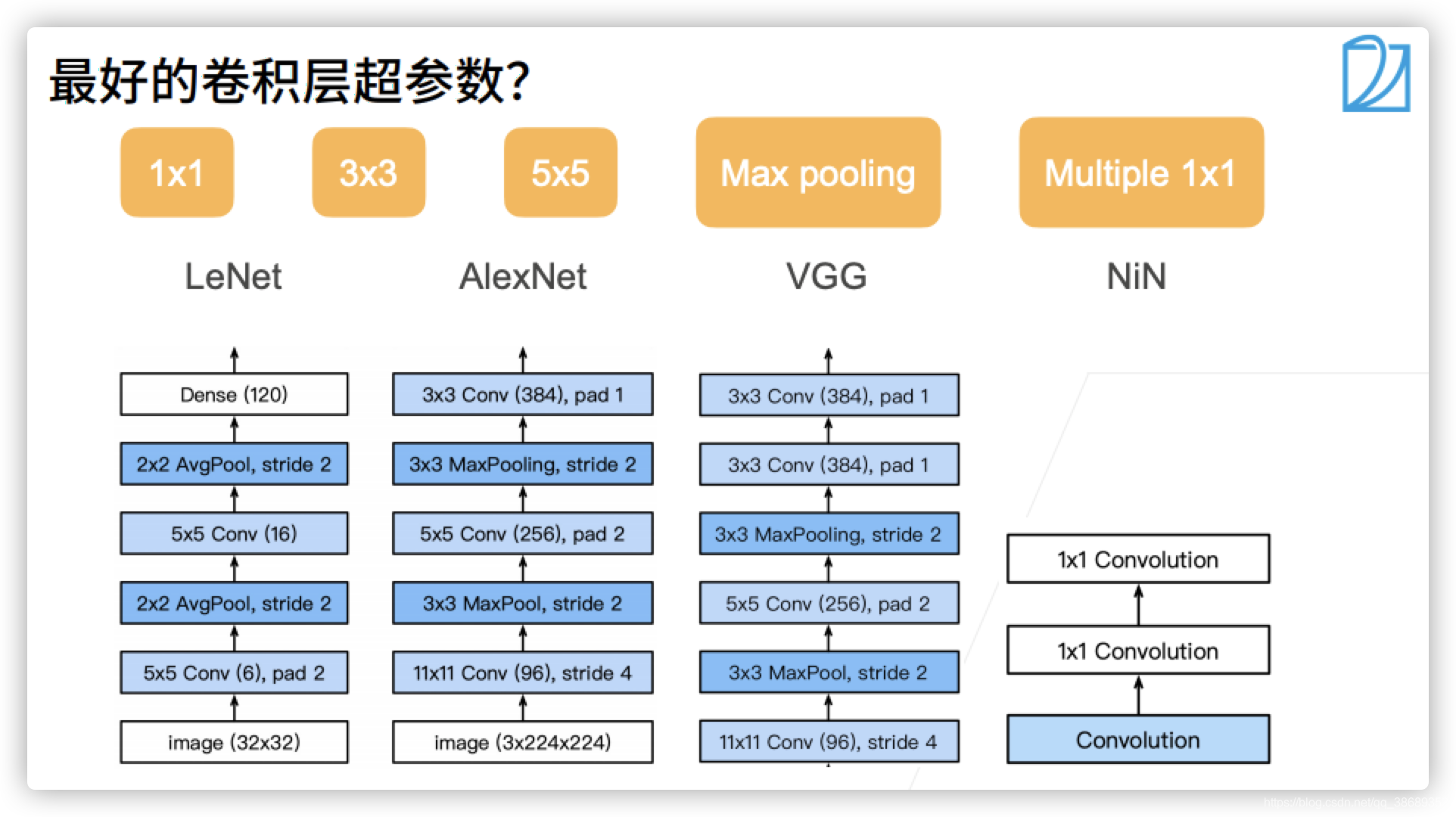

- 前面我们学习了一些经典的神经网络,我们不经会问一个问题,我们为什么要这么做,为什么要那么做?

Inception块:小学生才做选择题,我全都要

-

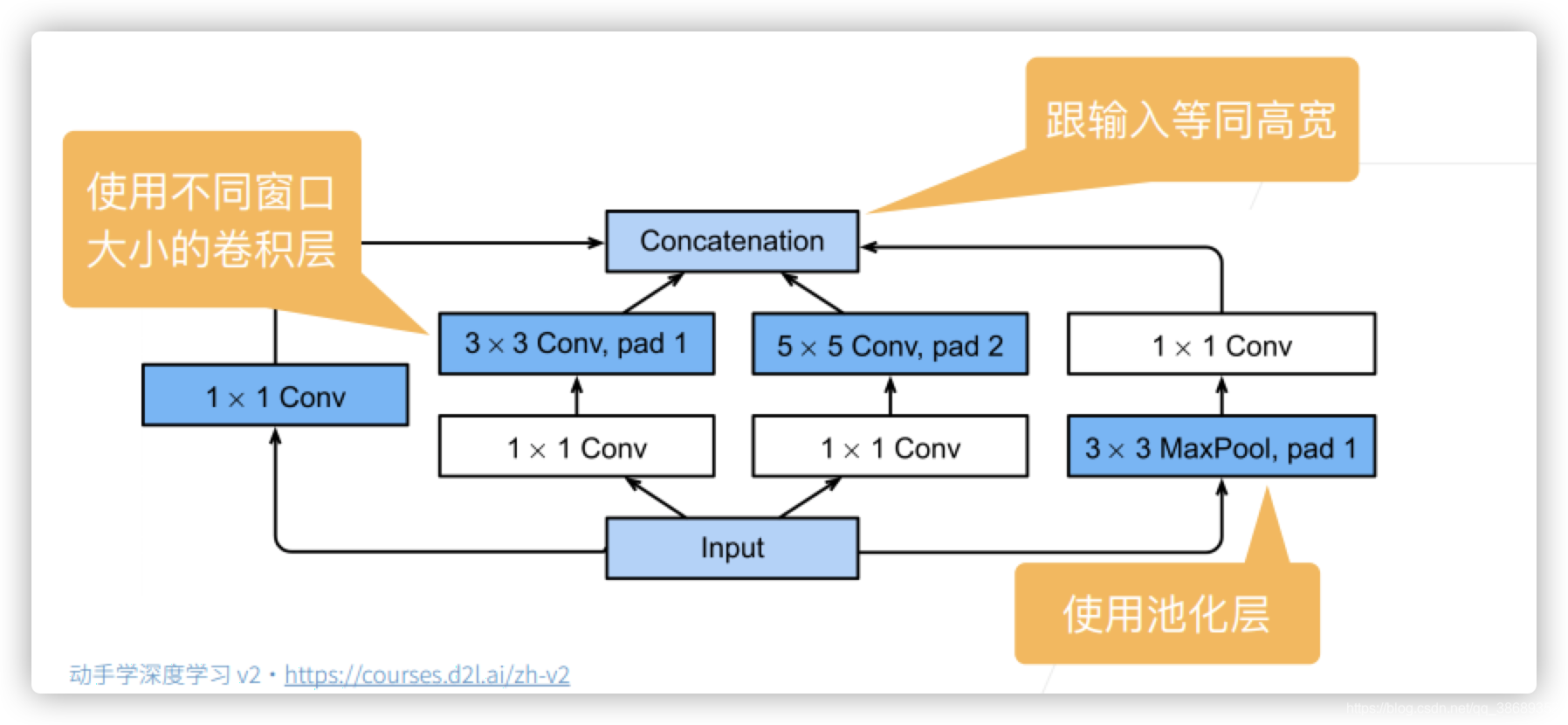

四个路径从不同层面抽取信息,然后在输出通道维合并。意思就是不同的块里面有不同的通道,把我们想要的东西都放里面。

-

Inception块在做什么:将输入copy成为四块。第一条路接入到一个1 * 1的卷积层,再输出到Concatenation处。第二条路是:先通过一个1 * 1的卷积层对通道坐变换,再输入到一个3 * 3的卷积层,padding=1.使得输入和输出的高宽一样。第三条路:先通过一个1 * 1的卷积层对通道坐变换,通过一个5 * 5的卷积层,padding=2。第四条路:先通过一个3 * 3的MaxPool,在使用padding,再在后面加上一个1 * 1的卷积层。 然后经过这四条路之后,将这些合在一起,就是将通道数叠加,而不是将图片变大。进过这个块之后高宽不变,变的是我们的通道数

-

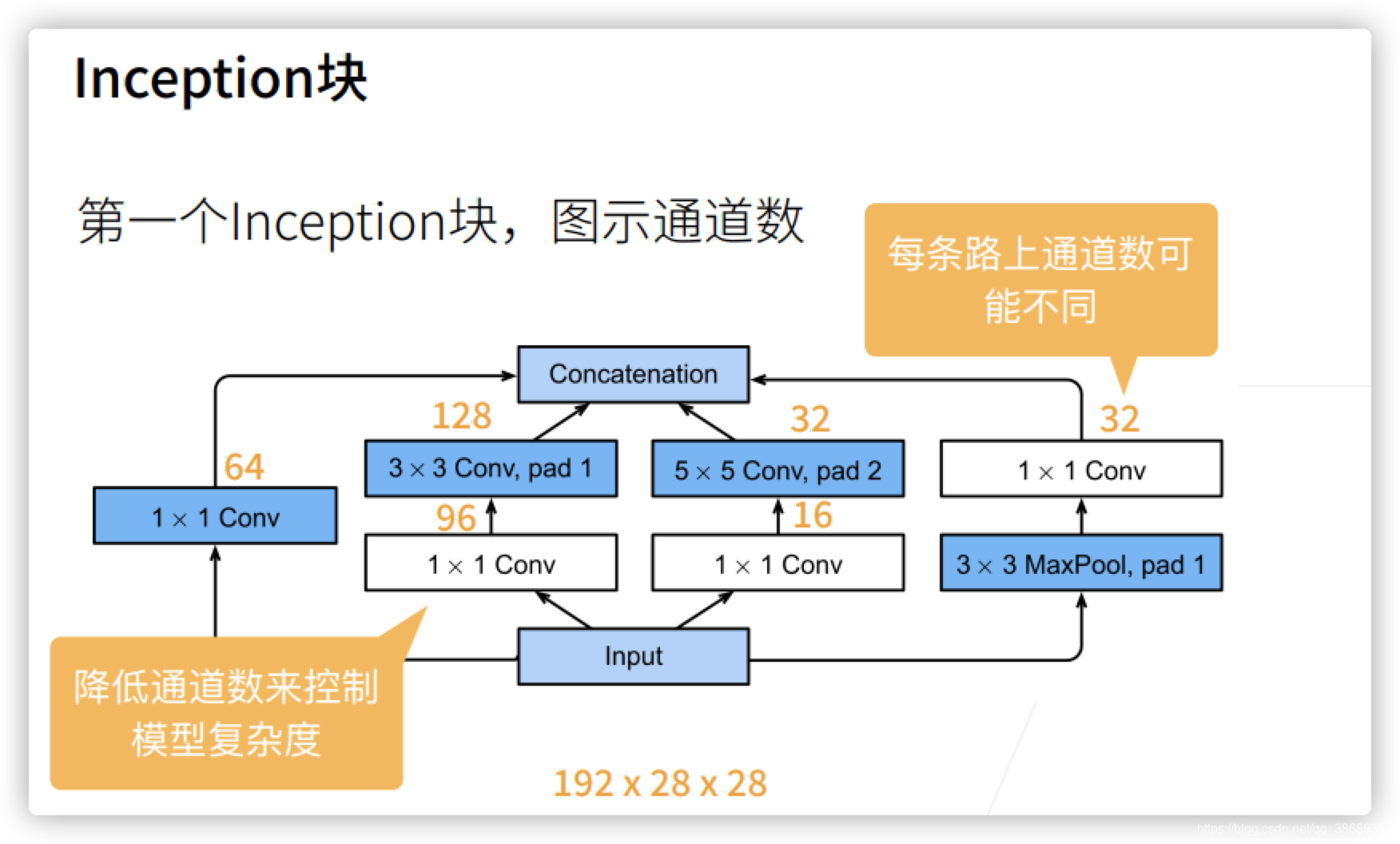

看一下通道数,下图中白色的框基本上来改变通道数的,蓝色的框可以认为是用来抽取信息的,最后的通道是:64+128+32+32。

-

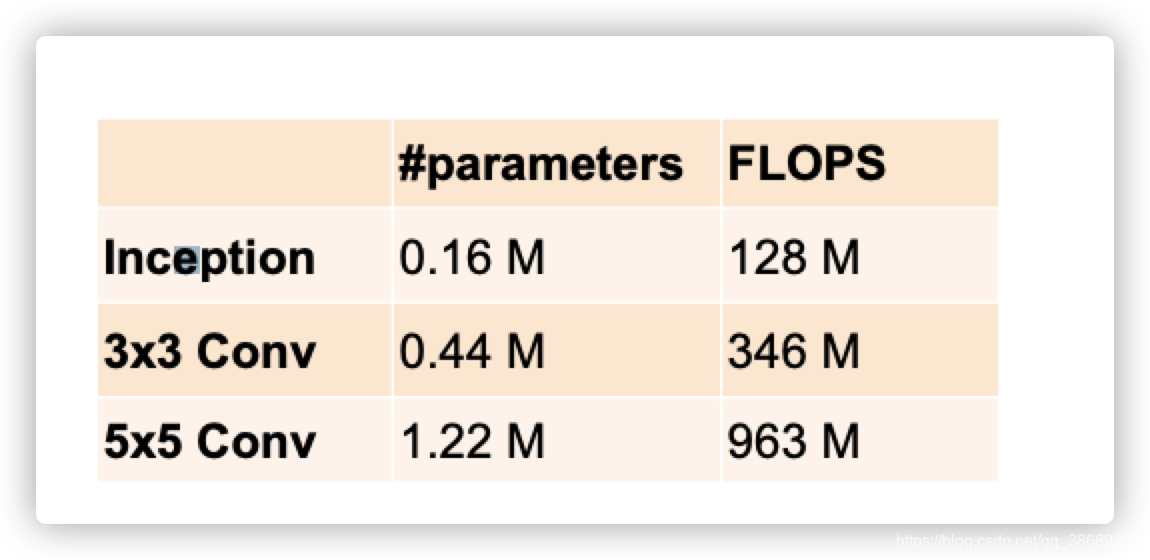

跟3 * 3或5 * 5卷积层比,Inception块有更少的参数个数和计算复杂度。

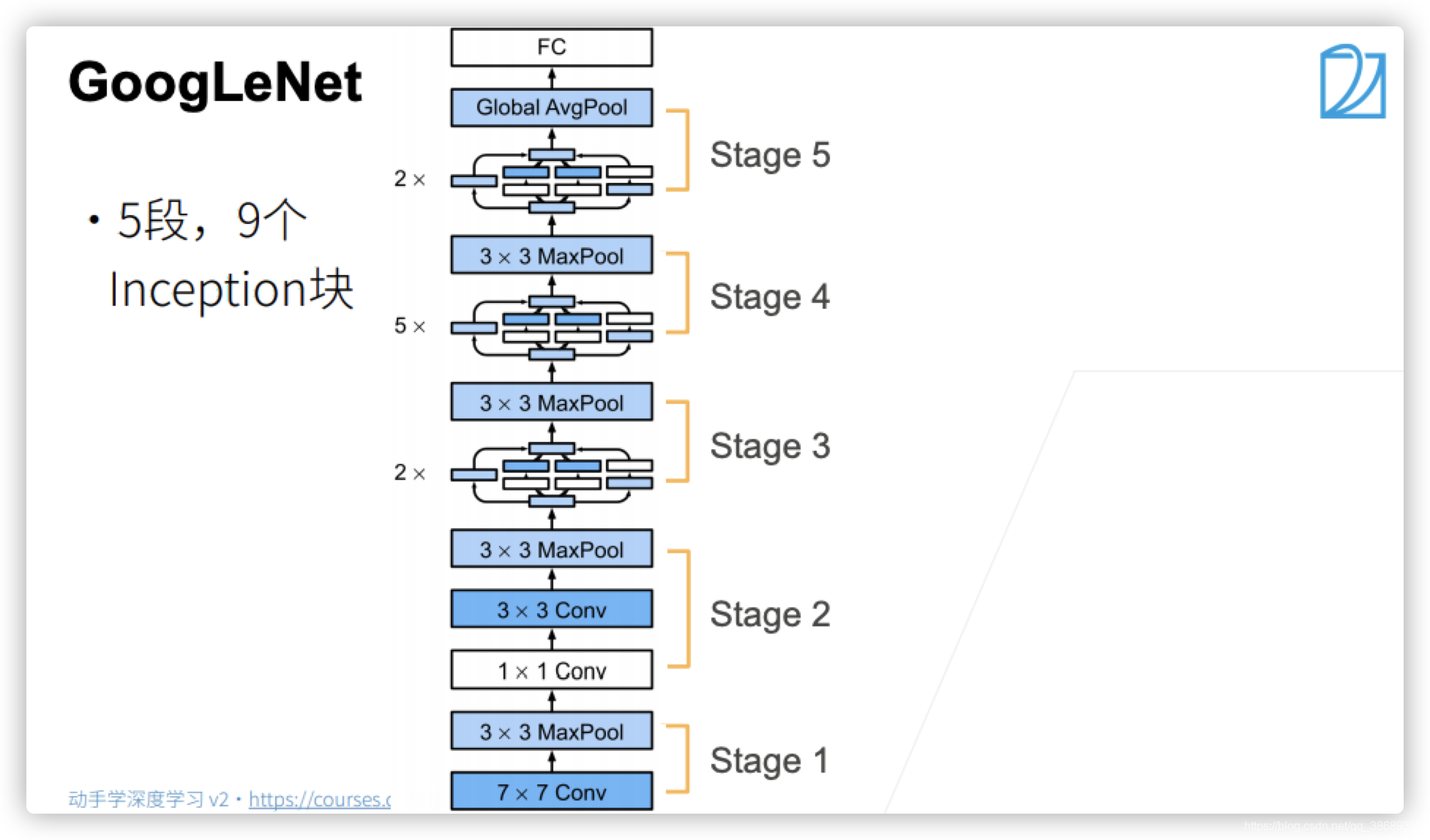

GoogLeNet

- 这里每个stage的意思是将高宽减半。这里最后使用的全局平均池化层之后,会拿到一个长为通道数的向量,然后通过一个全连接层来映射到类别数。

- Stage 1&2

- 使用了更小的窗口,更多的通道

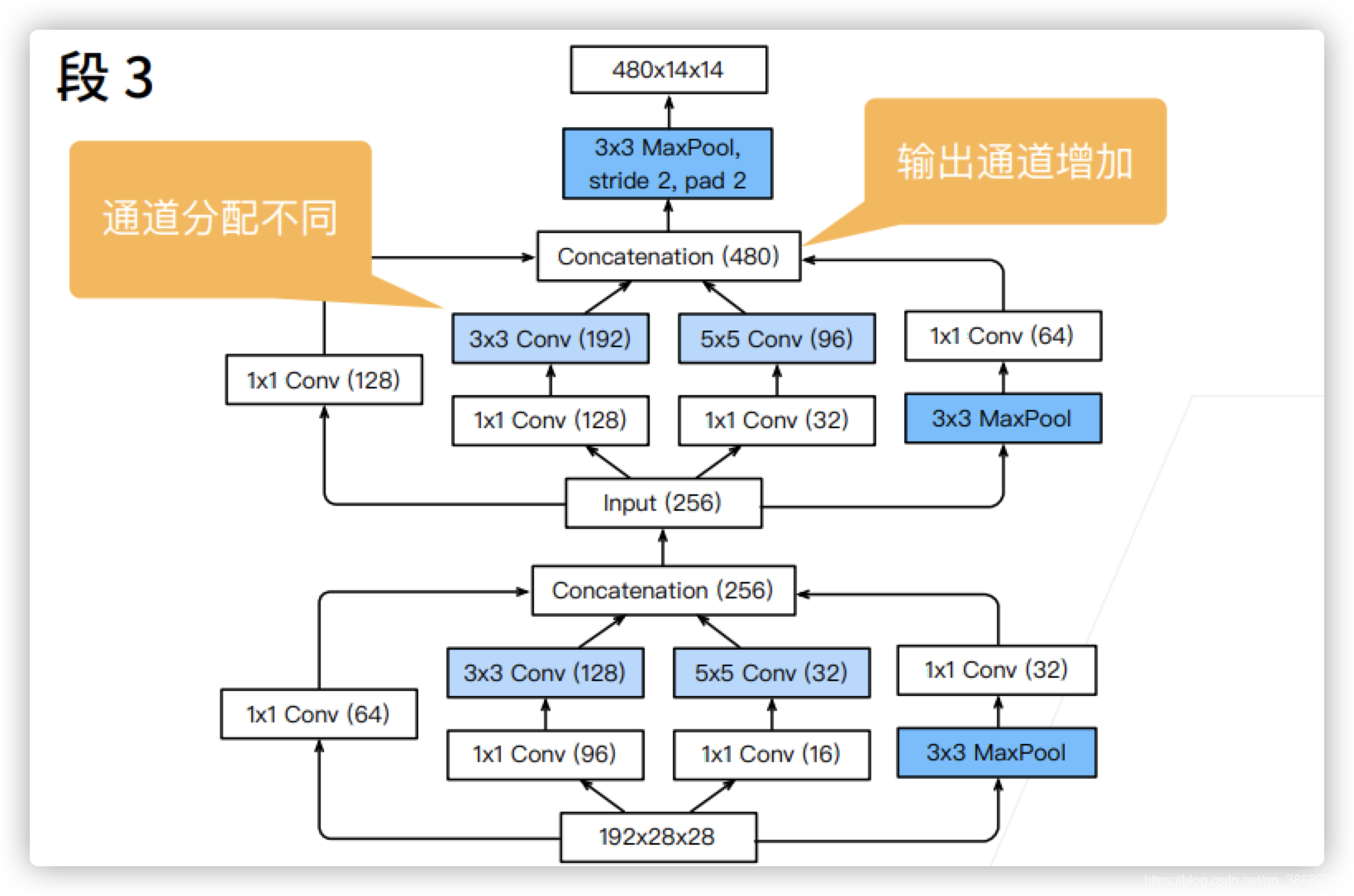

- Stage 3

- 这里使用两个Inception Block。经过这一段之后,通道数从192变为了480,大小从28 * 28变为了14 * 14

- 这里使用两个Inception Block。经过这一段之后,通道数从192变为了480,大小从28 * 28变为了14 * 14

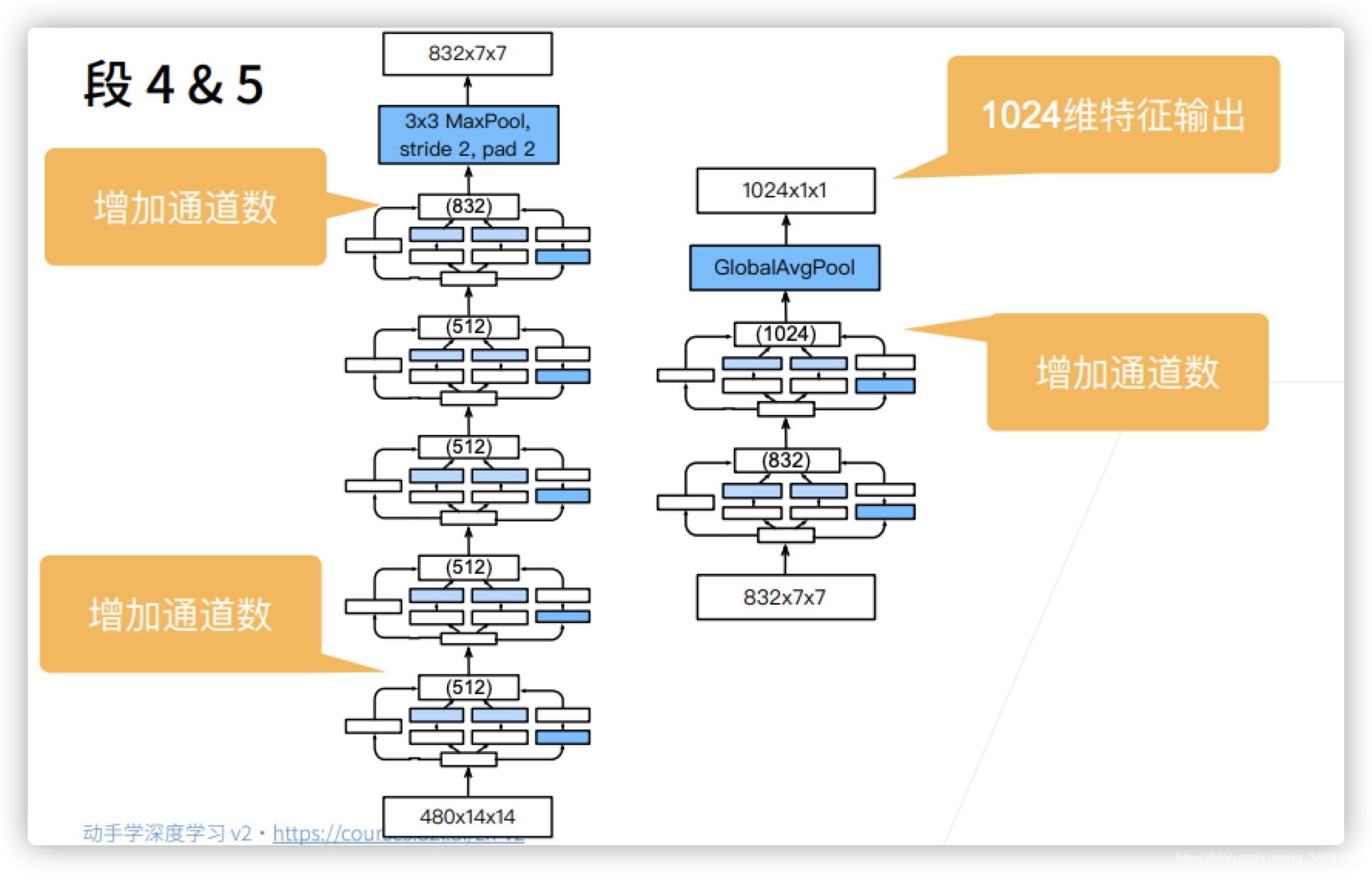

- Stage 4&5

- 经过上面这些Stage后,就是不断增加通道数。

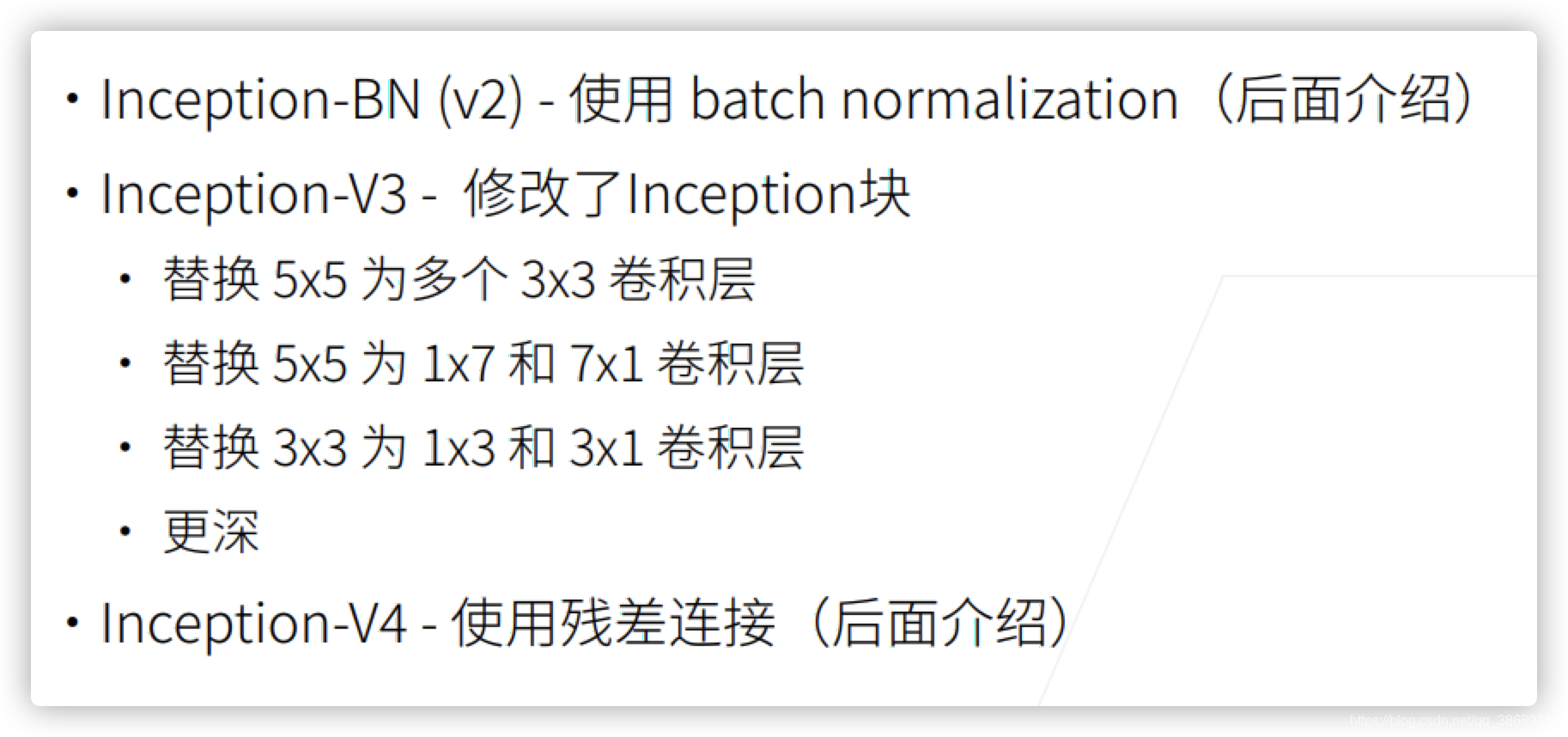

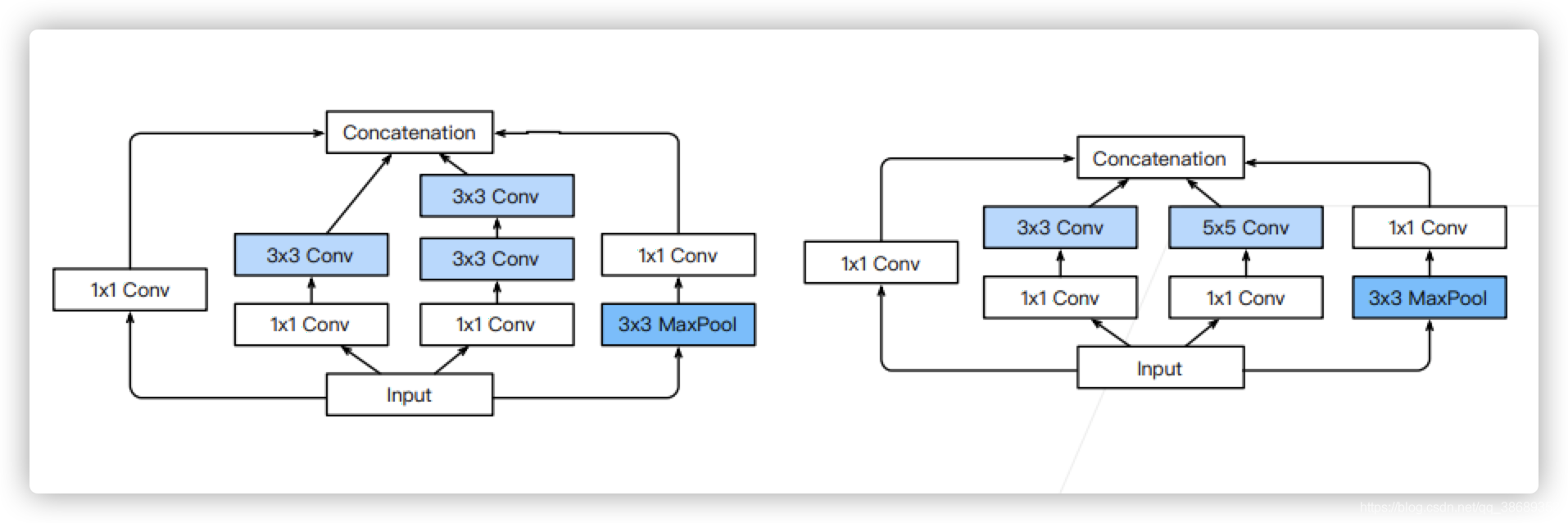

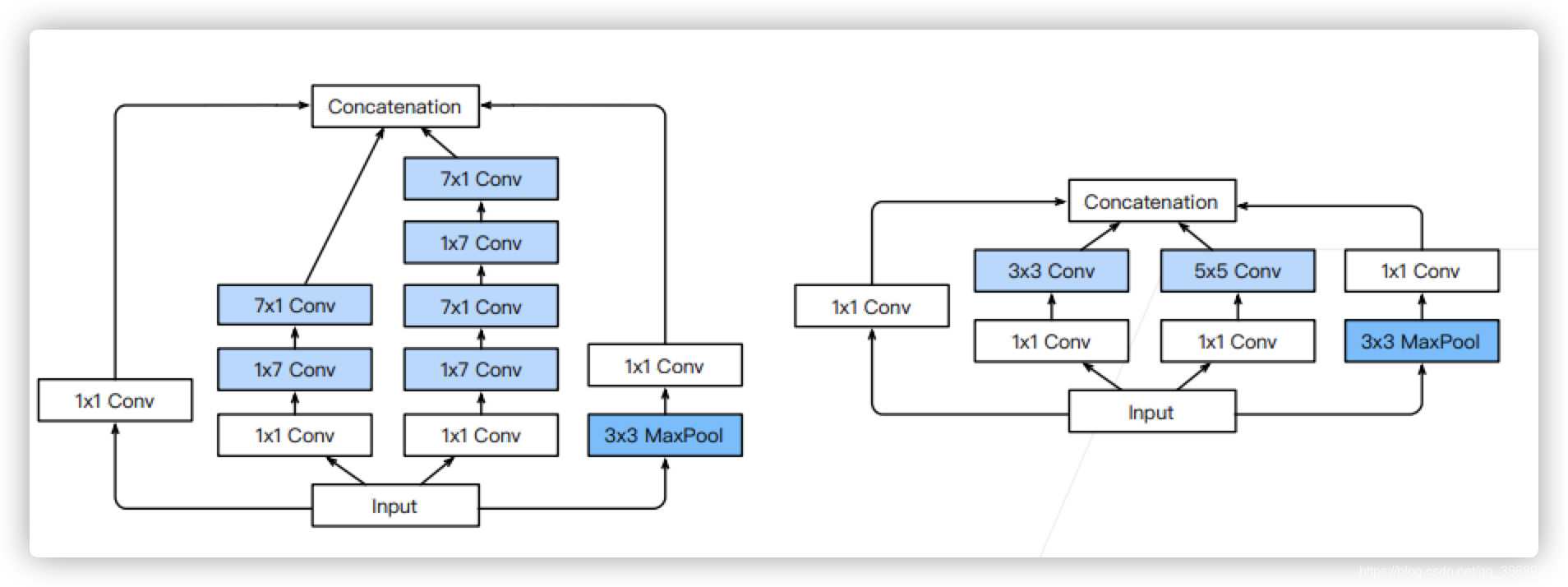

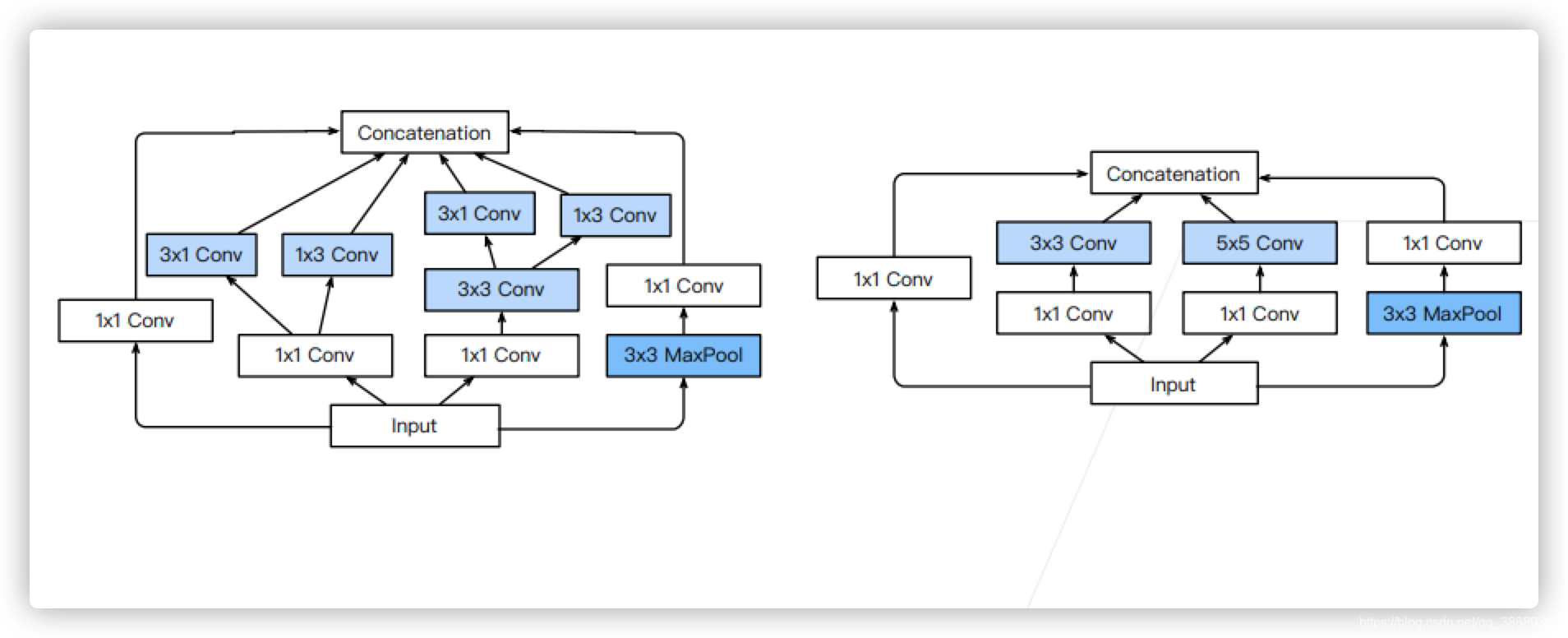

Inception后续的变种

- Inception V3块,Stage 3。下图右边是原始的,下下图也是

- Inception V3块,段4

- Inception V3 Block,Stage 5

总结

代码实现

import torch

from torch import nn

from torch.nn import functional as F

from d2l import torch as d2l

# 创建一个Inception块

class Inception(nn.Module):

def __init__(self, in_channels, c1, c2, c3, c4, **kwargs):

super(Inception, self).__init__(**kwargs)

self.p1_1 = nn.Conv2d(in_channels, c1, kernel_size=1)

self.p2_1 = nn.Conv2d(in_channels, c2[0], kernel_size=1)

self.p2_2 = nn.Conv2d(c2[0], c2[1], kernel_size=3, padding=1)

self.p3_1 = nn.Conv2d(in_channels, c3[0], kernel_size=1)

self.p3_2 = nn.Conv2d(c3[0], c3[1], kernel_size=5, padding=2)

self.p4_1 = nn.MaxPool2d(kernel_size=3, stride=1, padding=1)

self.p4_2 = nn.Conv2d(in_channels, c4, kernel_size=1)

def forward(self, x):

p1 = F.relu(self.p1_1(x))

p2 = F.relu(self.p2_2(F.relu(self.p2_1(x))))

p3 = F.relu(self.p3_2(F.relu(self.p3_1(x))))

p4 = F.relu(self.p4_2(self.p4_1(x)))

return torch.cat((p1, p2, p3, p4), dim=1)

# Stage 1:一个卷积加上一个最大池化

b1 = nn.Sequential(nn.Conv2d(1, 64, kernel_size=7, stride=2, padding=3),

nn.ReLU(), nn.MaxPool2d(kernel_size=3, stride=2, padding=1))

# Stage 2

b2 = nn.Sequential(nn.Conv2d(64, 64, kernel_size=1), nn.ReLU(),

nn.Conv2d(64, 192, kernel_size=3, padding=1),

nn.MaxPool2d(kernel_size=3, stride=2, padding=1))

# Stage 3

b3 = nn.Sequential(Inception(192, 64, (96, 128), (16, 32), 32),

Inception(256, 128, (128, 192), (32, 96), 64),

nn.MaxPool2d(kernel_size=3, stride=2, padding=1))

# Stage 4

b4 = nn.Sequential(Inception(480, 192, (96, 208), (16, 48), 64),

Inception(512, 160, (112, 224), (24, 64), 64),

Inception(512, 128, (128, 256), (24, 64), 64),

Inception(512, 112, (144, 288), (32, 64), 64),

Inception(528, 256, (160, 320), (32, 128), 128),

nn.MaxPool2d(kernel_size=3, stride=2, padding=1))

# Stage 5

b5 = nn.Sequential(Inception(832, 256, (160, 320), (32, 128), 128),

Inception(832, 384, (192, 384), (48, 128), 128),

nn.AdaptiveAvgPool2d((1, 1)), nn.Flatten())

net = nn.Sequential(b1, b2, b3, b4, b5, nn.Linear(1024, 10))

X = torch.rand(size=(1, 1, 96, 96))

for layer in net:

X = layer(X)

print(layer.__class__.__name__, 'output shape:\t', X.shape)

Sequential output shape: torch.Size([1, 64, 24, 24])

Sequential output shape: torch.Size([1, 192, 12, 12])

Sequential output shape: torch.Size([1, 480, 6, 6])

Sequential output shape: torch.Size([1, 832, 3, 3])

Sequential output shape: torch.Size([1, 1024])

Linear output shape: torch.Size([1, 10])

/Users/tiger/opt/anaconda3/envs/d2l-zh/lib/python3.8/site-packages/torch/nn/functional.py:718: UserWarning: Named tensors and all their associated APIs are an experimental feature and subject to change. Please do not use them for anything important until they are released as stable. (Triggered internally at ../c10/core/TensorImpl.h:1156.)

return torch.max_pool2d(input, kernel_size, stride, padding, dilation, ceil_mode)

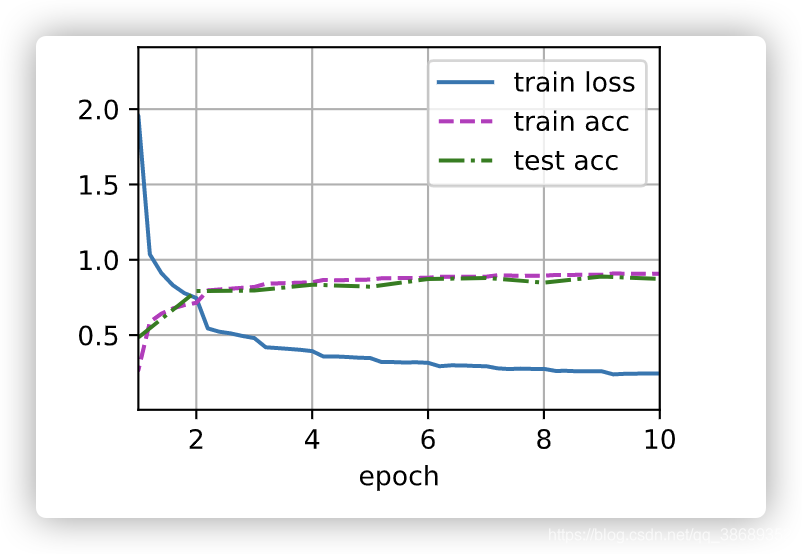

训练结果

import torch

import time

from torch import nn

from torch.nn import functional as F

from d2l import torch as d2l

# 创建一个Inception块

class Inception(nn.Module):

def __init__(self, in_channels, c1, c2, c3, c4, **kwargs):

super(Inception, self).__init__(**kwargs)

self.p1_1 = nn.Conv2d(in_channels, c1, kernel_size=1)

self.p2_1 = nn.Conv2d(in_channels, c2[0], kernel_size=1)

self.p2_2 = nn.Conv2d(c2[0], c2[1], kernel_size=3, padding=1)

self.p3_1 = nn.Conv2d(in_channels, c3[0], kernel_size=1)

self.p3_2 = nn.Conv2d(c3[0], c3[1], kernel_size=5, padding=2)

self.p4_1 = nn.MaxPool2d(kernel_size=3, stride=1, padding=1)

self.p4_2 = nn.Conv2d(in_channels, c4, kernel_size=1)

def forward(self, x):

p1 = F.relu(self.p1_1(x))

p2 = F.relu(self.p2_2(F.relu(self.p2_1(x))))

p3 = F.relu(self.p3_2(F.relu(self.p3_1(x))))

p4 = F.relu(self.p4_2(self.p4_1(x)))

return torch.cat((p1, p2, p3, p4), dim=1)

# Stage 1:一个卷积加上一个最大池化

b1 = nn.Sequential(nn.Conv2d(1, 64, kernel_size=7, stride=2, padding=3),

nn.ReLU(), nn.MaxPool2d(kernel_size=3, stride=2, padding=1))

# Stage 2

b2 = nn.Sequential(nn.Conv2d(64, 64, kernel_size=1), nn.ReLU(),

nn.Conv2d(64, 192, kernel_size=3, padding=1),

nn.MaxPool2d(kernel_size=3, stride=2, padding=1))

# Stage 3

b3 = nn.Sequential(Inception(192, 64, (96, 128), (16, 32), 32),

Inception(256, 128, (128, 192), (32, 96), 64),

nn.MaxPool2d(kernel_size=3, stride=2, padding=1))

# Stage 4

b4 = nn.Sequential(Inception(480, 192, (96, 208), (16, 48), 64),

Inception(512, 160, (112, 224), (24, 64), 64),

Inception(512, 128, (128, 256), (24, 64), 64),

Inception(512, 112, (144, 288), (32, 64), 64),

Inception(528, 256, (160, 320), (32, 128), 128),

nn.MaxPool2d(kernel_size=3, stride=2, padding=1))

# Stage 5

b5 = nn.Sequential(Inception(832, 256, (160, 320), (32, 128), 128),

Inception(832, 384, (192, 384), (48, 128), 128),

nn.AdaptiveAvgPool2d((1, 1)), nn.Flatten())

start = time.time()

net = nn.Sequential(b1, b2, b3, b4, b5, nn.Linear(1024, 10))

lr, num_epochs, batch_size = 0.1, 10, 128

train_iter, test_iter = d2l.load_data_fashion_mnist(batch_size, resize=96)

d2l.train_ch6(net, train_iter, test_iter, num_epochs, lr, d2l.try_gpu())

end = time.time()

print(f'time:\t{end - start}')

loss 0.246, train acc 0.907, test acc 0.874

484.0 examples/sec on cuda:0

time: 1352.389996767044