JetsonTX2 :

?JetsonTX2 系列 (1)硬件系统配置

?Jetson TX2 系列(2)软件系统配置

?Jetson TX2 系列(3)深度学习环境搭建(Pytorch)

?Jetson TX2 系列(4)ORB_SLAM2 环境搭建

?Jetson TX2 系列(5)Ubuntu18.04 安装与使用

?Jetson TX2 系列(6)ORB_SLAM2和ROS的开发IDE配置(clion和VSCode)

?本文主要完成基于Ubuntu18.04和TX2在ROS下使用RealsenseD455运行ORB_SLAM2相关搬运工作。

目录

0. 检查:

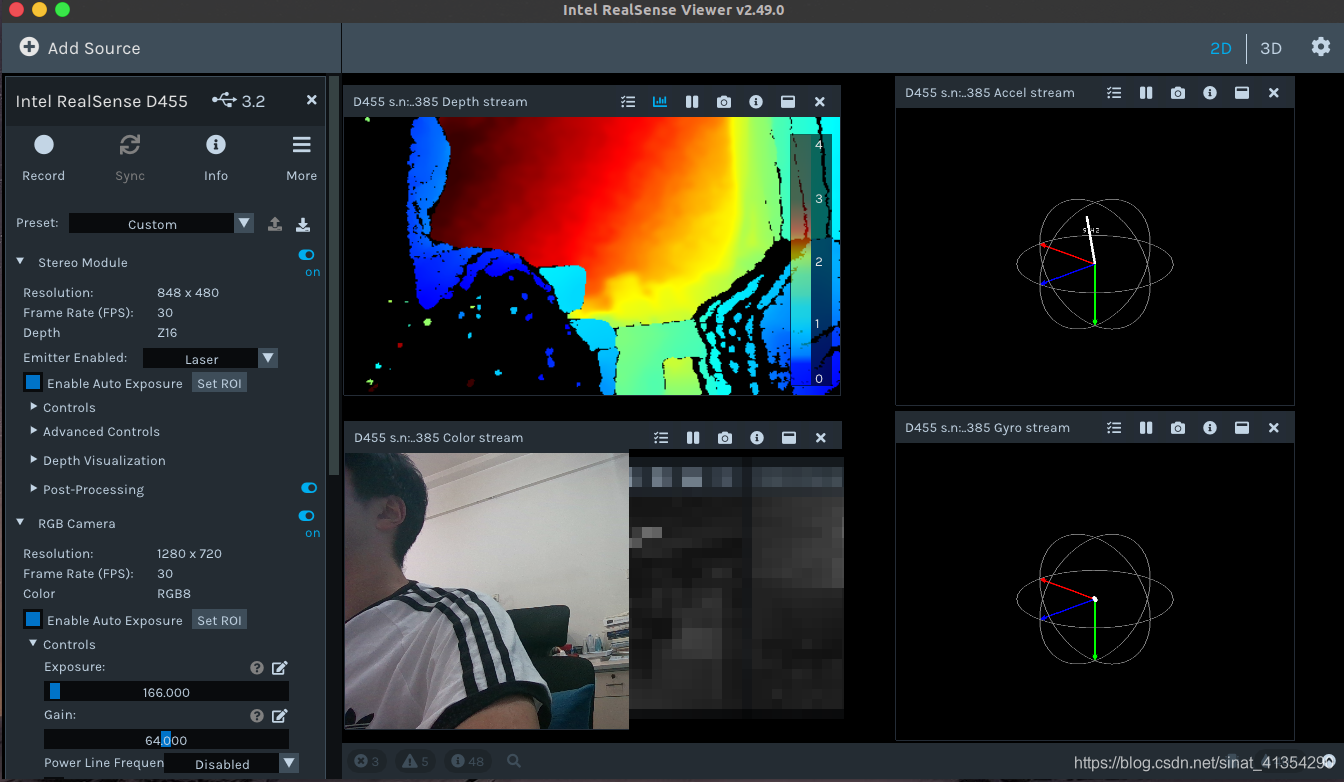

0.1 Intel? RealSense? SDK 2.0的安装

realsense-viewer

0.2 realsense-ros的安装

roslaunch realsense2_camera rs_rgbd.launch

rostopic list

rviz

0.3 ORB_SLAM2的安装

cd catkin_ws/src/ORB_SLAM2

chmod +x build_ros.sh

./build_ros.sh

0.4 RealsenseD455的标定

#TODO

相机自动标定

简要流程:

- 将相机朝着纹理比较丰富的环境,不一定是平面

- 打开 realsense_viewer

- 将 “Stereo Module” 中的“Emitter Enabled” 设置为 “Laser”

- 在 “More” 下拉菜单中选择 “On-Chip Calibration”

- 选择 “Calibrate”

- 查看 “Health-Check” 的数值, 一般小于 0.25 是可以接受的。

- 如果新的标定参数比之前的好,就 “Apply New” 将新的参数烧入 Firmware 中。

echo "source /opt/ros/melodic/setup.bash" >> ~/.bashrc

echo "source ~/catkin_ws/devel/setup.bash" >> ~/.bashrc

source ~/.bashrc

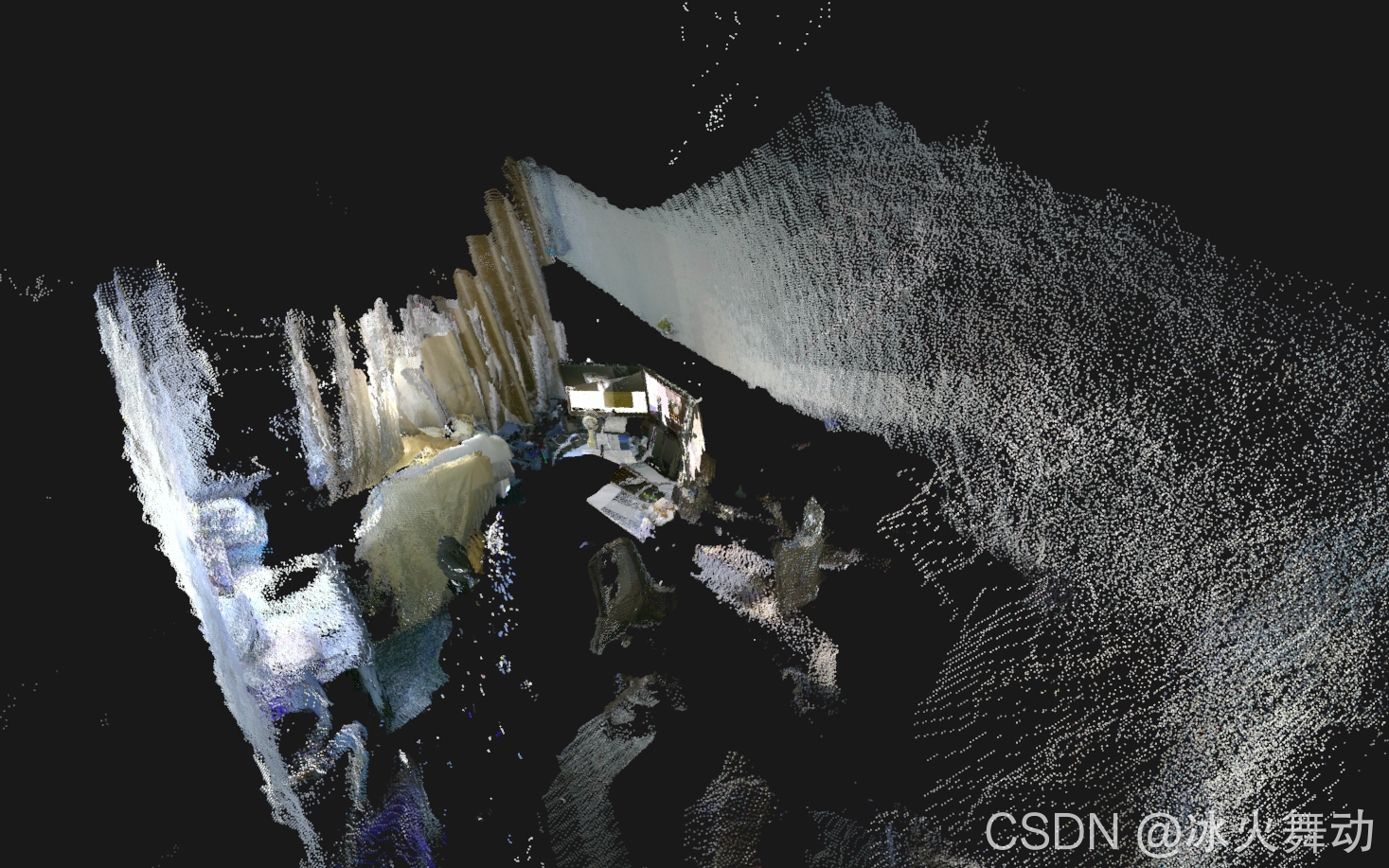

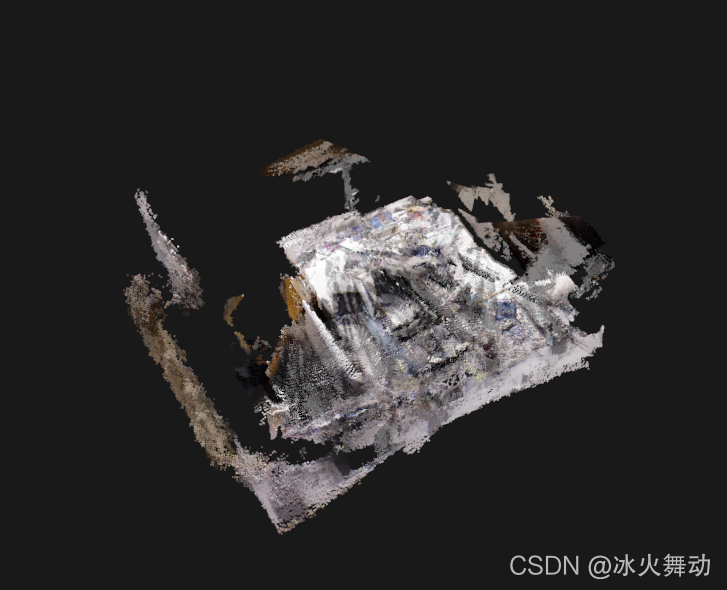

1. 点云地图构建

1.1 基于tum数据集构建点云地图

-

下载源文件

git clone https://gitee.com/l-j-l/ORBSLAM2_with_pointcloud_map.git ORB_SLAM2_modified -

复制Vocabulary文件夹

cp -r Vocabulary/ ../ORB_SLAM2_modified/ORB_SLAM2_modified/ -

删除build文件夹

rm -rf Thirdparty/DBoW2/build/ rm -rf Thirdparty/g2o/build/ -

编译

.build.h -

运行

./bin/rgbd_tum Vocabulary/ORBvoc.bin Examples/RGB-D/TUM1.yaml ~/dataset/rgbd_dataset_freiburg1_desk ~/dataset/rgbd_dataset_freiburg1_desk/associations.txtORBSLAM_with_pointcloud_map段错误(核心已转储)参考: 论ORBSLAM_with_pointcloud_map段错误(核心已转储)的另一种解决方法

在CMAKELISTS中删除-march=nativeset(CMAKE_C_FLAGS "${CMAKE_C_FLAGS} -Wall -O3 -march=native ") set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -Wall -O3 -march=native")在Thirdparty/g2o/CMakeLists.txt中删除-march=native

# Compiler specific options for gcc SET(CMAKE_CXX_FLAGS_RELEASE "${CMAKE_CXX_FLAGS_RELEASE} -O3 -march=native") SET(CMAKE_C_FLAGS_RELEASE "${CMAKE_C_FLAGS_RELEASE} -O3 -march=native") -

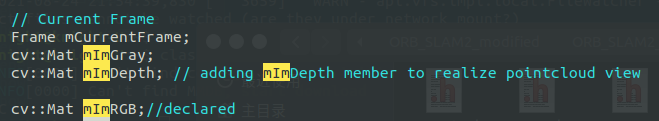

彩色点云

修改~/ORB_SLAM2_modified/include/Tracking.h 和 ~/ORB_SLAM2_modified/src/Tracking.cc文件

declare mImRGB in Tracking.h as following:

–106行–

Frame mCurrentFrame; cv::Mat mImRGB;//declared cv::Mat mImGray;

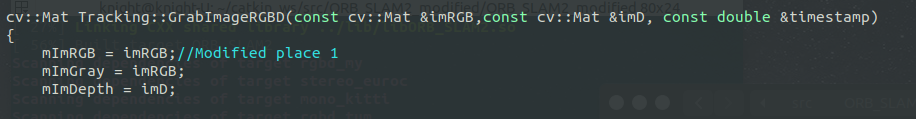

Secondly, modify the Tracking.cc as following:

Modified place 1:

cv::Mat Tracking::GrabImageRGBD(const cv::Mat &imRGB,const cv::Mat &imD, const double ×tamp)

{

mImRGB = imRGB;//Modified place 1

mImGray = imRGB;

…

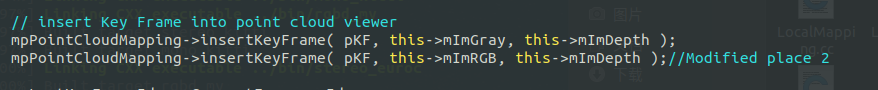

Modified place 2:

mpPointCloudMapping->insertKeyFrame( pKF, this->mImGray, this->mImDepth );//change the mImGray to mImRGB as next row

mpPointCloudMapping->insertKeyFrame( pKF, this->mImRGB, this->mImDepth );//Modified place 2–208行–

–1142行–

重新编译系统cd /build make -j8

-

基于ROS的点云地图构建

1.首先是将 ROS 文件所在路径加入到 ROS_PACKAGE_PATH 环境变量中。具体操作是在 ~/.bashrc 文件末尾加入以下语句:export ROS_PACKAGE_PATH=${ROS_PACKAGE_PATH}:~/catkin_ws/src/ORB_SLAM2_modified/ORB_SLAM2_modified/Examples/ROS2.把 PCL 相关的设置添加到~/ORB_SLAM2_modified/Examples/ROS/ORB_SLAM2/CMakeLists.txt 文件中。

这是一个高博老师的遗留问题,点云建图没有修改ROS版本,所以需要进行修改,如果不想修改,可以直接git一个完整版本:git clone https://github.com/wylnii/ORBSLAM2_with_pointcloud_map.git... find_package(Eigen3 3.1.0 REQUIRED) find_package(Pangolin REQUIRED) find_package( PCL 1.7 REQUIRED ) ####### 1 include_directories( ${PROJECT_SOURCE_DIR} ${PROJECT_SOURCE_DIR}/../../../ ${PROJECT_SOURCE_DIR}/../../../include ${Pangolin_INCLUDE_DIRS} ${PCL_INCLUDE_DIRS} ####### 2 ) add_definitions( ${PCL_DEFINITIONS} ) ####### 3 link_directories( ${PCL_LIBRARY_DIRS} ) ####### 4 set(LIBS ${OpenCV_LIBS} ${EIGEN3_LIBS} ${Pangolin_LIBRARIES} ${PROJECT_SOURCE_DIR}/../../../Thirdparty/DBoW2/lib/libDBoW2.so ${PROJECT_SOURCE_DIR}/../../../Thirdparty/g2o/lib/libg2o.so ${PROJECT_SOURCE_DIR}/../../../lib/libORB_SLAM2.so ${PCL_LIBRARIES} ####### 5 ) ...3.运行编译

删掉~/ORB_SLAM2_modified/Examples/ROS/ORB_SLAM2/build文件夹,运行build_ros.sh。

4.修改数据参数yaml文件

在具体操作时,我们复制了 TUM1.yaml 文件,命名为 TUM1_ROS.yaml,修改其中的参数 DepthMapFactor: 1.0:“factor = 5000 # for the 16-bit PNG files,OR: factor = 1 # for the 32-bit float images in the ROS bag files”5.运行

roscore rosrun ORB_SLAM2 RGBD Vocabulary/ORBvoc.bin Examples/RGB-D/TUM1_ROS.yaml rosbag play datasets/rgbd_dataset_freiburg1_xyz.bag /camera/rgb/image_color:=/camera/rgb/image_raw /camera/depth/image:=/camera/depth_registered/image_raw笔者这里没有进行测试,因为没有下载bag格式的数据集, tum bag下载。

-

保存地图

高博老师的程序只能实时查看点云地图,不能保存。修改文件~/ORB_SLAM2_modified/src/pointcloudmapping.cc,调用 PCL 库的 pcl::io::savePCDFileBinary 函数保存点云地图,

1.修改文件,加入头文件#include <pcl/io/pcd_io.h>–123 行–

... for ( size_t i=lastKeyframeSize; i<N ; i++ ) { PointCloud::Ptr p = generatePointCloud( keyframes[i], colorImgs[i], depthImgs[i] ); *globalMap += *p; } pcl::io::savePCDFileBinary("vslam.pcd", *globalMap); // 只需要加入这一句 ...2.编译

cd ~/ORB_SLAM2_modified/build make -j83.查看点云地图

sudo apt-get install pcl-tools pcl_viewer vslam.pcd

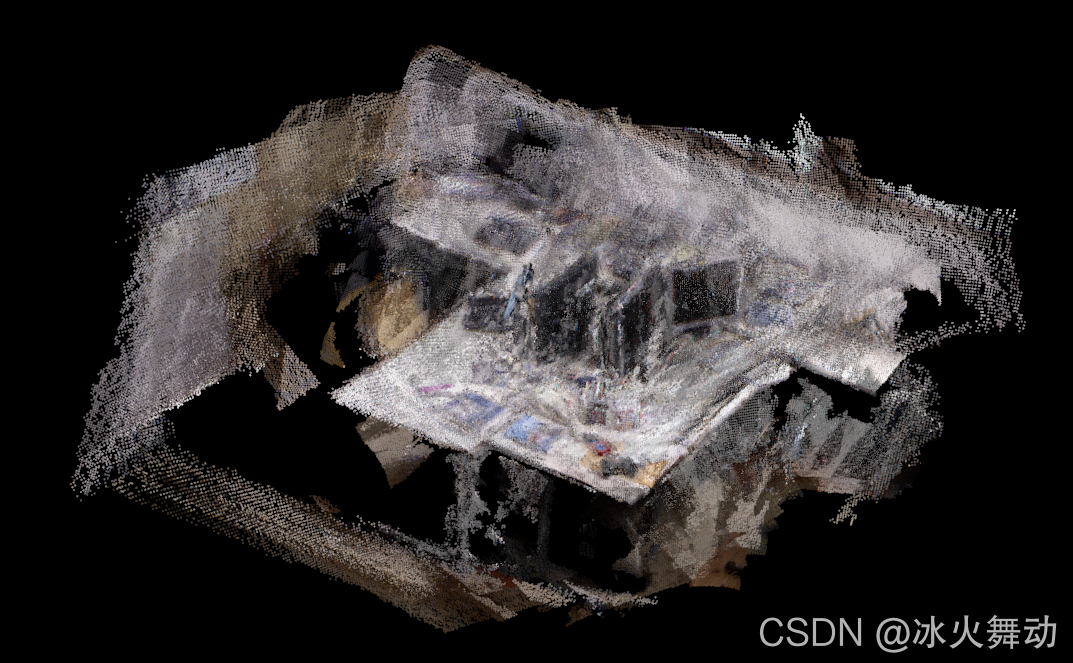

1.2 RealSense D455 构建点云地图

-

修改yaml配置文件

yaml文件包含了相机内参,可以通过rostopic /camera/color/camera_info获得。

`RealSense.ymal:#-------------------------------------------------------------------------------------------- # Camera calibration and distortion parameters (OpenCV) Camera.fx: 920.2216186523438 Camera.fy: 918.2052612304688 Camera.cx: 648.8403930664062 Camera.cy: 363.62689208984375 Camera.k1: 0.0 Camera.k2: 0.0 Camera.p1: 0.0 Camera.p2: 0.0 Camera.k3: 0.0 Camera.width: 1280 Camera.height: 720 #Camera frames per second Camera.fps: 30.0 #IR projector baseline times fx (aprox.) Camera.bf: 46.01 #Color order of the images (0: BGR, 1: RGB. It is ignored if images are grayscale) Camera.RGB: 1 #Close/Far threshold. Baseline times. ThDepth: 40.0 #Deptmap values factor,将深度像素值转化为实际距离,原来单位是 mm,转化成 m DepthMapFactor: 1000.0 #ORB Parameters #-------------------------------------------------------------------------------------------- #ORB Extractor: Number of features per image ORBextractor.nFeatures: 1000 #ORB Extractor: Scale factor between levels in the scale pyramid ORBextractor.scaleFactor: 1.2 #ORB Extractor: Number of levels in the scale pyramid ORBextractor.nLevels: 8 #ORB Extractor: Fast threshold #Image is divided in a grid. At each cell FAST are extracted imposing a minimum response. #Firstly we impose iniThFAST. If no corners are detected we impose a lower value minThFAST #You can lower these values if your images have low contrast ORBextractor.iniThFAST: 20 ORBextractor.minThFAST: 7 #-------------------------------------------------------------------------------------------- #Viewer Parameters #-------------------------------------------------------------------------------------------- Viewer.KeyFrameSize: 0.05 Viewer.KeyFrameLineWidth: 1 Viewer.GraphLineWidth: 0.9 Viewer.PointSize:2 Viewer.CameraSize: 0.08 Viewer.CameraLineWidth: 3 Viewer.ViewpointX: 0 Viewer.ViewpointY: -0.7 Viewer.ViewpointZ: -1.8 Viewer.ViewpointF: 500 -

修改 pointcloudmapping.cc文件

–126 行–voxel.setInputCloud( globalMap ); voxel.setLeafSize (0.02f, 0.02f, 0.02f); # 调节点云密度 voxel.filter( *tmp );–73行–

p.y = - ( m - kf->cy) * p.z / kf->fy; # 将原本颠倒的点云地图上下翻转,方便观察 p.r = color.ptr<uchar>(m)[n*3]; # 修改颜色显示 p.g = color.ptr<uchar>(m)[n*3+1]; p.b = color.ptr<uchar>(m)[n*3+2]; -

修改订阅话题

进入catkin_ws/ORB_SLAM2/Examples/ROS/ORB_SLAM2/src路径下,找到ros_rgbd.cc,修改:message_filters::Subscriber<sensor_msgs::Image> rgb_sub(nh, "/camera/rgb/image_raw", 1); message_filters::Subscriber<sensor_msgs::Image> depth_sub(nh, "camera/depth_registered/image_raw", 1);message_filters::Subscriber<sensor_msgs::Image> rgb_sub(nh, "/camera/color/image_raw", 1); message_filters::Subscriber<sensor_msgs::Image> depth_sub(nh, "/camera/aligned_depth_to_color/image_raw", 1); -

运行

roscore roslaunch realsense2_camera rs_rgbd.launch rosrun ORB_SLAM2 RGBD Vocabulary/ORBvoc.bin Examples/RGB-D/RealSense.yaml /camera/rgb/image_raw:=/camera/color/image_raw /camera/depth_registered/image_raw:=/camera/aligned_depth_to_color/image_raw

rosrun ORB_SLAM2 RGBD Vocabulary/ORBvoc.bin Examples/RGB-D/RealSense.yaml /camera/rgb/image_raw:=/camera/color/image_raw /camera/depth_registered/image_raw:=/camera/aligned_depth_to_color/image_raw

rosrun ORB_SLAM2 RGBD Vocabulary/ORBvoc.bin Examples/RGB-D/TUM1U.yaml /camera/rgb/image_raw:=/camera/color/image_raw /camera/depth_registered/image_raw:=/camera/aligned_depth_to_color/image_raw

rosrun ORB_SLAM2 RGBD Vocabulary/ORBvoc.bin Examples/RGB-D/TUM1U.yaml

测试运行通过:

rosrun ORB_SLAM2 RGBD Vocabulary/ORBvoc.txt Examples/RGB-D/TUM1_ROS.yaml

修改launch文件~/readme/realsense/Realsense-Ros/catkin_ws/src/realsense/realsense2_camera/launch

<launch>

<arg name="serial_no" default=""/>

<arg name="json_file_path" default=""/>

<arg name="camera" default="camera"/>

<arg name="tf_prefix" default="$(arg camera)"/>

<arg name="fisheye_width" default="640"/>

<arg name="fisheye_height" default="480"/>

<arg name="enable_fisheye" default="true"/>

<arg name="depth_width" default="640"/>

<arg name="depth_height" default="480"/>

<arg name="enable_depth" default="true"/>

<arg name="color_width" default="640"/>

<arg name="color_height" default="480"/>

<arg name="enable_color" default="true"/>

<arg name="fisheye_fps" default="30"/>

<arg name="depth_fps" default="30"/>

<arg name="color_fps" default="30"/>

<arg name="gyro_fps" default="200"/>

<arg name="accel_fps" default="250"/>

<arg name="enable_pointcloud" default="false"/>

<arg name="pointcloud_texture_stream" default="RS2_STREAM_COLOR"/>

<arg name="pointcloud_texture_index" default="0"/>

<arg name="enable_sync" default="true"/>

<arg name="align_depth" default="true"/>

<arg name="filters" default=""/>

<arg name="clip_distance" default="-2"/>

<arg name="linear_accel_cov" default="0.01"/>

<arg name="initial_reset" default="false"/>

<arg name="unite_imu_method" default="linear_interpolation"/>

<arg name="hold_back_imu_for_frames" default="true"/>

<group ns="$(arg camera)">

<include file="$(find realsense2_camera)/launch/includes/nodelet.launch.xml">

<arg name="tf_prefix" value="$(arg tf_prefix)"/>

<arg name="serial_no" value="$(arg serial_no)"/>

<arg name="json_file_path" value="$(arg json_file_path)"/>

<arg name="enable_pointcloud" value="$(arg enable_pointcloud)"/>

<arg name="pointcloud_texture_stream" value="$(arg pointcloud_texture_stream)"/>

<arg name="pointcloud_texture_index" value="$(arg pointcloud_texture_index)"/>

<arg name="enable_sync" value="$(arg enable_sync)"/>

<arg name="align_depth" value="$(arg align_depth)"/>

<arg name="fisheye_width" value="$(arg fisheye_width)"/>

<arg name="fisheye_height" value="$(arg fisheye_height)"/>

<arg name="enable_fisheye" value="$(arg enable_fisheye)"/>

<arg name="depth_width" value="$(arg depth_width)"/>

<arg name="depth_height" value="$(arg depth_height)"/>

<arg name="enable_depth" value="$(arg enable_depth)"/>

<arg name="color_width" value="$(arg color_width)"/>

<arg name="color_height" value="$(arg color_height)"/>

<arg name="enable_color" value="$(arg enable_color)"/>

<arg name="fisheye_fps" value="$(arg fisheye_fps)"/>

<arg name="depth_fps" value="$(arg depth_fps)"/>

<arg name="color_fps" value="$(arg color_fps)"/>

<arg name="gyro_fps" value="$(arg gyro_fps)"/>

<arg name="accel_fps" value="$(arg accel_fps)"/>

<arg name="filters" value="$(arg filters)"/>

<arg name="clip_distance" value="$(arg clip_distance)"/>

<arg name="linear_accel_cov" value="$(arg linear_accel_cov)"/>

<arg name="initial_reset" value="$(arg initial_reset)"/>

<arg name="unite_imu_method" value="$(arg unite_imu_method)"/>

</include>

</group>

</launch>

????

如果运行的时候无法跟踪到特征点,无法生成点云,或是生成的很慢,请把参数文件中的DepthMapFactor: 1000.0 改为5000.0

rostopic echo /camera_info

ROS中生成CameraInfo消息

---

header:

seq: 1407

stamp:

secs: 1629859962

nsecs: 194228888

frame_id: "camera_color_optical_frame"

height: 480

width: 640

distortion_model: "plumb_bob"

D: [-0.05550055205821991, 0.06508966535329819, -0.0003226370026823133, 0.0005384536925703287, -0.020686540752649307]

K: [381.16790771484375, 0.0, 320.990966796875, 0.0, 380.8759460449219, 243.68350219726562, 0.0, 0.0, 1.0]

R: [1.0, 0.0, 0.0, 0.0, 1.0, 0.0, 0.0, 0.0, 1.0]

P: [381.16790771484375, 0.0, 320.990966796875, 0.0, 0.0, 380.8759460449219, 243.68350219726562, 0.0, 0.0, 0.0, 1.0, 0.0]

binning_x: 0

binning_y: 0

roi:

x_offset: 0

y_offset: 0

height: 0

width: 0

do_rectify: False

---

.build_ros.sh

/usr/bin/ld: warning: libopencv_core.so.3.2, needed by /opt/ros/melodic/lib/libcv_bridge.so, may conflict with libopencv_core.so.3.4