Image datasets.

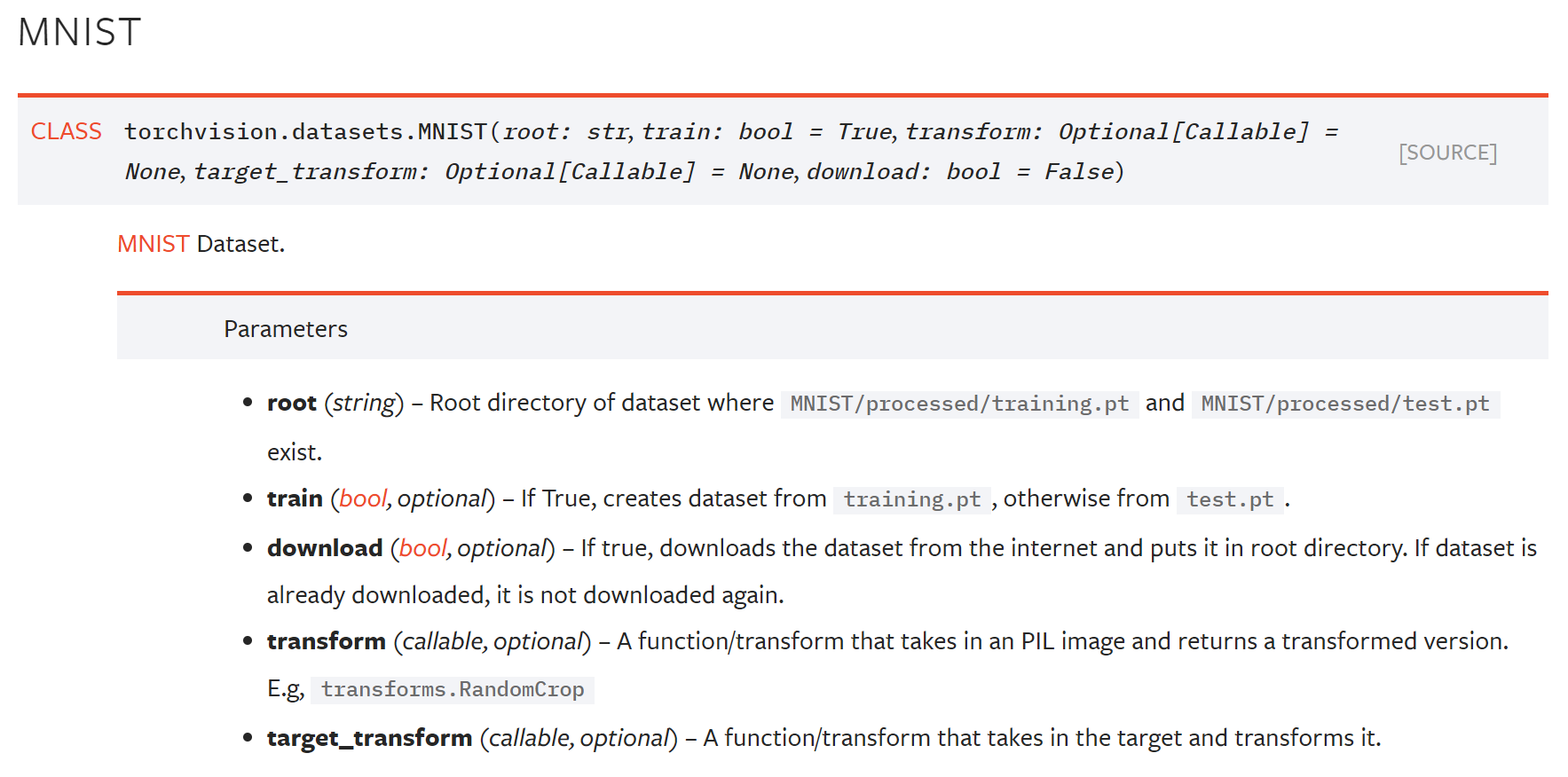

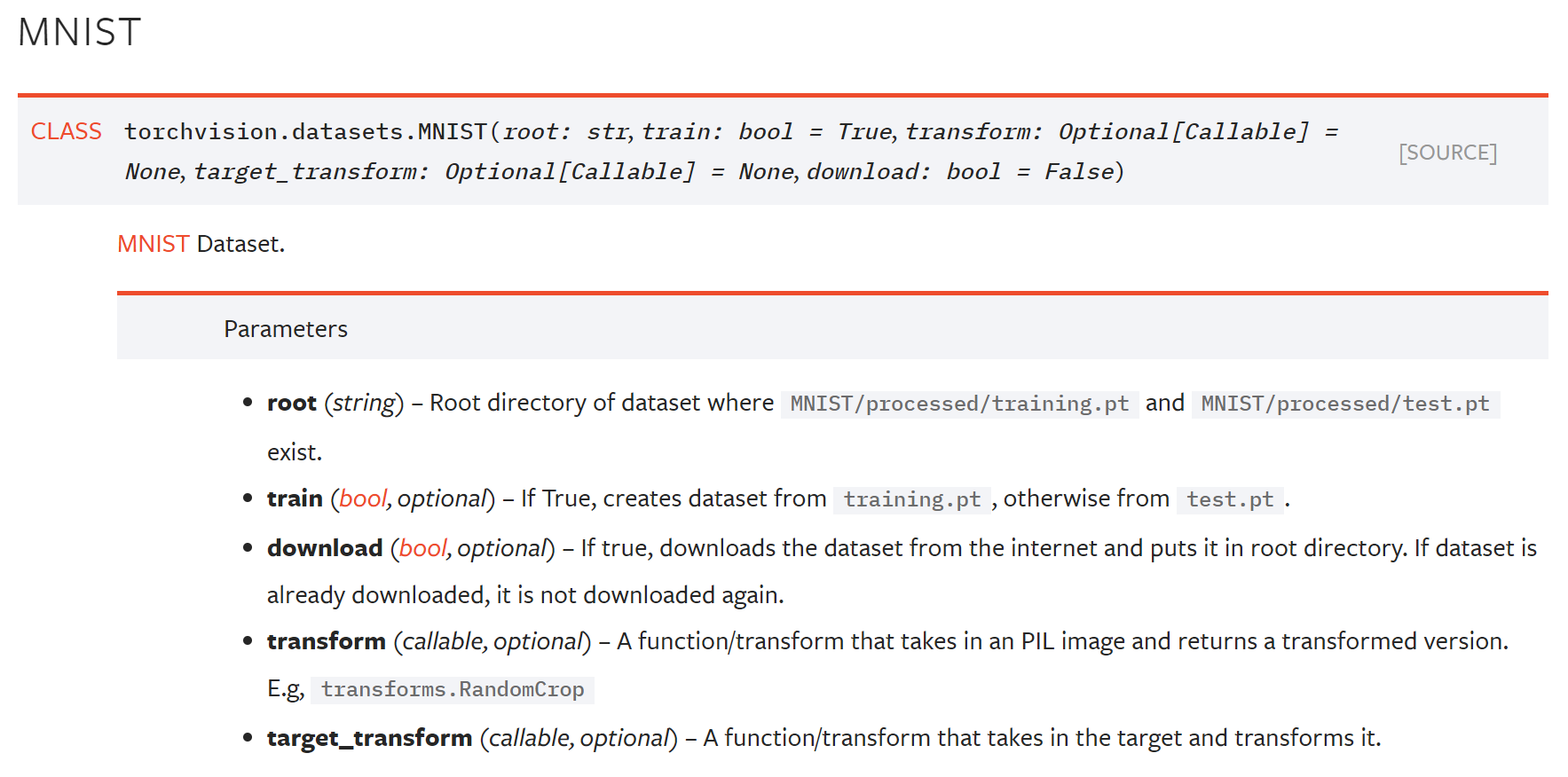

MNIST.

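    from torch.utils.data import DataLoader
    from torchvision import datasets, transforms

    # Convert images to tensors in [0, 1], then standardize them with the
    # MNIST mean (0.1307) and standard deviation (0.3081).
    transformation = transforms.Compose([
        transforms.ToTensor(),
        transforms.Normalize((0.1307,), (0.3081,)),
    ])

    # Training split; the files are downloaded to data/ on first use.
    train_dataset = datasets.MNIST('data/',
                                   train=True,
                                   transform=transformation,
                                   download=True)

    # Test split, preprocessed in the same way.
    test_dataset = datasets.MNIST('data/',
                                  train=False,
                                  transform=transformation,
                                  download=True)

    # Shuffle only the training data; num_workers=0 loads in the main process.
    train_loader = DataLoader(train_dataset,
                              batch_size=32,
                              shuffle=True,
                              num_workers=0)

    test_loader = DataLoader(test_dataset,
                             batch_size=32,
                             shuffle=False,
                             num_workers=0)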
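
The `Normalize((0.1307,), (0.3081,))` step standardizes each pixel after `ToTensor()` has scaled it to the range [0, 1]. As a rough illustration of the arithmetic only, the sketch below reproduces it for a single pixel in plain Python. The helper name `normalize_pixel` is hypothetical and is not part of torchvision.

```python
MNIST_MEAN = 0.1307  # mean passed to Normalize in the MNIST snippet
MNIST_STD = 0.3081   # standard deviation passed to Normalize


def normalize_pixel(raw: int) -> float:
    """Mimic ToTensor() followed by Normalize() for one 8-bit pixel.

    Hypothetical helper, for illustration only.
    """
    scaled = raw / 255.0                      # ToTensor(): [0, 255] -> [0.0, 1.0]
    return (scaled - MNIST_MEAN) / MNIST_STD  # Normalize(): subtract mean, divide by std


if __name__ == "__main__":
    print(round(normalize_pixel(0), 4))    # black background pixel -> -0.4242
    print(round(normalize_pixel(255), 4))  # fully white pixel -> 2.8215
```

After this step the mostly dark MNIST images have roughly zero mean and unit variance, which tends to make training better conditioned.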

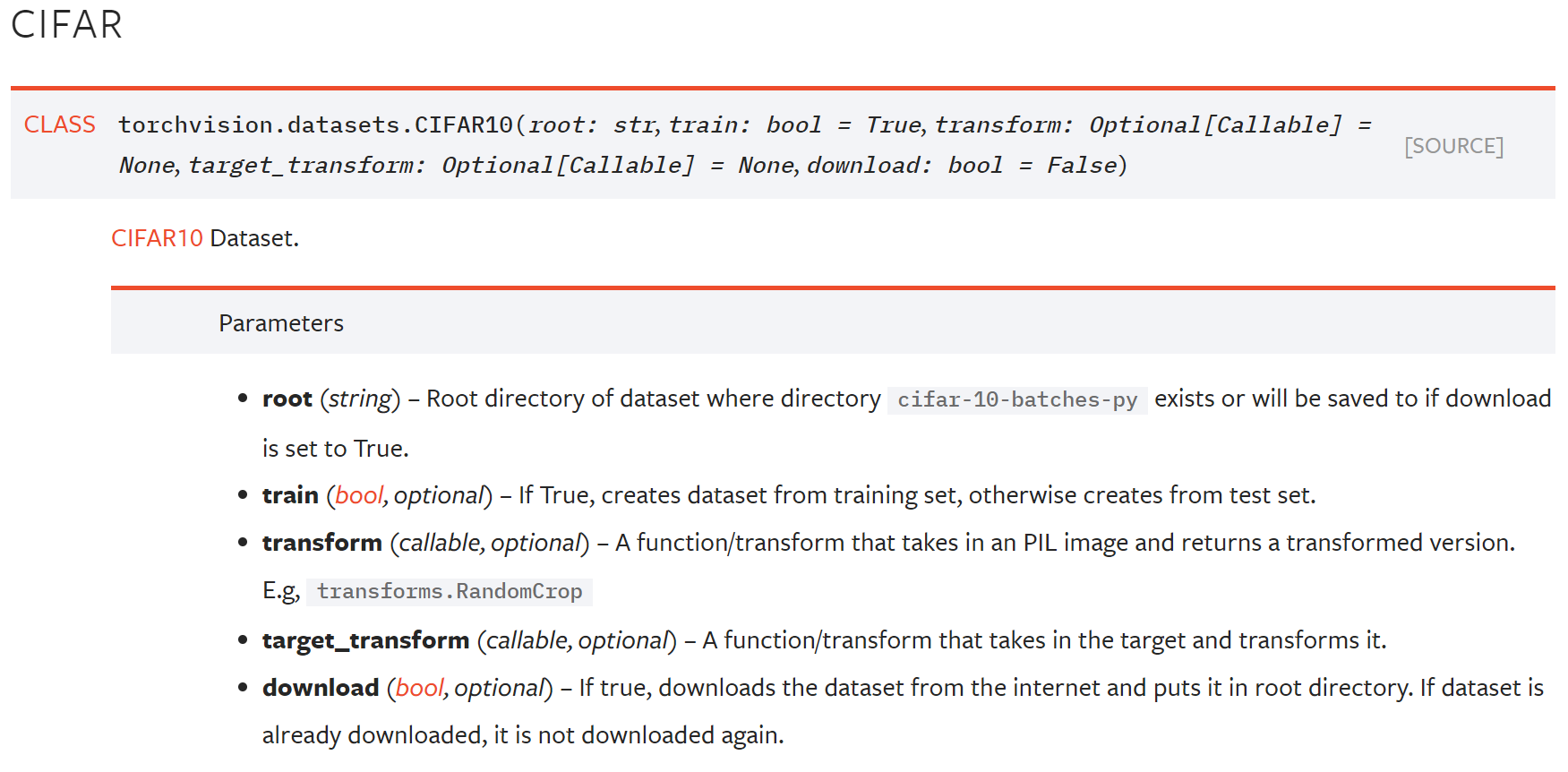

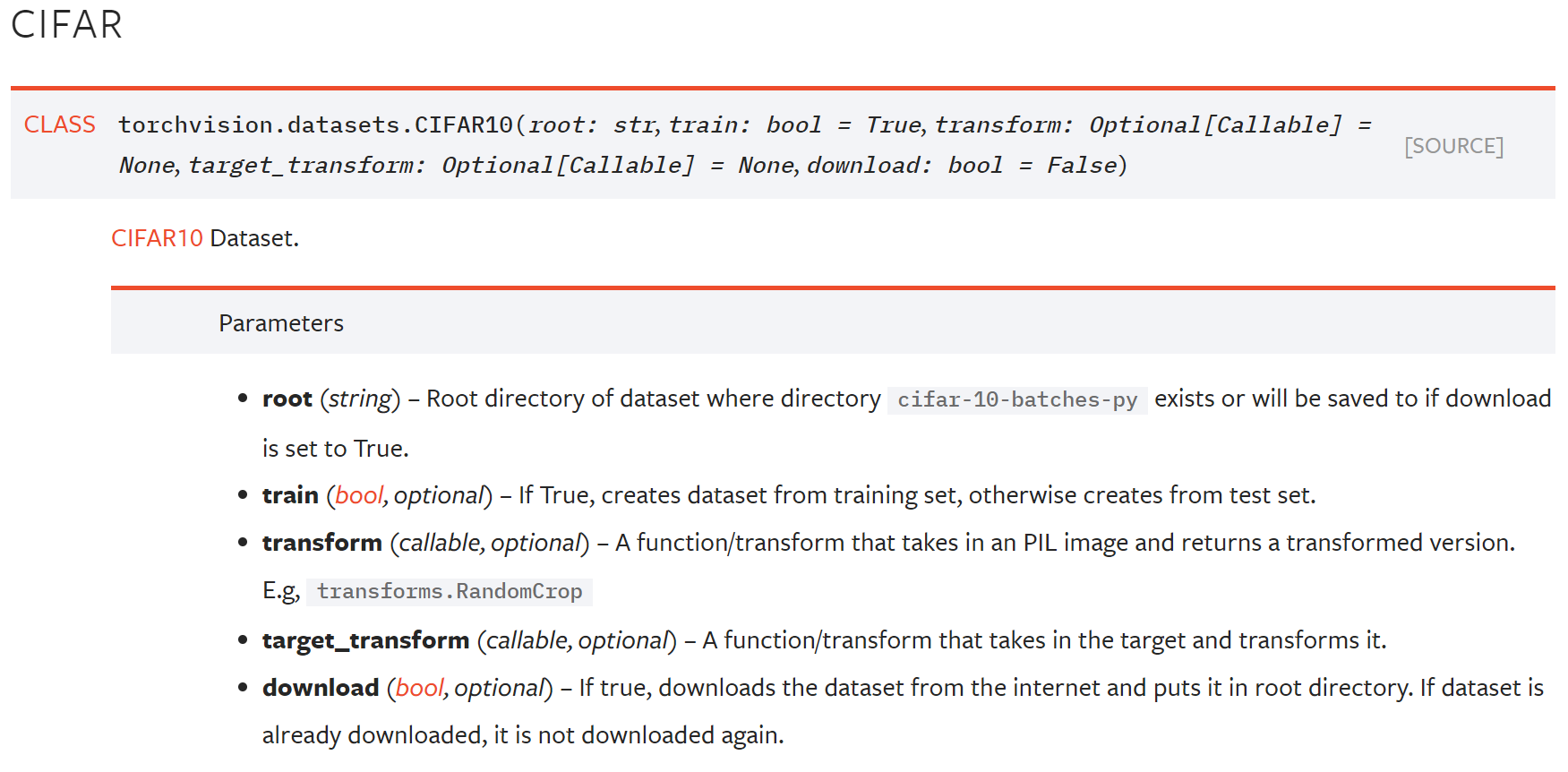

CIFAR10.

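    import torch
    from torchvision import datasets, transforms

    # `trans` is not defined in the original snippet; a plain ToTensor()
    # conversion is assumed here.
    trans = transforms.ToTensor()

    ds = datasets.CIFAR10(root='data', download=True, transform=trans)
    loader = torch.utils.data.DataLoader(ds, batch_size=64, shuffle=True)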

STL10.

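    from torchvision import transforms
    from torchvision.datasets import STL10

    # Labeled training split of STL10, converted to tensors.
    IMAGE = STL10(root='data/',
                  split='train',
                  transform=transforms.ToTensor(),
                  download=True)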

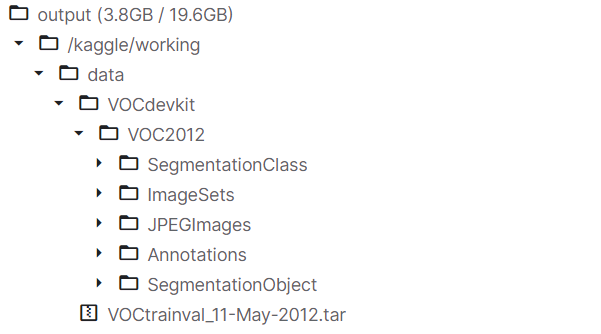

VOC Segmentation.

Text datasets.

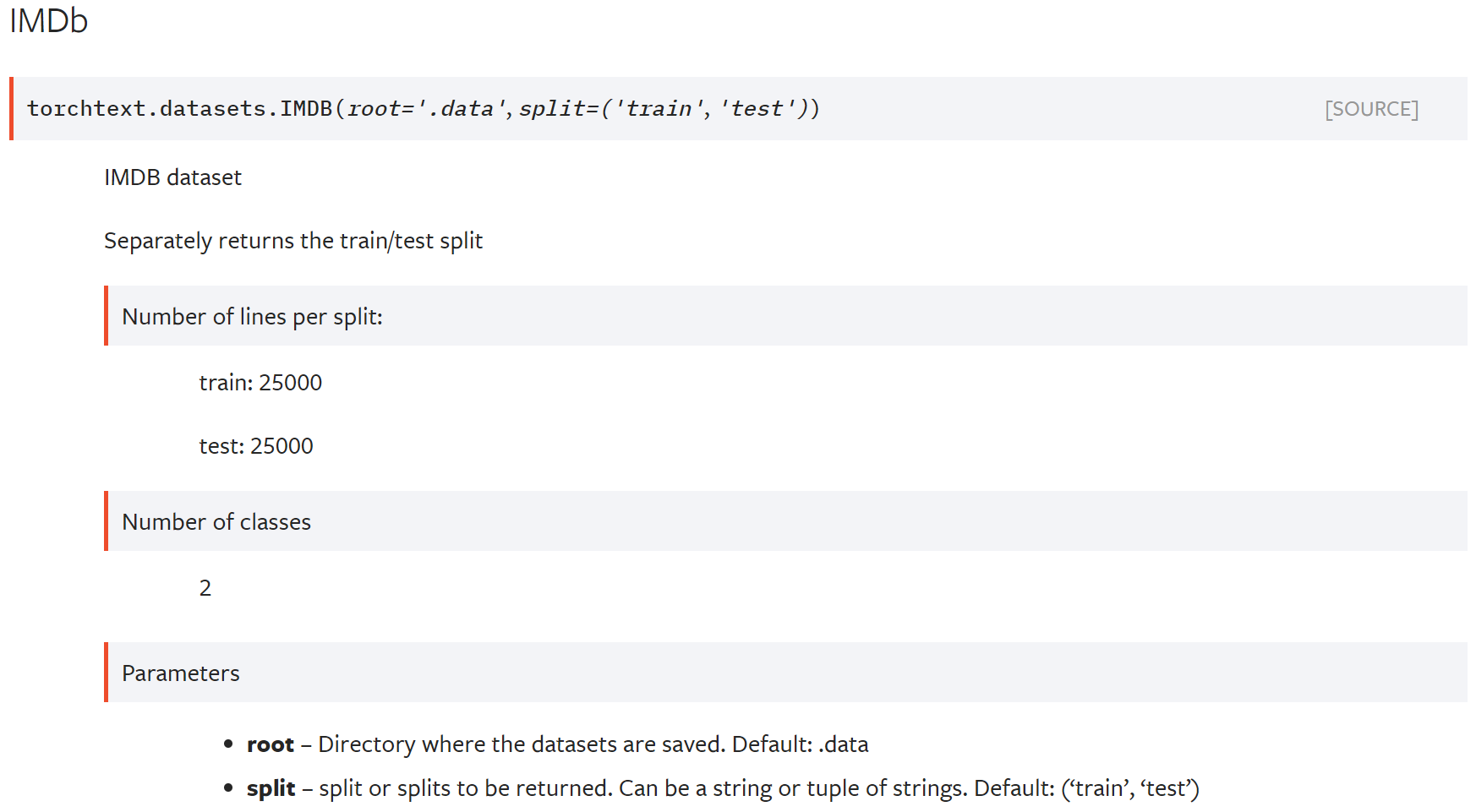

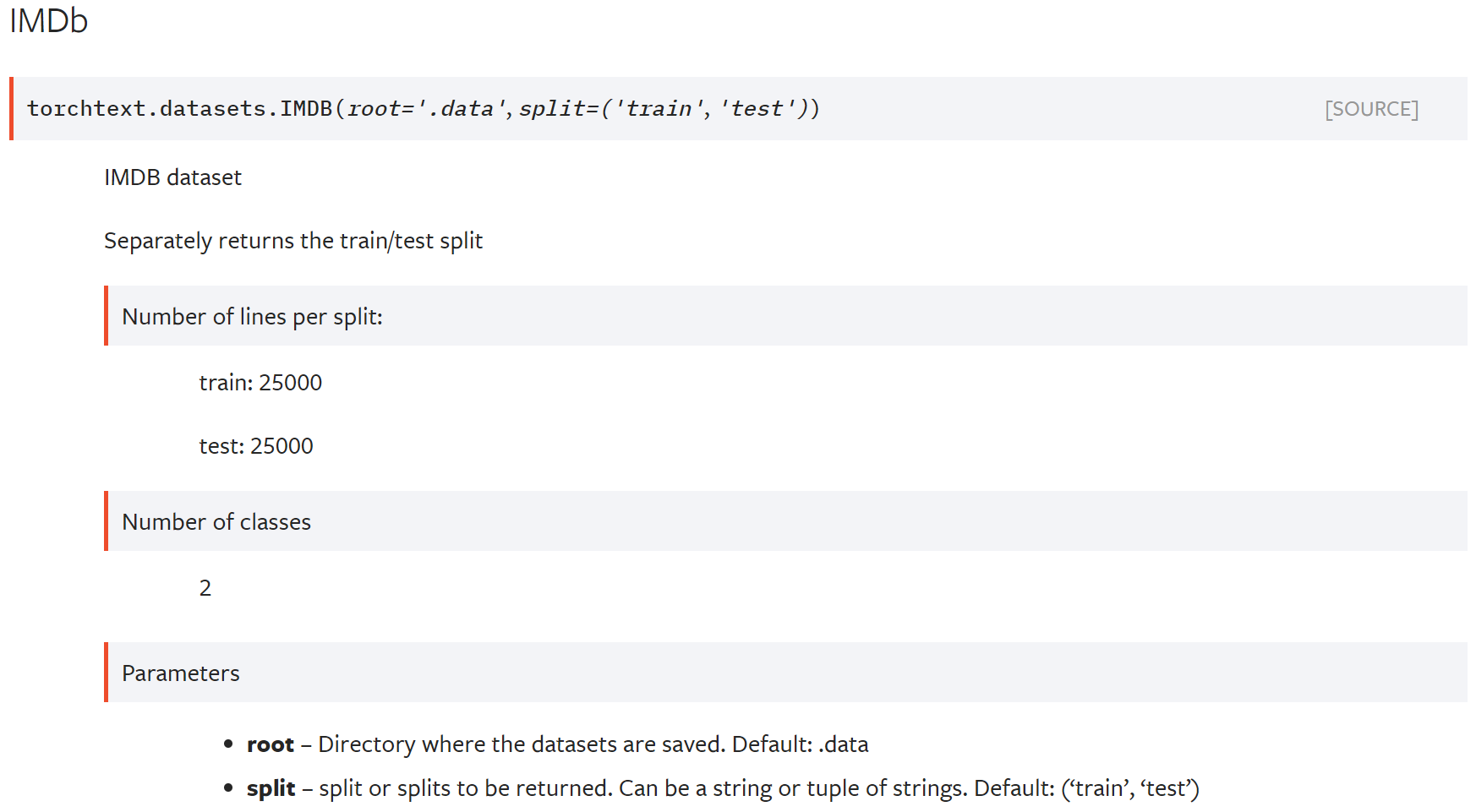

IMDB.

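    import torch
    # Legacy torchtext API: torchtext < 0.9 (torchtext.legacy in 0.9 to 0.11).
    from torchtext import data, datasets
    from torchtext.vocab import GloVe

    # Lowercase the reviews and pad or truncate each one to 200 tokens.
    TEXT = data.Field(lower=True,
                      fix_length=200,
                      batch_first=True)
    # Labels are single values, not token sequences.
    LABEL = data.Field(sequential=False)

    train, test = datasets.IMDB.splits(TEXT, LABEL)

    # Keep at most 10,000 words that occur at least 10 times and attach
    # 300-dimensional GloVe 6B vectors to them.
    TEXT.build_vocab(train,
                     vectors=GloVe(name='6B', dim=300),
                     max_size=10000,
                     min_freq=10)
    LABEL.build_vocab(train)

    train_iter, test_iter = data.BucketIterator.splits(
        (train, test),
        batch_size=32,
        device=torch.device('cpu'),
        shuffle=True)

    # Pass over each split once per epoch instead of looping indefinitely.
    train_iter.repeat = False
    test_iter.repeat = False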
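
Setting `fix_length = 200` means every review reaches the model with exactly 200 tokens: longer reviews are cut off and shorter ones are filled with a padding token. The following is a minimal plain-Python sketch of that behavior, assuming the default settings of the legacy `Field` (padding appended at the end with `<pad>`, truncation from the end). `pad_or_truncate` is a hypothetical helper, not a torchtext function.

```python
from typing import List

PAD_TOKEN = "<pad>"  # default padding token of the legacy Field


def pad_or_truncate(tokens: List[str], fix_length: int = 200) -> List[str]:
    """Return exactly `fix_length` tokens (hypothetical helper)."""
    kept = tokens[:fix_length]                        # drop anything past the limit
    padding = [PAD_TOKEN] * (fix_length - len(kept))  # fill the remainder, if any
    return kept + padding


if __name__ == "__main__":
    short_review = "a great movie".split()
    print(pad_or_truncate(short_review, fix_length=5))
    # ['a', 'great', 'movie', '<pad>', '<pad>']

    long_review = "one of the best films i have seen".split()
    print(pad_or_truncate(long_review, fix_length=5))
    # ['one', 'of', 'the', 'best', 'films']
```

Because every sequence ends up with the same length, a batch can be stacked into a single tensor of shape (batch size, 200) when `batch_first = True`.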

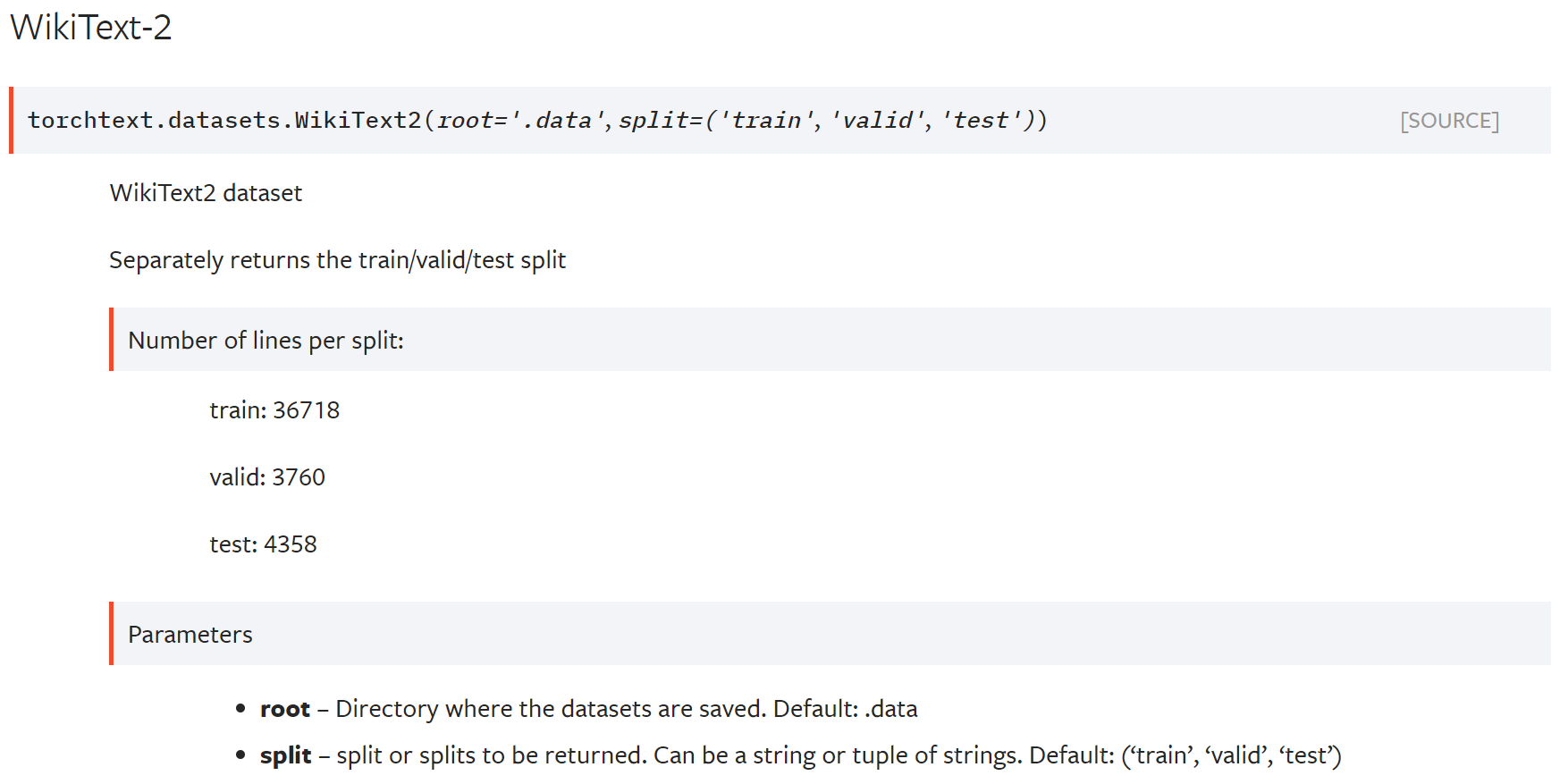

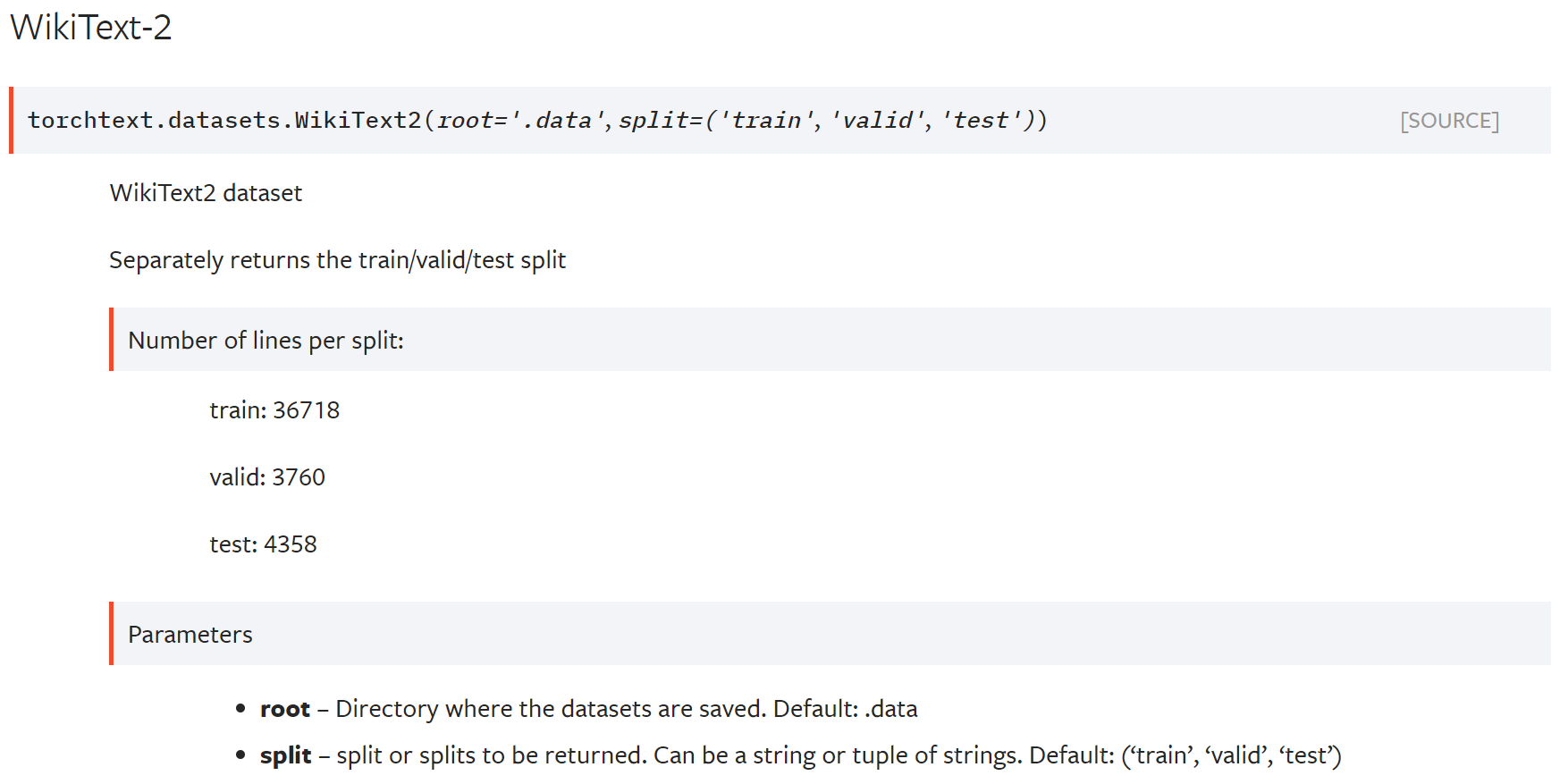

WikiText-2.

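    # Legacy torchtext API: torchtext < 0.9 (torchtext.legacy in 0.9 to 0.11).
    from torchtext import data, datasets

    TEXT = data.Field(batch_first=False, lower=True)
    train, valid, test = datasets.WikiText2.splits(TEXT, root='data')

    batchsize = 4
    # Each split is one long example; trim its token list so that it
    # divides evenly into `batchsize` parallel streams.
    for dataset in (train, valid, test):
        end = (len(dataset[0].text) // batchsize) * batchsize
        dataset[0].text = dataset[0].text[0:end]

    # Cut the streams into windows of 35 tokens for truncated
    # backpropagation through time.
    train_iter, valid_iter, test_iter = data.BPTTIterator.splits(
        (train, valid, test),
        batch_size=batchsize,
        bptt_len=35)

    # The vocabulary must exist before the iterators are consumed.
    TEXT.build_vocab(train)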
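
The trimming loop and `BPTTIterator` together turn one long token stream into `batchsize` parallel streams, then slice them into windows of at most `bptt_len` steps, with targets shifted one token ahead. A simplified plain-Python sketch of that idea follows. `bptt_batches` is a hypothetical helper that leaves out numericalization, padding, and tensors, so it illustrates the scheme and is not the library's exact implementation.

```python
from typing import Iterator, List, Tuple

Batch = List[List[str]]  # indexed as [time step][stream]


def bptt_batches(tokens: List[str], batch_size: int,
                 bptt_len: int) -> Iterator[Tuple[Batch, Batch]]:
    """Yield (text, target) windows from one token stream (hypothetical helper)."""
    # Trim so the stream divides evenly into batch_size parts.
    end = (len(tokens) // batch_size) * batch_size
    tokens = tokens[:end]
    stream_len = end // batch_size
    # Stream b holds the b-th contiguous slice of the corpus.
    streams = [tokens[b * stream_len:(b + 1) * stream_len]
               for b in range(batch_size)]
    # Transpose to [time step][stream], matching batch_first=False.
    steps = [[s[t] for s in streams] for t in range(stream_len)]
    # Pair each window with the same window shifted by one step.
    for i in range(0, stream_len - 1, bptt_len):
        seq_len = min(bptt_len, stream_len - 1 - i)
        yield steps[i:i + seq_len], steps[i + 1:i + 1 + seq_len]


if __name__ == "__main__":
    corpus = [f"w{i}" for i in range(14)]  # 14 tokens, trimmed to 12
    for text, target in bptt_batches(corpus, batch_size=4, bptt_len=2):
        print(text, "->", target)
```

With `batch_size = 4` the 12 remaining tokens form four streams of three tokens each, so a single window of two steps is produced and its target is the same window moved one token forward.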
