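This is my first time working with PyTorch. This post only records my learning process; I will take it down on request if it infringes on anything.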

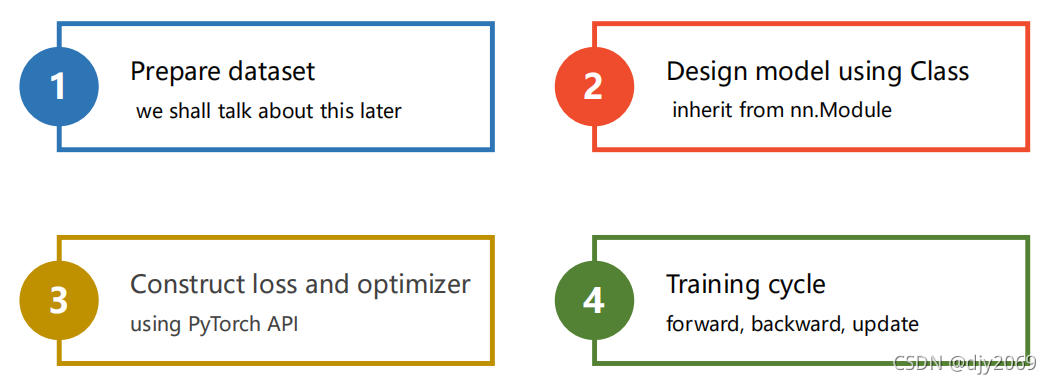

I have finished watching part P5 of the video series on Bilibili: "05. 用pytorch实现线性回归" (Linear Regression with PyTorch).

Video: 《PyTorch深度学习实践》完结合集_05. 用pytorch实现线性回归

Some notes first.

import torch

# 1. Prepare dataset
x_data = torch.tensor([[1.0], [2.0], [3.0]])
y_data = torch.tensor([[2.0], [4.0], [6.0]])

# 2. Design model using Class
class LinearModel(torch.nn.Module):
    def __init__(self):
        super().__init__()
        # nn.Linear contains two member tensors: weight and bias.
        self.linear = torch.nn.Linear(1, 1)

    def forward(self, x):
        y_pred = self.linear(x)
        return y_pred

model = LinearModel()  # Create an instance of class LinearModel

# 3. Construct Loss and Optimizer
# reduction='sum' replaces the deprecated size_average=False.
criterion = torch.nn.MSELoss(reduction='sum')
optimizer = torch.optim.SGD(model.parameters(), lr=0.01)

# 4. Training Cycle
for epoch in range(1000):
    y_pred = model(x_data)
    loss = criterion(y_pred, y_data)
    print(epoch, loss.item())

    # The gradients computed by .backward() are accumulated,
    # so remember to set them to zero before calling backward.
    optimizer.zero_grad()
    loss.backward()
    optimizer.step()  # update

# Output weight and bias
print('w=', model.linear.weight.item())
print('b=', model.linear.bias.item())

# Test Model
x_test = torch.tensor([[4.0]])
y_test = model(x_test)
print('y_pred=', y_test.item())
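The comment about zeroing gradients in the training loop can be checked on its own. The snippet below is a minimal sketch, not part of the lecture code: the tensor `w` and the toy expression `3 * w` are made up for illustration. It calls `.backward()` twice without resetting and then once more after resetting.

```python
import torch

# Hypothetical one-element parameter, used only for this illustration.
w = torch.tensor([1.0], requires_grad=True)

# First backward pass: d(3 * w)/dw = 3.
(3 * w).sum().backward()
first = w.grad.clone()

# Second backward pass without zeroing: the new gradient is added to the old one.
(3 * w).sum().backward()
accumulated = w.grad.clone()

# After resetting the gradient, a fresh backward pass gives 3 again.
w.grad.zero_()
(3 * w).sum().backward()
after_reset = w.grad.clone()

print(first.item(), accumulated.item(), after_reset.item())  # 3.0 6.0 3.0
```

This is what `optimizer.zero_grad()` guards against in the loop: without it, every update would use the running sum of all earlier gradients.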

Homework:

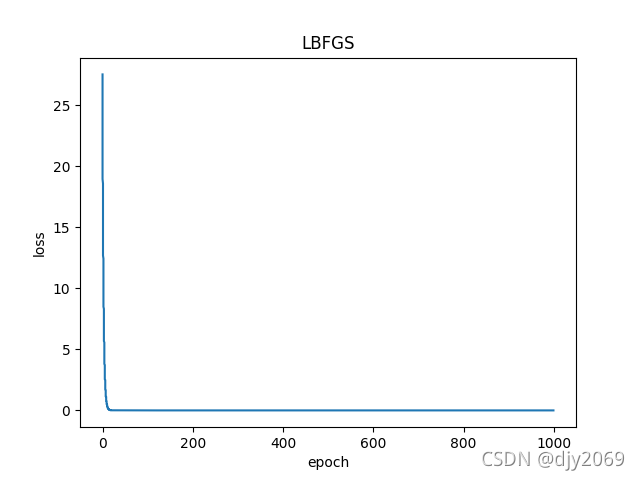

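Among these optimizers, LBFGS re-evaluates the function several times within a single step, so it needs a closure that recomputes the model output, the loss, and the gradients each time it is called.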
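To make the repeated evaluation visible, here is a minimal sketch that records every closure call during one `optimizer.step(closure)`. The list `call_log` and the zero initialization of the parameters are my own additions for illustration (the zero start only makes the first loss value predictable); a bare `torch.nn.Linear` stands in for the `LinearModel` class.

```python
import torch

x = torch.tensor([[1.0], [2.0], [3.0]])
y = torch.tensor([[2.0], [4.0], [6.0]])

model = torch.nn.Linear(1, 1)
with torch.no_grad():
    # Assumption for this sketch: start from w = 0, b = 0.
    model.weight.fill_(0.0)
    model.bias.fill_(0.0)

criterion = torch.nn.MSELoss(reduction='sum')
optimizer = torch.optim.LBFGS(model.parameters(), lr=0.01)

call_log = []  # one loss value per closure call

def closure():
    optimizer.zero_grad()
    loss = criterion(model(x), y)
    loss.backward()
    call_log.append(loss.item())
    return loss

# A single step may invoke the closure many times.
optimizer.step(closure)
print('closure calls in one step:', len(call_log))
```

With the default settings the closure runs more than once per step, which is why any per-epoch bookkeeping placed inside it (printing, appending to a list) also runs more than once per epoch.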

import matplotlib.pyplot as plt
import torch

# 1. Prepare dataset
x_data = torch.tensor([[1.0], [2.0], [3.0]])
y_data = torch.tensor([[2.0], [4.0], [6.0]])

# 2. Design model using Class
class LinearModel(torch.nn.Module):
    def __init__(self):
        super().__init__()
        self.linear = torch.nn.Linear(1, 1)

    def forward(self, x):
        y_pred = self.linear(x)
        return y_pred

model = LinearModel()

# 3. Construct Loss and Optimizer
# reduction='sum' replaces the deprecated size_average=False.
criterion = torch.nn.MSELoss(reduction='sum')
optimizer = torch.optim.LBFGS(model.parameters(), lr=0.01)

# 4. Training Cycle
loss_list = []
time = []

for epoch in range(1000):
    # LBFGS may call closure() several times per step,
    # so one epoch can append several entries to the lists.
    def closure():
        y_pred = model(x_data)
        loss = criterion(y_pred, y_data)
        print(epoch, loss.item())
        loss_list.append(loss.item())
        time.append(epoch)
        optimizer.zero_grad()
        loss.backward()
        return loss

    optimizer.step(closure)

# Output weight and bias
print('w=', model.linear.weight.item())
print('b=', model.linear.bias.item())

# Test Model
x_test = torch.tensor([[4.0]])
y_test = model(x_test)
print('y_pred=', y_test.item())

plt.plot(time, loss_list)
plt.title('LBFGS')
plt.xlabel('epoch')
plt.ylabel('loss')
plt.show()

Plots:

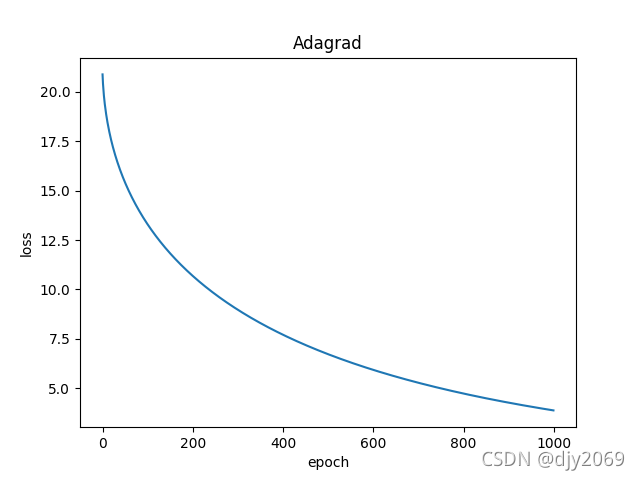

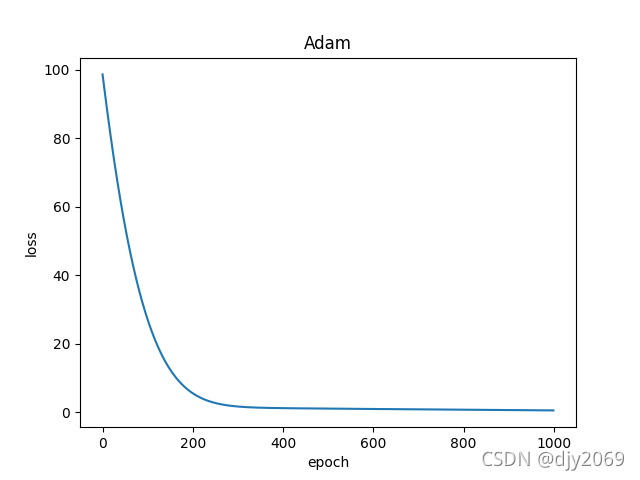

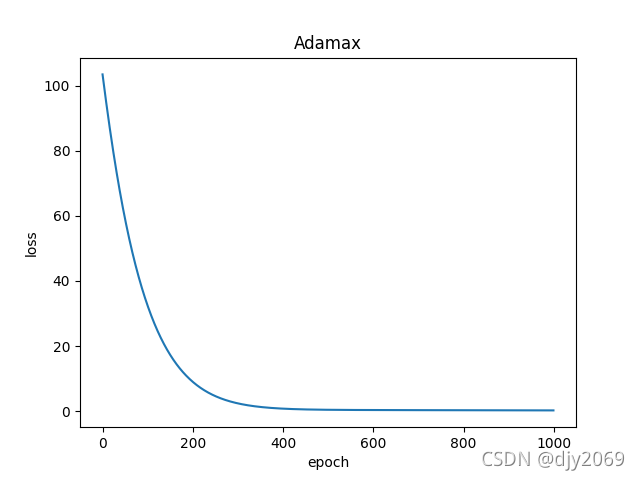

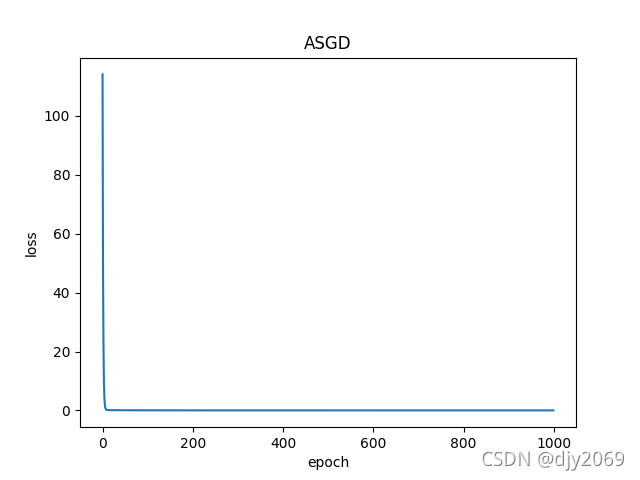

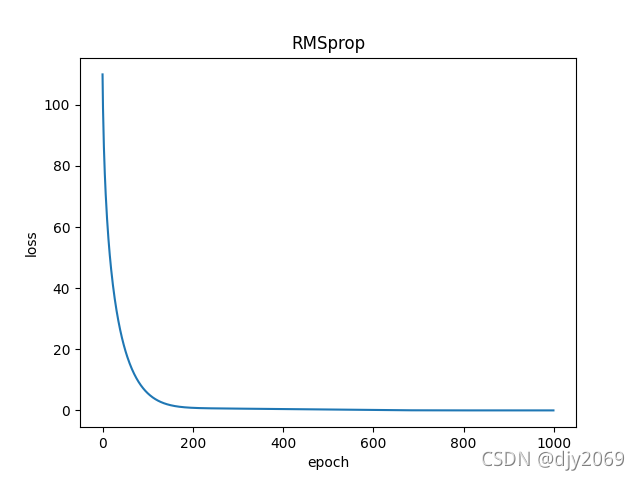

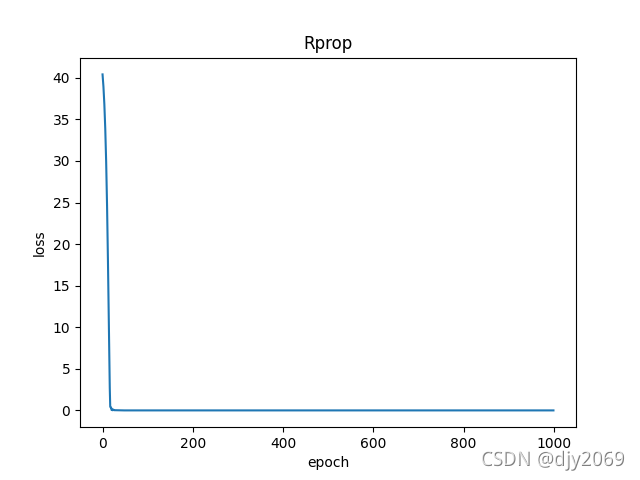

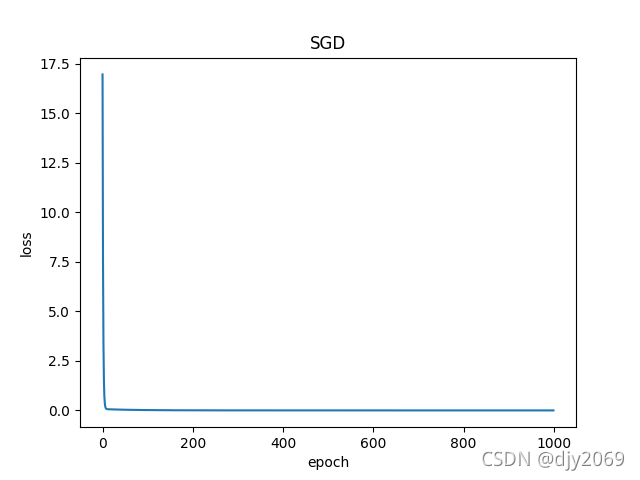

The other optimizers do not necessarily need a closure:

import matplotlib.pyplot as plt
import torch

# 1. Prepare dataset
x_data = torch.tensor([[1.0], [2.0], [3.0]])
y_data = torch.tensor([[2.0], [4.0], [6.0]])

# 2. Design model using Class
class LinearModel(torch.nn.Module):
    def __init__(self):
        super().__init__()
        self.linear = torch.nn.Linear(1, 1)

    def forward(self, x):
        y_pred = self.linear(x)
        return y_pred

model = LinearModel()

# 3. Construct Loss and Optimizer
# reduction='sum' replaces the deprecated size_average=False.
criterion = torch.nn.MSELoss(reduction='sum')

# Uncomment exactly one optimizer at a time.
optimizer = torch.optim.Adagrad(model.parameters(), lr=0.01)
# optimizer = torch.optim.Adam(model.parameters(), lr=0.01)
# optimizer = torch.optim.Adamax(model.parameters(), lr=0.01)
# optimizer = torch.optim.ASGD(model.parameters(), lr=0.01)
# optimizer = torch.optim.RMSprop(model.parameters(), lr=0.01)
# optimizer = torch.optim.Rprop(model.parameters(), lr=0.01)
# optimizer = torch.optim.SGD(model.parameters(), lr=0.01)

# 4. Training Cycle
loss_list = []
time = []

for epoch in range(1000):
    y_pred = model(x_data)
    loss = criterion(y_pred, y_data)
    print(epoch, loss.item())
    loss_list.append(loss.item())
    time.append(epoch)

    optimizer.zero_grad()
    loss.backward()
    optimizer.step()

# Output weight and bias
print('w=', model.linear.weight.item())
print('b=', model.linear.bias.item())

# Test Model
x_test = torch.tensor([[4.0]])
y_test = model(x_test)
print('y_pred=', y_test.item())

plt.plot(time, loss_list)
plt.title('Adagrad')
plt.xlabel('epoch')
plt.ylabel('loss')
plt.show()

Plot:
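Instead of uncommenting one optimizer at a time, the same experiment can be looped over all of them. The following is only a sketch under my own assumptions: the helper `train`, the dictionary `optimizers`, and the zero initialization (so that every optimizer starts from the same point) are not from the lecture, and plotting is left out to keep it short.

```python
import torch

x_data = torch.tensor([[1.0], [2.0], [3.0]])
y_data = torch.tensor([[2.0], [4.0], [6.0]])

def train(optimizer_cls, epochs=1000, lr=0.01):
    """Train a fresh 1-in/1-out linear model and return its final loss."""
    model = torch.nn.Linear(1, 1)
    with torch.no_grad():
        # Assumption: identical starting point (w = 0, b = 0) for a fair comparison.
        model.weight.fill_(0.0)
        model.bias.fill_(0.0)
    criterion = torch.nn.MSELoss(reduction='sum')
    optimizer = optimizer_cls(model.parameters(), lr=lr)
    for _ in range(epochs):
        loss = criterion(model(x_data), y_data)
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()
    with torch.no_grad():
        return criterion(model(x_data), y_data).item()

# The closure-free optimizers from the homework list.
optimizers = {
    'Adagrad': torch.optim.Adagrad,
    'Adam': torch.optim.Adam,
    'Adamax': torch.optim.Adamax,
    'ASGD': torch.optim.ASGD,
    'RMSprop': torch.optim.RMSprop,
    'Rprop': torch.optim.Rprop,
    'SGD': torch.optim.SGD,
}

final_losses = {name: train(cls) for name, cls in optimizers.items()}
for name, value in final_losses.items():
    print(f'{name:8s} final loss = {value:.6f}')
```

The final losses differ a lot at the same `lr=0.01`, because each optimizer scales its updates differently, so a learning rate that suits SGD is not necessarily a good choice for Adagrad.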