
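Key takeaways

This task is extractive question answering. The fine-tuning workflow is broadly the same as in the earlier tasks, but there is extra processing of text spans: preprocessing before the data goes into training, and post-processing of the model outputs for evaluation. Locating positions is the main difficulty in both, so you need to be very comfortable with how the tokenizer works.

Note that the quantity monitored during training (the loss) is not the same as the task's actual goal (a correct answer text). This is probably characteristic of this kind of problem.

Because training used a small batch size, even 3 epochs gave reasonably good results.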


1 抽取式问答任务

1.1 任务简介

抽取式问答任务指的是,给定一个问题和一段文本,从这段文本中找出能够回答该问题的文本片段(span)。

这边教程里提到了两种常见的抽取式问答任务SQUAD 1和SQUAD 2。

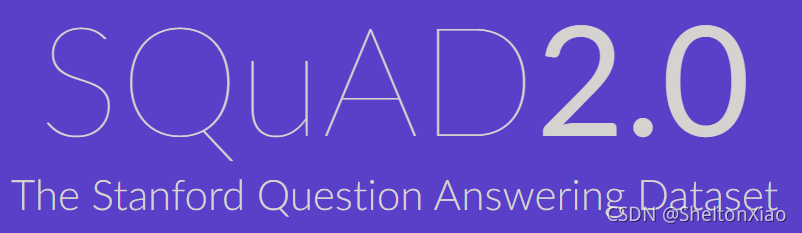

截至2021年8月30日,SQuAD2.0任务的leaderboard如下:

这边调用的预训练模型checkpoints依然和上一个任务一致,是“distilbert-base-uncased”。事实上,只要是Huggingface Model Hub里头包含token classification head和fast tokenizer的预训练模型,都可以完成这一任务。

1.2 Loading the data

As usual, we first fix the main training parameters:

model_checkpoint = "distilbert-base-uncased"
batch_size = 16

Then load the data:

from datasets import load_dataset

datasets = load_dataset("squad")

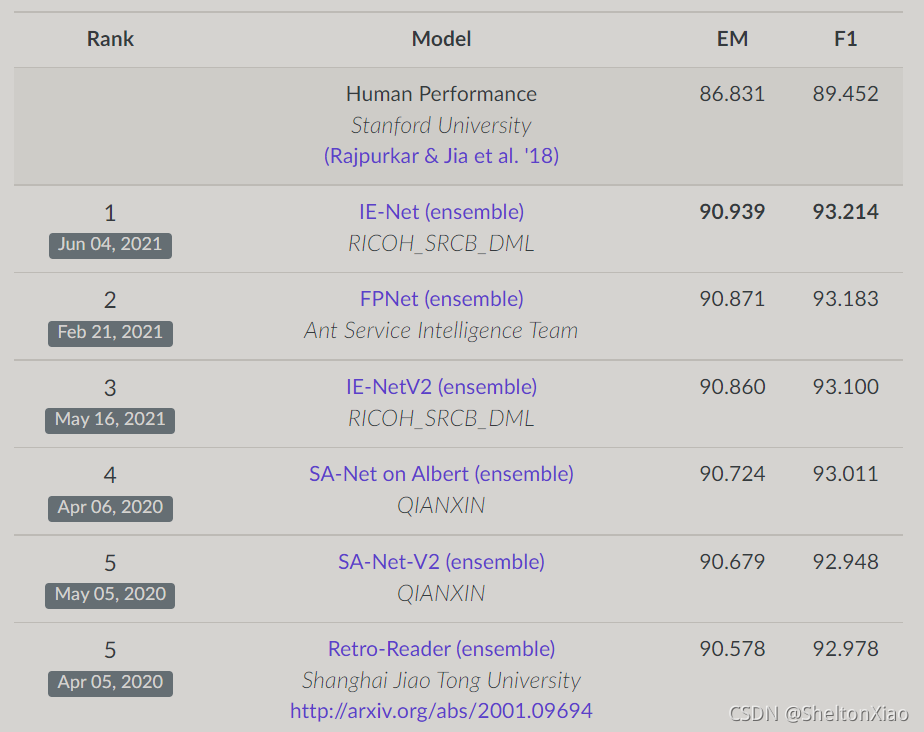

我们所使用的是SQuAD 1.1数据集,这篇博客绘制了一个数据集的结构,可以帮助我们理解这个数据集。

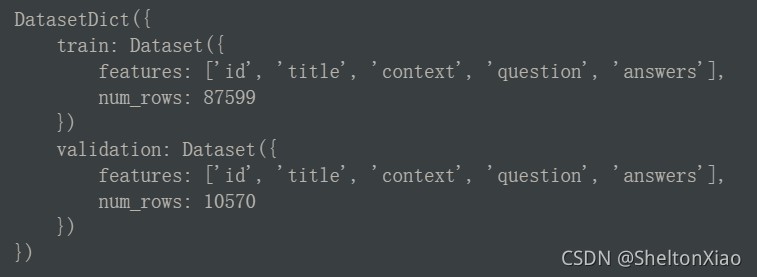

dataset本身的结构是这样的(是一个DatasetDict)

如果打出训练集的第一个数据的话。

[IN]: datasets["train"][0]

# answers代表答案

# context代表文本片段

# question代表问题

[OUT]:

{'answers': {'answer_start': [515], 'text': ['Saint Bernadette Soubirous']},

'context': 'Architecturally, the school has a Catholic character. Atop the Main Building\'s gold dome is a golden statue of the Virgin Mary. Immediately in front of the Main Building and facing it, is a copper statue of Christ with arms upraised with the legend "Venite Ad Me Omnes". Next to the Main Building is the Basilica of the Sacred Heart. Immediately behind the basilica is the Grotto, a Marian place of prayer and reflection. It is a replica of the grotto at Lourdes, France where the Virgin Mary reputedly appeared to Saint Bernadette Soubirous in 1858. At the end of the main drive (and in a direct line that connects through 3 statues and the Gold Dome), is a simple, modern stone statue of Mary.',

'id': '5733be284776f41900661182',

'question': 'To whom did the Virgin Mary allegedly appear in 1858 in Lourdes France?',

'title': 'University_of_Notre_Dame'}

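From this dict we can see that the tree diagram above does not exactly match what the Datasets library returns: the qas level no longer exists after loading, and every record carries an additional title field. (In other words, the Datasets library does more than just read the JSON file.)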
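To make the difference concrete, here is a minimal sketch of how nested SQuAD-style JSON (data, then paragraphs, then qas) could be flattened into records shaped like the one printed above. The function name `flatten_squad` and the toy input are illustrative assumptions; this is not a description of how the Datasets library works internally.

```python
def flatten_squad(raw):
    """Flatten nested SQuAD-style JSON into one flat record per question."""
    rows = []
    for article in raw["data"]:
        for paragraph in article["paragraphs"]:
            for qa in paragraph["qas"]:
                rows.append({
                    "id": qa["id"],
                    "title": article["title"],
                    "context": paragraph["context"],
                    "question": qa["question"],
                    # Column-oriented answers, as in the record shown above.
                    "answers": {
                        "text": [a["text"] for a in qa["answers"]],
                        "answer_start": [a["answer_start"] for a in qa["answers"]],
                    },
                })
    return rows


# Toy input (hypothetical, far smaller than a real SQuAD file).
context = "Mary appeared to Saint Bernadette Soubirous in 1858."
answer = "Saint Bernadette Soubirous"
raw = {
    "data": [{
        "title": "University_of_Notre_Dame",
        "paragraphs": [{
            "context": context,
            "qas": [{
                "id": "toy-0",
                "question": "To whom did Mary appear in 1858?",
                "answers": [{"text": answer, "answer_start": context.index(answer)}],
            }],
        }],
    }]
}

rows = flatten_squad(raw)
print(rows[0]["answers"])
```

Each row now sits at the top level with its own title and context, which is why the qas level is no longer visible after loading.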

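2 Implementing extractive question answering

2.1 Data preprocessing

The key difference up front: the question and the text that may contain the answer are concatenated into a single input.

2.1.1 Loading the pretrained tokenizer

As before, the text first has to be tokenized. The matching pretrained tokenizer can be loaded from the checkpoint.

from transformers import AutoTokenizer

tokenizer = AutoTokenizer.from_pretrained(model_checkpoint)

The tokenizer.tokenize method shows what the text looks like after tokenization.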

# add_special_tokens belongs to tokenize(), not to format(); the first call shows the default output without special tokens.
print("Without special tokens: {}".format(tokenizer.tokenize("What is your name?")))
print("With special tokens: {}".format(tokenizer.tokenize("My name is Sylvain.", add_special_tokens=True)))

The output is:

Without special tokens: ['what', 'is', 'your', 'name', '?']
With special tokens: ['[CLS]', 'my', 'name', 'is', 'sy', '##lva', '##in', '.', '[SEP]']

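Token IDs (the input_ids) generally differ from one pretrained model to another, because each model was pretrained with its own vocabulary and tokenization rules. As long as the tokenizer and the model are loaded from the same checkpoint name, the tokenizer's output will be in the format the model expects.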

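2.1.2 Handling long contexts

For extractive QA we need to think about how a pretrained QA model handles long text.

Pretrained models usually have a maximum input length, so over-long inputs are normally truncated. But if we truncate the over-long context in a <question, context, answer> triple, we may lose the answer, since the answer is a short span extracted from the context.

To solve this, we slice an over-long input into several shorter inputs, each within the model's maximum input length. Because the answer may sit exactly where a cut is made, adjacent slices must be allowed to overlap; in the code this is controlled by the doc_stride parameter.

QA models usually take the question and the context concatenated as one input and then look for the answer inside the context. In the example below, the maximum input length is set to 384 (512 is also common), and adjacent slices may overlap by 128 tokens.

max_length = 384  # maximum length of a feature (question and context concatenated)
doc_stride = 128  # number of tokens shared by two adjacent slices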

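Example of handling a long context

First find an over-long example:

for i, example in enumerate(datasets["train"]):
    if len(tokenizer(example["question"], example["context"])["input_ids"]) > 384:
        break
example = datasets["train"][i]

This example is 396 tokens long.

Note that normally only the context is sliced, never the question. Since the context is concatenated after the question and is therefore the second text, truncation is set to only_second. The tokenizer's stride argument, set to doc_stride here, controls the overlap between slices.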

tokenized_example = tokenizer(
    example["question"],
    example["context"],
    max_length=max_length,
    truncation="only_second",
    return_overflowing_tokens=True,
    return_offsets_mapping=True,
    stride=doc_stride
)

Because the over-long input was sliced, we get several inputs; their input_ids have lengths 384 and 157.
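The slicing arithmetic can be reproduced with a small pure-Python sketch. It only imitates the windowing idea (a fixed window that moves forward by window minus overlap); it is not the tokenizer's actual implementation, and the helper name `split_with_overlap` is made up for illustration. The count of 17 positions taken up by the question and special tokens is read off the decoded slices in this section and should be treated as an assumption.

```python
def split_with_overlap(tokens, window, overlap):
    """Split tokens into windows of at most `window` items; adjacent windows share `overlap` items."""
    if not 0 <= overlap < window:
        raise ValueError("overlap must be non-negative and smaller than window")
    chunks = []
    start = 0
    while True:
        chunks.append(tokens[start:start + window])
        if start + window >= len(tokens):
            break
        start += window - overlap
    return chunks


# Small example: windows of 4 tokens sharing 2 tokens with their neighbor.
chunks = split_with_overlap(list(range(10)), window=4, overlap=2)
print(chunks)

# Numbers from this section: 396 tokens in total, of which 17 are assumed to be
# [CLS], the question and the two [SEP] tokens, leaving 379 context tokens.
overhead = 17
context_tokens = list(range(396 - overhead))
lengths = [len(c) + overhead for c in split_with_overlap(context_tokens, 384 - overhead, 128)]
print(lengths)
```

Under these assumptions the sketch yields two slices whose lengths match the 384 and 157 reported above.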

Decoding the slices back to text:

for i, x in enumerate(tokenized_example["input_ids"][:2]):
    print("Slice: {}".format(i))
    print(tokenizer.decode(x))

The output is:

Slice: 0

[CLS] how many wins does the notre dame men's basketball team have? [SEP] the men's basketball team has over 1, 600 wins, one of only 12 schools who have reached that mark, and have appeared in 28 ncaa tournaments. former player austin carr holds the record for most points scored in a single game of the tournament with 61. although the team has never won the ncaa tournament, they were named by the helms athletic foundation as national champions twice. the team has orchestrated a number of upsets of number one ranked teams, the most notable of which was ending ucla's record 88 - game winning streak in 1974. the team has beaten an additional eight number - one teams, and those nine wins rank second, to ucla's 10, all - time in wins against the top team. the team plays in newly renovated purcell pavilion ( within the edmund p. joyce center ), which reopened for the beginning of the 2009 – 2010 season. the team is coached by mike brey, who, as of the 2014 – 15 season, his fifteenth at notre dame, has achieved a 332 - 165 record. in 2009 they were invited to the nit, where they advanced to the semifinals but were beaten by penn state who went on and beat baylor in the championship. the 2010 – 11 team concluded its regular season ranked number seven in the country, with a record of 25 – 5, brey's fifth straight 20 - win season, and a second - place finish in the big east. during the 2014 - 15 season, the team went 32 - 6 and won the acc conference tournament, later advancing to the elite 8, where the fighting irish lost on a missed buzzer - beater against then undefeated kentucky. led by nba draft picks jerian grant and pat connaughton, the fighting irish beat the eventual national champion duke blue devils twice during the season. the 32 wins were [SEP]

Slice: 1

[CLS] how many wins does the notre dame men's basketball team have? [SEP] championship. the 2010 – 11 team concluded its regular season ranked number seven in the country, with a record of 25 – 5, brey's fifth straight 20 - win season, and a second - place finish in the big east. during the 2014 - 15 season, the team went 32 - 6 and won the acc conference tournament, later advancing to the elite 8, where the fighting irish lost on a missed buzzer - beater against then undefeated kentucky. led by nba draft picks jerian grant and pat connaughton, the fighting irish beat the eventual national champion duke blue devils twice during the season. the 32 wins were the most by the fighting irish team since 1908 - 09. [SEP]

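Because the input was cut into two slices, we have to locate the answer again within each slice.

A QA model is trained on the position of the answer (its start and end token positions), not on the answer's token IDs. So we need a correspondence between each slice and the original input: for every token, its position in the slice versus its position in the original long context.

Passing return_offsets_mapping=True when calling the tokenizer returns exactly this mapping.

# Print the mapping from token index to character positions in the original text (first 100 tokens)
[IN]: print(tokenized_example["offset_mapping"][0][:100])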

[OUT]: [(0, 0), (0, 3), (4, 8), (9, 13), (14, 18), (19, 22), (23, 28), (29, 33), (34, 37), (37, 38), (38, 39), (40, 50), (51, 55), (56, 60), (60, 61), (0, 0), (0, 3), (4, 7), (7, 8), (8, 9), (10, 20), (21, 25), (26, 29), (30, 34), (35, 36), (36, 37), (37, 40), (41, 45), (45, 46), (47, 50), (51, 53), (54, 58), (59, 61), (62, 69), (70, 73), (74, 78), (79, 86), (87, 91), (92, 96), (96, 97), (98, 101), (102, 106), (107, 115), (116, 118), (119, 121), (122, 126), (127, 138), (138, 139), (140, 146), (147, 153), (154, 160), (161, 165), (166, 171), (172, 175), (176, 182), (183, 186), (187, 191), (192, 198), (199, 205), (206, 208), (209, 210), (211, 217), (218, 222), (223, 225), (226, 229), (230, 240), (241, 245), (246, 248), (248, 249), (250, 258), (259, 262), (263, 267), (268, 271), (272, 277), (278, 281), (282, 285), (286, 290), (291, 301), (301, 302), (303, 307), (308, 312), (313, 318), (319, 321), (322, 325), (326, 330), (330, 331), (332, 340), (341, 351), (352, 354), (355, 363), (364, 373), (374, 379), (379, 380), (381, 384), (385, 389), (390, 393), (394, 406), (407, 408), (409, 415), (416, 418)]

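Each tuple gives the start and end character positions of the corresponding token in its original text; special tokens such as [CLS] and [SEP] get (0, 0).

We also need the sequence_ids method to tell the question apart from the context.

sequence_ids = tokenized_example.sequence_ids()

Since the question is passed first and the context second, 0 marks question tokens and 1 marks context tokens; special tokens are marked None.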
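As a rough illustration of what sequence_ids looks like and how the context boundaries are found from it, here is a self-contained sketch. The helper `build_sequence_ids` is hypothetical and only mimics the layout [CLS] question [SEP] context [SEP]; the real list comes from the tokenizer.

```python
def build_sequence_ids(num_question_tokens, num_context_tokens):
    """Mimic the layout [CLS] question [SEP] context [SEP]; None marks special tokens."""
    return [None] + [0] * num_question_tokens + [None] + [1] * num_context_tokens + [None]


def context_token_range(sequence_ids, context_index=1):
    """Return the indices of the first and last context token."""
    start = 0
    while sequence_ids[start] != context_index:
        start += 1
    end = len(sequence_ids) - 1
    while sequence_ids[end] != context_index:
        end -= 1
    return start, end


ids = build_sequence_ids(num_question_tokens=3, num_context_tokens=5)
print(ids)
print(context_token_range(ids))
```

The two while loops are the same scan that the labeling code uses to skip over the question and the special tokens.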

The following code finds where the annotated answer (the label) ends up after preprocessing.

answers = example["answers"]
start_char = answers["answer_start"][0]
end_char = start_char + len(answers["text"][0])

# Find the index of the first context token in this feature.
token_start_index = 0
while sequence_ids[token_start_index] != 1:
    token_start_index += 1

# Find the index of the last context token in this feature.
token_end_index = len(tokenized_example["input_ids"][0]) - 1
while sequence_ids[token_end_index] != 1:
    token_end_index -= 1

# Check whether the answer lies outside this feature's span; if it does, the feature would be labeled with the CLS token position.
offsets = tokenized_example["offset_mapping"][0]
if (offsets[token_start_index][0] <= start_char and offsets[token_end_index][1] >= end_char):
    # Move token_start_index and token_end_index to the two ends of the answer.
    # Edge case: if the answer is the last word, token_start_index can run past the last offset,
    # which is why the first loop also checks token_start_index < len(offsets).
    while token_start_index < len(offsets) and offsets[token_start_index][0] <= start_char:
        token_start_index += 1
    start_position = token_start_index - 1
    while offsets[token_end_index][1] >= end_char:
        token_end_index -= 1
    end_position = token_end_index + 1
    print("start_position: {}, end_position: {}".format(start_position, end_position))
else:
    print("The answer is not in this feature.")

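It works a bit like two pointers, one scanning forward and one scanning backward, which first locate the boundaries of the context.

The check is then made on tokenized_example["offset_mapping"][0] (the whole list, of which the printout above is a part), so len(offsets) is the total number of tokens. Two more pointers follow, again one moving forward and one moving backward: the forward one compares the first element of each tuple with start_char, the backward one compares the second element with end_char. Here start_char is the character position where the answer starts in the original context, and end_char is where it ends.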
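The two-pointer search can be tried out without a real tokenizer. The sketch below uses a toy whitespace tokenizer (`word_offsets`, a hypothetical helper) to produce character offsets, then applies the same forward and backward scans. The extra `hi >= 0` guard is an addition needed only because this toy list has no question or special tokens in front of the context.

```python
import re


def word_offsets(text):
    """Toy tokenizer: one token per whitespace-separated word, with (start, end) character offsets."""
    return [(m.start(), m.end()) for m in re.finditer(r"\S+", text)]


def char_span_to_token_span(offsets, start_char, end_char):
    """Map a character span to (first_token, last_token), or None if it is not covered."""
    lo, hi = 0, len(offsets) - 1
    if not (offsets[lo][0] <= start_char and offsets[hi][1] >= end_char):
        return None
    # Forward scan: step past every token that starts at or before the answer start.
    while lo < len(offsets) and offsets[lo][0] <= start_char:
        lo += 1
    # Backward scan: step past every token that ends at or after the answer end.
    while hi >= 0 and offsets[hi][1] >= end_char:
        hi -= 1
    return lo - 1, hi + 1


context = "the virgin mary appeared to saint bernadette soubirous in 1858"
offsets = word_offsets(context)

answer = "saint bernadette soubirous"
start_char = context.index(answer)
span = char_span_to_token_span(offsets, start_char, start_char + len(answer))
print(span)

# Edge case: the answer is the last word, so the forward scan runs off the end of the list.
last = "1858"
last_start = context.index(last)
last_span = char_span_to_token_span(offsets, last_start, last_start + len(last))
print(last_span)
```

Both scans overshoot by one token on purpose, which is why the result is `lo - 1` and `hi + 1`.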

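Some models expect the question followed by the context, others the context followed by the question. The tokenizer's padding_side attribute tells us which one applies.

pad_on_right = tokenizer.padding_side == "right"  # the context is on the right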

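2.1.3 Putting the preprocessing together

Now all the steps are merged into one function. When a feature's context does not contain the answer, both the start and the end label are set to the index of the CLS token. An alternative would be to drop such features from the training set (the tutorial mentions an allow_impossible_answers flag for this), but the function below does not implement that flag.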
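A minimal sketch of this labeling rule across several slices of one example, assuming [CLS] sits at index 0 and that non-context positions are represented by None. The function name `label_windows` and the toy offsets are illustrative; the max/min formulation is a shorter stand-in for the pointer loops and is not the tutorial's own code.

```python
CLS_INDEX = 0  # assumption: [CLS] is the first token of every window


def label_windows(window_offsets, start_char, end_char):
    """Return one (start_token, end_token) label per window; (CLS, CLS) if the answer is not inside."""
    labels = []
    for offsets in window_offsets:
        context_positions = [i for i, o in enumerate(offsets) if o is not None]
        first, last = context_positions[0], context_positions[-1]
        inside = offsets[first][0] <= start_char and offsets[last][1] >= end_char
        if not inside:
            labels.append((CLS_INDEX, CLS_INDEX))
            continue
        # Last token starting at or before the answer start, first token ending at or after its end.
        start_token = max(i for i in context_positions if offsets[i][0] <= start_char)
        end_token = min(i for i in context_positions if offsets[i][1] >= end_char)
        labels.append((start_token, end_token))
    return labels


# Context "a b c d e f" cut into two windows of four tokens that overlap by two.
# None stands for the [CLS] position; the tuples are character offsets into the context.
windows = [
    [None, (0, 1), (2, 3), (4, 5), (6, 7)],
    [None, (4, 5), (6, 7), (8, 9), (10, 11)],
]
labels_ef = label_windows(windows, start_char=8, end_char=11)  # answer "e f"
labels_cd = label_windows(windows, start_char=4, end_char=7)   # answer "c d"
print(labels_ef)
print(labels_cd)
```

An answer that falls inside the overlap region gets a real label in both windows, while an answer that only the second window covers leaves the first window pointing at [CLS].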

def prepare_train_features(examples):
    # Truncate and pad the examples while keeping all of the information, which is why slicing is used.
    # Each over-long example is cut into several inputs, and adjacent inputs overlap.
    tokenized_examples = tokenizer(
        examples["question" if pad_on_right else "context"],
        examples["context" if pad_on_right else "question"],
        truncation="only_second" if pad_on_right else "only_first",
        max_length=max_length,
        stride=doc_stride,
        return_overflowing_tokens=True,
        return_offsets_mapping=True,
        padding="max_length",
    )

    # overflow_to_sample_mapping maps each slice to the index of the example it came from.
    # For instance, if 2 examples are cut into 4 slices, the mapping is [0, 0, 1, 1]:
    # the first two slices come from the first example.
    sample_mapping = tokenized_examples.pop("overflow_to_sample_mapping")
    # offset_mapping has one entry per slice as well (4 in the case above).
    # It maps tokens back to the original input; since the answer is annotated on the original input,
    # it lets us find the answer's start and end positions.
    offset_mapping = tokenized_examples.pop("offset_mapping")

    # Relabel the data.
    tokenized_examples["start_positions"] = []
    tokenized_examples["end_positions"] = []

    for i, offsets in enumerate(offset_mapping):
        # Process one slice at a time.
        # Features without an answer are labeled with the CLS index.
        input_ids = tokenized_examples["input_ids"][i]
        cls_index = input_ids.index(tokenizer.cls_token_id)

        # Tell the question apart from the context.
        sequence_ids = tokenized_examples.sequence_ids(i)

        # Index of the original example this slice came from.
        sample_index = sample_mapping[i]
        answers = examples["answers"][sample_index]
        # If there is no answer, use the CLS position as the answer.
        if len(answers["answer_start"]) == 0:
            tokenized_examples["start_positions"].append(cls_index)
            tokenized_examples["end_positions"].append(cls_index)
        else:
            # Character-level start/end of the answer.
            start_char = answers["answer_start"][0]
            end_char = start_char + len(answers["text"][0])

            # Token index of the first context token.
            token_start_index = 0
            while sequence_ids[token_start_index] != (1 if pad_on_right else 0):
                token_start_index += 1

            # Token index of the last context token.
            token_end_index = len(input_ids) - 1
            while sequence_ids[token_end_index] != (1 if pad_on_right else 0):
                token_end_index -= 1

            # If the answer falls outside this slice, label the feature with the CLS index as well.
            if not (offsets[token_start_index][0] <= start_char and offsets[token_end_index][1] >= end_char):
                tokenized_examples["start_positions"].append(cls_index)
                tokenized_examples["end_positions"].append(cls_index)
            else:
                # Otherwise find the start and end token positions of the answer.
                # Note: we could go after the last offset if the answer is the last word (edge case).
                while token_start_index < len(offsets) and offsets[token_start_index][0] <= start_char:
                    token_start_index += 1
                tokenized_examples["start_positions"].append(token_start_index - 1)
                while offsets[token_end_index][1] >= end_char:
                    token_end_index -= 1
                tokenized_examples["end_positions"].append(token_end_index + 1)

    return tokenized_examples

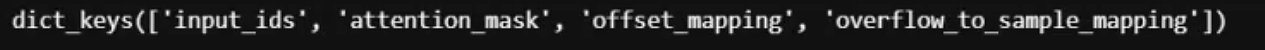

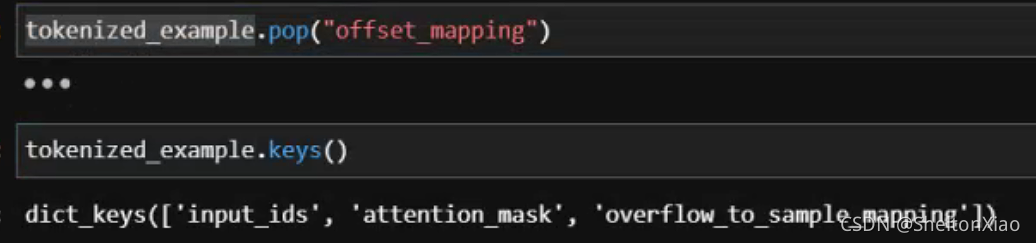

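Note these two statements:

sample_mapping = tokenized_examples.pop("overflow_to_sample_mapping")
offset_mapping = tokenized_examples.pop("offset_mapping")

tokenized_examples can be accessed like a dict, and printing its keys lists the fields the tokenizer returned. pop returns the value stored under a key and removes that key, so after these two statements overflow_to_sample_mapping and offset_mapping are no longer among the keys.

Next, apply the function to all of the data: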

tokenized_datasets = datasets.map(prepare_train_features, batched=True, remove_columns=datasets["train"].column_names)

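Better still, the results are cached automatically, so they are not recomputed the next time. The datasets library checks whether the function passed to map has changed: if not, it reuses the cached data; if so, it reprocesses. Be aware that the cache can get in the way, though: if you change something the function depends on without the function itself changing, bypass the cache by passing load_from_cache_file=False to map. Finally, batched=True makes map hand the examples to the function in batches, which lets the fast tokenizer process a whole batch in parallel using multiple threads.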

2.2 微调预训练模型

2.2.1 预训练模型导入

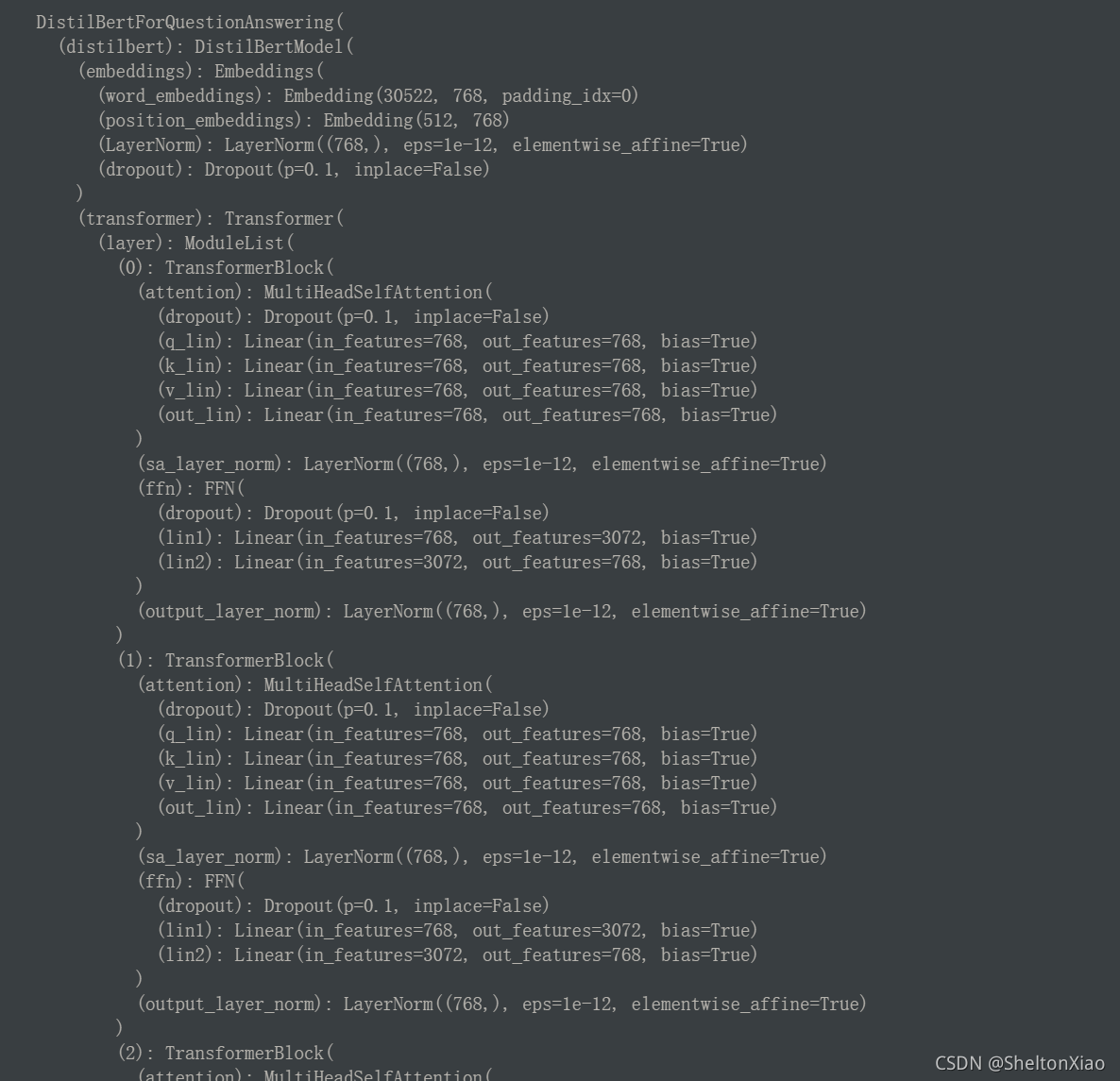

我们使用这个类AutoModelForQuestionAnswering。

from transformers import AutoModelForQuestionAnswering, TrainingArguments, Trainer

model = AutoModelForQuestionAnswering.from_pretrained(model_checkpoint)

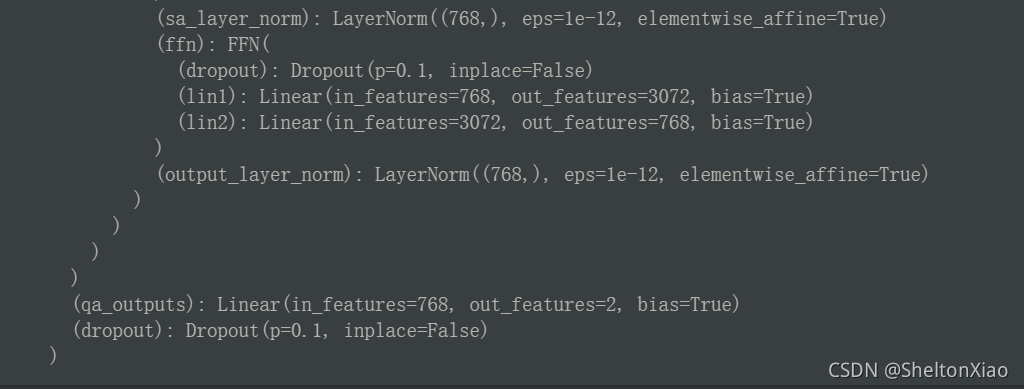

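Inspecting the loaded model shows that it is a DistilBertForQuestionAnswering object.

On top of the regular DistilBERT encoder there is a linear layer called qa_outputs and a dropout layer (not a residual layer).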

2.2.2 Setting the parameters

As in the previous two tasks, we define the TrainingArguments and use default_data_collator to feed the data.

args = TrainingArguments(
    "test-squad",
    evaluation_strategy="epoch",
    learning_rate=2e-5,  # learning rate
    per_device_train_batch_size=batch_size,
    per_device_eval_batch_size=batch_size,
    num_train_epochs=3,  # number of training epochs
    weight_decay=0.01,
)

from transformers import default_data_collator

data_collator = default_data_collator

Then hand all of this to the Trainer:

trainer = Trainer(
    model,
    args,
    train_dataset=tokenized_datasets["train"],
    eval_dataset=tokenized_datasets["validation"],
    data_collator=data_collator,
    tokenizer=tokenizer,
)

2.2.3 训练

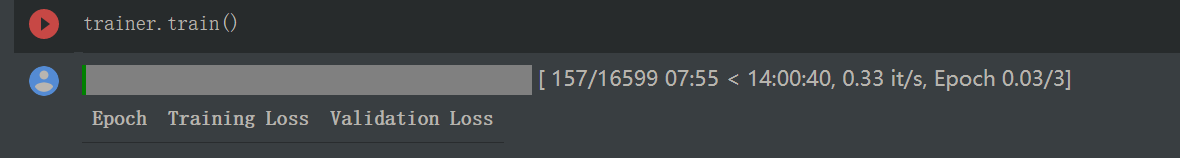

接下来调用train方法训练就可以了。

trainer.train()

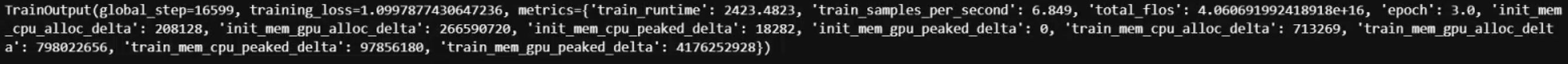

一共进行了三个训练的尝试,第一次尝试使用colab的TPU

真的慢到令人发指。

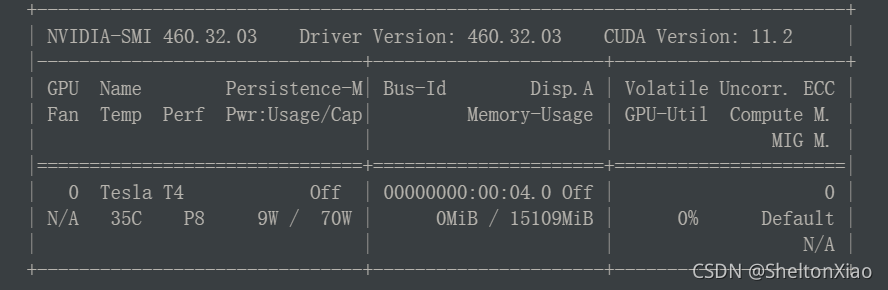

于是试着用colab pro的GPU。

3个epoch用时3小时14分钟。

最后尝试用带有RTX 3080的本地设备。

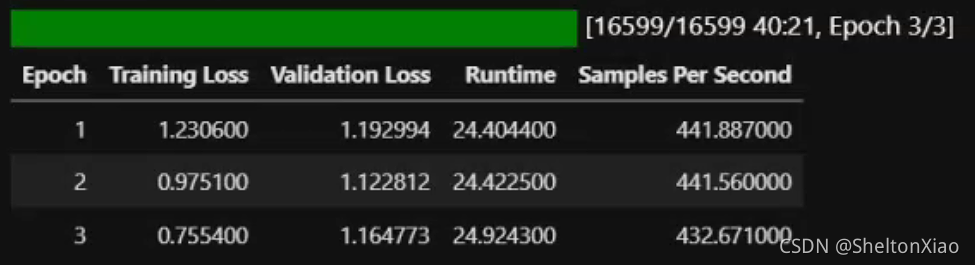

3个epoch用时40分钟(活的广告,买它买它)

其实挺奇怪的,可能跟cuda版本有点关系吧。

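2.3 Evaluating the training results

Since this is a question answering problem, a series of post-processing steps is needed before we can evaluate. (Which raises the question: what was being measured during training, and how is the loss computed? For the question answering heads in Transformers, the loss is the average of two cross-entropy losses, one over the start logits and one over the end logits, each computed against the labeled token position.)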
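To make the training objective concrete, here is a pure-Python sketch of that loss for a single feature: softmax cross-entropy over the start logits plus the same over the end logits, divided by two. The function names are illustrative and the logits are made-up numbers; a real model computes this on tensors for a whole batch.

```python
import math


def cross_entropy(logits, target):
    """Softmax cross-entropy of one position label, computed with a stable log-sum-exp."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(x - m) for x in logits))
    return log_sum_exp - logits[target]


def qa_loss(start_logits, end_logits, start_position, end_position):
    """Average of the start-position loss and the end-position loss."""
    start_loss = cross_entropy(start_logits, start_position)
    end_loss = cross_entropy(end_logits, end_position)
    return (start_loss + end_loss) / 2


# With uniform logits over 4 tokens the loss is log(4); a confident correct model scores lower.
uniform = qa_loss([0.0] * 4, [0.0] * 4, start_position=1, end_position=2)
confident = qa_loss([0.0, 5.0, 0.0, 0.0], [0.0, 0.0, 5.0, 0.0], start_position=1, end_position=2)
print(uniform, confident)
```

The loss only looks at token positions, which is why it can differ from the exact match and F1 scores computed later on the answer text.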

2.3.1 确定有效答案

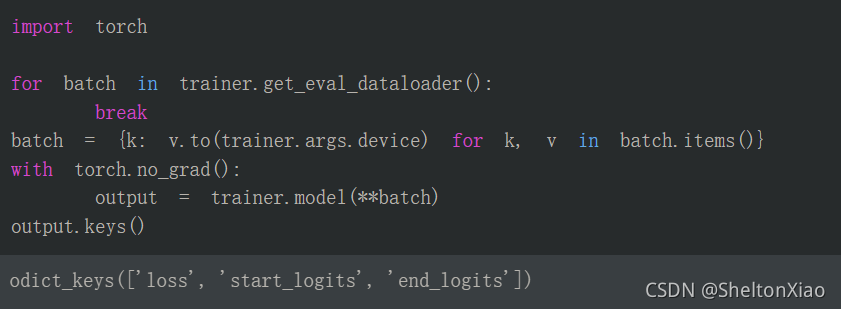

输出一个类似于dict的数据结构,因为提供了label所以有loss,除此之外,还有answer start和end的logits。

我们可以查看start和end的logits的大小,分别各自对应torch.Size([16, 384])大小的tensor,也就是(batchsize, length)

每个feature里的每个token都会有一个logit。预测answer最简单的方法就是选择start的logits里最大的下标最为answer其实位置,end的logits里最大下标作为answer的结束位置。

(这是因为输出是softmax嘛?不对不对,是因为线性变换后那个向量长度的维度不变,而我们的目标输出应该就是一个0,1的那种等长向量——由label变换而来的)

output.start_logits.argmax(dim=-1), output.end_logits.argmax(dim=-1)

以上策略大部分情况下都是不错的。但是,如果我们的输入告诉我们找不到答案:比如start的位置比end的位置下标大,或者start和end的位置指向了question。

这个时候,简单的方法是我们继续需要选择第2好的预测作为我们的答案了,实在不行看第3好的预测,以此类推。

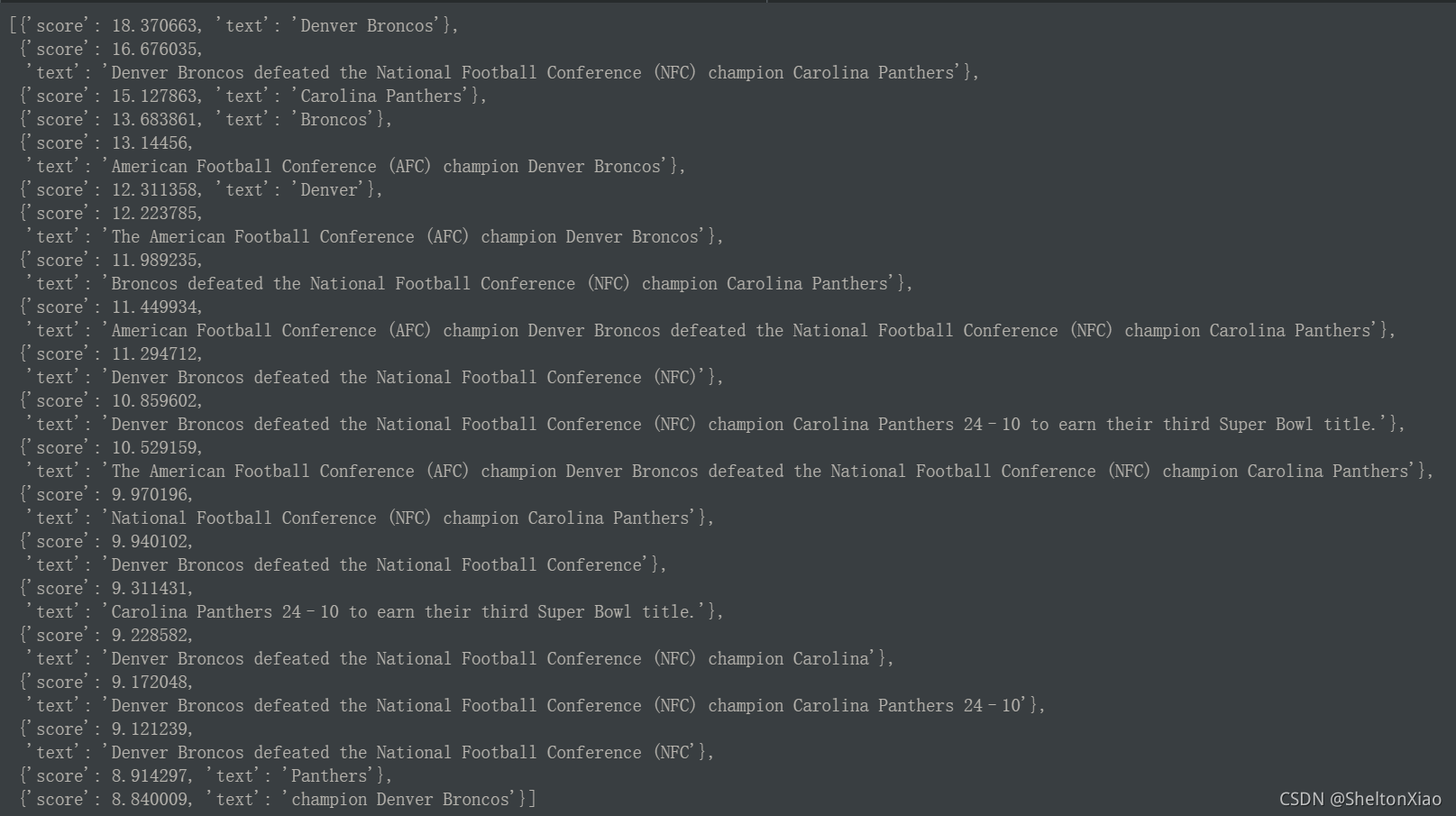

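A better approach is to add the start and end logits to get a score for each (start, end) pair, look at the best n_best_size start and end candidates, check the resulting answers, and keep the best valid one.

Here n_best_size is set to 20, and numpy.argsort is used to find the largest values.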

import numpy as np

n_best_size = 20

start_logits = output.start_logits[0].cpu().numpy()
end_logits = output.end_logits[0].cpu().numpy()

# Collect the indices of the best start and end logits:
start_indexes = np.argsort(start_logits)[-1 : -n_best_size - 1 : -1].tolist()
end_indexes = np.argsort(end_logits)[-1 : -n_best_size - 1 : -1].tolist()

valid_answers = []
for start_index in start_indexes:
    for end_index in end_indexes:
        if start_index <= end_index:  # a span is only valid if the start does not come after the end
            valid_answers.append(
                {
                    "score": start_logits[start_index] + end_logits[end_index],
                    "text": ""  # filled in later from the token indices
                }
            )

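2.3.2 Checking that the answer is in the context

We need to check that the text between the start and end positions lies in the context and not in the question.

To do that, two pieces of information are added to the validation features:

- The ID of the example that produced the feature. Since one example may produce several features, each feature (slice) needs to know which example it came from.

- The offset mapping: it maps each token position in a slice back to character positions in the original text.

The function is slightly different from prepare_train_features.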

def prepare_validation_features(examples):
    # Tokenize our examples with truncation and maybe padding, but keep the overflows using a stride. This results
    # in one example possibly giving several features when a context is long, each of those features having a
    # context that overlaps a bit the context of the previous feature.
    tokenized_examples = tokenizer(
        examples["question" if pad_on_right else "context"],
        examples["context" if pad_on_right else "question"],
        truncation="only_second" if pad_on_right else "only_first",
        max_length=max_length,
        stride=doc_stride,
        return_overflowing_tokens=True,
        return_offsets_mapping=True,
        padding="max_length",
    )

    # Since one example might give us several features if it has a long context, we need a map from a feature to
    # its corresponding example. This key gives us just that.
    sample_mapping = tokenized_examples.pop("overflow_to_sample_mapping")

    # We keep the example_id that gave us this feature and we will store the offset mappings.
    tokenized_examples["example_id"] = []

    for i in range(len(tokenized_examples["input_ids"])):
        # Grab the sequence corresponding to that example (to know what is the context and what is the question).
        sequence_ids = tokenized_examples.sequence_ids(i)
        context_index = 1 if pad_on_right else 0

        # One example can give several spans, this is the index of the example containing this span of text.
        sample_index = sample_mapping[i]
        tokenized_examples["example_id"].append(examples["id"][sample_index])

        # Set to None the offset_mapping that are not part of the context so it's easy to determine if a token
        # position is part of the context or not.
        tokenized_examples["offset_mapping"][i] = [
            (o if sequence_ids[k] == context_index else None)
            for k, o in enumerate(tokenized_examples["offset_mapping"][i])
        ]

    return tokenized_examples

This function adds a new key, example_id, to tokenized_examples and keeps the offset_mapping key (with every non-context position set to None).
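The effect of the None-masked offsets can be seen on a toy feature. In the sketch below the logits, offsets and helper names (`mask_non_context`, `best_span`) are all made up for illustration; plain `sorted` stands in for numpy.argsort so the example has no dependencies.

```python
def mask_non_context(offsets, sequence_ids, context_index=1):
    """Keep offsets only for context tokens; everything else becomes None."""
    return [o if s == context_index else None for o, s in zip(offsets, sequence_ids)]


def best_span(start_logits, end_logits, offsets, context, n_best=3, max_answer_length=30):
    """Score the n-best start/end pairs and return the best one that lies inside the context."""
    def top(logits):
        return sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:n_best]

    candidates = []
    for s in top(start_logits):
        for e in top(end_logits):
            if offsets[s] is None or offsets[e] is None:
                continue  # points into the question or a special token
            if e < s or e - s + 1 > max_answer_length:
                continue  # negative length or too long
            candidates.append({
                "score": start_logits[s] + end_logits[e],
                "text": context[offsets[s][0]:offsets[e][1]],
            })
    return max(candidates, key=lambda c: c["score"], default={"score": 0.0, "text": ""})


# Toy feature: [CLS] who ? [SEP] saint bernadette in 1858 [SEP]
context = "saint bernadette in 1858"
sequence_ids = [None, 0, 0, None, 1, 1, 1, 1, None]
raw_offsets = [(0, 0), (0, 3), (4, 5), (0, 0), (0, 5), (6, 16), (17, 19), (20, 24), (0, 0)]
offsets = mask_non_context(raw_offsets, sequence_ids)

# The largest start logit (index 1) points at a question token, so plain argmax would fail here.
start_logits = [0.1, 5.0, 0.0, 0.0, 4.0, 1.0, 0.0, 0.5, 0.0]
end_logits = [0.1, 0.0, 0.0, 0.0, 1.0, 4.5, 0.0, 2.0, 0.0]

answer = best_span(start_logits, end_logits, offsets, context)
print(answer)
```

Because the question position is masked out, the search falls through to the next-best start candidate and still returns a span from the context.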

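2.3.3 Processing the validation data

Apply the function:

validation_features = datasets["validation"].map(
    prepare_validation_features,
    batched=True,
    remove_columns=datasets["validation"].column_names
)

Then get the model's predictions for these features:

raw_predictions = trainer.predict(validation_features)

# Depending on the library version, the Trainer may hide columns the model does not use (here example_id and
# offset_mapping), so make all columns visible again after predicting.
validation_features.set_format(type=validation_features.format["type"], columns=list(validation_features.features.keys()))

Putting the earlier pieces together: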

max_answer_length = 30  # same value as the default used in postprocess_qa_predictions

start_logits = output.start_logits[0].cpu().numpy()
end_logits = output.end_logits[0].cpu().numpy()
offset_mapping = validation_features[0]["offset_mapping"]
# The first feature comes from the first example. For the more general case, we will need to match the example_id to
# an example index.
context = datasets["validation"][0]["context"]

# Gather the indices of the best start/end logits:
start_indexes = np.argsort(start_logits)[-1 : -n_best_size - 1 : -1].tolist()
end_indexes = np.argsort(end_logits)[-1 : -n_best_size - 1 : -1].tolist()
valid_answers = []
for start_index in start_indexes:
    for end_index in end_indexes:
        # Don't consider out-of-scope answers, either because the indices are out of bounds or correspond
        # to part of the input_ids that are not in the context.
        if (
            start_index >= len(offset_mapping)
            or end_index >= len(offset_mapping)
            or offset_mapping[start_index] is None
            or offset_mapping[end_index] is None
        ):
            continue
        # Don't consider answers with a length that is either < 0 or > max_answer_length.
        if end_index < start_index or end_index - start_index + 1 > max_answer_length:
            continue
        if start_index <= end_index:  # always true at this point; kept from the earlier, simpler version
            start_char = offset_mapping[start_index][0]
            end_char = offset_mapping[end_index][1]
            valid_answers.append(
                {
                    "score": start_logits[start_index] + end_logits[end_index],
                    "text": context[start_char: end_char]
                }
            )

valid_answers = sorted(valid_answers, key=lambda x: x["score"], reverse=True)[:n_best_size]
valid_answers

2.3.4 Combining the evaluation steps

import collections

from tqdm.auto import tqdm

squad_v2 = False  # this walkthrough loads "squad" (SQuAD 1.1), which has no unanswerable questions

def postprocess_qa_predictions(examples, features, raw_predictions, n_best_size = 20, max_answer_length = 30):
    all_start_logits, all_end_logits = raw_predictions
    # Build a map example to its corresponding features.
    example_id_to_index = {k: i for i, k in enumerate(examples["id"])}
    features_per_example = collections.defaultdict(list)
    for i, feature in enumerate(features):
        features_per_example[example_id_to_index[feature["example_id"]]].append(i)

    # The dictionaries we have to fill.
    predictions = collections.OrderedDict()

    # Logging.
    print(f"Post-processing {len(examples)} example predictions split into {len(features)} features.")

    # Let's loop over all the examples!
    for example_index, example in enumerate(tqdm(examples)):
        # Those are the indices of the features associated to the current example.
        feature_indices = features_per_example[example_index]

        min_null_score = None # Only used if squad_v2 is True.
        valid_answers = []

        context = example["context"]
        # Looping through all the features associated to the current example.
        for feature_index in feature_indices:
            # We grab the predictions of the model for this feature.
            start_logits = all_start_logits[feature_index]
            end_logits = all_end_logits[feature_index]
            # This is what will allow us to map the positions in our logits to spans of text in the original
            # context.
            offset_mapping = features[feature_index]["offset_mapping"]

            # Update minimum null prediction.
            cls_index = features[feature_index]["input_ids"].index(tokenizer.cls_token_id)
            feature_null_score = start_logits[cls_index] + end_logits[cls_index]
            if min_null_score is None or min_null_score < feature_null_score:
                min_null_score = feature_null_score

            # Go through all possibilities for the `n_best_size` greater start and end logits.
            start_indexes = np.argsort(start_logits)[-1 : -n_best_size - 1 : -1].tolist()
            end_indexes = np.argsort(end_logits)[-1 : -n_best_size - 1 : -1].tolist()
            for start_index in start_indexes:
                for end_index in end_indexes:
                    # Don't consider out-of-scope answers, either because the indices are out of bounds or correspond
                    # to part of the input_ids that are not in the context.
                    if (
                        start_index >= len(offset_mapping)
                        or end_index >= len(offset_mapping)
                        or offset_mapping[start_index] is None
                        or offset_mapping[end_index] is None
                    ):
                        continue
                    # Don't consider answers with a length that is either < 0 or > max_answer_length.
                    if end_index < start_index or end_index - start_index + 1 > max_answer_length:
                        continue

                    start_char = offset_mapping[start_index][0]
                    end_char = offset_mapping[end_index][1]
                    valid_answers.append(
                        {
                            "score": start_logits[start_index] + end_logits[end_index],
                            "text": context[start_char: end_char]
                        }
                    )

        if len(valid_answers) > 0:
            best_answer = sorted(valid_answers, key=lambda x: x["score"], reverse=True)[0]
        else:
            # In the very rare edge case we have not a single non-null prediction, we create a fake prediction to avoid
            # failure.
            best_answer = {"text": "", "score": 0.0}

        # Let's pick our final answer: the best one or the null answer (only for squad_v2)
        if not squad_v2:
            predictions[example["id"]] = best_answer["text"]
        else:
            answer = best_answer["text"] if best_answer["score"] > min_null_score else ""
            predictions[example["id"]] = answer

    return predictions

This gives the final predictions, one answer text per example:

final_predictions = postprocess_qa_predictions(datasets["validation"], validation_features, raw_predictions.predictions)
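The bookkeeping inside the post-processing function (grouping features by the example they came from, then choosing between the best span and the empty answer) can be sketched on its own. The helper names `group_features` and `pick_answer` and the toy scores are illustrative assumptions, not part of the tutorial's code.

```python
import collections


def group_features(example_ids, feature_example_ids):
    """Map each example index to the indices of the features cut from it."""
    example_id_to_index = {k: i for i, k in enumerate(example_ids)}
    grouped = collections.defaultdict(list)
    for feature_index, example_id in enumerate(feature_example_ids):
        grouped[example_id_to_index[example_id]].append(feature_index)
    return dict(grouped)


def pick_answer(candidates, null_score=None):
    """Pick the best (score, text) candidate; with a null score, return "" unless the span beats it."""
    best_score, best_text = max(candidates, key=lambda c: c[0], default=(0.0, ""))
    if null_score is not None and best_score <= null_score:
        return ""
    return best_text


# Two examples; the first one was long enough to be cut into two features.
grouped = group_features(["ex-a", "ex-b"], ["ex-a", "ex-a", "ex-b"])
print(grouped)

print(pick_answer([(1.0, "in 1858"), (3.0, "saint bernadette")]))  # SQuAD 1.1 style: always answer
print(pick_answer([(1.0, "in 1858")], null_score=2.0))             # SQuAD 2.0 style: may abstain
```

Candidates from all features of an example compete in one pool, which is how an answer found in the second slice can win over a weaker span from the first.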

2.3.5 Computing the metric

Load the metric:

from datasets import load_metric

metric = load_metric("squad_v2" if squad_v2 else "squad")

if squad_v2:
    formatted_predictions = [{"id": k, "prediction_text": v, "no_answer_probability": 0.0} for k, v in final_predictions.items()]
else:
    formatted_predictions = [{"id": k, "prediction_text": v} for k, v in final_predictions.items()]

references = [{"id": ex["id"], "answers": ex["answers"]} for ex in datasets["validation"]]

metric.compute(predictions=formatted_predictions, references=references)

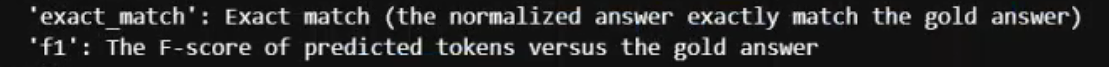

Looking at what the metric contains, we can see exact_match and f1. Both compare the predicted answer against the gold answers.

where

$$F_1 = 2 \times \dfrac{precision \times recall}{precision + recall}$$
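For intuition, here is a simplified sketch of token-level exact match and F1 between a predicted answer and one gold answer. It only lowercases and splits on whitespace, which is an assumption made for brevity; the official SQuAD scoring also strips punctuation and articles and takes the best score over all gold answers.

```python
from collections import Counter


def tokens(text):
    """Simplified normalization: lowercase and split on whitespace."""
    return text.lower().split()


def exact_match(prediction, gold):
    return tokens(prediction) == tokens(gold)


def f1_score(prediction, gold):
    pred, ref = tokens(prediction), tokens(gold)
    # Number of tokens shared by prediction and gold answer (counted with multiplicity).
    common = sum((Counter(pred) & Counter(ref)).values())
    if common == 0:
        return 0.0
    precision = common / len(pred)
    recall = common / len(ref)
    return 2 * precision * recall / (precision + recall)


prediction = "Saint Bernadette"
gold = "Saint Bernadette Soubirous"
print(exact_match(prediction, gold))
print(round(f1_score(prediction, gold), 3))
```

Here precision is 1 and recall is 2/3, so a partially correct span still earns credit under F1 even though it scores zero on exact match.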

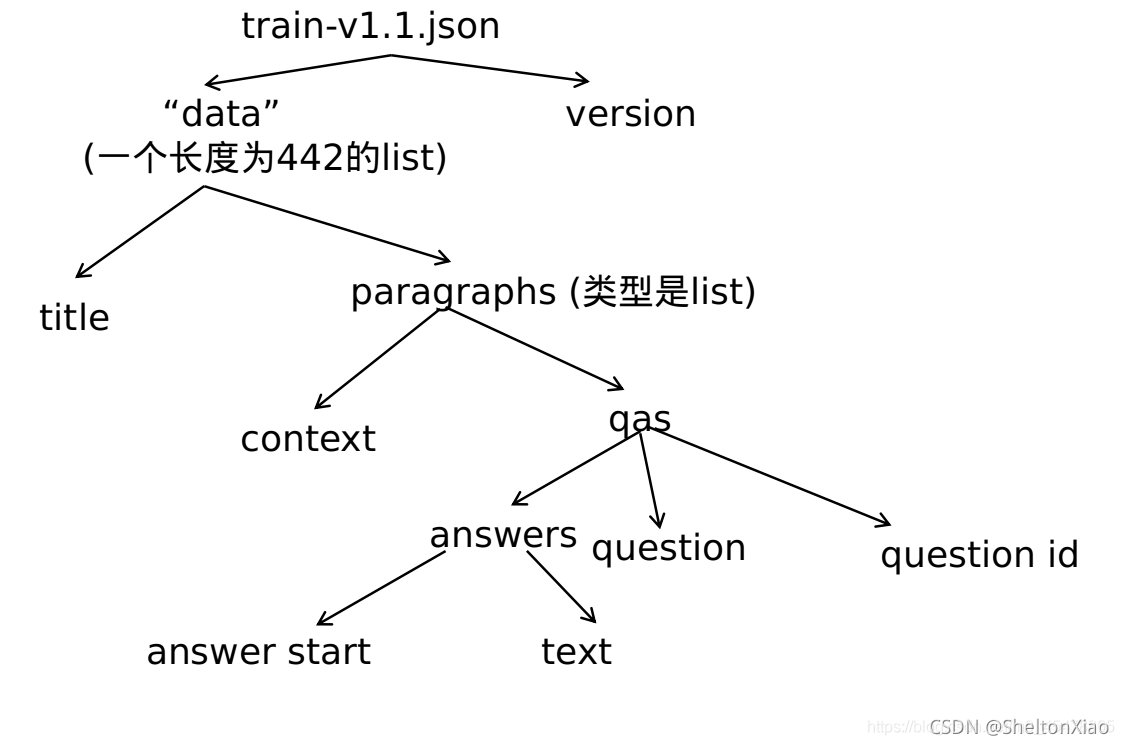

Finally, the results of the run on Colab:

And of the local run: