12-1 Optimization objective

Alternative view of logistic regression

h θ ( x ) = 1 1 + e ? θ T x h _ { \theta } ( x ) = \frac { 1 } { 1 + e ^ { - \theta ^ { T } x } } hθ?(x)=1+e?θTx1?

If y = 1 y=1 y=1,we want h θ ( x ) ≈ 1 , θ T x ? 0 h_{\theta}(x)\approx1, \theta^Tx\gg0 hθ?(x)≈1,θTx?0

If y = 0 y=0 y=0,we want h θ ( x ) ≈ 0 , θ T x ? 0 h_{\theta}(x)\approx0, \theta^Tx\ll0 hθ?(x)≈0,θTx?0

? Cost of example: ? ( y log ? h θ ( x ) + ( 1 ? y ) log ? ( 1 ? h θ ( x ) ) ) - ( y \log h _ { \theta } ( x ) + ( 1 - y ) \log ( 1 - h _ { \theta } ( x ) ) ) ?(yloghθ?(x)+(1?y)log(1?hθ?(x))) = ? y log ? 1 1 + e ? θ r x ? ( 1 ? y ) log ? ( 1 ? 1 1 + e ? θ r x ) = - y \log \frac { 1 } { 1 + e ^ { - \theta ^ { r } x } } - ( 1 - y ) \log ( 1 - \frac { 1 } { 1 + e ^ { - \theta ^ { r } x } } ) =?ylog1+e?θrx1??(1?y)log(1?1+e?θrx1?)

Support vector machine

Logistic regression:

m

i

n

θ

1

m

[

∑

i

=

1

m

y

(

i

)

(

?

log

?

h

θ

(

x

(

i

)

)

+

(

1

?

y

(

i

)

)

(

?

log

?

(

1

?

h

θ

(

x

(

i

)

)

)

]

+

λ

2

m

∑

j

=

1

n

θ

j

2

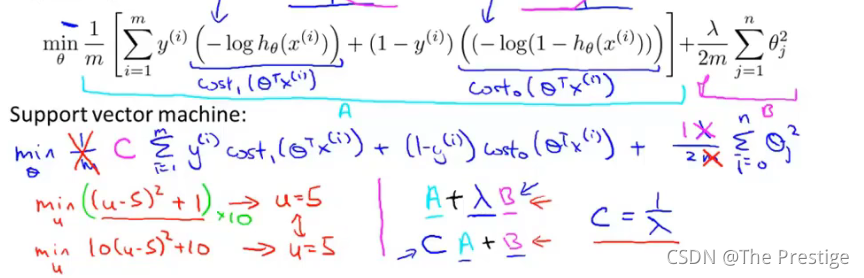

min_\theta\frac { 1 } { m } [ \sum _ { i = 1 } ^ { m } y ^ { ( i ) }( -\log h _ { \theta } ( x ^ { ( i ) } ) + ( 1 - y ^ { ( i ) } ) (-\log ( 1 - h _ { \theta } ( x ^ { ( i ) } ) ) ] + \frac { \lambda } { 2 m } \sum _ { j = 1 } ^ { n } \theta^2_j

minθ?m1?[i=1∑m?y(i)(?loghθ?(x(i))+(1?y(i))(?log(1?hθ?(x(i)))]+2mλ?j=1∑n?θj2?

support vector machine:

m

i

n

θ

C

∑

i

=

1

m

[

y

(

i

)

c

o

s

t

1

(

θ

T

x

(

i

)

)

+

(

1

?

y

(

i

)

)

c

o

s

t

0

(

θ

T

x

(

i

)

)

]

+

1

2

∑

i

=

1

n

θ

j

2

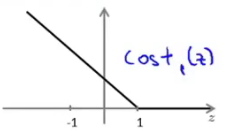

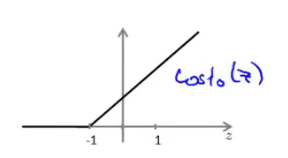

min _ { \theta} C \sum _ { i = 1 } ^ { m }[ y ^ { ( i ) } {cost} _ { 1 } ( \theta ^ { T } x ^ { ( i ) } ) + ( 1 - y ^ { ( i ) } ) {cost} _ { 0 } ( \theta ^ { T } x ^ { ( i ) } ) ] + \frac { 1 } { 2 } \sum _ { i = 1 } ^ { n}\theta_j^2

minθ?Ci=1∑m?[y(i)cost1?(θTx(i))+(1?y(i))cost0?(θTx(i))]+21?i=1∑n?θj2?

SVM hypothesis

m

i

n

θ

C

∑

i

=

1

m

[

y

(

i

)

c

o

s

t

1

(

θ

T

x

(

i

)

)

+

(

1

?

y

(

i

)

)

c

o

s

t

0

(

θ

T

x

(

i

)

)

]

+

1

2

∑

i

=

1

n

θ

j

2

min _ { \theta} C \sum _ { i = 1 } ^ { m }[ y ^ { ( i ) } {cost} _ { 1 } ( \theta ^ { T } x ^ { ( i ) } ) + ( 1 - y ^ { ( i ) } ) {cost} _ { 0 } ( \theta ^ { T } x ^ { ( i ) } ) ] + \frac { 1 } { 2 } \sum _ { i = 1 } ^ { n}\theta_j^2

minθ?Ci=1∑m?[y(i)cost1?(θTx(i))+(1?y(i))cost0?(θTx(i))]+21?i=1∑n?θj2?

Hypothesis:

h θ ( x ) = { 1 i f ?? θ T x ≥ 0 0 o t h e r w i s e h_\theta(x)=\begin{cases}1\quad if\;\theta^Tx\ge0\\0\quad otherwise\end{cases} hθ?(x)={1ifθTx≥00otherwise?

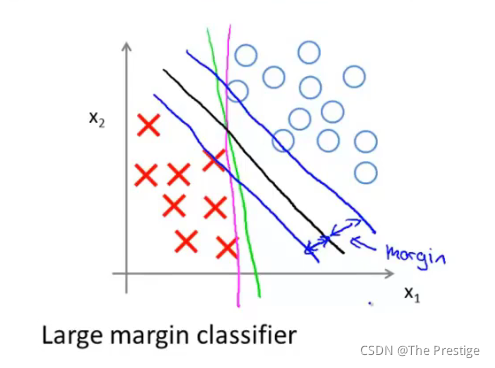

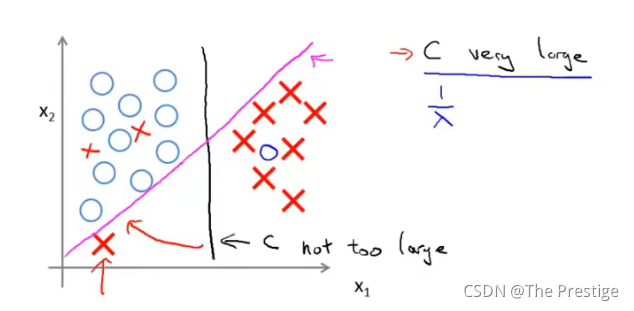

12-2 Large Margin Intuition

Support Vector Machine

if y = 1 y=1 y=1,we want $\theta^Tx\ge1\quad(\text{not just ≥ \ge ≥ 0})$

if

y

=

0

y=0

y=0,we want $\theta^Tx\le-1\quad(\text{not just

<

<

< 0})$

SVM Decision Boundary

SVM Decision Boundary: Linearly separable case

Large margin classifier in presence of outliers

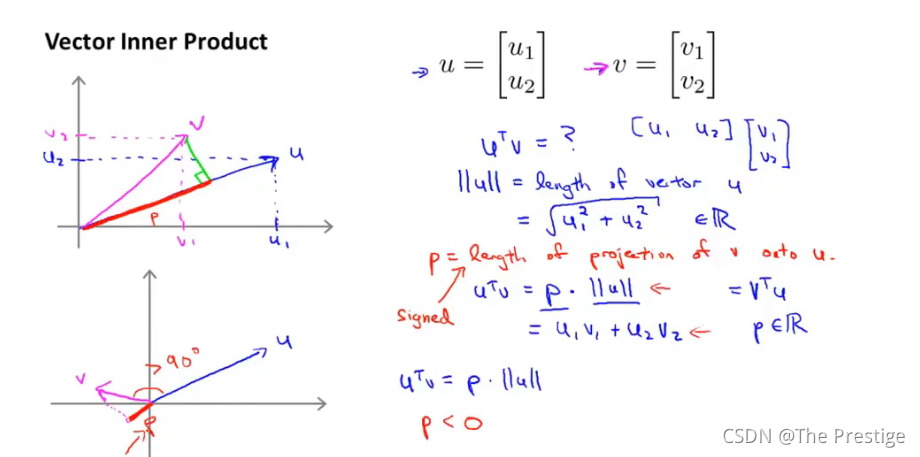

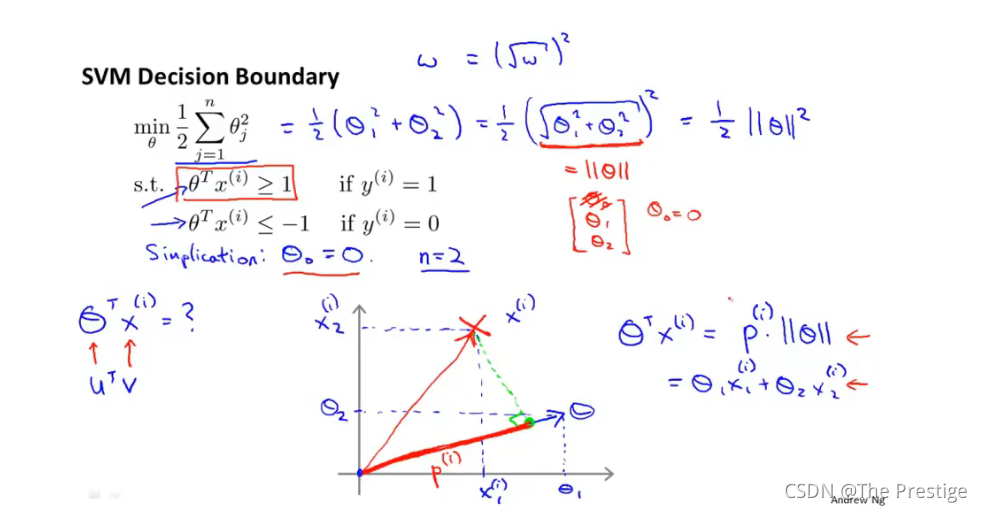

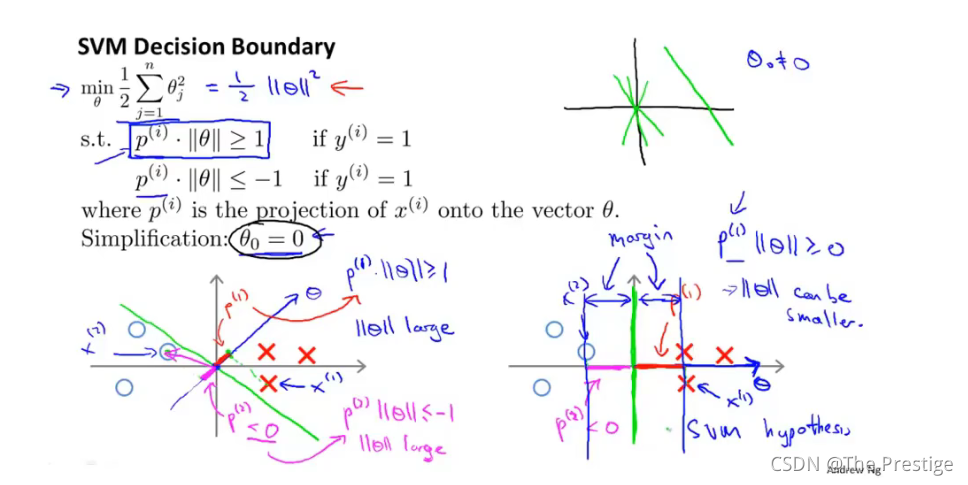

12-3 The mathematics behind large margin classification (optional)

Vector Inner Product 向量内积

SVM Decision Boundary

12-4 Kernels I

核函数

Non-linear Decision Boundary

predict y=1 if θ 0 + θ 1 x 1 + θ 2 x 2 + θ 3 x 1 x 2 + θ 4 x 1 2 + θ 5 x 2 2 + ? ≥ 0 { \theta _ { 0 } + \theta _ { 1 } x _ { 1 } + \theta _ { 2 } x _ { 2 } + \theta _ { 3 } x _ { 1 } x _ { 2 } } { + \theta _ { 4 } x _ { 1 } ^ { 2 } + \theta _ { 5 } x _ { 2 } ^ { 2 } + \cdots \geq 0 } θ0?+θ1?x1?+θ2?x2?+θ3?x1?x2?+θ4?x12?+θ5?x22?+?≥0

h θ ( x ) = { 1 i f ?? θ 0 + θ 1 x 1 + θ 2 x 2 + θ 3 x 1 x 2 + ? ≥ 0 0 o t h e r w i s e h_\theta(x)=\begin{cases}1\quad if\;{ \theta _ { 0 } + \theta _ { 1 } x _ { 1 } + \theta _ { 2 } x _ { 2 } + \theta _ { 3 } x _ { 1 } x _ { 2 } } { + \cdots \geq 0 }\\0\quad otherwise\end{cases} hθ?(x)={1ifθ0?+θ1?x1?+θ2?x2?+θ3?x1?x2?+?≥00otherwise?

Given x,compute new feature depending on proximity to landmarks l ( 1 ) , l ( 2 ) , l ( 3 ) l^{(1)},l^{(2)},l^{(3)} l(1),l(2),l(3)

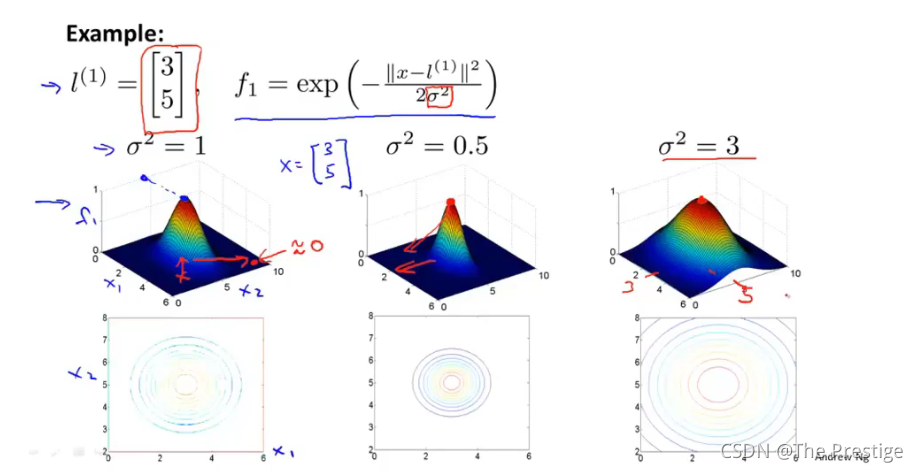

Kernels and Similarity

f 1 = s i m i l a r i t y ( x , l ( 1 ) ) = e x p ( ? ∣ ∣ x ? l ( 1 ) ∣ ∣ 2 2 σ 2 ) = exp ? ( ? ∑ j = 1 n ( x j ? l j ( 1 ) ) 2 2 σ 2 ) f_1=similarity(x,l^{(1)})=exp(-\frac{||x-l^{(1)}||^2}{2\sigma^2})=\operatorname { exp } ( - \frac { \sum _ { j = 1 } ^ { n } ( x _ { j } - l _j^{ ( 1 ) } ) ^ { 2 } } { 2 \sigma ^ { 2 } } ) f1?=similarity(x,l(1))=exp(?2σ2∣∣x?l(1)∣∣2?)=exp(?2σ2∑j=1n?(xj??lj(1)?)2?)

I f ?? x ≈ l ( 1 ) : If \; x\approx l^{(1)}: Ifx≈l(1): f 1 ≈ 1 f_1\approx 1 f1?≈1

I f ?? x ?? i f ?? f a r ?? f r o m ?? l ( 1 ) : If \;x\; if \; far \; from\;l^{(1)}: Ifxiffarfroml(1): f 1 ≈ 0 f_1\approx 0 f1?≈0

12-5 Kernels II

Choosing the landmarks

SVM with Kernels

Given ( x ( 1 ) , y ( 1 ) ) , ( x ( 2 ) , y ( 2 ) ) , ? ? , ( x ( m ) , y ( m ) ) ( x ^ { ( 1 ) } , y ^ { ( 1 ) } ) , ( x ^ { ( 2 ) } , y ^ { ( 2 ) } ) , \cdots , ( x ^ { ( m ) } , y ^ { ( m ) } ) (x(1),y(1)),(x(2),y(2)),?,(x(m),y(m))

choose l ( 1 ) = x ( 1 ) , l ( 2 ) = x ( 2 ) , ? ? , l ( m ) = x ( m ) l ^ { ( 1 ) } = x ^ { ( 1 ) } , l ^ { ( 2 ) } = x ^ { ( 2 ) } , \cdots , l ^ { ( m ) } = x ^ { ( m ) } l(1)=x(1),l(2)=x(2),?,l(m)=x(m)

Given example x:

? f 1 = s i m i l a r i t y ( x , l ( 1 ) ) f 2 = s i m i l a r i t y ( x , l ( 2 ) ) ? \begin{array}{l}f_1=similarity(x,l^{(1)})\\f_2=similarity(x,l^{(2)})\\\cdots\end{array} f1?=similarity(x,l(1))f2?=similarity(x,l(2))??

For training example ( x ( i ) , y ( i ) ) ? f ( i ) (x^{(i)},y^{(i)})\longrightarrow f^{(i)} (x(i),y(i))?f(i)

Hypothesis: Given x,compute features f ∈ R m + 1 f\in \mathbb{R}^{m+1} f∈Rm+1

Predict “y=1” if θ T f ≥ 0 \theta^T f\ge 0 θTf≥0

Training: m i n θ C ∑ i = 1 m [ y ( i ) c o s t 1 ( θ T f ( i ) ) + ( 1 ? y ( i ) ) c o s t 0 ( θ T f ( i ) ) ] + 1 2 ∑ i = 1 n θ j 2 min _ { \theta} C \sum _ { i = 1 } ^ { m }[ y ^ { ( i ) } {cost} _ { 1 } ( \theta ^ { T } f ^ { ( i ) } ) + ( 1 - y ^ { ( i ) } ) {cost} _ { 0 } ( \theta ^ { T } f ^ { ( i ) } ) ] + \frac { 1 } { 2 } \sum _ { i = 1 } ^ { n}\theta_j^2 minθ?C∑i=1m?[y(i)cost1?(θTf(i))+(1?y(i))cost0?(θTf(i))]+21?∑i=1n?θj2?

SVM parameters:

C ( = 1 λ ) C(=\frac{1}{\lambda}) C(=λ1?).

Large C: Lower bias, high variance.(small

λ

\lambda

λ)

Small C: Higher bias, low variance.(

l

a

r

g

e

??

λ

large\;\lambda

largeλ)

σ 2 \sigma^2 σ2

Large σ 2 \sigma^2 σ2: Features f i f_i fi?; vary more smoothly. Higher bias, lower variance.

Small σ 2 \sigma^2 σ2: Features f i f_i fi?; vary less smoothly. Lower bias, Higher variance.

12-6 Using an SVM

Use SVM software package (e.g. liblinear, libsvm, …) to solve for parameters θ \theta θ

Need to specify:

Choice of parameter C

Choice of kernel (similarity function):

? E.g. No kernel (“linear kernel”)

θ 0 + θ 1 x 1 + θ 2 x 2 + θ 3 + ? ≥ 0 ? n ?? l a r g e , m ?? s m a l l \theta _ { 0 } + \theta _ { 1 } x _ { 1 } + \theta _ { 2 } x _ { 2 } + \theta _ { 3 } + \cdots \ge 0 \longrightarrow n\;large,m\;small θ0?+θ1?x1?+θ2?x2?+θ3?+?≥0?nlarge,msmall

? predict:“y=1” if θ T x ≥ 0 \theta^Tx\ge0 θTx≥0

? Gaussian kernel:

f i = e x p ( ? ∣ ∣ x ? l ( i ) ∣ ∣ 2 2 σ 2 ) , w h e r e ?? l ( i ) = x ( i ) f_i=exp(-\frac{||x-l^{(i)}||^2}{2\sigma^2}),where\;l^{(i)}=x^{(i)} fi?=exp(?2σ2∣∣x?l(i)∣∣2?),wherel(i)=x(i)

? Need to choose σ 2 \sigma^2 σ2

Kernel (similarity) functions:

function f= kernel(x1,x2)

f=exp((-abs(x1-x2)^2)/(2*(sigma^2)))

return

Note: Do perform feature scaling before using the Gaussian kernel

=[](#4-3 Gradient descent in practice I: Feature Scaling)

Other choices of kernel

Note: Not all similarity functions similarity(x, l) make valid kernels (Need to satisfy technical condition called"mercer’s Theorem"to make sure SVM packages’ optimizations run correctly, and do not diverge)

Many of-the-shelf kernels avaliable:

- Polynomial kernel: k ( x , l ) = ( x T l ) 2 , ( x T l + 1 ) 3 , ( x T l + 5 ) 4 k(x,l)=(x^Tl)^2,(x^Tl+1)^3,(x^Tl+5)^4 k(x,l)=(xTl)2,(xTl+1)3,(xTl+5)4

- More esoteric: String kernel, chi-square kernel, histogram intersection kernel

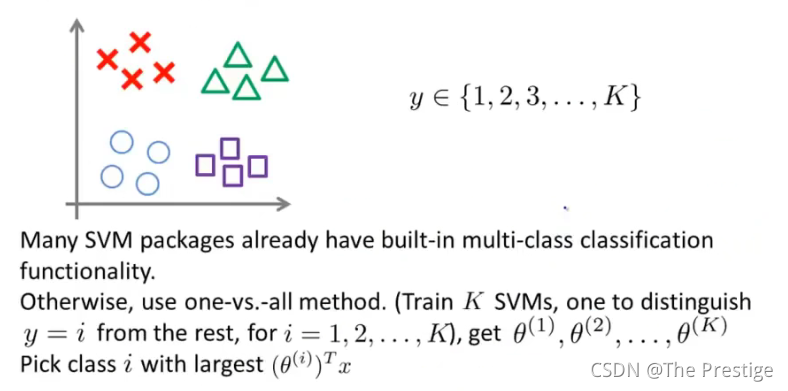

Multi-class classification

Logistic regression vs. SVMS

m=number of features(

x

∈

R

n

+

1

x\in \mathbb{R}^{n+1}

x∈Rn+1), m =number of training examples

If n is large(relative to m)

Use logistic regression, or SVM without a kernel (“linear kernel”)

If n is small, m is intermediate:

Use SVM with Gaussian kernel

If m is small, m is large:

Create/add more features, then use logistic regression or SVM without a kernel

Neural network likely to work well for most of these settings, but may be slower to train