2.1 Introduction to Linear Regression

Learning objectives

- Understand the application scenarios of linear regression

- Know the definition of linear regression

1 Application scenarios of linear regression

- House price prediction

- Sales revenue prediction

- Loan amount prediction

2 What is linear regression

2.1 Definition and formula

Linear regression is an analysis method that uses a regression equation (a function) to model the relationship between one or more independent variables (feature values) and a dependent variable (the target value).

- With only one independent variable it is called univariate regression; with more than one it is called multiple regression.

General formula: $h(w) = w_1x_1 + w_2x_2 + w_3x_3 + \dots + b = w^Tx + b$,

where $w$ and $x$ can be read as vectors. If the bias $b$ is folded into the weights, they become $w = \begin{pmatrix} b \\ w_1 \\ w_2 \\ \vdots \end{pmatrix}$ and $x = \begin{pmatrix} 1 \\ x_1 \\ x_2 \\ \vdots \end{pmatrix}$, so that $h(w) = w^Tx$.

- Example: linear regression in matrix notation

$$\begin{cases}1 \times x_1 + x_2 = 2 \\ 0 \times x_1 + x_2 = 2 \\ 2 \times x_1 + x_2 = 3\end{cases}$$

Written in matrix form:

$$\begin{bmatrix}1 & 1 \\ 0 & 1 \\ 2 & 1\end{bmatrix}\begin{bmatrix}x_1 \\ x_2\end{bmatrix} = \begin{bmatrix}2 \\ 2 \\ 3\end{bmatrix}$$

Viewed column by column:

$$\begin{bmatrix}1 \\ 0 \\ 2\end{bmatrix}x_1 + \begin{bmatrix}1 \\ 1 \\ 1\end{bmatrix}x_2 = \begin{bmatrix}2 \\ 2 \\ 3\end{bmatrix}$$

How should this be read? Consider two examples:

- Final grade = 0.7 × exam score + 0.3 × coursework score

- House price = 0.02 × distance to the city centre + 0.04 × urban nitric oxide concentration + (-0.12 × average price of owner-occupied homes) + 0.254 × town crime rate

In both examples a relationship is established between the feature values and the target value; this relationship can be understood as a linear model.
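As a minimal sketch of the general formula, the snippet below evaluates $h(w) = w^Tx + b$ for the final-grade example with NumPy. The column order (coursework score first, exam score second), the zero bias and all variable names are assumptions made for illustration; the eight rows are the sample data used in the API example of the next section.

```python
import numpy as np

# Each row is one student: [coursework score, exam score] (assumed column order).
X = np.array([[80, 86], [82, 80], [85, 78], [90, 90],
              [86, 82], [82, 90], [78, 80], [92, 94]], dtype=float)

w = np.array([0.3, 0.7])  # weights: 0.3 x coursework + 0.7 x exam
b = 0.0                   # bias term (assumed to be zero here)


def h(X, w, b):
    """Linear model h(w) = w^T x + b, applied to every row of X."""
    return X @ w + b


final_grades = h(X, w, b)
print(final_grades)
```

The same function works for any number of features, as long as `w` has one entry per column of `X`.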

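2.2 Analysing the relationship between features and target

Linear regression involves two kinds of model: **one is a linear relationship, the other a non-linear relationship.** Because only a plane can be drawn easily, the examples here use one or two features.

- Linear relationship

- Univariate linear relationship:

- Multivariate linear relationship

Note: a single feature and the target form a straight line; two features and the target form a plane.

Higher-dimensional cases do not need to be visualised; it is enough to remember this relationship.

- Non-linear relationship

Note: why does such a relationship arise? What is the reason?

If the relationship is non-linear, the regression equation can be understood as:

$w_1x_1+w_2x_2^2+w_3x_3^2$

Summary

- Definition of linear regression [understand]

- An analysis method that uses a regression equation (a function) to model the relationship between one or more independent variables (feature values) and a dependent variable (the target value)

- Categories of linear regression [know]

- Linear relationship

- Non-linear relationship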

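2.2 First Steps with the Linear Regression API

Learning objectives

- Know the basic usage of the linear regression API

1 Linear regression API

- sklearn.linear_model.LinearRegression()

- LinearRegression.coef_: regression coefficients

- LinearRegression.intercept_: intercept

2 Example

2.1 Steps

- 1. Get the dataset

- 2. Basic data processing (skipped in this example)

- 3. Feature engineering (skipped in this example)

- 4. Machine learning

- 5. Model evaluation (skipped in this example)

2.2 Code

- Import the module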

from sklearn.linear_model import LinearRegression

- Build the dataset

x = [[80, 86],

[82, 80],

[85, 78],

[90, 90],

[86, 82],

[82, 90],

[78, 80],

[92, 94]]

y = [84.2, 80.6, 80.1, 90, 83.2, 87.6, 79.4, 93.4]

- Machine learning: model training

# Instantiate the estimator
estimator = LinearRegression()

# Train with fit() to obtain the model
estimator.fit(x, y)

# Print the regression coefficients (one per feature)
print(estimator.coef_)

# Print the intercept
print(estimator.intercept_)

# Predict with the trained model
print(estimator.predict([[100, 80]]))

Summary

- sklearn.linear_model.LinearRegression()

- LinearRegression.coef_: regression coefficients

- LinearRegression.intercept_: intercept

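2.3 Mathematics: Differentiation

Learning objectives

- Know the derivatives of common functions

- Know the arithmetic rules for derivatives

1 Review of the derivative

- The derivative, also called the value of the derivative function or the differential quotient, is a fundamental concept in calculus.

- When the independent variable x of a function y=f(x) receives an increment Δx at a point x0, and the ratio of the output increment Δy to Δx has a limit a as Δx tends to 0, then a is the derivative at x0, written f′(x0) or df(x0)/dx.

- The derivative is a local property of a function. The derivative at a point describes the rate of change of the function near that point.

- If the variable and the values of the function are real numbers, the derivative at a point is the slope of the tangent to the function's curve at that point.

- In essence the derivative is a local linear approximation of the function, defined through a limit. In kinematics, for example, the derivative of displacement with respect to time is the instantaneous velocity.

- Not every function has a derivative, and a function need not have a derivative at every point.

- If the derivative of a function exists at a point, the function is differentiable there; otherwise it is not differentiable.

- A differentiable function is always continuous; a discontinuous function is never differentiable.

- For a differentiable function f(x), x↦f′(x) is itself a function, called the derivative function of f(x) (the derivative for short). Finding the derivative of a known function at a point, or its derivative function, is called differentiation.

- Differentiation is essentially the process of taking a limit, and the arithmetic rules for derivatives follow from the arithmetic rules for limits.

- Conversely, a known derivative function can be used to recover the original function.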

2 Derivatives of common functions

| Formula | Example |
|---|---|
| $(C)^\prime=0$ | $(5)^\prime=0$, $(10)^\prime=0$ |
| $(x^\alpha)^\prime=\alpha x^{\alpha-1}$ | $(x^3)^\prime=3x^2$, $(x^5)^\prime=5x^4$ |
| $(a^x)^\prime=a^x\ln a$ | $(2^x)^\prime=2^x\ln 2$, $(7^x)^\prime=7^x\ln 7$ |
| $(e^x)^\prime=e^x$ | $(e^x)^\prime=e^x$ |
| $(\log_a x)^\prime=\frac{1}{x\ln a}$ | $(\log_{10} x)^\prime=\frac{1}{x\ln 10}$, $(\log_6 x)^\prime=\frac{1}{x\ln 6}$ |
| $(\ln x)^\prime=\frac{1}{x}$ | $(\ln x)^\prime=\frac{1}{x}$ |
| $(\sin x)^\prime=\cos x$ | $(\sin x)^\prime=\cos x$ |
| $(\cos x)^\prime=-\sin x$ | $(\cos x)^\prime=-\sin x$ |

3 Arithmetic rules for derivatives

| Formula | Example |
|---|---|
| $[u(x)\pm v(x)]^\prime=u^\prime(x)\pm v^\prime(x)$ | $(e^x+4\ln x)^\prime=(e^x)^\prime+(4\ln x)^\prime=e^x+\frac{4}{x}$ |
| $[u(x)\cdot v(x)]^\prime=u^\prime(x)\cdot v(x)+u(x)\cdot v^\prime(x)$ | $(\sin x\cdot\ln x)^\prime=\cos x\cdot\ln x+\sin x\cdot\frac{1}{x}$ |
| $\left[\frac{u(x)}{v(x)}\right]^\prime=\frac{u^\prime(x)\cdot v(x)-u(x)\cdot v^\prime(x)}{v^2(x)}$ | $\left(\frac{e^x}{\cos x}\right)^\prime=\frac{e^x\cdot\cos x-e^x\cdot(-\sin x)}{\cos^2 x}$ |
| $\{g[h(x)]\}^\prime=g^\prime(h)\cdot h^\prime(x)$ | $(\sin 2x)^\prime=\cos 2x\cdot(2x)^\prime=2\cos(2x)$ |

4 Exercises

- $y = x^3-2x^2+\sin x$, find $f^\prime(x)$

- $(e^x+4\ln x)^\prime$

- $(\sin x\cdot\ln x)^\prime$

- $\left(\frac{e^x}{\cos x}\right)^\prime$

- $y=\sin 2x$, find $\frac{dy}{dx}$

- $(e^{2x})^\prime$

Answers:

- $y^\prime=(x^3-2x^2+\sin x)^\prime=(x^3)^\prime-(2x^2)^\prime+(\sin x)^\prime=3x^2-4x+\cos x$

- $(e^x+4\ln x)^\prime=(e^x)^\prime+(4\ln x)^\prime=e^x+\frac{4}{x}$

- $(\sin x\cdot\ln x)^\prime=(\sin x)^\prime\cdot\ln x+\sin x\cdot(\ln x)^\prime=\cos x\cdot\ln x+\sin x\cdot\frac{1}{x}$

- $\left(\frac{e^x}{\cos x}\right)^\prime=\frac{(e^x)^\prime\cdot\cos x-e^x\cdot(\cos x)^\prime}{\cos^2 x}=\frac{e^x\cdot\cos x-e^x\cdot(-\sin x)}{\cos^2 x}$

- $(\sin 2x)^\prime=\cos 2x\cdot(2x)^\prime=2\cos(2x)$

- $(e^{2x})^\prime=e^{2x}\cdot(2x)^\prime=2e^{2x}$
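One way to sanity-check hand-derived results like these is to compare them with a numerical derivative. The sketch below uses a central difference; the helper name `numeric_derivative`, the step size `h` and the test point `x0 = 0.7` are arbitrary choices for illustration, not part of the course material.

```python
import math


def numeric_derivative(f, x, h=1e-6):
    """Central-difference approximation of f'(x)."""
    return (f(x + h) - f(x - h)) / (2 * h)


# (function, hand-derived derivative) pairs taken from the six exercises
exercises = [
    (lambda x: x**3 - 2 * x**2 + math.sin(x),
     lambda x: 3 * x**2 - 4 * x + math.cos(x)),
    (lambda x: math.exp(x) + 4 * math.log(x),
     lambda x: math.exp(x) + 4 / x),
    (lambda x: math.sin(x) * math.log(x),
     lambda x: math.cos(x) * math.log(x) + math.sin(x) / x),
    (lambda x: math.exp(x) / math.cos(x),
     lambda x: (math.exp(x) * math.cos(x) + math.exp(x) * math.sin(x)) / math.cos(x) ** 2),
    (lambda x: math.sin(2 * x),
     lambda x: 2 * math.cos(2 * x)),
    (lambda x: math.exp(2 * x),
     lambda x: 2 * math.exp(2 * x)),
]

x0 = 0.7  # test point where ln x and 1 / cos x are both defined
max_gap = max(abs(numeric_derivative(f, x0) - df(x0)) for f, df in exercises)
print(max_gap)  # largest disagreement between numeric and analytic derivative
```

A small gap only shows agreement at one point; it is a quick check, not a proof.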

Summary

- Derivatives of common functions and the arithmetic rules for derivatives

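2.4 Loss and Optimisation in Linear Regression

Learning objectives

- Know the loss function used in linear regression

- Know how the normal equation optimises the loss function

- Know how gradient descent optimises the loss function

How to determine the regression equation

- Can the regression equation produced by training fit every sample exactly?

- In general it cannot; some error remains

- Goal: make the error between the equation's output and the true values as small as possible

- Since error exists, how do we measure it?

1 Loss function

The total loss is defined as: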

$$\begin{aligned}J(w)&=(h(x_{1})-y_{1})^2+(h(x_{2})-y_{2})^2+\dots+(h(x_{m})-y_{m})^2 \\ &=\sum_{i=1}^m(h(x_{i})-y_{i})^2\end{aligned}$$

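- $y_i$ is the true value of the $i$-th training sample

- $h(x_i)$ is the prediction function applied to the feature values of the $i$-th training sample

- An objective function is the functional relationship between the quantity of interest (one variable) and the factors that affect it (other variables); put simply, it is the function you are solving for. The loss above plays that role here.

- This approach is also known as the least squares method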
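To make the total loss concrete, here is a small sketch that evaluates $J(w)=\sum_i (h(x_i)-y_i)^2$ with NumPy for two candidate weight vectors. The data are the eight samples from the API example; the function name `total_loss` and the two candidate weight vectors are assumptions chosen for illustration.

```python
import numpy as np

X = np.array([[80, 86], [82, 80], [85, 78], [90, 90],
              [86, 82], [82, 90], [78, 80], [92, 94]], dtype=float)
y = np.array([84.2, 80.6, 80.1, 90, 83.2, 87.6, 79.4, 93.4])


def total_loss(w, b, X, y):
    """Sum of squared differences between predictions X @ w + b and targets y."""
    residuals = X @ w + b - y
    return float(np.sum(residuals ** 2))


loss_good = total_loss(np.array([0.3, 0.7]), 0.0, X, y)  # weights that match the data
loss_poor = total_loss(np.array([0.5, 0.5]), 0.0, X, y)  # equal weights fit worse
print(loss_good, loss_poor)
```

The better the weights, the smaller the loss, which is what the optimisation methods in the next part exploit.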

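How can this loss be reduced so that predictions become more accurate? Machine learning is often described as learning automatically, and linear regression shows this well: the total loss can be minimised with an optimisation method (in practice, by differentiation).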

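2 Optimisation algorithms

How do we find the W in the model that minimises the loss? (The aim is to find the value of W at which the loss is smallest.)

- Two optimisation algorithms are commonly used for linear regression

- Normal equation

- Gradient descent

2.1 Normal equation

2.1.1 What is the normal equation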

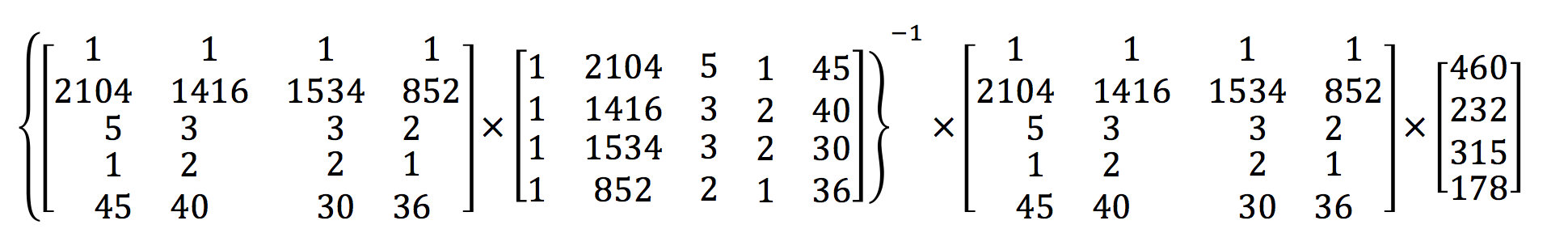

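$w=(X^TX)^{-1}X^Ty$

Interpretation: X is the feature matrix and y is the target vector; the best result is obtained directly in one step.

Drawback: when there are too many features, or they are too complex, solving is very slow and may not yield a result.

2.1.2 Worked example with the normal equation

Take the following data as an example:

That is:

Solving for the parameters with the normal equation: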
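The following is only a sketch of the same kind of computation, applied to the eight-sample dataset from the API example rather than to the data of this worked example. It solves $w=(X^TX)^{-1}X^Ty$ with NumPy; the leading column of ones (so that the first weight acts as the bias) and all variable names are assumptions.

```python
import numpy as np

features = np.array([[80, 86], [82, 80], [85, 78], [90, 90],
                     [86, 82], [82, 90], [78, 80], [92, 94]], dtype=float)
y = np.array([84.2, 80.6, 80.1, 90, 83.2, 87.6, 79.4, 93.4])

# Prepend a column of ones so the first weight plays the role of the bias b.
X = np.column_stack([np.ones(len(features)), features])

# Solve (X^T X) w = X^T y rather than forming the inverse explicitly.
w = np.linalg.solve(X.T @ X, X.T @ y)
print(w)  # [bias, weight of feature 1, weight of feature 2]
```

Every target in this dataset equals 0.3 × the first feature + 0.7 × the second, so the solution should come out close to a bias of 0 and weights of 0.3 and 0.7. Using `np.linalg.solve` instead of an explicit inverse is a common numerical choice; it computes the same quantity.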

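2.2 Gradient descent (Gradient Descent)

2.2.1 What is gradient descent

The basic idea of gradient descent can be compared to walking down a mountain.

Picture the following scene:

A person is stuck on a mountain and needs to get down (i.e. find the lowest point, the valley). Thick fog on the mountain makes visibility very poor.

The path down cannot be seen, so the person has to use information about the immediate surroundings to find the way. This is where the gradient descent algorithm helps.

Concretely: taking the current position as the reference, find the steepest direction at that position and walk towards where the height decreases. (Likewise, if the goal were to climb to the summit, one would walk up along the steepest direction.) After each stretch, apply the same method again, and eventually the valley is reached.

The basic process of gradient descent closely resembles this descent.

First, we have a differentiable function, which represents the mountain.

Our goal is to find the minimum of this function, that is, the foot of the mountain.

Following the scene above, the fastest way down is to find the steepest direction at the current position and walk down along it. For a function, this means finding the gradient at the given point and moving in the opposite direction, which makes the function value fall fastest, because the gradient is the direction in which the function value changes fastest.

By applying this repeatedly, computing the gradient again and again, we reach a local minimum, just like walking down the mountain. Computing the gradient is what determines the steepest direction; it is the means of measuring direction in the scene.

2.2.2 The concept of the gradient

The gradient is a very important concept in calculus.

- For a function of one variable, the gradient is simply the derivative of the function: the slope of the tangent at a given point.

- For a function of several variables, the gradient is a vector; a vector has a direction, and the direction of the gradient is the direction in which the function rises fastest at the given point.

- In calculus, taking the partial derivative ∂ of a multivariate function with respect to each parameter and writing the results as a vector gives the gradient.

This explains why we go to such lengths to compute the gradient. To reach the foot of the mountain we need, at every step, the steepest direction at that moment, and the gradient provides exactly that. The gradient points in the direction of fastest ascent at a given point, so its opposite is the direction of fastest descent, which is what we need. By continuing to move against the gradient we arrive at a local minimum.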

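2.2.3 Gradient descent examples

- 1. Gradient descent for a function of one variable

Suppose we have a function of one variable: $J(\theta) = \theta^2$

Its derivative: $J^\prime(\theta) = 2\theta$

Initialisation, the starting point (the known starting position): $\theta^0 = 1$

Learning rate (step size): $\alpha = 0.4$

The iterations of gradient descent are then:

$$\begin{aligned}\theta^0 &= 1 \\ \theta^1 &= \theta^0 - \alpha J^\prime(\theta^0) = 1 - 0.4 \times 2 = 0.2 \\ \theta^2 &= \theta^1 - \alpha J^\prime(\theta^1) = 0.2 - 0.4 \times 0.4 = 0.04 \\ \theta^3 &= 0.008 \\ \theta^4 &= 0.0016\end{aligned}$$

After four iterations, that is, four steps, we have essentially reached the lowest point of the function, the foot of the mountain.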
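The single-variable iteration can be reproduced in a few lines of Python. This is a minimal sketch; the function name `gradient_descent_1d` and its signature are made up for the illustration.

```python
def gradient_descent_1d(grad, theta0, alpha, steps):
    """Return the list [theta^0, theta^1, ..., theta^steps]."""
    thetas = [theta0]
    for _ in range(steps):
        # Move against the derivative, scaled by the learning rate.
        thetas.append(thetas[-1] - alpha * grad(thetas[-1]))
    return thetas


# J(theta) = theta^2, so J'(theta) = 2 * theta
path = gradient_descent_1d(grad=lambda t: 2 * t, theta0=1.0, alpha=0.4, steps=4)
print(path)
```

With $\alpha = 0.4$ each step multiplies $\theta$ by $1 - 2\alpha = 0.2$, which is why the iterates shrink so quickly.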

- 2. Gradient descent for a function of several variables

Suppose we have the objective function: $J(\theta) = \theta_{1}^{2} + \theta_{2}^{2}$

We want to find its minimum by gradient descent. By inspection the minimum is at the point (0, 0), but we will work towards it step by step with the gradient descent algorithm.

Assume the starting point is:

$\theta^{0} = (1, 3)$

Initial learning rate (step size): $\alpha = 0.1$

The gradient of the function is: $\nabla J(\theta) = \langle 2\theta_{1}, 2\theta_{2} \rangle$

Iterating several times:

$$
\begin{aligned}
\theta^0 &= (1, 3) \\
\theta^1 &= \theta^0-\alpha\nabla J(\theta^0) \\
&=(1,3)-0.1(2,6) \\
&=(0.8, 2.4) \\
\theta^2 &= (0.8, 2.4)-0.1(1.6, 4.8) \\
&=(0.64, 1.92) \\
\theta^3 &= (0.512, 1.536) \\
\theta^4 &= (0.4096, 1.2288) \\
&\vdots \\
\theta^{10} &= (0.1073741824, 0.3221225472) \\
&\vdots \\
\theta^{50} &\approx (1.43\times10^{-5}, 4.28\times10^{-5}) \\
&\vdots \\
\theta^{100} &\approx (2.04\times10^{-10}, 6.11\times10^{-10})
\end{aligned}
$$

The iterates are already very close to the minimum of the function.
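The same iteration for $J(\theta) = \theta_1^2 + \theta_2^2$ can be sketched in plain Python. The helper name `gradient_descent` and the choice to keep the whole history are assumptions made so that individual iterates can be inspected.

```python
def gradient_descent(grad, theta0, alpha, steps):
    """Run `steps` updates theta <- theta - alpha * grad(theta); return all iterates."""
    theta = list(theta0)
    history = [tuple(theta)]
    for _ in range(steps):
        g = grad(theta)
        theta = [t - alpha * gi for t, gi in zip(theta, g)]
        history.append(tuple(theta))
    return history


# Gradient of J(theta) = theta_1^2 + theta_2^2 is (2 * theta_1, 2 * theta_2).
history = gradient_descent(lambda th: [2 * t for t in th], (1.0, 3.0), alpha=0.1, steps=100)
for k in (1, 2, 3, 4, 10, 50, 100):
    print(k, history[k])
```

Each update multiplies both coordinates by $1 - 2\alpha = 0.8$, so after $k$ steps the iterate is $0.8^k \cdot (1, 3)$.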

2.2.4 The gradient descent (Gradient Descent) formula

$\theta_{i+1} = \theta_{i} - \alpha\frac{\partial}{\partial\theta_{i}}J(\theta)$

- 1) What does $\alpha$ mean?

$\alpha$ is called the learning rate or step size in gradient descent. It controls how far each step moves. The step must not be too large, or we stride past the point where the loss function is smallest; it must not be too small either, or the sun sets before we reach the foot of the mountain. Choosing $\alpha$ is therefore important in gradient descent: too small and the minimum is reached very slowly or not at all, too large and the minimum is overshot.

- 2) Why is the gradient multiplied by a minus sign?

The minus sign means moving in the direction opposite to the gradient. As noted earlier, the gradient points in the direction in which the function rises fastest at the current point. We want the direction of fastest descent, which is the negative gradient, hence the minus sign.

Gradient descent is the optimisation algorithm that gives regression its ability to "learn automatically".

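3 Gradient descent versus the normal equation

3.1 Comparing the two methods

| Gradient descent | Normal equation |
|---|---|
| Needs a learning rate | No learning rate needed |
| Solved iteratively | Solved in a single computation |
| Usable when the number of features is large (more than 10,000) | Requires solving the equation; time complexity is high, $O(n^3)$ |

From the introduction above, least squares looks concise and efficient, and seems far more convenient than an iterative method such as gradient descent. It does, however, have limitations.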

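- First, least squares needs the inverse of $X^TX$, which may not exist; in that case least squares cannot be applied directly.

- Gradient descent is then needed. Alternatively, the sample data can be cleaned to remove redundant features so that the determinant of $X^TX$ is non-zero, after which least squares can be used again.

- Second, when the number of features n is very large, inverting $X^TX$ (an n×n matrix) is very time-consuming or even infeasible.

- Iterative methods such as gradient descent still work in this case.

- How large must n be before least squares becomes unsuitable? Without substantial distributed computing resources, an iterative method is advisable above roughly 10,000 features. Another option is to reduce the feature dimension with principal component analysis and then use least squares.

- Third, if the fitted function is not linear, least squares cannot be used directly; the problem must first be transformed into a linear one. Gradient descent can still be used.

- Fourth, some special cases:

- When the number of samples m is smaller than the number of features n, the system is underdetermined and the usual optimisation methods cannot fit the data.

- When m equals n, the parameters can be found by solving the system of equations.

- When m is greater than n, the system is overdetermined; this is the usual setting for least squares.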

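3.2 Choosing an algorithm:

- Small-scale data:

- Normal equation: LinearRegression

- Ridge regression: Ridge

- Large-scale data:

- Gradient descent: SGDRegressor

Summary

- Loss function [know]

- Least squares

- Optimisation methods for linear regression [know]

- Normal equation

- Gradient descent

- Normal equation: solved in one step [know]

- Only suitable when the numbers of samples and features are fairly small

- Gradient descent: iterative [know]

- The concept of the gradient

- Single variable: the slope of the tangent

- Multiple variables: a vector

- Parameters to watch in gradient descent

- α: the step size

- Step too small: the descent is too slow

- Step too large: the minimum is easily overshot

- Why the gradient takes a minus sign

- The gradient is the direction of fastest ascent; the minus sign gives the direction of fastest descent

- Choosing between gradient descent and the normal equation [know]

- Small-scale data:

- Normal equation: LinearRegression

- Ridge regression: Ridge

- Large-scale data:

- Gradient descent: SGDRegressor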

2.5 Gradient Descent Methods

Learning objectives

- Understand the principle of full gradient descent

- Understand the principle of stochastic gradient descent

- Understand the principle of stochastic average gradient descent

- Understand the principle of mini-batch gradient descent

1 Overview of gradient descent algorithms

The common gradient descent algorithms are:

- Full gradient descent (FG),

- Stochastic gradient descent (SG),

- Mini-batch gradient descent,

- Stochastic average gradient descent (SAG)

All of them adjust the weight vector by computing a gradient for each weight and updating the weights so that the objective function becomes as small as possible. They differ in how they use the samples.

1.1 Full gradient descent (FG)

Batch gradient descent is the most common form of gradient descent: every parameter update uses all of the samples.

The errors of all training samples are computed, summed and averaged to form the objective function.

The weight vector moves in the direction opposite to its gradient, which reduces the current objective function the most.

The gradient of the loss function with respect to the parameters $\theta$ is computed over the entire training set:

$$\theta_{i+1} = \theta_i - \alpha\sum_{j=0}^{m}(h_{\theta}(x_0^{(j)},x_1^{(j)},\cdots,x_n^{(j)})-y_j)x_i^{(j)}$$

With m samples, the gradient here uses the gradient data of all m samples.

Note:

- Because every update requires computing all gradients over the whole dataset, batch gradient descent is slow, and it cannot handle datasets that exceed memory.

- Batch gradient descent also cannot update the model online, i.e. new samples cannot be added while it is running.

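1.2 Stochastic gradient descent (SG)

FG has to compute the error of every sample for each weight update, and real problems often have hundreds of millions of training samples, so it is inefficient and can easily get stuck in a local optimum. Stochastic gradient descent was proposed for this reason.

In each round the objective function is no longer the error over all samples but the error of a single sample: the gradient of one sample's objective function is used to update the weights, then the next sample is taken and the process repeats, until the loss stops decreasing or falls below a tolerable threshold.

The procedure is simple and efficient, and it usually does a good job of keeping the iterations from converging to a local optimum. Its iteration takes the form

$$\theta_{i+1}=\theta_i-\alpha(h_{\theta}(x_0^{(j)},x_1^{(j)},\cdots,x_n^{(j)})-y_j)x_i^{(j)}$$

However, because SG uses only one sample per iteration, a noisy sample can easily drive it into a local optimum.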

1.3 Mini-batch gradient descent (mini-batch)

Mini-batch gradient descent is a compromise between FG and SG that retains some of the advantages of both.

In each round a small set of samples is drawn at random from the training set, and the weights are updated with an FG step on that small set.

The number of samples in the drawn set is called batch_size; it is usually a power of 2, which suits GPU acceleration.

In particular, batch_size=1 gives SG and batch_size=n gives FG. Its iteration takes the form

$$\theta_{i+1}=\theta_i-\alpha\sum_{j=t}^{t+x-1}(h_{\theta}(x_0^{(j)},x_1^{(j)},\cdots,x_n^{(j)})-y_j)x_i^{(j)}$$

In this formula, x samples are selected from the m samples for each iteration (1<x<m).
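The relation between FG, SG and mini-batch can be sketched with one function whose `batch_size` argument selects the variant. Everything here is illustrative: the synthetic noise-free data, the names `minibatch_gd` and `true_theta`, and the choice to average the gradient over the batch (the formula above sums it) so that one learning rate works for every batch size.

```python
import numpy as np


def minibatch_gd(X, y, batch_size, lr=0.1, epochs=200, seed=0):
    """Least-squares fit by mini-batch gradient descent.

    batch_size=1 corresponds to SG, batch_size=len(X) to FG.
    """
    rng = np.random.default_rng(seed)
    m, n = X.shape
    theta = np.zeros(n)
    for _ in range(epochs):
        order = rng.permutation(m)  # reshuffle the samples every epoch
        for start in range(0, m, batch_size):
            idx = order[start:start + batch_size]
            residual = X[idx] @ theta - y[idx]
            grad = X[idx].T @ residual / len(idx)  # averaged batch gradient (assumption)
            theta -= lr * grad
    return theta


# Synthetic data: y = 1 + 2 * x1 - 3 * x2, with a leading column of ones for the bias.
data_rng = np.random.default_rng(42)
features = data_rng.normal(size=(200, 2))
X = np.column_stack([np.ones(200), features])
true_theta = np.array([1.0, 2.0, -3.0])
y = X @ true_theta

theta_minibatch = minibatch_gd(X, y, batch_size=16)
theta_full = minibatch_gd(X, y, batch_size=len(X))
print(theta_minibatch, theta_full)
```

On this easy, noise-free problem both settings recover the generating weights; the practical differences (cost per update, noise in the path) show up on larger or noisier data.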

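1.4 Stochastic average gradient descent (SAG)

SG avoids the high computational cost, but on large training sets its results are often unsatisfactory, because each gradient update is completely unrelated to the data and gradients of the previous round.

SAG overcomes this by keeping an old gradient in memory for every sample. It randomly picks the i-th sample and refreshes that sample's gradient while all other stored gradients stay unchanged, then takes the average of all stored gradients and uses it to update the parameters.

Each round therefore computes the gradient of only one sample, so the cost matches SG, yet convergence is much faster.

Its iteration takes the form:

$$\theta_{i+1}=\theta_i-\frac{\alpha}{n}(h_\theta(x^{(j)}_0,x^{(j)}_1,\cdots,x^{(j)}_n)-y_j)x_i^{(j)}$$

- SGD subtracts the step size times the gradient from the current weights to obtain the new weights. The "A" in SAG stands for average: at iteration k, the update uses the average of the current gradient and the previous n-1 gradients, so the current weights are reduced by the step size times the average of the most recent n gradients.

- n is chosen by the user; with n=1 this is ordinary SGD.

- The idea is simple: it adds some determinism to the randomness, much as mini-batch SGD does. Unlike mini-batch SGD, SAG does not evaluate more samples; it only reuses gradients computed earlier, so the cost per iteration is far lower than mini-batch SGD and comparable to SGD. In terms of results, SAG converges much faster than SGD. The paper linked below gives a detailed description and proof.

- SAG paper: https://arxiv.org/pdf/1309.2388.pdf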
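As a rough sketch of the "one stored gradient per sample" description, the code below keeps a table of the most recent gradient of every sample and steps along the average of that table. The function name `sag`, the synthetic data and the conservative step size $1/(16L)$, with $L$ taken as the largest squared row norm, are assumptions for this illustration and not a reference implementation of the paper.

```python
import numpy as np


def sag(X, y, epochs=300, seed=0):
    """Stochastic average gradient for least squares (illustrative sketch)."""
    rng = np.random.default_rng(seed)
    m, n = X.shape
    # Conservative step size 1 / (16 L); L = largest squared row norm (assumption).
    lr = 1.0 / (16.0 * np.max(np.sum(X ** 2, axis=1)))
    theta = np.zeros(n)
    stored = np.zeros((m, n))  # most recent gradient computed for each sample
    grad_sum = np.zeros(n)     # running sum of all stored gradients
    for _ in range(epochs * m):
        j = rng.integers(m)                       # pick one sample at random
        new_grad = (X[j] @ theta - y[j]) * X[j]   # gradient of that sample only
        grad_sum += new_grad - stored[j]          # refresh its entry in the sum
        stored[j] = new_grad
        theta -= lr * grad_sum / m                # step along the average gradient
    return theta


# Synthetic data: y = 1 + 2 * x1 - 3 * x2, with a leading column of ones for the bias.
data_rng = np.random.default_rng(42)
features = data_rng.normal(size=(100, 2))
X = np.column_stack([np.ones(100), features])
true_theta = np.array([1.0, 2.0, -3.0])
y = X @ true_theta

theta_sag = sag(X, y)
print(theta_sag)
```

Only one sample's gradient is computed per update, as in SG, but the update direction uses information from all samples through the stored table, at the price of extra memory.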

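Summary

- Full gradient descent (FG) [understand]

- Averages the error over all samples and uses it as the objective function

- Stochastic gradient descent (SG) [understand]

- Considers only one randomly chosen sample at a time

- Mini-batch gradient descent (mini-batch) [understand]

- Considers a subset of the samples at a time

- Stochastic average gradient descent (SAG) [understand]

- Keeps an average for every sample and refers to that average in later computations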

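2.6 Linear Regression Case Study

Learning objectives

- Understand the normal-equation API and its common parameters

- Understand the gradient-descent API and its common parameters

- Know how to evaluate a linear regression model

1 Linear regression API

- sklearn.linear_model.LinearRegression(fit_intercept=True)

- Optimised with the normal equation

- Parameters

- fit_intercept: whether to fit the bias

- Attributes

- LinearRegression.coef_: regression coefficients

- LinearRegression.intercept_: bias

- sklearn.linear_model.SGDRegressor(loss="squared_loss", fit_intercept=True, learning_rate="invscaling", eta0=0.01)

- The SGDRegressor class implements stochastic gradient descent learning; it supports different loss functions and regularisation penalties for fitting linear regression models.

- Parameters:

- loss: type of loss

- loss="squared_loss": ordinary least squares (renamed "squared_error" in scikit-learn 1.0; the old name was removed in 1.2)

- fit_intercept: whether to fit the bias

- learning_rate : string, optional

- Learning rate schedule

- 'constant': eta = eta0

- 'optimal': eta = 1.0 / (alpha * (t + t0))

- 'invscaling': eta = eta0 / pow(t, power_t) [default]

- power_t=0.25: defined in the parent class

- For a constant learning rate, use learning_rate='constant' and set the rate with eta0.

- Attributes:

- SGDRegressor.coef_: regression coefficients

- SGDRegressor.intercept_: bias

sklearn provides both implementations; choose whichever suits the task.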

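2 Case study: Boston house price prediction

2.1 Background

- About the data

The given features are attributes that experts have identified as influencing house prices. At this stage we do not need to investigate whether each feature is useful; we simply use them. Later on, many features will have to be found and quantified by ourselves.

2.2 Analysis

The features in a regression problem are on different scales, which can strongly affect the result, so standardisation is needed.

- Split the data and standardise it

- Regression prediction

- Evaluate the linear regression algorithm

2.3 Evaluating regression performance

Mean squared error (MSE) as the evaluation metric: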

$$MSE = \frac{1}{m}\sum_{i=1}^{m}(y_i-\hat{y}_i)^2$$

Note: $y_i$ is the true value and $\hat{y}_i$ is the predicted value.

- sklearn.metrics.mean_squared_error(y_true, y_pred)

- Mean squared error regression loss

- y_true: true values

- y_pred: predicted values

- return: the result as a float
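To show what the metric computes, here is a small NumPy sketch of the MSE formula itself. The function name `mean_squared_error_np` and the four sample values are made up for the illustration; in practice `sklearn.metrics.mean_squared_error` would be used.

```python
import numpy as np


def mean_squared_error_np(y_true, y_pred):
    """Average of the squared differences between true and predicted values."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    return float(np.mean((y_true - y_pred) ** 2))


# Squared errors: 0.25, 0.25, 0 and 1, so the mean is 1.5 / 4.
mse = mean_squared_error_np([3, -0.5, 2, 7], [2.5, 0.0, 2, 8])
print(mse)
```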

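2.4 Implementation

Imports

# Note: load_boston was removed in scikit-learn 1.2, so this example needs an older release.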

from sklearn.datasets import load_boston

from sklearn.model_selection import train_test_split

from sklearn.preprocessing import StandardScaler

from sklearn.metrics import mean_squared_error

from sklearn.linear_model import SGDRegressor

from sklearn.linear_model import LinearRegression

Normal equation

# 1. Load the data
data = load_boston()

# 2. Split the dataset
x_train, x_test, y_train, y_test = train_test_split(data.data, data.target, random_state=22)

# 3. Feature engineering: standardisation
transfer = StandardScaler()
x_train = transfer.fit_transform(x_train)
x_test = transfer.transform(x_test)

# 4. Machine learning: linear regression (normal equation)
estimator = LinearRegression()
estimator.fit(x_train, y_train)

# 5. Model evaluation
# 5.1 Predictions, coefficients and intercept
y_predict = estimator.predict(x_test)
print("Predictions:\n", y_predict)
print("Coefficients:\n", estimator.coef_)
print("Intercept:\n", estimator.intercept_)

# 5.2 Evaluation
# Mean squared error
error = mean_squared_error(y_test, y_predict)
print("Mean squared error:\n", error)

Gradient descent

# 1. Load the data
data = load_boston()

# 2. Split the dataset
x_train, x_test, y_train, y_test = train_test_split(data.data, data.target, random_state=22)

# 3. Feature engineering: standardisation
transfer = StandardScaler()
x_train = transfer.fit_transform(x_train)
# Reuse the statistics learned on the training set; do not refit on the test set
x_test = transfer.transform(x_test)

# 4. Machine learning: linear regression (gradient descent)
estimator = SGDRegressor(max_iter=1000)
estimator.fit(x_train, y_train)

# 5. Model evaluation
# 5.1 Predictions, coefficients and intercept
y_predict = estimator.predict(x_test)
print("Predictions:\n", y_predict)
print("Coefficients:\n", estimator.coef_)
print("Intercept:\n", estimator.intercept_)

# 5.2 Evaluation
# Mean squared error
error = mean_squared_error(y_test, y_predict)
print("Mean squared error:\n", error)

We can also try changing the learning rate:

estimator = SGDRegressor(max_iter=1000, learning_rate="constant", eta0=0.1)

By tuning this parameter we can look for a learning rate that gives better results.

Summary

- Normal equation

- sklearn.linear_model.LinearRegression()

- Gradient descent

- sklearn.linear_model.SGDRegressor()

- Evaluating linear regression performance [know]

- Mean squared error: sklearn.metrics.mean_squared_error

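2.7 Underfitting and Overfitting

Learning objectives

- Master the concepts of overfitting and underfitting

- Master the causes of overfitting and underfitting

- Know what regularisation is and how it is categorised

1 Definitions

- Overfitting: a hypothesis fits the training data better than other hypotheses but fails to fit the test dataset well (seen as a drop in accuracy). The hypothesis is then said to overfit. (The model is too complex.)

- Underfitting: a hypothesis cannot fit the training data well and also fails to fit the test dataset well. The hypothesis is then said to underfit. (The model is too simple.)

- The difference between overfitting and underfitting:

- Underfitting: the error is large on both the training set and the test set

- Overfitting: the error is small on the training set but large on the test set

- Seeing overfitting and underfitting through code

- Plot the data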

import numpy as np
import matplotlib.pyplot as plt
from sklearn.linear_model import LinearRegression
from sklearn.metrics import mean_squared_error
from sklearn.model_selection import train_test_split

np.random.seed(666)
# np.random.uniform() draws 100 values uniformly from [-3, 3)
x = np.random.uniform(-3, 3, size=100)
# Reshape into a 2-D array with a single column
X = x.reshape(-1, 1)
# np.random.normal() draws 100 normally distributed noise values (mean 0, variance 1)
y = 0.5 * x**2 + x + 2 + np.random.normal(0, 1, size=100)

estimator = LinearRegression()
estimator.fit(X, y)
y_predict = estimator.predict(X)

plt.scatter(x, y)
plt.plot(x, y_predict, color='r')
plt.show()

# Compute the mean squared error
mean_squared_error(y, y_predict)
# 3.0750025765636577

- Add a quadratic term and plot

# Add the quadratic term
X2 = np.hstack([X, X**2])
estimator2 = LinearRegression()
estimator2.fit(X2, y)
y_predict2 = estimator2.predict(X2)

plt.scatter(x, y)
plt.plot(np.sort(x), y_predict2[np.argsort(x)], color='r')
plt.show()

# Compute the mean squared error
mean_squared_error(y, y_predict2)
# 1.0987392142417858

- Add higher-order terms, plot, and look at the mean squared error

X5 = np.hstack([X2, X**3, X**4, X**5, X**6, X**7, X**8, X**9, X**10])
estimator3 = LinearRegression()
estimator3.fit(X5, y)
y_predict5 = estimator3.predict(X5)

plt.scatter(x, y)
plt.plot(np.sort(x), y_predict5[np.argsort(x)], color='r')
plt.show()

error = mean_squared_error(y, y_predict5)
error
# 1.0508466763764157

These results show that as more higher-order terms are added, the fit gets closer and the mean squared error keeps shrinking, so the model is no longer underfitting.

Question: how can we tell that overfitting has occurred?

- Split the dataset and compare the test-set mean squared error for X, X2 and X5

- Test-set mean squared error for X

X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=5)
estimator = LinearRegression()
estimator.fit(X_train, y_train)
y_predict = estimator.predict(X_test)
mean_squared_error(y_test, y_predict)
# 3.153139806483088

- Test-set mean squared error for X2

X_train, X_test, y_train, y_test = train_test_split(X2, y, random_state=5)
estimator = LinearRegression()
estimator.fit(X_train, y_train)
y_predict = estimator.predict(X_test)
mean_squared_error(y_test, y_predict)
# 1.111873885731967

- Test-set mean squared error for X5

X_train, X_test, y_train, y_test = train_test_split(X5, y, random_state=5)
estimator = LinearRegression()
estimator.fit(X_train, y_train)
y_predict = estimator.predict(X_test)
mean_squared_error(y_test, y_predict)
# 1.4145580542309835

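2 Causes and remedies

- Underfitting: causes and remedies

- Cause: too few features of the data have been learned

- Remedies:

- **1) Add other features.** Underfitting is sometimes caused by having too few features; adding more can resolve it.

- 2) Add polynomial features. This is very common in machine learning, for example adding quadratic or cubic terms to a linear model to make it more expressive.

- Overfitting: causes and remedies

- Cause: too many raw features, some of them noisy; the model becomes too complex because it tries to accommodate every data point

- Remedies:

- 1) Clean the data again. Overfitting can be caused by impure data; if it occurs, the data should be cleaned again.

- 2) Increase the amount of training data. Overfitting can also occur when too little data is used for training, i.e. the training data is too small a share of the total.

- 3) Regularisation

- 4) Reduce the feature dimension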

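3 Regularisation

3.1 What is regularisation

To deal with overfitting in regression we choose regularisation. Other machine learning algorithms, such as classifiers, face the same problem; apart from what some algorithms do by themselves (decision trees, neural networks), we mostly select features ourselves, including deleting or merging features as mentioned earlier.

How is it solved?

During learning, some features supplied by the data increase model complexity, or have many abnormal data points, so the algorithm tries to reduce the influence of such a feature while learning (or even remove it altogether). This is regularisation.

Note: while adjusting, the algorithm does not know which feature is responsible; it adjusts the parameters to arrive at an optimised result.

3.2 Types of regularisation

- L2 regularisation

- Effect: makes some of the weights W very small, close to 0, weakening the influence of a feature

- Advantage: smaller parameters mean a simpler model, and a simpler model is less prone to overfitting

- Ridge regression: from sklearn.linear_model import Ridge

- L1 regularisation

- Effect: sets some of the weights W exactly to 0, removing the influence of that feature

- LASSO regression: from sklearn.linear_model import Lasso

- Regularisation example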

X10 = np.hstack([X2,X**3,X**4,X**5,X**6,X**7,X**8,X**9,X**10])

estimator3 = LinearRegression()

estimator3.fit(X10,y)

y_predict3 = estimator3.predict(X10)

plt.scatter(x,y)

plt.plot(np.sort(x),y_predict3[np.argsort(x)],color = 'r')

plt.show()

# Print the regression coefficients
estimator3.coef_

# Output:

array([ 1.32292089e+00, 2.03952017e+00, -2.88731664e-01, -1.24760429e+00,

8.06147066e-02, 3.72878513e-01, -7.75395040e-03, -4.64121137e-02,

1.84873446e-04, 2.03845917e-03])

from sklearn.linear_model import Lasso  # L1 regularisation
from sklearn.linear_model import Ridge  # ridge regression, L2 regularisation

X10 = np.hstack([X2,X**3,X**4,X**5,X**6,X**7,X**8,X**9,X**10])

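# Adjust alpha (the regularisation strength) to see its effect; normalize=True standardises the data.
# Note: the normalize parameter was removed in scikit-learn 1.2, so this call needs an older release.
estimator_l1 = Lasso(alpha=0.005, normalize=True)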

estimator_l1.fit(X10,y)

y_predict_l1 = estimator_l1.predict(X10)

plt.scatter(x,y)

plt.plot(np.sort(x),y_predict_l1[np.argsort(x)],color = 'r')

plt.show()

estimator_l1.coef_  # Lasso (L1 regularisation) sets the coefficients of the higher-order terms to 0

array([ 0.97284077, 0.4850203 , 0. , 0. , -0. ,

0. , -0. , 0. , -0. , 0. ])

X10 = np.hstack([X2,X**3,X**4,X**5,X**6,X**7,X**8,X**9,X**10])

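# Adjust alpha (the regularisation strength) to see its effect.
# Note: the normalize parameter was removed in scikit-learn 1.2, so this call needs an older release.
estimator_l2 = Ridge(alpha=0.005, normalize=True)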

estimator_l2.fit(X10,y)

y_predict_l2 = estimator_l2.predict(X10)

plt.scatter(x,y)

plt.plot(np.sort(x),y_predict_l2[np.argsort(x)],color = 'r')

plt.show()

estimator_l2.coef_  # L2 does not set coefficients to 0, but it strongly shrinks those of the higher-order terms

array([ 9.91283840e-01, 5.24820573e-01, 1.57614237e-02, 2.34128982e-03,

7.26947948e-04, -2.99893698e-04, -8.28333499e-05, -4.51949529e-05,

-4.21312015e-05, -8.22992826e-07])


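4 Ridge regression case study

4.1 Ridge regression API

- sklearn.linear_model.Ridge(alpha=1.0, fit_intercept=True, solver="auto", normalize=False)

- Linear regression with L2 regularisation

- alpha: regularisation strength, also written λ

- Typical values of λ: 0~1, 1~10

- solver: the optimisation method is chosen automatically according to the data

- sag: if the dataset and the number of features are both large, choose this stochastic average gradient optimiser

- normalize: whether the data are standardised (this parameter was removed in scikit-learn 1.2)

- normalize=False: the data can be standardised before fit with preprocessing.StandardScaler

- Ridge.coef_: regression weights

- Ridge.intercept_: regression bias

Ridge is roughly equivalent to SGDRegressor(penalty='l2', loss="squared_loss"), except that SGDRegressor implements plain stochastic gradient descent learning. Ridge is recommended (it implements SAG).

- sklearn.linear_model.RidgeCV(_BaseRidgeCV, RegressorMixin)

- Linear regression with L2 regularisation and built-in cross-validation

- coef_: regression coefficients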

class _BaseRidgeCV(LinearModel):
    def __init__(self, alphas=(0.1, 1.0, 10.0),
                 fit_intercept=True, normalize=False, scoring=None,
                 cv=None, gcv_mode=None,
                 store_cv_values=False):

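4.2 How the regularisation strength affects the result

- The stronger the regularisation, the smaller the weight coefficients

- The weaker the regularisation, the larger the weight coefficients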
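The shrinking effect can be seen directly from the closed-form ridge solution $w = (X^TX + \alpha I)^{-1}X^Ty$. The sketch below is an illustration with NumPy only: the synthetic data (a noisy quadratic with polynomial features up to degree 10, similar in spirit to the earlier example), the standardisation step, the helper name `ridge_weights` and the three alpha values are all assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)
x = rng.uniform(-3, 3, size=100)
y = 0.5 * x**2 + x + 2 + rng.normal(0, 1, size=100)

# Polynomial features of degree 1..10, standardised column by column.
X = np.column_stack([x**d for d in range(1, 11)])
X = (X - X.mean(axis=0)) / X.std(axis=0)
y_centered = y - y.mean()  # centring y removes the need for an intercept term


def ridge_weights(X, y, alpha):
    """Closed-form ridge solution w = (X^T X + alpha * I)^{-1} X^T y."""
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + alpha * np.eye(n_features), X.T @ y)


alphas = [0.01, 1.0, 100.0]
norms = [float(np.linalg.norm(ridge_weights(X, y_centered, a))) for a in alphas]
for a, norm in zip(alphas, norms):
    print(f"alpha={a:<6} ||w|| = {norm:.4f}")
```

The printed norms decrease as alpha grows, which matches the two bullet points above.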

4.3 Boston house price prediction with ridge regression

from sklearn.linear_model import Ridge, RidgeCV

# 1. Load the data
data = load_boston()

# 2. Split the dataset
x_train, x_test, y_train, y_test = train_test_split(data.data, data.target, random_state=22)

# 3. Feature engineering: standardisation
transfer = StandardScaler()
x_train = transfer.fit_transform(x_train)
x_test = transfer.transform(x_test)

# 4. Machine learning: linear regression (ridge regression)
estimator = Ridge(alpha=1)
# estimator = RidgeCV(alphas=(0.1, 1, 10))
estimator.fit(x_train, y_train)

# 5. Model evaluation
# 5.1 Predictions, coefficients and intercept
y_predict = estimator.predict(x_test)
print("Predictions:\n", y_predict)
print("Coefficients:\n", estimator.coef_)
print("Intercept:\n", estimator.intercept_)

# 5.2 Evaluation
# Mean squared error
error = mean_squared_error(y_test, y_predict)
print("Mean squared error:\n", error)

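Summary

- Underfitting [master]

- Performs poorly on the training set and poorly on the test set

- Remedy: keep learning

- 1. Add other features

- 2. Add polynomial features

- Overfitting [master]

- Performs well on the training set but poorly on the test set

- Remedies:

- 1. Clean the dataset again

- 2. Increase the amount of training data

- 3. Regularisation

- 4. Reduce the feature dimension

- Regularisation [master]

- Prevents overfitting by restricting the coefficients of the higher-order terms

- L1 regularisation

- Interpretation: sets the coefficients of the higher-order terms directly to 0

- Lasso regression

- from sklearn.linear_model import Lasso

- L2 regularisation

- Interpretation: makes the coefficients of the higher-order terms very small

- Ridge regression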

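2.8 Saving and Loading Models

Learning objectives

- Know how to save and load models in sklearn

1 sklearn API for saving and loading models

- from sklearn.externals import joblib

- Save: joblib.dump(estimator, 'test.pkl')

- Load: estimator = joblib.load('test.pkl')

- Note: sklearn.externals.joblib is only available in versions below 0.21. Newer versions require installing joblib separately (pip install joblib) and importing it with import joblib.

2 Case study: saving and loading a linear regression model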

import joblib
from sklearn.linear_model import Ridge

# 1. Load the data
data = load_boston()

# 2. Split the dataset
x_train, x_test, y_train, y_test = train_test_split(data.data, data.target, random_state=22)

# 3. Feature engineering: standardisation
transfer = StandardScaler()
x_train = transfer.fit_transform(x_train)
x_test = transfer.transform(x_test)

# 4. Machine learning: linear regression (ridge regression)
# 4.1 Train the model
estimator = Ridge(alpha=1)
estimator.fit(x_train, y_train)

# 4.2 Save the model
joblib.dump(estimator, "./data/test.pkl")

# 4.3 Load the model
estimator = joblib.load("./data/test.pkl")

# 5. Model evaluation
# 5.1 Predictions, coefficients and intercept
y_predict = estimator.predict(x_test)
print("Predictions:\n", y_predict)
print("Coefficients:\n", estimator.coef_)
print("Intercept:\n", estimator.intercept_)

# 5.2 Evaluation
# Mean squared error
error = mean_squared_error(y_test, y_predict)
print("Mean squared error:\n", error)

3 Summary

- sklearn.externals import joblib [know]

- Save: joblib.dump(estimator, 'test.pkl')

- Load: estimator = joblib.load('test.pkl')

- Note:

- 1. The saved file uses the .pkl extension

- 2. The loaded model must be assigned to a variable

2.9 Applying linear regression: regression analysis

Learning objectives

- Know the common scenarios in which linear regression is applied

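1. What is regression analysis

- Regression is a data analysis method that studies how an independent variable x affects a dependent variable y

- The simplest regression model is one-variable linear regression, written Y = β0 + β1x + ε, where Y is the dependent variable, x is the independent variable, β1 is the effect coefficient, β0 is the intercept, and ε is the random error

- Regression analysis is a widely used statistical method. It can be used to analyze how the independent variables relate to the dependent variable (computing the dependent variable from the independent variables), and also the direction of each independent variable's effect (positive or negative).

- The main applications of regression analysis are prediction and control, for example drawing up plans, KPIs, and targets. Predicted values can also be compared with actual data to judge how far a situation has developed and to give directional guidance for future action.

- Commonly used regression algorithms include linear regression and polynomial regression

- The advantage of regression analysis is that the data pattern and the result are easy to understand. Linear regression, for example, is expressed as y = ax + b, so the relationship between the independent and dependent variables is relatively easy to explain. In business applications built on a formula, the result can be obtained by direct substitution, so the model is easy to apply.

- The disadvantage of regression analysis is that it can only analyze the relationships among a small number of variables. It cannot handle the interactions among a very large number of variables, in particular the joint effect of several variables on the dependent variable.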
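As a minimal sketch of the one-variable model Y = β0 + β1x + ε described above, the least-squares estimates can be computed directly from the sample means. The data points and the function name `fit_simple_linear_regression` are hypothetical, chosen only to illustrate the formula.

```python
def fit_simple_linear_regression(xs, ys):
    """Return (beta0, beta1) minimizing the squared error of y = beta0 + beta1 * x."""
    n = len(xs)
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    # beta1 = covariance(x, y) / variance(x)
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    sxx = sum((x - x_mean) ** 2 for x in xs)
    beta1 = sxy / sxx
    # The fitted line passes through the point of means.
    beta0 = y_mean - beta1 * x_mean
    return beta0, beta1


# Hypothetical observations that roughly follow y = 1 + 2x.
xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [3.1, 4.9, 7.2, 8.8, 11.0]

beta0, beta1 = fit_simple_linear_regression(xs, ys)
print("intercept:", beta0)
print("slope:", beta1)

# Once fitted, prediction is plain substitution into the formula.
print("prediction at x = 6:", beta0 + beta1 * 6.0)
```

A positive `beta1` corresponds to a positive effect of x on y, and a negative one to a negative effect.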

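2. Scenarios for regression analysis

- Studying the effect that advertising placed in various media has on final sales

- Marketing channels a company can use

- Traditional mass media: TV, radio, outdoor advertising

- Direct marketing media: email, SMS, telephone

- Digital media: apps, WeChat, social applications

- Questions regression analysis can answer

- How each marketing channel drives sales

- How to adjust the marketing mix so that every unit of spend brings the greatest return

- When advertising runs in several channels at once, which one has the clearest effect

- Sales volume = marketing variables + other variables

- Marketing variables: controllable advertising spend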

- ���ϲ�Ʒ:�� �� ͷ��

- ��ͳ��Ʒ:���� �� ���� ����

- Other variables: uncontrollable random factors

- ���ô�,����,����,����


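- The relationship between the marketing variables and sales revenue is assumed to be linear

- The more is spent on marketing, the more sales volume increases accordingly

- Regression model: sales = 93765 + 0.3 * Baidu + 0.15 * social media + 0.05 * telephone direct sales + 0.02 * SMS

- A linear regression model assumes that the explanatory variables and the dependent variable are linearly related. In practice, sales revenue does not keep growing indefinitely as advertising spend grows.

- When using the result of a regression model, what matters more is looking at the relative size of each factor's effect and comparing them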


- The result of a regression analysis is used mostly to compare the effects of different X on Y. Scenarios that predict Y directly are less common.
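The coefficient comparison described in this list can be sketched in a few lines. The intercept and coefficients are taken from the example model above; the dictionary keys are English renderings of its channel names, and the spend figures are hypothetical values used only for illustration.

```python
# Intercept and per-channel coefficients from the example model in the text.
INTERCEPT = 93765
COEFFICIENTS = {
    "baidu": 0.3,
    "social_media": 0.15,
    "phone_direct_sales": 0.05,
    "sms": 0.02,
}


def predict_sales(spend):
    """Substitute channel spend into the linear model; missing channels count as 0."""
    return INTERCEPT + sum(
        coef * spend.get(channel, 0.0) for channel, coef in COEFFICIENTS.items()
    )


def rank_channels():
    """Order channels by the sales gained per extra unit of spend."""
    return sorted(COEFFICIENTS, key=COEFFICIENTS.get, reverse=True)


# Hypothetical spend per channel.
spend = {"baidu": 10000, "social_media": 20000, "phone_direct_sales": 5000, "sms": 1000}

print("predicted sales:", predict_sales(spend))
print("channels by marginal effect:", rank_channels())
```

Because the model is linear, the ranking depends only on the coefficients, which is why the comparison is usually more informative than the point prediction.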

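3. Regression analysis in practice

- Sales forecasting analysis

- Industry background and analysis goals

- Forecast the sales of supermarket and retail stores

- Quantify the effect of each promotional factor the company can control

- Plan the allocation of marketing resources sensibly

- Traditional fast-moving consumer goods companies: characteristics

- The data is aggregated

- There are many channels, so individual customers are hard to observe

- Here, regression analysis is used to assess the return on investment of each factor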

- ��������

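- The relationship between sales and promotional spend on TV advertising, online, offline, in-store, and WeChat channels

- Data description (each observation window is one month)

- Revenue: store sales

- Reach: number of WeChat ads

- Local_tv: local TV advertising spend

- Online: online advertising spend

- Instore: in-store spend on posters and similar displays

- Person: in-store sales staff

- Event: promotional event

- cobranding: joint brand promotion

- holiday: public holiday

- special: special in-store promotion

- non-event: no promotion

- Analysis workflow: data overview -> univariate analysis -> correlation analysis and visualization -> regression model

- Data overview

- Number of rows and columns

- Distribution of missing values

- Univariate analysis

- Descriptive statistics for numeric variables (mean, maximum and minimum, standard deviation)

- Categorical variables (number of categories and the share of each)

- Correlation analysis and visualization

- Comparison by category

- Correlation between variables

- Scatter plots / heat maps

- Regression analysis

- Building the model

- Evaluating and optimizing the model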

- Code implementation

import pandas as pd
# Read the data; index_col=0 uses the first column as the index
store = pd.read_csv('data/store_rev.csv', index_col=0)
# (output image: pics/p&g_regression1.png)

# Basic information about the data
# local_tv has more than 50 missing values
# event has dtype object, so it is a categorical variable
store.info()
# (output image: pics/p&g_regression2.png)

# Count the missing values in each column
# local_tv has 56 missing values
store.isnull().sum()
# revenue      0
# reach        0
# local_tv    56
# online       0
# instore      0
# person       0
# event        0
# dtype: int64

# Look at the distribution of the data and check that it makes sense for the business
store.describe()
# (output image: pics/p&g_regression3.png)

# Look at the distinct values of event
store.event.unique()
# array(['non_event', 'special', 'cobranding', 'holiday'], dtype=object)

# Revenue (store sales) for each of these categories
store.groupby(['event'])['revenue'].describe()
# (output image: pics/p&g_regression4.png)

# local_tv (local TV advertising spend) for each of these categories
store.groupby(['event'])['local_tv'].describe()
# (output image: pics/p&g_regression5.png)

- Correlation analysis

# Convert the categorical event column to dummy variables.
# This step is missing from the text; it is inferred from the event_* columns in the outputs below.
store = pd.get_dummies(store)

# Pairwise correlation between all variables
# local_tv, person, and instore are the most useful indicators: they correlate most strongly with revenue
store.corr()
# (output image: pics/p&g_regression7.png)

# Correlation of every variable with revenue
# sort_values sorts by revenue; ascending defaults to True, and False sorts in descending order
# The three most strongly correlated variables are local_tv, person, and instore
store.corr()[['revenue']].sort_values('revenue', ascending=False)
#                    revenue
# revenue           1.000000
# local_tv          0.602114
# person            0.559208
# instore           0.311739
# online            0.171227
# event_special     0.033752
# event_cobranding -0.005623
# event_holiday    -0.016559
# event_non_event  -0.019155
# reach            -0.155314

- Visual analysis

import seaborn as sns
import matplotlib.pyplot as plt
# Visualize the linear relationship; the slope of the fitted line is related to the correlation coefficient
sns.regplot(x='local_tv', y='revenue', data=store)
# (output image: pics/p&g_regression8.png)

sns.regplot(x='person', y='revenue', data=store)
# (output image: pics/p&g_regression9.png)

sns.regplot(x='instore', y='revenue', data=store)
# (output image: pics/p&g_regression10.png)

- Linear regression analysis

# Handle missing values first: linear regression raises an error if any are present.
# Use ONE of the three options below. The info() output shows 985 non-null rows,
# which appears to correspond to filling rather than dropping, so mean filling is left active.
# Option 1: drop rows with missing values
# store.dropna(inplace=True)
# Option 2: fill missing values with 0
# store = store.fillna(0)
# Option 3: fill missing values with the mean of local_tv
store = store.fillna(store.local_tv.mean())
store.info()
# <class 'pandas.core.frame.DataFrame'>
# Int64Index: 985 entries, 845 to 26
# Data columns (total 10 columns):
# revenue             985 non-null float64
# reach               985 non-null int64
# local_tv            985 non-null float64
# online              985 non-null int64
# instore             985 non-null int64
# person              985 non-null int64
# event_cobranding    985 non-null uint8
# event_holiday       985 non-null uint8
# event_non_event     985 non-null uint8
# event_special       985 non-null uint8
# dtypes: float64(2), int64(4), uint8(4)
# memory usage: 57.7 KB

# Set the dependent and independent variables
y = store['revenue']
# First attempt: three features
x = store[['local_tv', 'person', 'instore']]
# Second attempt: four features
# x = store[['local_tv', 'person', 'instore', 'online']]

# Scale the features to [0, 1]
from sklearn.preprocessing import MinMaxScaler
scaler = MinMaxScaler()
x1 = scaler.fit_transform(x)

from sklearn.linear_model import LinearRegression
# Instantiate the estimator
model = LinearRegression()
# Train the model
model.fit(x1, y)
# LinearRegression(copy_X=True, fit_intercept=True, n_jobs=None, normalize=False)
# Coefficients of the independent variables
model.coef_
# array([41478.6429907 , 48907.03909284, 26453.89791677])
# Intercept of the model
model.intercept_
# -17641.46438435701
# Relationship between the min-max-scaled features and y:
# y = 41478*local_tv + 48907*person + 26453*instore - 17641

# Evaluate the model on x1, the scaled features it was trained on
y_predict = model.predict(x1)
from sklearn.metrics import mean_squared_error
mean_squared_error(y, y_predict)
# Note: predicting on the unscaled x instead yields the meaningless value 1.9567577917634842e+18
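The model above is fit on the output of MinMaxScaler, so any input passed to predict has to go through the same scaling. The following standard-library sketch shows, under that assumption, how large the error becomes when raw values are fed to a model trained on scaled values. The data and the helper names `fit_line` and `mse` are hypothetical.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = intercept + slope * x."""
    n = len(xs)
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    sxx = sum((x - x_mean) ** 2 for x in xs)
    slope = sxy / sxx
    return y_mean - slope * x_mean, slope


def mse(y_true, y_pred):
    """Mean squared error between two equally long sequences."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


# Hypothetical raw feature (e.g. ad spend) and target.
raw_x = [1000.0, 2000.0, 3000.0, 4000.0]
y = [10.0, 20.0, 30.0, 40.0]

# Min-max scaling to [0, 1], using the training minimum and maximum.
lo, hi = min(raw_x), max(raw_x)
scaled_x = [(v - lo) / (hi - lo) for v in raw_x]

# Train on the scaled feature.
intercept, slope = fit_line(scaled_x, y)

# Consistent: predict on scaled inputs. Mismatched: predict on raw inputs.
mse_consistent = mse(y, [intercept + slope * v for v in scaled_x])
mse_mismatched = mse(y, [intercept + slope * v for v in raw_x])

print("MSE with scaled inputs:", mse_consistent)
print("MSE with raw inputs:", mse_mismatched)
```

With scikit-learn the same rule means calling scaler.transform on new data before model.predict.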

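4 Points to note

- When applying a regression model, check whether the independent variables have changed
  - When a regression model is used for prediction, it is necessary to check whether the independent variables that affect the dependent variable have changed. There are two main aspects:
    - Whether a new independent variable with a larger effect on the dependent variable has appeared
      - When building a regression model, the choice of independent variables has to be considered as a whole. If an important variable is left out, the model is unlikely to reflect reality correctly and the parameter estimates are biased. A model like this is very unstable and has a large variance.
      - The same question has to be re-examined when the model is applied. For example, a user-behavior model may have been built on variables observed under normal operation. When a large promotion runs and the promotion is not included in the model, the original model can no longer predict effectively.
    - Whether the existing independent variables are still within the range seen when the model was trained
      - If an independent variable moves outside the range used for training, the original empirical formula may not hold in the new range. This usually calls for new analysis and a new model.
      - For example, suppose a regression model predicts ad click-through rate from ad spend, and the spend in the training data lies in [0, 1000]. When the spend exceeds 1000 (say 2000), the validity of the prediction can no longer be guaranteed.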

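- Regression algorithms are divided into one-variable and multivariate regression by the number of independent variables, and into linear and nonlinear regression by whether the effect is linear. When choosing among regression methods, consider the following factors:

- Start with simple linear regression. If the main purpose is learning, there is no need for a powerful model; ordinary linear regression based on least squares is the best fit. It also suits datasets with a simple structure and a clearly linear pattern.

- If there are few independent variables, or dimensionality reduction has produced usable two-dimensional variables (including the predictor), the relationship between the independent and dependent variables can be seen directly in a scatter plot, and the best regression method can be chosen from it.

- If a basic check shows strong collinearity among the independent variables, consider ridge regression.

- With high-dimensional variables, regularized regression methods such as Lasso and Ridge work better.

- If the interpretability of the model matters, easily understood methods such as linear regression and polynomial regression are a good fit.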
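The training-range caveat from this section (a model trained with ad spend in [0, 1000] should not be trusted at 2000) can be enforced with a small guard before prediction. This is a sketch under assumed names: `fit_ranges`, `out_of_range_features`, and the feature name `ad_spend` are hypothetical, and the training rows are made up to match the range in the example.

```python
def fit_ranges(rows):
    """Record the (min, max) seen for each feature in the training rows."""
    ranges = {}
    for row in rows:
        for name, value in row.items():
            low, high = ranges.get(name, (value, value))
            ranges[name] = (min(low, value), max(high, value))
    return ranges


def out_of_range_features(ranges, row):
    """Return the features in `row` whose values fall outside the training range."""
    flagged = []
    for name, value in row.items():
        low, high = ranges[name]
        if value < low or value > high:
            flagged.append(name)
    return flagged


# Hypothetical training data: ad spend between 0 and 1000.
training_rows = [{"ad_spend": 0.0}, {"ad_spend": 400.0}, {"ad_spend": 1000.0}]
ranges = fit_ranges(training_rows)

print(out_of_range_features(ranges, {"ad_spend": 800.0}))   # inside the range
print(out_of_range_features(ranges, {"ad_spend": 2000.0}))  # extrapolation
```

A non-empty result signals extrapolation, which is the case where the text recommends re-examining the data and rebuilding the model.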