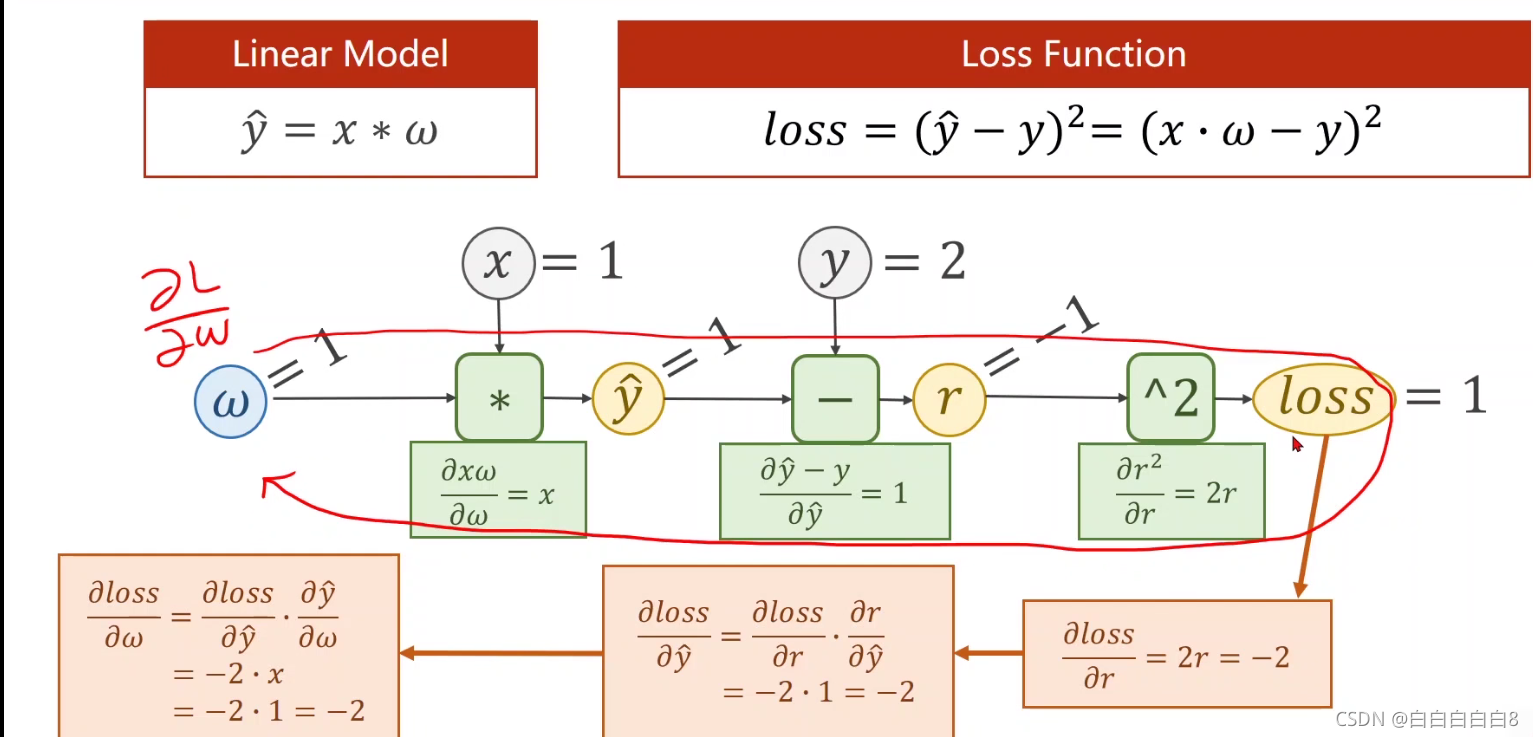

1. Backpropagation

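First run the forward pass to compute the prediction and the loss. Then backpropagate to compute the derivative of the loss function with respect to the weight (the gradient), and use that gradient to update the weight w.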
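As a rough sketch of these three steps on a single sample: the snippet below assumes the linear model `y_pred = x * w` and the squared-error loss used in this post's code. The sample values (`x = 2`, `y = 4`) and the learning rate 0.01 come from that code, while the names `analytic_grad` and `lr` are only illustrative. It compares the gradient autograd produces with the hand-derived one, `2 * x * (x * w - y)`.

```python
import torch

# One training sample and an initial weight (values chosen for illustration).
x, y = 2.0, 4.0
w = torch.tensor([1.0], requires_grad=True)

# Forward pass: prediction and squared-error loss.
y_pred = x * w
loss = (y_pred - y) ** 2

# Backward pass: autograd fills w.grad with d(loss)/dw.
loss.backward()

# Hand-derived gradient for comparison: 2 * x * (x * w - y).
analytic_grad = 2 * x * (x * w.item() - y)
print(w.grad.item(), analytic_grad)

# Gradient-descent step, done under no_grad so the update is not tracked.
lr = 0.01
with torch.no_grad():
    w -= lr * w.grad
print(w.item())
```

With these values both gradients are -8, so one update moves the weight from 1.0 to about 1.08, toward the true value of 2.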


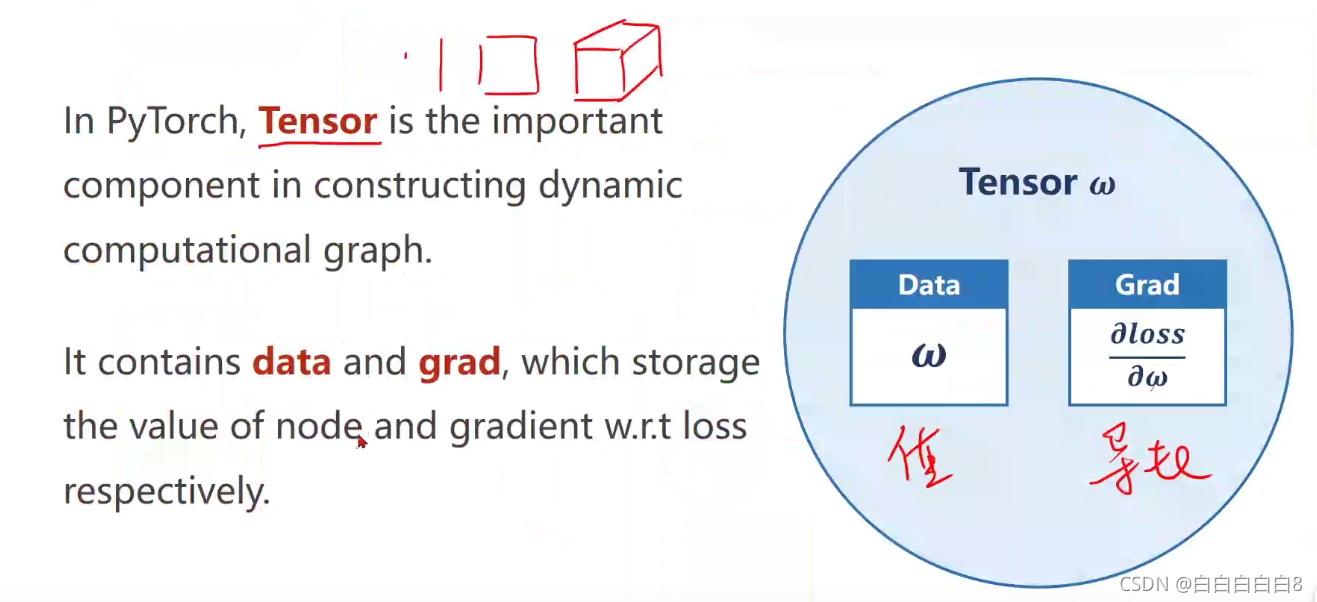

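```python
import torch

# Training data for the target relation y = 2x.
x_data = [1.0, 2.0, 3.0]
y_data = [2.0, 4.0, 6.0]

# Weight to be learned; requires_grad=True tells autograd to compute its gradient.
w = torch.tensor([1.0], requires_grad=True)


def forward(x):
    """Linear model: y_pred = x * w."""
    return x * w


def loss(x, y):
    """Squared error for a single sample."""
    y_pred = forward(x)
    return (y_pred - y) ** 2


print('predict (before training)', 4, forward(4).item())

for epoch in range(100):
    for x, y in zip(x_data, y_data):
        ls = loss(x, y)  # forward pass builds the computational graph
        ls.backward()    # backward pass stores d(loss)/dw in w.grad
        # Update through .data so the step itself is not tracked by autograd.
        w.data = w.data - 0.01 * w.grad.data
        # Clear the gradient; backward() would otherwise accumulate into it.
        w.grad.data.zero_()
    print('progress:', epoch, ls.item())

print('predict (after training)', 4, forward(4).item())
```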
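The training loop above zeroes the gradient after every update. The reason is that `backward()` adds to `w.grad` rather than overwriting it. Here is a small sketch of that behaviour, reusing the same model and loss; the names `first`, `accumulated`, and `cleared` are only illustrative.

```python
import torch

w = torch.tensor([1.0], requires_grad=True)
x, y = 2.0, 4.0


def loss(x, y):
    # Same squared-error loss on the linear model x * w.
    return (x * w - y) ** 2


loss(x, y).backward()
first = w.grad.item()        # gradient from a single backward pass

loss(x, y).backward()        # second pass without zeroing in between
accumulated = w.grad.item()  # sum of the gradients from both passes

w.grad.zero_()               # reset in place, as the training loop does
cleared = w.grad.item()

print(first, accumulated, cleared)
```

Without the reset, each update would use the sum of all gradients seen so far instead of the gradient for the current sample.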