Scraping the Xiaomi App Store

Requirements

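Target URL: https://app.mi.com/category/6. Scrape the apps in the Photography & Video (摄影摄像) category and collect each app's name, category, and detail-page URL. The scraper must also handle pagination.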

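First scrape the data the ordinary (sequential) way, then rewrite the scraper to use multiple threads, and compare how fast the two approaches are.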

Page analysis

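Search the page source (right-click → View Page Source) for the name of an app you want to scrape. It is not there, which means the app list is loaded dynamically, so look for it in the browser's developer tools under Network → XHR instead.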

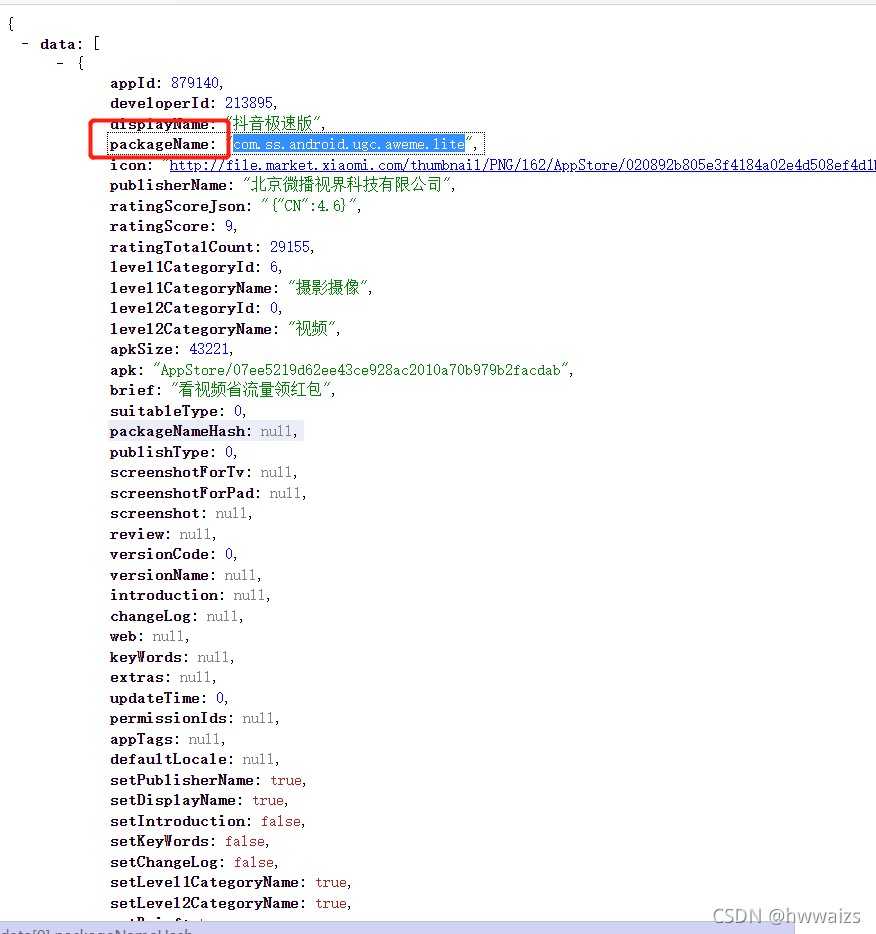

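Copy the request URL from that request's Headers tab and open it in the browser: the app information comes back as JSON, with each app stored as a dictionary in a list under the `data` key.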
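To make the shape of that response concrete, here is a minimal sketch that parses a hand-written, trimmed payload. The key names (`data`, `displayName`, `level1CategoryName`, `packageName`) are the ones the code later in this article reads; the values are invented, and the real response contains more fields than shown.

```python
import json

# Hypothetical, heavily trimmed response body: the keys match those used in
# this article, but the values are invented for illustration.
sample_body = """
{
  "data": [
    {"displayName": "Example Camera",
     "level1CategoryName": "摄影摄像",
     "packageName": "com.example.camera"}
  ]
}
"""

payload = json.loads(sample_body)
for app in payload["data"]:
    # Each app is a plain dict, so its fields are read by key
    print(app["displayName"], app["level1CategoryName"], app["packageName"])
```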

Click an app to open its detail page: the `id` value in the URL you land on is the same as that app's `packageName` field.

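Clicking a few more apps confirms the pattern: every detail-page URL starts with the same prefix, https://app.mi.com/details?id=, and only the part after `id=` differs. So when scraping we can reach any app's detail page by substituting that value. Each app's id (`packageName`) is included in the data API response, so the name, category, and detail-page URL can all be taken from the dictionaries in that response.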
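The two URL patterns (one list-API page per page number, one detail page per `packageName`) can be captured in a pair of small helpers. This is a sketch: `list_url` and `detail_url` are hypothetical names, and building the query with `urllib.parse.urlencode` rather than string concatenation is a choice made here so that values are escaped. The endpoint and its `page`, `categoryId`, `pageSize` parameters are the ones used in the code later in this article.

```python
from urllib.parse import urlencode

# Endpoint spelled exactly as in this article's code
LIST_API = "https://app.mi.com/categotyAllListApi"
DETAIL_PAGE = "https://app.mi.com/details"


def list_url(page, category_id=6, page_size=30):
    """URL of one page of the category list API (page numbers start at 0)."""
    query = urlencode({"page": page, "categoryId": category_id, "pageSize": page_size})
    return f"{LIST_API}?{query}"


def detail_url(package_name):
    """Detail-page URL: the id parameter is the app's packageName."""
    return f"{DETAIL_PAGE}?{urlencode({'id': package_name})}"


if __name__ == "__main__":
    for page in range(3):
        print(list_url(page))
    print(detail_url("com.example.camera"))  # hypothetical package name
```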

Code implementation

Function-based implementation

1. Implementation with functions

```python
import csv
import time

import requests

HEADERS = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/93.0.4577.63 Safari/537.36 Edg/93.0.961.47'
}


def get_url(url):
    """Request one page of the list API and return the list stored under 'data'."""
    res = requests.get(url, headers=HEADERS, timeout=10)
    res.raise_for_status()
    return res.json()['data']


def parse_url(html):
    """Extract the name, category and detail-page URL of every app."""
    app_data = []
    for app in html:
        item = {}
        item['name'] = app['displayName']
        item['itemize'] = app['level1CategoryName']
        item['url'] = 'https://app.mi.com/details?id=' + app['packageName']
        app_data.append(item)
    return app_data


def save_data(lis_data, first_page):
    """Write one page of rows; the first page recreates the file and writes the header."""
    header = ['name', 'itemize', 'url']
    mode = 'w' if first_page else 'a'
    with open('xiaomi_data.csv', mode, encoding='utf-8', newline='') as f:
        writ = csv.DictWriter(f, header)
        if first_page:
            writ.writeheader()
        writ.writerows(lis_data)


if __name__ == '__main__':
    for i in range(3):
        url = f'https://app.mi.com/categotyAllListApi?page={i}&categoryId=6&pageSize=30'
        html = get_url(url)
        lis_data = parse_url(html)
        save_data(lis_data, first_page=(i == 0))
        time.sleep(2)  # pause between pages to go easy on the server
```
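Instead of deciding page by page whether to write the header, another option is to collect the rows from every page first and write the CSV in one go. The sketch below shows only that write step, with made-up rows and an in-memory `io.StringIO` buffer standing in for the file so it runs without network access; `write_rows` is a hypothetical helper name.

```python
import csv
import io


def write_rows(f, rows):
    """Write the header once, then every row, to an already-open text file object."""
    writer = csv.DictWriter(f, fieldnames=['name', 'itemize', 'url'])
    writer.writeheader()
    writer.writerows(rows)


# Made-up rows standing in for parse_url() output from two pages
pages = [
    [{'name': 'App A', 'itemize': '摄影摄像', 'url': 'https://app.mi.com/details?id=com.example.a'}],
    [{'name': 'App B', 'itemize': '摄影摄像', 'url': 'https://app.mi.com/details?id=com.example.b'}],
]
# Flatten the per-page lists into one list of rows
all_rows = [row for page in pages for row in page]

buf = io.StringIO(newline='')
write_rows(buf, all_rows)
print(buf.getvalue())
```

With a real file, the same helper would be called as `with open('xiaomi_data.csv', 'w', encoding='utf-8', newline='') as f: write_rows(f, all_rows)`, which removes the need to track which page is the first.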

Object-oriented approach: scraping the first page

2.1 Reading one page with a class

```python
import csv

import requests


class XiaomiShop:
    def __init__(self):
        # First page (page=0) of the category-6 list API
        self.url = 'https://app.mi.com/categotyAllListApi?page=0&categoryId=6&pageSize=30'
        self.headers = {
            'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/93.0.4577.63 Safari/537.36 Edg/93.0.961.47'
        }
        self.html = []
        self.app_data = []

    def get_url(self):
        """Fetch the page and keep the list stored under 'data'."""
        res = requests.get(self.url, headers=self.headers, timeout=10)
        res.raise_for_status()
        self.html = res.json()['data']

    def parse_url(self):
        """Extract the name, category and detail-page URL of every app."""
        self.app_data = []
        for app in self.html:
            item = {}
            item['name'] = app['displayName']
            item['itemize'] = app['level1CategoryName']
            item['url'] = 'https://app.mi.com/details?id=' + app['packageName']
            self.app_data.append(item)

    def save_data(self):
        header = ['name', 'itemize', 'url']
        # 'w' so that re-running the script does not append a second header row
        with open('xiaomi_data0.csv', 'w', encoding='utf-8', newline='') as f:
            writ = csv.DictWriter(f, header)
            writ.writeheader()
            writ.writerows(self.app_data)

    def main(self):
        self.get_url()
        self.parse_url()
        self.save_data()


if __name__ == '__main__':
    x = XiaomiShop()
    x.main()
```

Object-oriented approach: scraping multiple pages

2.2 Pagination with a class

```python
import csv
import time

import requests


class XiaomiShop:
    def __init__(self):
        # {} is filled in with the page number
        self.url = 'https://app.mi.com/categotyAllListApi?page={}&categoryId=6&pageSize=30'
        self.headers = {
            'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/93.0.4577.63 Safari/537.36 Edg/93.0.961.47'
        }

    def get_url(self, url):
        """Request one page and return the list stored under 'data'."""
        res = requests.get(url, headers=self.headers, timeout=10)
        res.raise_for_status()
        return res.json()['data']

    def parse_url(self, html):
        """Extract the name, category and detail-page URL of every app."""
        app_data = []
        for app in html:
            item = {}
            item['name'] = app['displayName']
            item['itemize'] = app['level1CategoryName']
            item['url'] = 'https://app.mi.com/details?id=' + app['packageName']
            app_data.append(item)
        return app_data

    def save_data(self, lis_data, page):
        header = ['name', 'itemize', 'url']
        # Page 0 recreates the file and writes the header; later pages append
        mode = 'w' if page == 0 else 'a'
        with open('xiaomi_data2.csv', mode, encoding='utf-8', newline='') as f:
            writ = csv.DictWriter(f, header)
            if page == 0:
                writ.writeheader()
            writ.writerows(lis_data)

    def main(self):
        for i in range(3):
            new_url = self.url.format(i)
            html = self.get_url(new_url)
            lis_data = self.parse_url(html)
            self.save_data(lis_data, i)
            # time.sleep(2)  # disabled so the timing below measures only the requests


if __name__ == '__main__':
    start_time = time.perf_counter()
    x = XiaomiShop()
    x.main()
    end_time = time.perf_counter()
    print('Run time: %.3f s' % (end_time - start_time))
```

Multithreaded scraping

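To switch to multithreading, import `threading` and `Queue`. All URLs to be scraped are first put into a queue (that is what `put_url` does), and several threads then take URLs out of the queue, request them, and parse the responses (`parse_url` is the thread target). Checking whether the queue is empty and taking a URL from it are two separate steps, so two threads could race for the last URL; that is the resource contention the thread lock guards against. With only 3 pages you could start 3 threads that each fetch a single page, but it is better to have each thread loop (`while True`) and keep taking URLs until the queue is empty; the code below starts 2 threads that work this way. The scraped data is saved to a CSV file.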
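The queue-and-worker pattern described above can be tried offline by replacing the HTTP request with a stub. Note that this sketch swaps in a different technique from the article's lock around `empty()`/`get()`: `Queue.get_nowait()` raises `queue.Empty` when nothing is left, so checking and taking happen in one atomic call, and the lock is only needed for the shared result list. `fake_fetch`, `worker` and `crawl` are hypothetical names; `fake_fetch` stands in for `requests.get(...).json()['data']`.

```python
import threading
import time
from queue import Empty, Queue


def fake_fetch(url):
    """Hypothetical stand-in for the HTTP request: wait, then return fake rows."""
    time.sleep(0.1)  # simulate network latency
    return [{'url': url}]


def worker(q, results, lock):
    """Take URLs until the queue is empty, fetching and storing each one."""
    while True:
        try:
            url = q.get_nowait()  # atomic check-and-take; raises Empty when done
        except Empty:
            return
        rows = fake_fetch(url)
        with lock:  # the results list is shared by all threads
            results.extend(rows)


def crawl(urls, n_threads=2):
    q = Queue()
    for u in urls:
        q.put(u)
    results, lock = [], threading.Lock()
    threads = [threading.Thread(target=worker, args=(q, results, lock))
               for _ in range(n_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()  # wait until every URL has been processed
    return results


if __name__ == '__main__':
    pages = [f'page-{i}' for i in range(3)]
    print(sorted(r['url'] for r in crawl(pages)))
```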

```python
import csv
import threading
import time
from queue import Queue

import requests


class XiaomiShop:
    def __init__(self):
        self.url = 'https://app.mi.com/categotyAllListApi?page={}&categoryId=6&pageSize=30'
        self.headers = {
            'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/93.0.4577.63 Safari/537.36 Edg/93.0.961.47'
        }
        # Queue of URLs waiting to be scraped
        self.q = Queue()
        # Lock guarding the queue check-and-get and the shared result list
        self.lock = threading.Lock()
        self.app_data = []

    def put_url(self):
        """Put the URL of every page to be scraped into the queue."""
        for page in range(3):
            self.q.put(self.url.format(page))

    def parse_url(self):
        """Thread target: request and parse URLs until the queue is empty."""
        while True:
            # empty() and get() are two steps, so hold the lock across both;
            # leaving the with-block (including via break) releases the lock
            with self.lock:
                if self.q.empty():
                    break
                url = self.q.get()
            # The request runs outside the lock, so the threads overlap here
            html = requests.get(url, headers=self.headers, timeout=10).json()['data']
            items = [
                {
                    'name': app['displayName'],
                    'itemize': app['level1CategoryName'],
                    'url': 'https://app.mi.com/details?id=' + app['packageName'],
                }
                for app in html
            ]
            with self.lock:
                self.app_data.extend(items)

    def save_data(self):
        """Write all collected rows once, after every thread has finished."""
        header = ['name', 'itemize', 'url']
        with open('xiaomi_mian.csv', 'w', encoding='utf-8', newline='') as f:
            writ = csv.DictWriter(f, header)
            writ.writeheader()
            writ.writerows(self.app_data)

    def run(self):
        self.put_url()
        # Start 2 threads and keep them in a list so they can be joined
        t_list = []
        for _ in range(2):
            t = threading.Thread(target=self.parse_url)
            t_list.append(t)
            t.start()
        # Wait for all threads; without join() run() would return immediately
        for t in t_list:
            t.join()
        self.save_data()


if __name__ == '__main__':
    start_time = time.perf_counter()
    x = XiaomiShop()
    x.run()
    end_time = time.perf_counter()
    print('Run time: %.3f s' % (end_time - start_time))
```

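Subtracting the start time from the end time gives the program's run time. In the author's runs, the sequential version took about 0.69 s and the multithreaded version about 0.019 s. Treat the second figure with caution: if `run()` starts the threads without calling `join()` on them, the timer stops as soon as the threads have been launched, before any page has finished downloading, and the measured time says almost nothing about the scraping itself. With the threads joined before the end time is read, the multithreaded version should still come out ahead, because the requests for different pages overlap, but by a much smaller margin than these two numbers suggest.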
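The effect of `join()` on the measurement can be reproduced without any network access. In this sketch each "request" is simulated with `time.sleep(0.2)`, an assumption standing in for one page's network round trip; `fake_request`, `timed` and `sequential` are hypothetical helper names.

```python
import threading
import time


def fake_request():
    time.sleep(0.2)  # stand-in for one page's network round trip


def timed(join):
    """Start 3 threads; optionally join them before stopping the timer."""
    start = time.perf_counter()
    threads = [threading.Thread(target=fake_request) for _ in range(3)]
    for t in threads:
        t.start()
    if join:
        for t in threads:
            t.join()
    elapsed = time.perf_counter() - start
    # Clean up: make sure no thread is still running before returning
    for t in threads:
        t.join()
    return elapsed


def sequential():
    """Run the 3 simulated requests one after another."""
    start = time.perf_counter()
    for _ in range(3):
        fake_request()
    return time.perf_counter() - start


if __name__ == '__main__':
    print(f'sequential:         {sequential():.3f} s')
    print(f'threads, no join(): {timed(join=False):.3f} s')
    print(f'threads, join():    {timed(join=True):.3f} s')
```

The sequential run takes at least 0.6 s and the joined threaded run at least 0.2 s, while the unjoined run only measures how long it takes to start the threads, which is the same kind of gap as between 0.69 s and 0.019 s above.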