PyTorch Framework Study Notes

I. Linear Regression, Logistic Regression, Softmax Classifier

1. Subclassing and building a model

    import torch

    # Training data, shape (3, 1).
    # torch.autograd.Variable is deprecated; plain tensors are used directly.
    x_data = torch.tensor([[1.0], [2.0], [3.0]])
    y_data = torch.tensor([[2.0], [4.0], [6.0]])


    # Model class
    class Model(torch.nn.Module):
        def __init__(self):  # constructor
            """Instantiate the single nn.Linear module used by the model."""
            super().__init__()
            self.linear = torch.nn.Linear(1, 1)  # one input feature, one output feature

        def forward(self, x):
            """
            Accept a tensor of input data and return a tensor of output data.
            We can use modules defined in the constructor as well as arbitrary
            tensor operations.
            """
            y_pred = self.linear(x)
            return y_pred


    # Our model
    model = Model()

    # Construct the loss function and the optimizer.
    # model.parameters() hands the learnable parameters of the nn.Linear
    # module (a member of the model) to the SGD constructor.
    criterion = torch.nn.MSELoss(reduction='sum')  # replaces the deprecated size_average=False
    optimizer = torch.optim.SGD(model.parameters(), lr=0.01)

    # Training loop
    for epoch in range(500):
        # Forward pass: compute predicted y by passing x to the model
        y_pred = model(x_data)

        # Compute and print the loss
        loss = criterion(y_pred, y_data)
        print(epoch, loss.item())

        # Zero the gradients, run the backward pass, and update the weights
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

    # After training: test
    hour_val = torch.tensor([[4.0]])
    # Call the model itself rather than model.forward() so that hooks still run
    print("predict (after training)", 4, model(hour_val).item())

II. Building Models with nn.Module

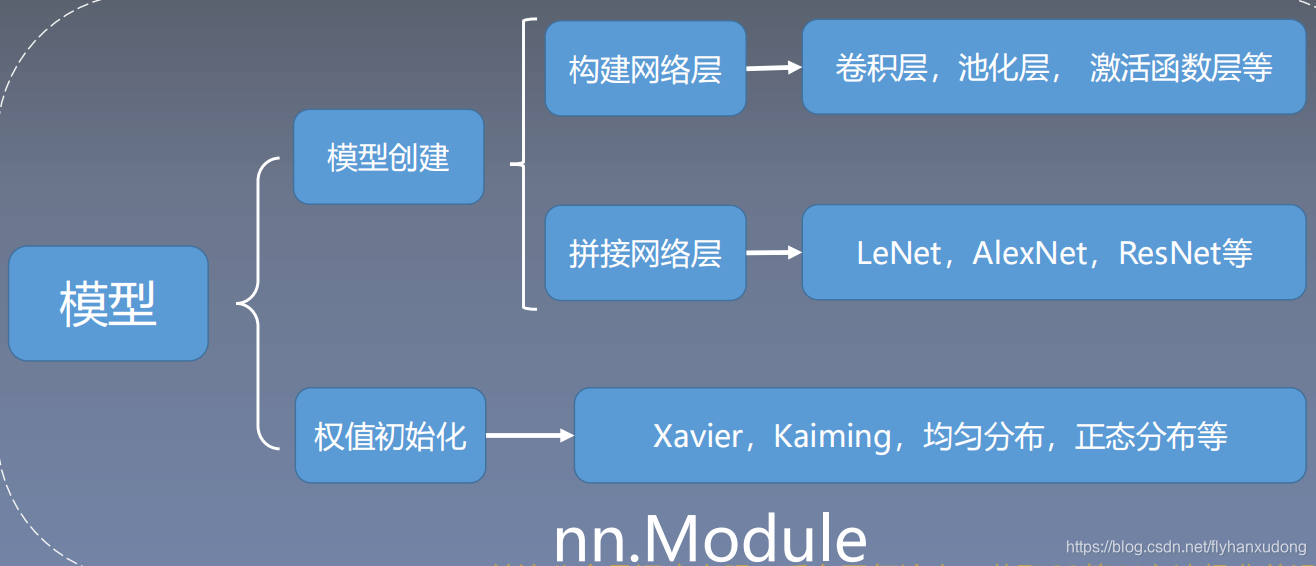

1. Building a model: nn.Module

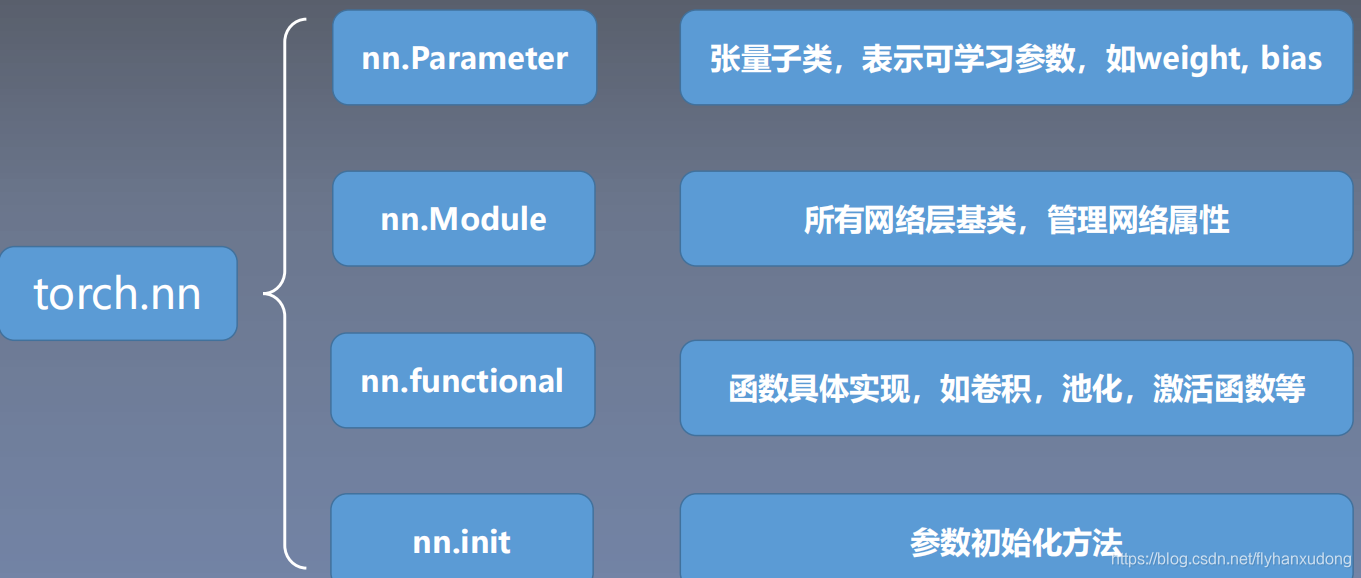

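1.1 nn.Module

- parameters: stores and manages nn.Parameter objects
- modules: stores and manages nn.Module objects (submodules)
- buffers: stores and manages buffer attributes, such as running_mean in a BN layer
- ***_hooks: stores and manages hook functions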

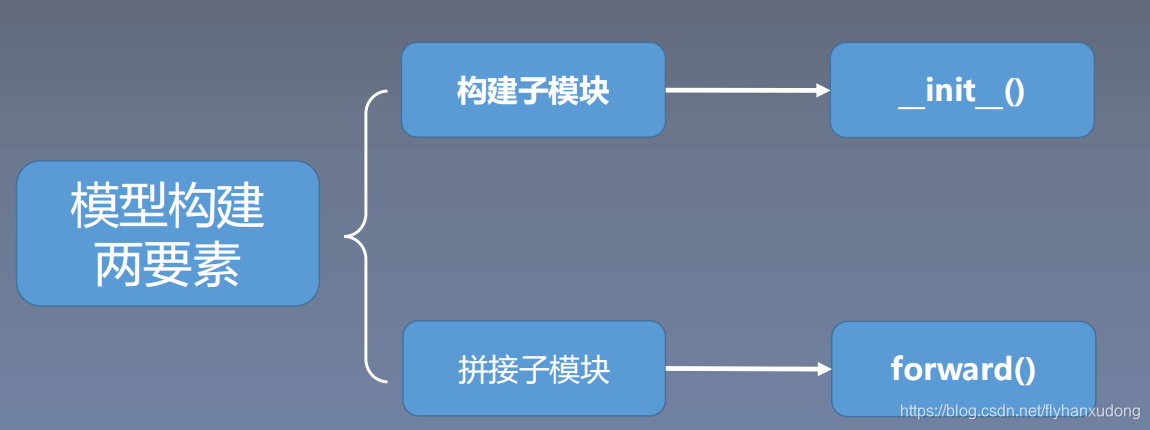

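1.2 nn.Module summary

- A module can contain multiple submodules
- A module represents one operation and must implement forward()
- Each module manages its attributes through 8 dictionaries (the count in the PyTorch version these notes are based on; newer releases add further hook dictionaries)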
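The points above can be checked on a small module. The sketch below is only an illustration: `TinyNet` is a hypothetical class that does not appear in the notes, and the public `named_*` iterators are used in place of the private dictionaries.

```python
import torch
import torch.nn as nn


class TinyNet(nn.Module):  # hypothetical example module
    def __init__(self):
        super().__init__()
        self.fc = nn.Linear(4, 2)    # submodule holding weight and bias parameters
        self.bn = nn.BatchNorm1d(2)  # submodule holding parameters and buffers

    def forward(self, x):
        return self.bn(self.fc(x))


net = TinyNet()

# Submodules registered on the module
child_names = [name for name, _ in net.named_children()]
# Learnable nn.Parameter objects, collected recursively
param_names = [name for name, _ in net.named_parameters()]
# Non-learnable state such as the BN running statistics
buffer_names = [name for name, _ in net.named_buffers()]

print(child_names)
print(param_names)
print(buffer_names)
```

Assigning an `nn.Module` or `nn.Parameter` as an attribute is what registers it, which is why `super().__init__()` has to run before any such assignment.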

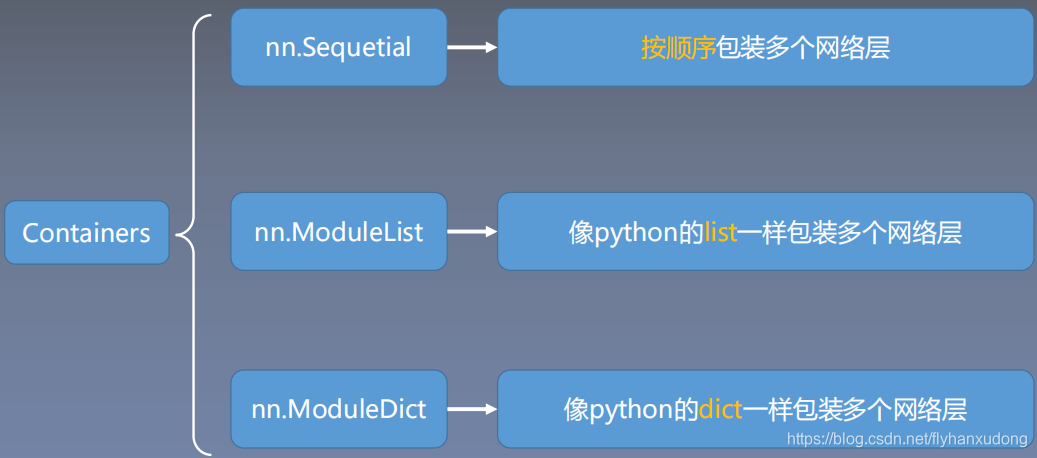

2. Model containers (Containers)

2.1 nn.Sequential

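nn.Sequential is a container of nn.Module objects that wraps a group of network layers in a fixed order.

- Ordered: the layers are built and executed strictly in the order given
- Built-in forward(): its own forward() runs the forward pass of each layer in turn with a for loop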
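A minimal sketch of the behaviour described above, with layer sizes chosen arbitrarily for illustration. It compares the container's built-in forward pass with an explicit loop over its layers.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Layers run in exactly the order they are listed
block = nn.Sequential(
    nn.Linear(8, 16),
    nn.ReLU(),
    nn.Linear(16, 4),
)

x = torch.randn(2, 8)
out = block(x)  # built-in forward()

# The same computation written as the explicit loop
manual = x
for layer in block:
    manual = layer(manual)

print(out.shape, torch.equal(out, manual))
```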

2.2 nn.ModuleList

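nn.ModuleList is a container of nn.Module objects that wraps a group of network layers and lets you call them by iteration.

Main methods:

- append(): adds a layer to the end of the ModuleList
- extend(): appends the layers of another ModuleList (or any iterable of modules)
- insert(): inserts a layer at a given position in the ModuleList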
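The three methods can be tried on a small stack of layers. This is a sketch only: `StackedLinear` and its sizes are hypothetical. Unlike nn.Sequential, a ModuleList has no forward() of its own, so the loop is written by hand.

```python
import torch
import torch.nn as nn


class StackedLinear(nn.Module):  # hypothetical example module
    def __init__(self, width=10, depth=3):
        super().__init__()
        # A plain Python list would not register the layers' parameters
        self.layers = nn.ModuleList([nn.Linear(width, width) for _ in range(depth)])

    def forward(self, x):
        for layer in self.layers:
            x = torch.relu(layer(x))
        return x


net = StackedLinear()
net.layers.append(nn.Linear(10, 10))                      # add one layer at the end
net.layers.extend([nn.Linear(10, 10), nn.Linear(10, 5)])  # add several layers
net.layers.insert(0, nn.Linear(10, 10))                   # add a layer at the front

out = net(torch.randn(4, 10))
print(len(net.layers), out.shape)
```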

2.3 nn.ModuleDict

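nn.ModuleDict is a container of nn.Module objects that wraps a group of network layers and lets you call them by key.

Main methods:

- clear(): empties the ModuleDict
- items(): returns an iterable of key-value pairs
- keys(): returns the keys of the dictionary
- values(): returns the values of the dictionary
- pop(): removes a key from the dictionary and returns its module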
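A sketch of selecting layers by key at call time. `ChoiceNet`, its keys, and its layer sizes are hypothetical names picked for the example.

```python
import torch
import torch.nn as nn


class ChoiceNet(nn.Module):  # hypothetical example module
    def __init__(self):
        super().__init__()
        self.choices = nn.ModuleDict({
            "conv": nn.Conv2d(3, 8, kernel_size=3, padding=1),
            "pool": nn.MaxPool2d(2),
        })
        self.activations = nn.ModuleDict({"relu": nn.ReLU(), "tanh": nn.Tanh()})

    def forward(self, x, choice, act):
        # Pick the layer and the activation by key
        return self.activations[act](self.choices[choice](x))


net = ChoiceNet()
x = torch.randn(1, 3, 8, 8)
conv_out = net(x, "conv", "relu")
pool_out = net(x, "pool", "tanh")

keys = list(net.activations.keys())
removed = net.activations.pop("tanh")  # returns the module stored under the key
print(conv_out.shape, pool_out.shape, keys, removed)
```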

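2.4 Summary of containers

- nn.Sequential: ordered; layers execute strictly in sequence; commonly used to build blocks
- nn.ModuleList: iterable; commonly used for networks with many repeated layers, built with a for loop
- nn.ModuleDict: indexed by key; commonly used when layers are selectable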

III. Weight Initialization, Loss Functions, Optimizers

1. Weight initialization

1.1 Vanishing and exploding gradients

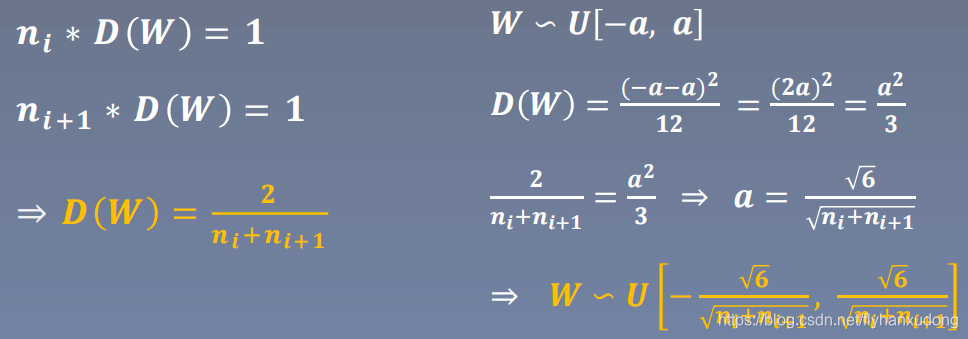

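1.2 Xavier initialization

Variance consistency: keep the scale of the data within a suitable range, usually a variance of 1. Intended activation functions: saturating functions such as Sigmoid and Tanh.

    # The activation function is Tanh. This fragment sits inside a model's
    # initialization method: m is the layer being initialized and
    # self.neural_num is its width (fan_in and fan_out are both neural_num).
    a = np.sqrt(6 / (self.neural_num + self.neural_num))
    tanh_gain = nn.init.calculate_gain('tanh')
    a *= tanh_gain
    nn.init.uniform_(m.weight.data, -a, a)

    # PyTorch's built-in Xavier initialization; it samples from the same
    # distribution as the manual computation above.
    nn.init.xavier_uniform_(m.weight.data, gain=tanh_gain)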
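The equivalence claimed above can be checked numerically. The sketch below is self-contained and assumes a square layer of width 256, a value chosen only for the example. It compares the weights produced by `xavier_uniform_` with the hand-computed bound a = gain * sqrt(6 / (fan_in + fan_out)).

```python
import math
import torch
import torch.nn as nn

torch.manual_seed(0)

fan_in, fan_out = 256, 256  # assumed layer width, for illustration only
layer = nn.Linear(fan_in, fan_out)

gain = nn.init.calculate_gain('tanh')
nn.init.xavier_uniform_(layer.weight, gain=gain)

# Hand-computed bound of the uniform distribution U(-a, a)
bound = gain * math.sqrt(6.0 / (fan_in + fan_out))
max_abs = layer.weight.abs().max().item()

# A uniform distribution on (-a, a) has standard deviation a / sqrt(3)
expected_std = bound / math.sqrt(3.0)
actual_std = layer.weight.std().item()

print(bound, max_abs)
print(expected_std, actual_std)
```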

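1.3 Kaiming initialization

Variance consistency: keep the scale of the data within a suitable range, usually a variance of 1. Intended activation functions: ReLU and its variants.

    # Manual version:
    # nn.init.normal_(m.weight.data, std=np.sqrt(2 / self.neural_num))
    nn.init.kaiming_normal_(m.weight.data)  # PyTorch's built-in, equivalent to the statement above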
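As a quick numerical check of that equivalence, the sketch below initializes one layer with `kaiming_normal_` at its default arguments and compares the sample standard deviation with sqrt(2 / fan_in). The width of 256 is an assumption made only for the example.

```python
import math
import torch
import torch.nn as nn

torch.manual_seed(0)

neural_num = 256  # assumed layer width, for illustration only
layer = nn.Linear(neural_num, neural_num, bias=False)

# Defaults: a=0, mode='fan_in', so std = sqrt(2 / fan_in)
nn.init.kaiming_normal_(layer.weight)

expected_std = math.sqrt(2.0 / neural_num)
actual_std = layer.weight.std().item()
print(expected_std, actual_std)
```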

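1.4 nn.init.calculate_gain

    nn.init.calculate_gain(nonlinearity, param=None)

Main purpose: compute the factor by which an activation function changes the scale of the data (standard deviation of the input divided by standard deviation of the output).

Main parameters:

- nonlinearity: name of the activation function
- param: parameter of the activation function, e.g. negative_slope for Leaky ReLU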

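    import torch
    import torch.nn as nn

    # Empirical gain: ratio of the input std to the output std of tanh
    x = torch.randn(10000)
    out = torch.tanh(x)
    gain = x.std() / out.std()
    print('gain:{}'.format(gain))

    # Value tabulated by PyTorch for tanh (5/3)
    tanh_gain = nn.init.calculate_gain('tanh')
    print('tanh_gain in PyTorch:', tanh_gain)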
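For reference, here is a short sketch of the values `calculate_gain` returns for a few common activations, including the `param` argument for Leaky ReLU. The slope 0.2 is an arbitrary choice for the example.

```python
import math
import torch.nn as nn

gains = {
    "linear": nn.init.calculate_gain("linear"),                # 1
    "sigmoid": nn.init.calculate_gain("sigmoid"),              # 1
    "tanh": nn.init.calculate_gain("tanh"),                    # 5 / 3
    "relu": nn.init.calculate_gain("relu"),                    # sqrt(2)
    # param is the negative slope: sqrt(2 / (1 + slope ** 2))
    "leaky_relu": nn.init.calculate_gain("leaky_relu", 0.2),
}

for name, value in gains.items():
    print(name, value)
```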

2. Loss functions

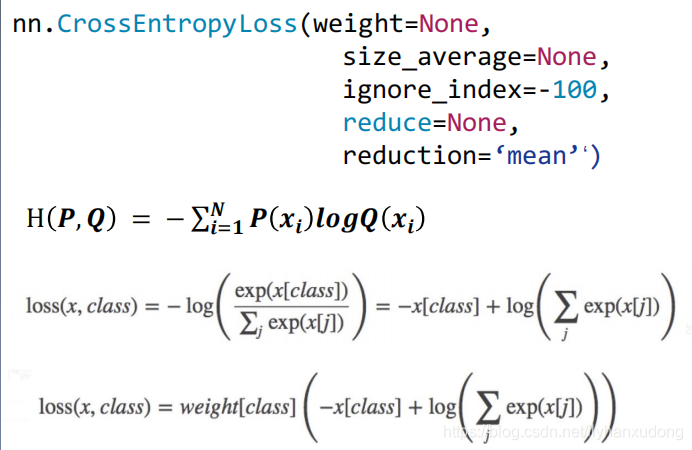

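2.1 nn.CrossEntropyLoss

Function: combines nn.LogSoftmax() and nn.NLLLoss() to compute the cross entropy.

Main parameters:

- weight: per-class weights applied to the loss
- ignore_index: a class index to ignore
- reduction: how the result is reduced; one of none/sum/mean
  - none: computed element by element, one loss per sample
  - sum: sum over all elements, returns a scalar
  - mean: weighted average, returns a scalar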
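The two claims above (LogSoftmax followed by NLLLoss, and `mean` being a weighted average) can be verified directly. The sketch below is a small self-contained check with hypothetical variable names; it uses the same three samples and the same class weights [1, 2] as the notes.

```python
import torch
import torch.nn as nn

logits = torch.tensor([[1.0, 2.0], [1.0, 3.0], [1.0, 3.0]])
labels = torch.tensor([0, 1, 1])

# Cross entropy in one call
ce = nn.CrossEntropyLoss(reduction="none")(logits, labels)

# The same value as LogSoftmax followed by NLLLoss
log_probs = nn.LogSoftmax(dim=1)(logits)
nll = nn.NLLLoss(reduction="none")(log_probs, labels)

# The same value by hand: loss = -x[class] + log(sum_j exp(x[j]))
manual = torch.logsumexp(logits, dim=1) - logits[torch.arange(3), labels]

# With class weights, 'mean' divides by the sum of the per-sample weights
weights = torch.tensor([1.0, 2.0])
weighted_mean = nn.CrossEntropyLoss(weight=weights, reduction="mean")(logits, labels)
per_sample_w = weights[labels]
manual_mean = (per_sample_w * ce).sum() / per_sample_w.sum()

print(ce, nll, manual)
print(weighted_mean, manual_mean)
```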

    import torch
    import torch.nn as nn
    import torch.nn.functional as F
    import numpy as np

    # Fake data: 3 samples, 2 classes
    inputs = torch.tensor([[1, 2], [1, 3], [1, 3]], dtype=torch.float)
    target = torch.tensor([0, 1, 1], dtype=torch.long)

    # ----------------------------------- CrossEntropy loss: reduction -----------------------------------
    flag = 0  # set flag = 1 to run this section
    if flag:
        # Define the loss functions
        loss_f_none = nn.CrossEntropyLoss(weight=None, reduction='none')
        loss_f_sum = nn.CrossEntropyLoss(weight=None, reduction='sum')
        loss_f_mean = nn.CrossEntropyLoss(weight=None, reduction='mean')  # the default

        # Forward
        loss_none = loss_f_none(inputs, target)
        loss_sum = loss_f_sum(inputs, target)
        loss_mean = loss_f_mean(inputs, target)

        # View
        print("Cross Entropy Loss:\n ", loss_none, loss_sum, loss_mean)

    # --------------------------------- compute by hand
    flag = 0  # set flag = 1 to run this section
    if flag:
        idx = 0
        input_1 = inputs.detach().numpy()[idx]  # [1, 2]
        target_1 = target.numpy()[idx]          # 0

        # First term: the logit of the target class
        x_class = input_1[target_1]

        # Second term: log of the sum of exponentials over all classes
        sigma_exp_x = np.sum(np.exp(input_1))
        log_sigma_exp_x = np.log(sigma_exp_x)

        # Resulting loss
        loss_1 = -x_class + log_sigma_exp_x
        print("loss of the first sample: ", loss_1)

    # ----------------------------------- weight -----------------------------------
    flag = 0  # set flag = 1 to run this section
    if flag:
        # Define the loss functions with per-class weights
        weights = torch.tensor([1, 2], dtype=torch.float)
        # weights = torch.tensor([0.7, 0.3], dtype=torch.float)

        loss_f_none_w = nn.CrossEntropyLoss(weight=weights, reduction='none')
        loss_f_sum = nn.CrossEntropyLoss(weight=weights, reduction='sum')
        # 'mean' divides by the sum of the per-sample weights (1 + 2 + 2 = 5),
        # not by the number of samples
        loss_f_mean = nn.CrossEntropyLoss(weight=weights, reduction='mean')

        # Forward
        loss_none_w = loss_f_none_w(inputs, target)
        loss_sum = loss_f_sum(inputs, target)
        loss_mean = loss_f_mean(inputs, target)

        # View
        print("\nweights: ", weights)
        print(loss_none_w, loss_sum, loss_mean)

Output of loss_function_1.py with the reduction and weight sections enabled (flag = 1):

    Cross Entropy Loss:
      tensor([1.3133, 0.1269, 0.1269]) tensor(1.5671) tensor(0.5224)

    weights:  tensor([1., 2.])
    tensor([1.3133, 0.2539, 0.2539]) tensor(1.8210) tensor(0.3642)

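3. Optimizers (optimizer)

3.1 Concept

A PyTorch optimizer manages and updates the values of the model's learnable parameters so that the model's output moves closer to the ground-truth labels.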

    class Optimizer(object):
        def __init__(self, params, defaults):
            self.defaults = defaults
            self.state = defaultdict(dict)
            self.param_groups = []
            ......
            param_groups = [{'params': param_groups}]

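Basic attributes

- defaults: the optimizer's hyperparameters (learning rate, etc.)
- state: per-parameter cache, such as the momentum buffer
- param_groups: the parameter groups being managed
- _step_count: number of updates performed so far, used by learning-rate scheduling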
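These attributes can be inspected on a live optimizer. The sketch below is a minimal illustration with hypothetical variable names; it uses SGD with momentum so that `state` has something to cache after the first step.

```python
import torch
import torch.optim as optim

w = torch.ones(2, 2, requires_grad=True)
w.grad = torch.ones(2, 2)  # gradient set by hand, for illustration only

opt = optim.SGD([w], lr=0.1, momentum=0.9)

# defaults: hyperparameters shared by all parameter groups
print(opt.defaults["lr"], opt.defaults["momentum"])

# param_groups: a list of dicts; each holds parameters plus its own hyperparameters
same_object = opt.param_groups[0]["params"][0] is w
print(len(opt.param_groups), same_object)

# state: empty until the first step, then holds the momentum buffer
state_before = len(opt.state)
opt.step()
has_buffer = "momentum_buffer" in opt.state[w]
print(state_before, has_buffer, w)
```

The optimizer stores references to the parameter tensors rather than copies, so `step()` updates `w` in place.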

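Basic methods

- zero_grad(): clears the gradients of all managed parameters (PyTorch does not reset tensor gradients automatically; they accumulate)
- step(): performs one update step
- add_param_group(): adds a parameter group
- state_dict(): returns a dictionary with the optimizer's current state
- load_state_dict(): loads a state dictionary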

    # -*- coding: utf-8 -*-
    import os
    import torch
    import torch.optim as optim

    BASE_DIR = os.path.dirname(os.path.abspath(__file__))


    def set_seed(seed=1):
        # Stand-in for the course helper tools.common_tools.set_seed, which is
        # local to the author's project; assumed here to seed the torch RNG.
        torch.manual_seed(seed)


    set_seed(1)  # set the random seed

    weight = torch.randn((2, 2), requires_grad=True)
    weight.grad = torch.ones((2, 2))

    optimizer = optim.SGD([weight], lr=0.1)

    # ----------------------------------- step -----------------------------------
    flag = 0  # set flag = 1 to run this section
    if flag:
        print("weight before step:{}".format(weight.data))
        optimizer.step()  # try lr=1 and lr=0.1 and compare the results
        print("weight after step:{}".format(weight.data))

    # ----------------------------------- zero_grad -----------------------------------
    flag = 0  # set flag = 1 to run this section
    if flag:
        print("weight before step:{}".format(weight.data))
        optimizer.step()  # try lr=1 and lr=0.1 and compare the results
        print("weight after step:{}".format(weight.data))

        # The optimizer holds a reference to the same tensor, not a copy
        print("weight in optimizer:{}\nweight in weight:{}\n".format(
            id(optimizer.param_groups[0]['params'][0]), id(weight)))

        print("weight.grad is {}\n".format(weight.grad))
        optimizer.zero_grad()
        # Recent PyTorch versions reset gradients to None by default (set_to_none=True)
        print("after optimizer.zero_grad(), weight.grad is\n{}".format(weight.grad))

    # ----------------------------------- add_param_group -----------------------------------
    flag = 1  # set flag = 0 to skip this section
    if flag:
        print("optimizer.param_groups is\n{}".format(optimizer.param_groups))

        w2 = torch.randn((3, 3), requires_grad=True)

        # Add a second parameter group with its own learning rate
        optimizer.add_param_group({"params": w2, 'lr': 0.0001})

        print("optimizer.param_groups is\n{}".format(optimizer.param_groups))

    # ----------------------------------- state_dict -----------------------------------
    flag = 0  # set flag = 1 to run this section
    if flag:
        optimizer = optim.SGD([weight], lr=0.1, momentum=0.9)
        opt_state_dict = optimizer.state_dict()

        print("state_dict before step:\n", opt_state_dict)

        for i in range(10):
            optimizer.step()

        print("state_dict after step:\n", optimizer.state_dict())

        # Save the optimizer state so that training can be resumed later
        torch.save(optimizer.state_dict(), os.path.join(BASE_DIR, "optimizer_state_dict.pkl"))

    # ----------------------------------- load state_dict -----------------------------------
    flag = 0  # set flag = 1 to run this section (requires the file saved above)
    if flag:
        optimizer = optim.SGD([weight], lr=0.1, momentum=0.9)
        state_dict = torch.load(os.path.join(BASE_DIR, "optimizer_state_dict.pkl"))

        print("state_dict before load state:\n", optimizer.state_dict())
        optimizer.load_state_dict(state_dict)
        print("state_dict after load state:\n", optimizer.state_dict())

Using TensorBoard

When the current folder does not contain the run files: tensorboard --logdir=./runs

When the current folder contains the run files: tensorboard --logdir=./