
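Close reading of the papers

Background

Encoder-decoder structure (encoder-decoder framework)

Encoder:

Ordinary convolution layers and downsampling layers progressively shrink the feature maps.

Decoder:

Ordinary convolution layers, upsampling layers, and fusion layers gradually restore the spatial dimensions, so that the output has the same size as the input with as little information loss as possible.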

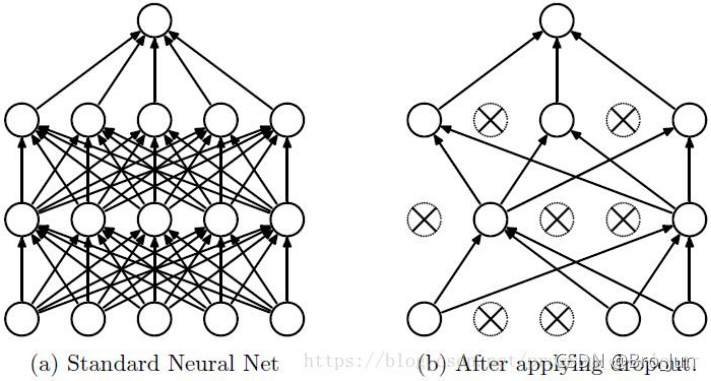
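
The encoder-decoder idea can be checked on a toy network. The sketch below is only an illustration: `TinyEncoderDecoder`, its channel counts, and the nearest-neighbor upsampling are assumptions made for this example and are not taken from SegNet or DeconvNet.

```python
import torch
from torch import nn


class TinyEncoderDecoder(nn.Module):
    """Hypothetical toy model: one downsampling stage, one upsampling stage."""

    def __init__(self, in_channels=3, num_classes=12):
        super().__init__()
        # Encoder: convolution followed by 2x downsampling
        self.encoder = nn.Sequential(
            nn.Conv2d(in_channels, 16, 3, padding=1),
            nn.ReLU(inplace=True),
            nn.MaxPool2d(2, 2),
        )
        # Decoder: 2x upsampling followed by convolution
        self.decoder = nn.Sequential(
            nn.Upsample(scale_factor=2, mode="nearest"),
            nn.Conv2d(16, num_classes, 3, padding=1),
        )

    def forward(self, x):
        return self.decoder(self.encoder(x))


model = TinyEncoderDecoder()
x = torch.randn(1, 3, 32, 48)
features = model.encoder(x)   # spatial size halved
logits = model(x)             # spatial size restored
print(features.shape)  # torch.Size([1, 16, 16, 24])
print(logits.shape)    # torch.Size([1, 12, 32, 48])
```

The output has one channel per class and the same height and width as the input, which is what a per-pixel segmentation head needs.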

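Dropout

A complex feed-forward neural network trained on a small dataset overfits easily. Dropout reduces overfitting by randomly ignoring (zeroing) a fraction of the feature detectors, i.e. hidden units, at each training step.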
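
A minimal sketch of this behavior with `nn.Dropout`, assuming a drop probability of 0.5 and an all-ones input chosen only to make the effect visible:

```python
import torch
from torch import nn

torch.manual_seed(0)
drop = nn.Dropout(p=0.5)
x = torch.ones(1, 1000)

# Training mode: units are zeroed at random, survivors are scaled by 1 / (1 - p) = 2
drop.train()
y_train = drop(x)
train_values = set(y_train.unique().tolist())

# Evaluation mode: dropout is a no-op
drop.eval()
y_eval = drop(x)
```

Because the layer behaves differently in the two modes, forgetting to call `model.eval()` before inference is a common source of noisy predictions.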

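Unpooling

The indices of the maxima in every max-pooling layer of the encoder are stored and later used by the decoder for unpooling.

- This helps preserve high-frequency information.

- When a low-resolution feature map is unpooled, neighboring information is ignored.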

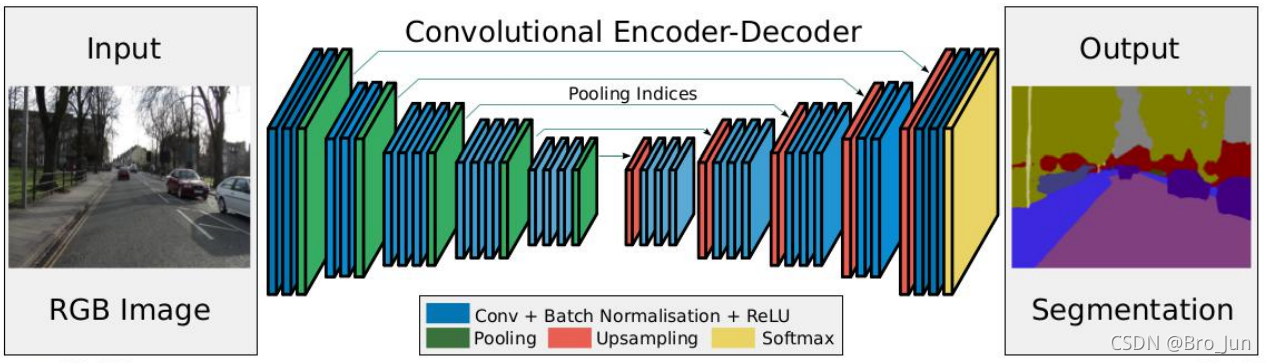
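
The index mechanism can be seen on a single 4 x 4 feature map. This is a small sketch with hand-picked values; `nn.MaxPool2d(..., return_indices=True)` and `nn.MaxUnpool2d` are the same PyTorch layers used in the implementation later in this post.

```python
import torch
from torch import nn

pool = nn.MaxPool2d(2, 2, return_indices=True)
unpool = nn.MaxUnpool2d(2, 2)

x = torch.tensor([[[[1., 2., 5., 6.],
                    [3., 4., 7., 8.],
                    [9., 10., 13., 14.],
                    [11., 12., 15., 16.]]]])

pooled, indices = pool(x)
# pooled  -> [[4, 8], [12, 16]]
# indices -> [[5, 7], [13, 15]]  (flat positions of the maxima in the 4 x 4 map)

restored = unpool(pooled, indices, output_size=x.size())
# restored -> [[0, 0, 0, 0],
#              [0, 4, 0, 8],
#              [0, 0, 0, 0],
#              [0, 12, 0, 16]]
```

Each maximum returns to its original position and every other position is filled with zero, which is why the unpooled map keeps sharp locations but carries no information about the neighbors.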

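SegNet

Contributions of the paper

- SegNet's core trainable engine consists of an encoder network and a symmetric decoder network, i.e. an encoder-decoder structure.

- Each decoder stage unpools directly with the pooling indices saved by the max pooling of its corresponding encoder stage.

Architecture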
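
The two points above can be sketched as one encoder stage paired with one decoder stage. The layer sizes here are arbitrary assumptions for illustration; only the pairing of pooling indices with the matching unpooling layer reflects the idea described above.

```python
import torch
from torch import nn

torch.manual_seed(0)
enc_conv = nn.Conv2d(3, 8, 3, padding=1)             # encoder convolution
pool = nn.MaxPool2d(2, 2, return_indices=True)        # encoder pooling, keeps indices
unpool = nn.MaxUnpool2d(2, 2)                         # decoder unpooling, reuses indices
dec_conv = nn.Conv2d(8, 8, 3, padding=1)              # decoder convolution

x = torch.randn(1, 3, 16, 16)
feat = enc_conv(x)
pooled, idx = pool(feat)

# The unpooled map is sparse: at most one non-zero value per 2 x 2 window
sparse = unpool(pooled, idx, output_size=feat.size())
nonzero_fraction = (sparse != 0).float().mean().item()

# The following convolution spreads those values into a dense feature map
dense = dec_conv(sparse)
```

No upsampling weights are learned in the unpooling step itself; the trainable part of the decoder is the convolution that densifies the sparse map.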

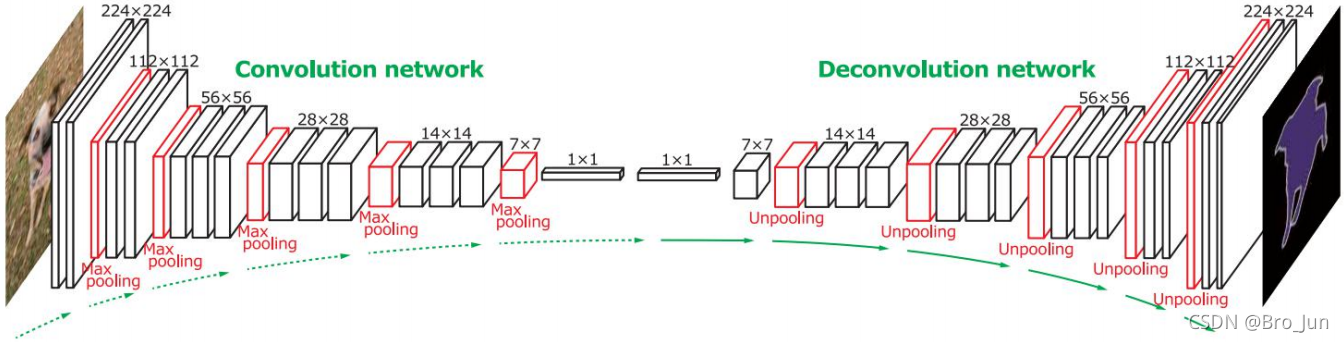

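DeconvNet

Contributions of the paper

- The encoder uses the convolutional layers of VGG-16.

- The decoder uses deconvolution (transposed convolution) and unpooling.

- Special technique: object proposals are fed into the trained network, and the segmentation of the whole image is assembled from the per-proposal results. This addresses the segmentation problems caused by objects that are too large or too small.

Architecture

DeconvNet differs from SegNet mainly in two ways:

- The decoder combines unpooling with deconvolution.

- Fully connected layers are inserted between the encoder and the decoder.

Implementation

Apart from the model, everything else is the same as for FCN.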
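
The text above does not say how the per-proposal results are combined. The sketch below assumes a pixel-wise maximum over the proposal score maps, pasted into a zero-initialized full-image map; `aggregate_proposals`, the box format, and the scores are all hypothetical and serve only to show the idea.

```python
import torch


def aggregate_proposals(score_maps, boxes, image_size, num_classes):
    """Combine per-proposal score maps into one full-image score map.

    score_maps: list of (C, h, w) tensors, one per proposal (hypothetical format)
    boxes:      list of (top, left, h, w) tuples giving each proposal's location
    """
    height, width = image_size
    full = torch.zeros(num_classes, height, width)
    for scores, (top, left, h, w) in zip(score_maps, boxes):
        region = full[:, top:top + h, left:left + w]
        # Assumed rule: keep the larger score wherever proposals overlap
        full[:, top:top + h, left:left + w] = torch.maximum(region, scores)
    return full


# Two overlapping 2 x 2 proposals on a 3 x 3 image with 2 classes
a = torch.tensor([0.6, 0.4]).view(2, 1, 1).repeat(1, 2, 2)  # favors class 0
b = torch.tensor([0.2, 0.9]).view(2, 1, 1).repeat(1, 2, 2)  # favors class 1
full = aggregate_proposals([a, b], [(0, 0, 2, 2), (1, 1, 2, 2)], (3, 3), 2)
labels = full.argmax(dim=0)
```

In the overlap at pixel (1, 1) the combined scores are 0.6 for class 0 and 0.9 for class 1, so the second proposal decides the label there.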

SegNet.py

Imports

import torch
import torchvision.models as models
from torch import nn

Definition of the convolutional part of the decoder

def decoder(input_channel, output_channel, num=3):
    """Stack of `num` 3x3 convolutions; only the last one changes the channel count."""
    if num == 3:
        decoder_body = nn.Sequential(
            nn.Conv2d(input_channel, input_channel, 3, padding=1),
            nn.Conv2d(input_channel, input_channel, 3, padding=1),
            nn.Conv2d(input_channel, output_channel, 3, padding=1))
    elif num == 2:
        decoder_body = nn.Sequential(
            nn.Conv2d(input_channel, input_channel, 3, padding=1),
            nn.Conv2d(input_channel, output_channel, 3, padding=1))
    else:
        # Only 2-layer and 3-layer blocks are defined
        raise ValueError("num must be 2 or 3")
    return decoder_body
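
The decoder block above stacks convolutions with nothing in between, and consecutive convolutions without a non-linearity compose into a single linear map. One possible variant, offered here only as an assumption and not as the post's code, follows each convolution with batch normalization and ReLU. The name `decoder_bn_relu` is hypothetical.

```python
import torch
from torch import nn


def decoder_bn_relu(input_channel, output_channel, num=3):
    """Hypothetical variant of decoder(): Conv -> BatchNorm -> ReLU per layer."""
    layers = []
    for i in range(num):
        # Only the last convolution changes the channel count
        out_ch = output_channel if i == num - 1 else input_channel
        layers += [
            nn.Conv2d(input_channel, out_ch, 3, padding=1),
            nn.BatchNorm2d(out_ch),
            nn.ReLU(inplace=True),
        ]
    return nn.Sequential(*layers)


block = decoder_bn_relu(8, 4)
y = block(torch.randn(2, 8, 22, 30))
print(y.shape)  # torch.Size([2, 4, 22, 30])
```

For the very last stage, whose output is the per-class score map, the trailing normalization and ReLU would normally be left out so that scores can be negative.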

Building the network

# Note: newer torchvision releases deprecate `pretrained=True` in favor of the `weights` argument.
vgg16_pretrained = models.vgg16(pretrained=True)


class VGG16_seg(torch.nn.Module):
    def __init__(self):
        super(VGG16_seg, self).__init__()
        pool_list = [4, 9, 16, 23, 30]  # positions of the max-pooling layers in vgg16.features
        for index in pool_list:
            vgg16_pretrained.features[index].return_indices = True  # make the pooling layer return indices

        self.encoder1 = vgg16_pretrained.features[:4]
        self.pool1 = vgg16_pretrained.features[4]
        self.encoder2 = vgg16_pretrained.features[5:9]
        self.pool2 = vgg16_pretrained.features[9]
        self.encoder3 = vgg16_pretrained.features[10:16]
        self.pool3 = vgg16_pretrained.features[16]
        self.encoder4 = vgg16_pretrained.features[17:23]
        self.pool4 = vgg16_pretrained.features[23]
        self.encoder5 = vgg16_pretrained.features[24:30]
        self.pool5 = vgg16_pretrained.features[30]

        self.decoder5 = decoder(512, 512)
        self.unpool5 = nn.MaxUnpool2d(2, 2)
        self.decoder4 = decoder(512, 256)
        self.unpool4 = nn.MaxUnpool2d(2, 2)
        self.decoder3 = decoder(256, 128)
        self.unpool3 = nn.MaxUnpool2d(2, 2)
        self.decoder2 = decoder(128, 64, 2)
        self.unpool2 = nn.MaxUnpool2d(2, 2)
        self.decoder1 = decoder(64, 12, 2)  # 12 output classes
        self.unpool1 = nn.MaxUnpool2d(2, 2)

    def forward(self, x):  # 3, 352, 480
        encoder1 = self.encoder1(x)  # 64, 352, 480
        output_size1 = encoder1.size()  # 64, 352, 480
        pool1, indices1 = self.pool1(encoder1)  # 64, 176, 240

        encoder2 = self.encoder2(pool1)  # 128, 176, 240
        output_size2 = encoder2.size()  # 128, 176, 240
        pool2, indices2 = self.pool2(encoder2)  # 128, 88, 120

        encoder3 = self.encoder3(pool2)  # 256, 88, 120
        output_size3 = encoder3.size()  # 256, 88, 120
        pool3, indices3 = self.pool3(encoder3)  # 256, 44, 60

        encoder4 = self.encoder4(pool3)  # 512, 44, 60
        output_size4 = encoder4.size()  # 512, 44, 60
        pool4, indices4 = self.pool4(encoder4)  # 512, 22, 30

        encoder5 = self.encoder5(pool4)  # 512, 22, 30
        output_size5 = encoder5.size()  # 512, 22, 30
        pool5, indices5 = self.pool5(encoder5)  # 512, 11, 15

        unpool5 = self.unpool5(input=pool5, indices=indices5, output_size=output_size5)  # 512, 22, 30
        decoder5 = self.decoder5(unpool5)  # 512, 22, 30

        unpool4 = self.unpool4(input=decoder5, indices=indices4, output_size=output_size4)  # 512, 44, 60
        decoder4 = self.decoder4(unpool4)  # 256, 44, 60

        unpool3 = self.unpool3(input=decoder4, indices=indices3, output_size=output_size3)  # 256, 88, 120
        decoder3 = self.decoder3(unpool3)  # 128, 88, 120

        unpool2 = self.unpool2(input=decoder3, indices=indices2, output_size=output_size2)  # 128, 176, 240
        decoder2 = self.decoder2(unpool2)  # 64, 176, 240

        unpool1 = self.unpool1(input=decoder2, indices=indices1, output_size=output_size1)  # 64, 352, 480
        decoder1 = self.decoder1(unpool1)  # 12, 352, 480

        return decoder1
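
The shape comments in `forward` follow from five 2 x 2 max-pooling steps, each of which halves height and width with floor division. The helper below, `pooled_sizes`, is a hypothetical name used only for this check.

```python
def pooled_sizes(height, width, stages=5):
    """Spatial size before pooling and after each 2x2, stride-2 max pooling."""
    sizes = [(height, width)]
    for _ in range(stages):
        height, width = height // 2, width // 2  # MaxPool2d floors by default
        sizes.append((height, width))
    return sizes


sizes = pooled_sizes(352, 480)
print(sizes)
# [(352, 480), (176, 240), (88, 120), (44, 60), (22, 30), (11, 15)]

# With an odd size the halving is lossy: 45 -> 22, and 22 * 2 = 44, not 45
odd = pooled_sizes(45, 45, stages=1)
print(odd)  # [(45, 45), (22, 22)]
```

The odd-size case is why `forward` passes `output_size` to every `MaxUnpool2d` call: without it the unpooling layer would produce 44 instead of 45 and the stored indices would no longer match.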

DeconvNet.py

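Apart from the fully connected layers added to the structure and the deconvolution-based decoder, the code follows SegNet.py.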

Imports

import torch
import torchvision.models as models
from torch import nn

Definition of the deconvolution part of the decoder

One difference from SegNet is that the decoder uses deconvolution (transposed convolution).

def decoder(input_channel, output_channel, num=3):
    """Stack of `num` 3x3 transposed convolutions; only the last one changes the channel count."""
    if num == 3:
        decoder_body = nn.Sequential(
            nn.ConvTranspose2d(input_channel, input_channel, 3, padding=1),
            nn.ConvTranspose2d(input_channel, input_channel, 3, padding=1),
            nn.ConvTranspose2d(input_channel, output_channel, 3, padding=1))
    elif num == 2:
        decoder_body = nn.Sequential(
            nn.ConvTranspose2d(input_channel, input_channel, 3, padding=1),
            nn.ConvTranspose2d(input_channel, output_channel, 3, padding=1))
    else:
        # Only 2-layer and 3-layer blocks are defined
        raise ValueError("num must be 2 or 3")
    return decoder_body
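
With kernel size 3, stride 1, and padding 1, a transposed convolution keeps the spatial size unchanged, so in this implementation all upsampling is done by the unpooling layers. The sketch below checks this against the output-size formula from the PyTorch documentation; `conv_transpose_out` is a hypothetical helper name and the channel counts are arbitrary.

```python
import torch
from torch import nn


def conv_transpose_out(size, kernel, stride=1, padding=0, output_padding=0, dilation=1):
    """Output length of ConvTranspose2d along one spatial dimension."""
    return (size - 1) * stride - 2 * padding + dilation * (kernel - 1) + output_padding + 1


# Same settings as the decoder above: kernel 3, stride 1, padding 1
same = conv_transpose_out(22, kernel=3, padding=1)      # 22
# For comparison, kernel 2 with stride 2 would double the size
doubled = conv_transpose_out(22, kernel=2, stride=2)    # 44

layer = nn.ConvTranspose2d(8, 4, 3, padding=1)
y = layer(torch.randn(1, 8, 22, 30))
print(y.shape)  # torch.Size([1, 4, 22, 30])
```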

Building the network

Compared with SegNet, this network adds a fully connected part. After the fully connected layers, the data is reshaped back to the feature-map size at the end of the encoder (512 x 11 x 15).

# Note: newer torchvision releases deprecate `pretrained=True` in favor of the `weights` argument.
vgg16_pretrained = models.vgg16(pretrained=True)


class VGG16_deconv(torch.nn.Module):
    def __init__(self):
        super(VGG16_deconv, self).__init__()
        pool_list = [4, 9, 16, 23, 30]  # positions of the max-pooling layers in vgg16.features
        for index in pool_list:
            vgg16_pretrained.features[index].return_indices = True  # make the pooling layer return indices

        self.encoder1 = vgg16_pretrained.features[:4]
        self.pool1 = vgg16_pretrained.features[4]
        self.encoder2 = vgg16_pretrained.features[5:9]
        self.pool2 = vgg16_pretrained.features[9]
        self.encoder3 = vgg16_pretrained.features[10:16]
        self.pool3 = vgg16_pretrained.features[16]
        self.encoder4 = vgg16_pretrained.features[17:23]
        self.pool4 = vgg16_pretrained.features[23]
        self.encoder5 = vgg16_pretrained.features[24:30]
        self.pool5 = vgg16_pretrained.features[30]

        # 512 * 11 * 15 is the encoder output size for a 352 x 480 input
        self.classifier = nn.Sequential(
            torch.nn.Linear(512 * 11 * 15, 4096),
            torch.nn.ReLU(),
            torch.nn.Linear(4096, 512 * 11 * 15),
            torch.nn.ReLU(),
        )

        self.decoder5 = decoder(512, 512)
        self.unpool5 = nn.MaxUnpool2d(2, 2)
        self.decoder4 = decoder(512, 256)
        self.unpool4 = nn.MaxUnpool2d(2, 2)
        self.decoder3 = decoder(256, 128)
        self.unpool3 = nn.MaxUnpool2d(2, 2)
        self.decoder2 = decoder(128, 64, 2)
        self.unpool2 = nn.MaxUnpool2d(2, 2)
        self.decoder1 = decoder(64, 12, 2)  # 12 output classes
        self.unpool1 = nn.MaxUnpool2d(2, 2)

    def forward(self, x):  # 3, 352, 480
        encoder1 = self.encoder1(x)  # 64, 352, 480
        output_size1 = encoder1.size()  # 64, 352, 480
        pool1, indices1 = self.pool1(encoder1)  # 64, 176, 240

        encoder2 = self.encoder2(pool1)  # 128, 176, 240
        output_size2 = encoder2.size()  # 128, 176, 240
        pool2, indices2 = self.pool2(encoder2)  # 128, 88, 120

        encoder3 = self.encoder3(pool2)  # 256, 88, 120
        output_size3 = encoder3.size()  # 256, 88, 120
        pool3, indices3 = self.pool3(encoder3)  # 256, 44, 60

        encoder4 = self.encoder4(pool3)  # 512, 44, 60
        output_size4 = encoder4.size()  # 512, 44, 60
        pool4, indices4 = self.pool4(encoder4)  # 512, 22, 30

        encoder5 = self.encoder5(pool4)  # 512, 22, 30
        output_size5 = encoder5.size()  # 512, 22, 30
        pool5, indices5 = self.pool5(encoder5)  # 512, 11, 15

        # Flatten to (batch_size, -1) before the fully connected layers
        batch_size = pool5.size(0)
        pool5 = pool5.view(batch_size, -1)
        fc = self.classifier(pool5)  # still flattened: (batch_size, 512 * 11 * 15)
        fc = fc.reshape(batch_size, 512, 11, 15)  # restore the encoder output shape for any batch size

        unpool5 = self.unpool5(input=fc, indices=indices5, output_size=output_size5)  # 512, 22, 30
        decoder5 = self.decoder5(unpool5)  # 512, 22, 30

        unpool4 = self.unpool4(input=decoder5, indices=indices4, output_size=output_size4)  # 512, 44, 60
        decoder4 = self.decoder4(unpool4)  # 256, 44, 60

        unpool3 = self.unpool3(input=decoder4, indices=indices3, output_size=output_size3)  # 256, 88, 120
        decoder3 = self.decoder3(unpool3)  # 128, 88, 120

        unpool2 = self.unpool2(input=decoder3, indices=indices2, output_size=output_size2)  # 128, 176, 240
        decoder2 = self.decoder2(unpool2)  # 64, 176, 240

        unpool1 = self.unpool1(input=decoder2, indices=indices1, output_size=output_size1)  # 64, 352, 480
        decoder1 = self.decoder1(unpool1)  # 12, 352, 480

        return decoder1
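
The flatten, fully connected, and reshape steps in `forward` can be checked in isolation. The sketch below uses small stand-in dimensions (4 x 3 x 5 instead of 512 x 11 x 15, and a hidden width of 16 instead of 4096), chosen here only so the example runs quickly.

```python
import torch
from torch import nn

channels, height, width = 4, 3, 5   # stand-ins for 512, 11, 15
flat = channels * height * width

classifier = nn.Sequential(
    nn.Linear(flat, 16), nn.ReLU(),
    nn.Linear(16, flat), nn.ReLU(),
)

pool5 = torch.randn(2, channels, height, width)   # batch of 2
fc = classifier(pool5.flatten(1))                 # (2, flat)
fc = fc.view(pool5.size(0), channels, height, width)
print(fc.shape)  # torch.Size([2, 4, 3, 5])

# Size of the first Linear layer at the real dimensions (weights + biases)
full_params = 512 * 11 * 15 * 4096 + 4096
print(full_params)  # 346034176
```

At the real dimensions each of the two `Linear` layers holds roughly 346 million parameters, so the fully connected part dominates the model size and ties the network to a fixed 352 x 480 input.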