Common Loss Function Implementations in PyTorch

1. The CrossEntropyLoss() function

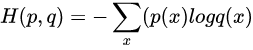

1.1. Basic formula
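For reference, the standard definition of cross entropy between a true distribution $p$ and a predicted distribution $q$ over $n$ classes is usually written as follows (the symbols $p$, $q$ and $n$ are notation assumed here):

$$
H(p, q) = -\sum_{i=1}^{n} p_i \log q_i
$$

When $p$ is a one-hot label, only the term of the true class remains, so the loss for one sample is $-\log q_{\text{class}}$.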

1.2. PyTorch's built-in computation

The cross entropy as computed in PyTorch:
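As a sketch for a single sample, with $x$ the vector of raw class scores and $c$ the target class index (both symbol names are assumed here), the loss documented for `nn.CrossEntropyLoss` can be written as:

$$
\text{loss}(x, c) = -\log\frac{\exp(x_c)}{\sum_{j}\exp(x_j)} = -x_c + \log\sum_{j}\exp(x_j)
$$

With the default `reduction='mean'` and no class weights, these per-sample values are averaged over the batch.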

The built-in CrossEntropyLoss() function combines three steps, softmax, log, and NLLLoss, into a single result:

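- The values after Softmax all lie between 0 and 1, so after taking ln their range is from negative infinity to 0.

- Taking the log of the Softmax output turns products into sums, which reduces computation, and it preserves the monotonicity of the function.

- NLLLoss takes, for each sample, the value of the output above that corresponds to the label, negates it so that it becomes positive, and then averages over the batch.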
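The three steps above can be checked numerically. The following is a minimal sketch on made-up data (the tensor values and variable names are illustrative only); it compares the step-by-step result with the built-in loss.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)
logits = torch.randn(4, 3)            # batch of 4 samples, 3 classes (made-up data)
target = torch.tensor([0, 2, 1, 2])   # class index for each sample

# Steps 1 and 2: softmax followed by log (log_softmax fuses both, in a stable way)
log_probs = F.log_softmax(logits, dim=1)

# Step 3: NLLLoss picks the log-probability of the target class, negates it, averages
manual = F.nll_loss(log_probs, target)

# The same third step written out by hand with indexing
by_hand = -log_probs[torch.arange(4), target].mean()

builtin = nn.CrossEntropyLoss()(logits, target)
print(manual.item(), by_hand.item(), builtin.item())
```

All three printed values should agree up to floating-point error, and every entry of `log_probs` is at most 0, as the first bullet states.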

    nn.CrossEntropyLoss(weight: Optional[Tensor] = None, size_average=None,
                        ignore_index: int = -100, reduce=None, reduction: str = 'mean')

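- weight (tensor): a 1-D tensor with n elements, one weight per class. This is very useful when the training samples are heavily imbalanced. Defaults to None.

- input: the score of each class, a 2-D tensor of shape batch × n.

- target: a 1-D tensor of size batch that holds the class index of each sample (from 0 to n-1).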
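The parameters and shapes above can be sketched as follows. The weight values and the data are arbitrary; the check at the end relies on the documented behavior that, with `reduction='mean'` and class weights, the weighted per-sample losses are divided by the sum of the weights of the non-ignored targets.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
batch, n = 5, 3
inputs = torch.randn(batch, n)                # shape [batch, n]: one score per class
target = torch.tensor([0, 1, 2, 1, -100])     # shape [batch]; -100 is the default ignore_index

class_weight = torch.tensor([1.0, 2.0, 0.5])  # one weight per class (arbitrary values)
criterion = nn.CrossEntropyLoss(weight=class_weight, ignore_index=-100, reduction='mean')
loss = criterion(inputs, target)

# Recompute the weighted mean by hand on the four samples that are not ignored
per_sample = nn.CrossEntropyLoss(weight=class_weight, reduction='none')(inputs[:4], target[:4])
expected = per_sample.sum() / class_weight[target[:4]].sum()
print(loss.item(), expected.item())
```

The last sample contributes nothing to the loss because its target equals `ignore_index`.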

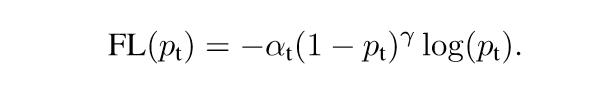

2. Focal Loss

2.1. Basic formula
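For reference, focal loss is commonly written as follows, where $p_t$ is the predicted probability of the true class, $\alpha_t$ is the weight of that class, and $\gamma \ge 0$ is the focusing parameter (this notation is assumed here):

$$
FL(p_t) = -\alpha_t \, (1 - p_t)^{\gamma} \, \log(p_t)
$$

With $\gamma = 0$ and $\alpha_t = 1$ the expression reduces to the ordinary cross entropy $-\log(p_t)$; larger $\gamma$ shrinks the loss of samples whose $p_t$ is already close to 1.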

2.2. PyTorch implementation

    import torch
    import torch.nn as nn
    import torch.nn.functional as F


    class FocalLoss(nn.Module):
        r"""
        Args:
            class_num (int): number of classes C
            alpha (1D Tensor): per-class weighting factors for this criterion;
                defaults to all ones
            gamma (float, double): gamma > 0; reduces the relative loss for
                well-classified examples (p > .5), putting more focus on hard,
                misclassified examples
            size_average (bool): By default, the losses are averaged over observations
                for each minibatch. However, if the field size_average is set to False,
                the losses are instead summed for each minibatch.
        """

        def __init__(self, class_num, alpha=None, gamma=2, size_average=True):
            super(FocalLoss, self).__init__()
            if alpha is None:
                alpha = torch.ones(class_num, 1)
            # Variable is deprecated since PyTorch 0.4; a plain tensor is enough.
            # Stored as a column so that indexing it yields shape [N, 1].
            self.alpha = torch.as_tensor(alpha, dtype=torch.float).view(-1, 1)
            self.gamma = gamma
            self.class_num = class_num
            self.size_average = size_average

        def forward(self, inputs, targets):
            # inputs: raw scores of shape [N, C]; targets: class indices of shape [N]
            P = F.softmax(inputs, dim=1)

            # One-hot mask that selects the probability of the target class
            ids = targets.view(-1, 1)
            class_mask = torch.zeros_like(P).scatter_(1, ids, 1.)

            # Keep alpha on the same device as the inputs (CPU or GPU)
            self.alpha = self.alpha.to(inputs.device)
            alpha = self.alpha[ids.view(-1)]

            probs = (P * class_mask).sum(1).view(-1, 1)
            log_p = probs.log()

            batch_loss = -alpha * torch.pow(1 - probs, self.gamma) * log_p

            if self.size_average:
                loss = batch_loss.mean()
            else:
                loss = batch_loss.sum()
            return loss

Reference: Zhihu column, "Focal Loss 的Pytorch 实现以及实验" (PyTorch implementation and experiments with Focal Loss)
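To see how gamma affects the result, here is a minimal functional sketch. The helper `focal_loss` is a hypothetical, compact variant written for this illustration, not the class above and not a PyTorch API; it uses `log_softmax` and `gather` instead of a one-hot mask.

```python
import torch
import torch.nn.functional as F

def focal_loss(logits, targets, alpha=None, gamma=2.0):
    """Hypothetical helper: mean focal loss for logits [N, C] and targets [N]."""
    log_p = F.log_softmax(logits, dim=1)                       # [N, C]
    log_pt = log_p.gather(1, targets.view(-1, 1)).squeeze(1)   # log-prob of the true class, [N]
    pt = log_pt.exp()
    loss = -(1 - pt) ** gamma * log_pt
    if alpha is not None:                                      # optional per-class weights, shape [C]
        loss = alpha[targets] * loss
    return loss.mean()

torch.manual_seed(0)
logits = torch.randn(8, 4)                  # made-up scores: 8 samples, 4 classes
targets = torch.randint(0, 4, (8,))

ce = F.cross_entropy(logits, targets)
fl0 = focal_loss(logits, targets, gamma=0.0)   # gamma = 0 disables the modulating factor
fl2 = focal_loss(logits, targets, gamma=2.0)   # gamma = 2 down-weights easy examples
print(ce.item(), fl0.item(), fl2.item())
```

With gamma set to 0 the sketch matches cross entropy, and with gamma set to 2 the loss can only be smaller or equal, since each sample is multiplied by a factor in [0, 1].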

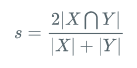

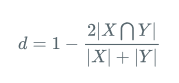

3. Binary Dice Loss

3.1. Basic formula
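For reference, the Dice coefficient of two sets $X$ and $Y$ and the loss derived from it are commonly defined as:

$$
\text{Dice}(X, Y) = \frac{2\,|X \cap Y|}{|X| + |Y|}, \qquad L_{\text{Dice}} = 1 - \text{Dice}(X, Y)
$$

In practice a small smoothing constant is often added to both numerator and denominator so that the ratio stays defined when both $X$ and $Y$ are empty.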

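The binary loss is computed from the Dice coefficient. X is the predicted probability of each pixel, and Y is the ground truth, a 0/1 matrix of the same size. Their element-wise product gives X∩Y, and summing all of its elements gives |X∩Y|.

When a batch contains N images, each image can be flattened into a 1-D vector and its label transformed in the same way, so that the Dice coefficient and the loss are computed for all N images together.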

3.2. PyTorch implementation

    import torch
    import torch.nn as nn
    import torch.nn.functional as F


    def make_one_hot(input, num_classes):
        """Convert class index tensor to one hot encoding tensor.

        Args:
            input: A tensor of shape [N, 1, *]
            num_classes: An int of number of class
        Returns:
            A tensor of shape [N, num_classes, *]
        """
        shape = list(input.shape)
        shape[1] = num_classes
        result = torch.zeros(shape)
        # scatter_ needs an int64 index tensor on the same device as result (CPU here)
        result = result.scatter_(1, input.cpu().long(), 1)
        return result


    class BinaryDiceLoss(nn.Module):
        r"""Dice loss of binary class

        Args:
            smooth: A float number to smooth loss, and avoid NaN error, default: 1
            p: Denominator value: \sum{x^p} + \sum{y^p}, default: 2
            predict: A tensor of shape [N, *]
            target: A tensor of shape same with predict
            reduction: Reduction method to apply, return mean over batch if 'mean',
                return sum if 'sum', return a tensor of shape [N,] if 'none'
        Returns:
            Loss tensor according to arg reduction
        Raise:
            Exception if unexpected reduction
        """

        def __init__(self, smooth=1, p=2, reduction='mean'):
            super(BinaryDiceLoss, self).__init__()
            self.smooth = smooth
            self.p = p
            self.reduction = reduction

        def forward(self, predict, target):
            assert predict.shape[0] == target.shape[0], "predict & target batch size don't match"
            # Flatten every sample to a 1-D vector: [N, *] -> [N, M]
            predict = predict.contiguous().view(predict.shape[0], -1)
            target = target.contiguous().view(target.shape[0], -1)

            # The factor 2 follows the Dice coefficient 2|X∩Y| / (|X| + |Y|);
            # without it a perfect prediction gives a loss near 0.5 instead of 0.
            num = 2 * torch.sum(torch.mul(predict, target), dim=1) + self.smooth
            den = torch.sum(predict.pow(self.p) + target.pow(self.p), dim=1) + self.smooth

            loss = 1 - num / den

            if self.reduction == 'mean':
                return loss.mean()
            elif self.reduction == 'sum':
                return loss.sum()
            elif self.reduction == 'none':
                return loss
            else:
                raise Exception('Unexpected reduction {}'.format(self.reduction))

Reference: GitHub/hubutui/DiceLoss-PyTorch
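The behavior at the two extremes can be checked with a small sketch. The helper `binary_dice_loss` is hypothetical and written only for this illustration; it assumes the plain form of the denominator, |X| + |Y|, rather than the p-th power form used in the class above, and it returns one loss per sample.

```python
import torch

def binary_dice_loss(predict, target, smooth=1.0):
    """Hypothetical helper: per-sample Dice loss for tensors of shape [N, *]."""
    predict = predict.reshape(predict.shape[0], -1)   # flatten each image to a 1-D vector
    target = target.reshape(target.shape[0], -1)
    intersection = (predict * target).sum(dim=1)      # |X ∩ Y|
    dice = (2 * intersection + smooth) / (predict.sum(dim=1) + target.sum(dim=1) + smooth)
    return 1 - dice                                   # shape [N]

target = torch.zeros(2, 1, 4, 4)
target[:, :, :2, :] = 1.0                 # top half of each 4x4 mask is foreground

perfect = target.clone()                  # prediction identical to the label
inverted = 1.0 - target                   # prediction with no overlap at all

loss_perfect = binary_dice_loss(perfect, target)
loss_inverted = binary_dice_loss(inverted, target)
print(loss_perfect, loss_inverted)
```

A prediction identical to the label gives a loss of 0 for each image, while a prediction with no overlap gives 16/17 here; the smoothing constant is what keeps the second value slightly below 1.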

4. Multi-class Dice Loss

4.1. Basic idea

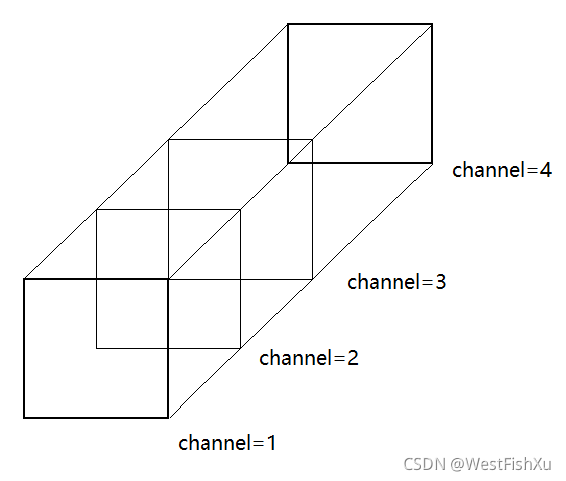

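When there are multiple classes, the label is converted by one-hot encoding into several binary classification problems, as illustrated in the figure.

Each channel slice can be treated as a binary classification problem, so the multi-class Dice loss is obtained by computing the binary Dice loss of every class and then averaging the results.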
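The idea can be sketched on a tiny made-up label map. `F.one_hot` is the standard PyTorch call; the helper `channel_dice_loss` and all values are hypothetical and only serve the illustration.

```python
import torch
import torch.nn.functional as F

num_classes = 3
# Made-up label map for one 2x3 image: each entry is a class index
label = torch.tensor([[[0, 1, 2],
                       [2, 1, 0]]])                     # shape [N=1, H=2, W=3]

# F.one_hot appends the class axis last; move it to dim 1 to get [N, C, H, W]
one_hot = F.one_hot(label, num_classes).permute(0, 3, 1, 2).float()

# A prediction that matches the label exactly (probabilities of 0 or 1)
predict = one_hot.clone()

def channel_dice_loss(p, t, smooth=1.0):
    # Binary Dice loss of a single channel, averaged over the batch
    p, t = p.reshape(p.shape[0], -1), t.reshape(t.shape[0], -1)
    dice = (2 * (p * t).sum(1) + smooth) / (p.sum(1) + t.sum(1) + smooth)
    return (1 - dice).mean()

# One binary problem per channel, then the mean over classes
per_class = torch.stack([channel_dice_loss(predict[:, c], one_hot[:, c])
                         for c in range(num_classes)])
multi_class_loss = per_class.mean()
print(one_hot.shape, per_class, multi_class_loss)
```

Each pixel belongs to exactly one channel of `one_hot`, and because the prediction equals the label, every per-class loss and their mean are 0.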

4.2. PyTorch implementation

    class DiceLoss(nn.Module):
        """Dice loss, need one hot encode input

        Args:
            weight: An array of shape [num_classes,]
            ignore_index: class index to ignore
            predict: A tensor of shape [N, C, *]
            target: A tensor of same shape with predict
            other args pass to BinaryDiceLoss
        Return:
            same as BinaryDiceLoss
        """

        def __init__(self, weight=None, ignore_index=None, **kwargs):
            super(DiceLoss, self).__init__()
            self.kwargs = kwargs
            self.weight = weight
            self.ignore_index = ignore_index

        def forward(self, predict, target):
            assert predict.shape == target.shape, 'predict & target shape do not match'
            dice = BinaryDiceLoss(**self.kwargs)
            total_loss = 0
            # Turn raw scores into per-class probabilities along the channel axis
            predict = F.softmax(predict, dim=1)

            # Treat every channel as its own binary problem
            for i in range(target.shape[1]):
                if i != self.ignore_index:
                    dice_loss = dice(predict[:, i], target[:, i])
                    if self.weight is not None:
                        assert self.weight.shape[0] == target.shape[1], \
                            'Expect weight shape [{}], get[{}]'.format(target.shape[1], self.weight.shape[0])
                        # Scale the loss of class i by its weight
                        dice_loss = dice_loss * self.weight[i]
                    total_loss += dice_loss

            # Average over all channels
            return total_loss / target.shape[1]