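Preface: During a summer internship I did some work on object detection and studied the field roughly in the order of its history. Still, reading papers felt like armchair strategy, and training models only meant calling library functions to fit data that someone else had prepared; nothing in that process was my own. I had just read the Faster RCNN paper, Faster RCNN is a classic algorithm built on anchors, an RPN, and end-to-end training, I wanted to learn tensorflow, and I happened to have data from a real project on hand. So over about a month, using scattered spare time and limited resources, I got a Faster RCNN network running and obtained satisfactory results.

This is not a reproduction entirely from scratch. While building the network I followed an article by another author, dug deeper into its implementation details, and recorded the problems that came up during training together with their fixes.

1. utils.py: utility functions

Import the required packages. The wandhG array stores the width and height of the 9 anchor prior boxes, which were generated by clustering the gt boxes of the training set. The input image size is 512*512 and can be adjusted; choose a smaller size if you want faster computation.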

import numpy as np
import cv2
from xml.dom.minidom import parse
import tensorflow as tf

# box width and height
wandhG = np.array([[ 45.5 ,  48.47058824],
                   [ 48.5 , 105.17647059],
                   [ 91.5 ,  76.23529412],
                   [ 60.  , 103.52941177],
                   [112.25,  48.        ],
                   [ 75.  ,  96.        ],
                   [ 24.  ,  26.82352941],
                   [107.  ,  61.17647059],
                   [ 87.  ,  26.35294118]], dtype=np.float32)

image_height = 512
image_width = 512
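The clustering step that produced wandhG is not shown in this post. As a rough illustration only, one common way to get such anchors is k-means over the (width, height) pairs of the gt boxes, using 1 - IoU as the distance. The names `wh_iou` and `kmeans_anchors`, the random seed, and the toy data below are all assumptions made for this sketch, not the script used for this project.

```python
import numpy as np

def wh_iou(boxes_wh, anchors_wh):
    """IoU between (w, h) pairs, treating all boxes as sharing the same top-left corner."""
    inter_w = np.minimum(boxes_wh[:, None, 0], anchors_wh[None, :, 0])
    inter_h = np.minimum(boxes_wh[:, None, 1], anchors_wh[None, :, 1])
    inter = inter_w * inter_h
    area_b = (boxes_wh[:, 0] * boxes_wh[:, 1])[:, None]
    area_a = (anchors_wh[:, 0] * anchors_wh[:, 1])[None, :]
    return inter / (area_b + area_a - inter)

def kmeans_anchors(boxes_wh, k=9, iters=100, seed=0):
    """Hypothetical helper: cluster gt (w, h) pairs into k anchor sizes."""
    rng = np.random.default_rng(seed)
    # start from k distinct gt boxes
    anchors = boxes_wh[rng.choice(len(boxes_wh), size=k, replace=False)].copy()
    for _ in range(iters):
        # assign each box to the anchor with the highest IoU (smallest 1 - IoU)
        assign = np.argmax(wh_iou(boxes_wh, anchors), axis=1)
        new_anchors = anchors.copy()
        for c in range(k):
            members = boxes_wh[assign == c]
            if len(members) > 0:
                new_anchors[c] = members.mean(axis=0)
        if np.allclose(new_anchors, anchors):
            break
        anchors = new_anchors
    return anchors

# toy data standing in for the real gt box sizes
toy_rng = np.random.default_rng(1)
toy_wh = toy_rng.uniform(20.0, 120.0, size=(200, 2))
anchors = kmeans_anchors(toy_wh, k=9)
print(anchors.shape)
```

With real data, `toy_wh` would be replaced by the widths and heights of all gt boxes after scaling them to the 512*512 input size.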

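load_gt_boxes parses the annotation file of an image. It can handle xml files produced by labelimg (that branch is commented out below) as well as txt files in YOLO format, and it returns the top-left and bottom-right corner coordinates of every gt box in the image.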
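To make the txt branch concrete: a YOLO label line has the form `class x_center y_center width height`, with all four numbers normalized to [0, 1]. The small sketch below converts a single line to pixel corners. The helper name `yolo_line_to_corners` and the sample line are made up for illustration.

```python
def yolo_line_to_corners(line, image_width=512, image_height=512):
    """Convert one 'class xc yc w h' line (normalized values) to pixel corner coordinates."""
    fields = line.split()
    xc = float(fields[1]) * image_width
    yc = float(fields[2]) * image_height
    w = float(fields[3]) * image_width
    h = float(fields[4]) * image_height
    return [xc - w / 2, yc - h / 2, xc + w / 2, yc + h / 2]

box = yolo_line_to_corners("0 0.5 0.5 0.25 0.125")
print(box)  # [192.0, 224.0, 320.0, 288.0]
```

load_gt_boxes reaches the same result by a slightly different route: it doubles the center first and then halves the sum and the difference.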

def load_gt_boxes(path):
    '''
    load the ground truth bounding box info: xmin, ymin, xmax, ymax
    '''
    ## parse xml file
    # dom_tree = parse(path)
    # root element
    # root_node = dom_tree.documentElement
    # print('root node', root_node.nodeName)
    # # extract image size
    # size = root_node.getElementsByTagName('size')
    # # size info
    # width = size[0].getElementsByTagName('width')[0].childNodes[0].data
    # height = size[0].getElementsByTagName('height')[0].childNodes[0].data
    # depth = size[0].getElementsByTagName('depth')[0].childNodes[0].data
    # print([int(width), int(height), int(depth)])
    # extract BB objects
    # objects = root_node.getElementsByTagName('object')
    # boxes = []
    # for obj in objects:
    #     # name = obj.getElementsByTagName('name')[0].childNodes[0].data
    #     bndbox = obj.getElementsByTagName('bndbox')[0]
    #     xmin = int(bndbox.getElementsByTagName('xmin')[0].childNodes[0].data)
    #     ymin = int(bndbox.getElementsByTagName('ymin')[0].childNodes[0].data)
    #     xmax = int(bndbox.getElementsByTagName('xmax')[0].childNodes[0].data)
    #     ymax = int(bndbox.getElementsByTagName('ymax')[0].childNodes[0].data)
    #     # w = np.abs(xmax - xmin)
    #     # h = np.abs(ymax - ymin)
    #     boxes.append([xmin, ymin, xmax, ymax])
    # boxes = np.array(boxes)
    # return boxes

    ## parse txt files (YOLO format: class x_center y_center w h, all normalized)
    boxes = []
    with open(path, 'r') as f:
        lines = f.readlines()
    for line in lines:
        data = line.split()
        if len(data) < 5:
            # skip blank or malformed lines
            continue
        # x_center and y_center are doubled here and halved again below
        x_center = np.float64(data[1])*2*image_width
        y_center = np.float64(data[2])*2*image_height
        w = np.float64(data[3])*image_width
        h = np.float64(data[4])*image_height
        xmin = (x_center - w)/2
        xmax = (x_center + w)/2
        ymin = (y_center - h)/2
        ymax = (y_center + h)/2
        boxes.append([xmin, ymin, xmax, ymax])
    return boxes

plot_boxes_on_image draws the boxes on the image and returns the image in RGB format. (It is handy for checking that the coordinates were parsed correctly.)

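def plot_boxes_on_image(image_with_boxes, boxes, thickness=2, color=[255, 0, 0]):
    '''plot boxes on image'''
    boxes = np.array(boxes).astype(np.int32)
    for box in boxes:
        # cast to plain Python ints, which every OpenCV version accepts as point coordinates
        cv2.rectangle(image_with_boxes, pt1=(int(box[0]), int(box[1])), pt2=(int(box[2]), int(box[3])),
                      color=color, thickness=thickness)
    image_with_boxes = cv2.cvtColor(image_with_boxes, cv2.COLOR_BGR2RGB)
    return image_with_boxes

compute_iou computes the intersection over union of two boxes. IoU measures how much a predicted box and a gt box overlap; the closer it is to 1, the closer the predicted box is to the gt box.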

def compute_iou(box1, box2):
    """
    compute the IOU(Intersection Over Union)
    :param box1:
    :param box2:
    :return: iou
    """
    w_1 = box1[2] - box1[0]
    h_1 = box1[3] - box1[1]
    w_2 = box2[2] - box2[0]
    h_2 = box2[3] - box2[1]
    x = [box1[0], box1[2], box2[0], box2[2]]
    y = [box1[1], box1[3], box2[1], box2[3]]
    delta_x = np.max(x) - np.min(x)
    delta_y = np.max(y) - np.min(y)
    w_in = w_1 + w_2 - delta_x
    h_in = h_1 + h_2 - delta_y
    if w_in <= 0 or h_in <= 0:
        iou = 0
    else:
        area_in = w_in*h_in
        area_un = w_1*h_1 + w_2*h_2 - area_in
        iou = area_in/area_un
    return iou
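compute_iou handles one pair of boxes at a time. When one box has to be compared against many (as in NMS), a vectorized variant can be convenient. The following is only a sketch of that idea; `iou_one_to_many` is a made-up name, not part of utils.py.

```python
import numpy as np

def iou_one_to_many(box, boxes):
    """IoU of a single [xmin, ymin, xmax, ymax] box against an array of N boxes."""
    boxes = np.asarray(boxes, dtype=np.float64).reshape(-1, 4)
    # corners of the intersection rectangles
    ix1 = np.maximum(box[0], boxes[:, 0])
    iy1 = np.maximum(box[1], boxes[:, 1])
    ix2 = np.minimum(box[2], boxes[:, 2])
    iy2 = np.minimum(box[3], boxes[:, 3])
    # clip at zero so disjoint boxes get an empty intersection
    inter = np.clip(ix2 - ix1, 0, None) * np.clip(iy2 - iy1, 0, None)
    area = (box[2] - box[0]) * (box[3] - box[1])
    areas = (boxes[:, 2] - boxes[:, 0]) * (boxes[:, 3] - boxes[:, 1])
    return inter / (area + areas - inter)

ious = iou_one_to_many([0, 0, 10, 10],
                       [[0, 0, 10, 10], [5, 5, 15, 15], [20, 20, 30, 30]])
print(ious)
```

The three results correspond to an identical box (IoU 1), a box overlapping a 5*5 corner (25/175), and a disjoint box (0).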

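regression_box_shift computes the transform that maps a proposal box onto its ground-truth box. It is applied to proposals marked as positive, i.e. those whose IoU with a gt box exceeds the positive threshold. tx and ty are the translations of the top-left corner, normalized by the proposal's width and height; tw and th are the log scale factors for width and height. Pay close attention to the order of the transform (proposal first, ground truth second). Otherwise, during training and testing, the candidate boxes drift further and further from the target boxes, the scores keep dropping, and the loss blows up.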
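A quick way to convince yourself that the order matters is a round trip: encode a gt box relative to an anchor, decode it again, and compare. The sketch below mirrors the formulas used in this post (top-left offsets plus log scale factors); the names `encode` and `decode` and the sample boxes are chosen for illustration.

```python
import numpy as np

def encode(p, g):
    """Shift t that maps proposal p onto ground truth g (same formulas as regression_box_shift)."""
    w_p, h_p = p[2] - p[0], p[3] - p[1]
    w_g, h_g = g[2] - g[0], g[3] - g[1]
    return np.array([(g[0] - p[0]) / w_p, (g[1] - p[1]) / h_p,
                     np.log(w_g / w_p), np.log(h_g / h_p)])

def decode(p, t):
    """Inverse of encode: apply shift t to proposal p."""
    w_p, h_p = p[2] - p[0], p[3] - p[1]
    xmin = t[0] * w_p + p[0]
    ymin = t[1] * h_p + p[1]
    xmax = np.exp(t[2]) * w_p + xmin
    ymax = np.exp(t[3]) * h_p + ymin
    return np.array([xmin, ymin, xmax, ymax])

anchor = np.array([100.0, 100.0, 160.0, 140.0])
gt = np.array([110.0, 90.0, 200.0, 150.0])

recovered = decode(anchor, encode(anchor, gt))  # correct argument order
swapped = decode(anchor, encode(gt, anchor))    # arguments passed the wrong way round

print(recovered)  # matches gt up to floating-point error
print(swapped)    # a different box that moves away from gt
```

With the arguments swapped, the decoded box no longer lands on the gt box, which is the kind of drift described above.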

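def regression_box_shift(p, g):
    """
    compute t to transform p to g
    :param p: proposal box
    :param g: ground truth
    :return: t
    """
    w_p = p[2] - p[0]
    h_p = p[3] - p[1]
    w_g = g[2] - g[0]
    h_g = g[3] - g[1]
    tx = (g[0] - p[0])/w_p
    ty = (g[1] - p[1])/h_p
    tw = np.log(w_g/w_p)
    th = np.log(h_g/h_p)
    t = [tx, ty, tw, th]
    return t

output_decode decodes the predicted boxes and scores. In this network the image passes through 4 Maxpool layers in the backbone, so the final feature map is one sixteenth of the input size, i.e. 512/16=32. Each pixel of the feature map corresponds to a 16*16 grid cell on the input image. The function first computes the center of every grid cell on the input image and the coordinates of the 9 anchor boxes centered there. It then applies the predicted transforms to the anchor priors to obtain a predicted box for every anchor, and finally filters by score threshold to get the final predicted boxes and their scores.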
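The anchor layout described here can also be built in plain NumPy, which makes the shapes easier to inspect. This is only a sketch: `make_anchor_grid` is a hypothetical helper, and only two of the nine anchor sizes (rounded) are used to keep the output small.

```python
import numpy as np

def make_anchor_grid(anchor_wh, image_size=512, stride=16):
    """Return anchors as [n, n, k, 4] corner boxes, with n = image_size // stride."""
    n = image_size // stride
    # center of every stride*stride grid cell along one axis: 8, 24, 40, ...
    centers = np.arange(n, dtype=np.float32) * stride + stride / 2
    cx, cy = np.meshgrid(centers, centers)  # cx[i, j] is the center x of column j
    center_xy = np.stack([cx, cy], axis=-1)[:, :, None, :]  # [n, n, 1, 2]
    half = 0.5 * anchor_wh[None, None, :, :]                # [1, 1, k, 2]
    return np.concatenate([center_xy - half, center_xy + half], axis=-1)

anchor_wh = np.array([[45.5, 48.47], [24.0, 26.82]], dtype=np.float32)
grid = make_anchor_grid(anchor_wh)
print(grid.shape)     # (32, 32, 2, 4)
print(grid[0, 0, 1])  # anchor 1 in the top-left cell, centered at (8, 8)
```

The first index is the row (y direction) and the second the column (x direction), matching the `i`, `j` loops used when encoding labels.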

def output_decode(pred_bboxes, pred_scores, score_thresh=0.5):
    grid_x, grid_y = tf.range(32, dtype=tf.int32), tf.range(32, dtype=tf.int32)
    grid_x, grid_y = tf.meshgrid(grid_x, grid_y)
    grid_x, grid_y = tf.expand_dims(grid_x, -1), tf.expand_dims(grid_y, -1)
    grid_xy = tf.stack([grid_x, grid_y], axis=-1)
    # center of each 16*16 grid cell on the input image
    center_xy = grid_xy * 16 + 8
    center_xy = tf.cast(center_xy, tf.float32)
    # top-left corner of the 9 anchors in every cell
    anchor_xymin = center_xy - 0.5 * wandhG
    anchor_xymin = tf.expand_dims(anchor_xymin, axis=0)
    # print(anchor_xymin.shape)
    # invert regression_box_shift: offsets first, then log scale factors
    xy_min = pred_bboxes[..., 0:2] * wandhG[:, 0:2] + anchor_xymin
    xy_max = tf.exp(pred_bboxes[..., 2:4]) * wandhG[:, 0:2] + xy_min
    pred_bboxes = tf.concat([xy_min, xy_max], axis=-1)
    # keep the foreground score only
    pred_scores = pred_scores[..., 1]
    score_mask = pred_scores > score_thresh
    pred_bboxes = tf.reshape(tf.boolean_mask(pred_bboxes, score_mask), shape=[-1, 4]).numpy()
    pred_scores = tf.reshape(tf.boolean_mask(pred_scores, score_mask), shape=[-1, ]).numpy()
    return pred_bboxes, pred_scores

nms performs Non-Maximum Suppression. For each target in an image, it keeps the predicted box with the highest score and removes the boxes that overlap with it.

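def nms(pred_boxes, pred_score, iou_threshold):
    """Non-Maximum Suppression; returns the kept boxes and their scores"""
    pred_boxes = np.array(pred_boxes, dtype=np.float32).reshape(-1, 4)
    pred_score = np.array(pred_score, dtype=np.float32).reshape(-1)
    nms_boxes, nms_scores = [], []
    while len(pred_boxes) > 0:
        max_id = np.argmax(pred_score)
        selected_box = pred_boxes[max_id]
        nms_boxes.append(selected_box)
        nms_scores.append(pred_score[max_id])
        # remove the selected box, then every remaining box that overlaps it too much
        pred_boxes = np.delete(pred_boxes, max_id, axis=0)
        pred_score = np.delete(pred_score, max_id)
        ious = np.array([compute_iou(selected_box, box) for box in pred_boxes])
        iou_mask = ious <= iou_threshold
        pred_boxes = pred_boxes[iou_mask]
        pred_score = pred_score[iou_mask]
    return np.array(nms_boxes).reshape(-1, 4), np.array(nms_scores)

2. demo.py: testing the functions above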

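The for loop is the main part of this script. It iterates over every anchor box: it first computes the anchor's coordinates and checks whether it crosses the image boundary, then computes the IoU between the anchor and every gt box in the image. Based on the positive and negative IoU thresholds it decides whether the anchor has detected a target and updates the three arrays target_boxes, target_scores, and target_mask (NumPy) accordingly. target_boxes is updated only when a target is detected, using the gt box with the largest IoU to compute the coordinate offsets. The final picture looks the same as drawing the annotation boxes directly on the image, which shows that the code above works as intended.

There is one pitfall here. If some targets in your data sit at the image edge, with only a small part of the target inside the image, the anchors that would match them tend to cross the boundary. Anchors that extend beyond the image by more than a set margin are filtered out, so such an image may end up with no positive anchors at all, which in turn produces nan during training.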
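The labelling rule applied to each anchor can be isolated into a few lines. The sketch below restates it under the thresholds used in this post; `assign_anchor_label` is a made-up name for illustration.

```python
import numpy as np

def assign_anchor_label(ious, pos_thresh=0.5, neg_thresh=0.1):
    """Return 1 (positive), -1 (negative) or 0 (ignored) for one anchor.

    ious holds the IoU of this anchor with every gt box in the image.
    """
    ious = np.asarray(ious, dtype=np.float64)
    if np.any(ious > pos_thresh):
        return 1    # overlaps at least one gt box strongly
    if np.all(ious < neg_thresh):
        return -1   # overlaps no gt box to any meaningful degree
    return 0        # in between: excluded from the loss

print(assign_anchor_label([0.7, 0.05]))   # 1
print(assign_anchor_label([0.05, 0.0]))   # -1
print(assign_anchor_label([0.3, 0.05]))   # 0
```

Counting how many anchors of an image receive label 1 is a simple check for the pitfall above: an image with zero positives gives the box loss nothing to average over.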

import matplotlib.pyplot as plt
import cv2
from utils import load_gt_boxes, compute_iou, regression_box_shift, nms, output_decode, wandhG, plot_boxes_on_image
import numpy as np

# IoU threshold for marking an anchor as positive (target detected)
pos_thresh = 0.5
# IoU threshold for marking an anchor as negative (no target detected)
neg_thresh = 0.1
iou_thresh = 0.5
image_height = 512
image_width = 512
grid_height = 16
grid_width = 16

# test sample
label_path = '2821.txt'
img_path = '2821.png'
gt_boxes = load_gt_boxes(label_path)
raw_img = cv2.imread(img_path)
img_boxes = np.copy(raw_img)
print(gt_boxes)
# gt boxes are in 512*512 coordinates; the factor 2 rescales them to the raw image used here
img_with_boxes = plot_boxes_on_image(img_boxes, np.array(gt_boxes)*2)
plt.figure()
plt.imshow(img_with_boxes)
plt.show()

# Initialize the target box offsets, the scores, and the mask that records whether a target was detected.
# Shapes: each pixel of the 32*32 feature map maps to a 16*16 grid cell of the input image,
# each grid cell has 9 anchors, and each anchor has 4 coordinates.
# The scores hold a background entry (index 0) and a foreground entry (index 1).
# In the mask, 1 means a target was detected, -1 means no target, and 0 means ignored.
target_boxes = np.zeros(shape=[32, 32, 9, 4])
target_scores = np.zeros(shape=[32, 32, 9, 2])
target_mask = np.zeros(shape=[32, 32, 9])

# *********************************
# split the image into 32*32 grid cells, one per feature-map pixel
encoding_img = np.copy(raw_img)
encoding_img = cv2.resize(encoding_img, dsize=(512, 512), interpolation=cv2.INTER_CUBIC)
for i in range(32):
    for j in range(32):
        for k in range(9):
            center_y = i*grid_height + grid_height*0.5
            center_x = j*grid_width + grid_width*0.5
            # calculate the coordinates
            xmin = center_x - wandhG[k][0]*0.5
            xmax = center_x + wandhG[k][0]*0.5
            ymin = center_y - wandhG[k][1]*0.5
            ymax = center_y + wandhG[k][1]*0.5
            # filter the cross-boundary anchors
            if (xmin > -5) & (ymin > -5) & (xmax < (image_width + 5)) & (ymax < (image_height + 5)):
                anchor_boxes = np.array([xmin, ymin, xmax, ymax])
                # print(anchor_boxes)
                anchor_boxes = np.expand_dims(anchor_boxes, axis=0)
                print(anchor_boxes)
                # compute iou between anchor_box and gt
                ious = []
                for gt_box in gt_boxes:
                    iou = compute_iou(anchor_boxes[0], gt_box)
                    ious.append(iou)
                ious = np.array(ious)
                positive_masks = ious > pos_thresh
                negative_masks = ious < neg_thresh
                # identify positive or negative
                if np.any(positive_masks):
                    plot_boxes_on_image(encoding_img, anchor_boxes, thickness=1)
                    cv2.circle(encoding_img, center=(int(0.5 * (xmin + xmax)), int(0.5 * (ymin + ymax))), radius=1,
                               color=[255, 0, 0], thickness=1)
                    # mark the anchor as having detected an object
                    target_scores[i, j, k, 1] = 1
                    target_mask[i, j, k] = 1
                    # find the gt box that best matches this anchor box
                    max_iou_id = np.argmax(ious)
                    selected_gt_boxes = gt_boxes[max_iou_id]
                    target_boxes[i, j, k] = regression_box_shift(anchor_boxes[0], selected_gt_boxes)
                if np.all(negative_masks):
                    # background entry set to 1, consistent with encode_label in train.py
                    target_scores[i, j, k, 0] = 1
                    target_mask[i, j, k] = -1
                    cv2.circle(encoding_img, center=(int(0.5 * (xmin + xmax)), int(0.5 * (ymin + ymax))), radius=1,
                               color=[0, 0, 0], thickness=1)

cv2.namedWindow('encoded image', cv2.WINDOW_NORMAL)
cv2.imshow('encoded image', encoding_img)
cv2.waitKey(0)
# cv2.imwrite('encoding_img.png', encoding_img)
# print(target_boxes)

faster_decode_img = np.copy(raw_img)
pred_boxes = np.expand_dims(target_boxes, 0).astype(np.float32)
pred_scores = np.expand_dims(target_scores, 0).astype(np.float32)
pred_boxes, pred_scores = output_decode(pred_boxes, pred_scores, 0.9)
nms_pred_boxes = nms(pred_boxes, pred_scores, 0.1)
img_with_predbox = plot_boxes_on_image(faster_decode_img, pred_boxes*2, color=[255, 0, 0], thickness=1)
cv2.namedWindow('pred_img', cv2.WINDOW_NORMAL)
cv2.imshow('pred_img', img_with_predbox)
cv2.waitKey(0)
cv2.imwrite('img_demo.png', img_with_predbox)

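3. rpn.py: building the Faster RCNN network

The network is built by subclassing the keras Model and overriding its call method. In the RPN layers, the article I followed uses a kernel_size of [5, 2]. I have not yet figured out why; perhaps it is meant to bias the RPN towards long, thin predicted boxes? Because of my own dataset I set kernel_size to [3, 3]. The network finally returns the predicted box coordinates and the scores.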
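The output shapes of the network follow from simple arithmetic: four stride-2 poolings reduce 512 to 32, and the two 1*1 heads need 9*4 and 9*2 channels. The sketch below only checks that arithmetic; `same_pool_out` is a hypothetical helper, not part of rpn.py.

```python
def same_pool_out(size, stride=2):
    """Output size of a pooling layer with padding='same': ceil(size / stride)."""
    return -(-size // stride)

size = 512
for _ in range(4):          # four stride-2 pooling steps on the main path
    size = same_pool_out(size)

num_anchors = 9
bbox_channels = num_anchors * 4    # tx, ty, tw, th per anchor
score_channels = num_anchors * 2   # background / foreground logit per anchor

print(size, bbox_channels, score_channels)  # 32 36 18
```

This is why the bbox head uses 36 filters, the score head uses 18, and the outputs are reshaped to [-1, 32, 32, 9, 4] and [-1, 32, 32, 9, 2].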

import tensorflow as tf

from tensorflow.keras.layers import Conv2D, Dropout, BatchNormalization, MaxPool2D, Flatten, Dense, InputLayer

print(tf.__version__)

class RPN(tf.keras.Model):

def __init__(self):

super(RPN, self).__init__()

self.conv1_1 = Conv2D(filters=64, kernel_size=(3, 3), activation='relu', padding='same')

self.conv1_2 = Conv2D(filters=64, kernel_size=(3, 3), activation='relu', padding='same')

self.pool1 = MaxPool2D(pool_size=(2, 2), strides=2, padding='same')

self.conv2_1 = Conv2D(filters=128, kernel_size=(3, 3), activation='relu', padding='same')

self.conv2_2 = Conv2D(filters=128, kernel_size=(3, 3), activation='relu', padding='same')

self.pool2 = MaxPool2D(pool_size=(2, 2), strides=2, padding='same')

self.conv3_1 = Conv2D(filters=256, kernel_size=(3, 3), activation='relu', padding='same')

self.conv3_2 = Conv2D(filters=256, kernel_size=(3, 3), activation='relu', padding='same')

self.conv3_3 = Conv2D(filters=256, kernel_size=(3, 3), activation='relu', padding='same')

self.pool3 = MaxPool2D(pool_size=(2, 2), strides=2, padding='same')

self.dropout3 = Dropout(rate=0.1)

self.conv4_1 = Conv2D(filters=512, kernel_size=(3, 3), activation='relu', padding='same')

self.conv4_2 = Conv2D(filters=512, kernel_size=(3, 3), activation='relu', padding='same')

self.conv4_3 = Conv2D(filters=512, kernel_size=(3, 3), activation='relu', padding='same')

self.pool4 = MaxPool2D(pool_size=(2, 2), strides=2, padding='same')

self.dropout4 = Dropout(rate=0.2)

self.conv5_1 = Conv2D(filters=512, kernel_size=(3, 3), activation='relu', padding='same')

self.conv5_2 = Conv2D(filters=512, kernel_size=(3, 3), activation='relu', padding='same')

self.conv5_3 = Conv2D(filters=512, kernel_size=(3, 3), activation='relu', padding='same')

self.pool5 = MaxPool2D(pool_size=(2, 2), strides=2, padding='same')

self.dropout5 = Dropout(rate=0.25)

# region proposal conv

self.rpn_conv1 = Conv2D(filters=256, kernel_size=[3, 3], activation='relu', padding='same', use_bias=False)

self.rpn_conv2 = Conv2D(filters=512, kernel_size=[3, 3], activation='relu', padding='same', use_bias=False)

# bboox regression layer

self.bbox_conv = Conv2D(filters=36, kernel_size=[1, 1], padding='same', activation=None, use_bias=False)

# box score layer

self.score_conv = Conv2D(filters=18, kernel_size=[1, 1], activation=None, padding='same', use_bias=False)

def call(self, x, training=True, mask=None):

output = self.conv1_1(x)

output = self.conv1_2(output)

output = self.pool1(output)

output = self.conv2_1(output)

output = self.conv2_2(output)

output = self.pool2(output)

output = self.conv3_1(output)

output = self.conv3_2(output)

output = self.conv3_3(output)

output = self.pool3(output)

# output = self.dropout3(output)

pool3_p = self.pool3(output)

pool3_p = self.rpn_conv1(pool3_p)

output = self.conv4_1(output)

output = self.conv4_2(output)

output = self.conv4_3(output)

output = self.pool4(output)

# output = self.dropout4(output)

pool4_p = self.rpn_conv2(output)

output = self.conv5_1(output)

output = self.conv5_2(output)

output = self.conv5_3(output)

# output = self.dropout5(output)

#

pool5_p = self.rpn_conv2(output)

region_proposal = tf.concat([pool3_p, pool4_p, pool5_p], axis=-1)

# region_proposal = tf.concat([pool3_p, pool4_p], axis=-1)

# compute the bbox and scores

conv_cl_boxes = self.bbox_conv(region_proposal)

conv_cl_scores = self.score_conv(region_proposal)

cl_boxes = tf.reshape(conv_cl_boxes, [-1, 32, 32, 9, 4])

cl_scores = tf.reshape(conv_cl_scores, [-1, 32, 32, 9, 2])

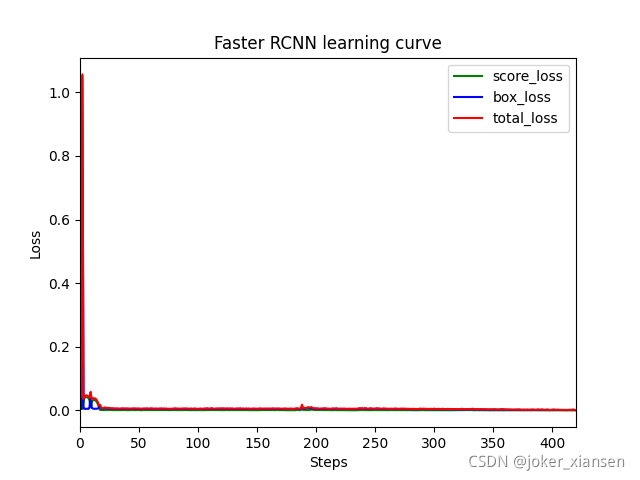

return cl_boxes, cl_scores4、metrices.py绘制训练过程中的loss变化曲线以及混淆矩阵

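The functions in this file visualize each part of the loss. The confusion matrix for the classification results is only a placeholder so far (confusion_matrix is not implemented yet).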

#!/usr/bin/python3
# -*- coding: utf-8 -*-
# Author: Joker_xiansen
# @Time :2021/9/8 15:46
import numpy as np
import matplotlib.pyplot as plt


def confusion_matrix():
    pass


def plot_learning_curve(loss_path):
    with open(loss_path, 'r') as f:
        lines = f.readlines()
    score_loss = []
    box_loss = []
    total_loss = []
    for line in lines:
        # each line: epoch step score_loss boxes_loss total_loss
        split_line = line.split(' ')
        score_loss.append(np.float32(split_line[2]))
        box_loss.append(np.float32(split_line[3]))
        total_loss.append(np.float32(split_line[4]))
    plt.plot(score_loss, '-g')
    plt.plot(box_loss, '-b')
    plt.plot(total_loss, '-r')
    plt.xlim((0, len(score_loss)))
    plt.legend(['score_loss', 'box_loss', 'total_loss'])
    plt.xlabel('Steps')
    plt.ylabel('Loss')
    plt.title('Faster RCNN learning curve')
    plt.savefig('loss.png')
    plt.show()


if __name__ == "__main__":
    plot_learning_curve('loss.txt')

5. train.py

import random
import os
from utils import *
from rpn import RPN
from metrices import plot_learning_curve
from argparse import ArgumentParser
import yaml

# type= is given so that values passed on the command line are not left as strings
parser = ArgumentParser(description='paras for train')
parser.add_argument('--data', default='data.yaml', help='data config yaml')
parser.add_argument('--img_size', type=int, default=512, help='input image size')
parser.add_argument('--grid_size', type=int, default=16, help='image grid size')
# parser.add_argument('--weights', default='RPN1.h5', help='trained model weights')
parser.add_argument('--pos_thresh', type=float, default=0.5, help='threshold to judge positive box')
parser.add_argument('--neg_thresh', type=float, default=0.1, help='threshold to judge negative box')
parser.add_argument('--task', default='train', help='choose from test, train, val')
parser.add_argument('--epochs', type=int, default=100, help='train budget')
parser.add_argument('--samples', type=int, default=1000, help='sample num')
parser.add_argument('--batch_size', type=int, default=16)
parser.add_argument('--lamda_scale', type=float, default=1, help='balance score loss and boxes loss')
parser.add_argument('--lr', type=float, default=1e-3, help='learning rate')
args = parser.parse_args()

# Encode the gt boxes of one image into training targets. For every anchor: compute its
# coordinates, check that it qualifies (lies inside the image, within a small margin),
# compute its IoU with every gt box, label it positive or negative, and update the scores
# and the detection mask. For a positive anchor, the gt box with the largest IoU is used
# to compute the shift stored in target_boxes.
def encode_label(gt_boxes):
    target_boxes = np.zeros(shape=[32, 32, 9, 4])
    target_scores = np.zeros(shape=[32, 32, 9, 2])
    target_mask = np.zeros(shape=[32, 32, 9])
    for i in range(32):
        for j in range(32):
            for k in range(9):
                center_y = i * args.grid_size + args.grid_size * 0.5
                center_x = j * args.grid_size + args.grid_size * 0.5
                # calculate the coordinates
                xmin = center_x - wandhG[k][0] * 0.5
                xmax = center_x + wandhG[k][0] * 0.5
                ymin = center_y - wandhG[k][1] * 0.5
                ymax = center_y + wandhG[k][1] * 0.5
                # filter the cross-boundary anchors
                if (xmin > -5) & (ymin > -5) & (xmax < (image_width + 5)) & (ymax < (image_height + 5)):
                    anchor_boxes = np.array([xmin, ymin, xmax, ymax])
                    # print(anchor_boxes)
                    anchor_boxes = np.expand_dims(anchor_boxes, axis=0)
                    # compute iou between anchor_box and gt
                    ious = []
                    for gt_box in gt_boxes:
                        iou = compute_iou(anchor_boxes[0], gt_box)
                        ious.append(iou)
                    ious = np.array(ious)
                    positive_masks = ious > args.pos_thresh
                    negative_masks = ious < args.neg_thresh
                    # identify positive or negative
                    if np.any(positive_masks):
                        # mark the anchor as having detected an object
                        target_scores[i, j, k, 1] = 1
                        target_mask[i, j, k] = 1
                        # find the ground truth that best matches this anchor box
                        max_iou_id = np.argmax(ious)
                        selected_gt_boxes = gt_boxes[max_iou_id]
                        target_boxes[i, j, k] = regression_box_shift(anchor_boxes[0], selected_gt_boxes)
                    if np.all(negative_masks):
                        target_scores[i, j, k, 0] = 1
                        target_mask[i, j, k] = -1
    return target_boxes, target_scores, target_mask

# Parse the yaml config file and return its content as a dict
def parse_yaml(path):
    with open(path, 'r', encoding='utf-8') as f:
        data = f.read()
    yaml_data = yaml.load(data, Loader=yaml.FullLoader)
    return yaml_data


# Preprocess an image and its label; return the normalized image and the prepared targets
def process_image_label(image_path, label_path):
    raw_image = cv2.imread(image_path)
    gt_boxes = load_gt_boxes(label_path)
    target = encode_label(gt_boxes)
    # image normalization
    raw_image = raw_image/255.0
    return raw_image, target


# Yield (image path, label path) pairs in random order, forever
def image_label_generator(data_path):
    images = os.listdir(os.path.join(data_path, 'images'))
    sample_num = len(images)
    # the label file shares the image's base name
    image_label_paths = [(os.path.join(data_path, 'images', '%s.png' % name.split('.')[0]),
                          os.path.join(data_path, 'labels', '%s.txt' % name.split('.')[0])) for name in images]
    while True:
        random.shuffle(image_label_paths)
        for i in range(sample_num):
            yield image_label_paths[i]

# Build batches: image tensors of shape [batch, height, width, channels] plus the matching targets
def data_generator(data_path, batch_size):
    image_label_path_generator = image_label_generator(data_path)
    while True:
        images = np.zeros(shape=[batch_size, image_height, image_width, 3])
        target_bboxes = np.zeros(shape=[batch_size, 32, 32, 9, 4], dtype=np.float32)
        target_scores = np.zeros(shape=[batch_size, 32, 32, 9, 2], dtype=np.float32)
        target_mask = np.zeros(shape=[batch_size, 32, 32, 9], dtype=np.int32)
        for i in range(batch_size):
            image_path, label_path = next(image_label_path_generator)
            # print(label_path)
            print(image_path)
            image, target = process_image_label(image_path, label_path)
            input_image = cv2.resize(image, dsize=(512, 512), interpolation=cv2.INTER_CUBIC)
            images[i] = input_image
            target_bboxes[i] = target[0]
            target_scores[i] = target[1]
            target_mask[i] = target[2]
        yield images, target_bboxes, target_scores, target_mask

# Loss: cross-entropy for the scores, smooth L1 for the boxes
def compute_loss(target_scores, target_bboxes, target_masks, pred_scores, pred_bboxes):
    """
    target_scores shape: [batch, 32, 32, 9, 2], pred_scores shape: [batch, 32, 32, 9, 2]
    target_bboxes shape: [batch, 32, 32, 9, 4], pred_bboxes shape: [batch, 32, 32, 9, 4]
    target_masks shape: [batch, 32, 32, 9]
    """
    score_loss = tf.nn.softmax_cross_entropy_with_logits(labels=target_scores, logits=pred_scores)
    # anchors labelled positive (1) or negative (-1) contribute to the score loss
    foreground_background_mask = (np.abs(target_masks) == 1).astype(np.float32)
    score_loss = tf.reduce_sum(score_loss * foreground_background_mask, axis=[1, 2, 3]) / np.sum(foreground_background_mask)
    score_loss = tf.reduce_mean(score_loss)

    # smooth L1: quadratic below 1, linear above
    boxes_loss = tf.abs(target_bboxes - pred_bboxes)
    boxes_loss = 0.5 * tf.pow(boxes_loss, 2) * tf.cast(boxes_loss < 1, tf.float32) + (boxes_loss - 0.5) * tf.cast(boxes_loss >= 1, tf.float32)
    boxes_loss = tf.reduce_sum(boxes_loss, axis=-1)
    # only positive anchors contribute to the box loss
    foreground_mask = np.array(target_masks > 0).astype(np.float32)
    boxes_loss = tf.reduce_sum(boxes_loss * foreground_mask, axis=[1, 2, 3]) / np.sum(foreground_mask)
    boxes_loss = tf.reduce_mean(boxes_loss)
    return score_loss, boxes_loss

yaml_data = parse_yaml(args.data)
data_path = os.path.join(yaml_data['path'], yaml_data[args.task])
TrainSet = data_generator(data_path, args.batch_size)

model = RPN()
optimizer = tf.keras.optimizers.Adam(learning_rate=args.lr)
writer = tf.summary.create_file_writer("./log")
global_steps = tf.Variable(0, trainable=False, dtype=tf.int64)

with open('loss.txt', 'w') as fl:
    for epoch in range(args.epochs):
        for step in range(int(args.samples/args.batch_size)):
            global_steps.assign_add(1)
            image_data, target_bboxes, target_scores, target_masks = next(TrainSet)
            # image_data, target_bboxes, target_scores, target_masks = data_generator_test(1)
            # if np.any(np.isnan(target_bboxes)):
            #     print('target_bboxes contain nan')
            # if np.any(np.isnan(target_scores)):
            #     print('target_scores contain nan')
            with tf.GradientTape() as tape:
                pred_bboxes, pred_scores = model(image_data)
                # pred_scores1 = tf.nn.softmax(pred_scores, axis=-1)
                # pred_scores_test = pred_scores1.numpy().reshape((-1, 2))
                # pred_boxes2, pred_scores2 = output_decode(pred_bboxes, pred_scores1, 0.)
                # pred_boxes_test = pred_boxes2.reshape((-1, 4))
                if np.any(np.isnan(pred_scores)):
                    print('pred_scores contain nan')
                if np.any(np.isnan(pred_bboxes)):
                    print('pred_bboxes contain nan')
                score_loss, boxes_loss = compute_loss(target_scores, target_bboxes, target_masks, pred_scores, pred_bboxes)
                print(score_loss)
                # loss clamp: replace a nan loss with a tiny constant
                if np.any(np.isnan(score_loss)):
                    score_loss = tf.constant(1e-8, dtype=tf.float32)
                    print('score_loss contain nan')
                if np.any(np.isnan(boxes_loss)):
                    boxes_loss = tf.constant(1e-8, dtype=tf.float32)
                    print('boxes_loss contain nan')
                total_loss = score_loss + args.lamda_scale * boxes_loss
            gradients = tape.gradient(total_loss, model.trainable_variables)
            optimizer.apply_gradients(zip(gradients, model.trainable_variables))
            print("=> epoch %d step %d total_loss: %.6f score_loss: %.6f boxes_loss: %.6f" % (epoch + 1, step + 1,
                                                                                             total_loss.numpy(),
                                                                                             score_loss.numpy(),
                                                                                             boxes_loss.numpy()))
            fl.write('%d %d %.6f %.6f %.6f' % (epoch, step, score_loss.numpy(), boxes_loss.numpy(), total_loss.numpy()))
            fl.write('\n')
            # writing summary data
            with writer.as_default():
                tf.summary.scalar("total_loss", total_loss, step=global_steps)
                tf.summary.scalar("score_loss", score_loss, step=global_steps)
                tf.summary.scalar("boxes_loss", boxes_loss, step=global_steps)
            writer.flush()
        # save the weights at the end of every epoch
        model.save_weights("RPN.h5")

# loss.txt is closed by the with block above
plot_learning_curve('loss.txt')

The training script manages its parameters with a parser, and the individual functions are explained in the code comments. One point worth noting in the loss computation: the score part uses softmax cross-entropy because the last layer of the network outputs raw logits with no activation applied. The box part uses the smooth L1 loss. Its advantage is that the slope stays constant far from the origin, so it does not cause exploding gradients and training is more stable, while near the origin it is quadratic and therefore differentiable at 0, unlike the plain L1 loss.
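The two regimes of the smooth L1 loss are easy to see numerically. The NumPy sketch below uses the same piecewise definition as compute_loss (threshold 1); the function names are chosen here for illustration.

```python
import numpy as np

def smooth_l1(diff):
    """0.5 * d^2 for |d| < 1, |d| - 0.5 otherwise."""
    a = np.abs(diff)
    return np.where(a < 1.0, 0.5 * a ** 2, a - 0.5)

def smooth_l1_grad(diff):
    """Derivative with respect to diff: d inside (-1, 1), sign(d) outside."""
    return np.where(np.abs(diff) < 1.0, diff, np.sign(diff))

d = np.array([-3.0, -0.5, 0.0, 0.5, 3.0])
losses = smooth_l1(d)       # values: 2.5, 0.125, 0, 0.125, 2.5
grads = smooth_l1_grad(d)   # values: -1, -0.5, 0, 0.5, 1
print(losses)
print(grads)
```

The gradient magnitude never exceeds 1 no matter how large the error is, and it passes smoothly through 0 at the origin.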

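In addition, to keep the loss from turning into nan (what I call dead neurons), the code clamps the loss: a nan loss is replaced with a tiny value (1e-8). My training runs show that this helps. Another remedy is to relax the boundary when checking whether an anchor lies inside the image (by 5 pixels in this code), so that anchors lying partly outside the image still qualify and are not filtered out.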
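One way the nan can arise is a 0/0 in the normalization: when no anchor in a batch is positive, the foreground mask sums to zero. As an alternative to clamping the loss afterwards, the denominator can be guarded. This is a sketch of that alternative, not what the training code above does; `masked_mean` is a hypothetical helper.

```python
import numpy as np

def masked_mean(per_anchor_loss, mask):
    """Average the loss over entries where mask == 1; returns 0.0 if the mask is empty."""
    mask = mask.astype(np.float32)
    denom = max(float(mask.sum()), 1.0)  # never divide by zero
    return float((per_anchor_loss * mask).sum() / denom)

loss = np.ones((32, 32, 9), dtype=np.float32)
empty_mask = np.zeros((32, 32, 9))
one_positive = empty_mask.copy()
one_positive[3, 4, 5] = 1

# the unguarded division reproduces the nan
with np.errstate(invalid='ignore', divide='ignore'):
    naive = (loss * empty_mask).sum() / empty_mask.sum()

print(naive)                            # nan
print(masked_mean(loss, empty_mask))    # 0.0
print(masked_mean(loss, one_positive))  # 1.0
```

Guarding the denominator keeps the loss connected to the network outputs, whereas a loss replaced by a constant carries no gradient.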

6. test.py

import os
from argparse import ArgumentParser
from utils import output_decode, plot_boxes_on_image, nms
import cv2
import numpy as np
import tensorflow as tf
from rpn import RPN
import yaml
from tqdm import tqdm

parser = ArgumentParser(description='paras for test')
parser.add_argument('--data', default='data.yaml', help='test data config yaml')
parser.add_argument('--img_size', type=int, default=512, help='input image size')
parser.add_argument('--weights', default='RPN.h5', help='trained model weights')
parser.add_argument('--score_thresh', type=float, default=0.3, help='threshold to filter low confidence boxes')
parser.add_argument('--nms_thresh', type=float, default=0.1, help='threshold to filter overlapped predict boxes')
parser.add_argument('--task', default='test', help='choose from test, train, val')
args = parser.parse_args()


def test():
    print('Testing...')
    # parse yaml config file
    with open(args.data, 'r', encoding='utf-8') as f:
        data = f.read()
    yaml_data = yaml.load(data, Loader=yaml.FullLoader)
    test_data_path = os.path.join(yaml_data['path'], yaml_data[args.task])
    pred_result_path = os.path.join(yaml_data['path'], 'pred')
    # pred_result_path = str(pred_result_path)
    print(test_data_path)
    print(pred_result_path)
    # test_data_path = '/'

    # load weights of rpn; one forward pass on fake data creates the variables first
    model = RPN()
    fake_data = np.ones(shape=(1, args.img_size, args.img_size, 3), dtype=np.float64)
    model(fake_data)
    model.load_weights(args.weights)

    if not os.path.exists(pred_result_path):
        os.mkdir(pred_result_path)

    for file in tqdm(os.listdir(test_data_path)):
        # print('Predicting...', file)
        raw_img = cv2.imread(os.path.join(test_data_path, file))
        # prepare data
        input_data = cv2.resize(raw_img, (args.img_size, args.img_size), interpolation=cv2.INTER_CUBIC)
        # print(input_data.shape)
        input_data = np.expand_dims(input_data/255.0, 0)
        # prediction
        pred_boxes, pred_scores = model(input_data)
        # decode prediction
        pred_scores1 = tf.nn.softmax(pred_scores, axis=-1)
        pred_boxes2, pred_scores2 = output_decode(pred_boxes, pred_scores1, args.score_thresh)
        # non maximum suppression
        pred_boxes3, pred_scores3 = nms(pred_boxes2, pred_scores2, args.nms_thresh)
        # plot prediction result on raw image (color passed by keyword; channel values must be <= 255)
        img_with_boxes = plot_boxes_on_image(raw_img, np.array(pred_boxes3)*2, color=[255, 56, 56])
        for i in range(len(pred_scores3)):
            # filled label background just above the top-left corner of the box
            xmin = int(pred_boxes3[i, 0])*2
            xmax = int(pred_boxes3[i, 0])*2 + 145
            ymin = int(pred_boxes3[i, 1])*2 - 20
            ymax = int(pred_boxes3[i, 1])*2
            cv2.rectangle(img_with_boxes, pt1=(xmin, ymin), pt2=(xmax, ymax), color=[56, 56, 255], thickness=-1)
            cv2.putText(img_with_boxes, 'target %.3f' % pred_scores3[i],
                        (xmin, ymax - 4),
                        cv2.FONT_HERSHEY_SIMPLEX, 0.55, [255, 255, 255], 2)
        cv2.imwrite(os.path.join(pred_result_path, str(file)), img_with_boxes)


if __name__ == '__main__':
    test()

test first runs the model once on fake_data so that its variables are created, then loads the trained weights, i.e. the RPN.h5 file. After that it preprocesses each test file in turn, feeds it to the model, decodes the output, and finally draws the predicted boxes together with their labels and scores on the image and saves it.

7. Format of the data.yaml config file

# Faster RCNN data config yaml
# Train/val/test sets as 1) dir: path/to/imgs, 2) file: path/to/imgs.txt, or 3) list: [path/to/imgs1, path/to/imgs2, ..]
path: C:/Users/1234/Desktop/data_split  # dataset root dir
train: train/images  # train images (relative to 'path') 128 images
test: new_test
#val: val/images  # val images (relative to 'path') 128 images
#test: test/images  # test images (optional)

# Classes
nc: 1  # number of classes
names: ['target']  # class names

8. Notes on training

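If you have read this far, you surely want to see your own model produce correct results, but things often do not go that smoothly. Building the network and preparing the data went fine, and testing the functions one by one showed no problems, yet the full run still tends to fail. Below are the problems I ran into while training Faster RCNN:

(1) Training is slow and tensorflow emits warnings. This usually means the GPU is underpowered. Shrink the input image size and reduce the batch_size.

(2) nan appears during training (dead neurons). This is very common and usually happens because anchor boxes extending beyond the image were filtered out, as explained earlier.

(3) Gradients explode during training. First, lower the learning_rate a little; a learning rate that is too large makes the parameter updates too big and the learning process unstable. Second, check every function for argument-passing mistakes. For example, in the function that computes the shift from anchor box to gt box, swapping the arguments makes the anchors drift further and further from the gt boxes, so the loss naturally keeps growing.

(4) Where resources allow, increase the batch_size to avoid the instability caused by batches that are too small.

9. Results

Loss: the loss converges fairly quickly.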

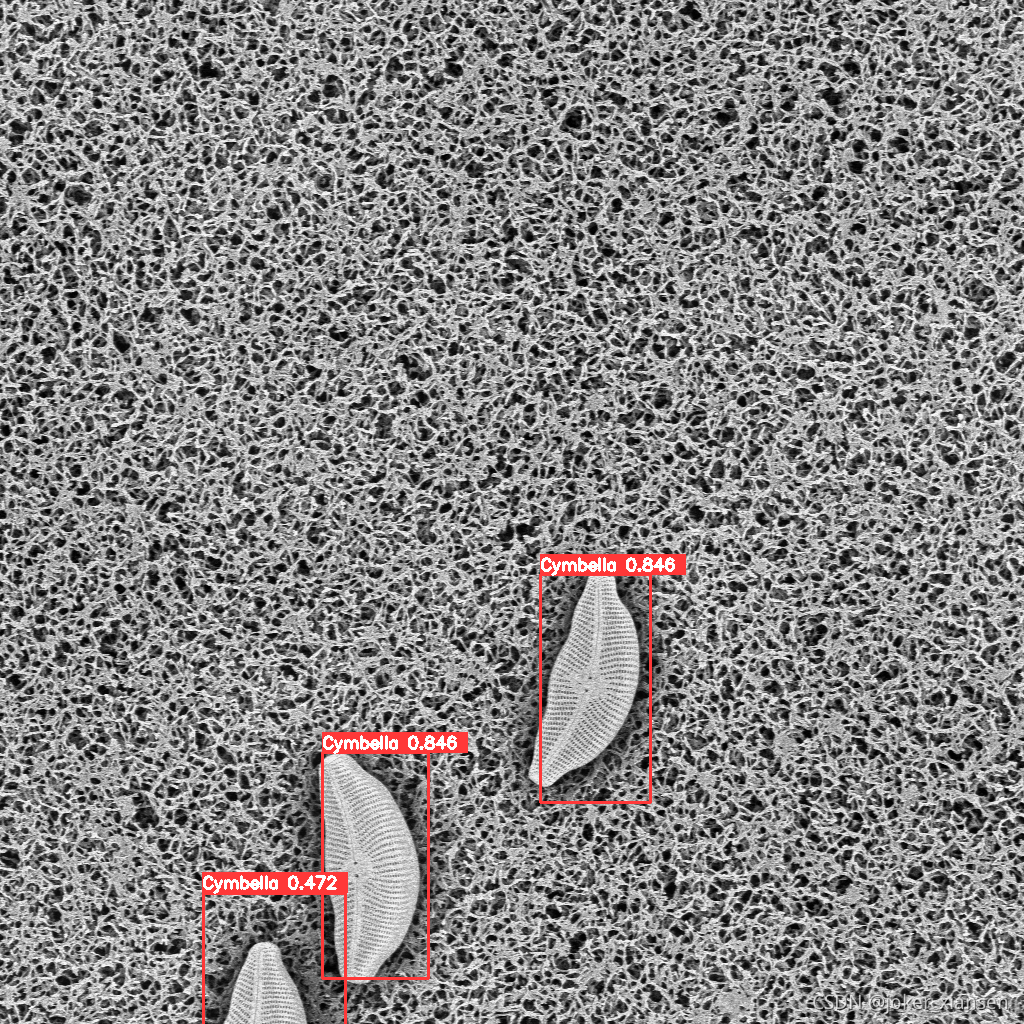

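Detection results of the trained model: the localization accuracy of the boxes is not yet high enough, possibly because colab's resource limits kept the number of training epochs low.

My knowledge is limited; corrections are welcome!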